ML Case-study Interview Question: Personalized Fashion Recommendations at Scale Using Neural Collaborative Filtering Embeddings

Case-Study question

You are tasked with designing and deploying a large-scale product recommendation system for a global fashion e-commerce platform. The platform serves millions of customers, has a catalog of around 100,000 products, and introduces hundreds of new items every week. The company wants highly relevant and personalized product recommendations at various touchpoints (homepage, category pages, search results, and similar item carousels) with low latency. How would you build such a system, ensure it handles the cold-start problem for new products, and maintain scalability under heavy loads?

Detailed Solution

Building this recommendation system requires a two-step approach: first learning core embeddings for both products and customers, then using those embeddings to power multiple downstream personalization tasks.

Step 1: Creating Embeddings with Collaborative and Content-Based Methods

Researchers typically combine collaborative filtering with content-based approaches:

Collaborative filtering learns product embeddings and aggregates a customer embedding from their interacted products. Interaction data can be clicks, purchases, add-to-bags, or save-for-laters. These embeddings capture latent signals that define preference similarities.

Content-based models generate product embeddings for items that lack sufficient interaction data. Product images, textual descriptions, or other attributes pass through neural networks that output embeddings capturing item characteristics.

The combined approach addresses cold-start issues. Even when new products have little interaction data, the content-based model generates embeddings from their features. The customer embedding is then an aggregate of the embeddings of the products that customer has interacted with, regardless of which model produced each product embedding.
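The fallback and aggregation described above can be sketched as follows. This is a minimal illustration, not the platform's actual implementation: the function names, the toy two-dimensional vectors, and the choice of a plain mean as the aggregation are all assumptions, and the sketch further assumes that the collaborative and content-based embeddings live in the same vector space.

```python
import numpy as np

def product_embedding(product_id, cf_embeddings, content_embeddings):
    """Prefer the collaborative-filtering vector; fall back to the content-based one."""
    if product_id in cf_embeddings:
        return cf_embeddings[product_id]
    return content_embeddings[product_id]

def customer_embedding(interacted_ids, cf_embeddings, content_embeddings):
    """Aggregate (here: a simple mean, an assumed choice) the interacted product vectors."""
    vectors = [
        product_embedding(pid, cf_embeddings, content_embeddings)
        for pid in interacted_ids
    ]
    return np.mean(vectors, axis=0)

# Hypothetical toy data: "new_coat_3" has no interaction history yet,
# so only the content-based model has a vector for it.
cf_embeddings = {
    "dress_1": np.array([1.0, 0.0]),
    "boots_7": np.array([0.0, 1.0]),
}
content_embeddings = {
    "dress_1": np.array([0.9, 0.1]),
    "boots_7": np.array([0.1, 0.9]),
    "new_coat_3": np.array([1.0, 1.0]),
}

# Mean of the CF vector for dress_1 and the content vector for new_coat_3.
p_u = customer_embedding(["dress_1", "new_coat_3"], cf_embeddings, content_embeddings)
```

In practice the aggregation could weight recent or high-intent interactions (purchases over clicks) more heavily; the unweighted mean is only the simplest option.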

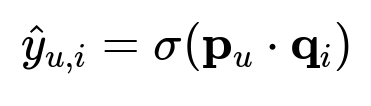

Core Formula for Neural Collaborative Filtering

One possible formulation of the neural collaborative filtering component gives the predicted interaction score between user u and item i as:

$$\hat{y}_{ui} = \sigma(p_u \cdot q_i)$$

Where:

p_u is the embedding vector for customer u (learned by combining the embeddings of all products they have interacted with).

q_i is the embedding vector for product i.

The dot operation is the dot product between the two vectors.

sigma(...) is a non-linear function (for example, a sigmoid or other activation) that produces the final score.

The collaborative filtering module learns p_u and q_i by optimizing a loss function (for example cross-entropy or mean-squared error) with training data derived from historical user-item interactions.
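As a rough sketch of the scoring and loss just described, the snippet below computes sigma(p_u · q_i) with a sigmoid and evaluates a binary cross-entropy loss on one positive and one negative example. The vectors and labels are made up for illustration, and the choice of sigmoid plus cross-entropy is just one of the options mentioned above.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def predict_score(p_u, q_i):
    """Predicted interaction score: sigmoid of the dot product of the two embeddings."""
    return sigmoid(np.dot(p_u, q_i))

def binary_cross_entropy(y_true, y_pred, eps=1e-12):
    """Mean binary cross-entropy; predictions are clipped to avoid log(0)."""
    y_pred = np.clip(y_pred, eps, 1.0 - eps)
    return float(-np.mean(y_true * np.log(y_pred) + (1 - y_true) * np.log(1 - y_pred)))

# Hypothetical embeddings: one item the customer interacted with, one they did not.
p_u = np.array([0.5, -0.2, 0.1])
q_pos = np.array([0.4, -0.1, 0.3])
q_neg = np.array([-0.6, 0.5, 0.0])

scores = np.array([predict_score(p_u, q_pos), predict_score(p_u, q_neg)])
labels = np.array([1.0, 0.0])
loss = binary_cross_entropy(labels, scores)
```

During training, gradients of this loss with respect to the embeddings would be computed by a framework such as TensorFlow; the NumPy version only shows the forward computation.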

Step 2: Downstream Personalization Tasks

The learned embeddings are used to create personalized rankings at multiple user touchpoints:

Homepage Carousels: The system ranks items by calculating a similarity score between the user embedding and product embeddings. Different carousels may restrict candidates to a smaller set (e.g., sale items, trending products) but still rank them by relevance to the user vector.

Category Pages and Search Results: Once a user filters or searches for a product type, the system sorts the matching products by how well they align with that user’s embedding, ensuring personalized ordering even within a specific category or search term.

Similar Item Carousels: On a product detail page, the system blends the hero product’s embedding with the user embedding to create a combined vector, then ranks a candidate set for similarity to that blended vector. This approach handles scenarios where the user might be browsing one specific item, but also wants to see related alternatives.
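The three touchpoints above share the same ranking primitive, which the sketch below tries to make concrete. The product names, the vectors, the `hero_weight` parameter, and the decision to normalize both vectors before blending are assumptions made for illustration only.

```python
import numpy as np

def normalize(v):
    norm = np.linalg.norm(v)
    return v / norm if norm > 0 else v

def rank_candidates(query_vec, candidate_ids, product_embeddings, top_k=3):
    """Score each candidate by dot product with the query vector, highest first."""
    matrix = np.stack([product_embeddings[pid] for pid in candidate_ids])
    scores = matrix @ query_vec
    order = np.argsort(-scores)[:top_k]
    return [candidate_ids[i] for i in order]

def blended_query(user_vec, hero_vec, hero_weight=0.7):
    """Weighted blend of the hero product vector and the user vector."""
    return hero_weight * normalize(hero_vec) + (1.0 - hero_weight) * normalize(user_vec)

product_embeddings = {
    "red_dress": np.array([1.0, 0.0]),
    "blue_dress": np.array([0.9, 0.1]),
    "sneakers": np.array([0.0, 1.0]),
    "sandals": np.array([0.2, 0.8]),
}
user_vec = np.array([0.1, 0.9])  # this customer leans towards footwear

# Homepage carousel: rank the whole (toy) catalog against the user vector.
homepage = rank_candidates(user_vec, list(product_embeddings), product_embeddings, top_k=2)

# Sale carousel: same ranking, restricted candidate set.
sale = rank_candidates(user_vec, ["red_dress", "sandals"], product_embeddings, top_k=2)

# Similar items for the hero product "red_dress": blend, then rank the other products.
others = [pid for pid in product_embeddings if pid != "red_dress"]
query = blended_query(user_vec, product_embeddings["red_dress"])
similar = rank_candidates(query, others, product_embeddings, top_k=3)
```

With these toy values the homepage favors footwear, while the similar-item carousel puts the other dress first and uses the user vector to order the remaining candidates.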

Technical Infrastructure

Data scientists and machine learning engineers often use cloud-based solutions for training at scale and for distributed data pipelines. An example pipeline:

Interaction Logging: Collect user actions (clicks, purchases, etc.) in data storage.

Data Processing: Process these logs with a cluster framework (for example PySpark on a cloud service).

Model Training: Train collaborative and content-based neural models (for example with TensorFlow). Store the resulting embeddings.

Serving: Load embeddings into a serving framework (for example TensorFlow Serving or NVIDIA Triton) to compute similarity scores at inference time. This keeps latency low because scoring requires only dot products or lightweight feed-forward passes.

Using a common embedding space for multiple tasks boosts agility, since new tasks can reuse existing embeddings without completely retraining from scratch. This also supports quick iteration when refining the embeddings themselves or adding more features.

Scalability and Low Latency

Dot product calculations between a customer embedding and candidate product embeddings are inexpensive. Modern vector libraries and GPU/CPU optimizations reduce latency even for large candidate sets. When traffic spikes (for example during a sale), the system scales horizontally by adding more serving replicas.
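To give a sense of why this is cheap, the following sketch scores an entire catalog-sized matrix with a single matrix-vector product and selects the top results with a partial sort. The random embeddings, the dimension of 64, and the function name are placeholders, not details from the platform.

```python
import numpy as np

def top_k_products(customer_vec, product_matrix, k):
    """Return row indices of the k highest-scoring products, best first."""
    scores = product_matrix @ customer_vec          # one dot product per product
    k = min(k, scores.shape[0])
    top = np.argpartition(-scores, k - 1)[:k]       # unordered top-k in linear time
    return top[np.argsort(-scores[top])]            # sort only those k entries

rng = np.random.default_rng(0)
product_matrix = rng.standard_normal((100_000, 64)).astype(np.float32)  # toy catalog
customer_vec = rng.standard_normal(64).astype(np.float32)

top = top_k_products(customer_vec, product_matrix, k=10)
```

`np.argpartition` avoids fully sorting all 100,000 scores. For much larger catalogs, an approximate nearest-neighbor index is a common alternative, though the original design does not say whether one is used.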

Handling Biases and Evaluation

Training data often contains biases (positional bias, popularity bias). Researchers typically mitigate these through careful data sampling, counterfactual approaches, or advanced calibration. To measure performance, teams use both offline metrics (recall, precision, normalized discounted cumulative gain) and online tests (A/B experimentation). One challenge is bridging any gap between offline improvements and real-world user engagement.
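Two of the offline metrics named above, recall and normalized discounted cumulative gain, can be computed per customer roughly as follows. The sketch assumes binary relevance (an item is either relevant or not) and uses hypothetical item ids.

```python
import numpy as np

def recall_at_k(ranked_ids, relevant_ids, k):
    """Fraction of the relevant items that appear in the top k of the ranking."""
    if not relevant_ids:
        return 0.0
    hits = len(set(ranked_ids[:k]) & set(relevant_ids))
    return hits / len(relevant_ids)

def ndcg_at_k(ranked_ids, relevant_ids, k):
    """NDCG with binary gains: DCG of the ranking divided by the best achievable DCG."""
    relevant = set(relevant_ids)
    gains = np.array([1.0 if pid in relevant else 0.0 for pid in ranked_ids[:k]])
    discounts = 1.0 / np.log2(np.arange(2, gains.size + 2))
    dcg = float(np.sum(gains * discounts))
    ideal_hits = min(len(relevant), k)
    idcg = float(np.sum(1.0 / np.log2(np.arange(2, ideal_hits + 2))))
    return dcg / idcg if idcg > 0 else 0.0

ranked = ["a", "b", "c", "d"]   # model's ranking for one customer
relevant = {"a", "c"}           # items the customer actually interacted with later

recall = recall_at_k(ranked, relevant, k=3)
ndcg = ndcg_at_k(ranked, relevant, k=3)
```

Averaging these values over customers in a time-based holdout set gives the aggregate offline numbers that are later compared with A/B results.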

Follow-up Question 1

How would you address the cold-start problem for items that have almost no historical clicks or purchases yet?

Answer Content-based modeling helps. The system extracts textual embeddings from product descriptions and image embeddings from product photos. These embeddings feed into dense layers to produce a final vector representation. When no interaction data exists, the model relies on this vector for the item. Once some interactions accumulate, the collaborative filtering model gradually refines it. By merging content-based and collaborative methods, the system can recommend new items accurately without extensive user feedback data.
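A minimal sketch of this idea is shown below. The random weights stand in for a trained dense layer, the dimensions are arbitrary, and the linear warm-up rule in `item_embedding` (including the `warmup` threshold of 50 interactions) is an assumed way to model "gradually refines", not something specified in the answer. The blend also assumes both vectors share one embedding space.

```python
import numpy as np

rng = np.random.default_rng(42)
TEXT_DIM, IMAGE_DIM, EMBED_DIM = 8, 16, 4

# Placeholder parameters; in a real system these come from training.
W = rng.standard_normal((TEXT_DIM + IMAGE_DIM, EMBED_DIM)) * 0.1
b = np.zeros(EMBED_DIM)

def content_embedding(text_vec, image_vec):
    """Concatenate text and image features and pass them through one dense layer."""
    features = np.concatenate([text_vec, image_vec])
    return np.tanh(features @ W + b)

def item_embedding(content_vec, cf_vec, n_interactions, warmup=50):
    """Shift weight from the content vector to the CF vector as interactions accumulate."""
    if cf_vec is None or n_interactions == 0:
        return content_vec
    w = min(n_interactions / warmup, 1.0)
    return w * cf_vec + (1.0 - w) * content_vec

text_vec = rng.standard_normal(TEXT_DIM)     # e.g. output of a text encoder
image_vec = rng.standard_normal(IMAGE_DIM)   # e.g. output of an image encoder
content_vec = content_embedding(text_vec, image_vec)

cold_item = item_embedding(content_vec, cf_vec=None, n_interactions=0)
cf_vec = rng.standard_normal(EMBED_DIM)
warm_item = item_embedding(content_vec, cf_vec, n_interactions=25)
```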

Follow-up Question 2

What is your strategy for ensuring low latency when ranking products for a high-traffic e-commerce site?

Answer Precompute embeddings for all products and store them in a fast-access environment. Generate the customer embedding on-the-fly or retrieve a cached version if the user is known. Ranking is then just a similarity score between that customer embedding and a candidate set of product embeddings. Dot products can be computed efficiently with hardware acceleration. Scaling horizontally with multiple serving instances spreads the load, and caching repeated computations (for example popular items, or top results for frequent queries) further lowers the response time.
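The caching part of this answer might look like the sketch below: a small least-recently-used cache that computes a customer embedding only on a miss. The class name, the cache size, and the stand-in `compute_embedding` function are hypothetical; a production system would more likely use a shared store than an in-process dictionary.

```python
from collections import OrderedDict
import numpy as np

class EmbeddingCache:
    """In-process LRU cache for customer embeddings."""

    def __init__(self, max_size):
        self.max_size = max_size
        self._store = OrderedDict()

    def get_or_compute(self, customer_id, compute_fn):
        if customer_id in self._store:
            self._store.move_to_end(customer_id)   # mark as recently used
            return self._store[customer_id]
        vec = compute_fn(customer_id)
        self._store[customer_id] = vec
        if len(self._store) > self.max_size:
            self._store.popitem(last=False)        # evict least recently used
        return vec

calls = []

def compute_embedding(customer_id):
    """Stand-in for aggregating a customer's interacted product embeddings."""
    calls.append(customer_id)
    return np.full(4, float(len(customer_id)))

cache = EmbeddingCache(max_size=2)
first = cache.get_or_compute("alice", compute_embedding)
second = cache.get_or_compute("alice", compute_embedding)   # served from cache
cache.get_or_compute("bob", compute_embedding)
cache.get_or_compute("carol", compute_embedding)            # evicts "alice"
cache.get_or_compute("alice", compute_embedding)            # recomputed
```

A cached embedding goes stale as the customer keeps interacting, so a real deployment would also need an expiry or invalidation policy, which is omitted here.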

Follow-up Question 3

Explain how you would monitor and measure performance of this recommendation system in production.

Answer Monitor both offline and online metrics:

Offline metrics from test datasets: coverage, mean reciprocal rank, or AUC.

Online A/B testing: compare different versions of the recommendation algorithm in real time with live traffic, tracking click-through rate, conversion, or average order value.

Real-time monitoring: instrument the serving system to watch inference latency, CPU/GPU usage, and memory consumption.

Alerting: define thresholds (e.g., maximum permissible latency) and set up alerts for anomalies.

This hybrid approach balances offline experimentation with direct observation of real-world performance.
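For the online A/B comparison, one common way to check whether a click-through-rate difference is more than noise is a two-proportion z-test, sketched here with the standard library only. The traffic numbers are invented, and the choice of this particular test is an assumption; the answer above does not prescribe a statistical method.

```python
import math

def two_proportion_z_test(clicks_a, views_a, clicks_b, views_b):
    """Two-sided z-test for a difference in click-through rate between two variants."""
    p_a = clicks_a / views_a
    p_b = clicks_b / views_b
    pooled = (clicks_a + clicks_b) / (views_a + views_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / views_a + 1 / views_b))
    z = (p_b - p_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))   # equals 2 * (1 - Phi(|z|))
    return z, p_value

# Hypothetical experiment: control (A) vs. new ranking model (B).
z, p_value = two_proportion_z_test(
    clicks_a=1000, views_a=20000,
    clicks_b=1100, views_b=20000,
)
```

The same function can be applied to conversion rate. Metrics such as average order value are not proportions and would need a different test.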

Follow-up Question 4

How do you handle data biases like positional bias or popularity bias that might skew the training process?

Answer Train with techniques that re-weight or re-sample data to reduce overemphasis on popular items. Implement randomization in the recommendation layout to gather unbiased feedback on less exposed items. Adjust the loss function to penalize popularity bias or incorporate user-based normalization. Offline evaluation splits (for example time-based splits) help ensure that the model generalizes and does not rely on over-represented products. Periodically inspect recommended items to detect bias creeping in over time.
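One simple form of the re-weighting mentioned here is to down-weight training examples in proportion to a power of item popularity. The sketch below is illustrative only: the `power` parameter and the normalization to a mean weight of 1 are assumed design choices.

```python
import numpy as np
from collections import Counter

def inverse_popularity_weights(item_ids, power=0.5):
    """Per-example weights proportional to count(item) ** -power, rescaled to mean 1."""
    counts = Counter(item_ids)
    raw = np.array([counts[i] ** -power for i in item_ids])
    return raw / raw.mean()

def weighted_mean_loss(per_example_loss, weights):
    """Weighted average of per-example losses."""
    return float(np.average(per_example_loss, weights=weights))

# Hypothetical interaction log: item "a" is four times as frequent as item "b".
item_ids = ["a", "a", "a", "a", "b"]
weights = inverse_popularity_weights(item_ids)

per_example_loss = np.array([0.2, 0.2, 0.2, 0.2, 1.0])
loss = weighted_mean_loss(per_example_loss, weights)
```

With `power=0.5`, each example for the rare item counts twice as much as an example for the popular one, so mistakes on less exposed items contribute more to the loss than they would under uniform weighting.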

Follow-up Question 5

Could you adapt this system to support context-aware recommendations, for example time-sensitive or location-sensitive suggestions?

Answer Incorporate contextual signals (time of day, day of week, region, device type) as inputs in either the content-based or collaborative filtering models. One approach is appending context features to the user or product vectors before feeding them into the neural network. Alternatively, maintain multiple embeddings per user for different contexts (for instance, a weekday-evening embedding vs. weekend embedding). A ranking model then adjusts its similarity score based on context patterns. Context-aware training data is crucial; the system learns which product associations matter most during each time window or location scenario.
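The "append context features" option could be sketched as below. The cyclic sine/cosine encoding of the hour, the weekend flag, and the convention that `day_of_week` runs from 0 (Monday) to 6 (Sunday) are assumptions chosen for illustration; region or device type would be added in the same way, for example as one-hot features.

```python
import numpy as np

def encode_context(hour, day_of_week):
    """Encode hour of day cyclically and flag weekends (day_of_week: 0=Mon ... 6=Sun)."""
    hour_angle = 2 * np.pi * hour / 24
    is_weekend = 1.0 if day_of_week >= 5 else 0.0
    return np.array([np.sin(hour_angle), np.cos(hour_angle), is_weekend])

def contextual_user_input(user_vec, hour, day_of_week):
    """Append context features to the user vector before it enters the ranking network."""
    return np.concatenate([user_vec, encode_context(hour, day_of_week)])

user_vec = np.array([0.3, -0.1, 0.8, 0.2])
weekday_evening = contextual_user_input(user_vec, hour=20, day_of_week=2)
weekend_morning = contextual_user_input(user_vec, hour=9, day_of_week=6)
```

The cyclic encoding keeps 23:00 and 00:00 close together, which a raw hour feature would not. Because the resulting vector is longer than the product embeddings, it would feed a learned ranking layer rather than a plain dot product.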

Follow-up Question 6

Why is maintaining a shared set of embeddings for multiple personalization tasks beneficial for an online retailer?

Answer A shared embedding space makes it easier to add new personalization features and experiment with different ranking rules without retraining from scratch. This shortens iteration cycles and supports the platform’s fast-paced inventory changes. A single representation of the products also keeps the system’s logic consistent across various entry points (home carousels, search, similar item suggestions), which makes debugging simpler and fosters alignment across teams.

Follow-up Question 7

Explain the trade-offs of re-ranking with a blended user-item vector for “similar item” carousels.

Answer When the system blends the user embedding with the hero item embedding, it captures the user’s general preferences plus the current intent signaled by the hero item. This yields relevant recommendations when the user wants to see close alternatives. The trade-off lies in the blend ratio. Too much emphasis on the hero item loses personalization, because every customer sees nearly the same neighbors of that product and broader user tastes are ignored. Too much emphasis on the user embedding produces items that match the user’s general taste but are no longer similar to the hero item. Tuning the blend ratio, ideally through online experiments, is therefore crucial.

Follow-up Question 8

How do you reconcile differences between offline evaluation metrics and real-world user responses once you deploy the model?

Answer Offline metrics are proxies that approximate user satisfaction with historical data. Real-world conditions may differ (seasonal trends, changes in user behavior, new product lines). Continuous online testing helps. By running A/B experiments, you observe genuine user interactions. If offline improvements do not translate to online gains, revisit your dataset or reevaluate the offline metrics used. Regularly update the data sampling strategy, incorporate fresh user feedback, and keep a feedback loop to keep the model aligned with reality.

Final Thoughts

The above framework combines collaborative filtering with content-based embeddings. It handles cold-start scenarios, scales to millions of customers and a catalog of around 100,000 products, and maintains low inference latency. By centralizing the user and product embeddings, you enable multiple personalized experiences across the customer journey and achieve efficient model iteration.