ML Case-study Interview Question: Temporal Fusion Transformers for Robust Multi-Horizon Perishable Demand Forecasting

Case-Study question

A large online retailer wants to forecast daily demand for perishable goods across multiple cities. Their supply chain is just-in-time. They want accurate multi-horizon forecasts up to seven days in advance, incorporating known and unknown future inputs like weather forecasts, holidays, and static region-level information. Customer behavior changes quickly, so the solution must handle sudden shifts in purchasing patterns. How would you design and implement a robust end-to-end demand forecasting system?

Your response should cover:

Direct steps for data engineering and feature selection, including how to handle data known only in the future and data unknown in the future.

Strategies for capturing short-term dynamics (e.g., daily spikes, holidays) and long-term trends (e.g., seasonal behaviors).

Quantile regression to produce upper and lower demand forecasts.

Explanations for how you would deal with large-scale training needs, hardware considerations, and memory constraints.

Methods for model interpretability to convince business stakeholders and guide improvements.

Detailed Solution

Data Preparation

Extract transactional data for all products and aggregate demand at desired levels (article or delivery). Identify static features (city, region) and time-varying features (holidays, weather). Split features into past-only (historical demand) and known-future (weather forecasts or promotional schedules).

Clean and align data into standardized sequences. Ensure consistent indexing over each time series. Handle potential supply constraints or capacity limits by recording true demand signals whenever possible.
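The alignment step can be sketched with pandas. This is a minimal illustration, not the retailer's pipeline: the column names (`city`, `date`, `demand`), the helper `to_regular_daily`, and the choice to fill missing days with zero are all assumptions. Whether a missing row really means zero demand depends on how the source system logs orders, so the sketch also keeps a flag for filled days.

```python
import pandas as pd

def to_regular_daily(df: pd.DataFrame) -> pd.DataFrame:
    """Reindex each city's series onto a gap-free daily calendar (hypothetical helper)."""
    frames = []
    for city, grp in df.groupby("city"):
        grp = grp.set_index("date").sort_index()
        full_index = pd.date_range(grp.index.min(), grp.index.max(), freq="D")
        grp = grp.reindex(full_index)
        grp["city"] = city
        # Flag filled days so they are not mistaken for observed zero demand.
        grp["was_missing"] = grp["demand"].isna()
        # Assumption: a day without a record is treated as zero demand.
        grp["demand"] = grp["demand"].fillna(0.0)
        # Integer time index counted from the start of this series.
        grp["time_idx"] = range(len(grp))
        frames.append(grp.rename_axis("date").reset_index())
    return pd.concat(frames, ignore_index=True)

raw = pd.DataFrame({
    "city": ["A", "A", "A", "B", "B"],
    "date": pd.to_datetime(
        ["2024-01-01", "2024-01-02", "2024-01-04", "2024-01-01", "2024-01-03"]
    ),
    "demand": [10.0, 12.0, 9.0, 5.0, 7.0],
})
daily = to_regular_daily(raw)
```

Each series now has one row per calendar day and a consecutive integer index, which is the shape most sequence-model data loaders expect.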

Choice of Model

Select the Temporal Fusion Transformer. It supports multi-horizon forecasting and handles static, known-future, and unknown-future inputs. Its gating and attention layers capture complex relationships. It also provides interpretability through attention weights and variable selection.

Model Architecture

Feed time-dependent features through variable selection networks. Pass them into a gated LSTM encoder for short-term context. Use self-attention to capture long-range dependencies like seasonal patterns. Fuse static covariates to enrich temporal representations. Output multiple quantiles to estimate uncertainty.
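The gating idea can be illustrated with a gated linear unit (GLU), the component TFT uses to suppress parts of the network that a given input does not need. The NumPy sketch below is a toy with random weights, not the trained model; the function names and shapes are assumptions chosen for illustration.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def glu(x, w_gate, b_gate, w_val, b_val):
    """Gated linear unit: a sigmoid gate scales a linear transform element-wise."""
    gate = sigmoid(x @ w_gate + b_gate)
    return gate * (x @ w_val + b_val)

rng = np.random.default_rng(0)
x = rng.normal(size=(4, 8))          # 4 time steps, 8 hidden units
w_gate = rng.normal(size=(8, 8))
w_val = rng.normal(size=(8, 8))

# A strongly positive gate bias lets the signal through almost unchanged;
# a strongly negative one shuts the block off.
open_out = glu(x, w_gate, np.full(8, 30.0), w_val, np.zeros(8))
closed_out = glu(x, w_gate, np.full(8, -30.0), w_val, np.zeros(8))
```

In the real architecture the gate parameters are learned, so the model decides per input how much of each block's output to keep.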

Quantile Loss

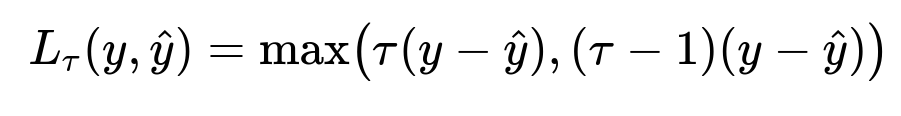

Train by minimizing pinball loss for each quantile. Pinball loss is central to quantile regression:

Here, y is actual demand, hat{y} is predicted demand, and tau is the quantile in [0,1]. If tau = 0.5, the model learns the median estimate. If tau = 0.9, the model learns the 90th percentile.
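As a quick check on how the loss behaves, here is a minimal NumPy sketch of the pinball loss for a single quantile. The function name `pinball_loss` and the example numbers are illustrative assumptions.

```python
import numpy as np

def pinball_loss(y_true, y_pred, tau):
    """Mean pinball (quantile) loss for one quantile level tau in (0, 1)."""
    diff = np.asarray(y_true, dtype=float) - np.asarray(y_pred, dtype=float)
    # tau * diff applies when the forecast is too low, (tau - 1) * diff when too high.
    return float(np.mean(np.maximum(tau * diff, (tau - 1.0) * diff)))

y_true = [100.0, 100.0]
under = pinball_loss(y_true, [90.0, 90.0], tau=0.9)    # forecast 10 units too low
over = pinball_loss(y_true, [110.0, 110.0], tau=0.9)   # forecast 10 units too high
```

At the 0.9 level, the under-forecast is penalized about nine times as heavily as the over-forecast of the same size. That asymmetry is what pushes the prediction up toward the 90th percentile.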

Training Procedure

Prepare sequences of input windows with sufficient historical context. For each sequence, forecast multiple future steps. Use gradient-based optimization on GPUs. Monitor validation loss per quantile. Watch memory usage because longer history windows increase training data size.
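The windowing step can be sketched as follows. This is a simplified single-series, target-only illustration; the helper `make_windows` and the 28-day encoder length are assumptions, and a real pipeline would also carry the covariates.

```python
import numpy as np

def make_windows(series, encoder_len, horizon):
    """Slice one series into (history, future) pairs for multi-horizon training."""
    series = np.asarray(series, dtype=float)
    n = len(series) - encoder_len - horizon + 1
    if n <= 0:
        raise ValueError("series is shorter than encoder_len + horizon")
    # Each row of X is a history window; the matching row of Y is the next `horizon` days.
    X = np.stack([series[i : i + encoder_len] for i in range(n)])
    Y = np.stack([series[i + encoder_len : i + encoder_len + horizon] for i in range(n)])
    return X, Y

demand = np.arange(40)  # placeholder daily demand for one series
X, Y = make_windows(demand, encoder_len=28, horizon=7)
```

Materializing every window multiplies the stored data roughly by the window length, which is why longer histories put pressure on memory.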

Implementation

In Python, libraries like PyTorch Forecasting or Darts have ready-made TFT implementations. Preprocess data into the library's time-series dataset structure (TimeSeriesDataSet in PyTorch Forecasting). Initialize the TFT with hyperparameters (hidden_size, dropout, etc.). Use GPUs to speed up training. For large datasets, adopt techniques like sample-level batching or time-window sampling to reduce memory. For example:

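```python
from pytorch_forecasting import TemporalFusionTransformer
from pytorch_forecasting.metrics import QuantileLoss

quantiles = [0.1, 0.5, 0.9]

# `training` is a TimeSeriesDataSet built beforehand; the 7-day horizon is set
# there through max_prediction_length=7, not on the model.
model = TemporalFusionTransformer.from_dataset(
    training,
    hidden_size=64,
    lstm_layers=2,
    dropout=0.1,
    output_size=len(quantiles),  # one output per quantile, not the horizon
    loss=QuantileLoss(quantiles=quantiles),
    # ...
)

# model.train() only switches the module to training mode; the actual fit
# runs through a Lightning Trainer, e.g. trainer.fit(model, ...).
model.train()
```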

This code shows initialization and the quantile loss setup.

Challenges

Memory usage is high if you include wide historical contexts. Sampling approaches reduce training size. GPU costs rise with bigger models. Fine-tune model size to maintain efficiency.

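When behavior shifts (e.g., sudden surges in demand), the gating mechanisms and attention help re-weight the variables that matter. Tree-based methods predict from target values seen in their training leaves, so they cannot forecast demand above the training range. A neural model is not bound in this way and can follow such trends more effectively, although accuracy far outside the training distribution is still not guaranteed.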

Performance Gains

TFT often yields double-digit percentage improvements in forecast error over simpler models, though the size of the gain depends on the baseline and the data and should be confirmed with a backtest. This is especially true for infrequent or slow-moving products, where neural architectures capture subtle temporal signals better. Prediction intervals enable risk management and cost-balanced decisions.

How would you ensure interpretability of this model?

TFT has built-in variable selection. Inspect which features carry the most weight for each time step. The self-attention matrix shows how the model focuses on different historical points. Pinpoint whether the model relies on last-week trends, holiday patterns, or weather data. Because TFT's interpretable multi-head attention shares the value weights across heads, the heads can be averaged into a single attention pattern that shows which time lags the model attends to.
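Once attention and variable selection weights are exported from the model, summarizing them is straightforward. The sketch below uses synthetic arrays in place of real model outputs. The array shapes, the helper names `lag_importance` and `rank_variables`, and the feature names are all assumptions for illustration.

```python
import numpy as np

def lag_importance(attention):
    """attention: (n_samples, horizon, encoder_len), each row summing to 1."""
    profile = attention.mean(axis=(0, 1))
    return profile / profile.sum()

def rank_variables(selection_weights, names):
    """selection_weights: (n_samples, n_steps, n_vars); returns names sorted by mean weight."""
    mean_w = selection_weights.mean(axis=(0, 1))
    order = np.argsort(mean_w)[::-1]
    return [(names[i], float(mean_w[i])) for i in order]

# Synthetic stand-ins for weights exported from a trained model.
rng = np.random.default_rng(0)
attention = rng.dirichlet(np.ones(28), size=(16, 7))        # 28-day history, 7-day horizon
weights = rng.dirichlet([5.0, 2.0, 1.0], size=(16, 28))
names = ["demand_lag", "holiday", "temperature"]            # hypothetical features

profile = lag_importance(attention)
ranked = rank_variables(weights, names)
```

Plotting `profile` against the lag in days gives stakeholders a direct view of how far back the model looks, and `ranked` gives a global feature ordering.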

How would you handle supply or capacity constraints in historical data?

Record actual demand signals, not just fulfilled orders. If constraints artificially cap orders, add corrective estimates for missed demand. Tag data points with capacity constraints. The model learns that suppressed demand is not true zero. You can also introduce future capacity as a known-future input if needed.
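A minimal pandas sketch of the tagging and correction idea follows. The column names, the capacity values, and especially the flat 15% uplift are hypothetical; in practice the correction would be estimated from data such as waitlists, abandoned baskets, or uncapped comparison days.

```python
import pandas as pd

orders = pd.DataFrame({
    "date": pd.date_range("2024-03-01", periods=6, freq="D"),
    "fulfilled": [80, 95, 100, 100, 70, 100],
    "capacity": [100, 100, 100, 100, 100, 100],
})

# Tag days where fulfilled orders hit the capacity limit (censored demand).
orders["capped"] = orders["fulfilled"] >= orders["capacity"]

# Hypothetical correction: assume true demand was 15% above capacity on capped days.
uplift = 1.15
orders["demand_est"] = orders["fulfilled"].astype(float)
orders.loc[orders["capped"], "demand_est"] *= uplift
```

Keeping `capped` as a feature lets the model see which targets were estimated rather than observed.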

How do you approach large-scale production training and inference?

Train on GPU clusters. Tune batch sizes to control memory usage. Sample random windows from the entire dataset to cover different seasonal phases. For inference, maintain a single model object that processes daily or weekly demand predictions. Implement background jobs that run scheduled forecasts. Store results in a central system for supply chain actions.
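Random window sampling can be done on indices alone, so the full set of windows never has to be materialized. The sketch below is one possible approach under assumed names (`sample_windows`) and sizes. It draws each valid window with equal probability across series.

```python
import numpy as np

def sample_windows(series_lengths, encoder_len, horizon, n_samples, seed=0):
    """Sample (series_id, start) pairs uniformly over all valid training windows."""
    rng = np.random.default_rng(seed)
    window = encoder_len + horizon
    lengths = np.asarray(series_lengths)
    # Number of valid start positions per series; series that are too short get 0.
    n_starts = np.clip(lengths - window + 1, 0, None)
    if n_starts.sum() == 0:
        raise ValueError("no series is long enough for one window")
    # Choose series in proportion to their number of valid windows.
    series_ids = rng.choice(len(lengths), size=n_samples, p=n_starts / n_starts.sum())
    # Draw a start position inside each chosen series (upper bound is exclusive).
    starts = rng.integers(0, n_starts[series_ids])
    return series_ids, starts

lengths = [400, 30, 365]  # days of history per series (placeholder values)
ids, starts = sample_windows(lengths, encoder_len=28, horizon=7, n_samples=1000)
```

The data loader then slices only the sampled windows from the stored series, which keeps memory proportional to the batch size rather than to the total number of windows.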

Could gradient boosting handle this problem more easily?

Gradient boosting is simpler to train and interpret for some tasks. It can handle many features. However, it struggles when extrapolating to unseen ranges or capturing complex multi-horizon dependencies. If frequent distribution shifts happen, a more expressive model like TFT captures them better.

How do you decide which quantiles to forecast?

Focus on key operational thresholds. For example, use the 0.5 quantile (the median) for baseline planning and the 0.9 quantile for risk-averse inventory. The interval between quantiles defines safety stock strategies. In uncertain markets, you might add an even higher quantile to minimize stock-outs.
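One common way to tie a quantile to costs is the classical newsvendor critical ratio: stock to the quantile equal to the underage cost divided by the sum of underage and overage costs. The source does not say the retailer uses this rule, so treat the sketch and its cost figures as an illustrative assumption.

```python
def critical_quantile(underage_cost: float, overage_cost: float) -> float:
    """Newsvendor critical ratio: the quantile that balances the two unit costs."""
    if underage_cost <= 0 or overage_cost <= 0:
        raise ValueError("costs must be positive")
    return underage_cost / (underage_cost + overage_cost)

# Hypothetical unit costs: a lost sale costs 4, a spoiled unit costs 1.
tau = critical_quantile(underage_cost=4.0, overage_cost=1.0)
```

With these placeholder costs the target is the 0.8 quantile. For perishables with high spoilage cost the ratio drops, which argues for stocking closer to the median.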

Why not just use LSTM alone?

Vanilla LSTMs handle sequence data but do not always excel at capturing long-range dependencies or integrating diverse static and known-future inputs. TFT uses attention layers that better manage extended histories. It also has built-in variable selection, which adds extra explainability.

How do you maintain stable performance when real-world distribution shifts appear?

Monitor forecasting accuracy with rolling backtests. Re-train or fine-tune the model when error metrics deviate beyond thresholds. Keep data pipelines up to date. Introduce incremental learning if domain changes are frequent. Model gating in TFT helps but still needs re-training for large distribution changes.
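A threshold-based retraining trigger might look like the sketch below. The window sizes, the 25% tolerance, and the function name `needs_retraining` are assumptions; the right values depend on how noisy the daily error is.

```python
import numpy as np

def needs_retraining(abs_pct_errors, baseline_window=28, recent_window=7, tolerance=1.25):
    """Flag retraining when the recent mean error exceeds the baseline by a set factor."""
    errors = np.asarray(abs_pct_errors, dtype=float)
    if len(errors) < baseline_window + recent_window:
        return False  # not enough history to compare yet
    recent = errors[-recent_window:].mean()
    baseline = errors[-(baseline_window + recent_window):-recent_window].mean()
    return bool(recent > tolerance * baseline)

stable = [0.10] * 35                  # daily absolute percentage errors
shifted = [0.10] * 28 + [0.20] * 7    # error doubles in the last week

stable_flag = needs_retraining(stable)
shifted_flag = needs_retraining(shifted)
```

Running this check daily on the backtest errors gives a simple, auditable rule for when to re-train or fine-tune.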

What if the model overfits to training data?

Use regularization, dropout, and early stopping. Limit the hidden layer sizes. Keep an eye on validation losses. Check the variable selection weights to see if irrelevant features overshadow relevant ones. Adjust the training window. Perform hyperparameter tuning with time-ordered (rolling-origin) cross-validation so that validation data always comes after the training data.
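Overfitting checks on time series need validation folds that lie strictly after the data used for fitting. A minimal sketch of expanding-window splits follows; the helper `rolling_origin_splits` and the fold sizes are assumptions.

```python
def rolling_origin_splits(n_steps, initial_train, horizon, step):
    """Expanding-window splits: train on [0, t), validate on [t, t + horizon)."""
    splits = []
    train_end = initial_train
    while train_end + horizon <= n_steps:
        splits.append((range(0, train_end), range(train_end, train_end + horizon)))
        train_end += step
    return splits

splits = rolling_origin_splits(n_steps=100, initial_train=70, horizon=7, step=7)
```

Each fold validates on the seven days right after its training period, which mirrors how the model is used in production and avoids leaking future data into training.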

How do you communicate these results to non-technical stakeholders?

Show them how the model picks out certain drivers (e.g., special holidays or severe weather) for demand surges. Illustrate attention weights for interpretability. Present quantile-based forecasts in an intuitive range format. Emphasize improved accuracy and lower waste. This builds trust for a neural network solution.