ML Case-study Interview Question: Real-Time User Segmentation for Personalized Featured Marketplace Listings.

Case-Study question

You are consulting for a large online marketplace that hosts hundreds of thousands of vehicle listings. They want to increase user engagement by showing a real-time, personalized “Featured listing” at the top of search results. They already built a Customer Data Platform that ingests user events in real time. They also segment users based on preferences like fuel type (electric, diesel, etc.) and body type (SUV, hatchback, etc.). They now want to use these segments to personalize the “Featured listing” for each user, while ensuring that they do not override explicit filters already applied. They plan to run an AB test to measure the performance uplift from this personalized listing. How would you design this end-to-end system, including the data flow, the real-time segmentation logic, the final query modifications, and the experimental setup?

Explain how you would:

Ingest and unify user data to build a profile in real time

Segment users based on recent browsing behaviors

Inject those segments back into the search process to personalize the first listing

Set up and interpret an AB test to confirm the impact

Provide a complete solution with technical details, data flow diagrams, and strategies for deployment, monitoring, and incremental improvements. Outline how you would handle scale, data freshness, user identification, and fallback scenarios.

Detailed Solution

Overview

The goal is to serve a personalized top listing in real time. The data pipeline must gather user behavior signals, keep an up-to-date user profile, and then adjust search queries on the fly. The organization uses a specialized Customer Data Platform (CDP) fed by high-frequency events. The CDP identifies each user, keeps a rolling history of what the user viewed, and then determines segments indicating their preferences (for instance, an electric-vehicle segment for those who frequently view electric ads).

Data Ingestion and User Profile Construction

Incoming user events arrive from a tracking system. Each event includes metadata such as user ID, the advertisement viewed, and relevant attributes (fuel type, body type, etc.). The events are published to GCP Pub/Sub, relayed into Kafka, then consumed by a Java-based service. This consumer follows these steps: it locates or creates the user’s CDP profile, appends the event to a short-term timeline (like the last 10 days), and updates aggregated attributes that identify the user’s preferences.
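The short-term timeline step can be sketched as a deque that evicts old events on every append. This is a minimal illustration, not the platform's actual implementation: `ViewEvent` and `RollingTimeline` are hypothetical names, the event carries only a fuel type for brevity, and events are assumed to arrive roughly in time order.

```java
import java.time.Duration;
import java.time.Instant;
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

/** Hypothetical view event: the attribute value of the viewed listing and the view time. */
final class ViewEvent {
    final String fuelType;
    final Instant viewedAt;

    ViewEvent(String fuelType, Instant viewedAt) {
        this.fuelType = fuelType;
        this.viewedAt = viewedAt;
    }
}

/** Keeps only the events that fall inside the retention window (10 days in the text). */
final class RollingTimeline {
    private final Duration retention;
    private final Deque<ViewEvent> events = new ArrayDeque<>();

    RollingTimeline(Duration retention) {
        this.retention = retention;
    }

    /** Appends the event, then drops events older than now minus the retention window. */
    void append(ViewEvent event, Instant now) {
        events.addLast(event);
        Instant cutoff = now.minus(retention);
        // Assumes roughly time-ordered arrival, so the oldest events sit at the head.
        while (!events.isEmpty() && events.peekFirst().viewedAt.isBefore(cutoff)) {
            events.removeFirst();
        }
    }

    /** Returns a copy of the events currently inside the window, oldest first. */
    List<ViewEvent> recentEvents() {
        return new ArrayList<>(events);
    }
}
```

A consumer would call `append(event, Instant.now())` for each incoming event and pass `recentEvents()` to the segmentation step.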

When the CDP updates a user profile, it looks at which attributes matter most for segmentation. If a user’s browsing suggests a strong preference for electric vehicles, that user becomes part of the electric segment. If their history suggests a preference for SUVs, they become part of the SUV segment. This assignment occurs whenever the proportion of relevant views passes a threshold.

For example, if p is the fraction of a user’s views matching a particular attribute (like electric), the service checks if p >= 0.5. If true, the user is placed in that segment.
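As a rough sketch of this threshold rule, the helper below counts views per attribute value and returns every value whose share p meets the threshold. `ThresholdSegmenter` and `segmentsFor` are hypothetical names, and the input is assumed to be the attribute values (for one dimension, such as fuel type) of the listings in the user's recent timeline.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

final class ThresholdSegmenter {

    /**
     * Returns every attribute value whose share of the user's views is at least
     * the threshold. An empty history yields no segments.
     */
    static Set<String> segmentsFor(List<String> viewedValues, double threshold) {
        Set<String> segments = new TreeSet<>();
        if (viewedValues.isEmpty()) {
            return segments;
        }
        // Count views per attribute value, e.g. electric -> 3, diesel -> 1.
        Map<String, Integer> counts = new HashMap<>();
        for (String value : viewedValues) {
            counts.merge(value, 1, Integer::sum);
        }
        for (Map.Entry<String, Integer> entry : counts.entrySet()) {
            double p = (double) entry.getValue() / viewedValues.size();
            if (p >= threshold) {
                segments.add(entry.getKey());
            }
        }
        return segments;
    }
}
```

With a threshold of 0.5, a user with three electric views and one diesel view has p = 0.75 for electric and lands in the electric segment only. The same helper would be applied separately to each dimension, such as body type.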

Real-Time Segmentation and Query Customization

Whenever a user visits the search page, a gateway service retrieves their segments from the CDP and forwards them in request headers to the search services. The search services check whether the user belongs to a segment (such as electric). If the user has not applied an explicit filter on the same attribute (for instance, a filter for diesel only), the search services rewrite the “Featured listing” query to prioritize vehicles from that segment. If the user is in multiple segments, the search services combine them to find a single listing that best matches the user’s recent interactions.

If the segment data is unavailable or the user is new, the system defaults to a random “Featured listing” or a fallback approach that does not personalize results.
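The precedence between explicit filters, segment preferences, and the fallback can be sketched as a small merge step. This is an illustration under assumptions: `FeaturedQueryRewriter` is a hypothetical name, both filters and segment preferences are modeled as attribute-to-value maps, and a `null` preference map stands for "CDP unavailable or new user".

```java
import java.util.LinkedHashMap;
import java.util.Map;

final class FeaturedQueryRewriter {

    /**
     * Builds the constraints for the "Featured listing" query.
     *
     * @param explicitFilters    attribute -> value chosen by the user, e.g. fuelType -> diesel
     * @param segmentPreferences attribute -> value inferred from segments, or null when
     *                           no segment data is available
     */
    static Map<String, String> rewrite(Map<String, String> explicitFilters,
                                       Map<String, String> segmentPreferences) {
        Map<String, String> query = new LinkedHashMap<>(explicitFilters);
        if (segmentPreferences == null) {
            // Fallback: no personalization, the query keeps only the explicit filters.
            return query;
        }
        for (Map.Entry<String, String> pref : segmentPreferences.entrySet()) {
            // putIfAbsent never overwrites an attribute the user filtered on explicitly.
            query.putIfAbsent(pref.getKey(), pref.getValue());
        }
        return query;
    }
}
```

For a user who filtered on diesel but belongs to the electric and SUV segments, the result keeps `fuelType=diesel` and only adds `bodyType=suv`. Whether the added preference is applied as a hard filter or as a ranking boost is a separate design decision for the search services.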

AB Testing Strategy

The platform randomly assigns new sessions to either a test or a control group. Users in the test group see the personalized top listing if they have segment memberships. Users in the control group see the existing, non-personalized listing. To measure the effect, the data team compares the average number of vehicles viewed per session (or other success metrics such as click-through rate) between the test and control cohorts.
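One common way to implement random assignment is to hash the randomization unit so that the same unit always lands in the same bucket. The sketch below is an assumption about how this could be done, not the platform's method: `ExperimentAssigner` is a hypothetical name, the unit ID could be a session or user ID, and CRC32 is used only to keep the example short (a production system might prefer a stronger hash).

```java
import java.nio.charset.StandardCharsets;
import java.util.zip.CRC32;

final class ExperimentAssigner {

    /**
     * Deterministically maps a unit (session or user ID) to "test" or "control".
     *
     * @param testShare fraction of traffic assigned to the test group, between 0 and 1
     */
    static String bucketFor(String unitId, String experimentName, double testShare) {
        CRC32 crc = new CRC32();
        // Salting with the experiment name keeps buckets independent across experiments.
        crc.update((experimentName + ":" + unitId).getBytes(StandardCharsets.UTF_8));
        // Map the checksum to a position in [0, 1).
        double position = (crc.getValue() % 10_000) / 10_000.0;
        return position < testShare ? "test" : "control";
    }
}
```

Calling `bucketFor(sessionId, "featured-listing-personalization", 0.5)` would split traffic roughly evenly. The experiment name here is made up for illustration.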

Users who are not in any segment see the same listing in both buckets, so their data is excluded from the core analysis. This prevents diluting the measured effect. A power analysis, run before the test starts, determines how much traffic is needed to detect a chosen minimum uplift in clicks or views with adequate statistical power.
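A rough sample-size estimate for such a power analysis can use the standard two-sample formula n = 2 (z_alpha/2 + z_beta)^2 sigma^2 / delta^2 per group. The sketch below assumes a two-sided 5% significance level, 80% power, equal group sizes, equal variance, and an approximately normal per-session metric. `SampleSizeCalculator` is a hypothetical name, and the standard deviation and minimum detectable difference would come from historical data.

```java
final class SampleSizeCalculator {

    // Standard normal quantiles for a two-sided 5% test and 80% power.
    private static final double Z_ALPHA_TWO_SIDED_5_PERCENT = 1.96;
    private static final double Z_POWER_80_PERCENT = 0.8416;

    /**
     * Approximate number of sessions needed in each group to detect a difference
     * in means of at least minDetectableDiff, given the metric's standard deviation.
     */
    static long perGroup(double stdDev, double minDetectableDiff) {
        if (stdDev <= 0 || minDetectableDiff <= 0) {
            throw new IllegalArgumentException("stdDev and minDetectableDiff must be positive");
        }
        double z = Z_ALPHA_TWO_SIDED_5_PERCENT + Z_POWER_80_PERCENT;
        double n = 2 * z * z * stdDev * stdDev / (minDetectableDiff * minDetectableDiff);
        return (long) Math.ceil(n);
    }
}
```

For example, with a standard deviation of 1.0 views per session and a minimum detectable difference of 0.1 views, `perGroup(1.0, 0.1)` gives 1570 sessions per group. Halving the detectable difference roughly quadruples the required traffic.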

Monitoring and Model Evaluation

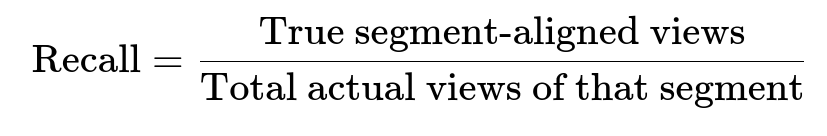

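The team tracks precision and recall of each segment to confirm that membership matches actual behavior. Precision is the share of views made by segment members that truly align with that segment. Recall is the share of all relevant views that are captured by the segment membership. Writing TP for matching views by segment members, FP for non-matching views by segment members, and FN for matching views by users outside the segment, the standard definitions are:

$$\text{precision} = \frac{TP}{TP + FP}, \qquad \text{recall} = \frac{TP}{TP + FN}$$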
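Both metrics reduce to three view counts per segment, which a monitoring job could compute as sketched here. `SegmentMetrics` is a hypothetical name, and the mapping of the counts to views (matching views by members, non-matching views by members, matching views by non-members) is one reading of the description above.

```java
final class SegmentMetrics {
    final long truePositives;   // matching views made by segment members
    final long falsePositives;  // non-matching views made by segment members
    final long falseNegatives;  // matching views made by users outside the segment

    SegmentMetrics(long truePositives, long falsePositives, long falseNegatives) {
        this.truePositives = truePositives;
        this.falsePositives = falsePositives;
        this.falseNegatives = falseNegatives;
    }

    /** TP / (TP + FP); returns 0 when the segment has no views at all. */
    double precision() {
        long denominator = truePositives + falsePositives;
        return denominator == 0 ? 0.0 : (double) truePositives / denominator;
    }

    /** TP / (TP + FN); returns 0 when there are no matching views at all. */
    double recall() {
        long denominator = truePositives + falseNegatives;
        return denominator == 0 ? 0.0 : (double) truePositives / denominator;
    }
}
```

With 80 matching and 20 non-matching views from electric-segment members, plus 120 electric views from non-members, precision is 0.8 and recall is 0.4.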

They also watch how often users in each segment see appropriate listings and how that correlates with engagement.

Example Code Snippet

Below is a simplified pseudo-Java approach for the Kafka consumer that updates user profiles. It illustrates how real-time event consumption might look:

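```java
import java.util.Map;

import org.springframework.kafka.annotation.KafkaListener;
import org.springframework.stereotype.Service;

// BehaviorEvent, UserProfile, UserProfileRepository, and SegmentAggregator are
// application types assumed to exist elsewhere; parseEvent is a JSON helper omitted here.
@Service
public class EventConsumer {

    private final UserProfileRepository userProfileRepository;
    private final SegmentAggregator aggregator;

    // Collaborators are injected once instead of being created per event.
    public EventConsumer(UserProfileRepository userProfileRepository,
                         SegmentAggregator aggregator) {
        this.userProfileRepository = userProfileRepository;
        this.aggregator = aggregator;
    }

    @KafkaListener(topics = "user-behavior-topic")
    public void consumeBehaviorEvent(String rawEvent) {
        // 1. Parse the incoming event JSON
        BehaviorEvent event = parseEvent(rawEvent);

        // 2. Identify or create the user profile
        UserProfile profile = userProfileRepository.findOrCreate(event.getUserId());

        // 3. Append the event to the user timeline (limit to 10 days)
        profile.appendEvent(event);

        // 4. Recompute the segments from the recent events
        Map<String, Boolean> newSegments = aggregator.computeSegments(profile.getRecentEvents());
        profile.setSegments(newSegments);

        // 5. Save the updated profile
        userProfileRepository.save(profile);
    }
}
```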

This code appends the event to the user’s recent activity record, calculates new segments, and stores the result.

Deployment and Scaling

The solution must handle thousands of events per second. The Kafka consumer and the storage backend (like Bigtable or another NoSQL store) must be sized for high write throughput. The gateway service must efficiently fetch profiles with low latency. Rolling out the AB test involves switching on personalization logic for a subset of traffic, verifying metrics, and eventually expanding if results are positive.

Next Steps

Adding more refined segments can further enrich personalization. Price-based or mileage-based segments are potential areas of improvement. Weighting multiple segments might improve results for users who like specific combinations. The team can then personalize more positions, not just the top listing, to optimize the entire search page.

Follow-Up Question 1

How would you prevent segment assignments from being overly sensitive to small changes in user behavior?

Answer: Smooth out short-term noise by applying a time-based or count-based minimum. Do not assign a user to a segment unless they have seen enough total ads or have demonstrated consistent preference for a specific segment over a period. Exponential smoothing or moving averages can help. This ensures minimal oscillation in segment membership from a single stray view.
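A minimal sketch of this idea combines a minimum view count with an exponentially smoothed match rate. `SmoothedSegment` and its parameters are hypothetical, and the smoothing factor, minimum count, and threshold would need tuning on real traffic.

```java
final class SmoothedSegment {
    private final double alpha;      // weight of the newest view, between 0 and 1
    private final int minViews;      // views required before membership is possible
    private final double threshold;  // smoothed match rate needed for membership
    private double score = 0.0;
    private int views = 0;

    SmoothedSegment(double alpha, int minViews, double threshold) {
        if (alpha <= 0 || alpha > 1) {
            throw new IllegalArgumentException("alpha must be in (0, 1]");
        }
        this.alpha = alpha;
        this.minViews = minViews;
        this.threshold = threshold;
    }

    /** Records one view; matches is true when the listing has the segment's attribute. */
    void observe(boolean matches) {
        double x = matches ? 1.0 : 0.0;
        // The first view seeds the average; later views are exponentially smoothed.
        score = (views == 0) ? x : alpha * x + (1 - alpha) * score;
        views++;
    }

    /** Membership requires both enough evidence and a high enough smoothed rate. */
    boolean isMember() {
        return views >= minViews && score >= threshold;
    }
}
```

With `alpha = 0.2`, a user with five consecutive electric views has a score of 1.0, and a single stray diesel view only lowers it to 0.8, so membership does not flip.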

Follow-Up Question 2

What if the user is in multiple segments, such as both electric and a specific body type, but you only have one slot to personalize?

Answer: Define a set of business rules or weighting to decide which segment is more important. If the system sees multiple segments, it can assign a score for each based on observed preference strength. The highest score wins. Alternatively, the platform can randomly choose a segment with a probability proportional to the user’s preference scores. Continuous experimentation can reveal which method yields better engagement metrics.
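Both strategies can be sketched over a map of segment name to preference score. `SegmentChooser` is a hypothetical name, and scores are assumed to be non-negative (for example, the fraction of recent views matching each segment).

```java
import java.util.Map;
import java.util.Optional;
import java.util.Random;

final class SegmentChooser {

    /** Highest score wins; empty when the user has no segments. */
    static Optional<String> strongest(Map<String, Double> scores) {
        return scores.entrySet().stream()
                .max(Map.Entry.comparingByValue())
                .map(Map.Entry::getKey);
    }

    /** Picks a segment with probability proportional to its (non-negative) score. */
    static Optional<String> sampleProportional(Map<String, Double> scores, Random rng) {
        double total = 0.0;
        for (double score : scores.values()) {
            total += score;
        }
        if (total <= 0) {
            return Optional.empty();
        }
        // Walk the segments, subtracting each score until the random draw is used up.
        double remaining = rng.nextDouble() * total;
        String last = null;
        for (Map.Entry<String, Double> entry : scores.entrySet()) {
            last = entry.getKey();
            remaining -= entry.getValue();
            if (remaining < 0) {
                return Optional.of(last);
            }
        }
        // Guards against floating-point rounding on the final segment.
        return Optional.ofNullable(last);
    }
}
```

The deterministic variant is easier to debug, while the sampling variant keeps exploring weaker segments, which can feed later experiments.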

Follow-Up Question 3

How would you handle privacy and compliance if user profiles have sensitive data?

Answer: Store only necessary attributes. Use pseudonymous IDs to separate personal information from browsing data. Let users opt out. Encrypt data at rest and in transit. Set retention policies to delete user history after a fixed period. Limit segmentation logic to non-sensitive categories like broad vehicle preferences, and avoid direct use of personally identifiable data in the modeling.

Follow-Up Question 4

How would you react if the AB test shows improvements in one segment but a drop in another?

Answer: Isolate each segment to see if a different threshold or logic is needed. Possibly refine the model for the segment that dropped. Test a multi-armed bandit approach or a separate AB test for each segment. Measure net benefit, then adjust or roll back the underperforming personalization logic. Gradually roll out changes to segments that show improvement.

Follow-Up Question 5

How can you accelerate model updates to support new segments or changes in threshold logic without disrupting production?

Answer: Externalize segment rules into a configuration file or a dedicated rules service. Allow dynamic updates so the consumer service picks up new threshold definitions. Maintain versioning for safe rollback. Use canary deployments to test new segments on a small fraction of traffic. Roll forward after confirming stability and performance gains.
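Versioned rules with rollback can be sketched as an in-memory registry. This is illustrative only: `SegmentRuleSet` and `SegmentRuleRegistry` are hypothetical names, and a real deployment would likely back the registry with a configuration store or rules service rather than process memory.

```java
import java.util.ArrayDeque;
import java.util.Collections;
import java.util.Deque;
import java.util.HashMap;
import java.util.Map;

/** Immutable snapshot of segment thresholds, tagged with a version number. */
final class SegmentRuleSet {
    final int version;
    final Map<String, Double> thresholds;

    SegmentRuleSet(int version, Map<String, Double> thresholds) {
        this.version = version;
        this.thresholds = Collections.unmodifiableMap(new HashMap<>(thresholds));
    }
}

/** Holds the published rule sets, newest on top, so a bad update can be rolled back. */
final class SegmentRuleRegistry {
    private final Deque<SegmentRuleSet> history = new ArrayDeque<>();
    private int nextVersion = 1;

    /** Publishes new thresholds as the current version. */
    synchronized SegmentRuleSet publish(Map<String, Double> thresholds) {
        SegmentRuleSet rules = new SegmentRuleSet(nextVersion++, thresholds);
        history.push(rules);
        return rules;
    }

    /** Returns the rule set the consumer should apply right now. */
    synchronized SegmentRuleSet current() {
        if (history.isEmpty()) {
            throw new IllegalStateException("no rules published yet");
        }
        return history.peek();
    }

    /** Discards the current version and reactivates the previous one. */
    synchronized SegmentRuleSet rollback() {
        if (history.size() < 2) {
            throw new IllegalStateException("no earlier version to roll back to");
        }
        history.pop();
        return history.peek();
    }
}
```

The consumer would read `current().thresholds` on each event, so publishing a new rule set changes behavior without a redeploy.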

Follow-Up Question 6

How would you integrate advanced models (like collaborative filtering or deep learning) if the simple segment-based approach has diminishing returns?

Answer: Prototype a more granular recommendation system that predicts each user’s preference scores for various attributes. Evaluate offline on historical data for predictive accuracy, then run an AB test online. Possibly continue segment-based personalization as a fallback if advanced models fail. Gradually ramp up advanced personalization if the real-time inference infrastructure is ready and cost-effective at scale.

Follow-Up Question 7

What would you do if the “Featured listing” inventory is limited or sponsored?

Answer: Build logic that only personalizes within the sponsored inventory. If the user’s segment leads to no viable sponsored listings, default to another relevant listing or random fallback. Track how often personalization cannot be honored, and consider expanding sponsored supply or providing alternative promotional slots based on user preferences.
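The selection and tracking described here can be sketched as follows. `SponsoredListing` and `SponsoredSelector` are hypothetical names, and matching on a single fuel-type attribute is a simplification.

```java
import java.util.List;
import java.util.Optional;

/** Hypothetical sponsored listing with the one attribute used for matching. */
final class SponsoredListing {
    final String id;
    final String fuelType;

    SponsoredListing(String id, String fuelType) {
        this.id = id;
        this.fuelType = fuelType;
    }
}

final class SponsoredSelector {
    private long unmetRequests = 0;

    /**
     * Returns the first sponsored listing that matches the preferred fuel type.
     * If none matches, counts the miss and falls back to the first sponsored listing.
     */
    Optional<SponsoredListing> select(List<SponsoredListing> sponsored, String preferredFuelType) {
        for (SponsoredListing listing : sponsored) {
            if (listing.fuelType.equals(preferredFuelType)) {
                return Optional.of(listing);
            }
        }
        unmetRequests++;
        return sponsored.stream().findFirst();
    }

    /** Number of requests where personalization could not be honored. */
    long unmetRequests() {
        return unmetRequests;
    }
}
```

A rising `unmetRequests()` count for a segment is a signal that sponsored supply for that segment is too thin.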

Follow-Up Question 8

How do you interpret precision and recall for segment-based personalization, and how do you act on them?

Answer: Precision measures how focused a segment is: the share of views by segment members that actually match the segment. Recall measures how many of the relevant views are captured within the segment. Low precision suggests the segment is too broad, so the threshold might be raised. Low recall suggests many relevant users are missed, so the threshold might be lowered or the logic refined to catch more valid users. Monitoring both reveals how effective a segment is and whether it needs tuning or new features.

Follow-Up Question 9

How would you mitigate negative impact if the personalization logic accidentally overrides user intentions?

Answer: Honor explicit user filters above any inferred segments. If they choose a specific fuel type or price range, never override that. Log overrides so you can detect if personalization is clashing with explicit choices. If conflicts happen frequently, refine the personalization to rely less on that segment or adjust thresholds. Clear user control and transparency can also improve trust and prevent frustration.

Follow-Up Question 10

How would you ensure continuous improvement and experimentation across different teams?

Answer: Adopt a systematic experimentation culture where each new segment or attribute-based personalization is tested behind feature flags. Maintain a central dashboard where teams can see test results. Encourage small, iterative experiments and share learnings in weekly or bi-weekly sync-ups. Standardize data definitions, segment naming, and code patterns so that future expansions remain consistent.

This approach unifies real-time event ingestion, user-profile building, segment assignment, query injection, and AB testing. It handles scale with a Kafka-based pipeline, a reliable NoSQL store, and microservices that fetch profile data to personalize searches. The final outcome is a robust, extensible personalization loop that remains safe by honoring explicit filters and verified by ongoing AB tests.