ML Case-study Interview Question: Standardizing Industry Classification with Retrieval-Augmented Generation and LLMs

Case-Study Question

A financial technology company maintained a custom internal industry classification system that produced inconsistent, broad, and sometimes incorrect labels. Different teams used different mappings, leading to confusion, inefficiencies, and errors. The company decided to standardize on a widely used six-digit classification system. They built an in-house Retrieval-Augmented Generation (RAG) model that took company profile data (e.g., textual descriptions, website information) as input, generated a set of relevant candidate codes, and then used a Large Language Model to pick the final label. How would you design and implement this classification model? How would you handle the old-to-new system migration, maintain data quality, and prove the model’s accuracy to stakeholders?

Detailed Solution

A standardized industry classification enables consistent labeling across the organization. Migrating from a proprietary schema to a recognized six-digit code system solves alignment issues and supports improved compliance, risk analysis, and product decisions.

The solution requires a robust approach to map each business entity to a consistent code. The company chose to build an in-house RAG model to handle data complexity and to keep full control over tuning, costs, and auditing.

RAG typically has two main stages. First, it retrieves relevant code candidates from a knowledge base of valid codes. Second, it uses a Large Language Model to select a final code from the retrieved set. The following sections detail how this works and why it helps.

Old vs. New Taxonomy

The old system relied on a patchwork of third-party data, user inputs, and manual processes. This produced inconsistent categories, made audits nearly impossible, and forced repeated “translation” to standard code systems, creating many opportunities for error.

Adopting a known six-digit standard solves these issues. The code definitions are hierarchical, so each code can be rolled up to broader levels or broken down to fine-grained levels. This flexibility allows the same code to be viewed at varying resolutions, satisfying multiple teams’ needs.
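The roll-up idea can be sketched in a few lines, assuming the hierarchy is encoded in the leading digits (a common convention for six-digit industry standards, though the exact level boundaries depend on the standard chosen). The function name and the sample codes are illustrative only.

```python
from typing import Dict, List


def roll_up(code: str, digits: int) -> str:
    """Return the leading `digits` characters of a six-digit code."""
    if len(code) != 6 or not code.isdigit():
        raise ValueError(f"expected a six-digit code, got {code!r}")
    if not 1 <= digits <= 6:
        raise ValueError("digits must be between 1 and 6")
    return code[:digits]


# Illustrative six-digit strings; codes are kept as strings, not integers.
codes = ["722511", "722513", "541511"]

# Group the fine-grained codes under their four-digit parent.
by_group: Dict[str, List[str]] = {}
for code in codes:
    by_group.setdefault(roll_up(code, 4), []).append(code)

print(by_group)
```

A risk team could report at two digits while a product team works at six, both reading from the same stored code.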

Building the Retrieval-Augmented Generation Pipeline

Stage One (Retrieval): Text embeddings transform the input (company names, descriptions, websites) and the code definitions into numeric vectors. The system measures similarity between these vectors. The top-k most similar codes become the set of candidates for the Large Language Model.

Stage Two (Generation): An LLM sees the retrieved candidate codes plus any relevant metadata. It makes the final selection, effectively answering a multiple-choice question. This constrains the LLM to valid codes and reduces the risk of irrelevant outputs.

Using a RAG pipeline keeps each module focused. The retrieval module is tuned to maximize the chance that the correct code is present in the top-k candidates. The LLM module is tuned to pick the best match from those candidates.
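As a rough illustration of the retrieval stage, the sketch below ranks codes by cosine similarity. Cosine similarity is an assumption here (the text only says "similarity"), the three-dimensional vectors stand in for real embeddings, and the function names and codes are made up for the example.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k_codes(
    query: Sequence[float],
    code_vectors: Dict[str, Sequence[float]],
    k: int,
) -> List[Tuple[str, float]]:
    """Return the k (code, score) pairs most similar to the query vector."""
    scored = [(code, cosine_similarity(query, vec)) for code, vec in code_vectors.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Toy vectors standing in for pre-computed code embeddings.
code_vectors = {
    "111111": [1.0, 0.0, 0.0],
    "222222": [0.0, 1.0, 0.0],
    "333333": [0.7, 0.7, 0.0],
}
candidates = top_k_codes([0.9, 0.1, 0.0], code_vectors, k=2)
print([code for code, _ in candidates])  # ['111111', '333333']
```

A production system would delegate this search to a vector database rather than scanning every code, but the ranking logic is the same.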

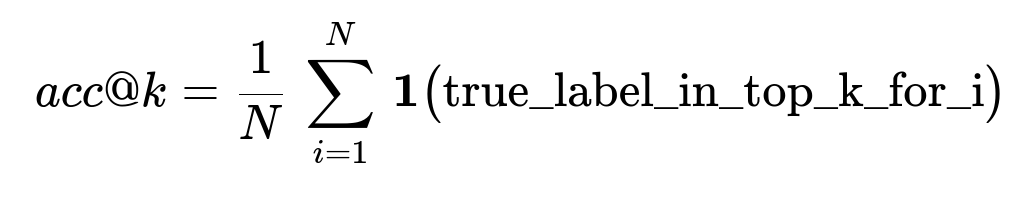

Key Evaluation Metrics

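Each stage needs its own metric. For the retrieval stage, many teams use accuracy at k (acc@k): if the true label is missing from the top-k candidates, the final prediction cannot be correct. The formal definition of acc@k can be expressed as:

$$\mathrm{acc@}k = \frac{1}{N} \sum_{i=1}^{N} \mathbb{1}\left[\, y_i \in \mathrm{top}_k(x_i) \,\right]$$

N is the total number of samples, $y_i$ is the true label of sample i, and $\mathrm{top}_k(x_i)$ is the set of k codes retrieved for its input $x_i$. For each sample i, the term inside the sum is 1 if the true label is in the top-k retrieved codes, or 0 otherwise.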

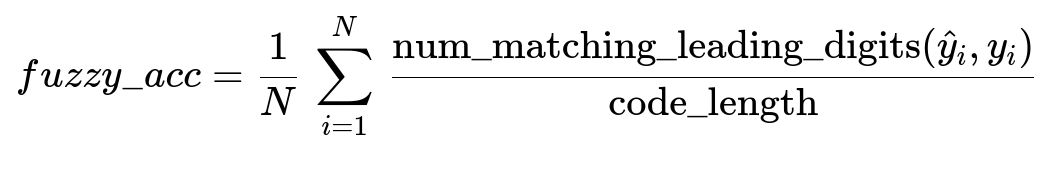
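The acc@k definition above translates directly into code. This is a small sketch with made-up codes; it assumes each sample's candidates are already sorted from most to least similar.

```python
from typing import Sequence


def accuracy_at_k(
    true_codes: Sequence[str],
    retrieved: Sequence[Sequence[str]],
    k: int,
) -> float:
    """Fraction of samples whose true code appears in the first k candidates."""
    if len(true_codes) != len(retrieved):
        raise ValueError("true_codes and retrieved must have the same length")
    if not true_codes:
        raise ValueError("need at least one sample")
    hits = sum(1 for y, cands in zip(true_codes, retrieved) if y in cands[:k])
    return hits / len(true_codes)


# Illustrative data: the true code sits at rank 1, 2, 3, and nowhere.
true_codes = ["111111", "222222", "333333", "444444"]
retrieved = [
    ["111111", "999999", "888888"],
    ["999999", "222222", "888888"],
    ["999999", "888888", "333333"],
    ["999999", "888888", "777777"],
]

for k in (1, 2, 3):
    print(k, accuracy_at_k(true_codes, retrieved, k))
# 1 0.25
# 2 0.5
# 3 0.75
```

The fourth sample never reaches the candidate list, so no choice of LLM or prompt can classify it correctly; acc@k is an upper bound on end-to-end accuracy.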

For the generation stage, a simple exact-match accuracy might not capture partial correctness for hierarchical codes. A fuzzy accuracy measure assigns partial credit in proportion to how many leading digits are correct. One way to define a fuzzy score is:

$$\mathrm{fuzzy\_acc} = \frac{1}{N} \sum_{i=1}^{N} \frac{\mathrm{prefix\_match}(\hat{y}_i, y_i)}{\mathrm{code\_length}}$$

Here, $\hat{y}_i$ is the predicted code, $y_i$ is the true code, prefix_match counts the leading digits on which the two agree, and code_length is 6. If the first four digits match but the last two do not, the score for that sample is 4/6.
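A minimal sketch of this fuzzy score, assuming partial credit equals the number of matching leading digits divided by the code length. The helper names and sample codes are illustrative.

```python
from typing import Sequence


def matching_prefix_length(predicted: str, true: str) -> int:
    """Count leading characters on which the two codes agree."""
    count = 0
    for p, t in zip(predicted, true):
        if p != t:
            break
        count += 1
    return count


def fuzzy_accuracy(
    predicted: Sequence[str],
    true: Sequence[str],
    code_length: int = 6,
) -> float:
    """Mean per-sample prefix credit, each in the range [0, 1]."""
    if len(predicted) != len(true) or not true:
        raise ValueError("inputs must be non-empty and of equal length")
    total = sum(
        matching_prefix_length(p, t) / code_length for p, t in zip(predicted, true)
    )
    return total / len(true)


# Per-sample scores: 6/6 (exact), 4/6 (first four digits match), 0/6.
predicted = ["722511", "722599", "541511"]
true = ["722511", "722511", "111111"]
print(round(fuzzy_accuracy(predicted, true), 4))  # 0.5556
```

Exact-match accuracy on the same data would be 1/3, which hides the fact that the second prediction landed in the right four-digit group.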

Implementation Details

Construct a knowledge base with each code’s label, a short text description, and potentially synonyms or extra references. Pre-compute embeddings for these codes once and store them in a high-performance database for vector similarity queries.

For each new business entity, embed its textual features and fetch the top-k closest matches. Provide those matches, plus minimal code definitions, to the LLM prompt. Ask the LLM to narrow the list to the few most relevant codes, then supply that shorter list plus full descriptions in a second prompt. The model selects the final label.

Example Python Snippet

```python
from openai import OpenAI  # openai>=1.0; the older openai.ChatCompletion API was removed

# Placeholder module for your vector database client.
from vector_db_client import get_top_k_codes

# embed_text, parse_llm_output, and fetch_detailed_definitions are helpers
# you must supply; they are not defined in this snippet.

client = OpenAI()  # reads OPENAI_API_KEY from the environment


def classify_business(business_text, k=5):
    # Stage 1: Retrieval
    business_embedding = embed_text(business_text)  # your embedding function
    candidate_codes = get_top_k_codes(business_embedding, k=k)

    # Stage 2: LLM ranking (prompt 1) over short labels
    prompt_1 = (
        "Below is a set of codes with short labels:\n"
        f"{candidate_codes}\n"
        "Return the 2 most relevant codes for this business:\n"
        f"{business_text}"
    )
    response_1 = client.chat.completions.create(
        model="gpt-4",
        messages=[{"role": "user", "content": prompt_1}],
    )
    # The reply text is at response_1.choices[0].message.content.
    narrowed_codes = parse_llm_output(response_1)

    # Stage 2: LLM final selection (prompt 2) over full definitions
    detailed_definitions = fetch_detailed_definitions(narrowed_codes)
    prompt_2 = (
        "Here are the final code candidates:\n"
        f"{detailed_definitions}\n"
        "Choose the single best match:\n"
        f"{business_text}"
    )
    response_2 = client.chat.completions.create(
        model="gpt-4",
        messages=[{"role": "user", "content": prompt_2}],
    )
    final_code = parse_llm_output(response_2)
    return final_code
```

The system logs intermediate outputs so data scientists can debug retrieval errors or see where the LLM might be overlooking the correct candidate.
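One possible shape for those logs is a per-entity trace record that captures the output of each stage. The sketch below is an assumption about what such a record could contain; the class, field, and function names are hypothetical. Given a known correct label, it also reports which stage lost the right answer.

```python
import json
from dataclasses import asdict, dataclass
from typing import List


@dataclass
class ClassificationTrace:
    entity_id: str
    candidate_codes: List[str]  # output of the retrieval stage
    narrowed_codes: List[str]   # output of prompt 1
    final_code: str             # output of prompt 2


def to_log_line(trace: ClassificationTrace) -> str:
    """Serialize the trace as one JSON line for an audit log."""
    return json.dumps(asdict(trace), sort_keys=True)


def failure_stage(trace: ClassificationTrace, true_code: str) -> str:
    """Name the first stage at which the true code was dropped, or 'none'."""
    if true_code not in trace.candidate_codes:
        return "retrieval"
    if true_code not in trace.narrowed_codes:
        return "narrowing"
    if trace.final_code != true_code:
        return "final_selection"
    return "none"


trace = ClassificationTrace(
    entity_id="biz-001",
    candidate_codes=["111111", "222222", "333333"],
    narrowed_codes=["222222", "333333"],
    final_code="333333",
)
print(to_log_line(trace))
print(failure_stage(trace, true_code="111111"))  # narrowing
```

Aggregating `failure_stage` over a labeled set shows whether effort is better spent on retrieval or on the prompts.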

Benefits of the New Model

Inconsistent and overly generic categories become specific codes. Teams unify around the same standard. Audits are easier because each classification decision is logged. The company can tune hyperparameters (embedding models, number of retrieved candidates, prompt length, etc.) to trade off cost, latency, and accuracy as business priorities shift.

Potential Follow-Up Questions

1) How do you handle businesses that span multiple industries?

A single code often dominates, but it can be too narrow for a multi-faceted company. One approach is to tell the LLM that multiple outputs can be valid and let it return a ranked list, or a primary code plus secondaries. However, the chosen classification standard might require a single primary code. If so, instruct the model to pick the code that best represents the largest revenue segment.

In practice, the official standard typically needs only the primary code. If extra detail is important, keep the additional valid codes that retrieval and the final prompt surface, and store them as secondary attributes.

2) How do you measure model performance in the absence of a definitive ground truth?

Many classification tasks lack perfect ground-truth labels. The best workaround is to build a curated dataset of known correct labels via domain experts. You can assemble a high-confidence set of business entities and their correct codes by cross-checking official records, consulting subject matter experts, or verifying from multiple trusted data sources. This set does not need to be huge, but it should be representative of the range of industries you serve.

Once you have a reliable labeled dataset, measure acc@k and fuzzy accuracy. Monitor performance on a rolling basis by sampling new classifications for manual validation. Even if ground truth is fuzzy, some approximation is better than none. Over time, refine that dataset to keep pace with emerging business types.

3) How do you handle changes to the classification standard over time?

Standards like this are revised periodically: new codes can be released and existing ones merged or retired. Update the knowledge base by adding the new code definitions and removing obsolete ones, then recompute embeddings for any changed codes. If codes are removed or merged, map them to the new official code. For existing businesses, re-run the updated retrieval stage; their own embeddings need recomputing only if the input text or the embedding model has changed. If the final chosen code changes, log the reason for auditing. Monitor newly introduced codes closely to confirm their definitions give the LLM enough context.
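A taxonomy revision can be handled as a diff between two versions of the knowledge base. The sketch below assumes each version is a mapping from code to definition text and that merges are supplied as a separate old-to-new mapping; all names and codes are hypothetical.

```python
from typing import Dict, List


def diff_taxonomy(old: Dict[str, str], new: Dict[str, str]) -> Dict[str, List[str]]:
    """Classify codes as added, removed, or changed between two versions."""
    return {
        "added": sorted(new.keys() - old.keys()),
        "removed": sorted(old.keys() - new.keys()),
        "changed": sorted(c for c in old.keys() & new.keys() if old[c] != new[c]),
    }


def codes_to_reembed(diff: Dict[str, List[str]]) -> List[str]:
    """Only new or reworded definitions need fresh embeddings."""
    return sorted(diff["added"] + diff["changed"])


def remap_code(code: str, merged_into: Dict[str, str]) -> str:
    """Follow merge links until reaching a code that is not itself merged."""
    seen = set()
    while code in merged_into and code not in seen:
        seen.add(code)
        code = merged_into[code]
    return code


old = {"111111": "A", "222222": "B", "333333": "C"}
new = {"111111": "A", "222222": "B (revised wording)", "444444": "D"}
merged_into = {"333333": "444444"}  # 333333 was folded into 444444

diff = diff_taxonomy(old, new)
print(diff)
print(codes_to_reembed(diff))          # ['222222', '444444']
print(remap_code("333333", merged_into))  # 444444
```

Businesses currently assigned a removed or changed code are the natural first batch to re-run through retrieval.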

4) How do you deal with hallucinations if the LLM picks a valid-looking code that is not in the top-k retrieved list?

You can add a post-processing check. If the LLM proposes a code that was not in the candidate list, validate that it exists in the official taxonomy. If the code is valid but was missed by the retrieval step, flag the retrieval shortcoming and consider re-running retrieval with different parameters. If the code is invalid, reject it and fall back on a candidate from the top-k set, such as the highest-ranked one, or re-prompt the model. This ensures only valid codes pass through. Logging these events helps refine retrieval over time.
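The check described above might look like the following sketch. Two choices here are assumptions rather than part of the original design: a valid code that retrieval missed is accepted but flagged, and the fallback for an invalid code is the highest-ranked candidate. The function and status names are hypothetical.

```python
from typing import List, Set, Tuple


def validate_prediction(
    predicted: str,
    candidates: List[str],
    taxonomy: Set[str],
) -> Tuple[str, str]:
    """Return (code_to_use, status) after checking the LLM's answer."""
    if not candidates:
        raise ValueError("candidates must not be empty")
    if predicted in candidates:
        return predicted, "ok"
    if predicted in taxonomy:
        # Valid code that retrieval failed to surface: keep it, but flag it
        # so the retrieval configuration can be reviewed.
        return predicted, "retrieval_miss"
    # Not a real code: reject and fall back to the top-ranked candidate.
    return candidates[0], "invalid_fallback"


taxonomy = {"111111", "222222", "333333", "444444"}
candidates = ["111111", "222222"]

print(validate_prediction("222222", candidates, taxonomy))  # ('222222', 'ok')
print(validate_prediction("444444", candidates, taxonomy))  # ('444444', 'retrieval_miss')
print(validate_prediction("123456", candidates, taxonomy))  # ('111111', 'invalid_fallback')
```

Counting each status over time gives a simple health signal: a rising share of `retrieval_miss` points at the retrieval stage, while `invalid_fallback` points at the prompt.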

5) How do you approach optimizing hyperparameters in the retrieval stage?

Collect a labeled dataset and systematically vary retrieval parameters. Test which fields to embed (business name vs. name + website snippet vs. name + short description), experiment with different embedding models, and test different values of k. Plot acc@k curves for each combination. Identify the smallest k that includes the correct label at a high rate. Keep resource constraints in mind. If a model’s performance is only slightly better but costs far more, weigh that carefully.

After you narrow down a handful of strong configurations, run them through the generation stage to measure overall accuracy. The best retrieval approach might not be the one with the highest raw acc@k, because the LLM can be overwhelmed if you pass too many options. The final selection depends on cost, latency, and downstream performance.
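To make the "smallest k" search concrete, here is a small sketch that compares two retrieval configurations on the same labeled set. The configuration names, the ranked lists, and the target values are invented for illustration.

```python
from typing import Optional, Sequence


def acc_at_k(true_codes: Sequence[str], ranked: Sequence[Sequence[str]], k: int) -> float:
    """Fraction of samples whose true code is within the first k candidates."""
    hits = sum(1 for y, cands in zip(true_codes, ranked) if y in cands[:k])
    return hits / len(true_codes)


def smallest_k_meeting_target(
    true_codes: Sequence[str],
    ranked: Sequence[Sequence[str]],
    target: float,
    max_k: int,
) -> Optional[int]:
    """Smallest k in 1..max_k with acc@k >= target, or None if none qualifies."""
    for k in range(1, max_k + 1):
        if acc_at_k(true_codes, ranked, k) >= target:
            return k
    return None


true_codes = ["111111", "222222", "333333", "444444"]
runs = {
    "name_only": [
        ["999999", "888888", "111111"],
        ["999999", "222222", "888888"],
        ["999999", "888888", "777777"],
        ["444444", "888888", "777777"],
    ],
    "name_plus_description": [
        ["111111", "999999", "888888"],
        ["222222", "999999", "888888"],
        ["999999", "333333", "888888"],
        ["444444", "888888", "777777"],
    ],
}

for name, ranked in runs.items():
    print(name, smallest_k_meeting_target(true_codes, ranked, target=0.75, max_k=3))
# name_only 3
# name_plus_description 1
```

In this toy data the richer input reaches the same retrieval accuracy with a third of the candidates, which means a shorter and cheaper LLM prompt downstream.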

6) How do you reduce cost if you are calling LLM endpoints for every classification?

Limit the number of calls by caching repeated results for common business descriptions. If many entities share similar patterns, re-use embeddings. Use smaller language models for the first pass in the two-step prompting, or reduce the number of recommended codes. The most expensive step is typically an LLM call with long context. By narrowing the candidate list before the final prompt, you reduce token usage. Also consider on-premises or fine-tuned open-source models if volumes grow large.
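The caching idea can be sketched as a thin wrapper around whatever function performs the full classification. The normalization rule (lowercase, collapsed whitespace), the in-memory dictionary, and the class name are assumptions for illustration; a real deployment would likely use a shared cache with an expiry policy.

```python
import hashlib
from typing import Callable, Dict


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial variants share a key."""
    return " ".join(text.lower().split())


class CachedClassifier:
    """Wraps a classification function and memoizes results by input text."""

    def __init__(self, classify_fn: Callable[[str], str]) -> None:
        self._classify_fn = classify_fn
        self._cache: Dict[str, str] = {}
        self.hits = 0
        self.misses = 0

    def classify(self, business_text: str) -> str:
        key = hashlib.sha256(normalize(business_text).encode("utf-8")).hexdigest()
        if key in self._cache:
            self.hits += 1
        else:
            self.misses += 1
            self._cache[key] = self._classify_fn(business_text)
        return self._cache[key]


def stub_classifier(text: str) -> str:
    """Stand-in for the expensive retrieval + LLM pipeline."""
    return "111111"


cached = CachedClassifier(stub_classifier)
cached.classify("Joe's  Pizza")
cached.classify("joe's pizza ")
print(cached.hits, cached.misses)  # 1 1
```

The cache must be invalidated whenever the taxonomy, prompts, or models change, otherwise stale labels will keep being served.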

7) What if a third-party solution or off-the-shelf API is available? Why build in-house?

An off-the-shelf system is faster to deploy, but you may lose fine-grained control. If the cost is high or the updates are slow, that might constrain your roadmap. An in-house solution can tune each aspect of retrieval and generation. You can customize fuzzy accuracy and business logic, and you can add special constraints on certain industries. The tradeoff is the engineering overhead and maintenance. Some organizations start with a third-party solution, measure ROI, and eventually build in-house if the benefits of customization outweigh the costs.

Final Thoughts

An in-house RAG pipeline aligned on a standard six-digit classification code can unify how you describe your customers and unlock consistent insight for compliance, analytics, and product. Tuning the retrieval and generation parameters lets you balance accuracy against cost and latency. By storing full logs of retrieval scores, final predictions, and LLM justifications, you can continually improve your classification system and maintain full auditing capability. This standardized approach fosters better collaboration, more reliable reporting, and stronger customer understanding.