ML Case-study Interview Question: Optimizing Push Notifications via Uplift Modeling to Maintain Engagement


Case-Study question

A major photo-sharing platform wants to improve its push notifications about newly posted content. The existing click-through rate model selects notifications to send based on predicted click likelihood. Highly active users often receive many notifications but still view the same content organically. The company wants to reduce notifications for these highly active users without decreasing overall engagement. Formulate a plan to identify which users should receive fewer notifications while maintaining content views, propose a machine learning strategy to evaluate incremental impact of notifications, and describe how to implement an online decision system that controls the sending rate under a fixed budget.

Detailed solution

The solution requires causal inference combined with machine learning uplift modeling to identify user groups who will remain active without frequent notifications. The plan is to estimate the incremental effect of sending each notification versus dropping it, then use that estimate to decide who should receive notifications.

Core causal inference concept

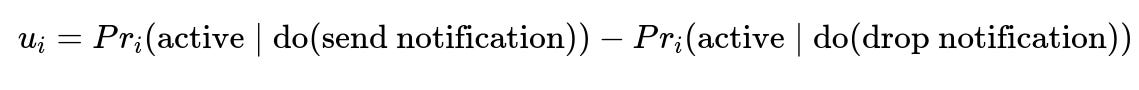

Here:

i is the user index.

Pr_i(active | do(send notification)) is the probability that user i remains active if the notification is sent.

Pr_i(active | do(drop notification)) is the probability that user i remains active if the notification is dropped.

u_i measures the incremental value of sending the notification to that user.

Users with small incremental values are likely to remain active whether or not they receive a notification, so dropping notifications for them cuts volume with little loss in engagement.
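A small worked example of the formula, using made-up probabilities for two hypothetical users (not measured data). It shows why ranking by uplift differs from ranking by click likelihood: the highly active user has the higher probability of being active when a notification is sent, but the lower incremental value.

```python
# Hypothetical per-user probabilities of staying active (illustrative numbers only).
users = {
    "highly_active": {"p_send": 0.95, "p_drop": 0.93},
    "occasional": {"p_send": 0.40, "p_drop": 0.25},
}


def uplift(p_send: float, p_drop: float) -> float:
    """u_i = Pr_i(active | do(send)) - Pr_i(active | do(drop))."""
    return p_send - p_drop


scores = {name: uplift(**p) for name, p in users.items()}
# Rank users by incremental value, highest first.
ranked = sorted(scores, key=scores.get, reverse=True)
print(ranked)  # ['occasional', 'highly_active']
```

A model that ranks by p_send would favor the highly active user; ranking by u_i favors the occasional user, which is the behavior the case study asks for.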

Constructing the uplift model

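A randomized experiment is set up: each eligible notification is randomly sent or dropped with a fixed probability, and user activity is then measured. Because assignment is random, the difference in activity between sent and dropped notifications estimates the causal effect of sending. This data is used to train a neural network that predicts the incremental value u_i for each potential notification, that is, how much sending raises the chance that the user stays active compared with dropping.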
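The sketch below simulates randomized send/drop logs and estimates uplift per engagement bucket as a difference in means between the sent and dropped arms. This stratified estimator is a deliberately simple stand-in for the neural network described above, not the case study's model; the buckets, base rates, and lifts are hypothetical values used only to generate synthetic logs.

```python
import random
from collections import defaultdict

random.seed(42)

# Hypothetical ground truth, used only to simulate logs.
BASE = {"low": 0.30, "mid": 0.60, "high": 0.90}  # P(active) if the notification is dropped
LIFT = {"low": 0.15, "mid": 0.06, "high": 0.01}  # extra P(active) if it is sent
BUCKETS = list(BASE)
SEND_PROB = 0.5  # fixed randomization probability


def simulate_log(n: int):
    """Return (bucket, sent, active) tuples from a randomized send/drop experiment."""
    log = []
    for _ in range(n):
        bucket = random.choice(BUCKETS)
        sent = random.random() < SEND_PROB  # random treatment assignment
        p_active = BASE[bucket] + (LIFT[bucket] if sent else 0.0)
        log.append((bucket, sent, random.random() < p_active))
    return log


def estimate_uplift(log):
    """Difference in activity rate between sent and dropped arms, per bucket."""
    counts = defaultdict(lambda: {True: [0, 0], False: [0, 0]})  # [num_active, num_total]
    for bucket, sent, active in log:
        c = counts[bucket][sent]
        c[0] += active
        c[1] += 1
    return {
        b: c[True][0] / c[True][1] - c[False][0] / c[False][1]
        for b, c in counts.items()
    }


uplift_by_bucket = estimate_uplift(simulate_log(200_000))
print(uplift_by_bucket)
```

A neural network replaces the coarse buckets with a learned function of many user features, but the training signal is the same randomized contrast between sent and dropped notifications.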

Online decision system

Notifications are generated and scored in real time. Raw uplift scores can change scale when the model is retrained or user behavior shifts, so the system first maps each score to an approximately uniform value on [0, 1] using the quantiles of recent scores. To keep a stable sending rate r, it sends the notification when the transformed score exceeds 1 - r and drops it otherwise; because the transformed scores are uniform, this sends a fraction r of notifications. This avoids sudden spikes or drops in volume when model outputs shift.
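A minimal sketch of the score transformation and send decision, assuming the quantile transform is the empirical CDF over a sliding window of recent raw scores. The class name `QuantileGate`, the window size, and the exponential score distribution in the usage example are hypothetical choices for illustration.

```python
import bisect
import random
from collections import deque


class QuantileGate:
    """Map raw uplift scores to ~Uniform[0, 1] via the empirical CDF of recent
    scores, then send when the transformed score exceeds 1 - r."""

    def __init__(self, window_size: int = 5000):
        self.window = deque()      # recent scores in arrival order
        self.sorted_scores = []    # the same scores, kept sorted for quantile lookup
        self.window_size = window_size

    def observe(self, score: float) -> None:
        self.window.append(score)
        bisect.insort(self.sorted_scores, score)
        if len(self.window) > self.window_size:
            oldest = self.window.popleft()
            self.sorted_scores.pop(bisect.bisect_left(self.sorted_scores, oldest))

    def transform(self, score: float) -> float:
        # Empirical CDF: fraction of recent scores <= score.
        if not self.sorted_scores:
            return 0.5
        return bisect.bisect_right(self.sorted_scores, score) / len(self.sorted_scores)

    def decide(self, score: float, send_rate: float) -> bool:
        u = self.transform(score)
        self.observe(score)
        # If u ~ Uniform[0, 1], then P(u > 1 - r) = r.
        return u > 1.0 - send_rate


random.seed(0)
gate = QuantileGate(window_size=5000)
for _ in range(5000):  # warm up on historical scores
    gate.observe(random.expovariate(10.0))
decisions = [gate.decide(random.expovariate(10.0), send_rate=0.3) for _ in range(20000)]
rate = sum(decisions) / len(decisions)
print(f"observed send rate: {rate:.3f}")
```

Because the threshold is defined on ranks rather than raw values, rescaling every model score (for example after retraining) leaves the sending rate near r once the window refills with new scores. A production service would likely use a streaming quantile sketch instead of a sorted list.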

Implementation details

Create a separate experiment layer that randomly drops notifications for a fraction of the user population. Log whether each notification was sent or dropped, along with the user's subsequent actions. Train a neural network on user features (historical engagement, session length, etc.) to predict the incremental value u_i. Add an online quantile transformation service that maps raw model scores to an approximately uniform distribution on [0, 1]. Set the sending threshold from the desired sending rate r: with uniform scores, sending when the transformed score exceeds 1 - r sends a fraction r of notifications.
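One way to build the experiment layer is deterministic hash-based bucketing, sketched below under stated assumptions: the salt, the 10% layer fraction, the 50% drop probability, and the log-record fields are all hypothetical. Hashing makes assignments reproducible, so the logged send/drop label can later be joined with the user's activity outcome.

```python
import hashlib
import json

LAYER_SALT = "notif-holdout-v1"  # hypothetical experiment-layer salt
LAYER_FRACTION = 0.10            # assumed share of users in the randomized layer
DROP_PROB = 0.5                  # assumed drop probability inside the layer


def unit_hash(*parts: str) -> float:
    """Deterministically map string parts to a float in [0, 1)."""
    digest = hashlib.sha256("|".join(parts).encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") / 2**64


def assign(user_id: str, notification_id: str) -> dict:
    """Return a log record with the user's layer membership and send/drop decision."""
    in_layer = unit_hash(LAYER_SALT, user_id) < LAYER_FRACTION
    if in_layer:
        # Randomize per notification, independently of user features.
        sent = unit_hash(LAYER_SALT, user_id, notification_id) >= DROP_PROB
    else:
        sent = True  # outside the layer the production policy decides (stubbed as send)
    return {"user_id": user_id, "notification_id": notification_id,
            "in_layer": in_layer, "sent": sent}


print(json.dumps(assign("user_123", "notif_456")))
```

Keeping the layer salt separate from other experiments' salts keeps this randomization independent of concurrent tests.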

Model performance is measured by overall engagement and resource usage. Reducing notifications for users who engage anyway yields better user experience and less system overhead.

How would you ensure the uplift model is correctly identifying incremental value?

Uplift modeling relies on randomized experiments, so verify that send/drop assignment is truly random across user segments and independent of user features. Train and evaluate uplift predictions on this randomized data, with enough samples in both the send and drop arms. Compare predicted incremental values with observed differences in activity between sent and dropped notifications. Calibrate model outputs to match the average uplift measured in holdout experiments. Check that groups with high estimated uplift show a larger observed gap in activity between sent and dropped notifications than groups with low estimated uplift.
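A sketch of a binned uplift calibration check: sort holdout notifications by predicted uplift, split them into bins, and compare each bin's mean prediction with its observed sent-minus-dropped activity rate. The synthetic data is hypothetical, and the "model" here is an oracle that predicts the true uplift, so the two columns should roughly agree; with a real model, large gaps or a non-increasing observed column would signal a problem.

```python
import random


def uplift_calibration(pred, sent, active, n_bins=5):
    """Per-bin (mean predicted uplift, observed uplift) on randomized holdout data."""
    order = sorted(range(len(pred)), key=lambda i: pred[i])
    size = len(order) // n_bins
    rows = []
    for b in range(n_bins):
        idx = order[b * size:(b + 1) * size] if b < n_bins - 1 else order[b * size:]
        t = [active[i] for i in idx if sent[i]]      # sent arm
        c = [active[i] for i in idx if not sent[i]]  # dropped arm
        observed = sum(t) / len(t) - sum(c) / len(c)
        predicted = sum(pred[i] for i in idx) / len(idx)
        rows.append((predicted, observed))
    return rows


random.seed(7)
n = 100_000
true_uplift = [random.uniform(0.0, 0.2) for _ in range(n)]  # hypothetical
sent = [random.random() < 0.5 for _ in range(n)]
active = [random.random() < 0.5 + (u if s else 0.0) for u, s in zip(true_uplift, sent)]
for predicted, observed in uplift_calibration(true_uplift, sent, active):
    print(f"predicted={predicted:.3f} observed={observed:.3f}")
```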

How would you handle a shift in user behavior or drift in model predictions over time?

Retrain the model on fresh data whenever user behavior changes significantly. Implement continuous evaluation that checks consistency between predicted and observed engagement lift on the randomized holdout. If the gap grows, retrain the uplift model or fine-tune selected layers of the neural network on recent data. Keep the quantile transformation fitted on recent scores so the sending rate stays stable as the score distribution shifts.
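A minimal drift check, assuming daily counts from the randomized holdout are available: it compares the observed sent-minus-dropped activity rate with the model's mean predicted uplift over the same notifications and flags retraining when the z-score is large. The function name, the counts, and the threshold of 3 are hypothetical.

```python
import math


def lift_drift_check(pred_mean, n_sent, active_sent, n_dropped, active_dropped,
                     z_threshold=3.0):
    """Flag drift when observed holdout lift is inconsistent with mean predicted lift."""
    p_t = active_sent / n_sent
    p_c = active_dropped / n_dropped
    observed = p_t - p_c
    se = math.sqrt(p_t * (1 - p_t) / n_sent + p_c * (1 - p_c) / n_dropped)
    z = (observed - pred_mean) / se
    return {"observed_lift": observed, "z": z, "retrain": abs(z) > z_threshold}


# Hypothetical daily holdout counts.
print(lift_drift_check(pred_mean=0.05, n_sent=20000, active_sent=12000,
                       n_dropped=20000, active_dropped=11000))
print(lift_drift_check(pred_mean=0.05, n_sent=20000, active_sent=12000,
                       n_dropped=20000, active_dropped=11800))
```

The test treats the predicted mean as fixed and ignores its own uncertainty, which is reasonable when it averages over many notifications; running it per segment helps localize where behavior changed.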

What if certain cohorts are too small for randomization to give reliable uplift estimates?

Combine uplift modeling with domain knowledge. For sparse cohorts, cluster users with similar engagement patterns and pool their data. Increase the share of randomized traffic for small cohorts, or use Bayesian hierarchical modeling to borrow statistical strength from related cohorts. Validate that smaller segments receive accurate incremental value estimates before applying large-scale changes.
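The simplest form of borrowing strength is normal-normal partial pooling, sketched here: each cohort's raw uplift estimate is pulled toward a shared prior mean, with noisier (smaller) cohorts pulled harder. The prior mean and prior variance are assumed fixed for illustration; a full hierarchical model would estimate them from all cohorts, and the cohort numbers below are hypothetical.

```python
def shrink_cohort_uplift(estimates, variances, prior_mean, prior_var):
    """Posterior mean of each cohort's uplift under a normal prior (partial pooling)."""
    shrunk = {}
    for cohort, est in estimates.items():
        v = variances[cohort]               # sampling variance of the cohort's estimate
        w = prior_var / (prior_var + v)     # weight on the cohort's own estimate
        shrunk[cohort] = w * est + (1 - w) * prior_mean
    return shrunk


# Hypothetical: a large cohort with a precise estimate, a small one with a noisy estimate.
estimates = {"large": 0.08, "small": 0.30}
variances = {"large": 0.00001, "small": 0.01}
shrunk = shrink_cohort_uplift(estimates, variances, prior_mean=0.05, prior_var=0.001)
print(shrunk)
```

For a difference-in-means estimate, the sampling variance is p_t(1 - p_t)/n_t + p_c(1 - p_c)/n_c, so it shrinks as the cohort's randomized traffic grows.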

How would you integrate cost or budget constraints more dynamically?

Introduce a continuous cost feedback loop. If the system is near its daily notification budget, raise the threshold so fewer notifications are sent. If the system has unused budget, lower the threshold to send more notifications. Monitor cost and user response data in real time. This keeps notification volume matched to both the budget and the engagement impact.
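A sketch of a proportional pacing controller that adjusts the target sending rate r; raising the threshold 1 - r is the same as lowering r. It assumes spend should grow uniformly over the day, which is a simplification (a real system might pace against a historical traffic curve), and the gain and bounds are hypothetical.

```python
def update_send_rate(rate, sent_so_far, daily_budget, elapsed_fraction,
                     gain=0.5, min_rate=0.01, max_rate=1.0):
    """Nudge the target send rate r toward the daily budget (proportional control)."""
    target_spend = daily_budget * elapsed_fraction  # assumes uniform pacing over the day
    if target_spend <= 0:
        return rate
    error = (target_spend - sent_so_far) / target_spend  # > 0 under budget, < 0 over
    new_rate = rate * (1 + gain * error)
    return min(max_rate, max(min_rate, new_rate))


# Halfway through the day with a budget of 1M notifications:
print(update_send_rate(0.3, 600_000, 1_000_000, 0.5))  # ahead of pace: r drops
print(update_send_rate(0.3, 400_000, 1_000_000, 0.5))  # behind pace: r rises
```

The updated r can feed straight into the quantile-based send decision, so budget control and uplift ranking stay decoupled.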

How do you measure success beyond just engagement metrics?

Track user retention, session duration, complaint rate, and unsubscribes. Reduced notification volume with stable or increasing user activity indicates a better user experience. Track resource savings such as fewer pushes and server calls. Compare user satisfaction survey results between the reduced-notification group and a random holdout to confirm that fewer notifications do not harm perceived value.
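Guardrail metrics such as unsubscribe or complaint rate can be compared between the reduced-notification arm and a random holdout with a standard two-proportion z-test, sketched below. The counts are hypothetical.

```python
import math


def two_proportion_ztest(x_a, n_a, x_b, n_b):
    """Two-sided z-test for a difference in rates between arm A and arm B."""
    p_a, p_b = x_a / n_a, x_b / n_b
    pooled = (x_a + x_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_a - p_b) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal tail probability
    return p_a - p_b, z, p_value


# Hypothetical unsubscribes: reduced-notification arm vs. holdout, 100k users each.
diff, z, p = two_proportion_ztest(480, 100_000, 500, 100_000)
print(f"diff={diff:.5f} z={z:.2f} p={p:.3f}")
```

A non-significant result is not proof of no harm; for guardrails, a non-inferiority margin or a confidence interval on the difference is often more informative than the p-value alone.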