ML Case-study Interview Question: Personalized Fashion Recommendations from Images Using Hybrid Embeddings and Computer Vision

Case-Study question

A major online retailer wants to build a feature that shows community-generated outfit images to users, then recommends items related to those images. The images serve as a personalized search query. The challenge is to detect clothing items in each uploaded photo, map them to known catalog items, and then produce a list of recommendations that balance item-image similarity with user affinity. How would you design and implement this system, including the underlying models, data pipelines, and ranking logic, to ensure relevant and personalized suggestions?

Detailed solution approach

Use a computer vision pipeline to detect and segment each garment in the community image. Map each detected garment to anchor items in the catalog by comparing visual attributes. Each image thus becomes a set of item sets: one set of catalog anchors per detected garment.

Represent both users and items in a shared embedding space that captures style preferences and item similarity. Each anchor item has a latent embedding. Averaging the anchor embeddings for each detected garment yields a single per-garment embedding and reduces noise from imperfect detection. Compute a per-garment score against the user embedding (for example, a dot product), then take the maximum across all detected garments to obtain the image’s overall score.

image_score = max({object_score_1, object_score_2, ...})

object_score_i indicates how much a user is predicted to like garment i in the context of that image.

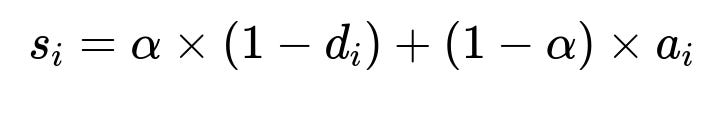

Generate candidate items for each anchor item by looking up its nearest neighbors in a hybrid embedding space that combines image-similarity signals with user-preference signals. Each candidate item i has a distance d_i (its similarity to the anchor) and an affinity score a_i (the user’s predicted preference). Rank items with a multi-objective function:

s_i = alpha * (1 - d_i) + (1 - alpha) * a_i

Here d_i is the distance in hybrid space (lower is better), a_i is the user’s predicted affinity (higher is better), and alpha is a weight between 0 and 1 controlling the trade-off between relevance to the image and user preference. The (1 - d_i) term presumes distances are scaled to [0, 1].

Select top candidates based on s_i while also enforcing diversity constraints on categories or other item features to avoid repetitive results. Deduplicate identical items that appear under multiple anchors by keeping whichever anchor yields the smallest distance.

Respect user constraints such as size, style feedback, and prior purchase data. After final scoring, present a curated list of items most aligned with both the user’s style and the uploaded image’s context.
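The constraint step can be sketched as a simple post-ranking filter. This is a minimal illustration, not the retailer's actual logic: the `Item` and `UserProfile` records, their field names, and the choice to drop already-purchased items are all assumptions made for the example.

```python
from dataclasses import dataclass, field

@dataclass
class Item:  # hypothetical catalog record
    item_id: str
    sizes: set
    style: str

@dataclass
class UserProfile:  # hypothetical user record
    size: str
    purchased: set = field(default_factory=set)
    disliked_styles: set = field(default_factory=set)

def apply_user_constraints(ranked, catalog, user):
    """Drop ranked (item_id, score) pairs that violate hard user constraints."""
    kept = []
    for item_id, score in ranked:
        item = catalog[item_id]
        if user.size not in item.sizes:
            continue  # not available in the user's size
        if item_id in user.purchased:
            continue  # assumption: do not re-recommend owned items
        if item.style in user.disliked_styles:
            continue  # explicit negative style feedback
        kept.append((item_id, score))
    return kept
```

Because the filter preserves input order, it can run either before or after diversification without re-sorting.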

Combine all stages into a system that, when a new image arrives, processes it in real time or near real time. For offline modeling, regularly retrain embeddings to reflect changing fashion trends, new items, and new user data. Serve results through an application programming interface that merges the final ranked list into the user’s browsing or shopping experience.

A minimal Python-style code outline for the retrieval and ranking might look like this:

    import numpy as np

    # find_knn, distance, and diversify are assumed helpers defined elsewhere
    # (ANN index lookup, distance metric, and diversity re-ranking).

    def compute_image_score(detected_items, item_embedding_dict, user_emb):
        # detected_items holds one list of anchor item IDs per detected garment.
        object_embeddings = []
        for items_in_one_garment in detected_items:
            garment_embs = [item_embedding_dict[item_id] for item_id in items_in_one_garment]
            # Average the anchors to dampen noise from imperfect detection.
            garment_average = np.mean(garment_embs, axis=0)
            object_embeddings.append(garment_average)
        # The image score is the best per-garment score for this user.
        image_score = max(user_emb.dot(obj_emb) for obj_emb in object_embeddings)
        return image_score

    def retrieve_candidates(anchors, hybrid_embedding_dict, user_affinity_func, alpha):
        all_candidates = {}
        for anchor in anchors:
            anchor_emb = hybrid_embedding_dict[anchor]
            neighbors = find_knn(anchor_emb)  # precomputed index
            for candidate in neighbors:
                d_i = distance(anchor_emb, hybrid_embedding_dict[candidate])
                a_i = user_affinity_func(candidate)
                # Deduplicate: keep whichever anchor yields the smallest distance.
                if candidate not in all_candidates or d_i < all_candidates[candidate][0]:
                    all_candidates[candidate] = (d_i, a_i)

        ranked = []
        for candidate, (d_i, a_i) in all_candidates.items():
            s_i = alpha * (1 - d_i) + (1 - alpha) * a_i
            ranked.append((candidate, s_i))
        ranked.sort(key=lambda x: x[1], reverse=True)
        return diversify(ranked)

Size preference checks and more advanced deduplication would be integrated into these steps. The diversify function would enforce category-level or brand-level spread.

How do you handle imperfect image detection?

Computer vision sometimes misidentifies garments or misses them entirely. Averaging anchor embeddings for each garment cluster mitigates random errors, because outliers get dampened. If misdetections frequently occur, train or fine-tune the detection model with more domain-specific data. Incorporate robust fallback logic. For instance, if detection confidence is below a threshold, skip that region or reduce its weight.
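One way to combine the averaging and the fallback logic is a confidence-weighted mean that skips unreliable matches. The sketch below assumes each anchor match comes with a confidence in [0, 1]; the function name `garment_embedding` and the default threshold `min_conf=0.5` are hypothetical choices for illustration.

```python
import numpy as np

def garment_embedding(anchor_embs, confidences, min_conf=0.5):
    """Confidence-weighted mean of anchor embeddings.

    Returns None when no anchor clears the threshold, so the caller can
    skip that garment region entirely.
    """
    embs = np.asarray(anchor_embs, dtype=float)
    conf = np.asarray(confidences, dtype=float)
    keep = conf >= min_conf          # skip low-confidence matches
    if not keep.any():
        return None
    weights = conf[keep] / conf[keep].sum()  # remaining anchors weighted by confidence
    return weights @ embs[keep]
```

A garment that returns `None` simply drops out of the max over per-garment scores, so one bad detection does not sink the whole image.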

How do you update embeddings if user preferences evolve?

Regularly retrain or fine-tune embeddings using fresh user feedback, purchase data, and new merchandise images. Store historical embeddings for backward compatibility during A/B testing. Carry user representations across model versions smoothly by applying incremental training or embedding alignment, so that vectors from old and new models remain comparable. Validate changes on holdout sets.
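Embedding alignment can take several forms; one common option is orthogonal Procrustes, which finds the rotation that best maps a retrained space onto the previous one. The sketch below assumes both matrices cover the same items in the same row order and have the same dimensionality. The function name is hypothetical, and this is one possible technique rather than the method the original system is known to use.

```python
import numpy as np

def align_embeddings(new_embs, old_embs):
    """Rotate new_embs into the old space via orthogonal Procrustes.

    Solves min_R ||new_embs @ R - old_embs||_F with R orthogonal and
    returns (aligned_embeddings, R). Row i of both inputs must describe
    the same item (or user).
    """
    u, _, vt = np.linalg.svd(new_embs.T @ old_embs)
    rotation = u @ vt
    return new_embs @ rotation, rotation
```

Because the rotation is orthogonal, dot products and distances within the new space are unchanged; only the coordinate frame moves, which is what keeps old and new vectors comparable during a staged rollout.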

What if searching for similar items is slow?

Use an approximate nearest-neighbor search library or indexing data structure optimized for high-dimensional spaces. Precompute embeddings offline. Keep them in memory or on fast-access storage. Use distributed query engines if the catalog is large. Build real-time indexes that update incrementally whenever new items arrive.
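Before adopting an approximate index, it helps to have an exact baseline to measure recall and latency against. The sketch below is a brute-force cosine top-k over precomputed, normalized embeddings held in memory. It is not an approximate method, and `build_index` and `exact_top_k` are hypothetical names; an ANN library would replace `exact_top_k` once the catalog outgrows a linear scan.

```python
import numpy as np

def build_index(item_ids, embeddings):
    """Precompute L2-normalized rows so a dot product equals cosine similarity."""
    matrix = np.asarray(embeddings, dtype=float)
    norms = np.linalg.norm(matrix, axis=1, keepdims=True)
    return list(item_ids), matrix / np.clip(norms, 1e-12, None)

def exact_top_k(index, query, k=10):
    """Return the k nearest (item_id, cosine_distance) pairs, closest first."""
    item_ids, matrix = index
    k = min(k, len(item_ids))
    if k == 0:
        return []
    q = np.asarray(query, dtype=float)
    q = q / max(np.linalg.norm(q), 1e-12)
    sims = matrix @ q
    top = np.argpartition(-sims, k - 1)[:k]  # unordered top-k in linear time
    top = top[np.argsort(-sims[top])]        # sort only the k winners
    return [(item_ids[i], float(1.0 - sims[i])) for i in top]
```

Comparing an ANN index's results against this baseline on a sample of queries gives a direct recall estimate for tuning the index parameters.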

How do you learn the weight alpha?

Run online experiments or offline simulations. Split traffic into different alpha settings. Track engagement and conversion metrics. Apply a bandit or interleaving design to adapt alpha. Monitor how image similarity vs. user preference contributes to success. Persist the alpha that yields the best overall performance.
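As a rough illustration of the bandit option, the sketch below runs epsilon-greedy over a fixed grid of alpha values. The class name, the grid, and the reward definition (for example, 1.0 for a click and 0.0 otherwise) are assumptions; a production setup would also need logging, guardrail metrics, and proper significance checks.

```python
import random

class AlphaBandit:
    """Epsilon-greedy bandit over a fixed grid of alpha values."""

    def __init__(self, alphas, epsilon=0.1, seed=None):
        self.alphas = list(alphas)
        self.epsilon = epsilon
        self.counts = [0] * len(self.alphas)
        self.means = [0.0] * len(self.alphas)
        self.rng = random.Random(seed)

    def _best_arm(self):
        return max(range(len(self.alphas)), key=lambda i: self.means[i])

    def choose(self):
        """Return the index of the alpha to serve for this request."""
        if self.rng.random() < self.epsilon:
            return self.rng.randrange(len(self.alphas))  # explore
        return self._best_arm()                          # exploit

    def update(self, arm, reward):
        """Fold an observed reward into the arm's running mean."""
        self.counts[arm] += 1
        self.means[arm] += (reward - self.means[arm]) / self.counts[arm]

    def best_alpha(self):
        return self.alphas[self._best_arm()]
```

A typical loop calls `choose()` per request, ranks with `alphas[arm]`, and calls `update(arm, reward)` once the engagement outcome is known.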

How do you ensure diversity in final results?

Group candidate items into categories (e.g., tops, bottoms, shoes) or style clusters. Slice the sorted list into segments and pick top items from each category. Impose a penalty or re-ranking step if too many items of the same style appear consecutively. Track the user’s prior actions for each session to reduce duplication.
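A simple version of the category-based approach is a greedy pass with a per-category cap. The sketch below assumes a `category_of` lookup from item ID to category; the function name, the cap of two, and the backfill rule are illustrative choices, and the signature is richer than the one-argument `diversify(ranked)` call in the outline above.

```python
from collections import defaultdict

def diversify_by_category(ranked, category_of, max_per_category=2, top_n=10):
    """Greedy re-rank: walk the score-sorted list and cap items per category."""
    picked, overflow = [], []
    seen = defaultdict(int)
    for item_id, score in ranked:
        cat = category_of[item_id]
        if seen[cat] < max_per_category:
            seen[cat] += 1
            picked.append((item_id, score))
        else:
            overflow.append((item_id, score))
    # Backfill with the best over-cap items if the caps left slots empty.
    return (picked + overflow)[:top_n]
```

The cap is a hard constraint; a softer variant would subtract a penalty from the score each time a category repeats and then re-sort.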

How do you handle insufficient data for new users?

Apply a cold-start strategy by relying more on visual similarity or generic popularity data. Over time, gather explicit or implicit feedback from the user’s clicks, purchases, or returns. Incorporate that feedback into the embeddings or user preference model. Gradually shift weighting from generic signals to personalized signals once enough data accumulates.
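The gradual shift from generic to personalized signals can be expressed as a shrinkage weight that grows with the amount of user data. In this sketch the weight is n / (n + k), where n is the user's interaction count and k is a hypothetical tuning constant; both the function name and the default k=20 are assumptions.

```python
def blended_affinity(personal_score, popularity_score, n_interactions, k=20):
    """Blend personalized and popularity scores based on how much data exists.

    With zero interactions the result equals popularity_score; as
    n_interactions grows, the result approaches personal_score.
    """
    w = n_interactions / (n_interactions + k)
    return w * personal_score + (1 - w) * popularity_score
```

The blended value can stand in for a_i in the ranking function, so new users are ranked mostly by image similarity and popularity until their own history carries weight.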