ML Case-study Interview Question: LLM-Powered Neural Search for Conversational Discovery Across Massive Catalogs


Case-Study question

A leading tech aggregator wants to enhance user engagement on its platform by enabling users to discover products, services, and restaurants through more natural, conversational queries. They have a massive catalog (50 million-plus items) that makes decision-making cumbersome for users. They want to build a neural search system powered by a Large Language Model. This system should handle open-ended conversational queries, provide real-time personalized recommendations, support voice-based queries, and eventually scale to multiple languages. They also want to enrich their catalog with generated images or descriptions for unfamiliar items, plus deploy a conversational bot for general customer support and partner onboarding. How would you design the complete solution?

Detailed solution

A robust neural search system must extract relevant signals from user queries, transform these queries into vector embeddings, and match them against item embeddings in an efficient similarity-based index. The aggregator’s catalog is large, so a scalable retrieval engine that processes multiple queries concurrently is essential.

An effective approach uses a Large Language Model (LLM) that is fine-tuned on domain-specific data. Fine-tuning teaches the model the terminology and nuances of user queries and product attributes. When users type open-ended queries like “I just finished my workout. Show me healthy lunch options,” the model identifies the key intent (a healthy meal) and the relevant attributes (calories, dietary restrictions). The system then ranks items or restaurants that match these preferences.

Neural search flow

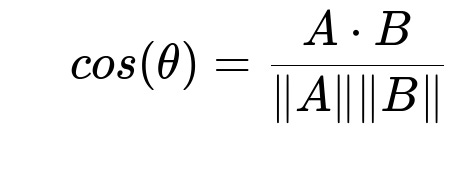

Text queries are tokenized and transformed into vector representations. These embeddings capture semantic similarity, so the system finds matching items by comparing the query embedding with item embeddings. Cosine similarity is a common scoring function for closeness in vector space:

$$\cos(\theta) = \frac{A \cdot B}{\lVert A \rVert \, \lVert B \rVert}$$

A is the user query embedding vector, B is the item embedding vector, and cos(theta) is their normalized dot product. A higher similarity score indicates a closer match. The embedding model is typically the LLM’s encoder, fine-tuned to ensure domain alignment.

The aggregator can store item embeddings in a vector database, which accelerates similarity search. The returned candidates are reranked using a separate LLM-based scoring module or a lightweight re-ranker. This final ranking step can incorporate personalization signals such as user dietary preferences or cuisine history.
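The retrieve-then-rerank flow described above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the aggregator's actual system: the toy 3-dimensional vectors, the `retrieve` and `rerank` helpers, and the `second_stage_scores` values are all hypothetical, and a linear scan stands in for the vector database.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def retrieve(query_vec, index, k):
    """Stage 1: brute-force top-k by cosine similarity (stand-in for a vector database)."""
    scored = [(cosine(query_vec, vec), item_id) for item_id, vec in index.items()]
    scored.sort(reverse=True)
    return scored[:k]

def rerank(candidates, scorer):
    """Stage 2: re-score only the retrieved candidates and sort by the new score."""
    rescored = [(scorer(item_id), item_id) for _, item_id in candidates]
    rescored.sort(reverse=True)
    return [item_id for _, item_id in rescored]

# Toy item embeddings (assumed values, for illustration only).
index = {
    "salad": [0.9, 0.1, 0.0],
    "burger": [0.1, 0.9, 0.0],
    "grain_bowl": [0.8, 0.2, 0.1],
}
query = [1.0, 0.0, 0.0]

# Hypothetical scores from a second-stage model; a real re-ranker would compute these.
second_stage_scores = {"salad": 0.70, "grain_bowl": 0.95}

candidates = retrieve(query, index, k=2)
ranking = rerank(candidates, lambda item_id: second_stage_scores.get(item_id, 0.0))
print(ranking)
```

The first stage is cheap and recall-oriented, and the second stage spends more computation on a short candidate list. That split is what lets a slower, more accurate scorer fit inside a real-time budget.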

Catalog enrichment

The aggregator can use generative techniques to create or refine item descriptions and images. One approach is to generate short sentences that highlight key features of an item, so users better understand unfamiliar products or dishes. For images, a generative model can produce representative visuals. This content improves user engagement and decision-making.

Conversational bot integration

A conversational AI component can handle the user experience for dynamic queries. This includes recommending items based on broad contexts like “What’s popular around me under 10 dollars?” The system’s pipeline extracts the request structure (here, a location constraint and a price ceiling), queries the neural search index, then responds in a chat format. Voice and local-language inputs are supported by placing speech-to-text and machine translation components in front of the same pipeline.
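As a rough sketch of the "extract the request structure" step, the snippet below pulls a price ceiling and a nearby-location flag out of the example query with regular expressions. The `ParsedQuery` type, the two patterns, and `parse_query` are hypothetical names introduced for illustration; a production system would more likely ask the LLM itself to emit these fields.

```python
import re
from dataclasses import dataclass
from typing import Optional

@dataclass
class ParsedQuery:
    text: str                   # free-text remainder passed to the embedding model
    max_price: Optional[float]  # price ceiling, if the user stated one
    near_user: bool             # whether to restrict results to the user's location

# Hypothetical patterns covering only the phrasing in the example query.
PRICE_PATTERN = re.compile(
    r"\bunder\s+\$?(\d+(?:\.\d+)?)(?:\s*(?:dollars|usd))?", re.IGNORECASE
)
NEARBY_PATTERN = re.compile(r"\b(?:around|near)\s+me\b", re.IGNORECASE)

def parse_query(query: str) -> ParsedQuery:
    price_match = PRICE_PATTERN.search(query)
    max_price = float(price_match.group(1)) if price_match else None
    near_user = NEARBY_PATTERN.search(query) is not None
    # Strip the structured parts so only the semantic remainder is embedded.
    text = PRICE_PATTERN.sub("", query)
    text = NEARBY_PATTERN.sub("", text)
    text = re.sub(r"\s+", " ", text).strip(" ?")
    return ParsedQuery(text=text, max_price=max_price, near_user=near_user)

parsed = parse_query("What's popular around me under 10 dollars?")
print(parsed)
```

The structured fields can then be applied as hard filters on the search index, while `text` is embedded and matched semantically.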

For more general support (customer service, partner onboarding), an LLM-based chatbot can handle frequently asked questions and streamline resolutions. Tuning the model with example dialogues from past support tickets is crucial for domain-specific coverage.

Implementation details

A possible Python-based pipeline might involve:

- A transformer-based model from a library, used to embed queries and items.
- A vector database for storing embeddings and performing similarity searches.
- A reranker or second-stage module for refining the returned candidates.
- An API layer that integrates with the aggregator’s app for voice or text inputs, returning results in real time.
- A microservice structure where the embedding generation, vector search, and reranking stages can scale horizontally to handle heavy traffic.

Below is a simple snippet that outlines how to generate embeddings and store them:

```python
import torch
from transformers import AutoModel, AutoTokenizer


class EmbeddingModel:
    """Wraps a Hugging Face encoder and mean-pools its token embeddings."""

    def __init__(self, model_name="sentence-transformers/all-mpnet-base-v2"):
        self.tokenizer = AutoTokenizer.from_pretrained(model_name)
        self.model = AutoModel.from_pretrained(model_name)
        self.model.eval()  # inference only: make sure dropout is disabled

    def get_embedding(self, text):
        inputs = self.tokenizer(text, return_tensors="pt", truncation=True)
        with torch.no_grad():
            outputs = self.model(**inputs)
        hidden = outputs.last_hidden_state
        # Mean-pool over real tokens only; the attention mask zeroes out
        # padding, which matters once inputs are batched.
        mask = inputs["attention_mask"].unsqueeze(-1).to(hidden.dtype)
        pooled = (hidden * mask).sum(dim=1) / mask.sum(dim=1).clamp(min=1e-9)
        return pooled.squeeze(0)


# Instantiate the embedding model
embedder = EmbeddingModel()

# Example usage
query_embedding = embedder.get_embedding("Healthy lunch option")
item_embedding = embedder.get_embedding("Salad with low calories")

similarity_score = torch.nn.functional.cosine_similarity(
    query_embedding.unsqueeze(0),
    item_embedding.unsqueeze(0),
)
print(similarity_score.item())
```

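This code shows how text is tokenized and converted to embeddings, which can then be written to a vector database for quick lookup. A production system would be more complex: item embeddings would typically be computed offline in batches, while query embedding runs online behind autoscaling and caching.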

Future expansions

The aggregator could add language-specific tokenizers for regional query support. Voice queries would rely on speech-to-text before passing text to the embedding model. For context retention, a conversation-aware LLM can track user requests across multiple turns, so that a follow-up such as “something cheaper” is interpreted against the earlier request.

How would you handle ambiguous user queries or items not found in the catalog?

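Ambiguous queries can be tackled by adding a fallback or clarification step. The system might respond with a clarifying prompt like, “Did you mean X or Y?” If the LLM’s confidence is too low, the system routes the question to a smaller, curated rule-based system or, in critical scenarios, to a human support agent. When no catalog item clears a minimum similarity threshold, the system should say so and offer the nearest alternatives rather than present weak matches as results.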
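One way to sketch this routing logic is with two thresholds: a minimum score below which the system falls back, and a minimum margin between the top two candidates below which it asks for clarification. The function name `route_query` and both threshold values are assumptions chosen for illustration and would need tuning on real traffic.

```python
def route_query(candidates, min_score=0.5, min_margin=0.05):
    """Decide how to respond, given (score, label) candidates sorted best-first.

    Returns an (action, payload) pair where action is one of
    "fallback", "clarify", or "answer".
    """
    # Nothing matched well enough: hand off to rules or a human agent.
    if not candidates or candidates[0][0] < min_score:
        return ("fallback", None)
    # The top two interpretations are too close to call: ask the user.
    if len(candidates) > 1 and candidates[0][0] - candidates[1][0] < min_margin:
        top, runner_up = candidates[0][1], candidates[1][1]
        return ("clarify", f"Did you mean {top} or {runner_up}?")
    return ("answer", candidates[0][1])

print(route_query([(0.82, "apple juice"), (0.80, "Apple laptops")]))
print(route_query([(0.90, "salad"), (0.40, "burger")]))
print(route_query([(0.20, "unknown item")]))
```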

Why build an in-house LLM instead of using a generic one?

Fine-tuning an in-house model allows control over performance, domain coverage, privacy, and cost optimization. A generic LLM may be too broad. Tuning for the aggregator’s domain ensures more accurate embeddings for queries about specific items and contexts.

What are some scaling challenges for a real-time neural search system?

One challenge is embedding 50 million-plus items. This requires a distributed system that partitions embeddings across multiple nodes. Another is real-time inference under high traffic. Solutions include GPU or specialized hardware acceleration, approximate nearest neighbor indexing, and caching popular queries to reduce computational load.
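The partitioning idea can be illustrated with a scatter-gather search: each shard returns its local top-k, and a coordinator merges those lists into the global top-k. This is a simplified sketch in which an exact scan stands in for an approximate nearest neighbor index on each node; the shard layout, helper names, and toy vectors are all hypothetical, and vectors are assumed to be unit-normalized so that the dot product equals cosine similarity.

```python
import heapq

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def search_shard(shard, query_vec, k):
    """Local top-k on one shard, as (score, item_id) pairs sorted best-first."""
    scored = ((dot(query_vec, vec), item_id) for item_id, vec in shard.items())
    return heapq.nlargest(k, scored)

def search_all(shards, query_vec, k):
    """Scatter the query to every shard, then merge the local top-k lists."""
    partial = [hit for shard in shards for hit in search_shard(shard, query_vec, k)]
    return heapq.nlargest(k, partial)

# Two toy shards holding unit-length 2-D vectors (assumed values).
shards = [
    {"a": [1.0, 0.0], "b": [0.0, 1.0]},
    {"c": [0.6, 0.8], "d": [0.8, 0.6]},
]
top = search_all(shards, [1.0, 0.0], k=2)
print([item_id for _, item_id in top])
```

Because every item in the global top-k must also be in its own shard's top-k, merging the per-shard lists loses nothing relative to a single exact scan. In a real deployment the per-shard calls would run in parallel on separate nodes.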

How would you support multiple languages in the neural search framework?

Multilingual models or separate monolingual models for each language can be used. If the aggregator focuses on certain regional languages, the model can be fine-tuned with domain data in those languages. For voice, an ASR (automatic speech recognition) system must handle each language properly before passing text to the model.
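If separate models are used, the serving layer needs a routing step that selects an encoder per request. The sketch below assumes the language code arrives from an upstream detector or the ASR system; the encoder names are placeholders, not real model identifiers.

```python
# Placeholder encoder names; real deployments would map to actual model artifacts.
ENCODERS = {
    "en": "english-encoder",
    "hi": "hindi-encoder",
}
DEFAULT_ENCODER = "multilingual-encoder"

def pick_encoder(language_code: str) -> str:
    """Return the dedicated encoder for a language, else the multilingual fallback."""
    # Normalize tags such as "hi-IN" or "en_US" down to the base language.
    base = language_code.lower().replace("_", "-").split("-")[0]
    return ENCODERS.get(base, DEFAULT_ENCODER)

print(pick_encoder("hi-IN"))
print(pick_encoder("ta"))
```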

How would you evaluate the performance of this entire pipeline?

Offline, one approach uses precision-at-k and recall-at-k for the top-k retrieved items against labeled relevance judgments. Online, the team can track click-through rates or final conversions on recommended items. User-experience metrics such as satisfaction ratings or time-to-decision can also reflect performance. A/B testing different model versions helps in iterating toward better results.
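For reference, the two retrieval metrics can be computed as follows. The item names and relevance labels are made up for the example.

```python
def precision_at_k(retrieved, relevant, k):
    """Fraction of the top-k retrieved items that are relevant."""
    if k <= 0:
        return 0.0
    hits = sum(1 for item in retrieved[:k] if item in relevant)
    return hits / k

def recall_at_k(retrieved, relevant, k):
    """Fraction of all relevant items that appear in the top-k."""
    if not relevant:
        return 0.0
    hits = sum(1 for item in retrieved[:k] if item in relevant)
    return hits / len(relevant)

# Made-up ranking and ground-truth labels for one query.
retrieved = ["salad", "burger", "grain_bowl", "pizza"]
relevant = {"salad", "grain_bowl", "smoothie", "wrap"}

print(precision_at_k(retrieved, relevant, k=3))  # 2 of the top 3 are relevant
print(recall_at_k(retrieved, relevant, k=3))     # 2 of the 4 relevant items were found
```

In practice these values are averaged over a held-out set of queries rather than reported for a single one.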

How do you ensure the conversational bot remains safe and brand-aligned?

Filtering or gating outputs with a moderation layer is common. The aggregator can maintain a set of prohibited outputs, disclaimers, or post-processing rules. Fine-tuning with carefully curated conversation examples can also reduce risks of irrelevant or harmful responses.
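A very small version of such an output gate is shown below. The blocked patterns and the fallback message are invented for illustration; a real moderation layer would usually combine rules like these with a trained safety classifier.

```python
import re

# Hypothetical prohibited phrases; the real list would come from policy owners.
BLOCKED_PATTERNS = [
    re.compile(r"\bguaranteed cure\b", re.IGNORECASE),
    re.compile(r"\bcompetitorco\b", re.IGNORECASE),
]
FALLBACK_REPLY = "Sorry, I can't help with that. Is there something else I can find for you?"

def moderate(reply: str) -> str:
    """Return the reply unchanged, or a safe fallback if it matches a blocked pattern."""
    if any(pattern.search(reply) for pattern in BLOCKED_PATTERNS):
        return FALLBACK_REPLY
    return reply

print(moderate("Try our new grilled chicken salad!"))
print(moderate("This tea is a guaranteed cure for colds."))
```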

How would you handle real-time personalized ranking?

Incorporate user-specific features (past orders, cuisine preferences, region) into a re-ranking step. The system appends these features to the base item embeddings or passes them into a separate personalization model. The final ranking merges the general semantic similarity with user-specific preferences.
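One simple way to merge the two signals is a weighted blend of semantic similarity and a per-user preference score. The sketch below assumes items carry tags and that the user profile stores an affinity in [0, 1] per tag; the `alpha` weight, the tag names, and the scores are illustrative assumptions, and a learned ranking model could replace the linear blend.

```python
def personalized_score(similarity, item_tags, user_affinity, alpha=0.7):
    """Blend semantic similarity with the user's mean affinity for the item's tags."""
    if item_tags:
        preference = sum(user_affinity.get(tag, 0.0) for tag in item_tags) / len(item_tags)
    else:
        preference = 0.0
    return alpha * similarity + (1 - alpha) * preference

# (item_id, semantic similarity, tags): made-up candidates from the retrieval stage.
candidates = [
    ("chicken_salad", 0.85, ["salad"]),
    ("paneer_wrap", 0.80, ["indian", "vegetarian"]),
]
# Hypothetical profile derived from past orders.
user_affinity = {"indian": 0.9, "vegetarian": 0.8, "salad": 0.2}

ranked = sorted(
    candidates,
    key=lambda c: personalized_score(c[1], c[2], user_affinity),
    reverse=True,
)
print([item_id for item_id, _, _ in ranked])
```

Here the wrap overtakes the salad despite a slightly lower semantic score, because the user's history favors its tags. Setting `alpha` to 1.0 recovers the unpersonalized ranking.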