ML Case-study Interview Question: Robust Recipe Recommendations for Cold Starts using Hybrid Embeddings


Case-Study question

A subscription-based meal service needs a recipe recommendation system that reliably suggests relevant dishes for first-time customers who have minimal interaction data. The weekly menu changes frequently, and newly introduced recipes also lack historical orders or ratings. How would you design and implement a robust solution to handle both new-user and new-recipe cold starts while ensuring recommendations remain accurate and scalable across millions of users?

Proposed Solution

Core Challenges

The system must handle user cold starts by deriving preferences with minimal data. It must also tackle product cold starts, since new recipes have no order history yet. The approach should be scalable to a large customer base and support real-time updates whenever a customer places an order.

Phase 1: Simple Item-Based Collaborative Filtering

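Item-based collaborative filtering is a quick baseline. It scores a candidate recipe by how similar it is to the recipes the user has already ordered, where item-item similarity is derived from the ordering patterns of all users. The score can be derived as follows:

$$\text{score}(u,i) = \frac{\sum_{j \in S(u)} \text{sim}(i,j)\,\text{rating}(u,j)}{\sum_{j \in S(u)} \lvert \text{sim}(i,j) \rvert}$$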

Where:

u is the user.

i is the target item (recipe).

S(u) is the set of items the user u has ordered.

rating(u,j) is the user’s implicit or explicit rating for item j.

sim(i,j) is the similarity between items i and j, often computed as the cosine similarity between their interaction vectors (the columns of the user-item matrix) or with another similarity measure.

This baseline works for users with some order history, but it overlooks new recipes: a recipe with no orders has an all-zero interaction vector, so sim(i,j) carries no signal for it. It also fails for new users, whose S(u) contains at most one order.
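As a minimal sketch on toy ratings (plain NumPy; `item_similarity`, `item_cf_score`, and the data are hypothetical), the baseline scores a target recipe as the similarity-weighted average of the user's ratings. It also exposes the cold-start failure: the unordered recipe in column 3 has an all-zero column, so every similarity to it is 0 and its score stays at 0.

```python
import numpy as np


def item_similarity(ratings):
    """Cosine similarity between the item columns of a (users x items) rating matrix."""
    norms = np.linalg.norm(ratings, axis=0)
    # Items with no interactions keep an all-zero column (avoid dividing by zero).
    safe_norms = np.where(norms == 0, 1.0, norms)
    normalized = ratings / safe_norms
    return normalized.T @ normalized  # (items x items)


def item_cf_score(user_ratings, sim, target):
    """Similarity-weighted average of the user's ratings over S(u)."""
    ordered = np.flatnonzero(user_ratings)   # S(u): items the user has ordered/rated
    ordered = ordered[ordered != target]     # score the target against other items only
    weights = sim[target, ordered]
    denom = np.abs(weights).sum()
    if denom == 0:
        return 0.0  # no signal: a new recipe or a user with no (other) orders
    return float(weights @ user_ratings[ordered] / denom)


# Toy data: 4 users x 4 recipes; recipe 3 is new and has no ratings yet.
ratings = np.array([
    [5, 3, 0, 0],
    [4, 0, 4, 0],
    [0, 2, 5, 0],
    [3, 0, 0, 0],
], dtype=float)
sim = item_similarity(ratings)
known_score = item_cf_score(ratings[0], sim, target=2)       # between 3 and 5
new_recipe_score = item_cf_score(ratings[0], sim, target=3)  # 0.0: cold-start recipe
```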

Phase 2: Hybrid Embedding Architecture

Represent each recipe with multiple features (cuisine, dietary tags, popularity). Construct a neural network that combines content-based embeddings with behavior-based embeddings, and freeze the pre-trained content embeddings so that new recipes have a representation immediately. For the user embedding, aggregate the embeddings of previously ordered recipes, or use a zero vector if no orders exist.
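The sketch below assumes recipes are described only by cuisine and dietary tags, and uses an L2-normalized multi-hot tag vector from scikit-learn's `MultiLabelBinarizer` as a stand-in for a frozen pre-trained content encoder. The catalog, `content_embedding`, and `hybrid_embedding` are hypothetical, and concatenation is just one assumed way to "combine" the two parts. The behavior part would be learned from orders and is all zeros for a new recipe, yet the recipe still gets a usable vector from its content part.

```python
import numpy as np
from sklearn.preprocessing import MultiLabelBinarizer

# Hypothetical catalog: cuisine and dietary tags per recipe.
catalog = {
    "thai_curry": ["thai", "spicy", "dairy_free"],
    "mac_cheese": ["american", "vegetarian"],
    "veg_pad_thai": ["thai", "spicy", "vegetarian"],
}

# Fix the tag vocabulary once; unseen tags are ignored (with a warning) at transform time.
mlb = MultiLabelBinarizer()
mlb.fit(list(catalog.values()))


def content_embedding(tags):
    """L2-normalized multi-hot tag vector (stand-in for a frozen pre-trained encoder)."""
    vec = mlb.transform([tags])[0].astype(float)
    norm = np.linalg.norm(vec)
    return vec / norm if norm > 0 else vec


def hybrid_embedding(content_vec, behavior_vec=None, behavior_dim=4):
    """Concatenate the frozen content part with a learned behavior part.

    A brand-new recipe has no learned behavior vector yet, so that part is zeros.
    """
    if behavior_vec is None:
        behavior_vec = np.zeros(behavior_dim)
    return np.concatenate([content_vec, behavior_vec])


# A new recipe with no orders still gets a meaningful vector from its tags.
new_recipe = hybrid_embedding(content_embedding(["thai", "spicy", "vegetarian"]))
```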

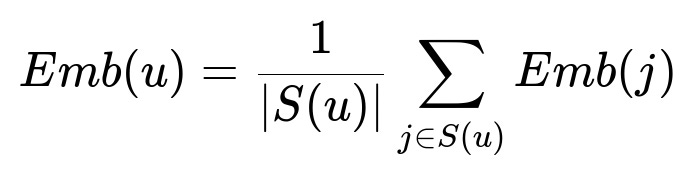

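A simplified example for the user embedding, taking the mean of the embeddings of the recipes the user has ordered:

$$\text{Emb}(u) = \frac{1}{\lvert S(u) \rvert} \sum_{j \in S(u)} \text{Emb}(j)$$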

Where:

Emb(u) is the embedding for user u.

S(u) is the set of items u has ordered.

Emb(j) is the pre-trained embedding of item j.

Train a bi-encoder (two-tower) network: one encoder processes the user representation (formed from the recipe embeddings of their order history), and the other processes the target recipe embedding. The training objective pulls relevant user–recipe pairs (recipes the user actually ordered) closer together in embedding space while pushing apart pairs the user did not order.
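One common way to implement this objective is an in-batch softmax (contrastive) loss; the case study does not name a loss, so this choice is an assumption. The NumPy sketch computes only the forward loss for two single-layer towers (`w_user` and `w_recipe` are hypothetical weight matrices); in practice an autodiff framework would minimize it over those weights, with the frozen content embeddings as `recipe_inputs`.

```python
import numpy as np


def l2_normalize(x):
    return x / np.maximum(np.linalg.norm(x, axis=1, keepdims=True), 1e-12)


def in_batch_softmax_loss(user_inputs, recipe_inputs, w_user, w_recipe, temperature=0.1):
    """Forward pass of an in-batch softmax loss for a two-tower bi-encoder.

    Row k of user_inputs and row k of recipe_inputs form a positive (ordered) pair;
    the other recipes in the batch serve as negatives for that user.
    """
    u = l2_normalize(user_inputs @ w_user)        # user tower: one linear layer here
    r = l2_normalize(recipe_inputs @ w_recipe)    # recipe tower: one linear layer here
    logits = (u @ r.T) / temperature              # (batch x batch) cosine similarities
    logits = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    log_probs = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    # Cross-entropy with each user's ordered recipe on the diagonal as the target.
    return float(-np.mean(np.diag(log_probs)))
```

Minimizing this loss raises each user's similarity to the recipe they ordered relative to the other recipes in the batch, so aligned pairs give a near-zero loss and mismatched pairs a large one.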

Phase 3: Synchronous Real-Time Recommendations

Expose the model behind an API so that, when a new order arrives, the system can instantly update the user embedding. Return the top-N recommendations by nearest-neighbor search in the embedding space, so that they reflect the most recent order.

A simplified Python snippet for building user embeddings and retrieving recommendations:

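```python
import numpy as np
from sklearn.metrics.pairwise import cosine_similarity

# item_embeddings: dict mapping item_id -> 1-D NumPy vector (all the same length)
# user_orders: dict mapping user_id -> list of ordered item_ids


def get_user_embedding(user_id, item_embeddings, user_orders):
    """Mean of the embeddings of the items the user has ordered."""
    embedding_dim = len(next(iter(item_embeddings.values())))
    items = user_orders.get(user_id, [])
    if len(items) == 0:
        # Cold-start user: a zero vector has cosine similarity 0 to every item,
        # so the ranking below is arbitrary; use a fallback (see Follow-up Question 1).
        return np.zeros(embedding_dim)
    emb_sum = np.zeros(embedding_dim)
    for item in items:
        emb_sum += item_embeddings[item]
    return emb_sum / len(items)


def recommend(user_id, item_embeddings, user_orders, top_n=15):
    """Return the top_n item_ids ranked by cosine similarity to the user embedding."""
    user_emb = get_user_embedding(user_id, item_embeddings, user_orders)
    all_items = list(item_embeddings.keys())
    item_matrix = np.array([item_embeddings[i] for i in all_items])
    sims = cosine_similarity([user_emb], item_matrix)[0]
    ranked_indices = np.argsort(sims)[::-1]  # highest similarity first
    return [all_items[i] for i in ranked_indices[:top_n]]
```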
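`get_user_embedding` rereads the full order history on every request. For the real-time path, a running sum and count per user give the same mean with an O(d) update per order. The sketch below assumes that design; `UserEmbeddingStore` and `top_n` are hypothetical names, and a plain dict stands in for a real in-memory store.

```python
import numpy as np


class UserEmbeddingStore:
    """Hypothetical in-memory store holding a running mean of ordered-recipe embeddings."""

    def __init__(self, dim):
        self.dim = dim
        self.sums = {}    # user_id -> sum of ordered recipe embeddings
        self.counts = {}  # user_id -> number of orders

    def record_order(self, user_id, recipe_vec):
        # O(dim) update per order; the order history is never re-read.
        self.sums[user_id] = self.sums.get(user_id, np.zeros(self.dim)) + recipe_vec
        self.counts[user_id] = self.counts.get(user_id, 0) + 1

    def embedding(self, user_id):
        if user_id not in self.counts:
            return np.zeros(self.dim)  # cold-start user
        return self.sums[user_id] / self.counts[user_id]


def top_n(user_vec, item_ids, item_matrix, n):
    """Brute-force top-n recipes by cosine similarity to the user vector."""
    denom = np.linalg.norm(item_matrix, axis=1) * np.linalg.norm(user_vec) + 1e-12
    sims = item_matrix @ user_vec / denom
    return [item_ids[k] for k in np.argsort(-sims)[:n]]
```

Each call to `record_order` immediately changes the vector that `top_n` ranks against, which is what lets recommendations reflect an order placed seconds earlier.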

Follow-up Question 1

How would you solve the user cold start problem if a user has not placed any order at all?

Explanation

Rely on content-based preference elicitation or a lightweight survey. For instance, present a brief “recipe battle” in which the user chooses between contrasting dishes; the system infers a preliminary taste vector from those choices and uses it to initialize the user embedding. If that is not an option, fall back to popular recipes or category-based recommendations until the user places at least one order.
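A minimal sketch of this logic, assuming the “recipe battle” answers arrive as a list of picked recipe IDs and that an order count per recipe is available as a popularity signal (both hypothetical inputs): the user vector is initialized as the mean embedding of the picked dishes, with a most-popular fallback when there are no answers.

```python
import numpy as np


def init_user_embedding(recipe_battle_picks, item_embeddings):
    """Average the embeddings of the recipes the user picked in the survey."""
    picked = [item_embeddings[r] for r in recipe_battle_picks if r in item_embeddings]
    if not picked:
        return None
    return np.mean(picked, axis=0)


def cold_start_recommend(recipe_battle_picks, item_embeddings, popularity, top_n=3):
    """Survey-based embedding if available, otherwise a most-popular fallback."""
    user_vec = init_user_embedding(recipe_battle_picks, item_embeddings)
    if user_vec is None:
        # No survey answers and no orders: fall back to popularity.
        return sorted(popularity, key=popularity.get, reverse=True)[:top_n]
    ids = list(item_embeddings)
    matrix = np.array([item_embeddings[i] for i in ids])
    denom = np.linalg.norm(matrix, axis=1) * np.linalg.norm(user_vec) + 1e-12
    sims = matrix @ user_vec / denom
    ranked = [ids[k] for k in np.argsort(-sims)]
    # Do not recommend the survey dishes themselves.
    return [r for r in ranked if r not in recipe_battle_picks][:top_n]
```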

Follow-up Question 2

How do you mitigate the product cold start problem when the recipe is entirely new and has no historical orders?

Explanation

Pre-train a content embedding for each recipe from its textual description, tags, or images. Freeze this embedding during fine-tuning so that a new recipe can appear in recommendations before it receives any orders. As orders accumulate, update the recipe's representation with behavioral signals.
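The case study does not say how behavioral signals are folded in. One simple option, shown here purely as an assumption, is to shift weight from the frozen content embedding to a learned behavioral embedding as orders accrue, using alpha = n / (n + k). This presumes both embeddings live in the same space (for example, the behavioral one initialized from the content one and then fine-tuned); `k` is a hypothetical smoothing constant.

```python
import numpy as np


def blended_recipe_embedding(content_vec, behavior_vec, n_orders, k=50):
    """Shift weight from the frozen content embedding to the behavioral one.

    alpha = n / (n + k): 0 for a brand-new recipe, approaching 1 as orders accrue.
    k (hypothetical) is the order count at which both parts weigh equally.
    """
    alpha = n_orders / (n_orders + k)
    return (1 - alpha) * content_vec + alpha * behavior_vec
```

A brand-new recipe is served purely on its content embedding, and no hard switch-over point is needed as its behavioral embedding becomes reliable.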

Follow-up Question 3

How do you ensure scalability and low latency for real-time recommendations when the user base grows to millions?

Explanation

Adopt an approximate nearest neighbor (ANN) library such as FAISS or ScaNN, and index the recipe embeddings so that similarity queries avoid a full scan. Keep user embeddings in a fast in-memory store and update them on each purchase. Containerize the model inference service and use load balancing with caching to handle high throughput.
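To illustrate what an ANN index does, the toy class below implements the inverted-file (IVF) idea behind FAISS's IVF indexes: cluster the recipe vectors with k-means, then scan only the `nprobe` closest clusters per query. It is not the FAISS API; `ToyIVFIndex` is a hypothetical name and scikit-learn's `KMeans` does the clustering. It ranks by L2 distance, which gives the same order as cosine similarity when vectors are L2-normalized.

```python
import numpy as np
from sklearn.cluster import KMeans


class ToyIVFIndex:
    """Toy inverted-file index: k-means partitions, search only the nearest partitions."""

    def __init__(self, n_lists=4, nprobe=1, seed=0):
        self.kmeans = KMeans(n_clusters=n_lists, n_init=10, random_state=seed)
        self.nprobe = nprobe

    def fit(self, vectors):
        self.vectors = np.asarray(vectors, dtype=float)
        labels = self.kmeans.fit_predict(self.vectors)
        # Inverted lists: cluster id -> row indices of the vectors in that cluster.
        self.lists = {c: np.flatnonzero(labels == c) for c in range(self.kmeans.n_clusters)}
        return self

    def search(self, query, k):
        # Rank centroids by squared L2 distance and probe the closest ones.
        centroid_d = ((self.kmeans.cluster_centers_ - query) ** 2).sum(axis=1)
        probe = np.argsort(centroid_d)[: self.nprobe]
        candidates = np.concatenate([self.lists[int(c)] for c in probe])
        # Exact distances, but only for the vectors in the probed lists.
        d = ((self.vectors[candidates] - query) ** 2).sum(axis=1)
        return candidates[np.argsort(d)[:k]]
```

With `nprobe` equal to the number of lists the search is exact; lowering `nprobe` scans fewer vectors at some cost in recall, which is the speed/accuracy knob production ANN libraries expose.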

Follow-up Question 4

What are potential pitfalls with purely implicit data, and how would you address them?

Explanation

Implicit data (orders, clicks) does not directly capture negative preferences: users may skip a recipe because of cooking time or price rather than because they dislike it. Logging impressions that received no interaction, or incorporating dwell time, can help correct this bias. Post-purchase feedback or a like/dislike button adds explicit signals that further refine user preferences.
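One way to act on this, sketched under assumptions: map each logged event to a (label, weight) pair so that a shown-but-not-ordered impression counts only as a weak negative while an explicit dislike counts as a full one, then train with a weighted binary cross-entropy. The event names and weights in `EVENT_LABELS` are hypothetical and would need tuning on validation data.

```python
import numpy as np

# Hypothetical (label, weight) per logged event type.
EVENT_LABELS = {
    "ordered": (1.0, 1.0),            # strong positive
    "liked": (1.0, 1.0),              # explicit positive
    "disliked": (0.0, 1.0),           # explicit negative from a dislike button
    "shown_not_ordered": (0.0, 0.2),  # weak negative: may reflect time/price, not taste
}


def weighted_bce(pred_probs, events):
    """Binary cross-entropy with each example weighted by how trustworthy its label is."""
    labels = np.array([EVENT_LABELS[e][0] for e in events])
    weights = np.array([EVENT_LABELS[e][1] for e in events])
    p = np.clip(np.asarray(pred_probs, dtype=float), 1e-7, 1 - 1e-7)
    losses = -(labels * np.log(p) + (1 - labels) * np.log(1 - p))
    return float((weights * losses).sum() / weights.sum())
```

Under this weighting, predicting a high order probability for a recipe the user merely scrolled past is penalized far less than for one they explicitly disliked.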