ML Case-study Interview Question: Building a Scalable Video Moderation Pipeline with Deep Learning and Human Review


Case-Study question

You are assigned to build a video moderation pipeline to identify and block inappropriate content. A surge in user-uploaded videos has made it vital to detect harmful content, such as nudity, sexual activity, violence, and extremist imagery. Your goal is to design a high-recall system that minimizes the number of false positives. You must incorporate a manual review workflow to counterbalance the occasional misclassifications and avoid frustrating legitimate users. How would you architect this end-to-end system, handle video-frame processing at scale, and fine-tune thresholds to ensure high precision and recall?

Your tasks:

Propose an architecture that includes an automated deep-learning model and a human moderation loop. Describe how you would reduce inference time for large videos, manage repeated offenders, and combine frame-level classification outputs into a single decision score. Demonstrate how you would evaluate performance and optimize it in a real-world scenario with heavy traffic and strict latency requirements.

Detailed solution

Overall Architecture

Start the ingestion pipeline when a user uploads a video. Immediately apply a matching service that blocks known harmful submissions based on a similarity lookup against a database of previously removed content. Forward videos that pass this check to a multi-label classifier built on deep learning. If the model’s confidence score exceeds a threshold, hide the video and prompt a human review. If the human reviewer confirms a violation, reject the video. If it is flagged incorrectly, restore it.
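The routing described above can be sketched as two small decision functions. This is a minimal illustration, not a production design: the `Decision` states and the function names `route_upload` and `apply_review` are hypothetical, and the similarity match and model score are assumed to be computed elsewhere.

```python
from enum import Enum


class Decision(Enum):
    BLOCKED_KNOWN_MATCH = "blocked_known_match"
    HIDDEN_PENDING_REVIEW = "hidden_pending_review"
    PUBLISHED = "published"
    REJECTED = "rejected"
    RESTORED = "restored"


def route_upload(matches_removed_content, model_score, threshold):
    """Automated stage: similarity match first, then the classifier score."""
    if matches_removed_content:
        return Decision.BLOCKED_KNOWN_MATCH
    if model_score > threshold:
        # Hide the video until a human reviewer has looked at it.
        return Decision.HIDDEN_PENDING_REVIEW
    return Decision.PUBLISHED


def apply_review(reviewer_confirms_violation):
    """Human stage: reject confirmed violations, restore false positives."""
    if reviewer_confirms_violation:
        return Decision.REJECTED
    return Decision.RESTORED
```

Keeping the automated and human stages as separate functions makes it easy to log each decision and later measure how often reviewers overturn the model.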

Deep Learning Model

Start from a convolutional neural network or transformer-based architecture that is already proven effective for image moderation, and adapt it to handle video frames. Reuse its pretrained weights, then fine-tune on frame-level data representing inappropriate content classes such as nudity or violent imagery. Output multi-label probabilities indicating whether a frame violates each category, and evaluate each label independently.

Frame Sampling Strategy

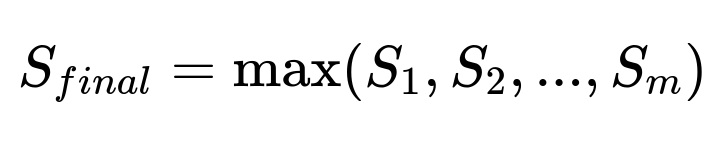

Extract frames at regular intervals. Choose the interval to balance inference cost against the risk of skipping a short violating segment. Each sampled frame goes through the model for classification. Aggregate the per-frame results with a function that yields a single final score. One simple approach uses the maximum predicted score across sampled frames:

$$S_{\text{final}} = \max_{1 \le k \le m} S_k$$

Here, S_k is the classifier’s probability of a violation for the k-th sampled frame, and m is the total number of sampled frames. If S_final exceeds the threshold, label the video for human review.

Each label’s threshold is chosen after analyzing validation metrics. A high threshold reduces false positives but may miss some violations. A lower threshold reduces missed detections but risks flagging legitimate content. Calibrate thresholds using validation data that balances the cost of a false positive with the severity of a missed violation.
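Per-label thresholds can be applied after taking the maximum over frames separately for each label. The sketch below assumes each frame's output is a dict mapping label name to probability; the function names, label names, and threshold values are illustrative only.

```python
def aggregate_per_label(frame_scores):
    """Take the maximum probability per label across all sampled frames.

    frame_scores: list of dicts, one per frame, mapping label -> probability.
    """
    final = {}
    for scores in frame_scores:
        for label, prob in scores.items():
            final[label] = max(final.get(label, 0.0), prob)
    return final


def labels_to_review(final_scores, thresholds):
    """Return the labels whose aggregated score exceeds that label's threshold."""
    return sorted(
        label for label, score in final_scores.items()
        if score > thresholds[label]
    )


# Illustrative values, not tuned thresholds.
frames = [
    {"nudity": 0.2, "violence": 0.7},
    {"nudity": 0.9, "violence": 0.1},
]
thresholds = {"nudity": 0.8, "violence": 0.75}
flagged = labels_to_review(aggregate_per_label(frames), thresholds)
```

Here only `nudity` crosses its threshold, so the video would be sent to review with that label attached, which also tells the reviewer what to look for.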

Human Moderation

Human reviewers examine the flagged videos to overturn false positives and confirm genuine violations. Keep these reviewers from having to handle vast volumes of harmless content. This improves review throughput and user experience, and it keeps employees focused on real violations. Adjust model thresholds over time to reduce the burden on the moderation team.

Reducing Inference Time

Limit the number of frames sent to the model so that classification stays close to real time, even for long videos. Pre-block uploads from suspicious users by tracking abnormal account patterns. This reduces the number of uploads that must be fully processed.
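One way to cap the per-video cost is a fixed frame budget: spread at most `max_frames` sample positions evenly over the video, whatever its length. This is a sketch under that assumption; `budgeted_frame_indices` is a hypothetical helper, and the budget value would come from your latency target.

```python
def budgeted_frame_indices(total_frames, max_frames):
    """Return at most max_frames evenly spaced frame indices."""
    if total_frames <= 0 or max_frames <= 0:
        return []
    if total_frames <= max_frames:
        # Short video: every frame fits in the budget.
        return list(range(total_frames))
    step = total_frames / max_frames  # step > 1, so indices never repeat
    return [int(i * step) for i in range(max_frames)]
```

With a fixed budget, inference cost per video is bounded, at the price of coarser coverage on long videos. A very short violating segment can fall between samples, so the budget is a recall trade-off as well as a latency one.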

Performance Evaluation

Measure recall as the fraction of true violations that the system flags. Measure precision as the fraction of flagged videos that are truly inappropriate. Track false positives closely to preserve user trust and maintain engagement. Use a confusion matrix analysis on a validation set to decide final thresholds. Confirm the model’s performance with regular A/B tests in production.

Example Code Snippet

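    import cv2
    import numpy as np
    import torch


    def sample_frames(video_path, frame_interval=1):
        """Keep every `frame_interval`-th frame (1 keeps every frame)."""
        cap = cv2.VideoCapture(video_path)
        frames = []
        frame_count = 0
        while True:
            success, frame = cap.read()
            if not success:
                break
            if frame_count % frame_interval == 0:
                frames.append(frame)
            frame_count += 1
        cap.release()
        return frames


    def preprocess_frame(frame, size=224):
        """Assumed preprocessing: BGR -> RGB, resize, scale to [0, 1], NCHW.

        Replace this with the transform the model was trained with.
        """
        rgb = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)
        resized = cv2.resize(rgb, (size, size))
        array = resized.astype(np.float32) / 255.0
        return torch.from_numpy(array).permute(2, 0, 1).unsqueeze(0)


    def predict_inappropriate(frames, model, threshold):
        """Flag the video if any label on any sampled frame exceeds the threshold."""
        if not frames:
            return False  # No decodable frames to score; handle upstream.
        model.eval()
        scores = []
        for frame in frames:
            # Preprocess frame into model input
            input_tensor = preprocess_frame(frame)
            with torch.no_grad():
                logits = model(input_tensor)
            prob = torch.sigmoid(logits)  # Independent per-label probabilities
            # Suppose we care about any violation category
            scores.append(prob.max().item())
        final_score = max(scores)
        return final_score > threshold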

This code samples frames from a video at regular intervals, applies a pre-trained model, and uses the maximum predicted score across frames. If the maximum exceeds the threshold, the video is flagged.

Follow-Up Questions

1) How would you handle an evolving definition of “inappropriate” content?

Train the model on the existing known categories. Include a label for newly discovered unwanted content as soon as patterns emerge. Collect real examples for each novel category. Continuously retrain or fine-tune the model. Maintain a human-in-the-loop pipeline for cases the model has not seen before. This ensures fast adaptation.

2) How do you handle tradeoffs between a single multi-label model and multiple binary classifiers per label?

A single multi-label model reduces infrastructure overhead. It also trains all labels jointly, allowing shared feature representations. Multiple specialized binary classifiers can be fine-tuned more granularly for each label, which might boost performance for niche categories. If you have enough data and you need maximum precision for a label, consider a separate model for that label. If compute or serving capacity is a constraint, a single multi-label model is more efficient.

3) How do you manage repeated upload attempts by malicious users?

Track user metadata, IP addresses, and device signatures. Block or throttle uploads from suspicious sources. Store similarity hashes of removed content in a fast key-value store. Compare new submissions against these hashes. Instantly discard any near-duplicate content to spare resources.
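Near-duplicate matching is often done by comparing fixed-length similarity hashes with a Hamming distance. The sketch below assumes 64-bit hashes stored as Python integers and produced by some perceptual hashing step that is not shown here; `max_distance=6` is an illustrative value, not a recommendation.

```python
def hamming_distance(a, b):
    """Number of bit positions in which two integer hashes differ."""
    return bin(a ^ b).count("1")


def is_near_duplicate(candidate_hash, removed_hashes, max_distance=6):
    """True if the candidate is within max_distance bits of any removed hash."""
    return any(
        hamming_distance(candidate_hash, h) <= max_distance
        for h in removed_hashes
    )
```

This linear scan is only suitable for small sets. At scale, the lookup would need an index built for approximate matching, since an exact-match key-value lookup alone misses re-encoded or slightly edited copies.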

4) How would you evaluate threshold settings and decide the best tradeoff between recall and false positives?

Compute metrics across a labeled validation set. Start with recall = TP / (TP + FN) and precision = TP / (TP + FP). Move the threshold in small increments and measure how each step changes recall and the number of false positives. If missing harmful content is unacceptable, shift thresholds to keep recall high. Combine offline evaluation with a small online A/B test, carefully monitoring user satisfaction and flag rates.
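The threshold sweep can be sketched in a few lines of plain Python. This assumes per-video final scores and binary ground-truth labels from a validation set; the function names, the step size, and the sample data are illustrative.

```python
def precision_recall_at(scores, labels, threshold):
    """Precision and recall when videos with score > threshold are flagged."""
    pairs = list(zip(scores, labels))
    tp = sum(1 for s, y in pairs if s > threshold and y == 1)
    fp = sum(1 for s, y in pairs if s > threshold and y == 0)
    fn = sum(1 for s, y in pairs if s <= threshold and y == 1)
    precision = tp / (tp + fp) if tp + fp else 1.0
    recall = tp / (tp + fn) if tp + fn else 1.0
    return precision, recall


def highest_threshold_meeting_recall(scores, labels, target_recall, step=0.05):
    """Sweep thresholds upward and keep the last one that meets the recall target.

    Recall can only fall as the threshold rises, so the last threshold that
    meets the target is the highest one, and it flags the fewest videos.
    """
    best = None
    for i in range(int(round(1 / step)) + 1):
        threshold = i * step
        precision, recall = precision_recall_at(scores, labels, threshold)
        if recall >= target_recall:
            best = (threshold, precision, recall)
    return best


# Illustrative validation data: 1 = true violation, 0 = benign.
scores = [0.95, 0.9, 0.6, 0.42, 0.3, 0.1]
labels = [1, 1, 0, 1, 0, 0]
best = highest_threshold_meeting_recall(scores, labels, target_recall=1.0)
```

On this toy data, requiring full recall forces the threshold down to about 0.4, where one benign video is also flagged. That cost is exactly what the human review queue absorbs.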

5) How would you improve real-time processing performance for large volumes of videos?

Distribute the pipeline across multiple GPU instances. Use a streaming approach that processes frames as they come in. Cache intermediate CNN feature maps so repeated computations are minimized. Compile the model for an optimized inference runtime such as TensorRT for faster inference. Pre-filter known suspicious users so their uploads get instantly flagged for further checks.
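If the video-level score is the maximum over frames, streaming also allows an early exit: once any batch contains a frame above the threshold, the flag decision cannot change. The sketch below assumes that aggregation rule; `score_batch` is a hypothetical callable standing in for batched model inference and returning one score per frame.

```python
from itertools import islice


def batched(frames, batch_size):
    """Yield consecutive lists of up to batch_size frames from any iterable."""
    iterator = iter(frames)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return
        yield batch


def should_flag_streaming(frames, score_batch, threshold, batch_size=8):
    """Score frames batch by batch and stop at the first score above threshold."""
    for batch in batched(frames, batch_size):
        if max(score_batch(batch)) > threshold:
            return True  # Max aggregation: later frames cannot undo this.
    return False
```

The early exit only saves work on videos that end up flagged. A clean video still has every sampled frame scored, so the frame budget remains the main lever for worst-case latency.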

6) How would you handle domain-specific edge cases that your training data does not cover?

Curate a high-quality dataset covering edge cases. Gather feedback from users and moderators. Add domain-specific features or textual metadata when it helps. Hybrid approaches might combine visual cues with text in the video’s audio transcript. Fine-tune the neural network using new examples from these niche categories.

7) How do you handle content that requires context beyond a single frame, like borderline sexual imagery that depends on subtle cues over time?

Process consecutive frames in short intervals. Use an architecture that captures temporal patterns, such as 3D convolutions or transformer models with attention over time. Aggregate features from a sequence of frames to form a context-aware classification. If the user’s account has relevant behavioral signals, feed them into the model for more context.
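Before a temporal model can run, the frame sequence has to be cut into short clips of consecutive frames. The following sketch builds overlapping clip windows as index ranges. `make_clips` and its default clip length and stride are hypothetical, and the temporal model itself is not shown.

```python
def make_clips(num_frames, clip_len=16, stride=8):
    """Return (start, end) index ranges of overlapping clips, end exclusive."""
    if clip_len <= 0 or stride <= 0:
        raise ValueError("clip_len and stride must be positive")
    if num_frames <= 0:
        return []
    if num_frames <= clip_len:
        # Video shorter than one clip: use everything as a single clip.
        return [(0, num_frames)]
    clips = [
        (start, start + clip_len)
        for start in range(0, num_frames - clip_len + 1, stride)
    ]
    if clips[-1][1] < num_frames:
        # Add a final clip aligned to the end so trailing frames are covered.
        clips.append((num_frames - clip_len, num_frames))
    return clips
```

A stride smaller than the clip length makes neighboring clips overlap, so an event that straddles a clip boundary is still seen whole by at least one clip when it is shorter than the overlap.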

8) How would you ensure that your model does not unfairly flag content related to particular races, religious symbols, or artistic expressions?

Ensure diverse and representative training data. Collaborate with subject matter experts to label data in a way that accounts for cultural and artistic contexts. Evaluate fairness metrics across protected classes. Regularly audit flagged content for bias. If bias is detected, adjust data and model training to mitigate it. Combine algorithmic checks with human oversight.
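One concrete audit is to compare false positive rates across content groups on a labeled audit set. The sketch below assumes each record carries a group tag, the model's flag decision, and the ground-truth label. The record layout and function name are illustrative, and this is only one of several fairness metrics worth tracking.

```python
from collections import defaultdict


def false_positive_rate_by_group(records):
    """Compute FPR per group from (group, flagged, is_violation) records.

    FPR = benign items that were flagged / all benign items in the group.
    Groups with no benign items are omitted.
    """
    false_positives = defaultdict(int)
    negatives = defaultdict(int)
    for group, flagged, is_violation in records:
        if not is_violation:
            negatives[group] += 1
            if flagged:
                false_positives[group] += 1
    return {
        group: false_positives[group] / count
        for group, count in negatives.items()
    }
```

A large gap between groups at the same threshold suggests the model treats benign content from one group as riskier, which is a signal to revisit training data coverage or labeling guidelines for that group.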

9) How do you incorporate user feedback on flagged videos to refine the model?

Collect cases where users contest a removal. Route that content through a re-labeling process. Include the newly labeled data in model re-training. Periodically analyze contested videos. If many were flagged incorrectly, adjust thresholds or label definitions. Maintain a pipeline that updates the production model with the refined data.

10) How do you manage edge cases where the system incorrectly flags crucial business demonstration videos?

Allow a priority-based review queue. If a flagged video is from an established or highly trusted user, move it ahead in the human moderation queue. Collect re-labeled data from these priority cases. This ensures minimal disruption to important users while still maintaining platform safety. Adjust thresholds for certain user types, but keep monitoring for abuse.
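A priority-based review queue can be sketched with a binary heap. This minimal version assumes just two priority levels, trusted and other uploaders, and keeps first-in, first-out order within each level; the `ReviewQueue` class and its method names are hypothetical.

```python
import heapq
import itertools


class ReviewQueue:
    """Human-review queue that serves trusted uploaders' videos first."""

    def __init__(self):
        self._heap = []
        self._counter = itertools.count()  # Tie-breaker that preserves FIFO order

    def push(self, video_id, trusted_uploader):
        priority = 0 if trusted_uploader else 1  # Lower value is served first
        heapq.heappush(self._heap, (priority, next(self._counter), video_id))

    def pop(self):
        """Return the next video id to review."""
        return heapq.heappop(self._heap)[2]

    def __len__(self):
        return len(self._heap)
```

Note that strict priority can starve the lower tier when trusted uploads are frequent. A real queue would likely also factor in waiting time, which this sketch leaves out.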