ML Case-study Interview Question: Detecting Sensitive Data & Secrets at Scale with ML, Regex, and CI Hooks

Case-study question

You are tasked with building a data protection solution for a fast-growing company subject to strict privacy and security regulations. The company stores personal, sensitive, and secret data across various data stores and code repositories. How would you design an automated system that scans these data stores, application logs, and codebases to detect personal or sensitive information, and prevent secrets from leaking? Outline your technical approach and explain how your system would handle large-scale data, incorporate machine learning models for more complex detections, and integrate quality assurance and labeling processes to ensure reliable results. Also describe how you would handle secret detection and prevention within the code repository to reduce security risks.

Detailed solution

The essential challenge is automatically classifying personal and sensitive information at scale. The system must locate such data within databases, logs, and code repositories, then flag or prevent issues before they lead to privacy or security breaches. Below is a step-by-step outline of how to implement this solution.

Data classification platform design

The system requires two main services: a task management component (the Task Creator) and a scanning component (the Scanner). The Task Creator identifies items to scan; the Scanner samples data and applies detection methods. Because the two communicate through a queue, each can be scaled independently as data volume changes.

The Task Creator queries a centralized metadata service for all data assets across the company, including relational tables, data lake objects, and logs. It then creates and queues tasks in a messaging system. The Scanner picks up tasks, connects to the respective data store, randomly samples rows or bytes, and applies one or more detection methods.
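The Task Creator / Scanner split can be sketched in a few lines. This is a minimal, single-process illustration under stated assumptions: `queue.Queue` stands in for the real messaging system, a plain list of dicts stands in for the metadata service, and `fetch_rows`, the asset names, and the detector dictionary are hypothetical placeholders for real store connectors and verifiers.

```python
import queue
import random
from dataclasses import dataclass
from typing import Callable

@dataclass(frozen=True)
class ScanTask:
    asset_id: str     # identifier from the metadata service (hypothetical format)
    store_type: str   # e.g. "table", "lake_object", "log"
    sample_size: int  # how many rows/records the Scanner should sample

def create_tasks(assets: list[dict], task_queue: queue.Queue, sample_size: int = 100) -> None:
    """Task Creator: turn every asset from the metadata service into a queued scan task."""
    for asset in assets:
        task_queue.put(ScanTask(asset["id"], asset["type"], sample_size))

def run_scanner(task_queue: queue.Queue,
                fetch_rows: Callable[[str], list[str]],
                detectors: dict[str, Callable[[str], bool]],
                rng: random.Random) -> set[tuple[str, str]]:
    """Scanner: drain the queue, randomly sample each asset, apply every detector."""
    findings = set()
    while True:
        try:
            task = task_queue.get_nowait()
        except queue.Empty:
            break
        rows = fetch_rows(task.asset_id)
        # Random sample instead of a full scan keeps cost bounded per task.
        for row in rng.sample(rows, min(task.sample_size, len(rows))):
            for label, detect in detectors.items():
                if detect(row):
                    findings.add((task.asset_id, label))
        task_queue.task_done()
    return findings

# In-memory stand-ins for real data stores (hypothetical asset names).
STORES = {
    "crm.contacts": ["alice@example.com", "bob", "carol@example.org"],
    "app.logs": ["GET /health 200", "user login ok"],
}
assets = [{"id": "crm.contacts", "type": "table"}, {"id": "app.logs", "type": "log"}]
tasks: queue.Queue = queue.Queue()
create_tasks(assets, tasks, sample_size=2)
findings = run_scanner(tasks, STORES.__getitem__, {"EMAIL": lambda s: "@" in s},
                       random.Random(0))
print(findings)  # {('crm.contacts', 'EMAIL')}
```

In a deployed system many Scanner processes would consume from the same queue, which is what makes horizontal scaling straightforward.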

Detection methods

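Regex checks are used for data with predictable formats. For instance, every email address contains an "@" followed by a domain, so a carefully crafted regex can find candidates reliably. Tries handle dictionary-style patterns that do not fit a strict regex, such as lists of known names or keywords; the Aho-Corasick algorithm builds a trie over the whole dictionary and matches every entry in a single pass over the text. Machine learning methods handle more complex text formats and global language variability. Hardcoded (programmatic) methods fill the remaining gaps for edge cases such as IBANs, whose validity depends on a mod-97 checksum rather than on format alone.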
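As a rough sketch of the first two detection methods, the block below pairs a simplified email regex (deliberately not RFC 5322 complete) with a small Aho-Corasick matcher. The keyword list and sample text are illustrative assumptions; a production scanner would more likely use a maintained multi-pattern matching library than this hand-rolled version.

```python
import re
from collections import deque

# Regex verifier for a predictable format (simplified email pattern).
EMAIL_RE = re.compile(r"\b[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}\b")

class AhoCorasick:
    """Multi-keyword matcher: a trie over the keywords plus failure links."""

    def __init__(self, keywords):
        self.goto = [{}]   # trie edges per node
        self.fail = [0]    # failure link per node
        self.out = [[]]    # keywords recognized at each node
        for word in keywords:
            node = 0
            for ch in word:
                if ch not in self.goto[node]:
                    self.goto.append({})
                    self.fail.append(0)
                    self.out.append([])
                    self.goto[node][ch] = len(self.goto) - 1
                node = self.goto[node][ch]
            self.out[node].append(word)
        # Breadth-first pass computes failure links (depth-1 nodes keep link 0).
        pending = deque(self.goto[0].values())
        while pending:
            node = pending.popleft()
            for ch, child in self.goto[node].items():
                pending.append(child)
                f = self.fail[node]
                while f and ch not in self.goto[f]:
                    f = self.fail[f]
                self.fail[child] = self.goto[f].get(ch, 0)
                # Inherit matches that end at the failure target (suffix keywords).
                self.out[child] += self.out[self.fail[child]]

    def find(self, text):
        """Return (start_index, keyword) for every occurrence, in one pass over text."""
        node, hits = 0, []
        for i, ch in enumerate(text):
            while node and ch not in self.goto[node]:
                node = self.fail[node]
            node = self.goto[node].get(ch, 0)
            for word in self.out[node]:
                hits.append((i - len(word) + 1, word))
        return hits

print(EMAIL_RE.findall("contact: jane.doe@example.com, x@y"))  # ['jane.doe@example.com']
names = AhoCorasick(["alice", "alicia", "bob"])                 # hypothetical name dictionary
print(names.find("note from alicia and bob"))                   # [(10, 'alicia'), (21, 'bob')]
```

The matcher's cost grows with the length of the text plus the number of matches, not with the number of keywords, which is why tries suit large dictionaries better than one regex per entry.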

ML model hosting

Machine learning models can be served behind an internal platform that provides inference endpoints. When the Scanner encounters a data sample, it sends the text snippet to the model for classification, then interprets the returned probability or label to decide whether to flag the sample.
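The Scanner side of this interaction can be sketched as follows, assuming a hypothetical JSON contract where the endpoint accepts `{"text": ...}` and returns `{"label": ..., "probability": ...}`. The transport is injected as a function so the sketch runs without a network; in practice it would be an HTTP call to the internal inference platform. The label names, the 0.8 threshold, and the truncation length are illustrative assumptions.

```python
import json
from typing import Callable

# Hypothetical transport: takes a JSON request body, returns a JSON response body.
Transport = Callable[[str], str]

def classify_snippet(snippet: str, transport: Transport, threshold: float = 0.8,
                     max_chars: int = 2000) -> tuple[bool, str, float]:
    """Send a truncated snippet to the model and decide whether to flag it."""
    request = json.dumps({"text": snippet[:max_chars]})
    response = json.loads(transport(request))
    label, prob = response["label"], float(response["probability"])
    # Flag only a sensitive label whose confidence clears the threshold.
    flagged = label != "NONE" and prob >= threshold
    return flagged, label, prob

def fake_endpoint(body: str) -> str:
    """Stub standing in for the real model server, for local testing only."""
    text = json.loads(body)["text"]
    if "diagnosis" in text.lower():
        return json.dumps({"label": "HEALTH_DATA", "probability": 0.93})
    return json.dumps({"label": "NONE", "probability": 0.97})

print(classify_snippet("Patient diagnosis: type 2 diabetes", fake_endpoint))
# (True, 'HEALTH_DATA', 0.93)
```

Keeping the threshold on the Scanner side lets teams tune precision and recall per data store without redeploying the model.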

Quality measurement

It is critical to measure precision, recall, and accuracy. Labeled samples of known positives and known negatives are re-run through the detectors regularly. The labeled dataset grows over time, which improves ML model performance after periodic retraining.

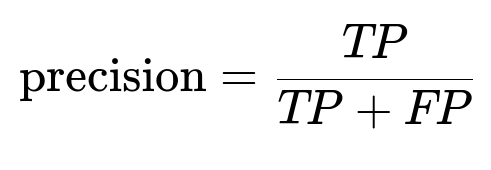

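Below is one of the key evaluation formulas used to measure performance, precision:

$$\text{Precision} = \frac{TP}{TP + FP}$$

where TP is the number of true positives (sensitive items correctly detected) and FP is the number of false positives (items incorrectly flagged). Recall and accuracy are defined analogously, with FN the number of false negatives (sensitive items missed) and TN the number of true negatives:

$$\text{Recall} = \frac{TP}{TP + FN}, \qquad \text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$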
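These metrics can be computed directly from a labeled sample set. The sketch below assumes detector output and ground truth are parallel lists of booleans (True meaning flagged or sensitive, respectively); returning 0.0 for an empty denominator is a reporting convention chosen here, not a standard.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Metrics:
    precision: float
    recall: float
    accuracy: float

def evaluate(predictions: list[bool], labels: list[bool]) -> Metrics:
    """Score detector output (True = flagged) against ground truth (True = sensitive)."""
    if len(predictions) != len(labels):
        raise ValueError("predictions and labels must have the same length")
    pairs = list(zip(predictions, labels))
    tp = sum(1 for p, y in pairs if p and y)
    fp = sum(1 for p, y in pairs if p and not y)
    fn = sum(1 for p, y in pairs if not p and y)
    tn = sum(1 for p, y in pairs if not p and not y)
    # Convention (an assumption): report 0.0 when a denominator is empty.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    accuracy = (tp + tn) / len(pairs) if pairs else 0.0
    return Metrics(precision, recall, accuracy)

m = evaluate([True, True, False, False, True], [True, False, False, True, True])
print(m)
```

This example contains two true positives, one false positive, one false negative, and one true negative, so precision and recall are both 2/3 and accuracy is 3/5.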

These metrics are computed regularly. Any changes to regex or ML models are tested against these labeled datasets to confirm improvements before production rollout.
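A rollout gate of this kind might look like the sketch below: a candidate regex or model version is compared with the current baseline on the same labeled dataset and approved only if no tracked metric regresses beyond a tolerance and at least one improves. The 0.005 tolerance and the choice to track only precision and recall are illustrative assumptions.

```python
from typing import Mapping

TRACKED = ("precision", "recall")

def approve_rollout(baseline: Mapping[str, float], candidate: Mapping[str, float],
                    tolerance: float = 0.005) -> tuple[bool, list[str]]:
    """Return (approved, reasons); both inputs are scored on the same labeled dataset."""
    reasons = []
    for metric in TRACKED:
        drop = baseline[metric] - candidate[metric]
        if drop > tolerance:
            reasons.append(f"{metric} regressed by {drop:.3f}")
    # "Confirm improvements": require at least one tracked metric to go up.
    if not any(candidate[m] > baseline[m] for m in TRACKED):
        reasons.append("no metric improved")
    return (not reasons, reasons)

baseline = {"precision": 0.91, "recall": 0.80}
print(approve_rollout(baseline, {"precision": 0.90, "recall": 0.86}))
print(approve_rollout(baseline, {"precision": 0.91, "recall": 0.84}))
```

The first candidate is rejected because precision drops by 0.010, while the second is approved because recall improves and nothing regresses.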

Secrets detection in code

The second part of the system focuses on detecting (and preventing) secrets in the company’s code repository. For example, production API keys or vendor credentials might be committed by mistake. The company sets up a continuous integration (CI) check that scans every commit with a specialized detection library. If a secret is found, the system creates a ticket and blocks the merge until the secret is rotated or removed.
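A CI check of this kind could combine known key formats with an entropy heuristic over only the lines a change adds. The sketch below is an assumption-laden illustration, not the specialized library the text refers to: the rule set is tiny, the 3.5-bit entropy threshold is arbitrary, and reported line numbers are positions within the diff text (mapping them back to file lines would require parsing hunk headers). `AKIAIOSFODNN7EXAMPLE` is the placeholder access key ID used in AWS documentation.

```python
import math
import re
from collections import Counter

# Illustrative rules only; real scanners ship much larger, tuned rule sets.
KEY_PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key_header": re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----"),
}
# A quoted value of 16+ chars assigned to a secret-sounding name.
ASSIGNED_TOKEN = re.compile(r"""(?i)(?:secret|token|api_?key|password)\s*[:=]\s*["']([^"']{16,})["']""")

def shannon_entropy(s: str) -> float:
    """Bits per character; random-looking tokens score high, repeated chars score low."""
    if not s:
        return 0.0
    n = len(s)
    return -sum(c / n * math.log2(c / n) for c in Counter(s).values())

def scan_diff(diff_text: str, entropy_threshold: float = 3.5) -> list[tuple[int, str]]:
    """Scan only added lines of a unified diff; return (line_in_diff, rule) findings."""
    findings = []
    for i, line in enumerate(diff_text.splitlines(), start=1):
        if not line.startswith("+") or line.startswith("+++"):
            continue  # skip context, removed lines, and file headers
        added = line[1:]
        for rule, pattern in KEY_PATTERNS.items():
            if pattern.search(added):
                findings.append((i, rule))
        match = ASSIGNED_TOKEN.search(added)
        if match and shannon_entropy(match.group(1)) >= entropy_threshold:
            findings.append((i, "high_entropy_assignment"))
    return findings

diff = "\n".join([
    "--- a/config.py",
    "+++ b/config.py",
    "@@ -1 +1,4 @@",
    " DEBUG = False",
    '+AWS_KEY = "AKIAIOSFODNN7EXAMPLE"',
    '+api_key = "9f8Kq2LmZx7Rt4Vb"',
    '+password = "aaaaaaaaaaaaaaaa"',
])
print(scan_diff(diff))  # [(5, 'aws_access_key_id'), (6, 'high_entropy_assignment')]
```

In a pipeline, the job would feed the change's diff into `scan_diff`, fail when findings are returned, and hand the findings to the ticketing step.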

For stronger security, a pre-commit hook can intercept secrets before they ever reach the remote repository. This reduces exposure risk and avoids expensive secret rotations later. Developers retain the option to exempt individual lines for rare false positives, but every such override should be reviewed carefully.
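A minimal local hook could read the staged changes with `git diff --cached -U0`, block on anything that looks like a secret, and honor a per-line exemption marker. The marker text `secret-scan: allow` and the two detection rules are hypothetical choices for this sketch, not the syntax of any particular tool.

```python
#!/usr/bin/env python3
"""Minimal pre-commit hook sketch (save as .git/hooks/pre-commit, make executable)."""
import re
import subprocess
import sys

# Hypothetical inline marker a developer adds to exempt a reviewed false positive.
ALLOW_MARKER = "secret-scan: allow"
SECRET_RE = re.compile(r"\bAKIA[0-9A-Z]{16}\b|-----BEGIN [A-Z ]*PRIVATE KEY-----")

def blocked_lines(diff_text: str) -> list[str]:
    """Return added lines that look like secrets and carry no exemption marker."""
    hits = []
    for line in diff_text.splitlines():
        if line.startswith("+") and not line.startswith("+++"):
            added = line[1:]
            if SECRET_RE.search(added) and ALLOW_MARKER not in added:
                hits.append(added.strip())
    return hits

def main() -> int:
    # Staged changes only, with zero context lines.
    diff = subprocess.run(["git", "diff", "--cached", "-U0"],
                          capture_output=True, text=True, check=True).stdout
    hits = blocked_lines(diff)
    for hit in hits:
        # Print only a prefix so the hook does not echo the full secret.
        print(f"possible secret: {hit[:12]}...", file=sys.stderr)
    return 1 if hits else 0
```

To activate it as a hook, the file would end with `sys.exit(main())`; Git aborts the commit when a pre-commit hook exits non-zero. Because local hooks are easy to skip, they complement rather than replace the CI check.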

Putting it all together

The complete solution combines multiple scanners for different data types, integrated quality controls, continuous improvement through labeled data, and code scanning. Together these help surface personal data, sensitive information, and credentials quickly. Any flagged data or code triggers an immediate alert, and automated ticketing workflows guide the owning teams to remediate issues promptly; fast, actionable feedback of this kind builds trust in the system and drives adoption.

Follow-up question 1: How do you ensure the data classification system scales effectively with continuous data growth?

A robust task queue lies at the core. New data stores are discovered and queued in smaller units. Each scanner container handles a moderate load by sampling a subset of rows or objects. Horizontal scaling is possible by increasing the pool of scanner instances. The system never attempts full scans of enormous tables. Instead, a random sampling approach catches representative data while limiting resource usage. Schedulers watch queue depth and automatically add scanner capacity as volume spikes.
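Two pieces of this scaling strategy can be sketched concretely: splitting a large table into bounded chunk tasks, and a queue-depth rule for sizing the scanner pool. Both assume hypothetical parameters (one million rows per chunk, 200 sampled rows per chunk, 50 queued tasks per scanner, a pool of 2 to 200 scanners) and assume the store can address chunks, for example by primary-key range. In a containerized deployment, a rule like `desired_scanners` could feed an autoscaler such as a Kubernetes HorizontalPodAutoscaler driven by an external queue-depth metric.

```python
import math
from dataclasses import dataclass

@dataclass(frozen=True)
class ChunkTask:
    asset_id: str
    chunk_index: int
    sample_rows: int  # rows to sample from this chunk, never the whole chunk

def split_into_tasks(asset_id: str, row_count: int, rows_per_chunk: int = 1_000_000,
                     sample_rows_per_chunk: int = 200) -> list[ChunkTask]:
    """Break a large table into fixed-size chunk tasks with a bounded sample each."""
    n_chunks = max(1, math.ceil(row_count / rows_per_chunk))
    tasks = []
    for i in range(n_chunks):
        rows_in_chunk = min(rows_per_chunk, row_count - i * rows_per_chunk)
        tasks.append(ChunkTask(asset_id, i, max(0, min(sample_rows_per_chunk, rows_in_chunk))))
    return tasks

def desired_scanners(queue_depth: int, tasks_per_scanner: int = 50,
                     min_scanners: int = 2, max_scanners: int = 200) -> int:
    """Queue-depth scaling rule: enough scanners to drain the backlog, within bounds."""
    needed = math.ceil(queue_depth / tasks_per_scanner)
    return max(min_scanners, min(max_scanners, needed))

print(len(split_into_tasks("events", 2_500_000)))          # 3
print([desired_scanners(d) for d in (0, 1234, 100_000)])   # [2, 25, 200]
```

Because each task's cost is capped by its sample size rather than by the table size, total work grows with the number of chunks, which the pool size can track.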

Follow-up question 2: How do you handle the risk of missing new data elements or formats not covered by existing verifiers?

Define modular verifiers and keep them in a database. When new formats or data elements appear (like new forms of personal data from a product launch), introduce new verifiers without redeploying. The system periodically refreshes its verifier list. The quality measurement pipeline helps identify false negatives over time, prompting additions or updates to the verifiers. Retraining ML models with newly labeled samples also helps expand coverage.
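A data-driven verifier registry could work roughly as below: stored rows describe each verifier, and the Scanner rebuilds its callables whenever it refreshes the list, so adding a row needs no redeploy. The row schema (`name`, `kind`, `pattern`, `function`) is a hypothetical design; each verifier is assumed to receive a single sampled field value. The built-in IBAN verifier follows the ISO 13616 rule: move the first four characters to the end, convert letters to numbers (A=10 through Z=35), and require the result mod 97 to equal 1.

```python
import re
from typing import Callable

Verifier = Callable[[str], bool]

def iban_checksum_ok(value: str) -> bool:
    """ISO 13616 mod-97 check on a single field value (spaces allowed)."""
    s = value.replace(" ", "").upper()
    if not re.fullmatch(r"[A-Z]{2}[0-9]{2}[A-Z0-9]{11,30}", s):
        return False
    rearranged = s[4:] + s[:4]
    digits = "".join(str(int(ch, 36)) for ch in rearranged)  # "A" -> "10", ..., "Z" -> "35"
    return int(digits) % 97 == 1

# Code-backed verifiers that stored rows may reference by name.
BUILTINS: dict[str, Verifier] = {"iban_checksum_ok": iban_checksum_ok}

def load_verifiers(rows: list[dict]) -> dict[str, Verifier]:
    """Turn stored verifier definitions into callables; call again to pick up new rows."""
    verifiers: dict[str, Verifier] = {}
    for row in rows:
        if row["kind"] == "regex":
            pattern = re.compile(row["pattern"])
            verifiers[row["name"]] = lambda text, p=pattern: bool(p.search(text))
        elif row["kind"] == "builtin":
            verifiers[row["name"]] = BUILTINS[row["function"]]
        else:
            raise ValueError(f"unknown verifier kind: {row['kind']}")
    return verifiers

rows = [  # hypothetical rows as fetched from a verifier table
    {"name": "EMAIL", "kind": "regex", "pattern": r"[\w.+-]+@[\w-]+\.[\w.]+"},
    {"name": "IBAN", "kind": "builtin", "function": "iban_checksum_ok"},
]
verifiers = load_verifiers(rows)
print(verifiers["IBAN"]("GB82 WEST 1234 5698 7654 32"))  # True
print(verifiers["EMAIL"]("no email here"))                # False
```

New regex verifiers become pure data changes; only genuinely new logic, such as another checksum, requires adding code to `BUILTINS`.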

Follow-up question 3: What if a developer bypasses the pre-commit hook or the CI checks when pushing secret credentials?

Developers can bypass local checks, for example by committing with `git commit --no-verify`, which skips the pre-commit hook. Mitigations include restricting repository write permissions, requiring the CI pipeline to pass before any merge, and preventing direct pushes to the main branch. The security team audits lines exempted from scanning and requires a justification for every override. If a secret is still pushed, the detection process triggers an alert and forces secret rotation, adding a further layer of monitoring for suspicious commits.
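The audit of exempted lines could be automated with a periodic job over a checked-out repository that lists every override and flags those without a stated reason. The override syntax `secret-scan: allow reason=<ticket>` and the file-suffix filter are hypothetical conventions for this sketch. Branch protection and required CI checks are configured in the repository hosting platform rather than in code, so they are not shown.

```python
import re
from pathlib import Path
from typing import Iterable, Iterator

# Hypothetical override syntax: "secret-scan: allow reason=<ticket or explanation>".
OVERRIDE_RE = re.compile(r"secret-scan: allow(?:\s+reason=(\S.*))?")

def audit_overrides(files: Iterable[tuple[str, str]]) -> list[dict]:
    """Report every scanner override; an override with no reason is unjustified."""
    report = []
    for name, text in files:
        for lineno, line in enumerate(text.splitlines(), start=1):
            match = OVERRIDE_RE.search(line)
            if match:
                report.append({"file": name, "line": lineno,
                               "justified": match.group(1) is not None})
    return sorted(report, key=lambda r: (r["file"], r["line"]))

def iter_source_files(root: Path,
                      suffixes: tuple[str, ...] = (".py", ".js", ".ts", ".yaml", ".yml"),
                      ) -> Iterator[tuple[str, str]]:
    """Yield (relative_path, contents) for source files under a checked-out repo."""
    for path in root.rglob("*"):
        if path.is_file() and path.suffix in suffixes:
            yield str(path.relative_to(root)), path.read_text(errors="ignore")

sample = [
    ("b.py", "ok = 1\nk = 'x'  # secret-scan: allow"),
    ("a.py", "t = 'y'  # secret-scan: allow reason=SEC-42"),
]
print(audit_overrides(sample))
```

Against a real checkout, `audit_overrides(iter_source_files(Path(".")))` yields the full list, and entries with `"justified": False` go to the security team for follow-up.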

Follow-up question 4: How would you handle unstructured data like freeform text fields or JSON objects?

Sample the raw text and feed it into multiple verifiers. Regex can pick out matching substrings, while tries match common strings from known dictionaries. ML models classify the text contextually. For logs, focus on the relevant logging points to reduce noise. Over time, label false positives and missed results to refine the scanning approach. This combination of regex, tries, and ML provides broad coverage of unstructured data fields.
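For JSON objects specifically, one reasonable approach (an assumption, not something the case study prescribes) is to flatten nested values into path/value pairs before running verifiers, so that key names such as `dob` supply context that the value alone lacks. The key hints and email pattern below are illustrative; values that no rule matches would be the natural candidates to send to the ML classifier. Keys that themselves contain dots would make the simple path split ambiguous.

```python
import json
import re
from typing import Any, Iterator

def flatten(obj: Any, path: str = "$") -> Iterator[tuple[str, str]]:
    """Yield (json_path, string_value) for every non-null scalar leaf."""
    if isinstance(obj, dict):
        for key, value in obj.items():
            yield from flatten(value, f"{path}.{key}")
    elif isinstance(obj, list):
        for i, value in enumerate(obj):
            yield from flatten(value, f"{path}[{i}]")
    elif obj is not None:
        yield path, str(obj)

EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.]+")
# Key-name hints add context a value-only check lacks (illustrative list).
SENSITIVE_KEY_HINTS = ("ssn", "dob", "birth", "passport", "phone")

def scan_json(raw: str) -> list[tuple[str, str]]:
    """Return (json_path, finding_type) for leaves matched by value or by key name."""
    findings = []
    for path, value in flatten(json.loads(raw)):
        key = path.rsplit(".", 1)[-1].lower()
        if EMAIL_RE.search(value):
            findings.append((path, "EMAIL"))
        elif any(hint in key for hint in SENSITIVE_KEY_HINTS):
            findings.append((path, "SENSITIVE_KEY"))
    return findings

raw = '{"user": {"email": "a.b@example.com", "dob": "1990-01-01", "tags": ["x", "y"]}, "note": "call me"}'
print(scan_json(raw))  # [('$.user.email', 'EMAIL'), ('$.user.dob', 'SENSITIVE_KEY')]
```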

Follow-up question 5: Why not use a third-party commercial solution?

Many commercial products excel at scanning certain storage types, but they often lack seamless integrations with custom internal databases and custom ML logic. They might not support a wide variety of data formats or logs. Their licensing can also be cost-prohibitive. Building in-house solutions provides flexibility, fine-tuned performance, and cost savings at scale.

Follow-up question 6: How do you sustain this platform over time?

Commit to ongoing maintenance: check scanning results daily or weekly, adapt verifiers to product changes, and retrain ML models with newly labeled data. Integrate feedback loops into agile development cycles. Provide developer education on best practices. Schedule periodic audits to confirm coverage across existing and emerging data stores. This ensures the platform remains robust as new requirements appear.
