ML Case-study Interview Question: Using LLMs to Automate Incident Root Cause Detection and Mitigation Suggestions.


Case-Study question

You are leading a data science team at a large-scale productivity service provider. The service supports millions of enterprise customers worldwide. Reliability incidents occur frequently, ranging from machine-reported issues with repetitive error patterns to unpredictable customer-facing disruptions. When an incident arises, engineers open a ticket describing the symptoms, error messages, and any logs. Historically, engineers have analyzed these tickets manually to identify root causes and propose mitigations, which is time-consuming and prone to human error. Propose a solution that uses large language models to automate this incident management process. How would you handle data gathering, model selection or training, evaluation metrics, and the operational flow from ticket ingestion to recommended actions?

Clarify how you would:

Ingest large volumes of incident data with varying levels of detail.

Decide on zero-shot usage versus fine-tuning or retrieval-augmented approaches.

Automate root cause detection and recommended mitigation steps at scale.

Evaluate results using both quantitative metrics and human validation.

Integrate continuous learning so that the system stays current with evolving environments.

Detailed solution

Overview of the Approach

Use large language models to parse incident ticket data and generate root cause hypotheses plus mitigation suggestions. Rely on historical incident descriptions, logs, and incident resolution notes as training or reference data. Incorporate a retrieval mechanism to fetch relevant past incidents, then feed them into a powerful generative model to produce recommendations for ongoing incidents.

Data Preparation

Gather all historical incident records and sanitize them for privacy. Split them into training and validation sets. Include essential details, such as incident titles, summaries, error messages, suspicious logs, or partial stack traces. Create a consistent format for the input-output mapping (incident description to root cause and mitigation plan).
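The consistent input-output format and the train/validation split can be sketched as follows. This is a minimal sketch that assumes each historical record is a dict with hypothetical keys `id`, `title`, `summary`, `error_message`, `logs`, `root_cause`, and `mitigation`. Those field names and the 80/20 split ratio are illustrative assumptions, not a required schema.

```python
import random
from dataclasses import dataclass


@dataclass
class Example:
    """One supervised pair: incident description -> root cause and mitigation."""
    incident_id: str
    source: str  # model input
    target: str  # expected model output


def to_example(record: dict) -> Example:
    # Hypothetical field names; missing optional fields become empty strings,
    # so sparse tickets still produce a well-formed input.
    source = "\n".join([
        f"Title: {record.get('title', '')}",
        f"Summary: {record.get('summary', '')}",
        f"Error: {record.get('error_message', '')}",
        f"Logs: {record.get('logs', '')}",
    ])
    target = f"Root cause: {record['root_cause']}\nMitigation: {record['mitigation']}"
    return Example(record["id"], source, target)


def train_val_split(examples, val_fraction=0.2, seed=0):
    # Shuffle a copy deterministically; the first n_val items become validation.
    shuffled = list(examples)
    random.Random(seed).shuffle(shuffled)
    n_val = int(len(shuffled) * val_fraction)
    return shuffled[n_val:], shuffled[:n_val]
```

A random split is only the simplest option. A chronological split (train on older incidents, validate on newer ones) better mirrors how the model will be used in production.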

Model Pipeline

Start with a pretrained large language model. First evaluate its zero-shot performance, then consider fine-tuning on your specific incident data. For deeper context, augment the model with a retrieval engine that searches for similar past incidents and includes their key details in the prompt. Grounding the prompt in incidents that were actually resolved tends to make the output more accurate and less speculative.
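Here is a minimal stand-in for the retrieval engine. It uses bag-of-words cosine similarity from the Python standard library, whereas a production system would more likely use dense embeddings and a vector index. The tokenizer, the `top_k` default, and the prompt layout are illustrative assumptions.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> Counter:
    # Lowercase word/number tokens: crude, but dependency-free.
    return Counter(re.findall(r"[a-z0-9_]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve_similar(query: str, past_incidents: dict, top_k: int = 3):
    """Return up to top_k (incident_id, score) pairs with nonzero similarity, best first."""
    q = tokenize(query)
    scored = [(iid, cosine(q, tokenize(text))) for iid, text in past_incidents.items()]
    scored = [s for s in scored if s[1] > 0]
    return sorted(scored, key=lambda s: s[1], reverse=True)[:top_k]


def build_prompt(query: str, past_incidents: dict, top_k: int = 3) -> str:
    # Include the retrieved incidents verbatim as grounding context.
    hits = retrieve_similar(query, past_incidents, top_k)
    context = "\n".join(f"[{iid}] {past_incidents[iid]}" for iid, _ in hits)
    return (f"Incident details:\n{query}\n\n"
            f"Similar historical incidents:\n{context}\n\n"
            "Suggest root cause and mitigation steps.")
```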

Fine-Tuning for Better Accuracy

Fine-tuning on domain-specific incident data can yield significant gains, especially for recurring incident types whose terminology and failure modes a general-purpose model has not seen. Supply the model with examples of incidents along with their detailed resolution notes, and train it to produce the correct root cause narrative and recommended steps. Reduce staleness by periodically updating the model with fresh incidents.
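Resolved incidents can be turned into fine-tuning data as shown below. This sketch assumes a chat-style format in which each JSONL line holds a `messages` list of system, user, and assistant turns. That layout is the one used by OpenAI's chat fine-tuning, and other providers may expect something different. The system prompt text and the record fields `description`, `root_cause`, and `mitigation` are hypothetical.

```python
import json

SYSTEM_PROMPT = ("You are an incident analyst. Given an incident description, "
                 "state the most likely root cause and the mitigation steps.")


def to_chat_record(incident: dict) -> dict:
    # The incident is the user turn; the resolved root cause and
    # mitigation form the target assistant turn.
    return {
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": incident["description"]},
            {"role": "assistant",
             "content": f"Root cause: {incident['root_cause']}\nMitigation: {incident['mitigation']}"},
        ]
    }


def to_jsonl(incidents) -> str:
    """Serialize resolved incidents as JSONL text, one training example per line."""
    lines = []
    for inc in incidents:
        # Unresolved incidents have no target text, so they cannot supervise the model.
        if not inc.get("root_cause") or not inc.get("mitigation"):
            continue
        lines.append(json.dumps(to_chat_record(inc), ensure_ascii=False))
    return "\n".join(lines) + ("\n" if lines else "")
```

The resulting string can be written to a `.jsonl` file and uploaded to whichever fine-tuning service is used.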

Performance Metrics

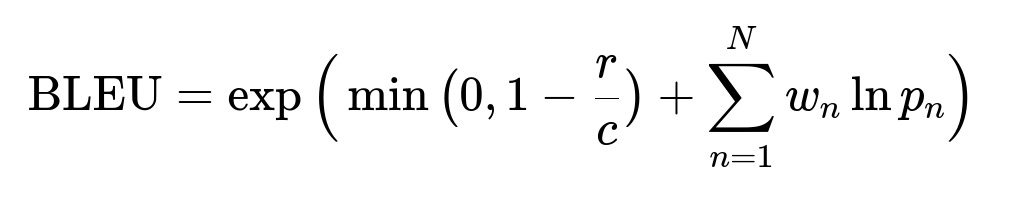

Accuracy of generated solutions is important. Compare generated root causes and mitigation steps with ground-truth data. Use both lexical similarity and semantic measures to see how closely the generated text aligns with actual resolutions. For a widely known lexical measure, BLEU (Bilingual Evaluation Understudy) is often used to compare text closeness.

Here, r is the reference length, c is the candidate length, p_n is the n-gram precision, and w_n is a weighting factor. For semantic similarity, methods like BERTScore or sentence embeddings can capture deeper alignment. Human-in-the-loop validation is always recommended. On-call engineers can rate whether the generated recommendations are plausible and helpful in real-time.
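The following is a from-scratch sketch of sentence-level BLEU for one candidate and one reference. It uses the symbols defined above (r, c, p_n, w_n), with uniform weights w_n = 1/N and clipped n-gram precision. The sketch applies no smoothing, so any n-gram order with zero matches drives the score to 0. This happens often with short mitigation texts. Libraries such as sacreBLEU or NLTK provide smoothed and corpus-level variants.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def bleu(candidate: str, reference: str, max_n: int = 4) -> float:
    cand, ref = candidate.split(), reference.split()
    c, r = len(cand), len(ref)
    if c == 0:
        return 0.0
    log_sum = 0.0
    for n in range(1, max_n + 1):
        cand_counts, ref_counts = ngrams(cand, n), ngrams(ref, n)
        # Clipped matches: each candidate n-gram counts at most as often as in the reference.
        matches = sum(min(cnt, ref_counts[g]) for g, cnt in cand_counts.items())
        total = sum(cand_counts.values())
        if matches == 0:
            return 0.0  # p_n = 0 makes log p_n undefined; unsmoothed BLEU is 0
        log_sum += (1.0 / max_n) * math.log(matches / total)  # w_n * log p_n
    bp = 1.0 if c > r else math.exp(1 - r / c)  # brevity penalty
    return bp * math.exp(log_sum)
```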

Operational Flow

Ticket Creation: An engineer or automated alert system creates an incident record.

Retrieval: A system fetches relevant past incidents with similar symptoms or logs.

LLM Processing: The model receives both the current incident description and the retrieved historical examples.

Root Cause & Mitigation: The model returns structured output describing likely root cause factors and steps to resolve.

Verification: On-call engineers confirm the suggestions or refine them.

Learning Loop: The system collects feedback on accuracy and stores final solutions for future training updates.
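The six steps above can be wired together as a minimal orchestration sketch. It assumes the model is prompted to return JSON with hypothetical keys `root_cause` and `mitigation_steps`. `retrieve` and `call_llm` are placeholders for the real retrieval index and model client, and an in-memory list stands in for the feedback database.

```python
import json
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Recommendation:
    incident_id: str
    root_cause: str
    mitigation_steps: List[str]
    status: str = "pending"  # pending -> accepted | revised
    final_resolution: str = ""


@dataclass
class IncidentPipeline:
    retrieve: Callable[[str], List[str]]  # description -> similar past incidents
    call_llm: Callable[[str], str]        # prompt -> raw model text (expected JSON)
    feedback_store: list = field(default_factory=list)  # stand-in for a feedback DB

    def recommend(self, incident_id: str, description: str) -> Recommendation:
        # Retrieval, then LLM processing with structured output.
        context = "\n".join(self.retrieve(description))
        prompt = (f"Incident:\n{description}\n\nSimilar incidents:\n{context}\n\n"
                  'Answer as JSON: {"root_cause": str, "mitigation_steps": [str]}')
        data = json.loads(self.call_llm(prompt))  # raises if the model ignored the format
        return Recommendation(incident_id, data["root_cause"], list(data["mitigation_steps"]))

    def verify(self, rec: Recommendation, accepted: bool, final_resolution: str) -> None:
        # Engineer verdict; the stored record feeds the learning loop.
        rec.status = "accepted" if accepted else "revised"
        rec.final_resolution = final_resolution
        self.feedback_store.append(rec)
```

Requesting JSON makes the output machine-checkable. A response that fails to parse can be retried or flagged instead of being shown to engineers as free text.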

Practical Implementation Notes

A simplified Python sketch of the core flow might look like this:

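```python
import json

from openai import OpenAI  # OpenAI Python SDK v1.x

client = OpenAI()  # reads the OPENAI_API_KEY environment variable


def get_incident_context(ticket_id):
    # Placeholder: retrieve historical incidents with similar logs
    # and return them as context text.
    return "Relevant context about past incidents..."


def generate_recommendation(ticket_info):
    prompt = f"Incident details:\n{json.dumps(ticket_info, indent=2)}\n"
    prompt += "Similar historical incidents:\n" + get_incident_context(ticket_info["id"])
    prompt += "\nSuggest root cause and mitigation steps.\n"

    # gpt-3.5-turbo is a chat model, so it is called through the Chat Completions API;
    # the legacy Completion endpoint does not accept it.
    response = client.chat.completions.create(
        model="gpt-3.5-turbo",
        messages=[{"role": "user", "content": prompt}],
        max_tokens=500,
        temperature=0.2,  # low temperature for more conservative, repeatable output
    )
    return response.choices[0].message.content


# Example usage:
ticket_data = {
    "id": "INC12345",
    "description": "Users see repeated server timeout errors in region X...",
}

suggestions = generate_recommendation(ticket_data)
print("Model suggestion:", suggestions)
```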

The code passes the ticket data plus retrieved context to an LLM, which responds with a candidate root cause summary and steps to fix. Engineers then approve or refine the suggestions.

Long-Term Maintenance

Fine-tune or retrain the model when new incident patterns arise, and keep the retrieval database updated. If the environment changes significantly, run an offline evaluation to confirm that the model is still accurate. A conversational variant, with the model acting as an incident facilitator, can extend this approach to chat interfaces where the model actively collaborates with engineers.

Possible Follow-Up Questions

1) How would you handle unstructured data like logs or raw telemetry at scale?

Text logs can be indexed using a document store or a specialized log-analytics pipeline. Encode them (or condensed summaries of them) as embeddings and store these alongside metadata (timestamp, severity, service identifiers). When an incident appears, match its relevant logs against prior known issues and pass this condensed log context to the LLM. In large-scale systems, shard the log store by service or region to make retrieval more efficient. Maintain near-real-time indexing so the logs remain fresh.
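Before embedding, raw log lines can be collapsed into templates, so that many near-duplicate lines become a handful of distinct patterns with counts. That makes a compact context for the LLM. Below is a minimal sketch of this idea plus a stable shard key for the log store. The masking regexes and the `service/region` shard key are assumptions. Dedicated log parsers, such as Drain-style template miners, handle this more robustly.

```python
import re
import zlib
from collections import Counter

# Illustrative masking rules; order matters (specific patterns before generic numbers).
MASKS = [
    (re.compile(r"\b\d{1,3}(?:\.\d{1,3}){3}\b"), "<IP>"),
    (re.compile(r"\b0x[0-9a-fA-F]+\b"), "<HEX>"),
    (re.compile(r"\b[0-9a-f]{8}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{12}\b"), "<UUID>"),
    (re.compile(r"\b\d+\b"), "<NUM>"),
]


def to_template(line: str) -> str:
    for pattern, token in MASKS:
        line = pattern.sub(token, line)
    return line


def summarize_logs(lines, top=5):
    """Most frequent templates with their counts: a compact context for the LLM."""
    return Counter(to_template(line) for line in lines).most_common(top)


def shard_for(service: str, region: str, num_shards: int) -> int:
    # crc32 is stable across processes, unlike Python's built-in hash() on str,
    # so the same service/region always maps to the same shard.
    return zlib.crc32(f"{service}/{region}".encode()) % num_shards
```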

2) What if the recommended steps from the model are partially incorrect or incomplete?

Always keep a human in the loop for final approval. Monitor how often engineers override the model’s suggestions, and if a pattern of systematic errors emerges, update your training data accordingly. Consider confidence scoring, either by comparing the model’s output with known resolution patterns or by using token-level probabilities as a rough measure of how certain the model is. Low-confidence responses can automatically request more details or be escalated to a specialized engineering team.
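Model certainty can be approximated from per-token log-probabilities, which many LLM APIs can return (for example, the `logprobs` option in OpenAI's Chat Completions API). This sketch averages them into a geometric-mean token probability and routes on a threshold. The 0.6 threshold and the routing labels are hypothetical and would need calibration against observed override rates. Token-level confidence is only a rough proxy, since models can be confidently wrong.

```python
import math


def sequence_confidence(token_logprobs) -> float:
    """Geometric-mean per-token probability, exp(mean log p), in [0, 1]."""
    if not token_logprobs:
        return 0.0
    return math.exp(sum(token_logprobs) / len(token_logprobs))


def route(token_logprobs, threshold: float = 0.6) -> str:
    # Hypothetical policy: confident answers go to on-call for approval;
    # low-confidence answers ask for more details or escalate.
    if sequence_confidence(token_logprobs) >= threshold:
        return "suggest_to_oncall"
    return "request_details_or_escalate"
```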

3) How do you deal with stale data in a rapidly evolving cloud environment?

Schedule periodic fine-tuning or retraining. Maintain a pipeline that ingests newly resolved incidents into the training set. Also consider a retrieval-augmented approach, so the model always has current examples at prompt time. If incidents require immediate reference to the latest changes (like new configurations or architecture updates), rely on an external knowledge base that is frequently refreshed. The model stays generic, while real-time context retrieval ensures up-to-date recommendations.
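One way to keep retrieval current is to blend similarity with an exponential recency decay. Under this scheme, a recently resolved incident outranks an equally similar one from before a major architecture change. The 90-day half-life and the multiplicative blend below are assumptions to tune, not a standard method.

```python
from datetime import datetime, timezone
from typing import Optional


def recency_weight(resolved_at: datetime, now: datetime, half_life_days: float = 90.0) -> float:
    # Weight halves every half_life_days: 1.0 today, 0.5 after one half-life.
    age_days = max((now - resolved_at).total_seconds() / 86400.0, 0.0)
    return 0.5 ** (age_days / half_life_days)


def rerank(candidates, now: Optional[datetime] = None, half_life_days: float = 90.0):
    """candidates: list of (incident_id, similarity, resolved_at); best blended score first."""
    now = now or datetime.now(timezone.utc)  # timestamps must be timezone-aware
    scored = [(iid, sim * recency_weight(ts, now, half_life_days)) for iid, sim, ts in candidates]
    return sorted(scored, key=lambda s: s[1], reverse=True)
```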

4) How do you measure success in real-world usage beyond lexical or semantic similarity?

Production metrics such as time-to-resolve (TTR) or time-to-mitigate (TTM) can demonstrate tangible benefits. If the model consistently reduces TTR for recurring issues, it justifies further investment. You can also track user satisfaction from on-call engineers. Ask them to rate each recommendation. If a mitigation step drastically reduces end-user impact or speeds up resolution, count that as a success. Combine direct user feedback with operational metrics for a fuller picture.
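The comparison can be sketched as median time-to-mitigate for incidents where the recommendation was used versus those where it was not. This assumes each incident record has hypothetical `opened`, `mitigated`, and `assisted` fields. Medians are used because incident durations are typically heavy-tailed. A real analysis should also control for severity and incident type, since assisted incidents may differ systematically from the rest.

```python
from datetime import datetime, timedelta
from statistics import median


def ttm_minutes(incident: dict) -> float:
    return (incident["mitigated"] - incident["opened"]).total_seconds() / 60.0


def compare_ttm(incidents):
    """Median time-to-mitigate (minutes) for assisted vs. unassisted incidents."""
    assisted = [ttm_minutes(i) for i in incidents if i["assisted"]]
    baseline = [ttm_minutes(i) for i in incidents if not i["assisted"]]
    return {
        "assisted_median": median(assisted) if assisted else None,
        "baseline_median": median(baseline) if baseline else None,
    }


if __name__ == "__main__":
    t0 = datetime(2024, 1, 1, 9, 0)
    demo = [
        {"opened": t0, "mitigated": t0 + timedelta(minutes=30), "assisted": True},
        {"opened": t0, "mitigated": t0 + timedelta(minutes=90), "assisted": False},
    ]
    print(compare_ttm(demo))
```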

5) Could hallucinations or incorrect root causes harm the system?

Any generative model can produce flawed recommendations if it lacks proper grounding. Minimize risk through strong retrieval augmentation, so the model’s reasoning references confirmed historical data. Make clear to on-call teams that suggestions are hypotheses, not verified fixes. Implement gating logic that routes critical or high-severity recommendations to mandatory human review before anyone acts on them. Over time, gather feedback about wrong or “hallucinated” suggestions and feed these cases back into training to reduce such errors.
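Gating logic could take the form shown in this sketch. It assumes hypothetical severity levels where 1 is the most critical, and a rule that a recommendation counts as grounded only if it cites at least one retrieved incident ID and no unretrieved ones. Anything high-severity or ungrounded goes to mandatory human review. The `INC` ticket-ID format is an assumption taken from the example ticket earlier in this article.

```python
import re

INCIDENT_ID = re.compile(r"\bINC\d+\b")  # hypothetical ticket-ID format


def gate(recommendation: str, severity: int, retrieved_ids: set, high_sev_cutoff: int = 2):
    """Return (needs_human_review, reason). Severity 1 is the most critical."""
    if severity <= high_sev_cutoff:
        return True, "high severity"
    cited = set(INCIDENT_ID.findall(recommendation))
    if not cited & retrieved_ids:
        # No reference to any retrieved incident: possibly ungrounded.
        return True, "no grounding in retrieved incidents"
    if cited - retrieved_ids:
        # Cites incidents the retriever never supplied: a hallucination signal.
        return True, "cites incidents that were not retrieved"
    return False, "grounded"
```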

6) What additional improvements would you implement to scale this system for enterprise-level reliability?

Deploy a robust monitoring system to track model output quality, usage patterns, and real-world resolution outcomes. Use a queue-based architecture in which each new incident is enqueued and a pool of workers runs retrieval and then inference, so that many incidents are processed in parallel. Pre-warm the retrieval index to handle sudden bursts of tickets. Implement concurrency checks to avoid conflicting updates if multiple engineers act simultaneously. Explore conversation-based solutions in which the system iterates on clarifications with the engineer before finalizing recommended steps.
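The queue-based design can be sketched with `asyncio`. Incidents are enqueued, and a fixed pool of workers processes them concurrently. Each worker runs retrieval and then inference, because inference needs the retrieved context. `retrieve` and `infer` are hypothetical stubs with simulated latency. The worker count bounds how many requests hit the model endpoint at once during a burst.

```python
import asyncio


async def retrieve(description: str) -> list:
    await asyncio.sleep(0.01)  # stand-in for a vector-index query
    return [f"past incident similar to: {description}"]


async def infer(description: str, context: list) -> str:
    await asyncio.sleep(0.01)  # stand-in for an LLM call
    return f"recommendation for {description!r} using {len(context)} examples"


async def worker(queue: asyncio.Queue, results: dict) -> None:
    while True:
        incident_id, description = await queue.get()
        try:
            context = await retrieve(description)  # inference depends on this context
            results[incident_id] = await infer(description, context)
        except Exception as exc:  # keep the worker alive; record the failure
            results[incident_id] = f"error: {exc}"
        finally:
            queue.task_done()


async def process_burst(incidents: dict, num_workers: int = 4) -> dict:
    queue: asyncio.Queue = asyncio.Queue()
    results: dict = {}
    for item in incidents.items():
        queue.put_nowait(item)
    workers = [asyncio.create_task(worker(queue, results)) for _ in range(num_workers)]
    await queue.join()  # wait until every incident has been processed
    for w in workers:
        w.cancel()
    await asyncio.gather(*workers, return_exceptions=True)
    return results
```

Usage: `asyncio.run(process_burst({"INC1": "timeout in region X"}))`. In production, a durable message queue would typically replace the in-process `asyncio.Queue` so that queued incidents survive restarts.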

7) How would you handle multilingual incidents?

Translate non-English incident descriptions into a single pivot language (for example, English) with a machine translation API. Pass the translated summary to the LLM for root cause analysis, and translate the output back to the original language if local engineers need it. Alternatively, use or fine-tune a multilingual LLM that reads tickets and logs in multiple languages directly. Either way, check that each translation step preserves key technical details such as error codes and identifiers.
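One way to make sure translation preserves technical details is to shield technical tokens behind placeholders before translation, restore them afterwards, and verify that none were lost. In this sketch, the token regex covers only hex codes, ALL_CAPS codes, and dotted identifiers, which is an illustrative assumption. `translate` stands in for whatever machine translation API is used.

```python
import re

# Hypothetical pattern for tokens that must survive translation verbatim:
# hex codes (0x1F), ALL_CAPS codes (ERR_TIMEOUT), dotted identifiers (api.contoso.net).
TECH_TOKEN = re.compile(r"\b(?:0x[0-9A-Fa-f]+|[A-Z][A-Z0-9_]{2,}|\w+(?:\.\w+)+)\b")


def protect(text: str):
    """Replace technical tokens with numbered placeholders; return text and mapping."""
    mapping = {}

    def _sub(m):
        key = f"__T{len(mapping)}__"
        mapping[key] = m.group(0)
        return key

    return TECH_TOKEN.sub(_sub, text), mapping


def restore(text: str, mapping: dict) -> str:
    for key, value in mapping.items():
        text = text.replace(key, value)
    return text


def translate_preserving(text: str, translate) -> str:
    protected, mapping = protect(text)
    restored = restore(translate(protected), mapping)
    missing = [v for v in mapping.values() if v not in restored]
    if missing:
        raise ValueError(f"translation dropped technical tokens: {missing}")
    return restored
```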

8) Could you adapt this approach for other IT Operations tasks?

Yes. The same approach applies to tasks like automated triage, classification of alerts, proactive anomaly detection, or maintenance scheduling. An LLM can parse and interpret logs, then suggest not only immediate fixes but also proactive monitoring rules. Data distribution shift can occur, so periodically validate the model as new system updates and patterns emerge.

9) How do you protect private or sensitive data in training logs?

Use a data anonymization layer that scrubs identifiers such as usernames, email addresses, or hostnames, or replaces them with synthetic placeholders, before training. Store mapping files in a secure vault if you need reverse lookup later. Strictly control access to the raw logs so only privileged data scientists or systems can handle them. Transformations like keyed hashing or tokenization (replacing values with consistent surrogate tokens) help maintain anonymity while preserving patterns for modeling.
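The anonymization layer might look like the sketch below. It assumes regex detection of email addresses, IPv4 addresses, and hostnames under a hypothetical internal domain `corp.example.com`. Each value is replaced by a keyed-hash (HMAC-SHA256) placeholder, so the same user or host maps to the same token across tickets and patterns are preserved. The reverse mapping is returned separately, for storage in a secure vault. Regexes miss free-text names, so production systems usually add a dedicated PII detector.

```python
import hashlib
import hmac
import re

# Hypothetical detectors; applied in this order and extended per data source.
PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    "IP": re.compile(r"\b\d{1,3}(?:\.\d{1,3}){3}\b"),
    "HOST": re.compile(r"\b[\w-]+\.corp\.example\.com\b"),  # assumed internal domain
}


def pseudonymize(text: str, key: bytes):
    """Return (scrubbed_text, reverse_mapping); the same value always gets the same placeholder."""
    reverse = {}
    for kind, pattern in PATTERNS.items():
        def _sub(m, kind=kind):
            digest = hmac.new(key, m.group(0).encode(), hashlib.sha256).hexdigest()[:10]
            placeholder = f"<{kind}_{digest}>"
            reverse[placeholder] = m.group(0)  # keep in a secure vault, not with the data
            return placeholder
        text = pattern.sub(_sub, text)
    return text, reverse
```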

10) Could a simpler classification approach suffice instead of a large language model?

A simple classifier might categorize broad incident types but struggle with writing detailed, coherent root cause statements and context-dependent mitigation steps. LLMs excel at producing textual explanations that incorporate the unique context of each incident. Classification alone rarely provides a full narrative. However, for certain routine tasks or well-known error codes, a lightweight approach might be cheaper and faster.
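A hybrid of the two can be sketched as a router. Well-known error codes are answered from a runbook lookup, and the LLM is called only for everything else. The error-code format, the runbook entries, and the `llm_fallback` callable are all hypothetical.

```python
import re
from typing import Callable

# Hypothetical runbook for recurring, machine-reported error codes.
RUNBOOK = {
    "ERR_DISK_FULL": "Expand the volume or purge old logs; see the storage runbook.",
    "ERR_CERT_EXPIRED": "Rotate the TLS certificate and restart the affected gateway.",
}
ERROR_CODE = re.compile(r"\bERR_[A-Z_]+\b")


def recommend(description: str, llm_fallback: Callable[[str], str]) -> tuple:
    """Return (source, recommendation): 'runbook' if a known code matches, else 'llm'."""
    for code in ERROR_CODE.findall(description):
        if code in RUNBOOK:
            return "runbook", RUNBOOK[code]  # cheap, fast, deterministic path
    return "llm", llm_fallback(description)
```

This keeps routine, high-volume alerts off the expensive model path while reserving the LLM for novel incidents that need a narrative explanation.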