ML Case-study Interview Question: Precise Ad Targeting: Uplift Decision Trees for Incremental Conversion Lift


Case-Study question

A major e-commerce platform wants to optimize its display ads by focusing on the incremental impact of showing each ad to a user. They have set up a randomized controlled trial with a treatment group that sees an ad and a control group that does not. Their goal is to build a model that identifies the users who will respond positively to the ads, while avoiding users who would have purchased anyway or who are put off by ads. They have user-level features (browsing history, purchase history, demographics, etc.), the treatment indicator (ad served or not), and the conversion outcome (purchase or not). Propose an uplift modeling solution to accurately quantify the incremental effect of the ads at the user level. Also propose how you would avoid suboptimal splits in the data (for example, splits that isolate most of the treatment group in one branch of the tree). Provide pseudocode or code snippets for any core implementation elements.

Provide your high-level solution plan, key model components, choice of algorithms, and reasoning behind your decisions. Show how you will evaluate the model before deploying it. Also outline any modifications needed if multiple treatments are tested simultaneously. Explain all logic.

Proposed Detailed Solution

Modeling Approach

Train an uplift decision tree that directly optimizes the difference in outcomes between treatment and control. Each node stores two probability distributions: one for the treatment group outcome, another for the control group outcome. Splits are chosen to maximize the divergence between these two distributions in the resulting child nodes.

Key Formulae

These two expressions measure how far apart the treatment and control outcome distributions are at a node. KL stands for Kullback-Leibler Divergence; ED stands for Squared Euclidean Distance. p_i and q_i are the probabilities of outcome class i for the treatment and control groups.
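Written out in the usual notation, with P the treatment outcome distribution and Q the control outcome distribution at a node (for a binary conversion outcome, i ranges over {no purchase, purchase}):

```latex
KL(P : Q) = \sum_i p_i \log \frac{p_i}{q_i}
\qquad
ED(P : Q) = \sum_i (p_i - q_i)^2
```

KL becomes infinite when some q_i = 0 while p_i > 0, so implementations usually smooth the estimated probabilities; ED has no such problem.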

This conditional divergence measures how far apart the distributions are in child nodes formed by a split test A. N(a) is the count in child node a, and N is the count in the parent node.
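In the same notation, with D standing for either KL or ED, and P^T(Y | a) and P^C(Y | a) the treatment and control outcome distributions in child a:

```latex
D\big(P^T(Y) : P^C(Y) \mid A\big) = \sum_{a} \frac{N(a)}{N}\, D\big(P^T(Y \mid a) : P^C(Y \mid a)\big)
```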

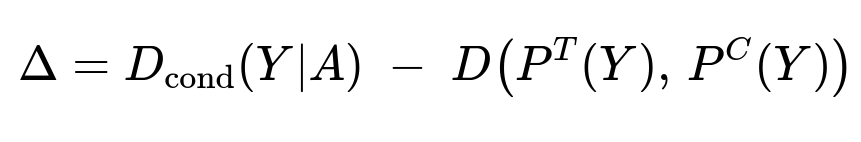

This gain term represents the improvement in separation of treatment vs. control after the split. The node chooses a split that maximizes this gain.
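Written out, where D(· | A) is the size-weighted divergence over the children of test A and the second term is the divergence at the parent node:

```latex
D_{\text{gain}}(A) = D\big(P^T(Y) : P^C(Y) \mid A\big) - D\big(P^T(Y) : P^C(Y)\big)
```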

This normalization term (for Euclidean Distance) penalizes uneven splits, where the treatment and control groups are divided very differently between the children, and splits with too many children. N(a)/N is the proportion of samples in child node a. The bracket includes a small epsilon to avoid division by zero.
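For reference, one published form of this normalizer for the Euclidean criterion, from Rzepakowski and Jaroszewicz's work on uplift decision trees, is shown below; it may differ in detail from the variant described above. Here N^T and N^C are the treatment and control counts at the parent, P^T(A) assigns N^T(a)/N^T to each child a (and P^C(A) likewise for control), and Gini(p) = 1 - Σ p_i²:

```latex
J(A) = \mathrm{Gini}\!\left(\frac{N^T}{N}, \frac{N^C}{N}\right) ED\big(P^T(A) : P^C(A)\big)
     + \frac{N^T}{N}\,\mathrm{Gini}\big(P^T(A)\big)
     + \frac{N^C}{N}\,\mathrm{Gini}\big(P^C(A)\big) + \frac{1}{2}
```

The split search then maximizes the divergence gain described above divided by J(A). The first term grows when the split sends different shares of the treatment and control groups to the same child, the Gini terms grow with the number of children, and the constant 1/2 keeps the denominator away from zero.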

Implementation Explanation

For each feature, enumerate the candidate splits, compute the divergence gain of each, divide it by the normalization factor, and choose the split with the largest ratio. Each leaf of the tree stores an estimated uplift: the predicted conversion probability under treatment minus the predicted conversion probability under control.

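Code Snippet (Python)

A runnable version of the pseudocode, under stated assumptions: a binary outcome, the squared Euclidean divergence, numeric features split at thresholds, and the Rzepakowski and Jaroszewicz normalizer standing in for compute_normalization_factor. It is a sketch, not an optimized implementation.

```python
import numpy as np

# Assumptions: y is a 0/1 outcome array, t is a 0/1 treatment array (1 = ad shown),
# X is a 2-D numeric array, and every split is a threshold test X[:, j] <= thr.


def outcome_dist(y):
    """Outcome distribution [P(y=0), P(y=1)] of a non-empty 0/1 array."""
    rate = float(np.mean(y))
    return np.array([1.0 - rate, rate])


def ed(p, q):
    """Squared Euclidean distance between two distributions."""
    return float(np.sum((np.asarray(p) - np.asarray(q)) ** 2))


def gini(p):
    return 1.0 - float(np.sum(np.asarray(p) ** 2))


def divergence(y, t):
    """Divergence between the treatment and control outcomes in one node."""
    return ed(outcome_dist(y[t == 1]), outcome_dist(y[t == 0]))


def normalization(t, left):
    """Normalizer J(A) for a binary split (Rzepakowski and Jaroszewicz form)."""
    n_t, n_c = np.sum(t == 1), np.sum(t == 0)
    n = n_t + n_c
    share_t = np.mean(left[t == 1])  # fraction of treatment rows sent left
    share_c = np.mean(left[t == 0])  # fraction of control rows sent left
    p_t, p_c = [share_t, 1 - share_t], [share_c, 1 - share_c]
    return (gini([n_t / n, n_c / n]) * ed(p_t, p_c)
            + n_t / n * gini(p_t) + n_c / n * gini(p_c) + 0.5)


def build_uplift_tree(X, y, t, depth=0, max_depth=3, min_group=50):
    """Grow an uplift tree as nested dicts; min_group must be at least 1.

    Every node stores its uplift (treated rate minus control rate); the
    values stored at leaves are the model's predictions.
    """
    node = {"uplift": y[t == 1].mean() - y[t == 0].mean()}
    if depth >= max_depth:  # stopping criterion: maximum depth
        return node
    parent_div = divergence(y, t)  # identical for all candidates, so compute once
    best_gain, best = 0.0, None
    for j in range(X.shape[1]):
        for thr in np.unique(X[:, j])[:-1]:
            left = X[:, j] <= thr
            # Stopping criterion: each child needs enough treatment AND control rows.
            if min(np.sum(left & (t == 1)), np.sum(left & (t == 0)),
                   np.sum(~left & (t == 1)), np.sum(~left & (t == 0))) < min_group:
                continue
            w = np.mean(left)  # N(left) / N
            cond_div = (w * divergence(y[left], t[left])
                        + (1 - w) * divergence(y[~left], t[~left]))
            gain = (cond_div - parent_div) / normalization(t, left)
            if gain > best_gain:
                best_gain, best = gain, (j, thr, left)
    if best is None:  # no valid split improves separation: this node is a leaf
        return node
    j, thr, left = best
    node["feature"], node["threshold"] = j, thr
    node["left"] = build_uplift_tree(X[left], y[left], t[left], depth + 1, max_depth, min_group)
    node["right"] = build_uplift_tree(X[~left], y[~left], t[~left], depth + 1, max_depth, min_group)
    return node


def predict_uplift(node, x):
    """Follow the splits for one feature vector x and return the leaf uplift."""
    while "feature" in node:
        node = node["left"] if x[node["feature"]] <= node["threshold"] else node["right"]
    return node["uplift"]


# Usage: tree = build_uplift_tree(X, y, t); predict_uplift(tree, X[0])
```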

Stopping criteria can involve a minimum number of treatment/control samples in a node to ensure statistically reliable estimates. Once the tree is grown, each leaf node’s average outcome in treatment minus average outcome in control is used as that node’s uplift prediction.

Handling Multiple Treatments

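Use the same strategy, but store a separate outcome distribution for each treatment variant plus one for the control group. Extend the divergence measure to several distributions, for example by averaging the divergence of each treatment from control and, optionally, the divergences between pairs of treatments. Evaluate the gain from a split by how much it increases this combined divergence, and at each leaf recommend the variant with the largest estimated uplift (or no ad if none is positive).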
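A minimal sketch of one way to combine divergences across several treatments, assuming binary outcome distributions and the squared Euclidean distance. The equal-weight averaging and the `alpha` weight between treatment-vs-control and treatment-vs-treatment terms are assumptions for illustration, not a fixed standard; `best_treatment` is a hypothetical helper for the per-leaf decision.

```python
import numpy as np


def ed(p, q):
    """Squared Euclidean distance between two outcome distributions."""
    return float(np.sum((np.asarray(p, dtype=float) - np.asarray(q, dtype=float)) ** 2))


def multi_treatment_divergence(treatment_dists, control_dist, alpha=0.5):
    """Combine divergences for k treatment variants and one control group.

    alpha (hypothetical weight) balances treatment-vs-control separation
    against separation between the treatment variants themselves.
    """
    k = len(treatment_dists)
    vs_control = sum(ed(p, control_dist) for p in treatment_dists) / k
    pairs = [(i, j) for i in range(k) for j in range(i + 1, k)]
    if not pairs:  # a single treatment reduces to the plain two-group ED
        return vs_control
    between = sum(ed(treatment_dists[i], treatment_dists[j]) for i, j in pairs) / len(pairs)
    return alpha * vs_control + (1 - alpha) * between


def best_treatment(rates, control_rate):
    """Variant with the largest positive uplift at a leaf, or None (show no ad)."""
    uplifts = {name: rate - control_rate for name, rate in rates.items()}
    name = max(uplifts, key=uplifts.get)
    return name if uplifts[name] > 0 else None


# Two ad variants vs. control, distributions given as [P(no purchase), P(purchase)].
score = multi_treatment_divergence([[0.9, 0.1], [0.8, 0.2]], [0.95, 0.05])
choice = best_treatment({"banner": 0.06, "video": 0.08}, control_rate=0.05)
```

Here `score` is 0.0225 and `choice` is "video". Including the between-treatment term rewards splits that also tell the variants apart, which matters when the goal is to pick a variant per user rather than just decide whether to show an ad.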

Evaluation Strategy

Use uplift metrics such as the Qini curve or uplift at the top K% on a held-out validation set drawn from the randomized trial. These measure how well the model ranks individuals by predicted incremental benefit. A typical approach:

Sort users by predicted uplift.

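Split them into equal-sized buckets (for example, deciles).

Within each bucket, compute the observed conversion rate of treated users minus that of control users. A good model shows the largest observed uplift in the top buckets, declining toward the bottom.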
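A minimal NumPy sketch of both checks, assuming `scores` are predicted uplifts, `y` is the 0/1 conversion, and `t` the 0/1 treatment flag on held-out trial data. `qini_curve` uses the common definition Q(k) = Y_T(k) - Y_C(k) * N_T(k) / N_C(k), where Y_T(k) and N_T(k) are the treated conversions and treated users among the top k by score (and likewise for control).

```python
import numpy as np


def uplift_by_bucket(scores, y, t, n_buckets=10):
    """Observed uplift (treated rate minus control rate) per bucket, best scores first."""
    order = np.argsort(-np.asarray(scores, dtype=float), kind="stable")
    y, t = np.asarray(y, dtype=float)[order], np.asarray(t)[order]
    result = []
    for idx in np.array_split(np.arange(len(y)), n_buckets):
        treated, control = y[idx][t[idx] == 1], y[idx][t[idx] == 0]
        if len(treated) == 0 or len(control) == 0:
            result.append(float("nan"))  # bucket has no treated or no control users
        else:
            result.append(float(treated.mean() - control.mean()))
    return result


def qini_curve(scores, y, t):
    """Incremental conversions when targeting the top-k users, for k = 1..n."""
    order = np.argsort(-np.asarray(scores, dtype=float), kind="stable")
    y, t = np.asarray(y, dtype=float)[order], np.asarray(t)[order]
    conv_t = np.cumsum(y * (t == 1))  # treated conversions in the top k
    conv_c = np.cumsum(y * (t == 0))  # control conversions in the top k
    n_t = np.cumsum(t == 1)
    n_c = np.cumsum(t == 0)
    # Rescale control conversions to the treated group size; 0 where no control yet.
    scale = np.divide(n_t, n_c, out=np.zeros(len(y)), where=n_c > 0)
    return conv_t - conv_c * scale
```

A useful model's Qini curve rises above the straight line from zero to the overall incremental conversions at k = n; the area between the two is a common single-number summary for comparing models.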

Avoiding Suboptimal Splits

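Without normalization, the gain criterion is biased toward splits that send most treatment samples to one child and most control samples to the other: such a child contains very few samples from one group, so that group's outcome estimate is noisy and can look highly divergent by chance. Dividing the raw gain by the normalization term penalizes these unbalanced splits. Also impose a minimum node size for both the treatment and control subsets, so that each leaf has enough data from both groups to estimate its uplift reliably.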
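To see the penalty at work, a small sketch that evaluates a Rzepakowski and Jaroszewicz style normalizer J(A) for two candidate binary splits of the same parent node, plus a minimum-support check. The normalizer form and the `min_group` default of 50 are assumptions for illustration, not values from the case study.

```python
def gini(p):
    """Gini impurity 1 - sum(p_i^2) of a discrete distribution."""
    return 1.0 - sum(x * x for x in p)


def ed(p, q):
    """Squared Euclidean distance between two distributions."""
    return sum((a - b) ** 2 for a, b in zip(p, q))


def split_normalizer(nt_left, nt_right, nc_left, nc_right):
    """J(A) for a binary split, given treatment/control counts in each child."""
    n_t, n_c = nt_left + nt_right, nc_left + nc_right
    n = n_t + n_c
    p_t = [nt_left / n_t, nt_right / n_t]  # how treatment rows are divided
    p_c = [nc_left / n_c, nc_right / n_c]  # how control rows are divided
    return (gini([n_t / n, n_c / n]) * ed(p_t, p_c)
            + n_t / n * gini(p_t) + n_c / n * gini(p_c) + 0.5)


def has_min_support(nt_left, nt_right, nc_left, nc_right, min_group=50):
    """Reject splits leaving fewer than min_group treated or control rows in a child."""
    return min(nt_left, nt_right, nc_left, nc_right) >= min_group


# Same parent (1,000 treated, 1,000 control), two candidate splits:
balanced = split_normalizer(500, 500, 500, 500)  # both groups split 50/50
lopsided = split_normalizer(900, 100, 100, 900)  # left child is mostly treated
```

Here `balanced` is 1.0 and `lopsided` is 1.32, so the lopsided split needs about 32% more raw gain to win. The support check is a separate, hard constraint: with `min_group=200` the lopsided split is rejected outright.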

Potential Follow-up Questions

How do you handle sparse features when searching for splits?

One-hot encode categorical variables or map them to integer codes, and group rare levels into a shared level to avoid over-splitting. For each candidate split, check that both children still contain enough treatment and control samples. High-cardinality features can also be handled through feature hashing or domain-specific groupings.
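A minimal sketch of the two preprocessing steps, rare-level grouping and feature hashing. The function names, the `min_count` threshold, and the `"__other__"` label are hypothetical choices for illustration.

```python
import zlib
from collections import Counter


def group_rare_levels(values, min_count=100, other="__other__"):
    """Replace categories seen fewer than min_count times with one shared level."""
    counts = Counter(values)
    return [v if counts[v] >= min_count else other for v in values]


def hash_level(value, n_buckets=1024):
    """Stable hash bucket for a high-cardinality categorical value."""
    # zlib.crc32 is deterministic across runs, unlike Python's salted str hash().
    return zlib.crc32(str(value).encode("utf-8")) % n_buckets


cities = ["paris", "paris", "lyon", "nice", "paris"]
grouped = group_rare_levels(cities, min_count=2)
```

Bucket ids have no meaningful order, so they are best one-hot encoded before threshold splits are searched; collisions merge unrelated levels, which is the price of bounding cardinality.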

How do you choose hyperparameters for this uplift tree?

Set maximum depth, minimum samples per leaf for each treatment/control group, and the minimum divergence gain for a split. Grid-search these with a validation set. Evaluate performance by measuring how much real incremental uplift the top deciles deliver.
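A minimal grid-search sketch over the hyperparameters listed above. The `fit_and_score` callback is hypothetical: it would train a tree with the given settings and return a validation uplift metric (for example, observed uplift in the top decile) computed on held-out randomized data, with higher meaning better.

```python
from itertools import product


def grid_search(fit_and_score, grid):
    """Try every combination in grid; return (best_score, best_params)."""
    keys = list(grid)
    best_score, best_params = float("-inf"), None
    for values in product(*(grid[k] for k in keys)):
        params = dict(zip(keys, values))
        score = fit_and_score(**params)
        if score > best_score:  # ties keep the first combination tried
            best_score, best_params = score, params
    return best_score, best_params


grid = {"max_depth": [3, 5, 8], "min_group": [50, 200], "min_gain": [0.0, 1e-4]}
```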

Why not build two separate probability models and subtract their outputs?

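The two-model approach (fit one conversion model on the treatment group and one on the control group, then subtract their predictions) can fail when the uplift signal is faint. Each model is fit to predict conversion itself, so its splits and regularization follow the strongest predictors of buying, not of the treatment effect; when the uplift is small relative to the baseline rate, the two models' errors do not cancel and can swamp the difference. A direct uplift model chooses its splits by the treatment-control difference itself, so it targets that signal more directly.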

How do you ensure randomization holds in practice?

Check that user assignment to treatment or control is indeed random. Validate that the distribution of user-level features is roughly comparable across the two groups and that the group sizes match the planned allocation. If either check fails badly, the unconfoundedness assumption is in doubt, which reduces the reliability of the uplift estimates.
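A minimal sketch of two common randomization checks: the per-feature standardized mean difference (SMD) between groups, and a z-score for the observed treatment count against the planned allocation (a sample ratio mismatch check). The thresholds in the note below are widely used rules of thumb, not values from the case study.

```python
import math

import numpy as np


def standardized_mean_diff(X, t):
    """Per-feature standardized mean difference between treatment and control."""
    X, t = np.asarray(X, dtype=float), np.asarray(t)
    xt, xc = X[t == 1], X[t == 0]
    pooled_sd = np.sqrt((xt.var(axis=0, ddof=1) + xc.var(axis=0, ddof=1)) / 2)
    # Guard constant features (pooled_sd == 0) against division by zero.
    return (xt.mean(axis=0) - xc.mean(axis=0)) / np.where(pooled_sd > 0, pooled_sd, 1.0)


def sample_ratio_z(n_t, n_c, planned_share=0.5):
    """z-score of the observed treatment count against the planned allocation."""
    n = n_t + n_c
    return (n_t - n * planned_share) / math.sqrt(n * planned_share * (1 - planned_share))
```

Features with |SMD| above about 0.1 are commonly flagged for review, and a sample-ratio |z| above about 3 usually points to a bug in assignment or logging rather than chance.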

How do you handle data leakage?

Remove features that could be influenced by the treatment itself or that are correlated with treatment assignment. A feature that is only known after the ad is served (for example, a click on that ad) leaks information about the treatment and the outcome into the model. Keep only features available at decision time, so that offline results are not inflated.
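A minimal point-in-time feature sketch: features are built only from events logged strictly before the ad impression. The `(timestamp, event_type)` schema and the function name are hypothetical.

```python
def features_before(events, impression_time):
    """Count user events by type, using only events logged before the impression.

    events: iterable of (timestamp, event_type) pairs (hypothetical schema).
    Events at or after impression_time may be caused by the ad, so they are excluded.
    """
    counts = {}
    for ts, kind in events:
        if ts < impression_time:
            counts[kind] = counts.get(kind, 0) + 1
    return counts


events = [(1, "view"), (2, "cart"), (5, "ad_click"), (6, "purchase")]
features = features_before(events, impression_time=3)
```

Here `features` keeps only the view and cart events; the ad click and the purchase, which happen after the impression, never reach the model.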

How do you handle cold-start users with no history?

Train a default model or fallback node with minimal features. Or use simple demographic or context features that are usually available. As more data accumulates, update the user profile. Alternatively, use hierarchical modeling or a hybrid approach that learns global patterns and then refines for individuals with more data.

How would you extend this approach to real-time bidding scenarios?

Compute uplift scores in real-time based on user context. Use a fast model (like a shallow ensemble of trees). Score each impression, compute estimated uplift, and set a bid strategy accordingly. Use partial feedback loops for online learning if new conversion data arrives continuously.

How do you account for cost vs. reward?

Include cost in the objective. For a marketing campaign with cost c per ad, measure net benefit = (expected incremental revenue) - c. Modify the splitting criterion to reflect net gain. Alternatively, post-process predicted uplift by removing users with negative or low net gains before final decisions.
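A minimal post-processing sketch, assuming uplift is the predicted increase in purchase probability, `value_per_conversion` is a single average revenue figure, and `cost_per_ad` is constant; all three are simplifying assumptions.

```python
def select_users(uplift, value_per_conversion, cost_per_ad):
    """Return indices of users with positive expected net benefit, best first.

    Net benefit = uplift * value_per_conversion - cost_per_ad.
    """
    net = [(u * value_per_conversion - cost_per_ad, i) for i, u in enumerate(uplift)]
    return [i for gain, i in sorted(net, reverse=True) if gain > 0]


chosen = select_users([0.02, 0.001, 0.05, -0.01], value_per_conversion=50, cost_per_ad=0.2)
```

Here `chosen` is `[2, 0]`: the second user has positive uplift but not enough to cover the ad cost, and the last user has negative uplift.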

How do you validate the business impact beyond offline metrics?

Run A/B tests with a subset of traffic. Compare revenue lift or conversion lift between a group targeted via the model vs. a control group. Verify that the differences match offline metrics. If consistent, move forward with larger-scale deployment.
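A minimal sketch of the significance check for such a test, assuming users are randomly split between a model-targeted arm (B) and a control arm (A) and the metric is conversion rate. It uses the standard pooled two-proportion z-test; revenue lift would need a test on means instead.

```python
import math


def two_proportion_z(conv_a, n_a, conv_b, n_b):
    """Pooled z statistic and two-sided p-value for conversion rate B vs. A."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    p = (conv_a + conv_b) / (n_a + n_b)  # pooled rate under the null hypothesis
    se = math.sqrt(p * (1 - p) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p_value
```

For example, 100 conversions out of 1,000 in control versus 130 out of 1,000 in the targeted arm gives z of about 2.1 and a p-value of about 0.036.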

Can you combine uplift modeling with deep learning?

Yes. One approach uses a two-headed neural network that shares lower layers and outputs separate predictions for treatment and control. Another trains the network directly on an uplift objective, for example by regressing on a transformed outcome whose expected value equals the uplift under randomization. The principle remains the same: align the architecture to capture the differential effect of treatment vs. control. However, interpretability might drop, so care is required in explaining the model’s decisions.