ML Interview Q Series: Calculating Output Shape for Multi-Dimensional Neural Network Embedding Layers


16. Suppose I have 1000 sentences and 100-dimensional data (1000, 100) in which there are 512 unique words. If I apply an embedding layer (input_shape=(100), vocab_size=512, embedding_dim=(6,4)), what will be the shape of the output when passed to the next layer?

When an Embedding layer is applied to an input of shape (batch_size, sequence_length) = (1000, 100), and the embedding dimension is specified as (6,4), the output shape will typically be (1000, 100, 6, 4). In other words, each token index in the sequence is replaced by a (6,4) embedding “slice,” resulting in an extra pair of dimensions in the output.

To see why this happens, consider how an embedding layer works in a typical deep learning framework. The key steps are:

You start with input token indices of shape (1000, 100). Each of these indices must be mapped to a trainable vector or tensor. In the usual single-integer embedding_dim scenario (for example, embedding_dim=128), the mapping yields a 1D vector of length 128 for each token, so the result for each sentence is of shape (100, 128), and for the batch it is (1000, 100, 128).

But in this specific question, embedding_dim=(6,4) indicates the embedding layer is assigning a 2D matrix (6,4) to each input token index. Therefore, each entry in (1000, 100) is replaced by a (6,4) matrix, giving a final shape of (1000, 100, 6, 4).

One important note: Many deep learning libraries primarily allow an integer embedding dimension (e.g., embedding_dim=24), which can be internally reshaped to (6,4) if desired. However, if the layer truly supports multi-dimensional embeddings directly, it will produce a 4D output as stated. If the framework does not directly allow a multi-dimensional embedding shape, one might see (1000, 100, 24) instead, and the user would then manually reshape that to (1000, 100, 6, 4).

Below is a minimal illustration of how one might define and apply such an embedding in code (assuming a framework that permits (6,4) directly):

```python
import torch
import torch.nn as nn

# Hypothetical embedding layer with multi-dimensional embeddings
class MultiDimEmbedding(nn.Module):
    def __init__(self, vocab_size, embed_shape):
        super(MultiDimEmbedding, self).__init__()
        # embed_shape is (6,4). This is effectively a total dimension of 6*4=24
        self.weight = nn.Parameter(torch.randn(vocab_size, embed_shape[0], embed_shape[1]))

    def forward(self, x):
        # x is of shape (batch_size, seq_len)
        # For each token index, we pick out the relevant (6,4) slice
        # The output shape will be (batch_size, seq_len, 6, 4)
        # because we do "lookups" in self.weight
        return self.weight[x]

# Usage example
vocab_size = 512
embed_shape = (6, 4)
batch_size = 1000
seq_len = 100

embedding_layer = MultiDimEmbedding(vocab_size, embed_shape)

# Suppose we have input tokens of shape (1000, 100)
dummy_input = torch.randint(0, vocab_size, (batch_size, seq_len))

# The output will then be (1000, 100, 6, 4)
output = embedding_layer(dummy_input)
print(output.shape)
```

Heading deeper:

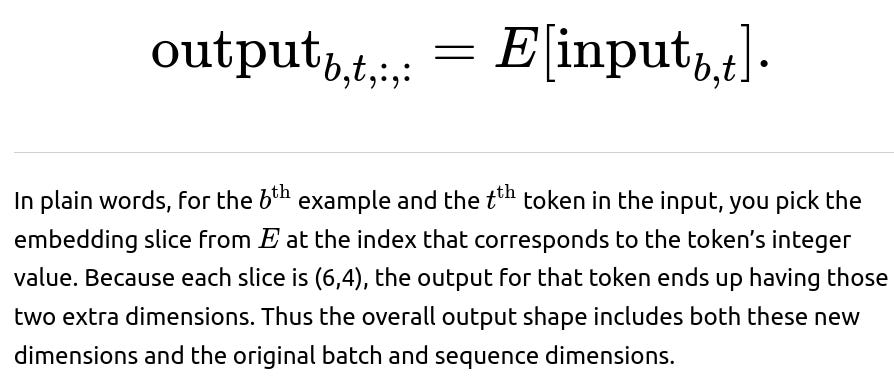

The actual mechanism in a typical embedding layer is a simple matrix (or multi-dimensional tensor) lookup. If a token index is $i$, then you fetch the $i$th slice of parameters in the embedding. In many frameworks, the operation is akin to multiplication by a one-hot vector or a gather operation. Concretely, if you let $E$ be the embedding table (shaped (vocab_size, 6, 4) in this question) and $x$ be the input index tensor, then for batch position $b$ and sequence position $t$:

$$\text{output}[b, t, :, :] = E[x_{b,t}, :, :]$$
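The equivalence between a gather-style lookup and multiplication by a one-hot vector can be checked numerically. The sketch below is illustrative only: the names `E`, `x`, `gathered`, and `via_matmul` are assumptions, and a small batch of 8 is used to keep the one-hot tensor cheap.

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)
vocab_size, embed_shape = 512, (6, 4)
E = torch.randn(vocab_size, *embed_shape)      # embedding table, (512, 6, 4)
x = torch.randint(0, vocab_size, (8, 100))     # small batch of token indices

# Gather-style lookup: index the table directly.
gathered = E[x]                                # (8, 100, 6, 4)

# One-hot formulation: (8, 100, 512) @ (512, 24), then restore the (6, 4) layout.
one_hot = F.one_hot(x, num_classes=vocab_size).to(E.dtype)
via_matmul = (one_hot @ E.reshape(vocab_size, -1)).reshape(8, 100, *embed_shape)

print(gathered.shape)
print(torch.allclose(gathered, via_matmul))
```

In practice frameworks use the gather form, since materializing the one-hot tensor wastes memory and compute.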

Below are some critical details and potential pitfalls that might arise in an interview:

When you see an embedding_dim that is multi-dimensional like (6,4), it might be a shorthand for 24, or it might literally mean the output must maintain a 2D shape for each token. Whether the next layer expects a flattened representation or a 2D representation depends entirely on the network architecture and how it’s defined downstream.

The input_data shape (1000, 100) implies there are 1000 sequences (batch dimension) and each sequence has 100 tokens (the sequence length). Each token is an integer in the range [0, 511] given the vocab_size=512. If the data contains out-of-range tokens or unknown tokens, one must handle that (e.g., mapping them to an [UNK] index or ignoring them entirely).

If you intend to process variable-length sequences, you might pad sequences up to length 100. In that scenario, the embedding layer still produces (1000, 100, 6, 4), but some fraction of that data might correspond to padding tokens, which you can mask out in subsequent layers.

Since each token is replaced with a learned representation, the number of trainable parameters in the embedding layer is vocab_size × 6 × 4 = 512 × 6 × 4 = 12288. This is typically stored as a single weight parameter in memory.

If the next layer after the embedding expects a 3D shape of (batch_size, sequence_length, something), you might flatten the two embedding dimensions (6,4) into 24. If that next layer can operate on 4D inputs (like a convolutional layer in a text-CNN scenario), you might retain the shape (6,4). So the next shape might be either (1000, 100, 24) or (1000, 100, 6, 4), depending on how you plan to pass it forward.

When frameworks do not allow direct multi-dimensional embeddings, the normal solution is to pick an embedding_dim=24 in a single integer form. Then if you truly need it to be (6,4), you reshape downstream. But if the framework or custom code is flexible, you might indeed have a direct shape of (6,4) for each embedding vector.

Below are some common follow-up interview questions, with thorough, detailed answers for each.

What if the embedding_dim was just a single integer, say 24, instead of (6,4)? Would the final shape be different?

When embedding_dim is set to a single integer such as 24, each token index is replaced by a 24-dimensional vector. In that more common scenario, the output shape after the embedding layer is (batch_size, sequence_length, embedding_dim). Because here embedding_dim = 24, the output would be (1000, 100, 24).

The difference is purely in how the dimension is interpreted. Some frameworks do not allow embedding_dim to be specified as (6,4). Instead, you would see embedding_dim=24, and if you needed it to be shaped as (6,4) for a subsequent convolutional layer or something that expects a 2D kernel, you would manually reshape from (1000, 100, 24) into (1000, 100, 6, 4).
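As a rough sketch of that workflow in PyTorch, the snippet below uses the standard `nn.Embedding` with an integer dimension of 24 and then reshapes by hand. The variable names are illustrative assumptions.

```python
import torch
import torch.nn as nn

vocab_size, batch_size, seq_len = 512, 1000, 100

# Standard embedding with a single integer dimension: 24 = 6 * 4.
flat_embedding = nn.Embedding(num_embeddings=vocab_size, embedding_dim=24)

tokens = torch.randint(0, vocab_size, (batch_size, seq_len))
flat_out = flat_embedding(tokens)                         # (1000, 100, 24)

# Manually recover the (6, 4) layout for each token.
matrix_out = flat_out.reshape(batch_size, seq_len, 6, 4)  # (1000, 100, 6, 4)

print(flat_out.shape)
print(matrix_out.shape)
```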

What are the trainable parameters in this embedding layer, and how do we calculate them?

The total number of trainable parameters in an embedding layer is usually vocab_size × embedding_dim.

In this question, vocab_size=512 and embedding_dim=(6,4). If we multiply 6×4=24 as the total dimension, then total trainable parameters = 512 × 24 = 12288.

Conceptually, each unique word index (ranging from 0 to 511) has an associated 2D slice of shape (6,4). That gives 512 slices, each holding 6×4=24 trainable weights.
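The arithmetic can be double-checked by counting the elements of a table with that shape. This is a small sketch; `table`, `by_hand`, and `counted` are illustrative names.

```python
import math
import torch
import torch.nn as nn

vocab_size, embed_shape = 512, (6, 4)

# Hand calculation: vocab_size * 6 * 4.
by_hand = vocab_size * math.prod(embed_shape)

# Count the elements of an actual parameter tensor of that shape.
table = nn.Parameter(torch.randn(vocab_size, *embed_shape))
counted = table.numel()

print(by_hand, counted)  # 12288 12288
```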

How does the embedding layer deal with tokens that are out-of-range, for instance if a token’s index is 512 or higher?

Typically, you have to ensure all tokens are in the valid vocabulary range. In many frameworks, an embedding lookup with an out-of-range index leads to an error or an exception. Alternatively, you could define a custom or extended “unknown token” index if you expect tokens outside the known range. For that reason, you might see certain pipelines that map unknown tokens to index 0 or a special index for unknown tokens (often written [UNK]) so the embedding layer does not break.

This is usually handled in data preprocessing: tokens not in the known 512 words can be replaced with an unknown token index. If you do not handle that, you risk having out-of-range indices that crash the training procedure.
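A minimal preprocessing sketch of that idea follows. The toy vocabulary, the choice of index 0 for the unknown token, and the `encode` helper are all hypothetical.

```python
# Hypothetical toy vocabulary; index 0 is reserved here for unknown words.
UNK_INDEX = 0
word_to_index = {"[UNK]": UNK_INDEX, "the": 1, "cat": 2, "sat": 3}

def encode(words, vocab, unk_index=UNK_INDEX):
    """Map each word to its index, falling back to the unknown-token index."""
    return [vocab.get(word, unk_index) for word in words]

encoded = encode(["the", "cat", "meowed"], word_to_index)
print(encoded)  # [1, 2, 0]
```

Every index produced this way is guaranteed to fall inside the embedding table, so the lookup cannot go out of range.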

Suppose some sequences are shorter than 100 tokens. How does that affect the embedding layer’s output?

If you batch together sequences of unequal length, you typically pad them to a fixed length of 100. So each input is still (1000, 100), but some portion of that might be padded with a special index (often 0 or some reserved index). Those positions will still have valid embeddings in the shape (6,4). If you do not want your model to treat those padded embeddings as real data, you can use a mask or attention mechanism that ignores those padded positions downstream.

This process ensures that the embedding layer still returns a well-defined shape of (1000, 100, 6, 4) even if sequences differ in actual length.
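The padding and masking steps might look roughly like this. Reserving index 0 for padding and the names `padded`, `mask`, and `table` are assumptions made for illustration.

```python
import torch
import torch.nn as nn

PAD_INDEX, MAX_LEN, VOCAB_SIZE = 0, 100, 512   # reserving 0 for padding is an assumption

# Three toy sequences of different lengths (indices 1..511 are real tokens).
sequences = [torch.randint(1, VOCAB_SIZE, (n,)) for n in (100, 37, 5)]

# Right-pad every sequence to MAX_LEN with PAD_INDEX.
padded = torch.full((len(sequences), MAX_LEN), PAD_INDEX, dtype=torch.long)
for row, seq in enumerate(sequences):
    padded[row, : len(seq)] = seq

mask = padded != PAD_INDEX                     # True at real tokens, shape (3, 100)

table = nn.Parameter(torch.randn(VOCAB_SIZE, 6, 4))
embedded = table[padded]                       # (3, 100, 6, 4), padding included
masked = embedded * mask[:, :, None, None]     # zero out the padded positions

print(embedded.shape, mask.sum(dim=1).tolist())
```

Multiplying by the mask is only one option; attention-based models usually pass the mask to the attention layer instead.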

Are there situations where an embedding layer is not appropriate, or other transformations might be used?

An embedding layer is ideal when your input tokens are discrete and belong to a known, fixed vocabulary. In cases where your input is already some form of continuous or high-dimensional representation (e.g., a 300-dimensional word2vec vector or image data), an embedding layer may not be necessary. Instead, you might feed data directly into dense layers, convolutional layers, or other transformations.

However, for natural language tasks where you have integer-based word indices, an embedding layer is a memory-efficient way to train or fine-tune a word or subword representation.

How might I reshape from (1000, 100, 6, 4) to (1000, 100, 24) if needed downstream?

You can flatten the last two dimensions into a single dimension. In frameworks like PyTorch or TensorFlow, you can call a reshape operation on the output. For instance, in PyTorch:

```python
output_reshaped = output.view(batch_size, seq_len, -1)
```

or in TensorFlow (assuming a symbolic approach):

```python
import tensorflow as tf

output_reshaped = tf.reshape(output, (batch_size, seq_len, 6*4))
```

This flattening is often done if a subsequent layer (like an RNN or a linear projection) expects a single 1D vector per token. If you want to exploit the two-dimensional nature of the embedding (like a 2D convolution for text), you can keep the shape (6,4).

If I change the batch_size from 1000 to something else, does the embedding layer still produce the same final dimensionality for each token?

Yes. The embedding layer does not care about the batch dimension—this is typically handled by the framework’s automatic batching. The embedding layer just needs to see that each token index is within [0, vocab_size−1]. If you move from batch_size=1000 to batch_size=32, for example, and keep seq_len=100, you’d get an output shape of (32, 100, 6, 4). The last two dimensions remain the same because they represent the embedding shape.

What if we want to tie embeddings in an encoder-decoder architecture?

Sometimes advanced models tie the input (encoder) embeddings and output (decoder) embeddings in the same matrix to reduce parameter size or maintain consistency between encoding and decoding. If so, you might share a single embedding table that each side uses. In a scenario like that, specifying the shape of the embedding still results in the same fundamental approach: each integer token index is a pointer into that shared table. The shape calculations remain identical, but you just ensure that the same embedding parameters are used in both parts of the network.
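One simple way to share a table in PyTorch is to point both modules at the same parameter. The sketch below assumes a flattened dimension of 24, and the module names are hypothetical.

```python
import torch
import torch.nn as nn

vocab_size, embed_dim = 512, 24   # 24 = 6 * 4, flattened

encoder_embed = nn.Embedding(vocab_size, embed_dim)
decoder_embed = nn.Embedding(vocab_size, embed_dim)

# Tie: both modules now reference the same Parameter object.
decoder_embed.weight = encoder_embed.weight

tokens = torch.randint(0, vocab_size, (2, 100))
same_output = torch.equal(encoder_embed(tokens), decoder_embed(tokens))
print(decoder_embed.weight is encoder_embed.weight, same_output)
```

Because there is only one underlying tensor, gradients from both the encoder and decoder paths accumulate into it.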

How is backpropagation handled through the embedding layer?

Backpropagation flows through the parameters of the embedding matrix. For each token index, only the slice corresponding to that token is updated. Typically, the gradient for each embedded token is added to the relevant rows (or slices) in the embedding weight matrix. This means if a particular token “cat” with index i appears multiple times in a batch, the updates for all appearances of “cat” sum onto the row i of the embedding matrix.
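The accumulation behaviour can be observed directly with a tiny example. Here `table` stands in for the embedding weights, and the token indices are arbitrary.

```python
import torch
import torch.nn as nn

table = nn.Parameter(torch.zeros(512, 6, 4))
tokens = torch.tensor([[3, 3, 5]])   # token 3 appears twice, token 5 once

# Sum all looked-up values so each occurrence contributes a gradient of 1.
table[tokens].sum().backward()

print(table.grad[3, 0, 0].item())        # 2.0, two occurrences summed
print(table.grad[5, 0, 0].item())        # 1.0, one occurrence
print(table.grad[0].abs().sum().item())  # 0.0, unused row gets no gradient
```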

Could having an embedding_dim of (6,4) cause confusion downstream if my next layer expects a linear embedding vector?

Yes. Many standard layers for text (e.g., RNNs, Transformers) expect the input shape to be (batch_size, sequence_length, embedding_dim) with a single embedding_dim. If the question explicitly says embedding_dim=(6,4), you must confirm the next layer can handle a shape (6,4), or else flatten it. If you pass a 4D tensor to an RNN that expects 3D, you get an error. So it’s common in practice to keep embedding_dim as a single integer dimension unless your architecture specifically leverages the multi-dimensional embedding shape.

What is the best practice if I want to do 2D convolutions on embedded tokens?

For certain text classification or text CNN architectures, you might structure your embeddings as something akin to “channels” and “spatial dimensions.” For example, you might keep the shape (batch_size, 1, sequence_length, embedding_dim) so a 2D convolution can interpret one dimension as the “time” axis and the other as an embedding dimension. However, if your design truly wants two embedding dimensions (6,4) for each token, you must carefully consider how each dimension corresponds to your convolution’s height and width or how you interpret “channels.” Some approaches keep it simpler by treating the embedding dimension as “width” and the sequence length as “height,” employing 2D kernels that slide across them.
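A shape-only sketch of the (batch_size, 1, sequence_length, embedding_dim) layout is shown below. The random `embedded` tensor stands in for a real embedding output, and the kernel size and filter count are arbitrary choices.

```python
import torch
import torch.nn as nn

batch_size, seq_len, embed_dim = 4, 100, 24

embedded = torch.randn(batch_size, seq_len, embed_dim)  # stand-in for embedding output
as_image = embedded.unsqueeze(1)                        # (4, 1, 100, 24): one input channel

# Each kernel spans 3 consecutive tokens and the full embedding width.
conv = nn.Conv2d(in_channels=1, out_channels=8, kernel_size=(3, embed_dim))
features = conv(as_image)                               # (4, 8, 98, 1)

print(features.shape)
```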

Could I initialize the embedding matrix with pretrained embeddings (e.g., GloVe, FastText) if I had them?

Yes. If you have pretrained embeddings of shape (vocab_size, embedding_dim) or in this case (vocab_size, 6×4=24), you can reshape them accordingly to (vocab_size, 6, 4) and copy them into your embedding matrix. In frameworks like PyTorch, you can manually assign:

```python
embedding_layer.weight.data.copy_(torch.from_numpy(pretrained_numpy_array))
```

Make sure the shapes match exactly: a pretrained array of shape (vocab_size, 24) must first be reshaped to (vocab_size, 6, 4). You must also handle tokens that are missing from the pretrained data or indexed differently from the local vocabulary.

Summary of the main takeaway

Because the question states that embedding_dim is (6,4), you end up with an output shape (1000, 100, 6, 4). This includes the batch dimension of 1000, the sequence length of 100, and the embedding dimension that is now spread across two dimensions, 6 and 4.

No matter how large or small your batch size is, or how many unique words you have in the vocabulary, the essence is that each integer token index in your input is replaced by a trainable (6,4) matrix, leading to that additional dimensionality in the final tensor shape.

Below are additional follow-up questions

How would the embedding layer behave if we wanted to incorporate subword tokenization (e.g., Byte-Pair Encoding) instead of a traditional word-level vocabulary?

When using subword tokenization (like BPE or WordPiece), each "token" fed into the embedding layer is often a piece of a word rather than a whole word. This changes how we might set up and interpret the embedding layer:

Vocabulary Construction: Instead of a 512-word vocabulary, we might have a subword vocabulary of some size (e.g., 8000 or 32000), because subword methods typically produce a larger number of smaller units. The embedding layer’s first dimension (vocab_size) must be at least as large as the total number of unique subword tokens. If we assume the subword vocabulary is 512 in size (for simplicity) and we still want an embedding shape of (6,4), then the only difference is that each integer index now corresponds to a subword unit. The resulting output shape for a batch of 1000 sequences of length 100 remains (1000, 100, 6, 4).

Potential Ambiguities: Subword tokenization might produce more tokens in a sequence compared to a word-level approach (since each word can break down into multiple subword pieces). If you still want each sequence to be a fixed length of 100 tokens, you must consider whether that length is sufficient to represent all subword pieces. You might have to truncate longer sequences or use more sophisticated batching.

Edge Cases: If you have out-of-vocabulary subword tokens (e.g., an unknown subword piece), you must reserve an index for it, just like in word-level tokenization. Also, in real-world scenarios, the subword vocabulary is often significantly larger than 512. That might impact memory usage for the embedding layer if the total dimension is large.

In practice, subword-level embeddings often help in handling rare words and morphological variants better. But from the embedding-layer perspective, it still performs the same operation: each integer index becomes a slice of shape (6,4), and you get a 4D tensor in the end.

What if we wanted to use positional embeddings in addition to our word embeddings when passing data to a Transformer-like architecture?

A Transformer typically requires the addition of positional embeddings (or encodings) to the token embeddings:

Positional Embeddings: In many Transformer implementations, each position in the sequence has a learned or a fixed embedding that encodes its position (0 through sequence_length−1). For example, in a learned scenario, you might have a (max_sequence_length, embedding_dim) matrix that you add (element-wise) to your token embeddings. If the token embeddings are shaped (1000, 100, 6, 4), you might either reshape them to (1000, 100, 24) before adding a (100, 24) positional embedding or keep them as (1000, 100, 6, 4) but then reconfigure how positional embeddings are broadcast or added. Typically, Transformers require a single embedding dimension, so you might flatten your (6,4) shape to (24) and similarly define positional embeddings of dimension 24. Then for each position t in the sequence, you add the learned vector (positional_embedding[t]) to the token embedding at that position.

Implications for Shape: You generally end up with a 3D tensor: (batch_size, sequence_length, embedding_dim_flattened). For a standard Transformer, the typical shape is (batch_size, sequence_length, d_model). If your embedding_dim is 24 (flattening 6×4), then d_model=24. After adding positional embeddings, the shape remains (1000, 100, 24) for the entire batch.

Edge Cases: If you do not flatten, you have to handle how to incorporate positional embeddings into that 4D shape. This can be cumbersome, because adding a positional vector that is shape (6,4) might not conceptually map well to each token's 2D embedding. In practice, most implementations flatten to a single dimension. Also, if you have variable sequence lengths, you need to manage position indices carefully. The positional embeddings typically have a maximum length, so your training procedure must account for that maximum.

Hence, if a Transformer is your next layer, it almost always expects (batch_size, sequence_length, d_model). You would flatten (6,4) → 24, add or concatenate positional embeddings, and proceed.

How would we handle a scenario where part of the batch has more tokens than the fixed sequence length of 100, while other parts have fewer?

Most deep learning frameworks require a fixed maximum length for a batch of sequences. If some sequences exceed length 100, you typically have two strategies:

Truncation: Longer sequences are cut off at the 100th token to keep the input shape (batch_size, 100). This is common when memory constraints or computational limits are strict, although you lose data from the truncated part.

Batching by Similar Sequence Length: You can dynamically pad and batch sequences that are close in length together, so you minimize the amount of wasted computation. If one batch has mostly sequences of length ~150, you might have an upper bound of 150 for that batch. Another batch might have an upper bound of 50, and so forth. This approach is used in many production NLP systems for efficiency.

Output Shape Considerations: Regardless of truncation or padding, the embedding layer sees a (batch_size, sequence_length) input. If sequence_length=100, the output shape remains (batch_size, 100, 6, 4). If you do dynamic sequence lengths (e.g., 120 in one batch, 80 in another), you must manage that shape carefully in your data loader or input pipeline. The result could be (batch_size, 120, 6, 4) for one batch and (batch_size, 80, 6, 4) for another.

Edge Cases: If you forget to pad shorter sequences or if you input raw text of different lengths without adjusting shapes, you’ll get dimension mismatches or errors. You also need to track the actual lengths if your model includes attention masks or if it must ignore padded positions.

Typically, for real-world training, dynamic batching or bucket-based batching is a powerful approach: group sequences by approximate length, then pad them to a common length in each batch. This preserves shape integrity for the embedding layer while minimizing wasted computation.
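A bare-bones version of bucket-based batching, in plain Python, might look like this. The `bucket_batches` helper and the padding index of 0 are hypothetical; real pipelines usually also shuffle within and across buckets.

```python
def bucket_batches(sequences, batch_size, pad_index=0):
    """Sort by length, chunk into batches, and pad each batch to its own max length."""
    ordered = sorted(sequences, key=len)
    batches = []
    for start in range(0, len(ordered), batch_size):
        chunk = ordered[start:start + batch_size]
        width = max(len(seq) for seq in chunk)
        batches.append([seq + [pad_index] * (width - len(seq)) for seq in chunk])
    return batches

# Six toy sequences with lengths 80, 3, 120, 5, 118, 79.
toy = [[1] * n for n in (80, 3, 120, 5, 118, 79)]
batches = bucket_batches(toy, batch_size=2)
widths = [len(batch[0]) for batch in batches]
print(widths)  # [5, 80, 120]
```

Each batch is rectangular, so the embedding layer receives a well-formed (batch_size, sequence_length) input even though the length differs from batch to batch.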

What are some potential pitfalls when using multi-dimensional embeddings in specialized layers like convolutional layers?

Using a (6,4) embedding shape might suggest that you want to apply a 2D convolution directly on each token’s embedding “image.” Some pitfalls include:

Misalignment of Input Channels: Traditional 2D convolutions in image processing treat the first dimension beyond batch size as the number of channels, then height, then width. If you try to feed (batch_size, sequence_length, 6, 4) directly into a 2D convolution without reshaping or reordering dimensions, your framework might interpret “sequence_length=100” as the number of channels, which likely isn’t what you want. You might need a shape like (batch_size, channels, height, width) or some rearrangement.

Loss of Sequential Context: Convolution across the “spatial” dimensions (6,4) might or might not be relevant to your problem. If you’re doing text classification, you might want to convolve across the token axis (sequence dimension=100) rather than just the embedding dimension. That typically involves 1D convolutions along the sequence dimension, with the input laid out as (batch_size, embedding_dim, sequence_length), or 2D convolutions in certain stylized ways (e.g., treating each token as a 1D row in a 2D “image”).

Parameter Explosion: If you do 2D convolutions with many filters on a shape like (100, 6, 4), be aware that each filter might learn a large number of parameters, especially as you scale up. Make sure your network size is manageable.

Edge Cases in Reshaping: Mismatched permutations of axes can silently lead to large performance drops or nonsense outputs if you inadvertently reorder the embedding dimensions incorrectly. For instance, switching height and width or the channel dimension can yield unexpected results.

Many text convolution approaches simply flatten the embeddings to a single dimension, treat that dimension as “channels,” and then convolve across the sequence axis in 1D, which is simpler and often very effective for text tasks.
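That flatten-and-convolve-in-1D approach could be sketched as follows. The random input stands in for a real (6,4) embedding output, and the filter count and kernel size are arbitrary.

```python
import torch
import torch.nn as nn

embedded = torch.randn(4, 100, 6, 4)        # stand-in for (batch, seq_len, 6, 4) embeddings

flat = embedded.flatten(start_dim=2)        # (4, 100, 24)
channels_first = flat.transpose(1, 2)       # (4, 24, 100): embedding dims become channels

# Convolve along the sequence axis with a window of 3 tokens.
conv = nn.Conv1d(in_channels=24, out_channels=16, kernel_size=3)
features = conv(channels_first)             # (4, 16, 98)

print(features.shape)
```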

What if we want to load partial pretrained embeddings but still keep some embeddings trainable for newly introduced words?

This often arises when you have a pretrained embedding matrix for, say, 400 words, and your new vocabulary is 512 words total:

Index Alignment: You must align word indices in your new vocabulary with the corresponding rows in the pretrained embedding. For instance, if the first 400 tokens match the pretrained embedding’s vocabulary, you copy those rows. The remaining 112 tokens may have random initialization.

Freezing vs. Fine-Tuning: You could freeze the pretrained weights for the 400 known tokens so they don’t update during backpropagation, while the newly added 112 tokens remain trainable. Or you could fine-tune all tokens, though that might risk overwriting the pretrained knowledge if your dataset is small.

Edge Cases: If the new vocabulary’s indexing doesn’t match the old one exactly, you might have to create a mapping from old token IDs to new token IDs. Failing to do so can misalign embeddings. Also, if some words in your new vocabulary are entirely missing from the pretrained set, you must carefully handle that portion (e.g., random init).

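In frameworks like PyTorch, you can partially assign rows in embedding_layer.weight.data using the pretrained data, leaving the rest randomly initialized. Note that requires_grad applies to a whole tensor rather than to individual rows, so to keep the pretrained portion frozen you can zero the gradient for those rows (for example with a gradient hook) or store the frozen and trainable rows in two separate embedding tables.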
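One way to sketch partial loading with row-level freezing is a gradient hook that zeroes the gradient of the pretrained rows. The random `pretrained` tensor is a stand-in for real vectors, and the hook-based approach is just one option among several.

```python
import torch
import torch.nn as nn

vocab_size, n_pretrained = 512, 400
pretrained = torch.randn(n_pretrained, 24)           # stand-in for real pretrained vectors

table = nn.Parameter(torch.randn(vocab_size, 6, 4))
with torch.no_grad():
    # Copy the pretrained rows, reshaped from 24 to (6, 4); rows 400..511 stay random.
    table[:n_pretrained] = pretrained.reshape(n_pretrained, 6, 4)

# Gradients are tracked per tensor, so freeze rows by masking their gradient in a hook.
trainable_rows = torch.zeros(vocab_size, 1, 1)
trainable_rows[n_pretrained:] = 1.0
table.register_hook(lambda grad: grad * trainable_rows)

tokens = torch.tensor([[10, 450]])                   # one pretrained row, one new row
table[tokens].sum().backward()
print(table.grad[10].abs().sum().item(), table.grad[450].abs().sum().item())  # 0.0 24.0
```

Note that optimizers with weight decay can still modify rows whose gradient is zero, so a fully frozen separate table may be safer in that setting.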

In a distributed training environment (multiple GPUs or multiple nodes), how is the embedding layer typically handled?

When training across multiple GPUs or machines, the embedding matrix must be accessible or replicated in each environment:

Data Parallelism: Each GPU holds a copy of the entire embedding matrix. When a mini-batch arrives, each GPU processes its subset of the batch, looks up the relevant token embeddings, and computes partial gradients. These partial gradients are then reduced (summed or averaged) across all GPUs, updating the shared embedding weights.

Model Parallelism or Sharding: If the embedding matrix is very large (e.g., extremely large vocab), you might shard it across multiple GPUs or nodes. Each GPU holds a portion of the embedding matrix, and token indices are routed to the correct shard for lookup. This approach is more complex but is used in massive-scale language models.

Edge Cases: Consistent updates across shards must be ensured: if a token index ends up on a shard that does not contain that slice of the embedding matrix, the system must communicate the needed data to the relevant GPU or node. Gradient synchronization overhead can grow if the embedding dimension is large. You must balance communication cost vs. local computation.

Practical Implementation: Some frameworks (e.g., PyTorch with fairseq or DeepSpeed) provide specialized sharded embedding modules. If you’re implementing from scratch, you need to handle gather-scatter or all-reduce logic to keep embeddings in sync.

The overall shape of the embedding’s output remains the same from the perspective of the model, but behind the scenes, the embedding parameters might be distributed or replicated.

How do we interpret the embeddings once they are learned, especially if they are two-dimensional (6,4) for each token?

Interpreting embeddings can be challenging even when each one is an ordinary one-dimensional vector. With a (6,4) shape, you have a few options and caveats:

Flattening for Visualization: Often, you flatten the matrix (6,4) into a single 24-dimensional vector before applying standard techniques like t-SNE or UMAP to visualize them in 2D. This helps see how tokens cluster or relate to each other.

Direct 2D Structure: If you’re using 6×4 to reflect some structured representation (for example, morphological features in one dimension and semantic features in another), you might interpret each row or column differently. In practice, though, it’s unusual for these sub-dimensions to neatly separate meaning. Neural networks learn distributed patterns.

Edge Cases: If you treat the embedding as 2D in downstream layers, you might glean some structure (like certain filters focusing on columns or rows), but this requires carefully analyzing the weights. Embeddings remain notoriously “black box” even if they have multiple dimensions. You may see partial interpretability by correlation with known linguistic or semantic categories, but that is not guaranteed.

Ultimately, the (6,4) shape is just an internal representation. Most interpretive techniques flatten it or treat it as a 24-dimensional vector for the sake of analysis, unless you have a highly specialized reason to maintain the 2D layout.

How could we dynamically resize the embedding layer if the vocabulary grows after initial training?

In some real-world scenarios, you might need to add new words or tokens after the model is trained:

Extending the Embedding Matrix: One approach is to create a new embedding matrix of shape (new_vocab_size, 6, 4). You copy over the previously learned embeddings for the old vocabulary. For the newly added tokens, you randomly initialize their embeddings or initialize them based on some related tokens if you have that mapping.

Freezing Old Indices: You might freeze the old portion of the matrix and only train the new portion, especially if you have limited data for these new words. This helps preserve performance on the original vocabulary.

Possible Issues: If your model expects a fixed vocabulary size (e.g., fully connected layers that treat the embedding in a classification context), you may need to adapt other parts of the model that reference vocabulary size (like output layers). Also, the new vocabulary might shift indices if you insert tokens in the middle. You should maintain consistent indexing to avoid misalignment with your old model. Often you simply append new tokens at the end of the existing vocabulary. If your next layer is a softmax for language modeling, you must also expand that output layer to handle new tokens. This can be memory-intensive if the vocabulary grows significantly.

In short, dynamically extending an embedding matrix is feasible but must be done carefully to avoid index mismatches and potential catastrophic forgetting. You can either do “incremental training” or re-fine-tune the entire model with the new vocabulary.
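Appending new rows to an existing table might be sketched like this. The old weights here are random stand-ins, the new vocabulary size of 520 is arbitrary, and the 0.02 initialization scale is an assumption.

```python
import torch
import torch.nn as nn

old_vocab, new_vocab = 512, 520
old_table = nn.Parameter(torch.randn(old_vocab, 6, 4))          # stand-in for trained weights

# Allocate the larger table; the 0.02 init scale for new rows is an arbitrary choice.
new_table = nn.Parameter(torch.randn(new_vocab, 6, 4) * 0.02)
with torch.no_grad():
    # Old tokens keep their indices, so their rows are copied in place.
    new_table[:old_vocab] = old_table

print(new_table.shape, torch.equal(new_table[:old_vocab], old_table))
```

Because new tokens are appended at the end, every existing index still points at the same learned slice.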

What if the real-world data contains tokens that never appear during training, but appear at inference time?

At inference time, if you encounter tokens not in the training vocabulary (indices outside the known range of 512 in this scenario), the embedding layer cannot look them up. Typically:

Unknown Token Handling: Many pipelines replace unseen tokens with a special [UNK] token. The embedding layer must have a row for that [UNK] token, and the model effectively processes them as “unknown.”

Subword Method: If you are using subword tokenization, many unknown words can still be broken into known subword pieces, thereby mitigating the problem of missing tokens.

OOV (Out-of-Vocabulary) Rate: High OOV rates degrade performance since the model lumps many distinct tokens into the same [UNK] embedding. Monitoring OOV is crucial in production systems.

Runtime Error vs. Graceful Degradation: Without a [UNK] token index or out-of-vocabulary handling, the system might crash upon seeing an invalid index. So it’s essential to design the inference pipeline to handle unexpected tokens gracefully.

Real-world text is messy, so robust OOV handling is a must. Typically, for a fixed vocabulary approach, [UNK] tokens are used. For subword-based approaches, truly unknown tokens are often minimal, since the system can break words down to subword units in the vocabulary.
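The difference between crashing and degrading gracefully can be illustrated on CPU with PyTorch. Using index 0 as [UNK] is an assumption here, and in practice the remapping would normally happen in the tokenizer rather than on index tensors.

```python
import torch
import torch.nn as nn

vocab_size, unk_index = 512, 0            # using 0 as [UNK] is an assumption
embed = nn.Embedding(vocab_size, 24)

raw = torch.tensor([[5, 511, 512, 9000]])  # the last two indices are out of range

# An unguarded lookup fails on invalid indices.
try:
    embed(raw)
    lookup_failed = False
except (IndexError, RuntimeError):
    lookup_failed = True

# Guarded lookup: remap anything outside the vocabulary to the unknown index.
safe = torch.where(raw < vocab_size, raw, torch.full_like(raw, unk_index))
print(lookup_failed, safe.tolist(), embed(safe).shape)
```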