ML Interview Q Series: Can Data Cleaning worsen the results of Statistical Analysis?


Comprehensive Explanation

Data cleaning is a critical step in any machine learning or statistical analysis pipeline. It encompasses removing duplicates, handling missing values, correcting formatting problems, and dealing with outliers. While these processes are usually beneficial for ensuring consistent and high-quality input data, there are indeed scenarios where data cleaning can inadvertently degrade the performance of a statistical analysis.

Over-aggressive removal or modification of observations can sometimes strip away crucial information. For instance, removing outliers based solely on a statistical threshold without deeper domain knowledge might lead to excluding legitimate but rare events. These rare events could hold valuable signals for tasks like fraud detection, failure analysis, or modeling of tail risks. Similarly, blindly imputing missing values with mean or median can reduce the variance in the data and mask true correlations or distributional patterns.
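The variance and correlation effect of mean imputation can be seen with synthetic data. This is a minimal sketch: the feature, the linear relationship with `y`, and the 30% missing-completely-at-random rate are all assumptions chosen for illustration.

```python
import numpy as np
import pandas as pd

rng = np.random.default_rng(0)

# Synthetic data (assumption): y depends linearly on x
x = pd.Series(rng.normal(loc=50, scale=10, size=1_000))
y = 2 * x + rng.normal(loc=0, scale=10, size=1_000)

# Make ~30% of x missing completely at random, then fill the gaps with the observed mean
x_observed = x.mask(rng.random(len(x)) < 0.3)
x_imputed = x_observed.fillna(x_observed.mean())

# std() and corr() skip NaN, so the "observed" numbers use only the real values
print(f"std of x:  observed={x_observed.std():.2f}  mean-imputed={x_imputed.std():.2f}")
print(f"corr(x,y): observed={x_observed.corr(y):.2f}  mean-imputed={x_imputed.corr(y):.2f}")
```

The imputed column has a smaller standard deviation and a weaker correlation with `y`, even though no genuine value was changed: every filled-in point sits exactly at the mean and carries no information about `y`.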

Errors in data cleaning can also arise due to the subtlety of missing data mechanisms. For example, if data is missing not at random (MNAR) and the missingness depends on some underlying variable of interest, a naive imputation strategy might bias the analysis. Another risk is recoding or transforming categorical variables in a way that merges distinct groups into a single group, thereby losing the signal that originally distinguished them.
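A small simulation makes the MNAR risk concrete. It assumes hypothetical log-normal incomes where the top quarter of earners skip the question 60% of the time and everyone else 5% of the time; both rates are illustrative assumptions.

```python
import numpy as np
import pandas as pd

rng = np.random.default_rng(1)

# Hypothetical incomes; high earners are far more likely to leave the field blank (MNAR)
income = pd.Series(rng.lognormal(mean=10.5, sigma=0.6, size=5_000))
p_missing = np.where(income > income.quantile(0.75), 0.6, 0.05)
reported = income.mask(rng.random(len(income)) < p_missing)

# Naive cleaning: impute every missing income with the mean of the observed ones
imputed = reported.fillna(reported.mean())

print(f"true mean:             {income.mean():,.0f}")
print(f"mean after imputation: {imputed.mean():,.0f}")
```

Because the missing values are systematically the large ones, the observed mean is too low, and mean imputation bakes that downward bias into the "cleaned" column.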

In short, data cleaning should be approached with caution and guided by domain knowledge. Over-cleaning or poorly executed cleaning can produce data that looks pristine but no longer represents the underlying generative process.

Illustrative Mathematical Expression: Outlier Detection

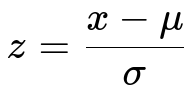

Sometimes, outliers are detected using techniques based on statistical measures, such as the z-score:

Here, x is the value of a particular data point, mu is the mean of the dataset, and sigma is the standard deviation. Data points whose absolute z-score exceeds a certain threshold (often 3) can be deemed “outliers.” However, if these outliers represent meaningful phenomena (like rare but valid events), removing them can harm the integrity of the subsequent analysis.

Potential Practical Example

Suppose you have a dataset that includes extremely large transaction amounts recorded during suspicious activity. A naive cleaning step might remove these data points as outliers to make the distribution more “normal.” After removal, any model or statistical analysis intended to detect fraudulent transactions would be missing the most important signals. Hence, the cleaned dataset no longer reflects the real-world behavior of the process under study.

In Python, a simple approach to filtering out such outliers might look like:

```python
import pandas as pd

# Example DataFrame with a 'transaction_amount' column
df = pd.DataFrame({
    'transaction_amount': [10, 15, 20, 10000, 25, 30, 20000, 35]
})

# Treat values whose absolute z-score exceeds z_threshold as outliers
z_threshold = 3
mean_value = df['transaction_amount'].mean()
std_value = df['transaction_amount'].std()  # pandas default: sample std (ddof=1)
df['z_score'] = (df['transaction_amount'] - mean_value) / std_value

# Keep only the rows within the threshold
df_cleaned = df[df['z_score'].abs() <= z_threshold]
```

On a dataset of realistic size, this filter removes extreme values and may reduce noise (on this eight-row toy example it actually keeps every row, because small samples cap how large a z-score can get). But it can also eliminate critical information depending on the domain: if those large transaction_amount entries were genuine yet rare occurrences, removing them will bias any subsequent fraud detection model.
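A quick numerical check of the toy example, assuming pandas' default sample standard deviation: with n observations, no |z| can exceed (n - 1)/√n, which is about 2.47 for n = 8, so a threshold of 3 can never fire here. The extreme values also inflate σ and thereby hide themselves, an effect often called masking.

```python
import math
import pandas as pd

amounts = pd.Series([10, 15, 20, 10000, 25, 30, 20000, 35])
z = (amounts - amounts.mean()) / amounts.std()  # sample std (ddof=1)

n = len(amounts)
max_possible_z = (n - 1) / math.sqrt(n)  # upper bound on |z| when using the sample std

print(f"largest |z| observed:  {z.abs().max():.2f}")
print(f"largest |z| possible:  {max_possible_z:.2f}")
print(f"rows with |z| > 3:     {(z.abs() > 3).sum()}")
```

Even the 20000 transaction scores only about 2.18 here. Fixed z-score thresholds therefore behave very differently on small and large samples, which is one more reason to validate a cleaning rule rather than apply it mechanically.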

How Data Cleaning Could Worsen Statistical Analysis

Data cleaning can worsen results if it is done without regard for:

Domain expertise. If a domain expert indicates that certain values, though rare, are genuine observations, removing them might delete essential information.

Underlying distribution assumptions. Certain outliers or missing patterns are inherent in the data and must remain to faithfully represent its distribution.

Mechanisms of missingness. Imputing missing data incorrectly or ignoring the reason why data is missing can bias parameter estimates and distort hypothesis testing.

Shape of the data-generating process. The real-world system that generated the data may produce skewed or heavy-tailed distributions, which must be preserved for reliable modeling.

Possible Follow-up Questions

Could you give more real-world examples where cleaning introduces bias?

Data cleaning can introduce bias when dealing with hospital records where extremely high treatment costs or complicated diagnoses could be flagged as outliers. Removing them may skew a cost-benefit analysis. Similarly, in political polling, removing responses that appear inconsistent or extreme might ignore genuine sentiment from specific voter segments. These examples emphasize the need to examine outliers carefully.

How do you detect if your cleaning strategy has degraded performance?

One approach is to track performance metrics (such as error rates, accuracy, or log-likelihoods) on a validation dataset. You might also compare results using different levels of outlier removal (e.g., z-threshold=2.5, z-threshold=3, or no outlier removal at all) and check if the analysis is robust to these changes. If performance drops sharply after cleaning, that might indicate that you removed important signals.
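A minimal sketch of such a sensitivity check on synthetic, labeled transactions. The log-normal amounts and the ten fraudulent rows are assumptions. In place of a full model, it reports how many known fraud cases survive each cleaning setting, since a downstream detector cannot learn from cases that were removed.

```python
import numpy as np
import pandas as pd

rng = np.random.default_rng(42)

# Hypothetical transactions: mostly ordinary amounts plus a few large fraudulent ones
normal = pd.DataFrame({"amount": rng.lognormal(3, 0.5, 990), "is_fraud": 0})
fraud = pd.DataFrame({"amount": rng.lognormal(8, 0.5, 10), "is_fraud": 1})
df = pd.concat([normal, fraud], ignore_index=True)

z = (df["amount"] - df["amount"].mean()) / df["amount"].std()

# Compare several cleaning settings side by side (None = no outlier removal)
results = {}
for threshold in [2.5, 3.0, None]:
    kept = df if threshold is None else df[z.abs() <= threshold]
    fraud_kept = kept["is_fraud"].sum() / df["is_fraud"].sum()
    results[threshold] = (len(kept), fraud_kept)
    print(f"threshold={threshold}: rows kept={len(kept)}, fraud cases kept={fraud_kept:.0%}")
```

On a real project, the same loop would wrap model training and report validation metrics per setting; a large swing between settings is the warning sign described above.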

What are best practices to ensure effective data cleaning?

It is vital to incorporate domain knowledge when deciding on data cleaning steps. Collaboration with domain experts can help interpret whether extreme values are truly anomalies or valid occurrences. One should also perform exploratory data analysis, visualizing how outliers or missing data might cluster around specific subsets of the data. It is prudent to iteratively assess model performance before and after each cleaning step to ensure that improvements in dataset consistency do not come at the cost of losing valuable information.

How can overfitting be related to data cleaning?

Overfitting often pertains to modeling, but data cleaning can create a scenario where you “overfit” your cleaning rules to a particular dataset. For instance, applying too many filtering rules might tailor the data to an assumed distribution or remove real variability that future data would exhibit. If your cleaned data ends up being too “perfect,” your model might fail to generalize well when confronted with real-world, messy data.

Conclusion of Discussion

While data cleaning is crucial, it must be carried out judiciously. Careful assessment of each cleaning step, combined with validation on representative data, can help ensure that statistical conclusions remain robust and reliable.

Below are additional follow-up questions

How do you ensure that data cleaning does not violate distributional assumptions used in statistical tests?

One subtle pitfall arises when a statistical test (such as a t-test) assumes data to be normally distributed. Overly aggressive outlier removal can artificially enforce normality in a dataset that was never truly Gaussian in the first place. As a result, subsequent p-values might appear deceptively significant or non-significant.

A recommended practice is to:

Visualize the distribution (e.g., histogram, Q-Q plot) before and after cleaning. This comparison helps confirm whether the data remains consistent with the assumed distribution.

Conduct sensitivity analyses with different thresholds or methods for outlier removal, to see if the test results are robust. If significance drastically changes under slight modifications, it indicates the data cleaning has a strong (and possibly harmful) influence on the result.

Consult domain experts who can help you understand if the tails of the distribution are integral to the domain. Certain fields, like financial transactions, inherently have heavy-tailed distributions. Removing legitimate extreme values solely to adhere to a test’s assumption can cause distorted inferences.
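Alongside Q-Q plots or a formal normality test, a quick numeric proxy is to compare skewness and excess kurtosis (both zero for a normal distribution) before and after cleaning. The sketch below uses synthetic log-normal data and an assumed |z| ≤ 2 trimming rule: the trimmed sample looks much closer to normal even though the process that generated it is unchanged.

```python
import numpy as np
import pandas as pd

rng = np.random.default_rng(7)

# Hypothetical heavy-tailed measurements (log-normal), e.g. transaction amounts
raw = pd.Series(rng.lognormal(mean=0, sigma=1, size=2_000))

# Aggressive cleaning: drop everything more than 2 standard deviations from the mean
z = (raw - raw.mean()) / raw.std()
trimmed = raw[z.abs() <= 2]

# pandas' kurt() reports excess kurtosis, so a normal distribution scores about 0
for name, s in [("raw", raw), ("trimmed", trimmed)]:
    print(f"{name:8s} n={len(s):5d} skew={s.skew():.2f} excess kurtosis={s.kurt():.2f}")
```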

Potential edge case: In time-series data, removing outliers without regard to temporal structure (for example, by simply dropping rows) can break autocorrelation patterns. This, in turn, affects stationarity assumptions and can invalidate classical time-series modeling approaches such as ARIMA. Always check that stationarity and the autocorrelation structure remain intact after cleaning.

What is the difference between data cleaning and data transformation, and how can the latter inadvertently degrade analysis?

Data cleaning typically focuses on fixing or removing data issues (missing values, duplicates, typographical errors), whereas data transformation more broadly shifts the data’s representation (e.g., log transformations, standardization, encoding categorical features). While cleaning tries to preserve the original semantic meaning, transformations can change the nature of the distributions.

Data transformation pitfalls:

A log transform is undefined for zero or negative values. If you simply force log(negative_number) to some default, you risk misinterpreting the distribution or introducing systematic error.

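Encoding categorical features can accidentally collapse distinct categories if done improperly (for example, normalizing labels too aggressively or grouping rare levels into a single “other” bucket before one-hot encoding), causing a loss of granularity.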

Feature scaling (standardization, min-max) can remove natural scale differences that contain vital information. For example, if transaction_amount has an inherently large scale compared to transaction_count, squashing them to similar ranges could reduce interpretability of their real-world importance.

This can degrade statistical analysis by obscuring relationships that existed in the raw data. If a transformation is done incorrectly, subsequent correlation analyses, regression models, or hypothesis tests might yield misleading conclusions.
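The log-transform pitfall is easy to reproduce with NumPy. As one alternative, the sketch uses a signed log1p transform, sign(x)·log(1 + |x|); this choice is an assumption for illustration, not the only valid option.

```python
import numpy as np

# Hypothetical feature containing a negative value and a zero
values = np.array([-5.0, 0.0, 1.0, 10.0, 1000.0])

# Naive log: -inf for 0 and nan for negatives (warnings silenced here for readability)
with np.errstate(divide="ignore", invalid="ignore"):
    naive_log = np.log(values)

# Signed log1p: defined for every real number and order-preserving
signed_log = np.sign(values) * np.log1p(np.abs(values))

print(naive_log)
print(signed_log)
```

Whether a signed log is appropriate depends on what negative values mean in the domain (refunds, sensor offsets, and so on); replacing them with an arbitrary default is exactly the pitfall described above.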

Can repeated or iterative data cleaning steps cause compounding errors?

In iterative workflows, you might start cleaning, run some preliminary analysis, see some suspicious results, clean again, and so on. If each cleaning pass modifies the data distributions without rigorous validation, small biases accumulate. By the time you do your final analysis, the dataset might bear little resemblance to the real-world phenomenon.

Practical tips to avoid compounding errors:

Keep versioned backups of the dataset after each cleaning iteration. Comparing these versions can highlight the net effect of multiple cleaning passes.

Track data removed or altered in each pass, and maintain logs of the rationale. If a final result appears off, you can pinpoint which cleaning pass introduced the biggest shift.

Use a consistent set of decision rules for dropping, imputing, or recoding data. Iterative changes that rely on ad-hoc judgments frequently introduce creeping bias.
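The tips above can be sketched as a small wrapper that snapshots each pass and logs what it removed and why. `apply_step`, `log`, and `snapshots` are hypothetical names for illustration, and the sensor data and the "< 100" range rule are assumptions.

```python
import pandas as pd

def apply_step(df, mask_keep, step_name, rationale, log, snapshots):
    """Apply one cleaning pass, keep a versioned copy, and record what was removed and why."""
    cleaned = df[mask_keep].copy()
    log.append({
        "step": step_name,
        "rows_before": len(df),
        "rows_after": len(cleaned),
        "rows_removed": len(df) - len(cleaned),
        "rationale": rationale,
    })
    snapshots[step_name] = cleaned  # versioned copy for later comparison
    return cleaned

raw = pd.DataFrame({"sensor_id": [1, 1, 2, 2, 3, 3],
                    "reading": [20.1, 20.1, 19.8, 250.0, None, 21.3]})
log, snapshots = [], {"raw": raw}

df = apply_step(raw, ~raw.duplicated(), "drop_duplicates", "exact duplicate rows", log, snapshots)
df = apply_step(df, df["reading"].notna(), "drop_missing", "reading not recorded", log, snapshots)
df = apply_step(df, df["reading"] < 100, "drop_spikes", "assumed valid sensor range < 100", log, snapshots)

print(pd.DataFrame(log))
```

Because every intermediate version survives in `snapshots`, any later surprise can be traced back to the pass that introduced it.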

An example scenario: A data scientist notices weird spikes in sensor readings on multiple occasions and successively trims them. Each time, the “noise” threshold is lowered so more points get removed, eventually discarding actual trends. Future analyses might miss critical signals if those spikes were valid and linked to real environmental events.
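The scenario above lowers the threshold by hand, but compounding can happen even with a fixed rule: each pass recomputes the mean and standard deviation on already-trimmed data, so σ shrinks and new points cross the same |z| > 3 line. A sketch on synthetic heavy-tailed readings (an assumption):

```python
import numpy as np
import pandas as pd

rng = np.random.default_rng(3)
readings = pd.Series(rng.lognormal(mean=0, sigma=1, size=1_000))  # hypothetical sensor data

kept = readings
removed_per_pass = []
for _ in range(10):  # cap the number of passes
    z = (kept - kept.mean()) / kept.std()  # statistics recomputed on the trimmed data
    next_kept = kept[z.abs() <= 3]
    removed_per_pass.append(len(kept) - len(next_kept))
    if len(next_kept) == len(kept):
        break  # nothing left to remove under this rule
    kept = next_kept

print("rows removed per pass:", removed_per_pass)
print(f"total removed: {len(readings) - len(kept)} of {len(readings)}")
```

Each pass looks locally reasonable, yet the cumulative effect keeps eating into the genuine upper tail of the distribution.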

How does data cleaning interact with cross-validation or hyperparameter tuning?

Cross-validation involves splitting data into multiple folds to estimate model performance reliably. If you do major cleaning (especially if you remove specific rows) before splitting into folds, you risk biasing your folds in ways that don’t reflect real-world data. For instance, if you remove outliers from the entire dataset before cross-validation, your model may never learn how to deal with them in deployment.

Guidelines for safe interaction:

Perform cleaning steps within each fold separately to simulate encountering dirty data in real usage. This ensures each fold’s test set remains as “raw” as possible, revealing how the model performs on uncleaned or partially cleaned data.

For hyperparameter tuning, keep the cleaning method consistent. If you first tune the model on heavily cleaned data and then deploy on less cleaned data, the model might face unexpected data patterns in production.

In some domains, certain cleaning steps might remain universal (e.g., removing duplicates), while outlier handling or imputation should be integrated into a pipeline step that is re-fitted inside each cross-validation fold to avoid data leakage.
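Fold-wise fitting of a cleaning step can be sketched with plain NumPy, using median imputation as the example step; the data, the 10% missingness, and the choice of median imputation are assumptions. In scikit-learn, the same pattern is usually expressed by placing a `SimpleImputer` inside a `Pipeline` and passing the pipeline to `cross_val_score`.

```python
import numpy as np

rng = np.random.default_rng(0)
X = rng.normal(size=(100, 3))
X[rng.random(X.shape) < 0.1] = np.nan   # ~10% missing entries (synthetic)

k = 5
folds = np.array_split(rng.permutation(len(X)), k)

for i, test_idx in enumerate(folds):
    train_idx = np.concatenate([f for j, f in enumerate(folds) if j != i])
    X_train, X_test = X[train_idx].copy(), X[test_idx].copy()

    # Fit the cleaning step (column medians) on the training fold only ...
    medians = np.nanmedian(X_train, axis=0)
    # ... then apply those same fitted values to both training and held-out rows
    for X_part in (X_train, X_test):
        rows, cols = np.where(np.isnan(X_part))
        X_part[rows, cols] = medians[cols]

    # A model would be fit on X_train and scored on X_test here.
```

The held-out rows never influence the medians, so the score reflects how the whole pipeline (cleaning plus model) would behave on unseen data.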

Are there ethical or fairness considerations related to data cleaning that could worsen statistical analysis?

Data cleaning can inadvertently remove records representing minority groups, potentially reducing fairness. For example, if a certain subgroup has incomplete address information or systematically unusual feature values, a naïve cleaning process might eliminate them at a higher rate. This leads to a biased model that fails to represent that population segment.

Key fairness considerations:

Monitor group-wise missingness rates. If certain demographics are more prone to missing data, a single imputation strategy might produce biased estimates or degrade fairness metrics.

Periodically check performance metrics disaggregated by sensitive attributes (race, gender, age). If cleaning procedures change these subgroup performances significantly, reconsider the logic behind discarding those observations.

Discuss and formalize an ethical framework for cleaning, especially in regulated industries such as healthcare or finance, where data cleaning can have real-world consequences for protected populations.

Edge case scenario: A credit scoring system’s data cleaning step discards all applications lacking a phone number. In some communities, phone ownership is less common, so valid applications from certain demographic segments are removed disproportionately. The result is a model that neither recognizes nor appropriately calibrates risk for those subgroups.
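A group-wise audit of that phone-number rule takes only a few lines of pandas. The applications and the `group` column are hypothetical stand-ins for a real demographic attribute.

```python
import pandas as pd

# Hypothetical credit applications; 'group' stands in for a demographic segment
apps = pd.DataFrame({
    "group": ["A"] * 6 + ["B"] * 4,
    "phone": ["555-01", "555-02", "555-03", "555-04", "555-05", "555-06",
              None, None, "555-09", None],
})

# Cleaning rule from the scenario: discard applications without a phone number
cleaned = apps.dropna(subset=["phone"])

# Compare each group's drop rate and share of the data before vs. after cleaning
drop_rate = 1 - cleaned["group"].value_counts() / apps["group"].value_counts()
share_before = apps["group"].value_counts(normalize=True)
share_after = cleaned["group"].value_counts(normalize=True)
print(pd.DataFrame({"drop_rate": drop_rate, "share_before": share_before, "share_after": share_after}))
```

Group B loses three of its four rows while group A loses none, so a rule that looks neutral shrinks B's share of the training data from 40% to about 14%.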

How can incorrectly labeling data during cleaning affect machine learning models?

Label noise is a special type of data quality issue. When cleaning data, you may discover that some labels are incorrect or ambiguous. If you “fix” labels without robust guidelines, you might introduce inconsistencies that degrade model performance more than the original noise.

Typical pitfalls:

In classification tasks, changing the label of an “edge case” example purely based on its feature values can cause the model to learn spurious correlations.

Over-reliance on bulk label cleaning, such as crowd-sourced re-labeling or heuristic-based reassignment, may not capture the domain’s nuances.

If a small fraction of the dataset is mislabeled but is particularly crucial (e.g., minority class in an imbalanced dataset), any mislabeling can significantly harm recall for that class.

Strategies to mitigate these pitfalls include:

Double-check ambiguous labels with expert annotation or multiple-annotator consensus.

Keep the original labels, and mark flagged instances as uncertain instead of forcibly reassigning them.

Compare model performance before and after labeling adjustments to confirm that changes lead to improvements.
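Keeping original labels while recording disagreement can be sketched as follows. The spam/ham annotations, the three extra annotators, and the "fewer than 2 of 3 agree" threshold are assumptions for illustration.

```python
import pandas as pd

# Hypothetical annotations: the original label plus labels from three extra annotators
df = pd.DataFrame({
    "text_id": [1, 2, 3, 4],
    "label": ["spam", "ham", "spam", "ham"],
    "ann_1": ["spam", "ham", "ham", "ham"],
    "ann_2": ["spam", "ham", "spam", "spam"],
    "ann_3": ["spam", "ham", "ham", "spam"],
})

ann_cols = ["ann_1", "ann_2", "ann_3"]
# Fraction of annotators agreeing with the original label, row by row
agreement = df[ann_cols].eq(df["label"], axis=0).mean(axis=1)

# Keep 'label' untouched; store the consensus and an uncertainty flag in separate columns
df["consensus"] = df[ann_cols].mode(axis=1)[0]
df["label_uncertain"] = agreement < 2 / 3   # assumed rule: fewer than 2 of 3 agree
print(df[["text_id", "label", "consensus", "label_uncertain"]])
```

Downstream, flagged rows can be sent for expert review, down-weighted, or evaluated separately, and the original labels remain available for comparison.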

Does data cleaning differ for unstructured data (like text or images), and how can it harm analysis?

Unstructured data cleaning often involves removing stop words from text, normalizing punctuation, or discarding low-quality images. In some tasks, these transformations might harm model performance.

For text:

Overzealous removal of “stop words” can eliminate context-bearing words in languages or domains where those words carry subtle meaning. In sentiment analysis, for example, negations such as “not” or “never” are crucial, yet several widely used stop-word lists include such words.

Lemmatization or stemming might collapse distinct word forms that indicate tense or plurality, thus losing nuances.
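The negation problem in the text items above can be reproduced in a few lines. The stop-word list here is a small hypothetical one; NLTK's English list, for instance, also contains “not” and “no”.

```python
# A small hypothetical stop-word list that, like many real ones, includes negations
STOP_WORDS = {"the", "a", "is", "was", "it", "this", "not", "no", "never"}
NEGATIONS = {"not", "no", "never"}

def remove_stop_words(text, stop_words):
    """Lowercase, split on whitespace, and drop any token found in stop_words."""
    return " ".join(w for w in text.lower().split() if w not in stop_words)

reviews = ["This movie was good", "This movie was not good"]

# Default list: opposite reviews collapse to identical text
cleaned = [remove_stop_words(r, STOP_WORDS) for r in reviews]

# Same list minus negations: the distinction survives
cleaned_keep_neg = [remove_stop_words(r, STOP_WORDS - NEGATIONS) for r in reviews]

print(cleaned)           # ['movie good', 'movie good']
print(cleaned_keep_neg)  # ['movie good', 'movie not good']
```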

For images:

Over-compression or resizing might remove valuable details, like subtle textures or small-scale features critical for tasks like medical imaging or fine-grained object classification.

Aggressive augmentation or denoising can distort the real-world brightness and color properties from which the model needs to learn legitimate invariances.

This can degrade performance if the removed components represent the very signals that the model needs to correctly classify or interpret the data.

How do you handle “cleaning” for data derived from multiple sources with varying quality?

Real-world datasets often merge logs or records from different systems. Each system may have different standards for missing data, scaling, or data entry formats. A uniform approach to cleaning might produce inconsistent handling of each source.

Potential pitfalls in multi-source data:

A certain data field might mean different things in different systems. Combining them and then cleaning them as one field can misrepresent some portion of the data.

Some sources might already have cleaned outliers or missing values differently, causing the aggregated dataset to have heterogeneous cleanliness levels.

If certain sources historically under-report or over-report certain measurements, a naive mean/median imputation might bias the combined dataset in favor of the better-reported sources.

Mitigation strategies:

Perform source-specific audits: identify standard anomalies or unique data quirks for each data system.

Normalize or standardize data fields carefully based on the source’s metadata. For instance, temperature might be in Celsius in one system and Fahrenheit in another.

Document each source’s known limitations. This helps analysts avoid conflating poor data with genuine outliers.
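Harmonizing units per source before any pooled cleaning step might look like the sketch below. The two systems, their readings, and the `units` metadata mapping are hypothetical.

```python
import pandas as pd

# Hypothetical readings from two systems; per the (assumed) metadata, system_b reports Fahrenheit
readings = pd.DataFrame({
    "source": ["system_a"] * 4 + ["system_b"] * 4,
    "temperature": [21.0, 22.5, 20.0, 23.0, 70.0, 72.5, 68.0, 73.4],
})
units = {"system_a": "C", "system_b": "F"}

# Convert only the Fahrenheit rows to Celsius; leave the rest unchanged
is_fahrenheit = readings["source"].map(units).eq("F")
readings["temperature_c"] = readings["temperature"].where(
    ~is_fahrenheit, (readings["temperature"] - 32) * 5 / 9
)

# Raw per-source means differ by ~50 degrees; harmonized means agree closely
print(readings.groupby("source")[["temperature", "temperature_c"]].mean())
```

Without this step, a pooled z-score or range check would treat every system_b reading as an anomaly, even though the measurements themselves are fine.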

Can cleaning degrade unsupervised learning tasks like clustering?

While the risks of data cleaning for supervised tasks (like regression or classification) are commonly discussed, unsupervised methods (like clustering) are equally susceptible to data cleaning pitfalls.

Why clustering is vulnerable:

Outlier removal can drastically change cluster centroids, particularly if the outliers represent smaller but meaningful subpopulations or cluster boundaries.

Imputing missing data might force points to converge into existing clusters rather than standing alone as potentially new clusters.

Normalizing or scaling data differently can warp cluster structure, causing previously distinct clusters to merge or previously single clusters to split.
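The first point can be illustrated without running any clustering algorithm: a global z-score rule can delete an entire small subpopulation before clustering even starts. The 1-D feature and the group sizes below are synthetic assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical 1-D feature: a large main group plus a small but genuine subpopulation
main_group = rng.normal(loc=0.0, scale=1.0, size=200)
small_group = rng.normal(loc=10.0, scale=0.5, size=5)
x = np.concatenate([main_group, small_group])
is_small = np.r_[np.zeros(200, bool), np.ones(5, bool)]

# Global z-score cleaning with the usual |z| <= 3 rule
z = (x - x.mean()) / x.std(ddof=1)
keep = np.abs(z) <= 3

print(f"main-group points kept:  {keep[~is_small].sum()} of 200")
print(f"small-group points kept: {keep[is_small].sum()} of 5")
```

Every point of the small group is discarded while the main group is untouched, so any clustering run on the cleaned data could never discover that second cluster.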

Consequences:

You might incorrectly conclude there are fewer (or more) clusters than reality.

Important minority clusters could get merged into large mainstream clusters if their unique attributes are removed or smoothed out.

To address these issues:

Examine cluster stability before and after cleaning. Tools like silhouette scores or visual inspections (e.g., PCA plots) can reveal if the cluster boundaries remain consistent.

Try multiple cleaning strategies side-by-side and see which yields stable, interpretable clusters that domain experts affirm.