ML Interview Q Series: Combinatorial Counting for Ball and Box Distribution Problems

Browse all the Probability Interview Questions here.

Question

Count the number of distinct ways of putting 3 balls into 4 boxes when:

MB: all boxes and balls are distinguishable

BE: the boxes are different but the balls are identical

FD: the balls are identical, the boxes are different but hold at most a single ball

Short Compact solution

Comprehensive Explanation

Distinguishable boxes and distinguishable balls (MB)

In this scenario, each of the 3 balls is unique, and each of the 4 boxes is unique. For each ball, you have 4 distinct choices (which box to place it in). Because there are 3 balls, each making an independent choice among 4 boxes, the total number of ways is 4 * 4 * 4, which is 4^3. More explicitly:

Ball1 can go into any of the 4 boxes.

Ball2 can also independently go into any of the 4 boxes.

Ball3 likewise has 4 choices. Hence, the result 4^3 = 64 arises from the multiplication principle.

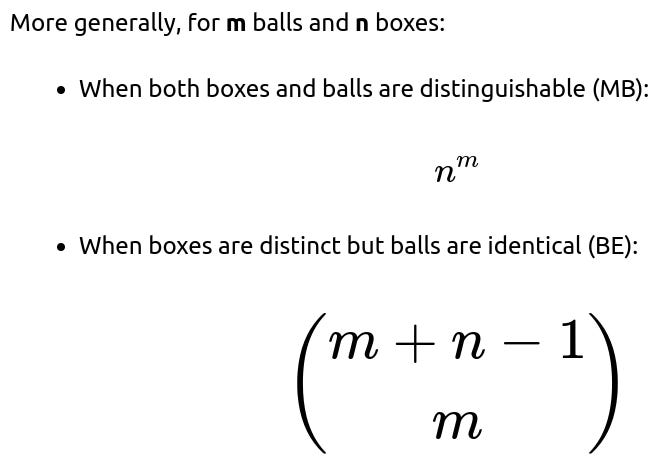

In the general case with m distinguishable balls and n distinguishable boxes, each ball has n choices, and these choices are all independent. That leads to n^m different ways to place the balls in the boxes.

Distinguishable boxes, identical balls (BE)

When the boxes are still considered different, but the balls themselves are identical, we can no longer simply do a direct multiplication because it no longer matters which “specific” ball goes into which box; all that matters is how many total balls go in each box. The classical combinatorial result (sometimes referred to as “stars and bars” or “balls and urns”) says the number of ways to distribute m identical items into n distinct bins is:

Choose (m + n - 1) choose (m).

For the special case m=3, n=4, this becomes (3 + 4 - 1 choose 3) = (6 choose 3). Evaluating (6 choose 3) gives 20. Conceptually, you can think of placing 3 identical balls (the “stars”) and 3 dividers (the “bars”) in a row. The positions of the bars split the sequence into 4 distinct segments corresponding to each box.

Distinguishable boxes, identical balls, at most one ball per box (FD)

Here, because each of the 4 boxes can contain at most one ball, and the balls are identical, we only care which boxes are actually selected to hold a ball. For 3 identical balls, we just choose 3 out of the 4 distinct boxes. So there are (4 choose 3) = 4 ways to make that selection.

In the general case of m identical balls and n boxes, each box holding at most a single ball, the number of ways is (n choose m) because you simply choose which m boxes (out of n distinct boxes) receive one ball each.

Follow-up question 1

What if the boxes were also identical in the case of unlimited capacity?

When both the balls and the boxes are identical, the problem becomes finding the number of partitions of m into at most n parts (or equivalently, the number of partitions of m that do not exceed n in any summand). Unlike the classical “stars and bars” approach, there is no simple closed-form formula for the number of partitions of m into up to n identical parts; one typically needs partition theory results or generating functions. In more advanced combinatorics, we use the partition function p_k(m), which denotes the number of ways to partition m into exactly k parts or up to k parts. There is no straightforward elementary closed-form formula for these partition functions.

Key Pitfalls:

Confusing distribution of identical vs. distinguishable items.

Attempting to use simple binomial coefficients for identical-boxes scenarios often leads to incorrect counts since the identity of boxes can matter in some approaches but not in others.

Follow-up question 2

How do we handle constraints like a minimum number of balls per box or a limit greater than 1?

If each box must contain at least r balls or at most s balls, you adapt the counting logic by introducing constraints into the distribution:

For identical balls into distinct boxes, one can use “stars and bars” and then subtract invalid allocations (where a box has fewer than r or more than s). This often becomes an inclusion-exclusion approach.

For distinguishable balls, you might handle it by direct combinatorial enumeration or dynamic programming. For example, to count the ways to distribute m distinguishable balls into n distinguishable boxes where each box can hold at most s balls, one can track how many balls have been placed in each box iteratively and use a combinatorial argument or a dynamic programming table that accumulates valid distributions.

Pitfalls and Strategies:

For large m and n, naive enumeration is computationally expensive.

Inclusion-exclusion can rapidly get complicated, but it is often the cleanest closed-form approach if carefully applied.

Dynamic programming is a more general technique but might yield an algorithmic (rather than a simple closed-form) solution.

Follow-up question 3

Could you illustrate a direct enumeration approach for the (BE) case using code for small m and n?

Below is a simple Python snippet showing how to directly count the distributions when balls are identical, boxes are distinct, and boxes can hold any number of balls. This is a brute-force check useful only for small values but helps illustrate the concept:

def count_distributions(m, n):

# m identical balls, n distinct boxes

# brute-force approach: try all n^m ways if they were distinguishable,

# but track "how many in each box" ignoring which ball is which.

# Actually simpler: generate all possible combinations of counts of length n

# summing to m.

count = 0

def backtrack(i, remain, distribution):

if i == n - 1:

distribution[i] = remain

# distribution now sums to m

# it's a valid distribution

nonlocal count

count += 1

return

else:

# try all ways to distribute remain among the i-th box

for x in range(remain + 1):

distribution[i] = x

backtrack(i + 1, remain - x, distribution)

backtrack(0, m, [0]*n)

return count

# Example:

print(count_distributions(3, 4)) # should output 20

Explanation of the code:

We recursively build the number of balls assigned to each box (an array

distributionof length n).At each step, we choose how many balls go into the current box i, and then backtrack for the remaining boxes.

Once we fill up to the last box, we are forced to put whatever remains into that last box. This way, we systematically enumerate all ways to distribute identical balls among n boxes.

The final

countmatches (m + n - 1 choose m) for the distribution problem when m and n are small.

Follow-up question 4

How would you explain these combinatorial ideas in a machine learning context?

In machine learning or data science, combinatorial reasoning helps in:

Understanding hyperparameter grid searches, where you choose among discrete sets of hyperparameter options for multiple hyperparameters.

Analyzing the complexity of partition-based clustering methods, where one partitions a dataset into groups (though in clustering, we often consider groups to be indistinguishable if they represent the same “clusters”).

Modeling discrete probability distributions, such as using a Dirichlet-multinomial framework, which is conceptually related to distributing counts (like word counts in topic modeling) into categories (topics).

These combinatorial principles appear across many subfields whenever you are reasoning about discrete allocations, counts, or configurations in high-dimensional discrete spaces.