ML Interview Q Series: Detecting and Fixing Overfitting/Underfitting using Regularization, Data Augmentation, Model Tuning.


Overfitting vs Underfitting: What is overfitting and underfitting in the context of machine learning models? How can you detect each problem during model training, and what techniques can be used to address them? Provide examples of remedies (e.g., regularization, more data for overfitting; increasing model complexity for underfitting).

Overfitting occurs when a model fits the training data too closely and captures not only the true underlying patterns but also the noise or random fluctuations present in the training set. When this happens, the model performs extremely well on training data but fails to generalize to unseen data.

Underfitting, on the other hand, occurs when a model is too simple or not sufficiently trained, causing it to fail to capture the underlying structure of the data. Such a model has relatively high bias and is unable to achieve good performance, even on the training data.

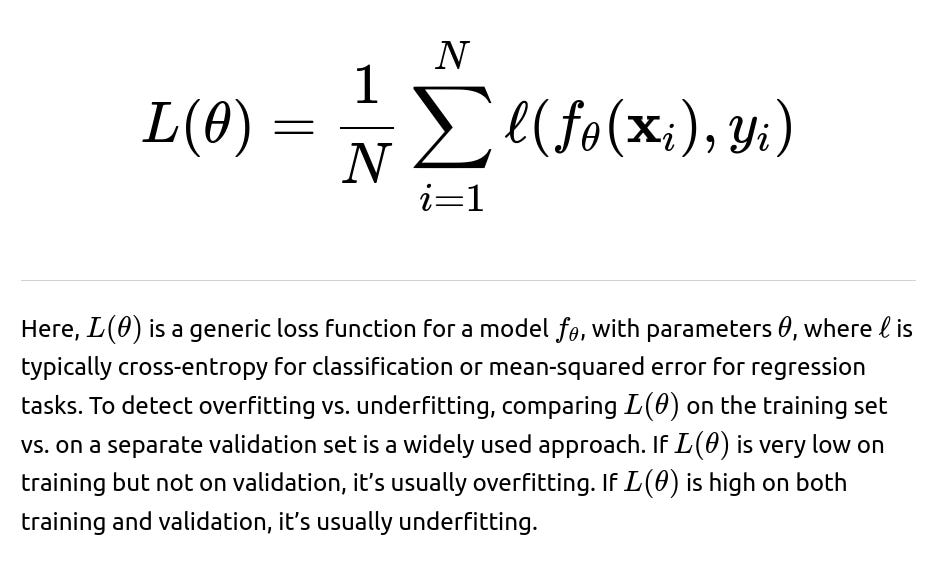

Detecting each problem generally involves monitoring how the model’s training error and validation (or test) error evolve during training:

When the training error continuously decreases while the validation error stops decreasing and begins to increase, the model is usually overfitting.

When both training error and validation error are high (and do not improve significantly with more training epochs), the model is underfitting.

Addressing underfitting generally involves increasing the model’s capacity, adding more representative features, or simply training for more epochs. Sometimes, changing the model architecture to a more expressive one or employing more sophisticated feature engineering helps the model capture the nuances of the data.

Subsections below provide detailed explanations and practical tips for each aspect.

Overfitting in More Detail

Models that overfit have effectively “memorized” idiosyncrasies of the training data. This memorization typically stems from excessive complexity. In deep neural networks, high capacity means the model has enough parameters to capture even random patterns in the training set.

One important mechanism to detect overfitting is the divergence between training and validation losses. If the training loss continues decreasing steadily, but the validation loss or error metric levels off or even gets worse, it is a strong sign of overfitting. Another indicator is if the model’s performance on a cross-validation set is significantly worse than on the training data.

Underfitting in More Detail

Underfitting typically arises from a lack of complexity in the model (too few parameters, insufficient training epochs, or an overly simplistic architecture). Underfitting also results when the model cannot capture the inherent patterns in the data, either because the features are not informative or the model’s hypothesis space is too narrow.

When underfitting occurs, both the training and validation performance will be poor. Typically, adding more training epochs alone will not help if the model architecture is fundamentally too simple or is missing important features.

Techniques to Address Overfitting

Adding dropout in deep neural networks. Dropout randomly sets some neurons’ outputs to zero during training, forcing the network to not rely too heavily on specific connections. This helps the network develop more robust patterns.

Gathering more data. In many real-world applications, overfitting arises because the training set is too small or not sufficiently representative. Obtaining more data often addresses that problem directly.

Using data augmentation. When adding new data is impractical or expensive, you can artificially expand your dataset by applying transformations such as rotations, shifts, crops, color jitter, or random noise injections for images, or random perturbations for time series and textual transformations for NLP tasks. This effectively reduces overfitting and improves generalization.

Employing early stopping. By monitoring validation loss during training, you can stop training at the point where the validation loss stops decreasing (and starts to worsen). Early stopping prevents the model from converging on spurious details in the training data.

Tuning architectural choices. For instance, if you have an extremely deep neural network with millions of parameters, sometimes scaling back the architecture or using more restrictive hyperparameters can improve the generalization.
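The dropout mechanism described earlier in this section can be sketched in a few lines of plain Python. This is a minimal illustration of "inverted" dropout (survivors are rescaled during training so that nothing changes at evaluation time); the function name `dropout` and its arguments are illustrative assumptions, not a library API.

```python
import random

def dropout(activations, p=0.5, training=True, rng=None):
    """Inverted dropout on a list of activations (illustrative helper).

    During training each value is zeroed with probability p and the
    survivors are scaled by 1 / (1 - p) so the expected sum is unchanged.
    At evaluation time the input is returned untouched.
    """
    if not training or p == 0.0:
        return list(activations)
    if not 0.0 <= p < 1.0:
        raise ValueError("p must be in [0, 1)")
    rng = rng or random.Random()
    keep_scale = 1.0 / (1.0 - p)
    return [a * keep_scale if rng.random() >= p else 0.0 for a in activations]

hidden = [1.0] * 10                                   # made-up activations
train_out = dropout(hidden, p=0.5, rng=random.Random(0))
eval_out = dropout(hidden, p=0.5, training=False)     # identity at eval time
```

Because a different random subset of units is silenced on every forward pass, no single unit can be relied on exclusively, which is the intuition behind the regularizing effect.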

Techniques to Address Underfitting

Increasing model capacity. Changing a linear model to a polynomial model, or using deeper architectures in neural networks, can help learn more complex functions.

Adding important features. If the model fails to capture patterns because critical features are missing, feature engineering or domain-specific feature augmentation can reduce underfitting. In neural networks, providing more informative input channels or using specialized embeddings can also help.

Training longer. Sometimes, underfitting can happen simply because the model is not trained to full convergence. Monitoring training and validation curves can show if additional epochs keep driving the training error down.

Reducing regularization. If the model has large regularization factors, it might be forced to maintain smaller weights and reduce capacity in an extreme way. Lowering weight decay or dropout can help the model learn more expressive patterns.

Changing the optimization strategy or hyperparameters. A learning rate that is too high might cause the model to bounce around suboptimal regions, never adequately fitting the training data. Using a more appropriate learning rate schedule or optimizer can improve performance in cases of underfitting.
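To see why overly strong regularization can cause underfitting, consider one-dimensional ridge regression without an intercept, where the weight minimizing sum((y - w*x)^2) + lam * w^2 has the closed form w = sum(x*y) / (sum(x*x) + lam). The sketch below uses made-up data with a true slope of 2; the helper names are illustrative.

```python
def ridge_weight(xs, ys, lam):
    """Closed-form minimizer of sum((y - w*x)^2) + lam * w^2."""
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + lam)

def train_mse(xs, ys, w):
    """Mean squared error of the linear model y = w * x on the given data."""
    return sum((y - w * x) ** 2 for x, y in zip(xs, ys)) / len(xs)

xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0 * x for x in xs]   # toy data, true slope is 2

for lam in (0.0, 1.0, 100.0):
    w = ridge_weight(xs, ys, lam)
    print(f"lambda={lam:6.1f}  w={w:.3f}  train MSE={train_mse(xs, ys, w):.3f}")
```

As the penalty grows, the weight is pulled toward zero and the training error rises, which is the signature of a model that has been regularized into underfitting.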

Illustrative Code Example

Below is a minimal Python snippet (using PyTorch) that demonstrates how one might detect overfitting vs. underfitting by logging training and validation performance each epoch. This code trains a simple feedforward network on MNIST and, for brevity, uses the MNIST test split as the validation set. By monitoring the metrics, you can decide if your model is overfitting or underfitting.

```py
import torch
import torch.nn as nn
import torch.optim as optim
from torch.utils.data import DataLoader
from torchvision import datasets, transforms

# Simple feedforward network
class SimpleNet(nn.Module):
    def __init__(self, input_size=784, hidden_size=128, num_classes=10):
        super(SimpleNet, self).__init__()
        self.fc1 = nn.Linear(input_size, hidden_size)
        self.relu = nn.ReLU()
        self.fc2 = nn.Linear(hidden_size, num_classes)

    def forward(self, x):
        x = x.view(x.size(0), -1)  # Flatten
        x = self.fc1(x)
        x = self.relu(x)
        x = self.fc2(x)
        return x

transform = transforms.Compose([transforms.ToTensor()])
train_dataset = datasets.MNIST(root='.', train=True, transform=transform, download=True)
val_dataset = datasets.MNIST(root='.', train=False, transform=transform, download=True)

train_loader = DataLoader(train_dataset, batch_size=64, shuffle=True)
val_loader = DataLoader(val_dataset, batch_size=64, shuffle=False)

model = SimpleNet()
criterion = nn.CrossEntropyLoss()
optimizer = optim.Adam(model.parameters(), lr=0.001)

epochs = 5
for epoch in range(epochs):
    # Training pass
    model.train()
    total_train_loss = 0
    for images, labels in train_loader:
        optimizer.zero_grad()
        outputs = model(images)
        loss = criterion(outputs, labels)
        loss.backward()
        optimizer.step()
        total_train_loss += loss.item()

    # Validation pass (no gradient tracking)
    model.eval()
    total_val_loss = 0
    correct = 0
    total = 0
    with torch.no_grad():
        for images, labels in val_loader:
            outputs = model(images)
            loss = criterion(outputs, labels)
            total_val_loss += loss.item()
            _, preds = torch.max(outputs, 1)
            correct += (preds == labels).sum().item()
            total += labels.size(0)

    avg_train_loss = total_train_loss / len(train_loader)
    avg_val_loss = total_val_loss / len(val_loader)
    accuracy = 100 * correct / total

    print(f"Epoch {epoch+1}/{epochs} Train Loss: {avg_train_loss:.4f} Val Loss: {avg_val_loss:.4f} Val Acc: {accuracy:.2f}%")
```

By examining the trend of average training loss and average validation loss, you can detect:

Overfitting if the training loss continues to decrease significantly, but the validation loss stops decreasing or begins to increase over time.

Underfitting if both training and validation losses remain high and the validation accuracy is still low, suggesting the model lacks capacity or training time to capture the data’s complexity.
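The two rules above can be turned into a rough helper that labels a run from its logged loss curves. This is only a heuristic sketch: the function name `diagnose_fit`, the `high_loss` threshold, and the `patience` window are assumptions that would need to be set per problem.

```python
def diagnose_fit(train_losses, val_losses, high_loss=1.0, patience=2):
    """Heuristic label from per-epoch loss curves (thresholds are assumptions)."""
    if len(train_losses) != len(val_losses) or not train_losses:
        raise ValueError("need two non-empty curves of equal length")
    # Epoch with the lowest validation loss so far.
    best_epoch = min(range(len(val_losses)), key=val_losses.__getitem__)
    epochs_since_best = len(val_losses) - 1 - best_epoch
    train_still_falling = train_losses[-1] < train_losses[best_epoch]
    # Validation stopped improving while training loss kept dropping.
    if epochs_since_best >= patience and train_still_falling:
        return "overfitting"
    # Both losses are still high at the end of training.
    if train_losses[-1] > high_loss and val_losses[-1] > high_loss:
        return "underfitting"
    return "ok"

print(diagnose_fit([1.0, 0.6, 0.4, 0.2, 0.1], [1.1, 0.7, 0.6, 0.7, 0.9]))
print(diagnose_fit([2.3, 2.2, 2.2, 2.1], [2.3, 2.2, 2.2, 2.1]))
```

With these made-up curves, the first call reports overfitting (validation loss bottoms out at the third epoch while training loss keeps falling) and the second reports underfitting (both losses stay above the threshold).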

Common Pitfalls and Real-World Considerations

Sometimes, a simple train/validation loss comparison does not give the full story. Problems such as data leakage, incorrect splitting, or a validation set that is too small can obscure whether the model is overfitting or underfitting. Cross-validation can help mitigate these issues by averaging performance across multiple splits.

Overfitting can still happen even if the training dataset is large, particularly if your model is extremely large or the data distribution has particular complexities (like heavy tails, very noisy segments, etc.). Regularization remains necessary in many cases regardless of dataset size.

Underfitting might be misdiagnosed if you are not training your model properly or if your hyperparameters are off. If the learning rate is too low, the model might appear to underfit simply because it has not yet converged. Tuning the learning rate schedule can drastically improve results.

In high-stakes applications, you must ensure your validation (or test) data is representative of real-world scenarios. If your training data does not reflect production conditions, the model can fail in deployment—even if it appears fine in offline metrics.

Data imbalance can also complicate diagnosing overfitting vs. underfitting. If certain classes dominate the training set, the model might appear to have low overall loss but perform poorly on minority classes. Evaluating class-specific metrics like F1-scores or confusion matrices can help detect such issues.

Ensemble methods can reduce overfitting by combining multiple models. Techniques like bagging (Random Forests) or boosting (XGBoost, LightGBM) often have built-in mechanisms to mitigate overfitting.

How do we choose when to stop training if we suspect overfitting?

One effective strategy is to monitor validation loss during training. If you see that validation loss starts to plateau or increase (while training loss continues to decline), you can stop training at the point of best validation performance. This approach, called early stopping, prevents the model from further overfitting.

Practically, you can implement a “patience” parameter. For example, stop if the validation loss does not improve for 5 consecutive epochs. Another method is to save the model whenever the validation metric improves and, after training finishes, revert to the best state saved.
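The patience rule and "remember the best epoch" idea can be sketched as a small framework-agnostic class. The name `EarlyStopping`, its `min_delta` argument, and the validation losses below are illustrative assumptions; in a real training loop you would save a checkpoint wherever the comment indicates.

```python
class EarlyStopping:
    """Tracks the best validation loss and signals when to stop (sketch)."""

    def __init__(self, patience=5, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best_loss = float("inf")
        self.best_epoch = None
        self.bad_epochs = 0

    def step(self, epoch, val_loss):
        """Return True if training should stop after this epoch."""
        if val_loss < self.best_loss - self.min_delta:
            self.best_loss = val_loss
            self.best_epoch = epoch      # a real loop would checkpoint here
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience

val_losses = [0.90, 0.70, 0.60, 0.62, 0.61, 0.65, 0.70]  # made-up curve
stopper = EarlyStopping(patience=3)
stopped_at = None
for epoch, loss in enumerate(val_losses):
    if stopper.step(epoch, loss):
        stopped_at = epoch
        break
```

On this curve the best loss occurs at epoch index 2, and training stops at index 5 after three consecutive epochs without improvement; the model to keep is the one from the best epoch, not the last one.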

Why do large models often overfit, and is bigger always worse?

Large models typically have higher capacity, meaning they can represent more complex functions. While this enables them to capture sophisticated relationships, it also means they can memorize noise if not properly regularized. However, bigger is not always worse in modern deep learning. Techniques like dropout, batch normalization, data augmentation, and large-scale datasets can keep big models generalizing well. For instance, many real-world state-of-the-art deep neural networks (e.g., large language models, big vision transformers) have massive parameter counts but generalize effectively due to robust training protocols, vast datasets, and heavy regularization.

If you lack large datasets or compute resources to train a big model adequately, you might see severe overfitting. In such cases, employing stronger regularization or scaling back the architecture can help.

What if underfitting persists even after adding capacity?

When you increase model complexity but still observe underfitting, consider other angles:

Check the data quality. Poorly labeled or noisy data can make training difficult. No matter how big the model is, it might fail to learn good representations if the data does not contain consistent patterns.

Improve feature engineering or data processing. If essential features are missing or the data representation is suboptimal, the model cannot capture the underlying distribution.

Revisit learning rate and hyperparameters. An extremely high or low learning rate might prevent the model from converging effectively.

Examine your loss function or objective. Sometimes, underfitting is a mismatch between the chosen loss function and the actual objective. For instance, if you are trying to optimize for a certain metric (e.g., F1-score), you might need a specialized loss or at least to closely monitor that metric.

Are data augmentation and additional data always helpful?

If your model is overfitting, adding more data (real or augmented) almost always improves generalization. However, if your problem is underfitting, adding more data will not necessarily fix the issue, because underfitting typically stems from insufficient model capacity, poor features, or incorrect hyperparameters.

Data augmentation needs to be consistent with the problem domain. For example, random horizontal flips for images might make sense for certain object recognition tasks, but random vertical flips might not if the orientation is crucial (e.g., digit recognition). For textual data, naive augmentation can distort semantic meaning if not done carefully.
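As a small sketch of domain-aware augmentation, the helpers below operate on a 2D image stored as a list of rows and make flipping an explicit opt-in, so it can be disabled for orientation-sensitive tasks such as digit recognition. All function names and the tiny image are illustrative assumptions.

```python
import random

def hflip(image):
    """Mirror a 2D image (list of rows) left to right."""
    return [row[::-1] for row in image]

def shift_right(image, pixels, fill=0):
    """Shift each row right by `pixels` (0 <= pixels <= width), padding with `fill`."""
    return [[fill] * pixels + row[:len(row) - pixels] for row in image]

def augment(image, rng, allow_flip=True, max_shift=1):
    """Apply a random transform; flips are opt-in because they can change the label."""
    out = shift_right(image, rng.randint(0, max_shift))
    if allow_flip and rng.random() < 0.5:
        out = hflip(out)
    return out

digit_like = [[0, 1, 1],
              [0, 0, 1],
              [0, 1, 1]]
# For digits, keep small shifts but disable flips.
augmented = augment(digit_like, random.Random(0), allow_flip=False)
```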

How does cross-validation help diagnose overfitting vs. underfitting?

Cross-validation splits the data into multiple folds, using one or more folds for validation and others for training. By rotating through the folds, you measure performance across multiple validation subsets. If the model overfits, training-fold scores will be much better than validation-fold scores, and validation scores often vary widely from fold to fold. If the model underfits, it will perform poorly across all folds, on training and validation data alike. This technique also provides a more reliable estimate of true generalization error, helping you make more informed decisions regarding model selection, hyperparameter tuning, and architecture changes.
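A minimal sketch of the fold rotation, using only the standard library, might look like the following. The helper names `kfold_indices` and `summarize` are assumptions for illustration; the scores you feed to `summarize` would come from training and evaluating your own model on each split.

```python
import random

def kfold_indices(n_samples, k, seed=0):
    """Yield (train_idx, val_idx) pairs; each sample is validated exactly once."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]
    for i in range(k):
        val_idx = folds[i]
        train_idx = [j for f, fold in enumerate(folds) if f != i for j in fold]
        yield train_idx, val_idx

def summarize(train_scores, val_scores):
    """Mean train/val score and the spread of validation scores across folds."""
    mean_train = sum(train_scores) / len(train_scores)
    mean_val = sum(val_scores) / len(val_scores)
    spread = max(val_scores) - min(val_scores)
    return mean_train, mean_val, spread

splits = list(kfold_indices(n_samples=10, k=5))
```

A large difference between the mean training and validation scores, or a large spread across folds, points toward overfitting; uniformly poor scores point toward underfitting.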

Is it possible for a model to be both overfitting and underfitting in different parts of the input space?

Yes, in practice, some parts of your data distribution might be “easier” for the model to learn and effectively memorize, while other parts might be more complex, causing the model to fail to capture those subtle patterns. For instance, if you have a large model with insufficient regularization, it can overfit easy examples and memorize some noise, while still underfitting complex edge cases or minority classes. Diagnosing this scenario often involves analyzing error patterns, confusion matrices, or performance by subgroups of your data. A deeper look can reveal that certain classes or feature subsets are not being learned well, indicating localized underfitting, while other classes are being overly memorized.

Could simpler models ever outperform deeper or more complex models?

Yes. Simpler models can outperform deeper models if the dataset is limited, the simpler model’s bias aligns well with the underlying data, or if heavy regularization is beneficial in a particular domain. In real-world applications, linear or logistic regression can sometimes outperform deep neural networks if the input data is well-structured, tabular, or if you only have a small dataset with no complicated non-linear patterns. Furthermore, simpler models are typically easier to interpret and debug, which can matter significantly in certain regulated industries or high-stakes decisions.

How do you decide between collecting more data vs. adjusting your model to address overfitting?

If it is feasible to collect more data and your model is clearly overfitting, acquiring more training samples often gives substantial improvements. However, in many domains, more data is either expensive or difficult to obtain. In that case, you might employ data augmentation or methods like weight regularization, dropout, or ensemble approaches to mitigate the overfitting, and use cross-validation to check whether each change actually helps.

In a large-scale industrial setting (like those at FANG companies), gathering more data might be more straightforward if you already have robust data pipelines or a large user base, but you still might want to carefully weigh the engineering and labeling costs. If data is extremely challenging to gather (e.g., complex medical scenarios), then you must rely more on model-driven solutions like advanced regularization or semi-supervised and self-supervised methods that can learn from unlabeled data.

How do you systematically tune hyperparameters to avoid overfitting or underfitting?

It often helps to do a systematic search or optimization of hyperparameters. Techniques like random search, grid search, Bayesian optimization, or bandit-based methods (e.g., Hyperband) can help identify a good region of hyperparameter space. You typically define search ranges or distributions for key hyperparameters:

Learning rate and learning rate decay schedule.

Regularization strength (like weight decay or dropout rate).

Number of layers or hidden units in neural networks.

Batch size.

Early stopping patience and scheduling.

By running multiple experiments on separate validation sets or folds, you can identify which hyperparameters reduce overfitting or mitigate underfitting. This approach is standard practice in FANG companies given the computational resources available, but you still need to design your search strategies carefully to avoid extremely long training times.
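As a rough sketch of random search over the hyperparameters listed above, the snippet below samples configurations and keeps the one with the lowest validation loss. The search ranges are illustrative assumptions, and `fake_validation_loss` is a stand-in objective that must be replaced by a function that actually trains and evaluates a model.

```python
import math
import random

def sample_config(rng):
    """Draw one configuration; ranges are illustrative assumptions."""
    return {
        "lr": 10 ** rng.uniform(-5, -1),            # log-uniform in [1e-5, 1e-1]
        "weight_decay": 10 ** rng.uniform(-6, -2),  # log-uniform in [1e-6, 1e-2]
        "dropout": rng.uniform(0.0, 0.5),
        "hidden_units": rng.choice([64, 128, 256, 512]),
        "batch_size": rng.choice([32, 64, 128]),
    }

def random_search(evaluate, n_trials=20, seed=0):
    """Return (best_config, best_val_loss) over n_trials random draws."""
    rng = random.Random(seed)
    trials = [sample_config(rng) for _ in range(n_trials)]
    scored = [(evaluate(cfg), cfg) for cfg in trials]
    best_loss, best_cfg = min(scored, key=lambda pair: pair[0])
    return best_cfg, best_loss

def fake_validation_loss(cfg):
    """Stand-in objective that pretends lr = 1e-3 is ideal (hypothetical)."""
    return abs(math.log10(cfg["lr"]) + 3.0) + cfg["dropout"]

best_cfg, best_loss = random_search(fake_validation_loss)
```

Sampling the learning rate and weight decay on a log scale is a common choice because their useful values span several orders of magnitude.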

Could we use ensemble methods to address overfitting?

Absolutely. Ensemble methods combine multiple models’ predictions to produce a more robust final output. For example, in bagging (bootstrap aggregating), you train multiple models on different bootstrap samples of the training data. Each model overfits slightly differently, but when you average or vote on their predictions, the combined estimator generalizes better.

Similarly, boosting (as in XGBoost) fits successive models to the residual errors of previous models, but it can also overfit if not regularized properly (e.g., controlling tree depth, learning rate, and so forth). Despite that risk, tuned ensemble methods often generalize better than a single complex model.
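The bagging idea described above can be sketched with a deliberately high-variance base learner, a 1-nearest-neighbour regressor, trained on bootstrap resamples of a synthetic dataset. The data-generating function, the number of models, and all helper names are assumptions chosen for illustration.

```python
import math
import random

def make_data(n, rng):
    """Noisy samples of sin(x) on [0, 6] (synthetic, for illustration)."""
    data = []
    for _ in range(n):
        x = rng.uniform(0.0, 6.0)
        data.append((x, math.sin(x) + rng.gauss(0.0, 0.3)))
    return data

def one_nn_predict(train, x):
    """Predict with the label of the nearest training point (high variance)."""
    return min(train, key=lambda point: abs(point[0] - x))[1]

def mse(preds, targets):
    return sum((p - t) ** 2 for p, t in zip(preds, targets)) / len(targets)

rng = random.Random(0)
train, test = make_data(60, rng), make_data(200, rng)
test_x = [x for x, _ in test]
test_y = [y for _, y in test]

# Bagging: fit each base model on a bootstrap resample of the training set.
n_models = 25
all_preds = []
for _ in range(n_models):
    boot = [rng.choice(train) for _ in train]
    all_preds.append([one_nn_predict(boot, x) for x in test_x])

single_mses = [mse(p, test_y) for p in all_preds]
avg_single_mse = sum(single_mses) / n_models
ensemble_preds = [sum(col) / n_models for col in zip(*all_preds)]
ensemble_mse = mse(ensemble_preds, test_y)
print(f"avg single-model MSE: {avg_single_mse:.3f}  ensemble MSE: {ensemble_mse:.3f}")
```

Because squared error is convex, the averaged prediction can never have a higher MSE than the average of the individual models' MSEs; how much lower it gets depends on how decorrelated the individual models' errors are.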

How do you handle an imbalanced dataset in terms of overfitting vs. underfitting?

Imbalanced datasets complicate both overfitting and underfitting. The model might appear to have high accuracy simply by learning the majority class thoroughly (a form of overfitting to the majority class). Yet it underfits the minority classes, failing to learn their characteristics. Monitoring metrics like F1-score, precision, recall, ROC-AUC, or confusion matrices for each class is important.

Techniques like oversampling the minority class, undersampling the majority class, or generating synthetic samples (SMOTE in classical machine learning or advanced data augmentation in deep learning) can help the model see enough examples of each class to reduce underfitting. Setting class weights in the loss function can also direct the model to pay more attention to minority classes.
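Two of the remedies above, class weighting and random oversampling, can be sketched with the standard library alone. The weighting formula n_samples / (n_classes * class_count) is one common inverse-frequency heuristic; the function names and the 90/10 label split are illustrative assumptions.

```python
import random
from collections import Counter

def balanced_class_weights(labels):
    """Weight each class by n_samples / (n_classes * class_count)."""
    counts = Counter(labels)
    n_samples, n_classes = len(labels), len(counts)
    return {c: n_samples / (n_classes * k) for c, k in counts.items()}

def random_oversample(labels, rng):
    """Return sample indices that resample every class up to the majority count."""
    by_class = {}
    for i, y in enumerate(labels):
        by_class.setdefault(y, []).append(i)
    target = max(len(idx) for idx in by_class.values())
    out = []
    for idx in by_class.values():
        out.extend(idx)
        out.extend(rng.choice(idx) for _ in range(target - len(idx)))
    return out

labels = [0] * 90 + [1] * 10                 # made-up imbalanced labels
weights = balanced_class_weights(labels)     # minority class gets the larger weight
resampled = random_oversample(labels, random.Random(0))
```

The weights can be passed to a weighted loss, while the resampled indices can be used to build a balanced training set. Oversampling should be applied to the training split only, so that validation metrics still reflect the real class distribution.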

When should we use a simpler approach like regularization vs. more advanced approaches to fix overfitting?

At many top-tier technology companies, adopting advanced solutions is guided by cost-benefit trade-offs. If adding a small amount of dropout or weight decay drastically reduces overfitting, it may be more efficient than building a massive data pipeline to collect millions of additional samples. On the other hand, if your product and user base naturally produce more data, you might incorporate that data to improve your model’s performance and reduce overfitting simultaneously.

How do you ensure the model is not underfitting after you apply strong regularization for overfitting?

Continuously monitor training loss, validation loss, and relevant performance metrics. If after introducing stronger regularization you find that both the training loss and validation loss are higher than before, or that the model is not converging well, this can indicate you have gone too far in fighting overfitting and started underfitting instead. Therefore, a balance is key, typically found through hyperparameter tuning. Keeping track of various metrics over multiple training runs helps you identify that sweet spot.

How does a validation curve help in diagnosing overfitting and underfitting?

A validation curve plots the training and validation scores (like accuracy or F1-score) as a function of a model hyperparameter (e.g., regularization strength, model complexity). When the two curves converge to a low score, you have underfitting. When there is a gap between high training score and low validation score, you have overfitting. The optimal hyperparameter is often somewhere in the region where the two curves are closest together while still yielding high validation score.
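A validation curve can be sketched without any libraries using k-nearest-neighbour regression on synthetic data, where a smaller k means a more flexible model. Note that this sketch tracks errors (lower is better) rather than scores, and that the data, the candidate values of k, and the helper names are all assumptions for illustration.

```python
import math
import random

def knn_predict(train, x, k):
    """Average the targets of the k nearest training points (1-D inputs)."""
    nearest = sorted(train, key=lambda p: abs(p[0] - x))[:k]
    return sum(y for _, y in nearest) / k

def knn_mse(train, data, k):
    return sum((knn_predict(train, x, k) - y) ** 2 for x, y in data) / len(data)

rng = random.Random(0)

def sample(n):
    """Noisy samples of sin(x) on [0, 6] (synthetic)."""
    xs = [rng.uniform(0.0, 6.0) for _ in range(n)]
    return [(x, math.sin(x) + rng.gauss(0.0, 0.3)) for x in xs]

train, val = sample(40), sample(40)
ks = [1, 3, 5, 10, 20, 40]
# For each k: (training error, validation error).
curve = {k: (knn_mse(train, train, k), knn_mse(train, val, k)) for k in ks}
best_k = min(ks, key=lambda k: curve[k][1])
for k in ks:
    print(f"k={k:2d}  train MSE={curve[k][0]:.3f}  val MSE={curve[k][1]:.3f}")
```

At k = 1 the training error is zero because every training point is its own nearest neighbour, yet the validation error is not, which is the overfitting end of the curve. At k = 40 the model predicts the training mean everywhere, which is the underfitting end.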

Can transfer learning help with underfitting or overfitting?

Transfer learning can help mitigate underfitting when you have insufficient data to learn from scratch. By starting from a pretrained model that has already learned generalizable features (e.g., in computer vision or NLP), you can fine-tune on your dataset with fewer samples. However, if you overfit during fine-tuning on a small dataset, you can apply standard techniques like regularization or freezing certain layers. Transfer learning also helps in scenarios where data collection is expensive, effectively reducing underfitting issues by providing a strong initial feature extractor.

Could using a validation set that is not from the same distribution as the training set create confusion about overfitting vs. underfitting?

Yes. If your validation set does not reflect the distribution of your training set or your intended real-world data distribution, your evaluation might be misleading. The model might appear to underfit or overfit when, in reality, the data shift is to blame. This is known as domain shift or dataset shift. It is crucial to ensure that your validation (and test) data is drawn from the same distribution as your training data or from a distribution that matches your real use case to accurately diagnose overfitting vs. underfitting.

How do you systematically keep track of these issues at scale?

In large companies or in production-level systems, using a robust experiment-tracking system is key. You record:

Model architectures, hyperparameters, random seeds.

Training curves (loss, accuracy) across epochs.

Validation and test results.

Experiment metadata such as dataset version, augmentation strategies, run IDs.

This allows you to quickly compare how different models handle overfitting or underfitting. Automated dashboards, advanced data versioning systems, and collaboration tools further streamline these processes. You can systematically revert to the best-performing model or examine how a particular hyperparameter influenced performance and whether it helped or hurt with respect to overfitting or underfitting.

What happens if the model overfits even while achieving only mediocre training accuracy?

This can occur when the data is noisy or extremely challenging, so the maximum achievable training accuracy is not high. Despite that, the model can still “overfit” the limited features it can glean, failing to generalize beyond them. For instance, in a dataset where certain classes are inherently ambiguous or labels are partially corrupted, the model might overly specialize on a few patterns without broad generalization.

In these scenarios, you might consider data cleaning, label correction, or advanced outlier detection. Robust loss functions (e.g., those that discount outliers) can also help the model ignore noisy portions of the dataset, improving its ability to generalize.

Why does overfitting remain a concern in deep learning when large datasets are available?

Overfitting is not entirely eliminated by having a large dataset, because neural networks’ capacity can grow even faster. Models with billions of parameters can still memorize patterns if they are not carefully regularized or if the distribution is highly complex. Additionally, real-world data is seldom uniformly distributed; there could be portions of the input space that are underrepresented, making it easier for the model to overfit on those rare segments.

Modern training strategies (batch normalization, dropout, careful architecture design, large-scale data augmentation, and specialized regularization approaches) can mitigate the risk. Still, overfitting remains a fundamental issue that practitioners must always watch for.

How do you verify that a remedy for overfitting (like regularization) actually helped?

You compare metrics before and after applying the remedy. If the difference between training performance and validation performance becomes smaller and validation metrics improve, it is likely that the remedy helped. You can also examine learning curves over multiple epochs: the point where validation loss starts to diverge from training loss might shift later (indicating less overfitting), or the gap between training and validation losses might be reduced.

How to handle a model that underfits on particular subgroups (such as certain classes in a classification task) but overfits on others?

A solution is to investigate model capacity with respect to each subgroup. Sometimes you can adopt cost-sensitive training or subgroup-specific data augmentation. Another approach is to re-sample the training data so that underrepresented subgroups appear more frequently. If overfitting persists for the more common classes, you might apply targeted regularization or consider multi-task learning approaches. The model can incorporate knowledge from multiple relevant tasks, balancing representation across different subpopulations of data.

Could an ensemble of simpler models help with underfitting?

Yes, but the type of ensemble matters. Boosting is the classic example: methods such as AdaBoost or gradient boosting add many weak learners (e.g., shallow decision trees) sequentially, each one correcting the errors of the ensemble so far. This reduces bias, so the combined model can capture more complex patterns than any single base learner. Bagging, as in Random Forests, mainly reduces variance by averaging many trees, so it helps most when the individual trees are deep and overfit; averaging trees that all underfit in the same way does little to fix the underlying bias.

How can interpretability techniques help identify overfitting and underfitting?

Examining feature importance or visualizing attention maps (in neural networks) may show if the model is paying attention to spurious patterns or noise. For instance, if a model training on image data focuses on artifacts in the corner of images (like watermarks or label text) rather than the subject, you might suspect overfitting to dataset-specific quirks. If the model’s feature importance is diffuse and not focusing on relevant factors at all, it could indicate underfitting or that the model cannot learn the salient patterns.

Various interpretability tools (like saliency maps, Grad-CAM in computer vision, or LIME/SHAP for tabular data) can offer insights into whether the model’s learned representations are consistent with known meaningful patterns.

Could cross-entropy loss vs. mean squared error affect overfitting or underfitting?

Choosing the right loss function is critical. For classification tasks, cross-entropy is usually preferred because it aligns better with probabilistic interpretation and encourages the model to produce accurate class probabilities. Using mean squared error for classification can sometimes lead to slower convergence or local minima issues, which might manifest as underfitting.

However, even with a theoretically “correct” loss function like cross-entropy, you can still overfit if your model is large and your data is insufficient or lacks diversity. Always match your loss function to your problem type and check for overfitting or underfitting patterns in the standard ways.

Could a high variance in model performance from run to run indicate overfitting?

Large variance in results across multiple runs with different random seeds can indicate an unstable training process. This might be due to overfitting random noise in the data or high sensitivity to weight initialization and data ordering. Tools to address this include:

Stabilizing the training process by setting consistent seeds, using well-tuned learning rates, or employing advanced optimization algorithms.

Applying stronger regularization or data augmentation to reduce the chance that the model latches onto random artifacts.

Averaging multiple runs, or using an ensemble of models trained with different seeds, to get a more robust final predictor.

How do you deal with catastrophic forgetting in continual learning scenarios?

Catastrophic forgetting refers to the model overfitting to new data and effectively underfitting or “forgetting” prior tasks. Techniques like elastic weight consolidation or rehearsal methods (storing and replaying a subset of old data) help the model maintain performance on previous tasks while learning new ones. This is a specialized scenario, but it can be viewed as a dynamic form of balancing overfitting and underfitting across different time windows or tasks.

How do you ensure that your remedy for underfitting (like adding more capacity) does not lead to catastrophic overfitting?

The best approach is incremental. Increase capacity gradually, monitor your metrics, and regularly apply standard forms of regularization or normalization. If you notice your validation performance dropping, you can rein in capacity or introduce stronger regularization. Employing robust hyperparameter tuning methods and cross-validation also helps find the sweet spot.

How might you address overfitting in the context of large language models?

Even large language models can overfit if your fine-tuning dataset is small or domain-specific. Common techniques include:

Using smaller learning rates and shorter fine-tuning schedules, so the pretrained weights do not drastically move away from their general-purpose initialization.

Applying standard dropout, weight decay, or layer-wise learning rate decay.

Using prompt-based methods, which might not require updating the entire model, thereby reducing the chance of memorizing the fine-tuning set.

Monitoring perplexity or validation error, and stopping early if it begins to rise.
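Of the techniques listed above, layer-wise learning rate decay is perhaps the least self-explanatory, so here is a minimal sketch of how the per-layer rates could be computed. The function name, the base rate, and the decay factor are illustrative assumptions; in practice each rate would be attached to the corresponding layer's parameter group in your optimizer.

```python
def layerwise_learning_rates(num_layers, base_lr=2e-5, decay=0.9):
    """Top layer gets base_lr; each layer below is scaled by another factor of decay."""
    return [base_lr * decay ** (num_layers - 1 - i) for i in range(num_layers)]

# Index 0 is the layer closest to the input, index -1 the layer closest to the output.
lrs = layerwise_learning_rates(num_layers=4, base_lr=1e-4, decay=0.5)
for i, lr in enumerate(lrs):
    print(f"layer {i}: lr={lr:.2e}")
```

Lower layers, which tend to hold more general features, move less during fine-tuning, while the task-specific top layers adapt more freely.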

Below are additional follow-up questions

How does data preprocessing impact overfitting or underfitting, especially if scaling or normalization is done improperly?

Data preprocessing can significantly influence whether a model overfits or underfits:

Inconsistent Scaling. If certain features are scaled differently between the training and validation sets, then the model might learn spurious relationships in the training phase that do not generalize. This typically appears as an overfitting symptom, because the model is essentially memorizing scale-specific nuances rather than generalizable patterns. In a real-world edge case, a batch of training data might have a certain mean and variance, while the validation data has a different distribution, making the model's learned scaling incomplete or misleading.

Over-Aggressive Normalization. When applying standardization (e.g., subtract mean, divide by standard deviation) or min-max scaling, one could inadvertently erase important distinctions in the feature distribution. If a feature that legitimately varies widely gets compressed, the model might underfit because it's losing essential variation.

Feature Leakage Through Preprocessing. If you use knowledge from the entire dataset (training + validation/test) when normalizing or scaling, you risk data leakage, potentially leading to overfitting. The model indirectly “sees” information about the validation distribution, which inflates performance metrics during development. A subtle example: computing the mean and standard deviation using all available data (including validation) for standardization can give a suspiciously good validation score that won’t generalize in production.

Incorrect Handling of Missing Values. If you impute missing values without carefully considering the data distribution, you can inadvertently distort patterns, causing the model to underfit. Alternatively, if you overcomplicate the imputation (fitting an overly complex imputation model), you might embed noise that contributes to overfitting.

In practice, you should isolate each preprocessing step to the training set (fitting scalers and imputers on training data only). Then apply the exact same transformations (parameters) to the validation/test sets. Always keep track of whether transformations might eliminate signal or inadvertently create spurious correlations.
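The fit-on-train, apply-everywhere rule can be sketched in a few lines. This is a minimal, dependency-free illustration; the helper names `fit_standardizer` and `apply_standardizer` and the toy values are assumptions made for this example, not part of any library.

```python
from statistics import mean, pstdev

def fit_standardizer(train_values):
    """Estimate mean and standard deviation from the training split only."""
    mu = mean(train_values)
    sigma = pstdev(train_values)
    if sigma == 0:
        sigma = 1.0  # constant feature: avoid division by zero
    return mu, sigma

def apply_standardizer(values, mu, sigma):
    """Apply previously fitted parameters; never refit on validation/test data."""
    return [(v - mu) / sigma for v in values]

# Toy data (hypothetical values for illustration).
train = [10.0, 12.0, 14.0, 16.0, 18.0]
valid = [11.0, 20.0]

mu, sigma = fit_standardizer(train)                    # statistics come from train only
train_scaled = apply_standardizer(train, mu, sigma)
valid_scaled = apply_standardizer(valid, mu, sigma)    # same parameters reused
```

Computing `mu` and `sigma` from `train + valid` instead would be the leakage pattern described above, because the validation values would then influence the transformation the model is trained on.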

Could poor model initialization lead to overfitting or underfitting, and how would we detect and address it?

Connection Between Initialization and Overfitting If your initialization randomly sets a subset of neurons to large weights, early training might find “easy” ways to drive training loss down on specific patterns. This can cause the model to latch onto idiosyncrasies (noise) of the training data. While the primary reason for overfitting is generally high capacity, insufficient data, or insufficient regularization, pathological initialization can exacerbate the problem by funneling optimization paths into local minima that reflect memorized noise.

Connection Between Initialization and Underfitting An initialization that is too small can lead to vanishing gradients, and an all-zero initialization leaves the units in a layer symmetric, so they receive identical updates and cannot learn distinct features. Either way the model is prevented from learning effectively. You might see consistently high training loss and minimal movement in the parameters, a classic sign of underfitting.

Detection You can detect initialization issues if:

Multiple training runs with different random seeds vary wildly in both training and validation performance (for overfitting or underfitting).

The training loss stagnates early, or the model’s weights barely change after many iterations (signaling underfitting).

The initial epochs show highly erratic validation curves.

Remedies

Use well-established initialization schemes (e.g., Xavier/Glorot, Kaiming/He).

For deep networks, consider strategies like batch normalization to stabilize early layers.

Perform multiple runs with different seeds. If some seeds yield decent results while others do not, it’s likely an initialization stability issue.

Possibly adopt a “warm start” from a pretrained model, which often yields more stable performance and reduces the risk of pathological initialization.
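As a rough illustration of the initialization schemes named above, the following sketch computes the standard scale factors for Glorot/Xavier uniform and He/Kaiming normal initialization. The function names are hypothetical, and real frameworks ship their own initializers, so treat this only as a way to see how the scale depends on layer width.

```python
import math
import random

def glorot_uniform_limit(fan_in, fan_out):
    """Bound a for sampling weights from U(-a, a) under Glorot/Xavier uniform init."""
    return math.sqrt(6.0 / (fan_in + fan_out))

def he_normal_std(fan_in):
    """Standard deviation for He/Kaiming normal init (commonly paired with ReLU)."""
    return math.sqrt(2.0 / fan_in)

def init_weights_he(fan_in, fan_out, seed=0):
    """Return a fan_out x fan_in weight matrix (list of rows) drawn with He normal scale."""
    rng = random.Random(seed)  # fixed seed so runs are reproducible
    std = he_normal_std(fan_in)
    return [[rng.gauss(0.0, std) for _ in range(fan_in)] for _ in range(fan_out)]

weights = init_weights_he(fan_in=512, fan_out=4)
```

Both scales shrink as the layer gets wider, which keeps the variance of activations roughly stable from layer to layer instead of letting it explode or vanish.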

How do data imbalance and rare classes lead to overfitting or underfitting in multiclass classification?

Overfitting to the Majority Class If most training examples belong to one or two dominant classes, the model may “memorize” those classes well but fail to generalize to underrepresented classes. This can appear as overall high training accuracy but poor minority-class recall on the validation set, an overfitting scenario specifically tied to class imbalance.

Underfitting Minority Classes The model may also fail to even learn critical decision boundaries for rare classes, leading to underfitting in those classes. On the training set, it might achieve only moderate accuracy overall because it simply predicts the majority classes all the time.

Detection

Inspect confusion matrices. If minority classes consistently get misclassified, the model is likely ignoring them.

Monitor metrics that are sensitive to class imbalance, like F1-score per class, macro-averaged F1, or balanced accuracy.

Observe distribution of predictions: if the model rarely predicts minority classes, you likely have underfitting for those classes and overfitting on the majority ones.
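To make the imbalance-sensitive metrics concrete, here is a small sketch that computes per-class recall and balanced accuracy (the mean of per-class recalls) without external libraries. The function names and the toy labels are assumptions for illustration.

```python
from collections import Counter

def per_class_recall(y_true, y_pred):
    """Fraction of each true class that the model predicted correctly."""
    support = Counter(y_true)
    hits = Counter(t for t, p in zip(y_true, y_pred) if t == p)
    return {c: hits[c] / support[c] for c in support}

def balanced_accuracy(y_true, y_pred):
    """Unweighted mean of per-class recalls, so rare classes count equally."""
    recalls = per_class_recall(y_true, y_pred)
    return sum(recalls.values()) / len(recalls)

# Toy case: a model that always predicts the majority class "a".
y_true = ["a"] * 8 + ["b"] * 2
y_pred = ["a"] * 10

plain_accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
recalls = per_class_recall(y_true, y_pred)
bal_acc = balanced_accuracy(y_true, y_pred)
```

In this toy case plain accuracy is 0.8 while balanced accuracy is 0.5 and recall for class "b" is 0.0, which is exactly the gap that aggregate accuracy hides.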

Mitigation Strategies

Resampling: Oversample minority classes or undersample majority classes.

Synthetic Data Generation: Use techniques like SMOTE (in classical ML) or advanced data augmentation to boost minority samples.

Class Weights: Assign higher loss or penalty for errors on minority classes.

Focal Loss: In deep learning, focal loss can focus training on hard, misclassified examples.

Pitfalls arise if you oversample to the point that repeated minority examples cause the model to simply memorize them. This can inadvertently lead to overfitting again, so balancing the approach is crucial.
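The class-weighting strategy can be sketched as follows. The weights use the common inverse-frequency heuristic n_samples / (n_classes * class_count); the function names and the tiny example are assumptions for illustration rather than a prescribed recipe.

```python
import math
from collections import Counter

def balanced_class_weights(labels):
    """Inverse-frequency weights: n_samples / (n_classes * count_of_class)."""
    counts = Counter(labels)
    n, k = len(labels), len(counts)
    return {c: n / (k * cnt) for c, cnt in counts.items()}

def weighted_log_loss(true_class_probs, labels, weights):
    """Weighted negative log-likelihood.

    true_class_probs[i] is the probability the model assigned to the
    correct class of sample i; rare classes contribute more per sample.
    """
    total = sum(weights[y] * -math.log(p) for p, y in zip(true_class_probs, labels))
    return total / sum(weights[y] for y in labels)

labels = [0] * 8 + [1] * 2
weights = balanced_class_weights(labels)

# A model that is confident on the majority class but poor on the minority class.
probs = [0.9] * 8 + [0.2] * 2
unweighted = sum(-math.log(p) for p in probs) / len(probs)
weighted = weighted_log_loss(probs, labels, weights)
```

With these weights each class contributes the same total weight to the loss, so the poor minority-class predictions raise the weighted loss well above the unweighted one.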

How do you use monitoring and alerting in production to detect emerging overfitting or underfitting after deployment?

In production, data distributions can drift and user behavior can change over time. A model trained on historical data might then behave as if it were overfit to the old distribution, or underfit relative to the new inputs.

Key Metrics to Monitor

Prediction Distribution: Check the distribution of predicted classes or regression outputs over time. If it skews unexpectedly, the model might be focusing on a narrow subset of outcomes (sign of overfitting).

Performance on Rolling Windows: Maintain a small dataset of recent ground-truth-labeled samples (if feasible). Evaluate the model on this rolling set. If performance decays, that could suggest underfitting on new patterns or overfitting to the old distribution.

Error Rates or Confidence: Track how confident the model is. Overly high confidence with a rising error rate might indicate overfitting.

Early Warning Systems

Statistical Checks: Compare real-time input feature distributions to historical training distributions. A large shift can signal that the model may begin to underfit new patterns.

Time-Based Retraining or Online Learning: Periodically retrain or fine-tune the model with fresh data to mitigate performance drift.

Potential Pitfalls

If you rely solely on aggregated metrics (e.g., global accuracy) in a context where the data changes distribution, you might miss subtle signs of overfitting or underfitting on niche subsets.

Overcorrecting by retraining too often with a small window of data might cause catastrophic forgetting of older but still relevant patterns.
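One way to implement the statistical check on input features is a two-sample Kolmogorov-Smirnov statistic, the largest gap between the empirical CDFs of a training-time sample and a recent production sample. The sketch below is a plain-Python version; the alert threshold `DRIFT_THRESHOLD` is an arbitrary assumption that would need tuning per feature, and other drift measures would serve equally well.

```python
from bisect import bisect_right

def ks_statistic(reference, current):
    """Two-sample KS statistic: maximum gap between the two empirical CDFs."""
    ref, cur = sorted(reference), sorted(current)
    gap = 0.0
    for x in ref + cur:  # the maximum gap is attained at an observed value
        f_ref = bisect_right(ref, x) / len(ref)
        f_cur = bisect_right(cur, x) / len(cur)
        gap = max(gap, abs(f_ref - f_cur))
    return gap

DRIFT_THRESHOLD = 0.3  # hypothetical alert level, not a recommended default

training_feature = [1.0, 1.2, 0.9, 1.1, 1.0, 0.8, 1.3, 1.05]
recent_feature = [1.9, 2.1, 2.0, 1.8, 2.2, 2.05, 1.95, 2.15]

drift_score = ks_statistic(training_feature, recent_feature)
drift_alert = drift_score > DRIFT_THRESHOLD
```

A score near 0 means the two samples look alike; a score near 1 means they barely overlap, as in this toy example where the recent values have shifted upward.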

How do you ensure cross-validation splits do not inadvertently cause overfitting or underfitting in time series scenarios?

For time series data, the typical random shuffle approach in cross-validation can break the temporal dependencies and leak future information into training sets, leading to artificially optimistic performance (a form of overfitting).

Proper Temporal Splits

Walk-Forward Validation (Rolling Window): Train on data up to a certain time, validate on subsequent time slices, then move forward in increments.

Blocked Time Series Folds: Ensure each fold respects chronological order, preventing data leakage from the future.

Underfitting Possibility If the time series has strong non-stationary behavior, an overly simplistic approach (like not modeling trends or seasonality) can lead to underfitting. Even with correct splitting, you might observe consistently poor performance, indicating the model isn’t sophisticated enough for the temporal patterns.

Edge Cases

Seasonal or cyclical data: If your folds do not capture entire seasonal cycles, you can inadvertently cause the model to underfit crucial seasonal effects.

Sudden regime change: The model might appear well-fit on historical data but drastically underfit the data following a major change in conditions (e.g., external events altering user behavior).

A thorough approach carefully respects time ordering in cross-validation, possibly augmenting with exogenous variables or seasonality modeling to reduce underfitting.
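A walk-forward split can be sketched as a small generator. This version uses an expanding training window (all data before the test slice); the function name and parameters are assumptions for illustration, and a fixed-length rolling window would only change the start of the training range.

```python
def walk_forward_splits(n_samples, initial_train, test_size):
    """Yield (train_indices, test_indices) where training always precedes testing."""
    start = initial_train
    while start + test_size <= n_samples:
        train_idx = list(range(0, start))                 # everything before the test slice
        test_idx = list(range(start, start + test_size))  # the next chronological block
        yield train_idx, test_idx
        start += test_size

splits = list(walk_forward_splits(n_samples=10, initial_train=4, test_size=2))
for train_idx, test_idx in splits:
    print(f"train={train_idx[0]}..{train_idx[-1]}  test={test_idx}")
```

Because every test index is larger than every training index in the same split, no future observation can leak into training. For seasonal data, `initial_train` and `test_size` would need to span whole cycles.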

How might domain knowledge help in diagnosing if issues are due to overfitting vs. underfitting?

Identifying Overfitting Clues Domain experts can detect if the model is exploiting quirks rather than legitimate patterns. For instance, if a medical imaging model is focusing on text artifacts or non-diagnostic corners of X-ray images, a clinician might quickly recognize that the learned patterns are noise-driven.

Identifying Underfitting Causes Experts familiar with the domain can reveal if the model is missing certain key factors or not using relevant signals. For example, in financial forecasting, domain experts might note that the model ignores macroeconomic indicators and thus underfits the true dynamics.

Practical Pitfalls

Over-reliance on domain knowledge can lead to ignoring new or unexpected patterns that a more flexible model might capture.

If domain experts have partial or conflicting viewpoints, the model might be declared as “overfitting” or “underfitting” incorrectly.

Hence, domain insight should complement the standard quantitative checks (like validation curves, cross-validation, etc.), giving you an extra layer of interpretability and confidence about the root cause of performance issues.

What is the relationship between feature selection and overfitting/underfitting, and how can the choice of features exacerbate either problem?

Overfitting from Spurious Features If you include too many irrelevant or highly correlated features, your model might latch onto them. This is especially problematic with high-dimensional datasets (e.g., text with large vocabularies, genomic data). The model can memorize random correlations, overfitting the training set but failing on new data.

Underfitting from Missing Key Features If crucial predictors are omitted or incorrectly engineered, the model might remain incapable of capturing key relationships, manifesting as underfitting (high bias, low training accuracy).

Detecting Feature Problems

Pitfalls

Over-reliance on Automated Feature Selection: Automated methods can prune features that appear unhelpful in the training set but might generalize well if the training distribution is not fully representative.

Computational Expense: Thorough feature selection can be costly, especially with large feature spaces, and naive approaches (like exhaustive search) might not be feasible in production environments.

How can specialized loss functions and custom metrics reduce the risk of overfitting or underfitting?

Tailored Loss Functions A standard loss (e.g., cross-entropy for classification, MSE for regression) might not fully capture the real-world cost function. If the model is optimizing a proxy objective that only partially aligns with actual business or real-world objectives, it can overfit on the proxy signals while underfitting the real distribution of interest. A custom loss that penalizes certain types of errors more severely can encourage the model to focus on truly meaningful patterns.

Custom Metrics and Validation Strategies If your ultimate metric is, for example, top-k accuracy, but you train purely on top-1 classification error, the model might remain suboptimal (possible underfitting for your actual goal). Conversely, you might push too hard to maximize a single metric that is easier to overfit. By balancing multiple relevant metrics or using a composite metric (like a weighted sum of multiple objectives), you reduce the chance of focusing on superficial features.

Edge Cases

Highly skewed cost distribution: For instance, in fraud detection, false negatives might be extremely costly. A specialized loss (or a custom weighting scheme) can direct the model to reduce false negatives, possibly at the expense of slightly higher false positives. Without it, the model might “underfit” critical signals or “overfit” to rare spurious indicators.

Exploding gradients with custom losses: If the custom loss is not carefully bounded or scaled, it can introduce extreme gradients that cause training instability or outright divergence (leading to underfitting if the optimizer never converges).

How does the presence of outliers or noisy labels change the overfitting vs. underfitting analysis?

Overfitting to Outliers A flexible model might fit extreme points exactly, contorting the decision boundary or regression function to minimize training loss. This results in overfitting if those outliers do not reflect genuine phenomena.

Underfitting due to Noise In the presence of many corrupted labels, the model might struggle to find consistent patterns, effectively “averaging out” conflicting signals. This can degrade performance across the board, making it look like underfitting.

Detection

High variance in training examples: If you suspect many outliers, try robust metrics like median absolute deviation.

Compare performance with and without potential outliers: If removing suspicious points drastically changes your model performance, you have an outlier issue.

Use specialized metrics: For instance, robust training loss or a robust validation scheme can highlight the effect of outliers.

Remedies

Robust Loss Functions: Huber loss (for regression) can mitigate the impact of outliers, and label smoothing (for classification) can soften the effect of noisy labels.

Data Cleaning: Identify questionable data points or labels with domain experts.

Outlier-specific Approaches: If outliers are real but extremely important (e.g., fraudulent transactions), the remedy might be a specialized model focusing on that minority scenario.
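To see why a robust loss limits the pull of outliers, compare the Huber loss with the squared loss on a small and a large residual. This is a minimal sketch; `delta=1.0` is an assumed threshold, not a tuned value.

```python
def squared_loss(residual):
    """Half squared error; grows quadratically with the residual."""
    return 0.5 * residual * residual

def huber_loss(residual, delta=1.0):
    """Quadratic for |residual| <= delta, linear beyond it."""
    a = abs(residual)
    if a <= delta:
        return 0.5 * a * a
    return delta * (a - 0.5 * delta)

for r in (0.5, 3.0, 30.0):
    print(f"residual={r:5.1f}  squared={squared_loss(r):7.3f}  huber={huber_loss(r):7.3f}")
```

For small residuals the two losses agree, but for an extreme residual the Huber loss grows only linearly, so a single outlier contributes far less to the gradient than it would under squared error.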

How can interpretability methods (like SHAP or LIME) specifically guide you to see if the model is overfitting or underfitting?

Signs of Overfitting

Erratic Feature Attribution: If small changes in the input lead to wildly different attributions, or certain features that should have no impact show high importance, the model might be memorizing random noise.

Unstable Explanations Across Similar Samples: For nearly identical data points, interpretability tools might show drastically different feature importance. This is another sign that the model has a fragile, overfit decision boundary.

Signs of Underfitting

Uniformly Low Feature Importance: If interpretability shows that no feature strongly influences the prediction, this might indicate the model never “latched onto” relevant relationships.

High Reliance on a Single Feature: The model might be ignoring other valuable signals, leading to high bias and underfitting.

Practical Pitfalls

Complex Models: LIME and SHAP approximations themselves can be misleading if the underlying model is extremely deep or has interactions that the method fails to capture. You might incorrectly attribute certain behaviors to overfitting or underfitting.

Local vs. Global Interpretations: Overfitting or underfitting might appear in certain local regions of the feature space. SHAP or LIME can highlight local anomalies, but you must also examine global patterns to form a holistic conclusion.

What is the role of batch size in causing overfitting or underfitting in deep neural networks?

Large Batch Sizes

Potential Overfitting: Large batches can converge to “sharp minima” if not accompanied by proper regularization or learning rate adjustments. Sometimes, these minima overfit the training data.

Potential Underfitting: Large batches mean fewer parameter updates per epoch. If you keep the learning rate small rather than adjusting it for the larger batch, the model makes little progress per epoch and might fail to adequately learn complex patterns, especially early in training.

Small Batch Sizes

Noisy Gradient Estimates: A smaller batch size typically provides more stochastic gradient signals, which can help escape local minima but can also hamper stable convergence if too small.

Effect on Overfitting: In some cases, small batches act like a form of regularization, potentially reducing overfitting.

Potential for Underfitting: If the batch size is extremely small, training might be chaotic and never effectively converge, resulting in persistent underfitting.

Edge Cases

Memory Constraints: Practitioners might choose batch size based on GPU memory rather than model performance considerations, inadvertently harming the final model quality.

Batch Normalization: Very small batch sizes can degrade the reliability of batch statistics. If BN layers get unreliable estimates, you could see underfitting.

Finding the right balance often involves tuning both batch size and learning rate in tandem, watching for signs of diverging training or plateauing validation metrics.

How can you detect if your regularization is too strong, leading to underfitting, even though your model has high capacity?

Symptom: Persistent High Training Loss If you see that training loss remains high or barely decreases, it often indicates underfitting. Even a large-capacity model won’t be used fully if the regularization is overpowering. Weight decay coefficients might be so high that weights cannot grow to represent necessary patterns.

Testing

Reduce the regularization strength step by step and observe changes in training vs. validation performance. If training performance jumps quickly but validation performance remains stable or improves, it indicates the original setting was too harsh.

Inspect the magnitude of learned weights. If the entire parameter set remains near zero or shows artificially uniform distributions, that’s a red flag for excessive regularization.
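The step-by-step reduction test can be illustrated on a toy one-dimensional ridge regression, where the slope has a closed form. The data and the grid of penalty values are assumptions chosen so the effect is easy to see; the true slope here is exactly 2.

```python
def ridge_slope(xs, ys, lam):
    """Closed-form slope minimizing sum((w*x - y)^2) + lam * w^2 (no intercept)."""
    return sum(x * y for x, y in zip(xs, ys)) / (sum(x * x for x in xs) + lam)

def mse(xs, ys, w):
    """Mean squared error of the model y = w * x."""
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]  # generated by y = 2x, so the ideal slope is 2

results = []
for lam in (1000.0, 100.0, 10.0, 0.0):  # progressively weaker regularization
    w = ridge_slope(xs, ys, lam)
    results.append((lam, w, mse(xs, ys, w)))
    print(f"lambda={lam:7.1f}  weight={w:.3f}  train_mse={mse(xs, ys, w):.3f}")
```

With a very large penalty the weight is pinned near zero and the training error stays high even though the model class can represent the data perfectly. As the penalty is relaxed, the weight grows toward 2 and the training error falls, which is the signature of regularization that was too strong.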

Edge Cases

Can ensemble stacking or blending inadvertently lead to overfitting, and what best practices prevent that?

Why Ensemble Methods Can Overfit

Stacking: If the meta-learner is trained on predictions that the base models made for their own training data, those predictions are optimistically biased, and the meta-learner can overfit by memorizing which base model performs best on particular training samples.

Blending: If the blending set is too small or not representative, the aggregator might learn spurious weighting strategies that look good on the blend set but fail on unseen data.

Mitigations

Proper Data Splits: Train the base models on one subset, then generate predictions on a holdout set. Use that holdout set’s predictions as input features for the meta-learner. A second-level validation ensures you’re not reusing the same data for training base models and the meta-learner.
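A common variant of the holdout idea is to build the meta-learner's inputs from out-of-fold predictions, so every training sample gets a prediction from a base model that never saw it. The sketch below is an assumption-laden illustration: `fit` and `predict` are hypothetical callables, the base model is a trivial mean predictor, and folds are contiguous (shuffle first for i.i.d. data).

```python
def out_of_fold_predictions(fit, predict, X, y, n_folds):
    """Return one prediction per sample, each made by a model not trained on it."""
    n = len(X)
    oof = [None] * n
    fold_size = n // n_folds
    for k in range(n_folds):
        lo = k * fold_size
        hi = n if k == n_folds - 1 else lo + fold_size  # last fold takes the remainder
        train_idx = [i for i in range(n) if i < lo or i >= hi]
        model = fit([X[i] for i in train_idx], [y[i] for i in train_idx])
        for i in range(lo, hi):
            oof[i] = predict(model, X[i])  # sample i was held out of this model
    return oof

# Hypothetical base model: always predicts the mean of its training targets.
def fit_mean(X_train, y_train):
    return sum(y_train) / len(y_train)

def predict_mean(model, x):
    return model

X = [0, 1, 2, 3]
y = [1.0, 2.0, 3.0, 4.0]
meta_features = out_of_fold_predictions(fit_mean, predict_mean, X, y, n_folds=2)
```

The resulting `meta_features` column can be used to train the meta-learner, since none of its entries were produced by a model that saw the corresponding target.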

Edge Cases If the base models are too similar or the dataset is too small, you might not gain any real benefit from ensembling, and you could overfit by complicating the pipeline. On the flip side, if the base models are extremely diverse, the meta-learner might “over-trust” certain outlier models that appear good on the training set but not in general.

In reinforcement learning (RL), how might overfitting or underfitting manifest differently than in supervised learning?

Overfitting in RL

Overfitting to an Environment: The agent memorizes a particular layout or scenario. In tasks like a grid-world or a specific set of game levels, the policy might exploit known patterns that do not generalize to unseen configurations.

Exploration vs. Exploitation Imbalance: If the agent does not explore sufficiently, it might fixate on an early suboptimal strategy that happens to yield decent rewards in the training environment. This can look like it has “overfit” to a local optimum.

Underfitting in RL

Sparse Rewards: If the environment rarely provides positive feedback, the policy network might fail to learn meaningful actions, leading to persistent underfitting where the agent never converges to a competent policy.

Poor Function Approximation: The function approximator (e.g., a shallow neural network) may lack the capacity to represent complex state-action policies, especially in high-dimensional or partially observable environments.

Detection and Remedies

Overfitting: Evaluate the policy on new levels or random seeds. If performance drops significantly, the agent is not generalizing. Techniques like domain randomization or training across multiple levels can reduce environment-specific overfitting.

Underfitting: Increase policy network complexity, refine reward shaping, or incorporate better exploration strategies (like curiosity-driven exploration) so the agent can learn from sparse signals.

Offline RL: Overfitting can also occur when the dataset of experience is limited or not diverse. The policy might memorize patterns in that offline dataset but underperform in a real-time environment with different states.

How does active learning help mitigate overfitting or underfitting in scenarios with limited labeled data?

Active Learning for Overfitting

Reducing Over-Specialization: By actively selecting the most informative and uncertain samples to label, you expand the training data in a targeted way, thereby reducing the chance of overfitting to an unrepresentative dataset.

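Diversity in Queries: Uncertainty-based strategies such as margin sampling or uncertainty sampling pick the points the model is least sure about, but on their own they can concentrate queries in one region. Combining them with a diversity criterion helps the labeled set cover a broad region of the input space, limiting the model’s ability to memorize narrow subsets.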

Active Learning for Underfitting

Focusing on Complex Regions: If the model is underfitting because it lacks specific types of examples, active learning can pinpoint those regions of feature space that are poorly modeled, thus guiding annotation efforts.

Filling Knowledge Gaps: The model systematically identifies areas where it is most unsure, and labeling those areas helps it fit previously underrepresented patterns.

Potential Pitfalls

Misleading Uncertainty Measures: If the model is badly calibrated (provides incorrect confidence estimates), active learning might select suboptimal examples, leading to minimal improvement or even further confusion.

Cost of Labeling: In real-world scenarios, labeling can be expensive. If the chosen samples are repeatedly too difficult or outlier-like, you might not see significant gains for general performance, or you might inadvertently overfit to weird corner cases.

How do automated machine learning (AutoML) platforms handle overfitting vs. underfitting, and what risks remain?

AutoML Strategies

Hyperparameter Optimization: AutoML systematically searches the hyperparameter space (learning rate, depth of trees, regularization strength) to find the configuration that balances training and validation performance, thus addressing both overfitting and underfitting.

Model Selection: AutoML tries multiple model families (linear, tree-based, neural networks) and chooses the best performer on validation data, mitigating the risk of manual underfitting if you only tested a single model type.

Risks

Data Leakage: AutoML pipelines might inadvertently leak future or validation data in feature engineering steps if not carefully partitioned. This artificially reduces validation error and hides overfitting.

Over-Search: If the search process is too extensive and the validation set is too small, AutoML can “tune itself” specifically to the validation set, effectively overfitting to that validation distribution.

Underfitting from Simplistic Baselines: Some AutoML solutions limit the search space or time, so they might only find fairly basic models, leaving potential underfitting if the data is highly complex.

Hence, you still need to scrutinize AutoML outcomes, possibly with a separate test set or cross-validation, to confirm that the best candidate model generalizes and neither overfits nor underfits.

How might you adapt overfitting and underfitting concepts to semi-supervised or self-supervised learning scenarios?

Overfitting in Semi-Supervised Learning Even with unlabeled data, the model might still overfit the small labeled portion if it relies heavily on the labeled loss component and not enough on the unlabeled consistency or self-training signals. Additionally, incorrect pseudo-labels (if used) can feed noisy signals back into the model, which then reinforces its own mistakes.

Underfitting in Self-Supervised Learning If the self-supervised objective is too simplistic or if the model architecture cannot capture the complexity of the underlying data, you could see underfitting. The learned representations would not be sufficiently rich to transfer well to downstream tasks.

Avoiding Pitfalls

High-Quality Augmentations: Self-supervised approaches often rely on strong augmentations to create different “views” of data. If these augmentations are uninformative or too aggressive, the model might lose important signals (underfitting). Conversely, if augmentations are trivial, the model may overfit to obvious transformations.

Consistency Regularization: Many semi-supervised methods require the model to produce consistent outputs for unlabeled data under different augmentations. This helps reduce overfitting to the small labeled set.

Evaluation Because unlabeled data typically does not provide ground truth, you rely heavily on a separate validation set or eventually a labeled test set to confirm if your self-supervised or semi-supervised approach actually generalizes well (avoid overfitting) and learns sufficiently expressive features (avoid underfitting).

How can advanced optimizers (like AdamW, Ranger, etc.) influence the risk of overfitting or underfitting?

AdamW and Weight Decay AdamW decouples weight decay from the gradient-based updates, making regularization more consistent and often mitigating overfitting. If used incorrectly (e.g., too high or too low weight decay), you might cause underfitting or fail to reduce overfitting.

Adaptive Learning Rates and Overfitting Some adaptive optimizers quickly minimize training loss by aggressively fitting easy patterns, which can expedite memorization if the data is not large or diverse enough. Regularization or careful scheduling helps balance this.

Momentum and Nesterov Momentum-based optimizers can help training move past small local minima and flat regions, which in some cases reduces underfitting. However, if momentum is too large, the updates can overshoot minima, leading to unstable training and poor convergence, which indirectly causes underfitting.

Pitfalls

Poor Default Settings: Relying on default hyperparameters for specialized tasks can result in suboptimal solutions. The default learning rate may be too high, so the loss keeps bouncing without real convergence (underfitting), or too low, so training crawls and, given enough epochs on a small dataset, gradually memorizes individual training points (potential overfitting).

Inconsistent Step Schedules: If you switch optimizers mid-training or drastically alter the learning rate schedule at the wrong time, you might jolt the model into overfitting or cause partial forgetting that looks like underfitting.

In online learning or streaming data, how do you prevent the model from “chasing” the training distribution too closely and thus overfitting?

Concept Drift Awareness

If your streaming data distribution changes over time, the model might adapt too aggressively to recent samples and forget established patterns. This can appear like overfitting to short-term noise.

Setting a moderate “learning rate” for the online update is essential so the model does not chase every fluctuation.

Windowing or Buffering

Many online algorithms maintain a buffer or window of the most recent data. If the window is too small, the model might fixate on immediate noise (overfitting). If it’s too large, the model might underfit abrupt changes.

Adaptive windowing strategies adjust the buffer size based on how stable or volatile the data is.

Edge Cases

Abrupt Shifts: If the data distribution drastically changes, your model might severely underfit for new data if it can’t adapt quickly enough.

Noisy or Malicious Inputs: In streaming data that might be adversarial, overfitting can occur if the model is manipulated by a surge of specific training samples designed to push the model in a harmful direction.
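The effect of the online learning rate can be seen with the simplest possible online model, a running estimate of a stream's level that is nudged toward each new observation. The stream values and the two step sizes below are assumptions picked to contrast the behaviors.

```python
def online_mean(stream, learning_rate):
    """Track a stream with the update: estimate += learning_rate * (x - estimate)."""
    estimate = stream[0]
    history = [estimate]
    for x in stream[1:]:
        estimate += learning_rate * (x - estimate)
        history.append(estimate)
    return history

# A stable stream with one noisy spike.
stream = [0.0, 0.0, 0.0, 10.0, 0.0, 0.0]

chasing = online_mean(stream, learning_rate=1.0)   # copies every observation
moderate = online_mean(stream, learning_rate=0.1)  # absorbs only part of the spike
```

With a step size of 1.0 the estimate jumps to the spike and back, mirroring the noise. With 0.1 it moves only a tenth of the way, at the cost of reacting more slowly if the shift turns out to be real, which is the same trade-off as choosing a window size.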

Could an overly aggressive early stopping strategy cause underfitting, and how do you calibrate it?

Too Aggressive Early Stopping

If you stop training the moment validation loss stagnates or bounces slightly, you might cut off the model before it fully converges. This can lock in an underfit model.

Some tasks have noisy validation curves, especially with small validation sets or high variance in mini-batches. A small upward blip might not be a true sign of overfitting yet.

Calibration

Patience Parameter: Instead of stopping immediately, you might wait for, say, 5 consecutive epochs without improvement, giving the model a chance to recover from minor fluctuations.

Learning Rate Schedules: Sometimes, switching to a lower learning rate can help your model push beyond a temporary plateau. If you stop too early, you never see potential improvements after the schedule change.

Practical Pitfalls If your dataset is large but your validation set is too small, random noise can trick your early stopping mechanism into halting training prematurely. To mitigate this, you can combine early stopping with a more stable estimate of validation performance (e.g., average performance across multiple runs or multiple folds).
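A patience-based stopping rule can be sketched as a small helper class. The class name, the `min_delta` parameter, and the validation-loss sequence are assumptions for illustration; the sequence includes a small upward blip before the best value is reached.

```python
class EarlyStopping:
    """Stop once validation loss has failed to improve for `patience` epochs in a row."""

    def __init__(self, patience=5, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta      # minimum decrease that counts as improvement
        self.best = float("inf")
        self.bad_epochs = 0

    def should_stop(self, val_loss):
        if val_loss < self.best - self.min_delta:
            self.best = val_loss
            self.bad_epochs = 0         # improvement resets the counter
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience

def run(val_losses, patience):
    """Return (epoch at which training stopped or None, best loss seen)."""
    stopper = EarlyStopping(patience=patience)
    for epoch, loss in enumerate(val_losses):
        if stopper.should_stop(loss):
            return epoch, stopper.best
    return None, stopper.best

val_losses = [1.0, 0.8, 0.82, 0.79, 0.80, 0.81, 0.83]

impatient = run(val_losses, patience=1)
patient = run(val_losses, patience=3)
```

On this sequence, `patience=1` halts at the first blip (epoch 2) with a best loss of 0.8, while `patience=3` rides out the blip, reaches 0.79, and stops only after three consecutive epochs without improvement.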

How do label distribution shifts at inference time cause hidden overfitting or underfitting that was not apparent during training?

Hidden Overfitting If the frequency of certain classes changes drastically after deployment, but your model was tuned on the original distribution, it might overfit to the old distribution’s prior. You see a mismatch in real-world performance that was not evident in training or initial validation.

Hidden Underfitting If new types of examples (or new sub-classes) appear, your model might not have learned them well. This results in an underfitting scenario, as the model cannot adapt to distribution shifts it never encountered in training.

Detection

Monitor Real-Time Class Distribution: Compare it to your training set distribution.

Performance Degradation: A sudden drop in F1-score, accuracy, or other metrics in post-deployment monitoring might indicate a label distribution shift.
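Comparing the live class distribution with the training distribution can be reduced to a single number, for example the total variation distance between the two sets of class frequencies. The sketch below assumes that production labels (or, failing that, predicted labels) are available; the function names and toy data are illustrative.

```python
from collections import Counter

def class_frequencies(labels):
    """Map each class to its relative frequency in the sample."""
    counts = Counter(labels)
    return {c: k / len(labels) for c, k in counts.items()}

def total_variation_distance(p, q):
    """Half the L1 distance between two discrete distributions (0 = same, 1 = disjoint)."""
    classes = set(p) | set(q)
    return 0.5 * sum(abs(p.get(c, 0.0) - q.get(c, 0.0)) for c in classes)

train_labels = ["a"] * 8 + ["b"] * 2
live_labels = ["a"] * 5 + ["b"] * 5

shift = total_variation_distance(
    class_frequencies(train_labels), class_frequencies(live_labels)
)
```

Here class "b" has grown from 20% to 50% of traffic, giving a distance of about 0.3. A class that appears only in production simply contributes its full frequency, so new classes are flagged as well.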

Potential Remedies

Periodic Retraining: Incorporate updated data distributions into your training set.

Domain Adaptation: If you anticipate systematic shifts, domain adaptation or unsupervised adaptation strategies can help.

Ensemble with Specialized Models: If you detect a new domain or new data cluster, you can spin up a specialized model for that domain and blend predictions.

How can we systematically differentiate between a model that truly underfits versus one that is constrained by fundamental data limitations?

Symptom: True Underfitting You can see improvement if you add features or increase model complexity. Additional training epochs also reduce training loss. The model indeed has room to learn but is not capturing all patterns.

Symptom: Data Limitations

Irreducible Error: If the phenomenon you’re trying to predict is intrinsically noisy (e.g., random elements in user behavior), there is a limit to how well any model can do.

Plateau in Performance: Even after more data or a bigger model, you see minimal gains. This suggests the data or the target variable is partially random or ephemeral.

Tests

Learning Curves: Plot model performance vs. the size of the training set. If performance plateaus well below the desired threshold, you might be up against irreducible noise or incomplete features.

Theoretical Constraints: Domain experts might confirm that certain aspects of the target are inherently unpredictable or require data you do not have.

Real-World Edge Case In many user-facing applications, user decisions or external factors can be too random, meaning no matter how advanced your model is, you will not surpass a certain performance level. Attempting to fix “underfitting” there might be futile if the data simply cannot yield higher predictive power.