
Comprehensive Explanation

Autoencoders are neural networks that learn a representation (encoding) of input data while being able to reconstruct the input from that representation. The key idea is to map the input to a typically lower-dimensional form in a hidden layer (the latent space), then reconstruct it back to its original dimension. By training the network to minimize reconstruction error, the model learns meaningful features in its latent space. This approach is widely used for dimensionality reduction, denoising, and anomaly detection.

Network Architecture

An autoencoder typically has two primary components known as the Encoder and the Decoder. The Encoder compresses the input x into a latent representation z. The Decoder reconstructs the original data from that latent representation. The entire model is trained jointly by minimizing a reconstruction loss, which measures how close the output x̂ is to the original input x.

Core Mathematical Formulation

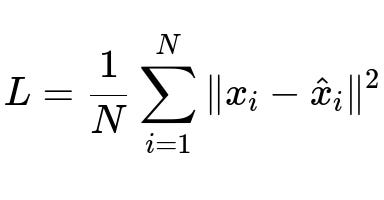

The most common objective for autoencoders is to minimize a mean squared error (MSE) or mean absolute error (MAE) between the original x and reconstructed x̂. If we define x_i as the i-th input sample and x̂_i as its reconstruction, one common loss function is shown below.

Where N is the total number of samples in the training set, x_i is the original input vector, and x̂_i is the reconstructed output for x_i. Minimizing this encourages x̂_i to be as similar as possible to x_i.
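To make the MSE and MAE objectives described above concrete, here is a small NumPy sketch that evaluates both on a toy batch. The arrays are made-up values chosen only for illustration, and the per-sample sum followed by an average over N is one common convention, not the only one.

```python
import numpy as np

# Hypothetical toy batch: N = 2 samples with 3 features each.
x = np.array([[0.0, 0.5, 1.0],
              [1.0, 0.0, 0.5]])
x_hat = np.array([[0.1, 0.5, 0.8],
                  [0.9, 0.2, 0.5]])

# MSE: squared L2 distance per sample, averaged over the N samples.
mse = np.mean(np.sum((x - x_hat) ** 2, axis=1))

# MAE: absolute (L1) distance per sample, averaged the same way.
mae = np.mean(np.sum(np.abs(x - x_hat), axis=1))

print(f"MSE = {mse:.3f}, MAE = {mae:.3f}")
```

Some libraries average over every element rather than over samples, which rescales the loss by the feature dimension but does not change its minimizer.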

Training Process

During forward propagation, data pass through the Encoder network to produce the latent vector z. Then the Decoder network reconstructs the input from z. The reconstruction loss is computed by comparing the reconstructed output x̂ with the original input x. Backpropagation is used to compute gradients of the loss with respect to all network parameters, and an optimizer such as stochastic gradient descent or Adam updates the parameters in both the Encoder and Decoder.

Practical Implementation (PyTorch Example)

Below is a minimal illustration of how one could implement a simple autoencoder using PyTorch:

```python
import torch
import torch.nn as nn
import torch.optim as optim
from torch.utils.data import DataLoader, TensorDataset


class SimpleAutoencoder(nn.Module):
    def __init__(self, input_dim=784, hidden_dim=32):
        super().__init__()
        # Encoder: input_dim -> 128 -> hidden_dim
        self.encoder = nn.Sequential(
            nn.Linear(input_dim, 128),
            nn.ReLU(),
            nn.Linear(128, hidden_dim),
            nn.ReLU(),
        )
        # Decoder: hidden_dim -> 128 -> input_dim; Sigmoid keeps outputs in [0, 1]
        self.decoder = nn.Sequential(
            nn.Linear(hidden_dim, 128),
            nn.ReLU(),
            nn.Linear(128, input_dim),
            nn.Sigmoid(),
        )

    def forward(self, x):
        z = self.encoder(x)      # latent representation
        x_hat = self.decoder(z)  # reconstruction
        return x_hat


# Sample usage
model = SimpleAutoencoder()
criterion = nn.MSELoss()
optimizer = optim.Adam(model.parameters(), lr=1e-3)

# Suppose data is in a DataLoader called data_loader.
# Placeholder so the snippet runs on its own: random values, not real MNIST.
data_loader = DataLoader(
    TensorDataset(torch.rand(256, 1, 28, 28), torch.zeros(256, dtype=torch.long)),
    batch_size=64,
    shuffle=True,
)

for epoch in range(5):  # Dummy 5-epoch run
    for batch in data_loader:
        inputs, _ = batch  # ignoring labels if dataset is labeled
        inputs = inputs.view(inputs.size(0), -1)  # flatten if needed

        optimizer.zero_grad()
        outputs = model(inputs)
        loss = criterion(outputs, inputs)  # target is the input itself
        loss.backward()
        optimizer.step()
```

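In this example, the network compresses a vector of size 784 (for instance, a 28×28 MNIST image flattened into a vector) into a 32-dimensional latent space and then reconstructs the 784-dimensional input from it. The final Sigmoid keeps outputs in [0, 1], so inputs should be scaled to the same range. nn.MSELoss, which by default averages the squared error over all elements in the batch, is a direct implementation of a standard reconstruction objective.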

Key Variants

Different variants of autoencoders are widely used in practice:

Undercomplete Autoencoder: The latent dimension is smaller than the input dimension, forcing the network to learn a compressed representation that captures essential features.

Overcomplete Autoencoder: The latent dimension is larger than or equal to the input dimension, so it is usually combined with additional regularization (like sparsity) to force a useful representation.

Denoising Autoencoder: Noise is added to the inputs before feeding them to the Encoder, but the loss is measured against the clean input. This improves robustness.

Sparse Autoencoder: A regularization term is added to the latent representation, encouraging most of the units in the latent layer to remain inactive, thus learning sparse features.

Variational Autoencoder (VAE): Introduces probabilistic techniques to learn a distribution over the latent space, enabling generative capabilities.
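As a rough illustration of how the denoising and sparse variants change the training objective, the NumPy sketch below corrupts a batch, reconstructs it with stand-in (untrained, random) linear maps, and then adds an L1 penalty on the latent activations. The noise level, penalty weight, and layer sizes are assumptions picked for the example.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical batch: 4 samples, 8 features, values in [0, 1].
x_clean = rng.random((4, 8))

# Denoising variant: corrupt the input but keep the clean version as the target.
noise_std = 0.1  # assumed noise level
x_noisy = np.clip(x_clean + noise_std * rng.standard_normal(x_clean.shape), 0.0, 1.0)

# Stand-ins for the encoder and decoder: random linear maps, illustration only.
W_enc = rng.standard_normal((8, 3)) * 0.1
W_dec = rng.standard_normal((3, 8)) * 0.1
z = np.maximum(x_noisy @ W_enc, 0.0)  # ReLU latent code
x_hat = z @ W_dec

# The reconstruction is compared against the CLEAN input, not the noisy one.
recon_loss = np.mean((x_hat - x_clean) ** 2)

# Sparse variant: add an L1 penalty that pushes latent activations toward zero.
sparsity_weight = 1e-3  # assumed hyperparameter
total_loss = recon_loss + sparsity_weight * np.mean(np.abs(z))
```

In a real training loop the same two changes apply: feed the corrupted batch to the model, and add the penalty term to the loss before backpropagation.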

Why This Works

Autoencoders learn features that can efficiently represent data by compressing it into a latent space and reconstructing it. This learned encoding often captures high-level patterns of the data distribution. The process of forcing reconstruction from a smaller latent space can act similarly to principal component analysis (PCA), but with the added flexibility of nonlinear transformations.

Common Challenges

One must carefully choose network size, the dimension of the latent space, and the loss function. If the capacity of the autoencoder is too large, it may memorize the data rather than learning a generalizable representation. Regularization methods such as dropout, weight decay, or sparsity constraints can help address this. Additionally, selecting the correct learning rate and batch size is critical for stable training.

Potential Follow-up Questions

What is the role of the bottleneck layer?

The bottleneck layer represents the latent space. By forcing data through a smaller dimensional space (relative to the input dimension), the network must capture the most salient features of the input to be able to reconstruct it effectively. If the network successfully learns to reconstruct from that limited dimensional space, it indicates that the encoding has extracted meaningful features.

How do overcomplete autoencoders avoid trivial identity mapping?

In an overcomplete autoencoder, the latent dimension can be the same size as or larger than the input dimension. A trivial solution is for the model to simply learn an identity map, reproducing the input without meaningful compression. To avoid this, additional constraints like sparsity, denoising, or regularization terms in the loss function encourage the network to learn useful structure in the data rather than replicating inputs directly.

Why might one add noise to inputs for a Denoising Autoencoder?

By introducing noise into the input and still aiming to reconstruct the clean version, the model learns robust features that generalize beyond exact memorization of the training data. This helps the autoencoder become less sensitive to small input perturbations, improving its ability to learn a reliable latent representation.

How do we choose the dimensionality of the latent space?

This choice often depends on the complexity of the data and the goal of the autoencoder. If you choose a latent space that is too small, you may lose critical features and underfit. If it is too large, you risk overfitting and learning representations that simply memorize the training data. A common strategy is to experiment with different sizes, monitoring reconstruction error and generalization performance on validation data.

How does the choice of loss function affect the learned representation?

If you use mean squared error, errors are penalized quadratically, so a few large deviations dominate the loss and the model tends toward averaged (often blurry) reconstructions. Mean absolute error penalizes errors linearly, which makes it less sensitive to outliers and can lead to different latent representations. Sometimes, more specialized losses (like perceptual loss or adversarial loss) are used in scenarios like image generation where you want specific types of fidelity.

Why are autoencoders not always the best for generating new data compared to other approaches?

While they can generate data by sampling from latent representations, vanilla autoencoders do not explicitly learn a probability distribution over the data. Variational Autoencoders address this by introducing a latent distribution assumption, making them more suitable for generative tasks. Standard autoencoders typically excel at compressing and reconstructing but are less adept at generating new samples that resemble the input data distribution.

What happens if the autoencoder merely copies the input to output without learning useful features?

This can occur if the model capacity is excessively large (overfitting) or if there is no regularization to encourage feature learning. The model might learn trivial mappings that memorize training samples. Strategies such as imposing sparsity, adding noise (denoising autoencoder), or using an undercomplete architecture help mitigate this and ensure that learned representations capture meaningful data properties rather than memorizing.

How does one handle overfitting?

One can employ regularization techniques such as L2 weight decay, dropout, or explicit constraints on the latent representation (like a Kullback-Leibler divergence term in a variational autoencoder). Early stopping, data augmentation, and cross-validation for hyperparameter tuning are also strategies to reduce overfitting.
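Early stopping is simple enough to sketch in plain Python. The class below is a hypothetical helper (not part of any library) that tracks validation loss and signals when it has failed to improve for a set number of epochs. The loss values are invented for the example.

```python
class EarlyStopping:
    """Signals a stop once validation loss has not improved for `patience` epochs."""

    def __init__(self, patience=3, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best_loss = float("inf")
        self.bad_epochs = 0

    def step(self, val_loss):
        """Record one epoch's validation loss; return True when training should stop."""
        if val_loss < self.best_loss - self.min_delta:
            self.best_loss = val_loss
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


# Hypothetical validation reconstruction losses, one per epoch.
val_losses = [0.50, 0.40, 0.35, 0.36, 0.37, 0.36, 0.30]

stopper = EarlyStopping(patience=3)
stopped_at = None
for epoch, loss in enumerate(val_losses):
    if stopper.step(loss):
        stopped_at = epoch
        break
```

Here training stops before the final improvement to 0.30 is ever seen, which shows the trade-off in choosing `patience`: too small and you stop early, too large and you waste epochs.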

Can autoencoders be used for anomaly detection?

Yes, after training an autoencoder on normal data, if a test sample produces a high reconstruction error, it could be flagged as anomalous. This relies on the assumption that the autoencoder has only learned to reconstruct patterns that resemble the normal data distribution. If an anomaly does not conform to these learned patterns, its reconstruction error will be significantly higher.
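One way to turn this idea into a decision rule is to pick a threshold from the reconstruction errors seen on normal data and flag anything above it. The sketch below assumes per-sample errors have already been computed. The numbers and the 0.99 quantile are illustrative choices, not recommendations.

```python
import numpy as np


def fit_threshold(normal_errors, quantile=0.99):
    """Pick a threshold from reconstruction errors measured on normal data."""
    return float(np.quantile(normal_errors, quantile))


def flag_anomalies(errors, threshold):
    """Return a boolean mask that is True where the error exceeds the threshold."""
    return np.asarray(errors) > threshold


# Hypothetical per-sample reconstruction errors on held-out normal data.
normal_errors = np.array([0.010, 0.012, 0.009, 0.011, 0.013, 0.010, 0.012, 0.011])
threshold = fit_threshold(normal_errors, quantile=0.99)

# Errors for new samples; the second one reconstructs far worse than normal.
new_errors = np.array([0.011, 0.250, 0.009])
flags = flag_anomalies(new_errors, threshold)
```

The quantile controls the false-alarm rate on normal data, so it is usually tuned against whatever labeled anomalies are available.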

How do autoencoders compare to PCA?

Both can be used for dimensionality reduction. PCA is a linear method that finds orthogonal components explaining variance in the data. Autoencoders can learn nonlinear transformations, potentially capturing more complex relationships. However, PCA offers a closed-form solution, whereas autoencoders require iterative gradient-based training and can be more computationally demanding.
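The closed-form nature of PCA is easy to see in code. The sketch below computes an "encode" and "decode" step directly from an SVD, with no training loop. The data is synthetic, generated to lie near a 2-D subspace. A linear autoencoder trained with MSE would learn the same subspace, only by gradient descent.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical data: 200 samples, 5 features, lying near a 2-D subspace.
latent = rng.standard_normal((200, 2))
mixing = rng.standard_normal((2, 5))
X = latent @ mixing + 0.01 * rng.standard_normal((200, 5))

mean = X.mean(axis=0)
Xc = X - mean

# Closed-form PCA: the top-k right singular vectors of the centered data.
k = 2
_, _, Vt = np.linalg.svd(Xc, full_matrices=False)
components = Vt[:k]              # shape (k, 5)

Z = Xc @ components.T            # "encode": project to k dimensions
X_hat = Z @ components + mean    # "decode": map back to 5 dimensions

pca_mse = np.mean((X - X_hat) ** 2)
```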

These aspects of autoencoders—bottleneck dimensionality, choice of loss function, added regularizations, and training stability—are critical in building effective representations that can be leveraged in various applications such as compressed sensing, denoising, and anomaly detection.

Below are additional follow-up questions

How does the training data distribution affect the performance of an autoencoder?

The performance of an autoencoder heavily depends on the distribution of the data it is trained on. An autoencoder fundamentally learns patterns by minimizing reconstruction error on whatever data it sees during training. If the data distribution changes (for instance, due to a shift in domain or the introduction of new and different data types), the autoencoder may no longer reconstruct well because it never learned those new or differing patterns.

Influence of Data Diversity

If your training set lacks sufficient diversity, the autoencoder may learn a very narrow representation that doesn’t generalize well. For example, if training images are all of cats and you suddenly test on dogs, the network might fail to reconstruct dog images adequately because the distribution of “dog” was never learned.

Ensuring the dataset covers the full range of expected inputs helps the autoencoder learn robust features.

Data Shifts and Catastrophic Forgetting

When the data distribution changes over time (data drift or concept drift), re-training or fine-tuning might be necessary, because an autoencoder trained on older distributions may reconstruct new incoming data poorly. The opposite risk is catastrophic forgetting: if you continue training on only new data without mixing in old examples, the model can lose patterns it had previously learned.

Edge Cases and Outliers

Highly skewed distributions or the presence of strong outliers can cause the autoencoder to overfit to the majority samples and not learn to handle rare but important edge cases. This is particularly problematic in anomaly detection, where the few anomalous points can be drowned out by normal samples unless specifically accounted for.

Real-World Scenarios

In streaming or time-series contexts (financial data, sensor data), the distribution often shifts over time. An autoencoder trained on historical data could degrade if it cannot adapt or hasn’t seen the newest patterns.

In practice, you might need to monitor reconstruction error metrics and compare them over time to detect distribution shifts.
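A minimal version of such monitoring can be sketched as a rolling mean compared against a baseline. `DriftMonitor` is a hypothetical helper, and the window size, tolerance factor, and error values are assumptions for illustration.

```python
from collections import deque


class DriftMonitor:
    """Flags drift when the rolling mean error exceeds baseline * tolerance."""

    def __init__(self, baseline_error, window=50, tolerance=1.5):
        self.baseline_error = baseline_error
        self.tolerance = tolerance
        self.errors = deque(maxlen=window)  # keeps only the most recent errors

    def update(self, error):
        """Add one reconstruction error; return True if drift is suspected."""
        self.errors.append(error)
        rolling_mean = sum(self.errors) / len(self.errors)
        return rolling_mean > self.baseline_error * self.tolerance


# Baseline taken from validation data at training time (hypothetical value).
monitor = DriftMonitor(baseline_error=0.01, window=3, tolerance=1.5)

# Incoming errors: stable at first, then rising as the data shifts.
alerts = [monitor.update(e) for e in [0.010, 0.011, 0.009, 0.030, 0.040]]
```

A longer window smooths out single noisy samples at the cost of reacting more slowly to a genuine shift.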

How can one measure the “usefulness” of a learned representation beyond reconstruction error?

While reconstruction error is the most common metric for evaluating autoencoders, it may not fully capture how good the latent representation is for certain downstream tasks.

Clustering Performance

One strategy is to take the learned latent vectors and perform clustering (e.g., using k-means). If the latent space meaningfully separates different classes or clusters, it indicates a valuable representation.

A pitfall here is that you may inadvertently evaluate a representation that is only good for that specific clustering algorithm rather than generally useful.

Classification or Other Downstream Tasks

Another way is to train a simple classifier on top of the latent representations. If classification accuracy on a downstream task is high, it implies the latent space preserves discriminative information.

However, if the autoencoder was not trained with labels, it might not necessarily align with class boundaries, so this measure might be misleading unless you specifically want a representation that is beneficial for classification.

Visualization

Techniques like t-SNE or UMAP can help visualize the latent space in 2D. If the points form coherent clusters or manifold structures matching semantic groups, that suggests a meaningful encoding.

Visualization can be subjective: what looks like a neat cluster may not always translate to better performance on tasks.

Reconstruction Versus Regularization Trade-off

Sometimes, an autoencoder might produce low reconstruction error but an unhelpful latent representation (e.g., one that doesn’t generalize or separate classes). Balancing the objective function to encourage structure in the latent space (e.g., adding orthogonality constraints or using a VAE’s KL divergence) can yield more interpretable or task-friendly encodings.

Edge Cases

If your goal is anomaly detection, then even a representation that appears “unstructured” for normal data might be extremely sensitive to anomalies. So “usefulness” is context-dependent and must align with the end application.

In which scenarios might a shallow autoencoder be preferable to a deep autoencoder?

The choice between a shallow (one or two hidden layers) versus a deep autoencoder (multiple hidden layers) depends on data complexity, computational resources, and the specific problem:

Data Complexity

For simple data (e.g., low-dimensional or relatively small input size without complicated relationships), a shallow autoencoder might be sufficient and faster to train. Overly deep architectures can overfit or become computationally unwieldy.

Conversely, if the data has significant hierarchical or non-linear structures (e.g., high-resolution images, complex sensor data), a deeper architecture often captures more complex patterns.

Computational and Resource Constraints

Shallow networks train faster, have fewer parameters, and require less memory, which can be advantageous in resource-limited environments or when rapid training and inference are priorities.

Deep networks can be slow to train and more prone to exploding/vanishing gradients if not carefully designed (batch normalization, residual connections, etc.).

Risk of Overfitting

A deep autoencoder with high capacity might memorize training examples if not properly regularized. If you have a small or homogeneous dataset, shallow architectures can be less prone to memorization.

However, underfitting can also occur if your problem is complex but your architecture is too small.

Real-World Example

In embedded systems (like edge devices) that track temperature or environmental data, a shallow autoencoder might suffice to detect anomalies while remaining computationally efficient.

For large-scale visual tasks (e.g., advanced image compression), a deep autoencoder is often beneficial, as it can capture layered abstractions of the image data.

Edge Cases

Sometimes a shallow autoencoder might perform unexpectedly well if the data has a strong principal component structure. Conversely, a deep autoencoder might fail if it’s poorly tuned or if the data simply doesn’t require a deep hierarchy.

How do convolutional autoencoders differ from fully connected autoencoders?

Convolutional Autoencoders (CAEs) are specialized for data that exhibit spatial or temporal correlation (like images, videos, or time series). They use convolutional layers instead of dense layers in the encoder and decoder.

Local Connectivity and Parameter Efficiency

Convolutional layers learn filters that are applied locally across the spatial (or temporal) dimensions. This greatly reduces the number of parameters compared to fully connected layers when dealing with high-dimensional inputs such as images (e.g., 224×224 pixels).

Fewer parameters often means easier training, less risk of overfitting, and more efficient use of relevant spatial structure.
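The difference in parameter count is easy to check with arithmetic. The sketch below compares one dense layer and one convolutional layer applied to a 224×224 image. The 3 color channels, 128 dense units, and 64 filters of size 3×3 are assumed sizes chosen for the comparison.

```python
def dense_params(in_features, out_features):
    """Weights plus biases of a fully connected layer."""
    return in_features * out_features + out_features


def conv2d_params(in_channels, out_channels, kernel_size):
    """Weights plus biases of a 2D convolution with a square kernel."""
    return in_channels * out_channels * kernel_size * kernel_size + out_channels


# Assumed input: a 224x224 RGB image (3 channels).
dense = dense_params(224 * 224 * 3, 128)  # flatten, then map to 128 units
conv = conv2d_params(3, 64, 3)            # 64 filters of size 3x3

print(f"dense layer: {dense:,} parameters")  # 19,267,712
print(f"conv layer:  {conv:,} parameters")   # 1,792
```

The convolutional count does not depend on image size at all, because the same filters are reused at every spatial position.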

Preserving Spatial Information

Fully connected autoencoders flatten the input, losing spatial layout. Convolutional autoencoders maintain local patterns (edges, textures) and can reconstruct images more accurately, especially for tasks like denoising or super-resolution.

Pitfalls

If your data is not image-like (or does not exhibit strong local correlations), convolutional layers might not be beneficial, and the overhead might not justify their use.

Choosing filter size, stride, and the number of channels incorrectly can lead to poor reconstructions. Too large a filter might ignore local detail; too small might fail to capture larger patterns.

Edge Cases

Non-traditional data that can be shaped into a 2D “image-like” structure (e.g., spectrograms for audio data or embeddings for text) might see improvement with a convolutional autoencoder, but you must confirm that local patterns in this 2D representation are relevant to your domain.

How do we deploy an autoencoder for real-time or large-scale inference?

Deploying an autoencoder in a production environment—especially for real-time applications—presents unique challenges that go beyond the design of the model itself.

Latency Requirements

For real-time systems (e.g., anomaly detection on streaming sensor data), the inference time must be minimal. Model size, hardware acceleration (GPU/TPU), and efficient batching strategies all affect latency.

A large or poorly optimized autoencoder may not meet real-time performance constraints. Techniques like model pruning or quantization can help reduce computational load.
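To give a feel for what quantization does, here is a hand-rolled sketch of symmetric int8 quantization for a weight array. It is a simplified illustration of the idea, not the scheme any particular framework uses, and the function names are hypothetical.

```python
import numpy as np


def quantize_int8(weights):
    """Map float weights to int8 with one shared scale (symmetric quantization)."""
    max_abs = float(np.max(np.abs(weights)))
    scale = max_abs / 127.0 if max_abs > 0 else 1.0  # avoid dividing by zero
    q = np.clip(np.round(weights / scale), -127, 127).astype(np.int8)
    return q, scale


def dequantize(q, scale):
    """Recover approximate float weights from the int8 values."""
    return q.astype(np.float32) * scale


# Hypothetical weights from one layer.
weights = np.array([0.5, -1.0, 0.25, 0.0], dtype=np.float32)
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

# Rounding error is bounded by half a quantization step.
max_error = float(np.max(np.abs(weights - restored)))
```

Storage drops from 4 bytes to 1 byte per weight. Whether the added rounding error is acceptable has to be checked against reconstruction quality on validation data.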

Scalability

In large-scale scenarios (e.g., millions of data points per second), you must handle high throughput. Deploying the autoencoder on distributed systems or using specialized hardware accelerators (ASICs, GPUs) can help.

Load balancing and caching might be crucial: you could cache intermediate representations if the encoder is expensive but shared across multiple downstream tasks.

Monitoring and Model Updates

Over time, data drift might occur. Continuous monitoring of reconstruction error can detect when the autoencoder is no longer performing well. Periodic re-training or incremental learning could be necessary.

In some edge cases, the model might degrade slowly in performance, going unnoticed until anomalies become significant. A robust monitoring strategy with defined alert thresholds is essential.

Edge Cases

Mission-critical applications (e.g., medical imaging, fraud detection) require robust fallback mechanisms if the autoencoder fails or flags anomalies incorrectly.

Deploying an autoencoder that was trained on partial data or incorrectly labeled data can yield disastrous results (e.g., ignoring crucial anomalies).

What is the best way to handle continuous or streaming data during training?

When data arrives in a continuous stream (e.g., IoT sensor data, log streams), traditional offline batch training may be infeasible or suboptimal.

Online or Incremental Learning

The model updates its parameters as new data arrives, discarding or deemphasizing older data. This requires the training algorithm to be stable under incremental updates (e.g., low learning rate, appropriate batch sizes).

A pitfall is catastrophic forgetting, where the model forgets older patterns that might still be relevant. Solutions involve replay buffers or periodically mixing older data in small amounts with new data.
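A replay buffer can be sketched in a few lines of plain Python. The version below uses reservoir sampling so that every past sample has the same chance of being retained. The class name, capacity, and replay fraction are assumptions for illustration, and integers stand in for real data samples.

```python
import random


class ReplayBuffer:
    """Fixed-size store of past samples, maintained with reservoir sampling."""

    def __init__(self, capacity, seed=0):
        self.capacity = capacity
        self.samples = []
        self.seen = 0
        self.rng = random.Random(seed)

    def add(self, sample):
        """Keep each sample seen so far with equal probability."""
        self.seen += 1
        if len(self.samples) < self.capacity:
            self.samples.append(sample)
        else:
            j = self.rng.randrange(self.seen)
            if j < self.capacity:
                self.samples[j] = sample

    def mixed_batch(self, new_batch, replay_fraction=0.25):
        """Return the new batch plus a small random draw of old samples."""
        n_replay = min(len(self.samples), int(len(new_batch) * replay_fraction))
        return list(new_batch) + self.rng.sample(self.samples, n_replay)


buffer = ReplayBuffer(capacity=100)
for old_sample in range(1000):  # stand-ins for previously seen data
    buffer.add(old_sample)

# Mix 8 new samples with 2 replayed ones before taking a training step.
batch = buffer.mixed_batch(new_batch=list(range(1000, 1008)), replay_fraction=0.25)
```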

Mini-Batch Updates

If streaming data can be collected and temporarily buffered, you can still train in small mini-batches. This balances computational efficiency with the ability to update fairly quickly.

The challenge arises when the data distribution shifts mid-stream (concept drift). The autoencoder might get “confused” if it trains on abrupt changes without enough examples.

Windowing or Chunking

One approach is to maintain a rolling window of recent data. The autoencoder is continuously re-trained or fine-tuned on this window. This helps adapt to short-term shifts, but might neglect historical patterns if the window is too small.

Infrastructure and Resource Management

Handling streaming data often requires real-time ingestion pipelines (Kafka, Spark Streaming, Flink). Ensuring the pipeline can process data at the same rate it arrives is non-trivial.

Edge cases include surges or spikes in data volume. The system might lag or drop samples, leading to incomplete training data that biases the model.

Are there domain-specific modifications for autoencoders in text or time-series data?

While autoencoders are most often introduced with image data, they have been adapted to many other data types:

Textual Data

Recurrent neural networks (RNN), LSTM, GRU, or Transformer-based encoders and decoders can replace traditional dense/convolutional layers. This helps capture sequential or contextual dependencies in language.

For denoising tasks in text, the model might learn to reconstruct a masked or corrupted sentence. An example pitfall is “teacher forcing” in RNN-based training: the decoder is fed the ground-truth previous token at each step, so training loss can look better than performance at inference time, when the decoder must consume its own predictions.

Time-Series

Similar to text, time-series data can have temporal correlations. Recurrent blocks or 1D convolutions are often better suited than purely dense layers.

A significant challenge is handling variable-length sequences and irregular sampling intervals. Advanced architectures might incorporate attention mechanisms or special interpolation layers for missing data.

Pitfalls

For text and time series, data cleaning and preprocessing can be trickier than for images. Missing data, noise spikes, and domain-specific transformations (e.g., log-scaling) can drastically affect training quality.

Overfitting to domain-specific idiosyncrasies is more common if the autoencoder is not exposed to diverse patterns.

How can we interpret or visualize the latent space of an autoencoder to gain insights?

Interpreting an autoencoder’s latent space is vital for understanding what features it captures:

Dimensionality Reduction for Visualization

Common techniques like t-SNE, UMAP, or PCA are used to project high-dimensional latent vectors onto 2D or 3D. Clusters or patterns in the projection might reveal how the model groups inputs.

A potential edge case is that t-SNE can create visually appealing clusters even if they don’t necessarily correspond to meaningful data separations. Interpretation requires domain knowledge.

Activation Maximization

For each latent dimension, you can try to find an input that maximizes that neuron’s activation. This can give a hint of what concept or pattern that neuron is encoding.

However, these methods can produce unrealistic inputs if not constrained, and might not always correspond to human-interpretable concepts.

Latent Traversals

In simpler autoencoders or VAEs, you can vary a latent coordinate while keeping others fixed. Observing the changes in the reconstructed output can illustrate what that dimension controls.

The pitfall is that in standard autoencoders (non-variational), different dimensions may not represent disentangled factors of variation—changes may be entangled across multiple latent units.
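A latent traversal is mostly bookkeeping around the decoder. The sketch below sweeps one latent coordinate while holding the others fixed. The decoder here is a stand-in (a fixed linear map) so the example runs on its own. With a trained model you would pass its decoder instead.

```python
import numpy as np


def traverse_latent(decode, z, dim, values):
    """Decode copies of z in which only coordinate `dim` is swept over `values`."""
    outputs = []
    for v in values:
        z_mod = np.array(z, dtype=float)  # copy so the original stays untouched
        z_mod[dim] = v
        outputs.append(decode(z_mod))
    return np.stack(outputs)


# Stand-in decoder (hypothetical): a fixed linear map from 2-D latent to 3-D output.
W = np.array([[1.0, 0.0, 2.0],
              [0.0, 1.0, -1.0]])


def decode(z):
    return z @ W


# Sweep latent dimension 0 from -1 to 1 while dimension 1 stays at 0.5.
sweep = traverse_latent(decode, z=[0.5, 0.5], dim=0, values=np.linspace(-1.0, 1.0, 5))
```

In this toy decoder, output column 1 depends only on latent dimension 1, so it stays constant across the sweep. In an entangled latent space, every output would change.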

Edge Cases

Some autoencoders may store “shortcut” features in the latent space that are not human-interpretable (like pixel-level or domain-level hacks). This complicates straightforward interpretation.

Nonlinearities in deep architectures might produce latent spaces that are extremely complex to visualize or reason about directly.

Can transfer learning be applied to autoencoders?

Yes, one can leverage autoencoders pre-trained on large datasets and then transfer or fine-tune them on a smaller dataset or a related domain:

Pre-training Strategy

Train a large autoencoder on a massive dataset (e.g., large set of images or text). The encoder learns generic features that might be beneficial for many tasks.

You can then use the encoder’s weights as initialization for your new autoencoder or for a classifier/regressor on your target dataset.

Partial Freezing and Fine-Tuning

Often, early layers are frozen, as they capture low-level features (edges in images or subword embeddings in text). Later layers are fine-tuned to adapt to domain-specific patterns.

A pitfall is overfitting in scenarios where the target domain is very different from the pre-training domain. The features might not transfer well, requiring more from-scratch training.

Practical Benefits

Faster convergence due to already learned low-level features.

Reduced need for large labeled datasets if you primarily rely on unsupervised pre-training.

However, in drastically different domains (e.g., from medical images to general animals), the transferred representations might be suboptimal or even detrimental.

Edge Cases

In very specialized domains (like medical imaging with unique scanning protocols), a generic autoencoder might not be beneficial at all.

If your new dataset is tiny, fine-tuning can still lead to overfitting. Strategies like heavy regularization or data augmentation may be essential.

How do you select an appropriate activation function in an autoencoder?

The choice of activation function—ReLU, Sigmoid, Tanh, or others—can significantly influence learning dynamics and final reconstructions:

ReLU (Rectified Linear Unit)

Commonly used in the hidden layers of autoencoders due to efficient gradient flow (no saturation for positive inputs) and computational simplicity.

A potential issue is the “dying ReLU” problem, where a neuron stops activating because its pre-activation is negative for every input, so its gradient is zero and it never recovers. This might reduce model capacity if many neurons die.

Sigmoid and Tanh

Historically used in early autoencoders. They can saturate if inputs become large, leading to vanishing gradients.

However, for the final output layer in image-based autoencoders whose inputs are normalized to [0,1], a Sigmoid activation is common since it naturally bounds the output. Tanh might be used if data is normalized to [-1,1].

Leaky ReLU or ELU

Variants that mitigate dying ReLUs by allowing a small negative slope or exponential smoothing. These can improve gradient flow at the cost of a slightly more complex activation function.
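Written as scalar functions, the difference between these activations on negative inputs is easy to see. The default slope of 0.01 and alpha of 1.0 below are common choices, used here as assumptions.

```python
import math


def relu(x):
    """Zero for negative inputs, identity otherwise."""
    return max(0.0, x)


def leaky_relu(x, negative_slope=0.01):
    """Small linear slope for negative inputs, so the gradient is never zero."""
    return x if x > 0 else negative_slope * x


def elu(x, alpha=1.0):
    """Smooth exponential curve for negative inputs, saturating at -alpha."""
    return x if x > 0 else alpha * (math.exp(x) - 1.0)


for value in (-2.0, 0.5):
    print(value, relu(value), leaky_relu(value), round(elu(value), 4))
```

For positive inputs all three agree. Only the treatment of negative pre-activations differs, and that is where a plain ReLU unit can get stuck at zero.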

Pitfalls

Using unbounded activations (like pure linear or ReLU in the output layer) for data scaled between 0 and 1 can lead to suboptimal reconstructions. The network might produce values outside the valid range.

Overly saturating non-linearities in hidden layers can hamper training, especially if the data is not scaled properly.

Edge Cases

In certain domain-specific tasks (e.g., autoencoders for specialized data distributions), custom activation functions (like Swish or domain-specific designs) might yield better reconstructions or interpretability.

Always ensure your data preprocessing and output activation range are consistent. Using mismatched scales or ignoring normalization details is a common mistake.

Why might someone use a non-traditional reconstruction loss, such as a perceptual or adversarial loss, in an autoencoder?

Most autoencoders minimize pixel-level or direct numerical differences (e.g., MSE), but there are scenarios where these standard losses fail to capture human-perceived quality or high-level structure:

Perceptual Loss

Uses features extracted from a pre-trained network (e.g., VGG) to measure how similar two images are in a feature space rather than just raw pixel space. This can make reconstructions look sharper or more realistic.

A pitfall is reliance on the perceptual network’s biases. If the pre-trained network was trained on ImageNet, it might not “understand” domain-specific content well (e.g., satellite images, medical scans).

Adversarial Loss

In a GAN-style setup, the autoencoder’s output is passed to a discriminator, which tries to distinguish real data from reconstructed data. The autoencoder trains to fool the discriminator, often resulting in more realistic outputs.

The biggest challenge is training instability. GANs are known to be finicky, requiring careful hyperparameter tuning, and risk mode collapse (where the model outputs a narrow range of reconstructions).

Hybrid Loss Functions

Combining standard reconstruction loss with perceptual or adversarial terms can balance faithful reconstruction with high-level realism.

Edge cases arise if one loss dominates too strongly. For instance, weighting the adversarial loss too heavily can produce visually pleasing but semantically incorrect reconstructions.

Real-World Example

In super-resolution or denoising tasks, a simple MSE-based reconstruction can lead to blurry images. Perceptual or adversarial losses help produce sharper, more visually appealing results.

For text, a purely token-level difference might cause unnatural phrasing, so a language-model-based or adversarial objective may yield more coherent outputs.

Edge Cases

In anomaly detection, an autoencoder trained with a perceptual or adversarial loss might reconstruct anomalies convincingly, shrinking the reconstruction-error gap that detection relies on. Careful experimental validation is needed before production use.

How do autoencoders handle missing or incomplete data?

Handling missing data is a critical concern in real-world scenarios, where samples may have missing features or entire data segments:

Imputation as a Preprocessing Step

A common technique is to impute missing values (with a mean, median, or advanced method) before feeding them to the autoencoder. The model then operates on “complete” data.

Pitfall: If the imputation is naive, you might introduce biases. The autoencoder learns from artificially completed data, potentially ignoring or corrupting the underlying distribution.

Masking Techniques

Modify the autoencoder to accept a mask indicating which inputs are missing. The network then reconstructs missing values. This approach is often used in denoising autoencoders or partial convolution frameworks for images.

A subtle edge case is how to handle large contiguous blocks of missing data. If entire sections of an image or sequence are missing, the model might guess incorrectly if it hasn’t been trained with enough similar patterns.

Loss Function Adaptations

When only part of the input is present, one might compute reconstruction error only on the known parts. Alternatively, if the goal is to predict missing parts, the loss focuses on those predicted components.

A potential challenge is ensuring the network does not simply memorize typical fill-in patterns without genuinely learning a robust representation.
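Restricting the loss to known entries can be sketched with a binary mask. The function below is an illustrative helper, assuming missing values have been replaced by a placeholder (such as 0.0) beforehand, since NaNs would propagate through the arithmetic.

```python
import numpy as np


def masked_mse(x, x_hat, observed_mask):
    """MSE over observed entries only; the mask is 1 where the value is known."""
    mask = np.asarray(observed_mask, dtype=float)
    squared_error = (np.asarray(x_hat) - np.asarray(x)) ** 2
    # Guard against an all-missing batch by dividing by at least 1.
    return float(np.sum(squared_error * mask) / max(np.sum(mask), 1.0))


# Hypothetical sample: the middle feature is missing (0.0 is only a placeholder).
x = np.array([[1.0, 0.0, 3.0]])
mask = np.array([[1, 0, 1]])
x_hat = np.array([[1.5, 9.0, 3.0]])

loss = masked_mse(x, x_hat, mask)
print(loss)  # 0.125: the prediction for the missing entry is ignored
```

To train the model to predict missing parts instead, the same function works with the mask inverted over entries that were deliberately hidden but whose true values are known.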

Real-World Example

In healthcare, patient records often have missing tests or measurements. A specialized autoencoder can attempt to reconstruct the missing measurements for better predictive analytics.

In sensor networks, intermittent sensor failures lead to partial data. A well-trained autoencoder can fill the gaps or flag suspicious missing sections.

Edge Cases

If the proportion of missing data is too large, the autoencoder might not have enough context to infer the correct values.

If missingness is not random but systematically biased (e.g., only certain types of data are missing), the autoencoder might learn an unrealistic reconstruction policy.

How do we integrate constraints or domain knowledge into an autoencoder?

In many fields, certain domain constraints must be honored (e.g., physics, chemistry, finance). A standard autoencoder might generate reconstructions that violate these constraints:

Hard Constraints in the Architecture

Design the architecture so certain invariants are always upheld. For example, if the sum of certain outputs must remain constant, you can enforce that constraint in a custom layer or use a specialized activation function.

A pitfall is that strictly enforcing constraints in the architecture might reduce flexibility, making the model less able to capture real-world variations that don’t strictly follow the theoretical constraint.
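As a sketch of a hard constraint, the output layer below maps unconstrained decoder outputs to values that always sum to a fixed total by rescaling a softmax. It also forces outputs to be non-negative, which is an extra assumption that may not suit every domain. The function name is illustrative.

```python
import numpy as np

def sum_constrained_output(logits, total=1.0):
    """Map raw decoder outputs to non-negative values whose rows sum to `total`."""
    shifted = logits - logits.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(shifted)
    weights = weights / weights.sum(axis=-1, keepdims=True)  # softmax: rows sum to 1
    return total * weights

raw = np.array([[2.0, -1.0, 0.5],
                [0.0, 0.0, 0.0]])
out = sum_constrained_output(raw, total=100.0)
print(out.sum(axis=1))  # each row sums to 100 up to floating-point error
```

Because the constraint is built into the layer, no reconstruction can violate it, which is exactly the loss of flexibility the pitfall above refers to.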

Soft Constraints via Regularization

Add a term to the loss function that penalizes outputs or latent variables that break the domain’s rules. For instance, in fluid dynamics, penalize reconstructions that violate flow continuity.

Careful balancing of the reconstruction term and the constraint penalty is crucial. If the penalty is too large, reconstruction fidelity might suffer; if too small, constraints might still be violated.
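The trade-off can be made concrete with a small sketch. It uses a hypothetical conservation rule (the features of each reconstruction should sum to the same total as the input) as a stand-in for a real domain constraint. The weight `lam` and the rule itself are assumptions for illustration.

```python
import numpy as np

def constrained_loss(x, x_hat, lam=0.1):
    """Reconstruction MSE plus a weighted penalty for breaking a sum-conservation rule."""
    recon = float(np.mean((x - x_hat) ** 2))
    violation = x_hat.sum(axis=1) - x.sum(axis=1)  # zero when the rule holds
    penalty = float(np.mean(violation ** 2))
    return recon + lam * penalty, recon, penalty

x = np.array([[1.0, 2.0, 3.0]])
x_hat = np.array([[1.0, 2.0, 4.0]])
total, recon, penalty = constrained_loss(x, x_hat, lam=0.1)
```

Logging `recon` and `penalty` separately during training makes it easier to see which term dominates and to adjust `lam` accordingly.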

Physics-Informed Neural Networks (PINNs)

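In advanced applications (e.g., engineering simulations), the autoencoder might embed partial differential equations into its loss function, typically as a penalty on the equation residual. This encourages reconstructions to adhere to physical laws, although a penalty alone does not guarantee it.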

These models can be computationally heavy to train, and domain knowledge must be accurately translated into differentiable constraints.

Edge Cases

Real-world data may itself violate so-called “constraints” due to noise or measurement errors. The autoencoder might over-constrain the data, ignoring valid outliers that represent true anomalies or new phenomena.

Overly complex or strict domain constraints can hamper the autoencoder’s capacity to learn creative or unexpected representations.

How could you adapt an autoencoder for multi-modal data (e.g., images + text)?

Multi-modal data integrates multiple distinct data types (like an image paired with a text description). Autoencoders can be extended to handle such data, though it introduces additional complexity.

Shared Latent Space

One approach is to encode each modality into a single shared latent space. The decoder for each modality attempts to reconstruct that modality from the shared latent representation.

The challenge is ensuring the representation captures the critical features for all modalities. If one modality dominates the training error, the model might ignore subtleties in the other modality.

Separate Encoders and Decoders

Each modality might have its own encoder and decoder, with a fusion layer or shared bottleneck. This architecture can let each modality learn specialized features while still enforcing a joint representation in the bottleneck.

Pitfalls include deciding how to weight each modality’s reconstruction error. If you weight them equally but the modalities have significantly different dimensionalities or scales, one might overwhelm the other.
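One simple way to keep a high-dimensional modality from dominating is to average each modality's error per element before applying the weights. The sketch below assumes flattened image and text vectors; `w_img` and `w_txt` are hypothetical tuning knobs.

```python
import numpy as np

def multimodal_loss(img, img_hat, txt, txt_hat, w_img=1.0, w_txt=1.0):
    """Weighted sum of per-element MSEs, so dimensionality alone does not set the balance."""
    img_loss = float(np.mean((img - img_hat) ** 2))
    txt_loss = float(np.mean((txt - txt_hat) ** 2))
    return w_img * img_loss + w_txt * txt_loss, img_loss, txt_loss

# Same per-element error in both modalities, but 1000 image features vs 10 text features.
img, img_hat = np.zeros((2, 1000)), np.full((2, 1000), 0.1)
txt, txt_hat = np.zeros((2, 10)), np.full((2, 10), 0.1)
total, img_loss, txt_loss = multimodal_loss(img, img_hat, txt, txt_hat)
```

Had the errors been summed rather than averaged, the image term would be 100 times larger than the text term in this example, despite identical per-element error.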

Cross-Modal Reconstruction

Some advanced models force the autoencoder to reconstruct text from image embeddings (and vice versa), encouraging a more aligned latent representation. This technique can be used for tasks like image captioning or text-to-image retrieval.

A subtle edge case is that if the modalities are not perfectly aligned or the training data has noise (inconsistent or incorrect text labels for images), the model might learn spurious correlations.

Real-World Example

Consider medical imaging with associated patient text notes: a multi-modal autoencoder might learn a richer representation that combines visual evidence with textual context.

In audio-visual tasks, combining speech signals with corresponding video frames can improve speech enhancement or lip-reading systems.

Edge Cases

If one modality frequently has missing data (e.g., incomplete text tags), the training might skew toward the modality that is more consistently present.

Domain-specific considerations for scaling, alignment, and synchronization between modalities become crucial. A mismatch in sampling rates between audio and video, for instance, can severely degrade the model’s performance.

How do we ensure stability when training very deep autoencoders?

As autoencoders grow deeper, training stability can become a major issue:

Gradient Vanishing or Exploding

Deep networks are prone to vanishing or exploding gradients, especially if the architecture is not well designed (e.g., no batch normalization or poor weight initialization).

Proper initialization (He or Xavier), careful selection of activation functions, and normalization layers are crucial to maintain stable gradient flow.
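For reference, the two initializers mentioned above can be sketched in NumPy as follows. The layer sizes are arbitrary; He initialization is usually paired with ReLU-like activations and Xavier with tanh or sigmoid.

```python
import numpy as np

def he_normal(fan_in, fan_out, rng):
    """He initialization: zero-mean normal with variance 2 / fan_in."""
    return rng.normal(0.0, np.sqrt(2.0 / fan_in), size=(fan_in, fan_out))

def xavier_uniform(fan_in, fan_out, rng):
    """Xavier (Glorot) initialization: uniform on [-limit, limit], variance 2 / (fan_in + fan_out)."""
    limit = np.sqrt(6.0 / (fan_in + fan_out))
    return rng.uniform(-limit, limit, size=(fan_in, fan_out))

rng = np.random.default_rng(0)
w_he = he_normal(512, 256, rng)
w_xavier = xavier_uniform(512, 256, rng)
```

Both schemes scale the weights by layer width so that activation and gradient magnitudes stay roughly constant from layer to layer at the start of training.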

Skip Connections or Residual Blocks

Incorporating skip connections (as done in ResNets) can help the model learn identity mappings more easily and reduce gradient-related issues.

A pitfall is that skip connections can let the network bypass the intended bottleneck, potentially undermining the compression if not placed thoughtfully.

Learning Rate Scheduling and Optimizer Choice

Using a dynamically adjusted learning rate (e.g., ReduceLROnPlateau or cosine annealing) can stabilize training.

Adam is a popular choice, but can sometimes lead to unstable dynamics in very deep networks. SGD with momentum, RMSProp, or newer optimizers might be more reliable depending on the dataset.
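As an illustration of a dynamically adjusted learning rate, here is a minimal cosine annealing schedule written from its standard formula. The default `lr_max` and `lr_min` values are placeholders, not recommendations.

```python
import math

def cosine_annealing_lr(step, total_steps, lr_max=1e-3, lr_min=1e-5):
    """Decay the learning rate from lr_max to lr_min along half a cosine period."""
    progress = min(step, total_steps) / total_steps  # clamp so the rate stays at lr_min afterwards
    return lr_min + 0.5 * (lr_max - lr_min) * (1.0 + math.cos(math.pi * progress))

schedule = [cosine_annealing_lr(s, 100) for s in (0, 50, 100)]
```

The schedule starts at `lr_max`, passes through the midpoint of the two rates halfway through training, and ends at `lr_min`. Deep learning frameworks ship their own schedulers, which are usually preferable to a hand-rolled version.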

Regularization Overheads

Weight decay, dropout, or other forms of regularization help control overfitting but must be used carefully so as not to hamper the capacity of the deep network.

Excessive dropout can distort the latent space or hamper the model’s ability to reconstruct.

Edge Cases

In extremely deep architectures, even minor hyperparameter missteps (like a learning rate that is slightly too high) can cause the loss to diverge.

Memory constraints might also limit batch sizes or the number of channels in each layer, possibly affecting model expressiveness.

How might pruning or compression techniques be used on an autoencoder after training?

Pruning or compression can reduce model size and speed up inference while retaining most reconstruction accuracy:

Weight Pruning

Weights in the network that are close to zero can be pruned, effectively eliminating unnecessary parameters. This can be done iteratively with fine-tuning after each pruning step.

The pitfall is that too aggressive pruning can degrade reconstruction quality, especially if critical weights are removed.
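A minimal sketch of one magnitude-pruning step on a single weight matrix is shown below. In an iterative scheme, this step would alternate with fine-tuning while the returned mask keeps pruned weights at zero. The function name and the 50% sparsity level are illustrative.

```python
import numpy as np

def magnitude_prune(weights, sparsity):
    """Zero out (at least) the `sparsity` fraction of weights with the smallest magnitude."""
    k = int(sparsity * weights.size)
    if k == 0:
        return weights.copy(), np.ones(weights.shape, dtype=bool)
    threshold = np.sort(np.abs(weights), axis=None)[k - 1]  # k-th smallest magnitude
    mask = np.abs(weights) > threshold  # ties at the threshold are pruned as well
    return weights * mask, mask

w = np.array([[0.05, -0.8, 0.3],
              [-0.01, 0.6, -0.2]])
pruned, mask = magnitude_prune(w, sparsity=0.5)
```

Here the three smallest-magnitude weights (0.05, -0.01, and -0.2) are zeroed, and the other three are left unchanged.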

Knowledge Distillation

A smaller “student” autoencoder is trained to mimic the outputs of a larger “teacher” autoencoder. This can yield a compact model with near-equivalent performance.

Ensuring the student’s architecture is sufficiently capable is crucial. If it’s too small, it may not replicate the teacher’s performance well.
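A distillation objective for autoencoders can be sketched as a blend of two terms: matching the teacher's reconstruction and matching the original input. Mixing in the input term and the weight `alpha` are assumptions of this sketch; the description above only requires the student to mimic the teacher.

```python
import numpy as np

def distillation_loss(x, student_out, teacher_out, alpha=0.5):
    """Blend 'mimic the teacher' and 'reconstruct the input' objectives."""
    mimic = float(np.mean((student_out - teacher_out) ** 2))
    recon = float(np.mean((student_out - x) ** 2))
    return alpha * mimic + (1.0 - alpha) * recon

x = np.zeros((2, 3))
teacher_out = np.full((2, 3), 0.1)
student_out = np.full((2, 3), 0.2)
loss = distillation_loss(x, student_out, teacher_out, alpha=0.5)
```

Setting `alpha=1.0` recovers pure teacher mimicry, while `alpha=0.0` trains the student as an ordinary autoencoder.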

Quantization

Converting floating-point weights to lower precision (e.g., 8-bit integers) can speed up inference and reduce memory usage.

A major edge case is that some tasks are very sensitive to small quantization errors—this might degrade reconstruction or hamper anomaly detection sensitivity.
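To see where quantization error comes from, the sketch below applies symmetric per-tensor 8-bit quantization to a weight matrix and maps it back to floating point. This is a simplified scheme chosen for illustration; production toolchains typically add calibration and per-channel scales.

```python
import numpy as np

def quantize_int8(weights):
    """Symmetric per-tensor quantization: w is approximated by scale * q, q in [-127, 127]."""
    scale = float(np.abs(weights).max()) / 127.0
    if scale == 0.0:
        scale = 1.0  # all-zero tensor: any scale works
    q = np.clip(np.round(weights / scale), -127, 127).astype(np.int8)
    return q, scale

def dequantize(q, scale):
    return q.astype(np.float32) * scale

rng = np.random.default_rng(0)
w = rng.normal(size=(64, 32))
q, scale = quantize_int8(w)
max_err = float(np.abs(w - dequantize(q, scale)).max())
```

The rounding error per weight is at most about half of `scale`, so a single large outlier weight inflates the error for every other weight in the tensor. This is one reason some models are more sensitive to quantization than others.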

Structured Pruning

Instead of removing individual weights, entire filters or neurons can be pruned. This tends to yield larger practical speedups, because the remaining weights form smaller dense matrices that standard hardware handles efficiently.

However, structured pruning can drastically alter network capacity. If a pruned filter was crucial for capturing a certain feature, you might see a larger drop in performance compared to unstructured pruning.
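For a fully connected layer, structured pruning can be sketched as dropping whole hidden units and slicing the adjacent weight matrices to match. Ranking units by the L2 norm of their incoming weights is one common heuristic, assumed here for illustration.

```python
import numpy as np

def prune_hidden_units(w_in, b_in, w_out, keep_ratio=0.5):
    """Keep the hidden units with the largest incoming-weight L2 norm.

    w_in: (d_in, h), b_in: (h,), w_out: (h, d_out).
    """
    n_keep = max(1, int(round(keep_ratio * w_in.shape[1])))
    norms = np.linalg.norm(w_in, axis=0)         # one norm per hidden unit
    keep = np.sort(np.argsort(norms)[-n_keep:])  # indices of kept units, in original order
    return w_in[:, keep], b_in[keep], w_out[keep, :], keep

w_in = np.ones((4, 6)) * np.array([0.1, 2.0, 0.2, 3.0, 0.05, 1.0])
b_in = np.zeros(6)
w_out = np.ones((6, 2))
w_in_p, b_in_p, w_out_p, keep = prune_hidden_units(w_in, b_in, w_out, keep_ratio=0.5)
```

The pruned layer is a smaller dense layer, so the speedup needs no sparse kernels. If one of the dropped units carried an important feature, however, it is removed entirely.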

Real-World Example

When deploying a denoising autoencoder on mobile devices, compression enables faster on-device inference.

In industrial settings, compressed autoencoders can handle high throughput with limited GPU memory, enabling real-time anomaly detection on manufacturing lines.

How do we handle highly imbalanced data in an autoencoder training context?

Imbalanced data—where certain classes or types of data are far more common than others—poses a unique challenge for reconstruction-based learning:

Overemphasis on Majority Class

The autoencoder might optimize reconstruction primarily for the dominant class, ignoring minority classes or anomalies.

In anomaly detection tasks, this is actually desirable if you only train on the “normal” majority class. But if multiple classes exist and one is rare but also “normal,” the autoencoder may fail to represent it adequately.

Weighted or Focal Loss Approaches

Incorporating weighting factors in the reconstruction loss can encourage the model to pay more attention to underrepresented data points.

A pitfall is that focusing too heavily on minority data might degrade performance on the majority class, so balancing is key.
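One simple weighting scheme gives each sample a weight inversely proportional to the size of its group, then takes a weighted average of per-sample reconstruction errors. The sketch below assumes a group or class id is available for each sample, which is not always true in unsupervised settings.

```python
import numpy as np

def inverse_frequency_weights(group_ids):
    """Weight each sample by n_samples / (n_groups * group_count)."""
    ids, counts = np.unique(group_ids, return_counts=True)
    per_group = len(group_ids) / (len(ids) * counts)
    lookup = dict(zip(ids.tolist(), per_group.tolist()))
    return np.array([lookup[g] for g in group_ids.tolist()])

def weighted_mse(x, x_hat, sample_weights):
    """Weighted average of per-sample mean squared errors."""
    per_sample = np.mean((x - x_hat) ** 2, axis=1)
    return float(np.sum(sample_weights * per_sample) / np.sum(sample_weights))

group_ids = np.array([0, 0, 0, 1])  # three majority samples, one minority sample
x = np.zeros((4, 2))
x_hat = np.array([[1.0, 1.0], [1.0, 1.0], [1.0, 1.0], [3.0, 3.0]])
weights = inverse_frequency_weights(group_ids)
loss = weighted_mse(x, x_hat, weights)
```

In this example the unweighted mean error is 3.0, while the weighted loss is 5.0, because the poorly reconstructed minority sample now counts as much as the three majority samples combined.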

Data Augmentation

If feasible, upsample or synthetically generate minority-class data to give the model more examples of these rare instances.

However, synthetic data might not reflect all real variations, and if done poorly, it can mislead the autoencoder into learning unrealistic patterns.

Edge Cases

In real-world datasets such as fraud detection, the minority class is often extremely small (<1%). Heavily oversampling or upweighting such a small set of samples can lead the model to overfit to them.

In high-dimensional settings, minority samples might be so sparse that the autoencoder cannot generalize from them without advanced techniques or additional regularization.

Could autoencoders fail to converge if the dataset is too large or too heterogeneous?

Yes, autoencoders can struggle under certain conditions of data size and heterogeneity:

Overwhelming Complexity

If the data includes vastly different domains (e.g., images of natural scenes, sketches, medical scans, etc.) with no common style, a single autoencoder might find it difficult to learn a unified representation.

A pitfall is that the network can converge to blurry, averaged reconstructions that are suboptimal for every subgroup in the dataset.

Capacity Limitations

Even a large network might not have enough capacity to capture all modes of very heterogeneous data. The training might stall with high reconstruction error.

Alternatively, if you keep increasing capacity, overfitting or excessive training time might become problematic.

Curriculum Learning

One strategy is to first train on subsets of data with narrower distribution, then gradually include more diverse examples. This can help the model incrementally incorporate complexity.

Improper curriculum scheduling can result in forgetting earlier data modes or being unable to effectively integrate new modes.
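A curriculum can be sketched as a sequence of growing training subsets. The example below assumes some per-sample difficulty score is available (how to compute it is domain-specific and not covered here). Each stage keeps all earlier samples, which is one simple guard against forgetting earlier modes.

```python
import numpy as np

def curriculum_stages(difficulty, n_stages=3):
    """Return index arrays of growing size, easiest samples first."""
    order = np.argsort(difficulty, kind="stable")
    stages = []
    for s in range(1, n_stages + 1):
        n = int(np.ceil(len(order) * s / n_stages))
        stages.append(order[:n])  # each stage is a superset of the previous one
    return stages

difficulty = np.array([0.9, 0.1, 0.5, 0.3, 0.7, 0.2])  # hypothetical scores
stages = curriculum_stages(difficulty, n_stages=3)
```

Training would then run for some epochs on `stages[0]`, continue on `stages[1]`, and finish on the full dataset in `stages[2]`.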

Edge Cases

Large-scale datasets compiled from diverse sources often contain noisy samples or mislabeled data. Although a plain autoencoder does not use labels, it is trained to reproduce its inputs, so it might effectively “learn” the input noise if there is enough of it, making the representation less meaningful.

If the dataset includes truly contradictory samples (like corrupted images or random artifacts), the network might fail to converge unless these are filtered or treated properly.

How can autoencoders integrate with other unsupervised or self-supervised methods?

Autoencoders are typically unsupervised, but they can be combined with other techniques for more powerful learning:

Contrastive Learning

Pair autoencoder reconstruction with a contrastive objective that encourages different augmentations of the same data point to map to similar latent representations. This can yield more robust features.

Pitfall: Combining multiple losses (reconstruction + contrastive) can be challenging to balance. If the contrastive term is too strong, the network might ignore reconstruction needs.
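The combined objective can be sketched with an InfoNCE-style contrastive term, where `z_a` and `z_b` are latent codes of two augmentations of the same batch. InfoNCE is one common contrastive loss, chosen here as an assumption. The balancing weight `beta` and the temperature are hypothetical hyperparameters.

```python
import numpy as np

def info_nce(z_a, z_b, temperature=0.1):
    """Row i of z_a should be most similar to row i of z_b among all rows of z_b."""
    z_a = z_a / np.linalg.norm(z_a, axis=1, keepdims=True)
    z_b = z_b / np.linalg.norm(z_b, axis=1, keepdims=True)
    logits = z_a @ z_b.T / temperature                   # scaled cosine similarities
    logits = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    log_prob = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    return float(-np.mean(np.diag(log_prob)))            # matching pairs lie on the diagonal

def combined_loss(x, x_hat, z_a, z_b, beta=0.1):
    """Reconstruction MSE plus a weighted contrastive term."""
    recon = float(np.mean((x - x_hat) ** 2))
    return recon + beta * info_nce(z_a, z_b)

z = np.eye(4)
aligned = info_nce(z, z)                        # each code matches its own pair
shuffled = info_nce(z, np.roll(z, 1, axis=0))   # pairs deliberately mismatched
```

The aligned pairs give a much lower contrastive loss than the shuffled ones. Tracking the two terms of `combined_loss` separately helps reveal when the contrastive term starts to dominate.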

Clustering + Autoencoding

Methods like Deep Clustering networks pair an autoencoder with a clustering objective, aligning latent space with cluster assignments while still reconstructing input data.

An edge case is that if the cluster objective dominates, the network might form tight clusters that hamper reconstruction of transitional or borderline samples.

Generative Adversarial Networks (GANs)

An autoencoder can be augmented with a discriminator, forming an adversarial autoencoder. The discriminator pushes the distribution of latent codes toward a chosen prior (such as a Gaussian), so that decoding samples drawn from that prior produces plausible outputs and the autoencoder behaves more like a generative model.

Training complexity and stability are common pitfalls of adversarial frameworks.

Self-Supervised Label Generation

In scenarios where partial labels exist, the autoencoder can help refine or generate pseudo-labels (e.g., by clustering in latent space). Those labels can then supervise further tasks.

Risk arises if the pseudo-labeling is inaccurate. You can inadvertently amplify labeling errors in subsequent stages.

Edge Cases

Overcomplicating the architecture with multiple unsupervised/self-supervised objectives might yield diminishing returns if the domain or data doesn’t require such complexity.

Debugging these combined methods is harder—if performance degrades, determining which component or objective is the culprit can be non-trivial.