ML Interview Q Series: How would you develop a lead scoring framework to predict whether a prospective business will upgrade to an enterprise customer?

Short Compact solution

Clarify the fundamental requirements of the lead scoring system by determining whether it will be an internal solution or a tool for wider external use, establishing any necessary business constraints, and identifying the data sources (CRM, marketing databases, etc.). Once requirements are clear, focus on assembling high-value features. Examples include information on the company’s industry and scale (firmographics), the degree of engagement with marketing campaigns, the number of interactions with sales, and the specifics of any deals under discussion (like the length of the contract or the scope of licenses involved).

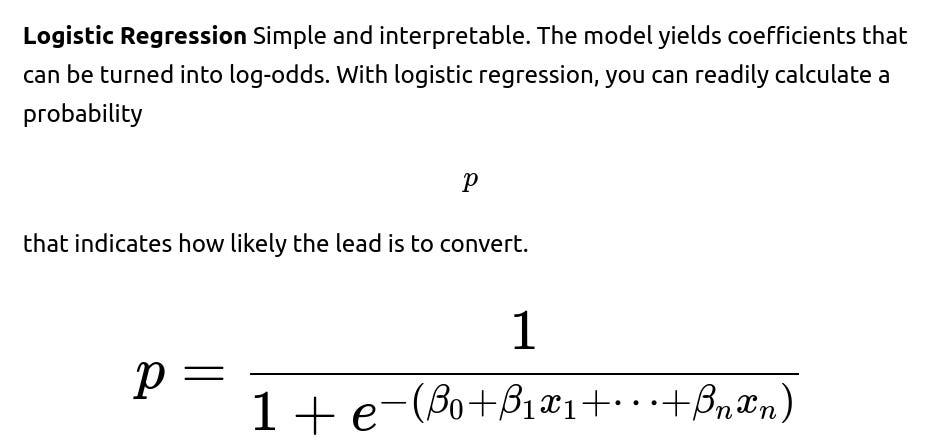

After gathering and cleaning the data, pick a straightforward yet interpretable model such as logistic regression to begin with. If interaction effects or high-dimensional data pose a challenge, other candidates—like neural networks, SVMs, or tree-based models—might be more suitable. Tree-based methods (for example, random forests or gradient boosting) often reveal which features carry the most weight for the predictions. Finally, ensure proper monitoring of the model in production. As the market, product offerings, or customers evolve, the model should be retrained or adjusted periodically to prevent performance degradation.

Comprehensive Explanation

Clarifying Requirements and Scope

The first step in building this type of predictive system is to clarify precisely what stakeholders mean by “becoming an enterprise customer.” Often, this might imply that the deal size crosses a certain revenue threshold, or that the client’s purchase meets predefined enterprise-level criteria in the product suite. It is also important to confirm whether this scoring is purely for in-house teams or whether it could be part of a larger product offering. Clarifying these points narrows the scope of the data you need and the features to be extracted.

Data Sources and Preprocessing

Data typically comes from multiple internal systems, such as a CRM, marketing automation platforms, or usage analytics. Common data categories include:

Firmographic Data Details about the prospective company: industry, revenue range, total number of employees, etc.

Marketing Engagement Records of how the lead’s employees have responded to marketing content: whether they attended webinars, opened emails, downloaded white papers, or clicked on ads.

Sales Interactions The volume and frequency of sales calls, meetings, demos, and any other communication between the prospective client and the sales team.

Deal Information If there is an ongoing negotiation, how large is the deal in terms of revenue, number of seats, length of the contract, and which products or add-on services are included?

Preprocessing tasks will involve combining these disparate data sources into a unified schema, handling missing values, converting categorical variables into numeric representations, and removing any outliers or corrupted records. Sometimes, data from external providers might be integrated to enhance coverage of firmographics.
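
As a minimal sketch of these preprocessing steps, the snippet below imputes missing numeric values, fills missing categories with a placeholder, and one-hot encodes the categorical column using scikit-learn. The table `leads` and its column names and values are hypothetical and exist only for illustration.

```python
import numpy as np
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.impute import SimpleImputer
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import OneHotEncoder, StandardScaler

# Hypothetical unified lead table; all values are made up for illustration.
leads = pd.DataFrame({
    "industry": ["software", "retail", None, "software"],
    "num_employees": [120, 5000, 40, np.nan],
    "marketing_clicks": [14, 2, np.nan, 7],
})

# Treat a missing industry as its own explicit category.
leads["industry"] = leads["industry"].fillna("unknown")

numeric_cols = ["num_employees", "marketing_clicks"]
categorical_cols = ["industry"]

preprocess = ColumnTransformer([
    # Numeric columns: median imputation, then standardization.
    ("num", Pipeline([
        ("impute", SimpleImputer(strategy="median")),
        ("scale", StandardScaler()),
    ]), numeric_cols),
    # Categorical columns: one-hot encoding; unseen categories become all zeros.
    ("cat", OneHotEncoder(handle_unknown="ignore"), categorical_cols),
])

X = preprocess.fit_transform(leads)
print(X.shape)
```

Wrapping the steps in a single transformer means the same imputation values and category list learned on training data are reused at scoring time.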

Feature Engineering and Selection

Feature engineering can greatly affect model performance. Potential transformations or derived features include:

Aggregated Engagement Metrics Summarize how frequently and recently the lead engaged with marketing or sales. Recency can be crucial since older interactions may reflect less interest.

Interaction Ratios The proportion of employees from that lead’s company who interacted with marketing or sales, relative to the total workforce. A higher ratio might indicate broad interest across teams.

Historical Purchase Patterns If the prospective company has smaller existing contracts, the jump to a larger enterprise deal may be more likely if they have historically renewed or upgraded in the past.
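
The engagement and ratio features above could be derived roughly as follows. This is a sketch under assumed inputs: the `events` log, the `firmo` table, and the scoring date `as_of` are all hypothetical.

```python
import pandas as pd

# Hypothetical event log: one row per marketing or sales touch.
events = pd.DataFrame({
    "lead_id": [1, 1, 1, 2, 2],
    "contact_id": ["a", "b", "a", "c", "c"],
    "event_date": pd.to_datetime(
        ["2024-01-05", "2024-02-20", "2024-03-01", "2023-11-10", "2023-12-01"]
    ),
})
# Hypothetical firmographic table with total headcount per lead.
firmo = pd.DataFrame({"lead_id": [1, 2], "num_employees": [50, 10]}).set_index("lead_id")

as_of = pd.Timestamp("2024-03-31")  # scoring date; features should only use earlier events

features = events.groupby("lead_id").agg(
    n_events=("event_date", "size"),        # frequency of engagement
    last_event=("event_date", "max"),       # used to compute recency
    n_contacts=("contact_id", "nunique"),   # distinct people who engaged
)
features["days_since_last_event"] = (as_of - features["last_event"]).dt.days
# Share of the workforce that has interacted (aligned on lead_id).
features["contact_ratio"] = features["n_contacts"] / firmo["num_employees"]
features = features.drop(columns="last_event")
print(features)
```

Fixing an explicit `as_of` date helps avoid leakage, since every feature is computed only from information available before the score is produced.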

Model Choice

A lead scoring algorithm can be formulated as a binary classification problem: predicting whether or not the prospective lead is likely to purchase an enterprise-level product. Options for modeling include:

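Logistic Regression A straightforward, interpretable starting point whose coefficients show the direction and relative strength of each feature’s effect. Correlated inputs or a very small dataset can cause issues, but logistic regression remains a reliable baseline.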

Tree-Based Models Random forests or gradient boosting (e.g., XGBoost, LightGBM) frequently excel when there are complex interactions or non-linearities. They also can automatically gauge feature importance, which helps stakeholders understand which factors most strongly influence the lead score.

Neural Networks or SVM These can handle large amounts of features and complex patterns but at the cost of interpretability and typically a higher need for data. They may be overkill unless you have a very high-dimensional feature space or sequential data signals.

Model Evaluation

After training, evaluate model performance using appropriate metrics such as AUC (Area Under the ROC Curve), Precision-Recall AUC, or F1-score. The chosen metric should fit the business objective—if the cost of a false positive is high, one may lean more heavily on precision; if missing a worthwhile lead is riskier, recall might be more relevant.
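
To make the metrics concrete, here is a small sketch that fits a logistic regression baseline on synthetic, imbalanced data and reports ROC AUC, average precision (a common summary of the precision-recall curve), and F1 at a 0.5 threshold. The data comes from `make_classification` and is only a stand-in for real lead records.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import average_precision_score, f1_score, roc_auc_score
from sklearn.model_selection import train_test_split

# Synthetic stand-in for lead data: roughly 10% positives.
X, y = make_classification(
    n_samples=2000, n_features=10, weights=[0.9, 0.1], random_state=0
)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, stratify=y, random_state=0
)

baseline = LogisticRegression(max_iter=1000).fit(X_train, y_train)
scores = baseline.predict_proba(X_test)[:, 1]

roc_auc = roc_auc_score(y_test, scores)            # threshold-free ranking quality
pr_auc = average_precision_score(y_test, scores)   # more sensitive to the rare positive class
f1 = f1_score(y_test, (scores >= 0.5).astype(int)) # depends on the chosen threshold
print(f"ROC AUC={roc_auc:.3f}  PR AUC={pr_auc:.3f}  F1@0.5={f1:.3f}")
```

ROC AUC and average precision depend only on how leads are ranked, while F1 changes with the threshold, so the threshold itself should be set from the relative cost of false positives and missed leads.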

Deployment and Maintenance

The model’s output must be integrated into your sales or marketing workflow, such as a CRM interface where the predicted score is visible. Because lead behavior and market conditions evolve, it is critical to set up continuous monitoring:

Track performance and check if the distribution of new leads shifts drastically from what the model is accustomed to.

Revisit and retrain the model frequently if performance indicators show drift.

Consider a champion-challenger framework, where the champion model is your current production system, and you test new models (the challenger) against it in a safe environment before a full rollout.
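
One way to check whether the distribution of new leads has shifted is a population stability index (PSI) per feature. The sketch below is one possible implementation, not a prescribed method; the function name, bin count, and simulated samples are assumptions, and the alert threshold is a judgment call for the team.

```python
import numpy as np

def population_stability_index(expected, actual, n_bins=10):
    """PSI between a reference sample and a new sample of one numeric feature."""
    # Bin edges come from quantiles of the reference (training-time) sample.
    edges = np.quantile(expected, np.linspace(0, 1, n_bins + 1))
    # Use only interior edges so out-of-range values fall into the end bins.
    e_idx = np.searchsorted(edges[1:-1], expected)
    a_idx = np.searchsorted(edges[1:-1], actual)
    e_frac = np.bincount(e_idx, minlength=n_bins) / len(expected)
    a_frac = np.bincount(a_idx, minlength=n_bins) / len(actual)
    # A small floor avoids log(0) for empty bins.
    e_frac = np.clip(e_frac, 1e-6, None)
    a_frac = np.clip(a_frac, 1e-6, None)
    return float(np.sum((a_frac - e_frac) * np.log(a_frac / e_frac)))

rng = np.random.default_rng(0)
reference = rng.normal(0.0, 1.0, 5000)   # feature values seen at training time
same = rng.normal(0.0, 1.0, 5000)        # new leads from the same distribution
shifted = rng.normal(1.0, 1.0, 5000)     # new leads whose mean has moved

psi_same = population_stability_index(reference, same)
psi_shifted = population_stability_index(reference, shifted)
print(f"PSI (no shift)={psi_same:.4f}  PSI (shifted)={psi_shifted:.4f}")
```

A value near zero suggests the new sample looks like the reference, while a clearly larger value is a prompt to investigate and possibly retrain.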

Follow-Up Question 1

How do you handle an imbalanced dataset when many more leads do not convert to enterprise customers than those that do?

When predicting the likelihood of enterprise-level conversion, it is common to deal with a relatively small fraction of positive instances. A heavily skewed label distribution can bias standard training algorithms toward the majority class, so the model may rarely predict a conversion at all. Techniques to address this include oversampling the minority class (e.g., SMOTE, which synthesizes new minority examples), undersampling the majority class, or applying class weights in the objective function of models like random forests or logistic regression. Resampling should be applied only to the training split so that evaluation data keeps the real class balance. Evaluating with metrics robust to imbalance, such as Precision-Recall AUC, is usually recommended. You can also adjust the decision threshold based on your business needs, favoring recall or precision depending on which error type is costlier.
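
As an illustration of class weighting plus threshold adjustment, the sketch below trains a weighted logistic regression on synthetic data and picks the highest threshold that still reaches a target recall. The 80% recall target is a hypothetical business requirement, and the data is simulated.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import precision_recall_curve
from sklearn.model_selection import train_test_split

# Synthetic data with about 5% positives.
X, y = make_classification(
    n_samples=3000, n_features=10, weights=[0.95, 0.05], random_state=1
)
X_train, X_val, y_train, y_val = train_test_split(
    X, y, test_size=0.3, stratify=y, random_state=1
)

# class_weight="balanced" reweights the loss inversely to class frequency.
clf = LogisticRegression(class_weight="balanced", max_iter=1000).fit(X_train, y_train)
probs = clf.predict_proba(X_val)[:, 1]

precision, recall, thresholds = precision_recall_curve(y_val, probs)
target_recall = 0.80  # hypothetical business requirement

# precision/recall have one more entry than thresholds; drop the final point.
meets_target = recall[:-1] >= target_recall
chosen = thresholds[meets_target].max()  # highest threshold that still meets the target

achieved_recall = ((probs >= chosen) & (y_val == 1)).sum() / (y_val == 1).sum()
print(f"threshold={chosen:.3f}  recall={achieved_recall:.3f}")
```

Choosing the threshold on a validation split rather than the test split keeps the final performance estimate honest.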

Follow-Up Question 2

Can you demonstrate a simple example of training a lead scoring model using Python?

```python
import pandas as pd
from sklearn.model_selection import train_test_split
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import roc_auc_score, classification_report

# Suppose we have a dataframe 'df' with columns:
# 'industry', 'revenue', 'num_employees', 'marketing_clicks', 'sales_calls', ...
# 'converted_to_enterprise' is our target variable: 1 for converted, 0 for not converted

# Example numeric or preprocessed data
X = df.drop('converted_to_enterprise', axis=1)
y = df['converted_to_enterprise']

# Train-test split
X_train, X_test, y_train, y_test = train_test_split(
    X, y,
    test_size=0.2,
    random_state=42,
    stratify=y,
)

# Random Forest model with class weights to handle imbalance
model = RandomForestClassifier(
    n_estimators=100,
    class_weight='balanced_subsample',
    random_state=42,
)
model.fit(X_train, y_train)

# Predictions and evaluation
y_pred = model.predict(X_test)
y_probs = model.predict_proba(X_test)[:, 1]

print("ROC AUC:", roc_auc_score(y_test, y_probs))
print("Classification Report:\n", classification_report(y_test, y_pred))
```

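In this example, we use a random forest classifier with class_weight='balanced_subsample', which weights classes inversely to their frequency within each tree’s bootstrap sample, to help alleviate label imbalance. The code assumes every feature column is already numeric, so categorical fields such as 'industry' must be encoded beforehand. We then measure how effectively the model distinguishes between positive and negative classes by computing the ROC AUC score, alongside a classification report that includes precision, recall, and F1-score.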

Follow-Up Question 3

What if you have minimal data on new leads and suspect concept drift over time?

Concept drift is common if user behavior or market conditions shift. For instance, a lead’s decision patterns could change if a new competitor enters the market. When dealing with limited historical data or changing behavior, you can:

Incorporate a sliding window approach or an online learning framework, where your model regularly re-trains on the most recent data.

Employ a feature store that ingests updated data from multiple sources, ensuring your training sets are continually refreshed.

Maintain a strong feedback loop with sales teams. If they notice that high-potential leads are being missed (or false positives keep appearing), incorporate these signals back into the retraining process.

Run periodic backtesting or holdout sets from recent months to examine whether the model’s predictions for leads from that period match actual outcomes.
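
The sliding-window and backtesting ideas above can be combined in a walk-forward loop: train on the previous few months, score the next month, and track the metric over time. The sketch below uses simulated leads; the `engagement` feature, the label rule, and the six-month window are all assumptions.

```python
import numpy as np
import pandas as pd
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import roc_auc_score

rng = np.random.default_rng(0)
n = 2400
# Synthetic leads created over 24 months; feature and label rule are hypothetical.
df = pd.DataFrame({
    "created": pd.to_datetime("2022-01-01")
    + pd.to_timedelta(rng.integers(0, 730, n), unit="D"),
    "engagement": rng.normal(size=n),
})
df["converted"] = (df["engagement"] + rng.normal(size=n) > 1.0).astype(int)
# Integer month index: 0 for 2022-01, 23 for 2023-12.
df["month_idx"] = (df["created"].dt.year - 2022) * 12 + df["created"].dt.month - 1

window = 6  # train on the 6 months preceding each test month
months = sorted(df["month_idx"].unique())
results = []
for i in range(window, len(months)):
    train = df[df["month_idx"].isin(months[i - window:i])]
    test = df[df["month_idx"] == months[i]]
    model = LogisticRegression().fit(train[["engagement"]], train["converted"])
    preds = model.predict_proba(test[["engagement"]])[:, 1]
    results.append((months[i], roc_auc_score(test["converted"], preds)))

backtest = pd.DataFrame(results, columns=["test_month", "auc"])
print(backtest.tail())
```

A sustained drop in the per-month metric is a signal that the relationship between features and conversion may have changed, which a single pooled evaluation would hide.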

Follow-Up Question 4

What are some best practices to interpret the model and explain the scores to non-technical stakeholders?

Non-technical teams often need a clear rationale for why a particular lead’s score is high or low. Best practices include:

Using explainable models (like logistic regression) or post-hoc explanation tools (like SHAP or LIME) for more complex models. SHAP values illustrate how each feature contributed to pushing a prediction higher or lower.

Exposing the top important features when employing tree-based methods. This highlights the elements that exert the greatest influence on the lead score.

Providing an accessible narrative to sales teams (e.g., “The model places heavy weight on the number of recent interactions, the volume of marketing content consumed, and the size of the prospect’s business.”). Translating raw coefficients or feature importances into everyday language fosters trust in the model’s recommendations.
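
For a logistic regression model, one way to build that narrative is to convert coefficients on standardized features into odds ratios. The sketch below uses simulated data in which `recent_interactions` is constructed to matter most; the feature names and the label rule are hypothetical.

```python
import numpy as np
import pandas as pd
from sklearn.linear_model import LogisticRegression
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(0)
n = 2000
# Hypothetical features; the label is simulated so 'recent_interactions' dominates.
X = pd.DataFrame({
    "recent_interactions": rng.poisson(3, n),
    "content_downloads": rng.poisson(2, n),
    "log_num_employees": rng.normal(5, 1, n),
})
logit = 0.8 * X["recent_interactions"] + 0.2 * X["content_downloads"] - 4
y = (rng.random(n) < 1 / (1 + np.exp(-logit))).astype(int)

# Standardize so coefficients are comparable across features.
Xs = StandardScaler().fit_transform(X)
model = LogisticRegression().fit(Xs, y)

# exp(coefficient) = multiplicative change in odds per one standard deviation.
odds_ratios = pd.Series(np.exp(model.coef_[0]), index=X.columns).sort_values(
    ascending=False
)
print(odds_ratios)
```

An odds ratio above 1 can be phrased for sales teams as "leads with more of this are more likely to convert", while a value near 1 indicates little influence.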

Below are additional follow-up questions

How do you incorporate text data from emails, call transcripts, or meeting notes into a lead scoring model?

When it comes to lead scoring, there is often a treasure trove of text-based information—like email exchanges, call transcripts, or even meeting notes. By incorporating these unstructured textual features, you can reveal deeper insights into lead intent and sentiment.

Potential Approaches

Keyword Extraction or Topic Modeling You can create features indicating the presence of relevant keywords (“budget,” “timeline,” “CEO approval,” etc.) or employ topic modeling (LDA) to cluster conversation themes. For instance, if transcripts repeatedly mention “executive buy-in,” it may predict a stronger likelihood of enterprise conversion.

Sentiment Analysis Sentiment scores derived from text can serve as signals. Positive or encouraging sentiments in conversation might correlate with higher conversion.

Natural Language Embeddings Using modern transformer-based embeddings (e.g., BERT) can convert text into numeric vectors that capture context. You can then feed these embeddings into tree-based models or neural networks as additional features.
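
As a lightweight illustration of the keyword-style approach, the sketch below turns notes into TF-IDF features and fits a logistic regression on them. The notes and labels are invented and far too few for a real model; transformer embeddings could replace the vectorizer in the same pipeline position.

```python
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.linear_model import LogisticRegression
from sklearn.pipeline import make_pipeline

# Tiny made-up notes and labels, purely for illustration.
notes = [
    "CEO approval secured, budget confirmed for next quarter",
    "asked about pricing, no budget this year",
    "executive buy-in and timeline agreed, budget approved",
    "just browsing, not ready to commit",
    "timeline set, legal reviewing contract, budget approved",
    "unsubscribed from newsletter, not interested",
]
converted = [1, 0, 1, 0, 1, 0]

text_model = make_pipeline(
    TfidfVectorizer(ngram_range=(1, 2), stop_words="english"),  # unigrams and bigrams
    LogisticRegression(),
)
text_model.fit(notes, converted)

prob_positive_note = text_model.predict_proba(
    ["budget approved and executive buy-in confirmed"]
)[0, 1]
prob_negative_note = text_model.predict_proba(
    ["not interested, just browsing"]
)[0, 1]
print(round(prob_positive_note, 3), round(prob_negative_note, 3))
```

In practice the text-derived score or vector would usually be one input among the structured features rather than a standalone model.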

Pitfalls and Edge Cases

Data Privacy and Confidentiality Storing and analyzing free-form text raises privacy concerns. Some transcripts might contain confidential or personal data, so secure storage and compliance with regulations (like GDPR) are crucial.

Sparse and Noisy Text Text data can be messy or sparse, with certain leads having extensive logs while others have none. This discrepancy can skew the model. You may need robust handling of missing text features.

Language Variations and Jargon Leads in different regions or industries might use unique terminologies. Pretrained language models might not capture specialized domain jargon out of the box, requiring domain-specific fine-tuning.

How do you decide between a batch or real-time scoring pipeline for lead scoring?

Once a model is developed, you must decide how quickly each lead’s score should be generated. Some systems only require daily or weekly refreshes, while others need instantaneous updates.

Key Considerations

Response Time Requirements If sales teams engage immediately upon a lead showing interest (e.g., completing a web form), real-time scoring becomes valuable. In contrast, if follow-up is done at fixed intervals or if your pipeline does not need instant reaction, batch processing can suffice.

System Complexity Real-time pipelines are more complex to maintain. You will need a streaming infrastructure to handle inbound leads and perhaps an online feature store that can rapidly compute fresh features.

Infrastructure Costs Continuous scoring systems can be more resource-intensive. For large volumes of leads, compute expenses and engineering overhead can surge. Batch processing is less demanding but might deliver outdated scores if leads exhibit fast-changing behavior.

Pitfalls and Edge Cases

Feature Latency Some features are not available in real time (like a record of marketing activity that only updates nightly). Relying on these might hamper real-time scoring accuracy.

Scoring at the Wrong Stage Real-time scoring can lead to reactionary approaches that bombard a lead with communication too early. There might be a risk of appearing intrusive, potentially discouraging the lead from further engagement.

How do you handle seasonality or periodic fluctuations in lead behavior?

Many businesses experience cyclical variations (e.g., end-of-quarter purchasing spikes, seasonal budget approvals). Accounting for these patterns can improve your model’s accuracy.

Techniques

Time-Based Features Incorporate temporal features like the month, quarter, or day of the week. You might find, for instance, that certain industries are more likely to convert at the end of their fiscal year.

Rolling Windows Use rolling windows (e.g., last 3 months of data) to capture near-term seasonality. This can help the model adapt to cyclical patterns, especially if you train frequently.

Separate Models for Different Periods In extreme cases where lead behavior differs significantly across seasons, you might experiment with separate models per quarter or month, though this requires more data and overhead.
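
The time-based features can be derived directly from a lead’s creation date. The sketch below assumes a hypothetical `created` column and calendar quarters; a company with a different fiscal calendar would need its own quarter mapping.

```python
import numpy as np
import pandas as pd

# Hypothetical creation dates for three leads.
leads = pd.DataFrame(
    {"created": pd.to_datetime(["2024-01-15", "2024-03-29", "2024-12-20"])}
)

leads["month"] = leads["created"].dt.month
leads["quarter"] = leads["created"].dt.quarter
leads["day_of_week"] = leads["created"].dt.dayofweek  # Monday=0

# Days remaining in the calendar quarter (QuarterEnd(0) rolls forward to quarter end).
quarter_end = leads["created"] + pd.offsets.QuarterEnd(0)
leads["days_to_quarter_end"] = (quarter_end - leads["created"]).dt.days

# Cyclical encoding so that December and January end up close together.
leads["month_sin"] = np.sin(2 * np.pi * leads["month"] / 12)
leads["month_cos"] = np.cos(2 * np.pi * leads["month"] / 12)
print(leads)
```

The sine and cosine pair matters mostly for linear models and neural networks; tree-based models can often work with the raw month or quarter.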

Pitfalls and Edge Cases

Data Fragmentation Splitting data by seasons or time windows can limit the amount of data available for training, potentially harming performance if each partition becomes too small.

Changing Seasonality External factors (e.g., economic shifts, changes in budget cycles) can alter or reduce previously strong seasonal patterns, leading to concept drift if the model overfits historical cycles.

How do you address the challenge of leads from new industries or sectors not present in historical training data?

Models rely on patterns learned from historical data. If a new industry or sector emerges (or becomes newly relevant), there may be no direct examples in the training set.

Methods to Mitigate

Closest Match Approaches Estimate features or behaviors by identifying similarities to industries present in the data. For example, you might group a new sub-industry with a broader parent category if that parent is already in your dataset.

Manual Rules If domain experts suspect the new sector behaves similarly to an existing category, you can implement manual adjustments or threshold-based heuristics to bootstrap the model until you gather enough data.

Active Learning Whenever leads from new industries appear, actively track their outcomes (conversion vs. no conversion). Prioritize labeling these new data points to rapidly incorporate them into model retraining.
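
A closest-match fallback can be as simple as rolling an unseen industry up to a known parent category, with an encoder configured to tolerate anything that still slips through. The mapping, category names, and helper function below are hypothetical.

```python
from sklearn.preprocessing import OneHotEncoder

# Hypothetical mapping from fine-grained industries to parents seen in training.
parent_of = {
    "fintech": "financial_services",
    "insurtech": "financial_services",
    "edtech": "education",
}
known = {"financial_services", "education", "software"}

def to_known_industry(industry):
    """Map an industry to a category present in training data, else 'other'."""
    mapped = parent_of.get(industry, industry)
    return mapped if mapped in known else "other"

rolled_up = [to_known_industry(x) for x in ["fintech", "software", "space_mining"]]
print(rolled_up)

# Safety net: with handle_unknown="ignore", an unseen category encodes as all
# zeros instead of raising an error at scoring time.
encoder = OneHotEncoder(handle_unknown="ignore")
encoder.fit([["financial_services"], ["education"], ["software"]])
unseen_vector = encoder.transform([["space_mining"]]).toarray()
print(unseen_vector)
```

Logging how often the fallback fires gives an early signal that a new sector is growing and deserves its own category once enough outcomes have been collected.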

Pitfalls and Edge Cases

Overgeneralization Clumping new leads under an existing category could hide unique behaviors specific to that new domain, causing suboptimal predictions.

Delayed Adaptation If new leads appear rapidly, you risk a lag in performance until you have enough labeled samples for retraining.

How do you handle lead score explainability when using an ensemble or deep learning model?

Complex models can provide better accuracy but often sacrifice transparency. Stakeholders might want clear insights into why a lead is considered high vs. low probability for enterprise conversion.

Approaches

Local Explanation Methods Tools like SHAP (SHapley Additive exPlanations) or LIME can provide case-by-case explanations by approximating how each feature affects a particular prediction.

Model-Specific Feature Importances Tree-based ensembles typically expose feature importance rankings. This indicates which features globally have the most weight on predictions.

Partial Dependence and ICE Plots Partial dependence plots show how the average prediction changes as one (or two) features vary, averaging over the observed values of the other features. Individual conditional expectation (ICE) plots drill down further by drawing that curve for each individual data point.

Pitfalls and Edge Cases

Misleading Interpretations While explanations like SHAP are helpful, naive use can lead to oversimplified conclusions. These methods approximate complex relationships, and incorrectly used visualizations might still confuse stakeholders.

Regulatory Compliance In certain domains (banking, insurance, healthcare), you must justify decisions made by automated systems. If your interpretability solutions are not robust or well-documented, you risk non-compliance with regulations.

How do you integrate lead scoring outcomes into broader marketing and sales workflows?

A model’s value largely depends on how effectively teams leverage its scores. Integrating predictions into CRM or marketing automation platforms involves both technical and organizational steps.

Implementation Details

CRM Integration Generate scores automatically for each new or updated lead record, then store them in fields easily visible to sales reps. Include additional insights, such as top reasons for a high score.

Priority Queues and Task Automation Leads above a certain threshold might automatically trigger a high-priority notification for immediate sales follow-up. Or marketing might enroll them in specialized campaigns.

Feedback Loops Encourage sales or marketing reps to mark the accuracy of the predictions or note anomalies in the system, feeding data back to a “validation” pipeline to refine the model.

Pitfalls and Edge Cases

Resistance to Change Sales teams might ignore automated scores if they lack trust or do not understand the system’s rationale. Training and clear demonstrations of the model’s benefits are crucial.

Overreliance A high-scoring lead is not a guarantee. Relying solely on the algorithm without human judgment can miss important contextual nuances. Blending model insights with human expertise usually yields better outcomes.

How would you approach lead scoring for multiple product lines or tiers within the same company?

Some organizations sell a range of products, each with its own conversion profile. A single unified score might be less informative than specialized scores for each product line or buyer persona.

Possible Strategies

Multi-Task Learning A single machine learning model can output multiple probabilities, one for each product line. This approach shares representations across tasks and can capture correlations between products.

Segmentation and Separate Models Another option is building distinct models. For instance, a “premium enterprise” model vs. a “standard” model. If the same lead might be eligible for multiple lines, you can compare the predicted probabilities or final scores.

Hierarchical Scoring If you have a tiered approach, you can create a funnel-like chain of models: first predict if the lead is ready for any product, then narrow down to premium vs. standard offerings.

Pitfalls and Edge Cases

Data Fragmentation Splitting the data across multiple product lines can reduce the sample size for each. This can lead to weaker generalization if not enough leads exist for each product category.

Complexity for End Users Sales reps might be overwhelmed if they see different scores for each product line. You may have to develop a coherent user interface that consolidates the model outputs logically.

How do you handle leads that have previously churned or had unsuccessful trials in the past?

In many businesses, leads might have trialed or purchased a smaller plan and later churned. How you incorporate that history can influence the lead’s new score if they return.

Considerations

Churn as a Feature Include whether they previously churned and how long ago. If enough time has passed, circumstances might have changed, making them a fresh lead.

Reason for Churn If your data reveals the cause (e.g., dissatisfaction with a particular product, high pricing, or poor onboarding experience), that nuance can guide how likely they are to convert to a different product or re-engage.

Churn vs. Upsell A lead might have churned from one product but still have potential interest in another solution. Distinguish between leads that churned from everything and leads that only canceled a subset of services.

Pitfalls and Edge Cases

Biased Data Leads who churned may be absent from typical pipelines (e.g., no new marketing interactions). The model might systematically give them low scores, ignoring changed circumstances.

Data Integration Historical churn details might reside in separate databases. Failing to unify this data accurately can produce incomplete or contradictory feature values.

How do you maintain data quality over time as more features and data sources are introduced?

Lead scoring models often evolve as the business discovers new attributes or integrates new tools. Each additional data source can introduce mismatches or hidden issues.

Data Governance Practices

Schema Versioning Keep track of the schema for every data source. This ensures consistent mapping of features across different points in time.

Automated Data Validation Implement checks for missing or out-of-range values, incorrect data types, and unexpected duplicates.

Data Lineage Tracking Document the path each feature takes from origin to final training set. This transparency is vital if a metric suddenly degrades and you need to pinpoint which data source changed.
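
Automated validation does not need heavy tooling to start. The sketch below runs a few checks with pandas and returns readable problem descriptions; the required columns, the 20% null limit, and the function name are hypothetical choices.

```python
import pandas as pd

REQUIRED_COLUMNS = ["lead_id", "industry", "num_employees"]  # hypothetical schema

def validate_leads(df):
    """Return a list of human-readable data-quality problems (empty if none)."""
    missing_cols = [c for c in REQUIRED_COLUMNS if c not in df.columns]
    if missing_cols:
        return [f"missing columns: {missing_cols}"]

    problems = []
    if df["lead_id"].duplicated().any():
        problems.append("duplicate lead_id values")
    if (df["num_employees"] < 0).any():
        problems.append("negative num_employees")
    null_share = df["industry"].isna().mean()
    if null_share > 0.2:  # hypothetical tolerance for missing industry
        problems.append(f"industry is null in {null_share:.0%} of rows")
    return problems

good = pd.DataFrame(
    {"lead_id": [1, 2], "industry": ["software", "retail"], "num_employees": [10, 300]}
)
bad = pd.DataFrame(
    {"lead_id": [1, 1, 2], "industry": ["software", None, None], "num_employees": [10, -5, 300]}
)
issues = validate_leads(bad)
print(validate_leads(good))
print(issues)
```

Running such checks before both training and scoring makes it easier to tell a genuine model problem from an upstream data change.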

Pitfalls and Edge Cases

Multiple Owners Some data might be owned by third parties or separate departments. Lack of coordination can cause delays or data format changes that break your pipeline.

Backward Incompatibility If a new field replaces an old one, your historical data might lose continuity. You might need mapping strategies or partial re-labeling to keep older data relevant.

How would you run A/B testing or hold-out experiments to validate different lead scoring strategies?

Even after you build a robust lead scoring model, you might want to compare it against prior methods (e.g., a rules-based approach) or multiple machine learning models.

Experimental Setup

Split Leads Randomly Assign some portion of new leads to the existing approach (control group) and others to the new model (treatment group).

Define Success Metrics Track actual conversion rates, engagement, or revenue generated from each group. This ensures you’re measuring the downstream effect rather than just predictive accuracy.

Statistical Significance Monitor the difference in outcomes (like conversions or deal size) between groups. Only deploy the new approach to everyone if the improvement is consistent and statistically significant.
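
For conversion rates, the significance check can be sketched as a two-proportion z-test using only the standard library. The counts below are invented, and the test assumes leads were assigned to the groups at random and independently.

```python
import math

def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for a difference in conversion rates between two groups."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal tail probability
    return z, p_value

# Hypothetical results: control converted 40 of 1000 leads, treatment 65 of 1000.
z, p_value = two_proportion_z_test(40, 1000, 65, 1000)
print(f"z={z:.2f}  p={p_value:.4f}")
```

With small conversion counts the normal approximation becomes unreliable, so an exact test or a longer experiment may be more appropriate in that case.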

Pitfalls and Edge Cases

Contamination Sales reps might mix leads from both groups or treat them differently without your knowledge, diluting the signal. Clear instructions and possibly separate teams might be needed.

Long Sales Cycles Enterprise deals can take months to finalize, so you may not observe conversions right away. Planning an A/B test in this context can require extended timelines.

How do you account for human-in-the-loop feedback in iterating on the lead scoring model?

Sales and marketing teams often have deep intuition about which leads are promising. Incorporating their feedback can refine the model over time.

Practical Approaches

Override Mechanisms Offer an easy way for a sales rep to tag a lead as high-priority despite a lower model score. Analyze the frequency of such overrides and the eventual outcome (did it convert?), then feed these events back into training data.

Active Labeling Prompt sales reps to confirm the model’s predictions at certain thresholds or edge cases. This structured feedback can accelerate data collection for tricky leads.

Continuous Retraining Combine user-generated labels (sales might know a lead is “not a fit”) with new outcomes, ensuring the model evolves as human knowledge accumulates.

Pitfalls and Edge Cases

Overreliance on Subjective Input Human judgments can be biased or inconsistent across different reps. Overfitting to these manual overrides can degrade accuracy.

Feedback Loop Delays It might take significant time to confirm whether a lead eventually converted or not. Sales might mark them as “promising,” but true validation only occurs when the deal closes or doesn’t.

How do you manage lead scoring when multiple stakeholders have competing priorities for the same model?

In some businesses, marketing, sales, and product teams may each want different objectives or use cases within the same scoring system. For example, marketing might care about brand awareness, whereas sales is laser-focused on immediate deal closure.

Conflict Resolution

Define a Unified Metric Get consensus on a primary objective—like “probability of enterprise-tier purchase within 3 months.” Additional sub-metrics can be tracked to satisfy other stakeholders, but the core model must be aligned with the central goal.

Segmented Outputs Consider building separate segments or specialized sub-models. For instance, you might have a lead-lifecycle model for marketing but use a purchase-likelihood model for sales.

Governance and Steering Committee Appoint a cross-functional group that makes decisions about new feature requests or changes to the lead scoring logic. This ensures updates serve the wider organization rather than a single team’s short-term needs.

Pitfalls and Edge Cases

Model Drift via Ad-Hoc Changes If product or marketing requests frequent untested changes, the model can degrade. A well-defined process for implementing changes helps maintain stability.

Misaligned Incentives Different teams might have contradictory performance metrics, causing friction. Transparent communication of how the lead scoring model benefits everyone can alleviate tensions.