ML Interview Q Series: How would you explain Naive Bayes vs. Logistic Regression and recommend one for spam classification?


Comprehensive Explanation

A generative model is one that attempts to model the joint probability distribution of the data and labels, P(x, y). By doing so, it effectively learns how the data (feature vectors x) is generated for each possible label y. In contrast, a discriminative model focuses directly on modeling P(y | x), the conditional probability of a label y given a data point x, without necessarily describing how x itself is distributed for each label.

Core Mathematical View

Generative modeling involves learning P(x | y) and P(y). A typical way to make predictions is by applying Bayes’ theorem:

$$P(y \mid x) = \frac{P(x \mid y)\, P(y)}{P(x)}$$

where y is the class label (e.g., spam or not spam), x is the feature vector (e.g., email content features), P(x|y) is the likelihood of observing x given y, P(y) is the prior probability of y, and P(x) is the evidence term (which serves as a normalization constant).

Explanation of Parameters

P(x|y): The probability that the feature vector x (e.g., specific words in the email) occurs given that the email is spam (or not spam).

P(y): The probability that any email is spam (or not spam) before observing any features.

P(x): A normalizing factor ensuring that the total probability sums to 1. It is often hard to estimate directly but can be ignored for classification purposes since it is constant for all classes.

A common generative method for classification is Naive Bayes, where we assume the features in x are conditionally independent given y. Words in real text are rarely independent, yet Naive Bayes can still work well in text classification (like spam detection) because the classifier only needs the correct class to receive the highest score, not well-calibrated probabilities.
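To make the Bayes' theorem computation concrete, here is a minimal from-scratch sketch of a multinomial Naive Bayes classifier. The toy emails, the labels "spam" and "ham", and the helper names `train_nb` and `posterior` are illustrative assumptions, not part of any particular library.

```python
import math
from collections import Counter

def train_nb(docs, labels, alpha=1.0):
    """Estimate log P(y) and log P(word | y) with Laplace smoothing (alpha)."""
    vocab = {w for d in docs for w in d}
    class_counts = Counter(labels)
    word_counts = {c: Counter() for c in class_counts}
    for d, y in zip(docs, labels):
        word_counts[y].update(d)
    log_prior = {c: math.log(n / len(labels)) for c, n in class_counts.items()}
    log_lik = {}
    for c in class_counts:
        total = sum(word_counts[c].values()) + alpha * len(vocab)
        log_lik[c] = {w: math.log((word_counts[c][w] + alpha) / total) for w in vocab}
    return log_prior, log_lik

def posterior(doc, log_prior, log_lik):
    """Return P(y | doc); words never seen in training are skipped."""
    scores = {}
    for c in log_prior:
        # Independence assumption: the per-word log-likelihoods simply add up.
        scores[c] = log_prior[c] + sum(log_lik[c][w] for w in doc if w in log_lik[c])
    m = max(scores.values())
    z = sum(math.exp(s - m) for s in scores.values())  # plays the role of P(x)
    return {c: math.exp(s - m) / z for c, s in scores.items()}

# Hypothetical toy corpus, tokenized by hand.
docs = [["win", "cash", "now"], ["cheap", "cash", "offer"],
        ["meeting", "at", "noon"], ["lunch", "at", "noon"]]
labels = ["spam", "spam", "ham", "ham"]

log_prior, log_lik = train_nb(docs, labels)
post = posterior(["cash", "offer"], log_prior, log_lik)
print(post)
```

Working in log space avoids underflow when many small probabilities are multiplied, and the final normalization is the only place where P(x) is needed.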

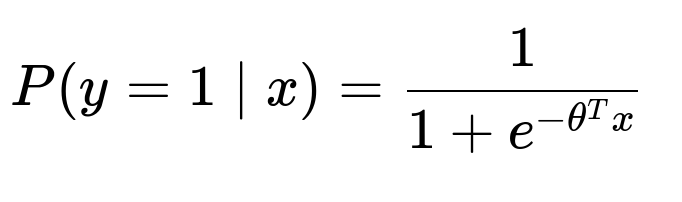

On the other hand, a discriminative model like Logistic Regression aims to learn P(y|x) more directly, often parametrized as:

$$P(y = 1 \mid x) = \frac{1}{1 + e^{-\theta^T x}}$$

where x is the feature vector, and θ is the learned parameter vector.

Explanation of Parameters

θ is the weight vector that the algorithm learns.

x is the input feature vector representing the email's attributes (e.g., frequencies of certain words).

e^{-θ^T x} is the exponential term in the logistic (sigmoid) function, which maps the linear score θ^T x to a probability between 0 and 1.
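As a small illustration of the logistic function, the sketch below computes P(y = spam | x) for two hand-made feature vectors. The weights in `theta` and the feature layout are made up for the example; in practice they would be learned from data.

```python
import math

def sigmoid(z):
    """Numerically stable logistic function 1 / (1 + e^{-z})."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)

def predict_spam_proba(theta, x):
    """P(y = spam | x) = sigmoid(theta^T x)."""
    return sigmoid(sum(t * xi for t, xi in zip(theta, x)))

# Hypothetical weights for features [bias, count("free"), count("meeting")].
theta = [-1.0, 2.0, -1.5]

p_spammy = predict_spam_proba(theta, [1.0, 2.0, 0.0])  # "free" appears twice
p_hammy = predict_spam_proba(theta, [1.0, 0.0, 2.0])   # "meeting" appears twice
print(p_spammy, p_hammy)
```

A positive weight pushes the probability toward spam as the feature count grows, and a negative weight pushes it toward not spam.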

Interpretation and When to Use Each

Generative (Naive Bayes)

Pros: Often trains faster, works well even with smaller datasets, can handle missing features gracefully, and can be quite effective in text classification. It’s also easy to incorporate prior knowledge.

Cons: The strong assumption about feature independence can degrade performance when features are correlated. Not always as flexible in capturing complex decision boundaries.

Discriminative (Logistic Regression)

Pros: Typically yields higher accuracy when data is plentiful. It directly focuses on the decision boundary, often resulting in better generalization if the model is well-regularized.

Cons: Needs more data to achieve good performance, can be computationally more expensive to train compared to simpler generative models, and offers no description of how the data is generated.

Whose Suggestion Is More Suitable

In practice, both models can work for spam filtering, and each has been used in real-world systems. Naive Bayes is a classic model for text classification because it’s easy, fast, and surprisingly effective in many spam scenarios. Logistic Regression tends to perform better when there are sufficient data points and correlated features. Large-scale email systems like Gmail are generally understood to rely on discriminative models (or ensembles that include them) because they have immense amounts of training data, which lets them benefit from the lower error that discriminative methods can reach at scale.

So if your dataset is large and diverse, and you have enough computational resources, Logistic Regression (or even more advanced discriminative models) might be a strong choice. If you need a quick, efficient solution that is easy to implement and still accurate, Naive Bayes can be a perfectly valid option.

Follow-up Question: Why might a company switch from a generative to a discriminative model when it already has large amounts of data?

In scenarios where you have a large and growing dataset, discriminative models like Logistic Regression tend to reach lower error than Naive Bayes. With word-count features, both models produce linear decision boundaries, but Logistic Regression fits its weights directly to separate spam from not spam, without assuming the features are independent. As the dataset grows, the strong independence assumptions of Naive Bayes might start limiting its accuracy, while Logistic Regression (and other discriminative approaches) can leverage the richer, high-dimensional feature space to learn more nuanced patterns. Thus, when a company sees that the data volume has increased, it might switch to a method that benefits from more data and offers higher potential accuracy.

Follow-up Question: In what scenarios could a generative approach be more beneficial despite the availability of large data?

Generative models might be favored if you need:

Interpretability of How Data Is Generated: Naive Bayes can offer insights into how the model views each feature's likelihood under each class.

Dealing With Missing Data: Generative models can handle missing data more naturally by marginalizing over the missing variables, while discriminative models typically require additional strategies.

A Quick, Reliable Baseline: Even with large data, Naive Bayes is simple, fast to implement, and yields a strong initial baseline. It might be used in production if it is sufficient for the company's performance and cost constraints.
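The point about missing data can be illustrated with a small sketch. Under the Naive Bayes factorization, marginalizing over a missing feature amounts to dropping its factor, because the probabilities of all its possible values sum to 1. The feature names and probability tables below are hypothetical.

```python
def nb_posterior_with_missing(x, prior, likelihood):
    """x maps feature name -> observed value, or None when the value is missing."""
    scores = {}
    for c, p in prior.items():
        for name, value in x.items():
            if value is None:
                continue  # marginalized out: sum over values of P(value | c) is 1
            p *= likelihood[c][name][value]
        scores[c] = p
    z = sum(scores.values())
    return {c: s / z for c, s in scores.items()}

# Hypothetical prior and per-class likelihood tables.
prior = {"spam": 0.3, "ham": 0.7}
likelihood = {
    "spam": {"has_link": {True: 0.8, False: 0.2},
             "known_sender": {True: 0.1, False: 0.9}},
    "ham": {"has_link": {True: 0.3, False: 0.7},
            "known_sender": {True: 0.6, False: 0.4}},
}

full = nb_posterior_with_missing(
    {"has_link": True, "known_sender": False}, prior, likelihood)
partial = nb_posterior_with_missing(
    {"has_link": True, "known_sender": None}, prior, likelihood)
print(full["spam"], partial["spam"])
```

A discriminative model has no such shortcut: a missing input usually has to be imputed or encoded as its own indicator feature before the model can score the email.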

Follow-up Question: How do we decide on the right model if both approaches perform equally well on historical data?

When performance is nearly the same on held-out validation sets, additional criteria should guide the decision:

Scalability: If the dataset grows even larger, which approach will be easier and more cost-effective to retrain?

Interpretability: Is there a need to explicitly understand how certain words or features influence the spam decision?

Deployment Complexity: Which method aligns better with the existing production pipelines (e.g., libraries used, computational resources available)?

Extendability: Do you plan to add more complex features or adapt the model for related tasks (e.g., phishing detection, personalized spam rules)?

Balancing these considerations helps in choosing the model best suited to the organization’s long-term goals and infrastructure.

Follow-up Question: Could ensemble methods play a role here?

Ensemble methods (like combining Naive Bayes and Logistic Regression, or using more sophisticated ensemble techniques such as random forests or gradient boosting) can often outperform a single model. This approach can capture different aspects of the data from both generative and discriminative perspectives, leading to potentially higher accuracy and robustness. Implementing a stacked or blended ensemble of Naive Bayes and Logistic Regression can be advantageous, especially at scale where you have diverse data and can afford the computational overhead.

Follow-up Question: How does regularization impact models like Logistic Regression in spam detection?

Regularization adds a penalty term to the loss function of Logistic Regression, controlling the magnitude of the weight vector θ. It helps prevent overfitting in high-dimensional data (like email text) by encouraging weights for irrelevant features to be small or zero. Common regularization techniques include L2 (ridge) and L1 (lasso). In spam detection, where numerous features may be present, regularization is crucial for maintaining generalization performance and reducing noise from rarely used features.
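The shrinking effect of an L2 penalty can be seen in a small pure-Python sketch. It fits Logistic Regression by batch gradient descent on a toy dataset with and without the penalty. The data, the function name `fit_logreg`, and the hyperparameters are assumptions chosen for illustration; L1 would need a different update rule (for example a proximal step) and is not shown.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z)) if z >= 0 else math.exp(z) / (1.0 + math.exp(z))

def fit_logreg(X, y, l2=0.0, lr=0.1, epochs=500):
    """Batch gradient descent on mean log-loss + (l2 / 2) * ||theta||^2."""
    n, d = len(X), len(X[0])
    theta = [0.0] * d
    for _ in range(epochs):
        grad = [l2 * t for t in theta]  # gradient of the penalty term
        for xi, yi in zip(X, y):
            err = sigmoid(sum(t * v for t, v in zip(theta, xi))) - yi
            for j in range(d):
                grad[j] += err * xi[j] / n
        theta = [t - lr * g for t, g in zip(theta, grad)]
    return theta

def norm(v):
    return math.sqrt(sum(t * t for t in v))

# Hypothetical word counts for ["free", "winner", "meeting"]; label 1 = spam.
X = [[2, 1, 0], [1, 2, 0], [3, 0, 0], [0, 0, 2], [0, 1, 3], [0, 0, 1]]
y = [1, 1, 1, 0, 0, 0]

unregularized = fit_logreg(X, y, l2=0.0)
regularized = fit_logreg(X, y, l2=1.0)
print(norm(unregularized), norm(regularized))
```

On separable data like this, the unregularized weights keep growing with more training, while the penalized weights settle at a smaller norm.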

Follow-up Question: What about real-time adaptability to new spam patterns?

Real-time or online learning is especially important in spam detection as spammers constantly adjust their strategies. Logistic Regression can be adapted using incremental learning algorithms (e.g., stochastic gradient descent). Naive Bayes can also be updated online by re-estimating counts of feature occurrences. While both approaches can be updated in an online fashion, discriminative models typically excel if you continue to refine them with new data in a streaming setting, as they adjust their decision boundaries directly based on recent examples.
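Both update styles can be sketched in a few lines of pure Python. The classes `OnlineNaiveBayes` and `OnlineLogReg`, the toy stream, and the learning rate are hypothetical and only meant to show the mechanics: Naive Bayes increments counts, while Logistic Regression takes one stochastic gradient step per labeled email.

```python
import math
from collections import defaultdict

class OnlineNaiveBayes:
    """Multinomial Naive Bayes stored as running counts."""

    def __init__(self, alpha=1.0):
        self.alpha = alpha                      # Laplace smoothing
        self.class_counts = defaultdict(int)    # emails seen per class
        self.word_counts = defaultdict(lambda: defaultdict(int))
        self.totals = defaultdict(int)          # tokens seen per class
        self.vocab = set()

    def update(self, tokens, label):
        self.class_counts[label] += 1
        for w in tokens:
            self.word_counts[label][w] += 1
            self.totals[label] += 1
            self.vocab.add(w)

    def predict(self, tokens):
        n = sum(self.class_counts.values())
        scores = {}
        for c, cnt in self.class_counts.items():
            denom = self.totals[c] + self.alpha * len(self.vocab)
            scores[c] = math.log(cnt / n) + sum(
                math.log((self.word_counts[c].get(w, 0) + self.alpha) / denom)
                for w in tokens)
        return max(scores, key=scores.get)

class OnlineLogReg:
    """Logistic Regression over binary word features, one SGD step per email."""

    def __init__(self, lr=0.5):
        self.lr = lr
        self.weights = defaultdict(float)
        self.bias = 0.0

    def proba(self, tokens):
        z = self.bias + sum(self.weights.get(w, 0.0) for w in set(tokens))
        return 1.0 / (1.0 + math.exp(-z))

    def update(self, tokens, label):
        err = self.proba(tokens) - label        # gradient of log-loss w.r.t. z
        self.bias -= self.lr * err
        for w in set(tokens):
            self.weights[w] -= self.lr * err

# Hypothetical labeled stream; 1 = spam, 0 = not spam.
stream = [(["win", "cash"], 1), (["team", "meeting"], 0),
          (["cash", "prize"], 1), (["project", "meeting"], 0)]
nb, lr_model = OnlineNaiveBayes(), OnlineLogReg()
for tokens, label in stream:
    nb.update(tokens, label)
    lr_model.update(tokens, label)

before = lr_model.proba(["crypto"])
nb.update(["crypto", "bonus"], 1)        # a new spam tactic shows up
lr_model.update(["crypto", "bonus"], 1)
after = lr_model.proba(["crypto"])
print(before, after, nb.predict(["crypto"]))
```

Neither model needs to revisit old emails to absorb the new example, which is what makes both practical in a streaming setting.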

By thoroughly considering all these aspects, one can make a more informed decision regarding which model (generative or discriminative) to use, ensuring both immediate effectiveness and future scalability in the fight against spam.

Below are additional follow-up questions

How does a heavily imbalanced dataset (where spam may be much rarer than non-spam, or vice versa) affect the choice between a generative and a discriminative model?

When the label distribution is highly skewed, classification models often exhibit bias toward the majority class. For spam filtering, this can mean missing rare spam examples or over-alerting on non-spam messages.

Detailed Explanation and Pitfalls

Training Naive Bayes on Imbalanced Data: Naive Bayes estimates P(y) and P(x|y) by counting occurrences. If spam is a minority class, the prior P(spam) might be very low, which can cause the model to under-predict spam. Even though the likelihood terms P(x|spam) might be strong for certain spammy words, the very small prior may outweigh them, leading to more false negatives.

Training Logistic Regression on Imbalanced Data: Logistic Regression focuses on learning boundaries to separate spam from non-spam. In cases of class imbalance, it might also produce skewed predictions that favor the dominant class. Class weighting and careful threshold tuning can help mitigate this, as can techniques like oversampling the minority class or undersampling the majority class.

Edge Cases

Sudden surges in spam volume can temporarily flip the distribution, throwing off prior estimates in Naive Bayes.

If spam changes style rapidly, the model trained on older data may not adapt unless periodically retrained with balanced examples.

Very rare but extremely damaging spam (like phishing emails) might be overlooked if the model is purely optimized for accuracy and not recall or precision for the minority class.

Proper techniques like adjusting decision thresholds, using performance metrics like F1 score or AUC, or applying specialized sampling or weighting methods can help address imbalance in both generative and discriminative frameworks.
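A short numeric sketch shows both effects: how a small prior drags down the Naive Bayes posterior, and how moving the decision threshold changes precision, recall, and F1. The likelihood ratio, the scores, and the threshold values are invented for illustration.

```python
def posterior_spam(likelihood_ratio, prior_spam):
    """P(spam | x) from P(x|spam) / P(x|ham) and the prior P(spam)."""
    odds = likelihood_ratio * prior_spam / (1.0 - prior_spam)
    return odds / (1.0 + odds)

def precision_recall_f1(scores, labels, threshold):
    """Metrics for the spam class when emails with score >= threshold are flagged."""
    tp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 1)
    fp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 0)
    fn = sum(1 for s, y in zip(scores, labels) if s < threshold and y == 1)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1

# Same evidence (50x more likely under spam), two different priors.
p_rare = posterior_spam(50.0, 0.01)      # spam is 1% of traffic
p_balanced = posterior_spam(50.0, 0.5)   # spam is half of traffic

# Hypothetical model scores for 2 spam and 8 non-spam emails.
scores = [0.45, 0.34, 0.30, 0.10, 0.05, 0.05, 0.02, 0.02, 0.01, 0.01]
labels = [1, 1, 0, 0, 0, 0, 0, 0, 0, 0]
default = precision_recall_f1(scores, labels, 0.5)
tuned = precision_recall_f1(scores, labels, 0.32)
print(p_rare, p_balanced, default, tuned)
```

With the default 0.5 cutoff no spam is caught in this toy example, whereas a lower threshold recovers both spam emails, which is why the threshold is usually chosen on a validation set rather than left at 0.5.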

In an adversarial environment where spammers constantly evolve their tactics, which model is more robust?

Spam detection is inherently adversarial because spammers actively modify emails to bypass filters. The question arises: which model type—generative or discriminative—tends to be more resilient?

Detailed Explanation and Pitfalls

Adversarial Strategies: Spammers can alter words (e.g., inserting random characters, using homographs or synonyms) to fool text-based features. They might exploit the independence assumptions of Naive Bayes by spreading suspicious words across the message or reordering them.

Generative Model Vulnerabilities: Naive Bayes can be tricked by small but carefully curated changes in word distributions, especially if it strongly relies on certain keywords. Spammers might embed benign or rare words to offset strong spam probabilities.

Discriminative Model Vulnerabilities: Logistic Regression can also be fooled if spammers insert features the model perceives as typical of non-spam. If the model’s weight vector is well-known or guessable, attackers can adapt by targeting certain high-weight features.

Adaptive Defense: Both models can incorporate new features (e.g., bigrams, trigrams, orthographic patterns) to adapt. However, if you frequently retrain Logistic Regression with fresh data, it can more directly update its decision boundary in response to new spam tactics. Naive Bayes also can be updated, but subtle correlated features might be missed due to independence assumptions.

In adversarial contexts, building ensemble systems or incorporating additional signals (e.g., email sending patterns, user feedback) is often more critical than model type alone.

How do these models handle non-textual features (like sender reputation, embedded links, or metadata)?

Spam detection in real-world systems often goes beyond text. Features such as IP reputation, domain age, link frequency, attachment type, or user interaction signals may be crucial.

Detailed Explanation and Pitfalls

Naive Bayes with Non-textual Features: It can incorporate these additional features as separate dimensions. However, if these features are correlated (e.g., domain age strongly correlates with link patterns), the independence assumption may be violated.

Logistic Regression with Non-textual Features: It simply treats them as additional input dimensions. If a certain domain or IP address is more frequently associated with spam, the corresponding weight can become significantly positive (indicating spam). The model can capture interactions between features only if polynomial or interaction terms are explicitly included (although this expands the feature space significantly).

Edge Cases

Rapid changes in IP reputation or domain usage can outdate model assumptions quickly.

Some metadata might not always be available (e.g., partial logs or anonymized data), requiring the model to handle missing features.

If certain metadata is extremely sparse, the model may overfit those rare signals without careful regularization.

When using non-text features, it’s essential to track data shifts: spammers might pivot to new domains or exploit ephemeral IP addresses. Periodic retraining or partial online learning helps keep the model’s representation updated.

How do these models degrade when the data distribution drifts substantially over time?

Spam tactics evolve, user behavior changes, and language usage shifts. This phenomenon, known as concept drift, challenges both generative and discriminative models.

Detailed Explanation and Pitfalls

Concept Drift

Naive Bayes can become outdated if the prior P(y) or the likelihood P(x|y) shifts significantly. For instance, new words appear that are not in its vocabulary, or spammy words become common in legitimate emails.

Logistic Regression might assign large weights to features that become less predictive over time. Without regular updates, these stale weights degrade performance.

Sudden vs. Gradual Drifts

Sudden Drifts: Spammers might abruptly shift from one tactic to another (e.g., from text-based spam to image-based spam). A large portion of the training distribution becomes irrelevant overnight.

Gradual Drifts: Over months or years, changes in language or user behavior slowly make older examples less representative.

Pitfalls

Delayed retraining schedules can cause both models to underperform until the next retraining.

Overfitting to older data can lead to slower adaptation to new spam tactics.

Mitigation

Incremental or online learning updates.

Weighted training or sliding window approaches to emphasize recent data.

Monitoring performance metrics over time to detect distribution shifts and trigger a retraining pipeline.
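The monitoring idea can be sketched as a sliding-window accuracy tracker that signals when retraining might be needed. The class name `DriftMonitor`, the window size, and the accuracy floor are hypothetical choices for the example.

```python
from collections import deque

class DriftMonitor:
    """Tracks accuracy over the most recent `window` labeled predictions."""

    def __init__(self, window=100, min_accuracy=0.9):
        self.results = deque(maxlen=window)   # old results fall off automatically
        self.min_accuracy = min_accuracy

    def record(self, predicted, actual):
        self.results.append(predicted == actual)

    def accuracy(self):
        return sum(self.results) / len(self.results) if self.results else None

    def needs_retraining(self):
        # Only decide once the window is full, to avoid reacting to a few emails.
        full = len(self.results) == self.results.maxlen
        return full and self.accuracy() < self.min_accuracy

monitor = DriftMonitor(window=10, min_accuracy=0.8)
for _ in range(10):
    monitor.record("spam", "spam")      # model is doing fine
stable = monitor.needs_retraining()

for _ in range(5):
    monitor.record("ham", "spam")       # a new tactic slips through
drifted = monitor.needs_retraining()
print(stable, drifted, monitor.accuracy())
```

In a real pipeline the tracked metric would more likely be recall or precision on the spam class, and labels would arrive with some delay, but the windowing logic stays the same.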

How do these models handle domain adaptation, such as applying a spam filter trained on one language to another language?

In a global email service, spam might come from multiple languages or character sets. Domain adaptation questions arise when you want to reuse models or data from one language or domain for another.

Detailed Explanation and Pitfalls

Generative Model Adaptation

The word likelihood distributions P(x|y) are language-specific. Directly reusing them across languages can be ineffective.

Naive Bayes might still capture some universal signals (like HTML link patterns), but the text-based features become less meaningful.

Discriminative Model Adaptation

Logistic Regression learns feature weights from labeled data. If the same features (like spammy links or suspicious attachments) are relevant across languages, partial transfer can work. However, new language-specific tokens require fresh training.

Pitfalls

Incomplete or sparse labeled data in the target language might lead to poor performance.

Subtle variations in how spam is formed in different cultural contexts can hamper direct transfer.

Strategies

Train separate models per language or region and maintain a global model for shared features (e.g., IP, domain, user signals).

Use methods like multi-task learning or domain adaptation frameworks, though these can be more complex to implement.

How do these models cope with partially labeled or noisy training data?

Spam labels might be imperfect: user feedback can be subjective, or some emails remain unverified for spam status. Noise and partial labeling affect both generative and discriminative models.

Detailed Explanation and Pitfalls

Naive Bayes with Noisy Labels: If the labeled dataset contains mislabeled examples, it skews estimates of P(x|y) and P(y). A small but systematic labeling error (e.g., user incorrectly marking an email as spam) can distort the likelihood significantly.

Logistic Regression with Noisy Labels: Log-loss or similar objective functions penalize incorrect predictions but cannot easily distinguish between an inherently ambiguous example vs. a mislabeled example. Over time, the model may underfit or assign suboptimal weights to certain features.

Partial Labeling

Some emails might be labeled “spam” or “not spam,” but a large body of emails is unlabeled. Generative models can incorporate unlabeled data by modeling P(x), but only if we have a good prior or structural assumptions.

Discriminative approaches typically ignore unlabeled data unless combined with semi-supervised learning.

Mitigation

Use outlier detection or iterative re-labeling with human verification for suspicious examples.

Implement semi-supervised or weakly supervised methods that leverage both labeled and unlabeled data.

Filter out or downweight examples with questionable labels.

Could the independence assumptions in Naive Bayes lead to specific failures if certain groups of words commonly occur together?

Naive Bayes assumes each feature is conditionally independent given the class, but in real text, word co-occurrences are frequent (e.g., “Viagra” and “cheap” often appear together in spam).

Detailed Explanation and Pitfalls

Violation of Independence

Strong correlations between words can cause the model to double-count shared evidence. For instance, if “cheap” and “Viagra” each strongly indicate spam, Naive Bayes might overly boost the probability when both appear together.

If correlated words appear frequently in non-spam contexts (e.g., legitimate medical emails referencing “Viagra” in a cautionary manner), the model might yield more false positives.

Domain-Specific Issues

In certain industries (pharmaceutical, finance), these correlated terms might be perfectly legitimate.

Overfitting to correlated features can degrade generalization if new spam messages use synonyms or obfuscations.

Mitigation

Use n-gram features that capture word pairs or short phrases.

Switch to or integrate with a discriminative approach that can account for correlations, or use more advanced Bayesian techniques (e.g., Bayesian networks) that relax independence assumptions.
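The double-counting effect is easy to reproduce numerically. In the sketch below, a second feature that is a perfect copy of the first carries no new information, yet Naive Bayes multiplies its likelihood ratio in again. The ratio of 4 and the equal prior are arbitrary values picked for the example.

```python
def nb_posterior(likelihood_ratios, prior_spam=0.5):
    """Naive Bayes posterior from per-feature ratios P(x_i | spam) / P(x_i | ham)."""
    odds = prior_spam / (1.0 - prior_spam)
    for r in likelihood_ratios:
        odds *= r  # independence assumption: every feature counts as fresh evidence
    return odds / (1.0 + odds)

one_word = nb_posterior([4.0])          # evidence from a single word
duplicated = nb_posterior([4.0, 4.0])   # same word plus a perfectly correlated twin
print(one_word, duplicated)
```

The correct posterior with the redundant feature is still 0.8, but Naive Bayes reports about 0.94. The ranking of classes is often unaffected, which is why accuracy can remain good even when the probabilities are overconfident.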

How might explainability or transparency demands influence the choice between Naive Bayes and Logistic Regression?

User or regulatory demands for explainable AI may require insight into why certain emails are flagged.

Detailed Explanation and Pitfalls

Naive Bayes Interpretability

One can examine P(x_i|spam) vs. P(x_i|not_spam) to see which features most strongly differentiate the classes. This can be intuitive for categorical features (like word presence or absence).

Potential pitfall: The independence assumption can simplify feature interpretation, but correlated features might be misleading in actual usage.

Logistic Regression Interpretability

The weight vector indicates how each feature influences the log-odds of spam. Features with large positive weights are strong spam indicators; large negative weights are strong not-spam indicators.

Potential pitfall: If the model includes complex interactions or higher-order features, interpretation becomes less straightforward.

Edge Cases

If an oversight board needs fine-grained explanations, a simpler model (with fewer features and no interaction terms) might be favored over a large-scale model with many engineered features.

Non-linear expansions can obscure how a single feature influences predictions.

Effective communication of why an email is flagged can improve user trust. Both models offer relatively straightforward ways to extract feature importance compared to more complex classifiers like deep neural networks or random forests.
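As a rough sketch of how such explanations could be extracted, the code below ranks the words in one email by their Naive Bayes log-likelihood ratio. The toy corpus and the helper names `log_likelihood_ratios` and `explain` are assumptions for illustration. The same `explain` function would work with a dictionary of Logistic Regression weights, since both are additive contributions to the spam log-odds.

```python
import math
from collections import Counter

def log_likelihood_ratios(spam_docs, ham_docs, alpha=1.0):
    """log P(w | spam) - log P(w | ham) for every word, with Laplace smoothing."""
    spam, ham = Counter(), Counter()
    for d in spam_docs:
        spam.update(d)
    for d in ham_docs:
        ham.update(d)
    vocab = set(spam) | set(ham)
    s_tot = sum(spam.values()) + alpha * len(vocab)
    h_tot = sum(ham.values()) + alpha * len(vocab)
    return {w: math.log((spam[w] + alpha) / s_tot) - math.log((ham[w] + alpha) / h_tot)
            for w in vocab}

def explain(tokens, word_scores, top_k=3):
    """Words in this email ranked by absolute contribution to the spam log-odds."""
    contrib = [(w, word_scores.get(w, 0.0)) for w in set(tokens)]
    return sorted(contrib, key=lambda kv: abs(kv[1]), reverse=True)[:top_k]

# Hypothetical tokenized training emails.
spam_docs = [["free", "cash", "now"], ["free", "prize"]]
ham_docs = [["meeting", "now"], ["project", "meeting"]]

ratios = log_likelihood_ratios(spam_docs, ham_docs)
top = explain(["free", "now", "meeting"], ratios, top_k=2)
print(top)
```

Positive scores point toward spam and negative scores toward not spam, so the output can be shown to a user as "flagged mainly because of these words".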

How might each model be integrated into a streaming system where new emails arrive in real-time?

Spam detection typically needs to occur at high throughput and low latency, especially for large email providers.

Detailed Explanation and Pitfalls

Naive Bayes in Streaming

One can update counts for P(x|y) and P(y) as new labeled emails come in. If the system discards older data too aggressively, it might lose historical context.

Pitfall: Large memory usage if you store big vocabularies and historical counts for a long period. Frequent updates can also lead to noise if spam campaigns fluctuate.

Logistic Regression in Streaming

Can be updated using online gradient descent or incremental learning techniques. This typically requires a continuous stream of labeled examples.

Pitfall: If labels come with delay (e.g., a user only marks an email as spam weeks later), the model’s updates may lag behind new spam patterns.

Real-Time Requirements

Both models can classify incoming emails very quickly once trained, but the adaptation speed to new spam tactics can differ.

Overfitting might occur if too many quick updates happen in response to short-lived spam campaigns.

Balancing adaptation with stability is crucial: a purely online approach might chase noise, while a purely batch approach might be slow to adapt to shifting spam strategies.
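One simple way to trade adaptation against stability is to let old Naive Bayes counts fade gradually rather than dropping them outright. The sketch below uses exponential decay; the class `DecayedCounts` and the decay factor are hypothetical, and decaying every count on each update is done only for clarity (a production system would likely decay lazily or in batches).

```python
from collections import defaultdict

class DecayedCounts:
    """Per-class word counts with exponential forgetting of older emails."""

    def __init__(self, decay=0.9):
        self.decay = decay  # closer to 1.0 = longer memory, slower adaptation
        self.counts = defaultdict(lambda: defaultdict(float))

    def update(self, tokens, label):
        # Shrink all existing counts, then add the new email at full weight.
        for words in self.counts.values():
            for w in words:
                words[w] *= self.decay
        for w in tokens:
            self.counts[label][w] += 1.0

dc = DecayedCounts(decay=0.5)
dc.update(["cash"], "spam")      # older campaign
dc.update(["crypto"], "spam")    # newer campaign
dc.update(["crypto"], "spam")
print(dict(dc.counts["spam"]))
```

The decay factor plays the role of the adaptation knob: a small value chases recent campaigns quickly but forgets history, while a value near 1 behaves like ordinary cumulative counting.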

How do we handle interpretability if the model is used as part of a larger pipeline or ensemble?

Many real-world spam detection systems combine multiple signals and algorithms. Ensuring overall explainability becomes more complex when you merge generative and discriminative components.

Detailed Explanation and Pitfalls

Ensemble Complexity

If part of the system is Naive Bayes, another part is Logistic Regression, and further modules might be rules or heuristics, attributing the final decision to any single component is non-trivial.

Weighted or voting-based ensembles complicate which model “dominates” a particular decision.

Partial Explainability

Each sub-model can produce its own explanation (e.g., word likelihoods from Naive Bayes or feature weights from Logistic Regression).

Aggregating these explanations might result in conflicting narratives if the sub-models disagree.

Mitigation

Provide a final aggregated score along with a breakdown from each sub-component.

Offer a high-level global explanation (like key features used across the ensemble) supplemented by local explanations from each model.

Edge Cases

When the ensemble rarely uses a certain sub-model, that sub-model’s explanations might not matter much in practice but could still create confusion.

If a sub-model is consistently overruled by the ensemble, it might be worth removing or retraining.

Ensuring transparency in multi-model pipelines requires careful design so that each model’s contribution is properly weighted and communicated.
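A minimal sketch of the "aggregated score plus breakdown" idea follows, assuming a weighted average of per-model spam probabilities. The component names, probabilities, and weights are invented for the example, and a weighted average is only one of several possible combination rules.

```python
def ensemble_score(component_probs, weights):
    """Weighted average of per-model spam probabilities, with each model's share."""
    total_w = sum(weights[name] for name in component_probs)
    breakdown = {name: weights[name] * p / total_w
                 for name, p in component_probs.items()}
    return sum(breakdown.values()), breakdown

# Hypothetical outputs of three sub-models for one email.
probs = {"naive_bayes": 0.9, "logistic_regression": 0.6, "rules": 0.2}
weights = {"naive_bayes": 1.0, "logistic_regression": 2.0, "rules": 1.0}

score, breakdown = ensemble_score(probs, weights)
dominant = max(breakdown, key=breakdown.get)
print(score, breakdown, dominant)
```

Reporting the breakdown next to the final score makes it visible which sub-model drove a decision, and a component whose share is consistently negligible becomes a candidate for removal or retraining.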