ML Interview Q Series: How would you model which local merchants to prioritize when expanding a food delivery platform?

Comprehensive Explanation

One effective way to address this problem is to frame it as a classification or ranking task, where your goal is to predict how valuable a merchant might be if you bring them onto the platform. The target variable could be whether a merchant is likely to yield high order volume or revenue within a certain time period.

Data Gathering and Feature Engineering

Data sources typically include:

Merchant profile details such as cuisine type, location, average price range, popularity in the local area, and customer ratings on other platforms.

Customer demand signals, for instance demographic insights for the region, competitor penetration, and historical order patterns if available.

Operational considerations such as the merchant’s in-house delivery capacity, average preparation time, or historical partnerships.

After collecting relevant data, you would engineer features to capture important predictive signals. For example, you might encode merchant ratings, approximate foot traffic (if you have location-based data), or local demographic match (customer preferences).
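As a minimal sketch of this step, the snippet below turns one raw merchant record into a numeric feature vector. The field names (`cuisine`, `rating`, `weekly_foot_traffic`) and the cuisine vocabulary are hypothetical placeholders, not a prescribed schema.

```python
import math

# Hypothetical cuisine vocabulary; in practice it would come from the training data.
CUISINES = ["pizza", "sushi", "burgers", "indian"]

def build_features(merchant):
    """Convert a raw merchant dict into a fixed-length list of floats."""
    # One-hot encode the cuisine type (all zeros if the cuisine is unseen).
    cuisine_onehot = [1.0 if merchant.get("cuisine") == c else 0.0 for c in CUISINES]
    # Scale a 1-5 star rating to [0, 1]; fall back to a neutral 3.0 when missing.
    rating = merchant.get("rating")
    rating_scaled = ((rating if rating is not None else 3.0) - 1.0) / 4.0
    # Log-transform foot traffic to dampen heavy-tailed counts.
    log_traffic = math.log1p(merchant.get("weekly_foot_traffic", 0))
    # Explicit flag so the model can tell a real rating from a filled-in one.
    rating_missing = 1.0 if rating is None else 0.0
    return cuisine_onehot + [rating_scaled, log_traffic, rating_missing]

example = {"cuisine": "sushi", "rating": 4.5, "weekly_foot_traffic": 1200}
features = build_features(example)
```

Keeping a missing-value flag alongside the filled-in rating is one common design choice; it lets the model treat "no rating" as a signal in its own right.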

Choice of Model

A common baseline approach involves a logistic regression model to classify merchants as “high-value” or “low-value” for acquisition. For more complex patterns, you might use tree-based models like XGBoost or Random Forests. For simplicity, consider logistic regression first.

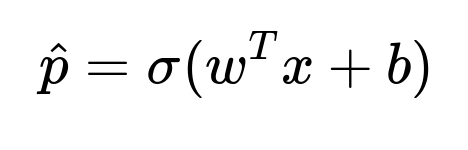

Below is the key formula for logistic regression:

Here:

hat{p}is the predicted probability that the merchant is a high-potential acquisition candidate.sigmais the logistic (sigmoid) function that outputs a value in the range (0,1).wis the parameter vector learned from training data, reflecting the importance of each feature inx.bis the bias term.xis the feature vector representing characteristics of the merchant (e.g., average rating, location variables, expected order volume).
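To make the logistic model concrete, here is a small hand-computed prediction. The weights, bias, and three features are made-up illustrative numbers, not learned values.

```python
import math

def sigmoid(z):
    """Logistic function: maps any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def predict_proba(w, b, x):
    """Predicted probability p_hat = sigmoid(w . x + b)."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return sigmoid(z)

# Illustrative parameters (assumed, not learned from data).
w = [1.2, 0.8, -0.5]   # weights for: scaled rating, demand index, competitor density
b = -1.0
x = [0.9, 0.5, 0.2]

z = sum(wi * xi for wi, xi in zip(w, x)) + b   # 1.08 + 0.40 - 0.10 - 1.00 = 0.38
p_hat = predict_proba(w, b, x)                 # roughly 0.59
```

A positive weight pushes the probability up as the feature grows, while a negative weight (here on competitor density) pulls it down.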

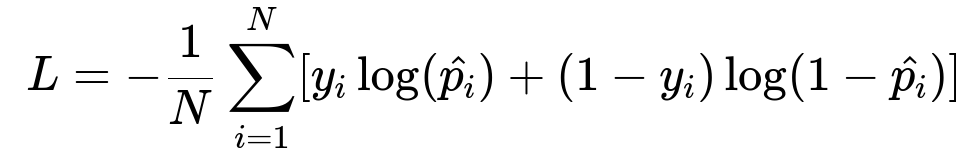

To optimize this model, we often use the binary cross-entropy or log loss as the cost function:

Here:

Nis the number of training samples (merchants).y_iindicates whether merchantiwas ultimately successful or not in historical data (1 or 0).hat{p_i}is the predicted probability that merchantiis a high-value acquisition.The summation is over all merchants in the training set.
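The log loss can be computed directly from its definition. The sketch below uses toy labels and predictions; the clipping constant `eps` is an assumption added only to avoid taking the logarithm of zero.

```python
import math

def binary_cross_entropy(y_true, p_pred, eps=1e-12):
    """Mean negative log-likelihood of binary labels under predicted probabilities."""
    total = 0.0
    for y, p in zip(y_true, p_pred):
        p = min(max(p, eps), 1.0 - eps)  # clip to keep log() finite
        total += y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return -total / len(y_true)

y_true = [1, 0, 1, 0]
confident = [0.9, 0.1, 0.8, 0.2]   # mostly right and fairly sure
uncertain = [0.5, 0.5, 0.5, 0.5]   # no information

loss_confident = binary_cross_entropy(y_true, confident)
loss_uncertain = binary_cross_entropy(y_true, uncertain)  # equals ln(2)
```

Predictions that are both correct and confident give a lower loss than uninformative 0.5 predictions, which is the behavior training tries to reward.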

Model Training

In practice, you would divide your available data into training, validation, and test sets. The model (logistic regression or a more advanced tree-based approach) will be trained to distinguish between merchants that turned out to be successful partners and those that did not, based on historical examples from previously entered markets.

Below is a simplified code snippet in Python that shows how you might train a model using scikit-learn. This is a very general illustration:

```python
import pandas as pd
from sklearn.model_selection import train_test_split
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import classification_report, roc_auc_score

# Assume 'df' is your dataframe with features in X and target in y
X = df.drop('target', axis=1)
y = df['target']

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

model = LogisticRegression()
model.fit(X_train, y_train)

predictions = model.predict(X_test)
probabilities = model.predict_proba(X_test)[:, 1]

print(classification_report(y_test, predictions))
print("ROC AUC:", roc_auc_score(y_test, probabilities))
```

Model Evaluation

Model performance can be evaluated using:

Precision, recall, F1-score to measure correctness of classifications.

ROC AUC to measure the model’s ability to distinguish between positive and negative examples across various probability thresholds.

Business-level metrics such as the additional revenue from newly acquired merchants or the number of new orders placed through these merchants.

Using domain knowledge (e.g., from local operations teams) can help refine the model by focusing on the metrics that truly capture merchant success in the local market.
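For intuition about the classification metrics, the following dependency-free sketch computes precision, recall, and F1 at a chosen probability threshold. The labels and probabilities are toy values.

```python
def precision_recall_f1(y_true, y_prob, threshold=0.5):
    """Compute precision, recall, and F1 after thresholding probabilities."""
    tp = fp = fn = 0
    for y, p in zip(y_true, y_prob):
        pred = 1 if p >= threshold else 0
        if pred == 1 and y == 1:
            tp += 1          # correctly flagged high-value merchant
        elif pred == 1 and y == 0:
            fp += 1          # flagged, but turned out low-value
        elif pred == 0 and y == 1:
            fn += 1          # missed a high-value merchant
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1

y_true = [1, 1, 0, 0, 1, 0]
y_prob = [0.9, 0.4, 0.6, 0.2, 0.7, 0.1]

strict = precision_recall_f1(y_true, y_prob, threshold=0.5)
lenient = precision_recall_f1(y_true, y_prob, threshold=0.3)
```

Lowering the threshold here raises recall at no cost to precision, but in general the two trade off, so the threshold is usually chosen with the acquisition team's capacity in mind.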

Implementation and Iterative Improvement

Once you have an acceptable baseline, you can improve the model by:

Incorporating additional data sources such as competitor intelligence or geographic and demographic signals.

Employing more powerful models like ensemble methods (XGBoost, CatBoost), neural networks for richer feature embeddings, or even advanced techniques that can integrate time series patterns.

Regularly retraining the model as more merchants from the new market join, providing actual performance data for feedback.

How would you handle data scarcity when entering a brand-new region?

A new market might lack historical data. You can:

Use transfer learning or a multi-task learning framework by training on data from established markets while allowing some market-specific tuning. This leverages similarities across different geographical regions yet adapts to local nuances.

Bootstrap data through merchant similarity matching: identify local merchants that closely resemble existing merchants from other markets in terms of size, cuisine, or location dynamics, and borrow their observed outcomes as an initial estimate.
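One simple way to sketch similarity matching is a nearest-neighbor lookup: compare a new merchant's feature vector against merchants from established markets and average the outcomes of the closest ones. The vectors, outcomes, and the choice of cosine similarity below are illustrative assumptions.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def estimate_from_neighbors(new_vec, known, k=2):
    """Average the observed outcomes of the k most similar known merchants.

    `known` is a list of (feature_vector, observed_outcome) pairs.
    """
    ranked = sorted(known, key=lambda item: cosine_similarity(new_vec, item[0]), reverse=True)
    top = ranked[:k]
    return sum(outcome for _, outcome in top) / len(top)

# Toy merchants from established markets: (features, 1 = became high-value).
known_merchants = [
    ([1.0, 0.0, 0.8], 1),
    ([0.9, 0.1, 0.7], 1),
    ([0.0, 1.0, 0.2], 0),
    ([0.1, 0.9, 0.3], 0),
]
new_merchant = [0.95, 0.05, 0.75]
prior_estimate = estimate_from_neighbors(new_merchant, known_merchants, k=2)
```

The resulting estimate can serve as a starting score until the new market produces its own labels.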

What if the model’s predictions become biased due to certain business requirements?

In real scenarios, you might have an acquisition strategy that disproportionately favors certain merchant categories. This can lead to models that skew predictions to those categories. To handle this:

Monitor feature importance and fairness metrics.

Apply constraints or weighting in the training loss function to maintain balanced predictions across merchant groups.
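A minimal sketch of the weighting idea: give each training example a weight inversely proportional to the size of its merchant group, so every group contributes equally to the loss. The group labels are hypothetical.

```python
from collections import Counter

def balanced_group_weights(groups):
    """Per-example weights such that every group has the same total weight."""
    counts = Counter(groups)
    n_groups = len(counts)
    total = len(groups)
    return [total / (n_groups * counts[g]) for g in groups]

# Toy data: chains are over-represented relative to independents.
categories = ["chain", "chain", "chain", "independent"]
weights = balanced_group_weights(categories)
# Each "chain" example gets about 0.67; the single "independent" gets 2.0.
```

Weights like these can be passed as `sample_weight` to scikit-learn's `LogisticRegression.fit`, which scales each example's contribution to the loss.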

How do you incorporate temporal factors?

Merchant performance might vary seasonally or based on local events. Integrating time-based features, such as monthly or weekly trends, can boost predictive power. You can:

Add features like merchant sales growth over recent months or city-wide demand surges around certain holidays.

Use rolling windows for training data so that the model captures the evolving nature of the market.
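The time-based features mentioned above could be derived roughly as follows. The monthly order counts and the three-month window are assumptions chosen for illustration.

```python
def rolling_mean(values, window):
    """Trailing mean for each position that has a full window of history."""
    return [
        sum(values[i - window + 1 : i + 1]) / window
        for i in range(window - 1, len(values))
    ]

def recent_growth(values, window=3):
    """Relative change between the latest window's mean and the one before it."""
    if len(values) < 2 * window:
        return None  # not enough history to compare two windows
    recent = sum(values[-window:]) / window
    previous = sum(values[-2 * window : -window]) / window
    return (recent - previous) / previous if previous else None

monthly_orders = [100, 110, 120, 130, 150, 170]
smoothed = rolling_mean(monthly_orders, window=3)
growth = recent_growth(monthly_orders, window=3)  # (150 - 110) / 110
```

Returning `None` for short histories keeps the feature honest; downstream code can then treat it as missing rather than as zero growth.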

What about model interpretability for internal stakeholders?

Acquisition teams and executives might want intuitive explanations. With models such as XGBoost, you can:

Generate feature importance scores to identify which signals are most influential in predicting merchant success.

Use SHAP (SHapley Additive exPlanations) to visualize how each feature affects individual predictions, giving the team confidence in the decision process.

How would you handle merchants that have minimal prior online presence?

Not all merchants will have rich data footprints. To manage sparse features:

Incorporate publicly accessible data or approximate foot traffic from location-based data.

Group merchants into “similar” clusters (e.g., by cuisine type or typical price range) and assign cluster-level estimates to fill in missing fields.
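Cluster-level imputation can be sketched as follows: missing values are filled with the median of the merchant's cluster, falling back to the global median when the cluster has no observed values. The field names and the use of cuisine as the cluster key are hypothetical.

```python
from collections import defaultdict
from statistics import median

def impute_by_cluster(merchants, cluster_key, field):
    """Return copies of the merchant dicts with `field` filled from cluster medians."""
    by_cluster = defaultdict(list)
    for m in merchants:
        if m.get(field) is not None:
            by_cluster[m[cluster_key]].append(m[field])
    all_values = [v for vals in by_cluster.values() for v in vals]
    global_median = median(all_values) if all_values else None

    filled = []
    for m in merchants:
        row = dict(m)  # copy so the input records stay untouched
        if row.get(field) is None:
            vals = by_cluster.get(row[cluster_key])
            row[field] = median(vals) if vals else global_median
            row[field + "_imputed"] = True
        else:
            row[field + "_imputed"] = False
        filled.append(row)
    return filled

merchants = [
    {"name": "A", "cuisine": "pizza", "rating": 4.0},
    {"name": "B", "cuisine": "pizza", "rating": 4.4},
    {"name": "C", "cuisine": "pizza", "rating": None},
    {"name": "D", "cuisine": "thai", "rating": None},
    {"name": "E", "cuisine": "sushi", "rating": 3.0},
]
filled = impute_by_cluster(merchants, cluster_key="cuisine", field="rating")
```

Merchant C inherits the pizza cluster's median, while D, whose cluster has no ratings at all, falls back to the global median.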

What if the objective changes to a ranking approach rather than pure classification?

Sometimes you might want to rank merchants from most promising to least promising. You can:

Use a learning-to-rank approach like LambdaMART, which directly optimizes ranking metrics such as NDCG (Normalized Discounted Cumulative Gain).

Use your classification model’s predicted probabilities directly as a ranking score.
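To show what NDCG measures, the sketch below ranks merchants by a score (for example, a classifier's predicted probability) and compares the result with the ideal ordering. It uses the common `2**rel - 1` gain convention; the scores and graded relevance labels are toy values.

```python
import math

def dcg(relevances):
    """Discounted cumulative gain for relevances listed in ranked order."""
    return sum((2 ** rel - 1) / math.log2(pos + 2) for pos, rel in enumerate(relevances))

def ndcg_at_k(scores, relevances, k):
    """NDCG@k of the ranking induced by sorting on `scores` (descending)."""
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    ranked_rel = [relevances[i] for i in order][:k]
    ideal_rel = sorted(relevances, reverse=True)[:k]
    ideal = dcg(ideal_rel)
    return dcg(ranked_rel) / ideal if ideal > 0 else 0.0

relevance = [0, 2, 1, 1]             # graded merchant value (toy labels)
good_scores = [0.2, 0.9, 0.6, 0.4]   # puts the best merchant first
bad_scores = [0.9, 0.1, 0.2, 0.3]    # puts the worst merchant first

ndcg_good = ndcg_at_k(good_scores, relevance, k=4)
ndcg_bad = ndcg_at_k(bad_scores, relevance, k=4)
```

Because the discount shrinks with position, mistakes near the top of the list cost more than mistakes near the bottom, which matches a setting where the sales team only contacts the first few merchants.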

How do you deal with cold starts?

When brand-new merchants appear, your model may struggle due to little historical information. Two strategies:

Rely on population-level or cluster-level priors until enough transaction data becomes available.

Build a partial feedback loop that frequently updates the model as soon as new merchants gain initial orders, refining the predictions with actual performance data.
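One way to combine a cluster-level prior with early observations is a shrinkage estimate in the style of a Beta-Binomial posterior mean. In this sketch a "success" is a hypothetical event, such as a day on which the merchant hit its order target, and `prior_strength` is an assumed tuning knob.

```python
def blended_success_rate(successes, trials, prior_rate, prior_strength=20):
    """Posterior-mean style blend of a prior rate with observed successes.

    The prior behaves like `prior_strength` pseudo-observations, so it dominates
    when `trials` is small and fades as real data accumulates.
    """
    return (successes + prior_rate * prior_strength) / (trials + prior_strength)

cluster_prior = 0.30  # assumed success rate for similar merchants

no_data = blended_success_rate(0, 0, cluster_prior)         # falls back to the prior
early = blended_success_rate(8, 10, cluster_prior)          # pulled toward 0.8, still cautious
mature = blended_success_rate(160, 200, cluster_prior)      # mostly driven by observed data
```

The same observed rate of 0.8 produces a much higher estimate at 200 trials than at 10, reflecting how confidence grows with evidence.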

Could you share a brief code example for a ranking scenario?

Below is a simplified pseudo-example using the LightGBM library’s built-in ranking objective:

```python
import lightgbm as lgb

# X_train, y_train: your training features and relevance scores
# groups: array of group sizes, indicating how many merchants belong to each 'query' or region
train_data = lgb.Dataset(X_train, label=y_train, group=groups)

params = {
    'task': 'train',
    'boosting_type': 'gbdt',
    'objective': 'lambdarank',
    'metric': 'ndcg',
    'learning_rate': 0.05,
    'num_leaves': 31
}

ranker = lgb.train(params, train_data, num_boost_round=100)
predicted_scores = ranker.predict(X_test)

# Then you can sort merchants based on predicted_scores to get your final ranking.
```

This approach directly optimizes a ranking-oriented metric and is often more appropriate if the ultimate business decision is “which merchants first?” rather than a simple yes/no classification.

Below are additional follow-up questions

How would you address privacy and compliance concerns when gathering merchant data from external sources?

One of the most critical considerations is ensuring that any data obtained, particularly from external platforms or unofficial channels, respects applicable privacy laws and regulations. If the model relies on information such as average foot traffic or competitor order volume, there might be constraints on how that data can be collected, aggregated, and used. An approach to mitigate these concerns involves aggregating and anonymizing data so no single merchant or customer is personally identifiable. You can set up secure data pipelines where all sensitive identifiers are hashed or removed before reaching the modeling stage. Furthermore, you can implement differential privacy techniques that add noise to the aggregated data in a controlled way. Compliance with regulations such as GDPR or CCPA might require transparent data usage policies and periodic audits of data collection practices. Failing to address these issues properly can lead to both reputational risk and legal liabilities.
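As a rough sketch of two of these safeguards, the snippet below pseudonymizes merchant identifiers with a keyed hash and adds Laplace noise to an aggregate count, which is the basic mechanism behind many differential privacy schemes. The key, identifiers, and `epsilon` value are placeholders, and a production system would need proper key management and a reviewed privacy budget.

```python
import hashlib
import hmac
import random

def pseudonymize(merchant_id, secret_key):
    """Replace an identifier with a keyed SHA-256 digest (stable for a given key)."""
    return hmac.new(secret_key, merchant_id.encode("utf-8"), hashlib.sha256).hexdigest()

def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponentials with mean `scale`."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)

def noisy_count(true_count, epsilon, rng, sensitivity=1.0):
    """Release a count with Laplace noise of scale sensitivity / epsilon."""
    return true_count + laplace_noise(sensitivity / epsilon, rng)

SECRET_KEY = b"replace-with-a-managed-secret"  # hypothetical placeholder
token = pseudonymize("merchant-123", SECRET_KEY)

rng = random.Random(42)
released = noisy_count(250, epsilon=1.0, rng=rng)
```

A smaller `epsilon` means more noise and stronger privacy, at the cost of less accurate aggregates.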

What if the target metric changes over time as the business matures in the new market?

Initially, the business may focus on short-term metrics like the number of newly onboarded merchants, but once growth stabilizes, other metrics, such as long-term profitability or order frequency, might become more important. This shifting emphasis can affect how the model is trained and evaluated. One approach is to build a multi-objective framework that captures different phases of the market’s lifecycle. For example, you might create a composite metric that blends short-term growth (e.g., sign-up rate) with mid-term retention (e.g., re-order frequency). Alternatively, you can train separate models optimized for different objectives and switch which model’s output you rely on based on the market’s current state. A continuous monitoring system can help detect when the primary business goal needs to change, ensuring the modeling approach remains aligned with evolving strategy.

How would you handle real-time updates to merchant data when new information becomes available?

Once merchants go live on the platform, their performance data (order volume, completion rates, etc.) may become available in near real time. Incorporating this data promptly can boost model accuracy because fresh information often reveals genuine changes in consumer behavior and merchant readiness. You might adopt an online learning framework or incremental training strategy where models are updated on a rolling basis with small batches of new data. If re-training the entire model is costly, one possibility is to implement a two-stage approach where a slow-to-update baseline model captures broad trends, and a lightweight model refines predictions based on the latest events. Feature stores that maintain a version-controlled record of each feature as it evolves can help ensure consistency in data inputs across all stages of deployment.
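The incremental-training idea can be illustrated with a single stochastic gradient step for logistic regression, applied as each new observation arrives. The learning rate and the toy data stream are assumptions.

```python
import math

def predict(w, b, x):
    """Logistic regression probability for one feature vector."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))

def sgd_update(w, b, x, y, lr=0.1):
    """One gradient step on the log loss for a single (x, y) observation."""
    error = predict(w, b, x) - y  # gradient of the log loss w.r.t. the logit
    new_w = [wi - lr * error * xi for wi, xi in zip(w, x)]
    new_b = b - lr * error
    return new_w, new_b

# Start from an uninformed model and fold in a small stream of fresh outcomes.
w, b = [0.0, 0.0], 0.0
stream = [([1.0, 2.0], 1), ([0.5, 0.1], 0), ([1.2, 1.8], 1)]
for x, y in stream:
    w, b = sgd_update(w, b, x, y)

p_after = predict(w, b, [1.0, 2.0])
```

Each update is cheap, so it can run on small batches between the less frequent full retrains of a baseline model.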

How do you validate that a pilot launch in the new market reflects the long-term performance of the merchants you acquire?

A pilot launch might have promotional discounts or special marketing pushes, causing user demand to spike in ways that do not accurately represent normal conditions. To mitigate this risk, you can design the pilot to run in carefully selected districts or with a representative subset of merchants. You can also extend the duration of the pilot and gradually phase out promotions so that the model sees a more typical usage pattern. Matching or stratification techniques can help you identify a similar set of merchants in existing markets to compare performance and reduce external confounders. You should also collect feedback from on-the-ground teams who can identify which differences in performance are due to marketing promotions versus inherent merchant strengths. Analyzing these pilot outcomes can refine the model’s ability to generalize once incentives and marketing campaigns subside.

How do you manage the risk of overfitting to large, well-known merchants while missing smaller but potentially high-growth merchants?

Models trained on historical data might be biased toward restaurants or stores that are already popular, failing to notice niche merchants with rising potential. One tactic is to ensure a balanced training set that includes examples of both well-established and relatively unknown merchants. You can experiment with cost-sensitive learning or upweight minority classes representing smaller merchants. To detect hidden high-potential merchants, you might build specialized features like growth trajectory indicators or local community interest. Additionally, deploying an exploration strategy where you periodically onboard a small percentage of merchants that the model rates as borderline candidates can uncover gems that the model may not be confident about yet. Monitoring how these borderline candidates perform in reality can provide valuable feedback signals to adjust the model’s bias toward large, established players.
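The exploration strategy described above might look like the following sketch: most of the onboarding budget goes to the top-scored merchants, and a small share is reserved for randomly chosen borderline candidates. The budget split and the score band that defines "borderline" are assumed values.

```python
import random

def select_with_exploration(scores, budget, explore_fraction=0.2, band=(0.4, 0.6), seed=0):
    """Split an onboarding budget between top-scored and borderline merchants.

    `scores` maps merchant id -> predicted probability of success.
    """
    rng = random.Random(seed)
    n_explore = round(budget * explore_fraction)
    ranked = sorted(scores, key=scores.get, reverse=True)
    exploit = ranked[: budget - n_explore]
    # Borderline candidates: not already chosen and scored inside the band.
    borderline = [m for m in ranked if m not in exploit and band[0] <= scores[m] <= band[1]]
    explore = rng.sample(borderline, min(n_explore, len(borderline)))
    return exploit, explore

scores = {
    "m1": 0.95, "m2": 0.90, "m3": 0.85, "m4": 0.80,
    "m5": 0.75, "m6": 0.55, "m7": 0.45, "m8": 0.20,
}
exploit, explore = select_with_exploration(scores, budget=5)
```

Outcomes from the explored merchants provide labels in a score range the model would otherwise never observe, which helps correct a bias toward established players.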

How would you incorporate unstructured data, such as images or text reviews, into the merchant acquisition model?

Unstructured data like user reviews, images of food items, or ambiance photos can provide signals about merchant quality that numerical features might miss. You can extract embeddings from pre-trained language or vision models—such as BERT-like transformers for text or convolutional neural networks for images—and then incorporate those embeddings as features into your primary model. For example, text reviews from local forums or social media could reveal subtle sentiment signals about a merchant’s menu changes or service reliability. These embeddings should be thoroughly validated to ensure they add incremental predictive power rather than introducing noise. Proper dimensionality reduction techniques can help handle large embedding vectors efficiently. It is also important to guard against domain mismatches by fine-tuning the embedding models on data that closely resemble your new market’s typical language style or culinary culture.

How do you determine whether to run batch inference or real-time inference for scoring new merchants?

Deciding whether you need to score new merchants in real time or through scheduled batch processing depends on operational constraints and the speed of business decisions. If you only acquire merchants after a multi-week negotiation, real-time scoring might be unnecessary and computationally expensive. In this case, a nightly or weekly batch job that scores prospective leads could be sufficient. Conversely, if your platform allows merchants to sign up instantly and wants an immediate predictive evaluation of their potential, real-time inference might be essential to trigger rapid onboarding decisions. You can deploy a real-time inference endpoint backed by a scalable system like Kubernetes or serverless functions. To avoid inconsistency, you might maintain a unified model artifact that gets periodically updated, ensuring both batch and real-time inference rely on the same model weights and feature transformations.

How can you ensure that the final acquisition decisions reflect not only model predictions but also business and operational constraints?

Even a highly accurate model might recommend merchants that are logistically difficult to support or located in areas outside your current coverage zone. You can define feasibility checks on model output: for example, automatically filter out recommendations for merchants that exceed a certain delivery radius or that fail basic partnership criteria. Post-processing steps can handle alignment with local marketing campaigns or operational capacity. In some cases, you might integrate these constraints directly into the model by applying a constrained optimization approach, where the training objective penalizes or disallows certain solutions that violate business rules. The final stage can blend the model’s predictions with a rules-based system to generate a short list of recommended targets, ensuring you do not waste resources on merchants you cannot effectively serve.
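A rules-based post-processing step of this kind can be as simple as a filter followed by a sort. The field names (`distance_km`, `meets_partner_criteria`, `score`) and thresholds are hypothetical.

```python
def shortlist(candidates, max_radius_km, top_n):
    """Keep only feasible merchants, then return the top_n by model score."""
    feasible = [
        c for c in candidates
        if c["distance_km"] <= max_radius_km and c["meets_partner_criteria"]
    ]
    feasible.sort(key=lambda c: c["score"], reverse=True)
    return feasible[:top_n]

candidates = [
    {"name": "A", "score": 0.92, "distance_km": 12.0, "meets_partner_criteria": True},
    {"name": "B", "score": 0.81, "distance_km": 3.5, "meets_partner_criteria": True},
    {"name": "C", "score": 0.77, "distance_km": 2.0, "meets_partner_criteria": False},
    {"name": "D", "score": 0.64, "distance_km": 4.2, "meets_partner_criteria": True},
]
targets = shortlist(candidates, max_radius_km=5.0, top_n=2)
target_names = [c["name"] for c in targets]
```

Merchant A has the highest score but sits outside the delivery radius, so it never reaches the short list.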

How would you deal with scenario analysis if macroeconomic changes suddenly reduce customer spending in the new market?

The level of disposable income or economic stability in a region can shift rapidly, affecting order volumes and merchant viability. You can build macroeconomic features (inflation rate, unemployment rate, consumer confidence index) into the model so it can learn correlations between economic indicators and merchant success. If you anticipate severe changes, you might conduct stress tests or scenario analyses by adjusting these macro-level features to simulate recession or expansion conditions. This approach reveals which merchants are most robust under negative macro shifts. In practice, you can create multiple sets of model predictions for different economic scenarios and weigh them by the likelihood of each scenario as per the business’s economic outlook. This helps you identify the “best bets” that remain strong even under adverse economic conditions.
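Weighting predictions across scenarios can be sketched as an expected value. The scenario probabilities and per-scenario scores below are invented for illustration; in practice the scores would come from re-running the model with adjusted macro features.

```python
def expected_scores(scenario_scores, scenario_probs):
    """Probability-weighted average score per merchant across scenarios."""
    merchants = next(iter(scenario_scores.values())).keys()
    return {
        m: sum(scenario_probs[s] * scenario_scores[s][m] for s in scenario_probs)
        for m in merchants
    }

# Assumed likelihood of each economic scenario.
scenario_probs = {"baseline": 0.6, "recession": 0.4}

# Model scores per merchant under each scenario (toy numbers).
scenario_scores = {
    "baseline": {"budget_diner": 0.60, "fine_dining": 0.80},
    "recession": {"budget_diner": 0.55, "fine_dining": 0.20},
}

expected = expected_scores(scenario_scores, scenario_probs)
best_bet = max(expected, key=expected.get)
```

Here the fine-dining merchant looks stronger under the baseline alone, but the budget diner wins once the recession scenario is weighted in.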

How do you measure long-term effectiveness of the model after merchants have been onboarded?

Short-term metrics such as immediate order volume or first-month retention might not capture the full impact of the acquisition strategy. A more comprehensive evaluation requires tracking the total lifetime value (LTV) of each onboarded merchant, incorporating recurring orders, expansion into multiple locations, and cross-promotion synergies. You can implement a time horizon for re-checking merchant performance, such as six months or a year after initial onboarding, to update labels in the training data. Creating cohort analyses of merchants onboarded at different times can shed light on how the model’s recommendations evolve and whether it consistently identifies high-potential merchants. If real-world outcomes diverge from predictions, you can perform in-depth root-cause analyses to see whether the model missed certain contextual factors or if the market conditions shifted drastically. By maintaining a feedback loop that captures actual merchant performance over a longer timeline, you ensure the model continuously refines its predictive accuracy.