ML Interview Q Series: How would you infer a cardholder’s residence from spending records for fraud detection?

Comprehensive Explanation

One way to approach inferring a person's primary residence from their credit card data is to analyze the distribution of their transaction locations and identify the place where they conduct most of their regular activities. This could be accomplished through a variety of statistical and machine-learning techniques that look for the center or densest region of the transaction data.

Frequency-Based Location Estimation

A straightforward method is to track how often a customer makes purchases in specific locations. You can assume that the area with the highest frequency of transactions over a certain time span is most likely the person’s home location. This can work well if the user consistently makes purchases in the same area, like where they live or where they frequently spend time.
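As a minimal sketch of the frequency idea, the snippet below buckets transactions into coarse grid cells by rounding coordinates and returns the most visited cell. The function name `most_frequent_cell`, the rounding precision, and the sample points are illustrative assumptions, not part of any particular system.

```python
from collections import Counter

def most_frequent_cell(transactions, precision=2):
    """Return the most frequently visited grid cell and its share of all transactions."""
    # Rounding to 2 decimals gives cells on the order of 1 km (an assumed granularity).
    cells = Counter(
        (round(lat, precision), round(lon, precision)) for lat, lon in transactions
    )
    cell, count = cells.most_common(1)[0]
    return cell, count / len(transactions)

# Hypothetical sample: three purchases in San Francisco, one in Los Angeles.
sample = [
    (37.7749, -122.4194),
    (37.7712, -122.4189),
    (37.7730, -122.4210),
    (34.0522, -118.2437),
]
home_cell, share = most_frequent_cell(sample)
print(home_cell, share)
```

The returned share doubles as a rough confidence signal: a low share suggests that no single area dominates the customer's spending.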

Cluster or Density Estimation

In many cases, transaction data contains multiple clusters: for example, a user may regularly make purchases near home and near work. To isolate the cluster that corresponds to the true residence, you can look at additional patterns such as weekend or late-night transactions, which are more likely to reflect where the individual actually resides.

One way to formalize this is to estimate a density function over the spatial coordinates of the transactions and then pick the location at which this density is maximized. A kernel density estimation (KDE) approach can be used here. You could represent each transaction location as a point in 2D space (latitude, longitude). Given n data points x_1, x_2, ..., x_n, you can define the estimated density function f-hat(x) at any spatial coordinate x by summing contributions from each transaction location, typically using a kernel function K.

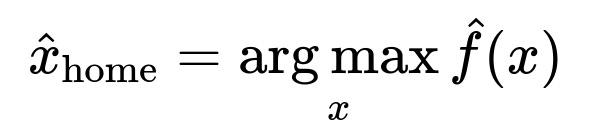

Here, n is the total number of transaction points, h is a bandwidth parameter controlling the smoothness of the kernel, d is the dimensionality (in this case, 2 if we are using latitude and longitude), and K is the kernel function (often Gaussian or another suitable function). Once you have this density function, you can find the location x that maximizes it:

$$x^{*} = \arg\max_{x} \hat{f}(x)$$

In words, this is the coordinate x in latitude-longitude space that attains the highest estimated density. Practically, you would evaluate the kernel-based density over a grid of possible coordinates or use optimization methods to locate the peak. Whichever point in space yields the largest density is considered the most probable home location.

Time-Based Weighting

Some customers spend more time at home on weekends or evenings. A purely spatial approach might not distinguish between frequent daytime transactions at work and nighttime transactions at home. Therefore, you can incorporate timestamps to give heavier weights to transactions made during presumed "home hours" (e.g., late nights, weekends) when identifying the place of residence.
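One way to sketch this weighting is a weighted Gaussian kernel density evaluated at the transaction points themselves. The "home hours" window, the weight of 3.0, the bandwidth, and the helper names are all assumptions chosen for illustration, and coordinates are treated as planar degrees, which is only reasonable over small areas.

```python
from datetime import datetime
import numpy as np

def home_hour_weight(ts, home_weight=3.0):
    """Up-weight weekend and overnight transactions (assumed 'home hours')."""
    is_weekend = ts.weekday() >= 5            # Saturday=5, Sunday=6
    is_night = ts.hour >= 20 or ts.hour < 6   # 20:00-05:59, an illustrative window
    return home_weight if (is_weekend or is_night) else 1.0

def weighted_density_peak(coords, weights, bandwidth=0.01):
    """Return the transaction point with the highest weighted Gaussian kernel density."""
    X = np.asarray(coords, dtype=float)
    w = np.asarray(weights, dtype=float)
    # Pairwise squared distances in degrees (planar approximation).
    sq_dist = ((X[:, None, :] - X[None, :, :]) ** 2).sum(axis=-1)
    density = (w[None, :] * np.exp(-sq_dist / (2 * bandwidth ** 2))).sum(axis=1)
    return tuple(float(v) for v in X[np.argmax(density)])

# Hypothetical data: three weekday lunches near work, two home-hour purchases near home.
work_points = [(37.7900, -122.4000), (37.7901, -122.4001), (37.7899, -122.3999)]
home_points = [(37.7400, -122.4500), (37.7401, -122.4501)]
timestamps = [
    datetime(2024, 3, 4, 12, 30),  # Monday lunch
    datetime(2024, 3, 5, 13, 0),   # Tuesday lunch
    datetime(2024, 3, 6, 12, 15),  # Wednesday lunch
    datetime(2024, 3, 4, 21, 30),  # Monday evening
    datetime(2024, 3, 9, 10, 0),   # Saturday morning
]
coords = work_points + home_points
weights = [home_hour_weight(ts) for ts in timestamps]

weighted_peak = weighted_density_peak(coords, weights)
unweighted_peak = weighted_density_peak(coords, [1.0] * len(coords))
print("weighted:", weighted_peak, "unweighted:", unweighted_peak)
```

In this toy data the unweighted peak falls in the busier work cluster, while the time-weighted peak moves to the home cluster.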

Outlier Removal

Travel or vacation purchases can appear as outliers far from the usual set of locations, so it is essential to filter out or down-weight them. One simple approach is to drop locations that occur very infrequently compared to the main cluster. A more robust approach is to use a density-based clustering algorithm such as DBSCAN, which labels isolated points as noise instead of forcing them into a cluster.
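A hedged sketch of clustering-based outlier removal, using scikit-learn's `DBSCAN` with the haversine metric: points that do not belong to any dense group receive the label -1 and are dropped. The 2 km radius, `min_samples=3`, and the function name `drop_sparse_points` are assumptions to tune on real data.

```python
import numpy as np
from sklearn.cluster import DBSCAN

EARTH_RADIUS_KM = 6371.0

def drop_sparse_points(coords, eps_km=2.0, min_samples=3):
    """Keep only points that DBSCAN assigns to a cluster; noise is labelled -1."""
    points = np.asarray(coords, dtype=float)
    labels = DBSCAN(
        eps=eps_km / EARTH_RADIUS_KM,   # convert km to radians on the unit sphere
        min_samples=min_samples,
        metric="haversine",             # expects [lat, lon] in radians
        algorithm="ball_tree",
    ).fit(np.radians(points)).labels_
    return points[labels != -1], labels

# Hypothetical sample: four nearby purchases plus two isolated trips.
coords = [
    (37.7749, -122.4194),
    (37.7755, -122.4180),
    (37.7740, -122.4200),
    (37.7760, -122.4190),
    (34.0522, -118.2437),   # Los Angeles
    (40.7128, -74.0060),    # New York
]
kept, labels = drop_sparse_points(coords)
print(len(kept), labels)
```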

Practical Implementation in Python

```python
import numpy as np
from sklearn.neighbors import KernelDensity

# Suppose 'transactions' is a list of (latitude, longitude) tuples
transactions = [
    (37.7749, -122.4194),
    (37.7750, -122.4195),
    (34.0522, -118.2437),
    # ... more transactions
]

# Convert to a numpy array of shape (n, 2) for scikit-learn
X = np.array(transactions)

# Instantiate the kernel density model (the bandwidth is in degrees here)
kde = KernelDensity(kernel='gaussian', bandwidth=0.01).fit(X)

# Sample across a grid of points within some bounding box.
# Note: the grid spacing should be comparable to the bandwidth, otherwise
# the search can step over a narrow density peak.
lat_range = np.linspace(np.min(X[:, 0]) - 0.1, np.max(X[:, 0]) + 0.1, 100)
lon_range = np.linspace(np.min(X[:, 1]) - 0.1, np.max(X[:, 1]) + 0.1, 100)

best_coord = None
best_score = -np.inf

for lat in lat_range:
    for lon in lon_range:
        score = kde.score([[lat, lon]])  # log-density at this grid point
        if score > best_score:
            best_score = score
            best_coord = (lat, lon)

print("Estimated Home Location:", best_coord)
```

The above snippet demonstrates one way to find the point in a specified region that maximizes the log-density. The variable best_coord would be your estimated home location.

Potential Follow-up Questions

If someone has multiple homes, how can we accommodate that scenario?

One approach is to look for multiple significant clusters rather than a single dominant one. If the second cluster is comparable in size to the first (or meets a certain threshold), you might conclude there are two residences. You can apply clustering methods like DBSCAN or Gaussian Mixture Models to detect these distinct clusters. Then, incorporate additional temporal information—if one cluster is active mainly in the winter months while the other is consistently active in the rest of the year, the user might have a seasonal residence.
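The size comparison and seasonal check can be sketched as below. The sketch assumes cluster labels have already been produced by some clustering step (with -1 marking noise, as DBSCAN does) and that each transaction carries a calendar month; the function name `candidate_residences` and the 0.5 ratio are hypothetical choices.

```python
from collections import Counter, defaultdict

def candidate_residences(labels, months, min_ratio=0.5):
    """Return clusters at least `min_ratio` as large as the biggest one, with their active months."""
    sizes = Counter(label for label in labels if label != -1)  # ignore noise points
    if not sizes:
        return {}
    largest = max(sizes.values())
    active = defaultdict(set)
    for label, month in zip(labels, months):
        if label != -1:
            active[label].add(month)
    return {
        label: sorted(active[label])
        for label, size in sizes.items()
        if size >= min_ratio * largest
    }

# Hypothetical labels: a main home (0), a winter home (1), a one-off trip (2), one noise point.
labels = [0] * 8 + [1] * 5 + [2] + [-1]
months = [3, 4, 5, 6, 7, 8, 9, 10] + [12, 1, 1, 2, 12] + [7] + [8]
residences = candidate_residences(labels, months)
print(residences)
```

Here cluster 1 is large enough to count as a second residence, and its active months (December through February) hint at a seasonal pattern.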

How would you address recent moves or changes in primary residence?

One possibility is to apply a time-decay factor where recent transactions have higher weight than older ones. You might store transaction timestamps and use an exponential decay in the density or cluster assignment. This ensures the system gradually “forgets” old locations and adapts to the user's new address.
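A minimal sketch of exponential time decay, assuming a 90-day half-life and the same coarse grid-cell bucketing idea used for frequency counts; both the half-life and the function name `decayed_home_cell` are illustrative assumptions.

```python
from collections import defaultdict
from datetime import date, timedelta

def decayed_home_cell(transactions, today, half_life_days=90.0, precision=2):
    """Pick the grid cell with the largest exponentially decayed transaction weight."""
    scores = defaultdict(float)
    for lat, lon, day in transactions:
        age_days = (today - day).days
        weight = 0.5 ** (age_days / half_life_days)  # weight halves every half_life_days
        scores[(round(lat, precision), round(lon, precision))] += weight
    return max(scores, key=scores.get)

# Hypothetical move: 20 old purchases in San Francisco, 5 recent ones in Los Angeles.
today = date(2024, 6, 1)
old_day = today - timedelta(days=400)
recent_day = today - timedelta(days=10)
history = [(37.7749, -122.4194, old_day)] * 20 + [(34.0522, -118.2437, recent_day)] * 5

current_home = decayed_home_cell(history, today)
print(current_home)
```

A raw count would still favor the old city (20 versus 5), whereas the decayed score favors the recent one. A shorter half-life adapts faster but reacts more strongly to long trips.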

How should outliers be removed without discarding legitimate travel activities?

Filtering outliers should be done based on overall frequency, distribution, and context. A robust clustering approach can naturally isolate small clusters as outliers. Alternatively, you could create rules that automatically down-weight clusters that account for only a small fraction of transactions or that occur outside normal usage hours. However, if a user’s travel pattern becomes regular, the outlier cluster might shift into a genuine second residence.

Would you combine spending category data into your analysis?

Enriching the location-based method with merchant category codes, time of day, and day of week can provide extra context. For example, late-evening grocery purchases are more likely to occur near home, while weekday lunchtime restaurant purchases might indicate a workplace. Including these categorical features in the clustering or density estimation model can sharpen the identification of the true home location.

How do you handle situations with limited data from a new user?

When the transaction history is short, the location estimate may be quite uncertain. In such cases, you might broaden your data sources by including known address data (e.g., from the account opening form) or require a certain minimum volume of transactions to achieve a reliable estimate. You can also incorporate dynamic updates to refine the location estimate as more transactions come in.
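A simple fallback rule along these lines might look like the sketch below. The threshold of 20 transactions and the function name `choose_home_estimate` are assumptions; a production system would likely calibrate the threshold against observed accuracy.

```python
def choose_home_estimate(inferred, n_transactions, address_on_file=None, min_transactions=20):
    """Prefer the address on file until enough transactions back the inferred location."""
    if n_transactions >= min_transactions:
        return inferred, "inferred"
    if address_on_file is not None:
        return address_on_file, "address_on_file"
    # No fallback available: keep the estimate but mark it as unreliable.
    return inferred, "inferred_low_confidence"

new_user = choose_home_estimate((37.77, -122.42), 4, address_on_file=(37.80, -122.27))
mature_user = choose_home_estimate((37.77, -122.42), 150, address_on_file=(37.80, -122.27))
no_address = choose_home_estimate((37.77, -122.42), 4)
print(new_user, mature_user, no_address)
```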

How do you make the solution scalable for millions of customers?

Scalability requires efficient data processing pipelines. For instance, you could:

- Implement distributed clustering or KDE on platforms like Spark.
- Maintain incremental data structures that update as new transactions arrive.
- Use approximate nearest-neighbor techniques to group transactions quickly.
- Segment customers by region and process them in parallel.

Through these methods, a system can grow to handle massive transaction datasets while maintaining high performance and accuracy in detecting a user’s primary residence.
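The incremental data structure mentioned above can be sketched as a per-customer counter of grid cells that is updated in constant time per transaction. The class name `HomeCellTracker` and the rounding precision are hypothetical, and a real deployment would keep this state in a distributed store rather than in process memory.

```python
from collections import Counter, defaultdict

class HomeCellTracker:
    """Per-customer grid-cell counts that are updated one transaction at a time."""

    def __init__(self, precision=2):
        self.precision = precision
        self.counts = defaultdict(Counter)

    def update(self, customer_id, lat, lon):
        cell = (round(lat, self.precision), round(lon, self.precision))
        self.counts[customer_id][cell] += 1

    def home(self, customer_id):
        cells = self.counts.get(customer_id)
        return cells.most_common(1)[0][0] if cells else None

tracker = HomeCellTracker()
stream = [
    ("cust_a", 37.7749, -122.4194),
    ("cust_b", 34.0522, -118.2437),
    ("cust_a", 37.7712, -122.4189),
    ("cust_a", 40.7128, -74.0060),
]
for customer_id, lat, lon in stream:
    tracker.update(customer_id, lat, lon)

print(tracker.home("cust_a"), tracker.home("cust_b"), tracker.home("cust_c"))
```

Because each customer's state is independent, the counters can be sharded by customer ID and processed in parallel.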

Below are additional follow-up questions

How do we handle partially missing or imprecise location data in credit card transactions?

Incomplete or imprecise location data can arise if the merchant's address is missing, the exact geolocation is unavailable, or the transaction is aggregated at the city level. One way to address this is to assign probabilities or confidence levels to each transaction's coordinates. You could maintain a probability distribution for each transaction instead of treating it as a single point. Then, a specialized clustering or density estimation approach might use weighted data points. If enough data points in a certain region overlap, even with moderate precision, the cluster in that location still emerges as significant. Another strategy is to incorporate known merchant address databases or external geocoding services to refine or correct uncertain location information. A potential pitfall is being overly strict and discarding every transaction deemed too imprecise, which can bias the final residence estimate if the user primarily shops at merchants with incomplete location data.
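One way to sketch "a distribution per transaction" is a mixture of isotropic Gaussians in which each transaction has its own spread: small for a precisely geocoded merchant, large for a city-level record. The sigma values, candidate points, and function name `uncertain_density_peak` are assumptions for illustration, and degrees are treated as planar coordinates.

```python
import numpy as np

def uncertain_density_peak(coords, sigmas_deg, candidates):
    """Score candidates under a mixture of 2D Gaussians, one per transaction.

    Each transaction has its own standard deviation (in degrees), so an imprecise
    record spreads its mass widely while a precise one stays sharply peaked.
    """
    X = np.asarray(coords, dtype=float)
    s = np.asarray(sigmas_deg, dtype=float)
    C = np.asarray(candidates, dtype=float)
    sq_dist = ((C[:, None, :] - X[None, :, :]) ** 2).sum(axis=-1)  # (n_candidates, n_txns)
    density = (np.exp(-sq_dist / (2 * s ** 2)) / (2 * np.pi * s ** 2)).sum(axis=1)
    return tuple(float(v) for v in C[np.argmax(density)]), density

# Hypothetical data: two precisely located purchases and three city-level records.
coords = [
    (37.7600, -122.4300),
    (37.7610, -122.4310),
    (37.8500, -122.4300),
    (37.8500, -122.4300),
    (37.8500, -122.4300),
]
sigmas_deg = [0.005, 0.005, 0.2, 0.2, 0.2]
candidates = [(37.7600, -122.4300), (37.8500, -122.4300)]

peak, density = uncertain_density_peak(coords, sigmas_deg, candidates)
print(peak, density)
```

The imprecise records still contribute everywhere within their wide radius, so nothing is discarded, but the precisely located purchases decide where the peak falls.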

How would you detect a genuinely nomadic or frequently traveling lifestyle?

Some people might not have a single stable residence, instead moving frequently (e.g., digital nomads). Traditional clustering methods might yield scattered or flat densities across multiple areas. One approach is to create temporal windows—analyzing each segment (week, month, quarter) of data separately to see if a dominant cluster emerges in that period. If every window shows a new primary location, you can classify this user as highly mobile and flag them differently in the fraud detection system. Another angle is to use anomaly detection to distinguish frequent travelers from the typical population. The challenge lies in deciding at what threshold or pattern you designate a user as nomadic, since occasional work travel or extended vacations might appear similar.
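The windowing idea can be sketched by computing the dominant coarse grid cell per calendar month and measuring how often it changes between consecutive months with data. The monthly window, the 0.1-degree cells, and the function names are assumptions; the threshold for calling someone nomadic is deliberately left open.

```python
from collections import Counter, defaultdict
from datetime import date

def dominant_cell_by_month(transactions, precision=1):
    """Map each (year, month) window to its most frequent coarse grid cell."""
    windows = defaultdict(Counter)
    for lat, lon, day in transactions:
        cell = (round(lat, precision), round(lon, precision))
        windows[(day.year, day.month)][cell] += 1
    return {w: cells.most_common(1)[0][0] for w, cells in sorted(windows.items())}

def mobility_ratio(transactions, precision=1):
    """Fraction of consecutive monthly windows whose dominant cell changes."""
    dominant = list(dominant_cell_by_month(transactions, precision).values())
    if len(dominant) < 2:
        return 0.0
    changes = sum(a != b for a, b in zip(dominant, dominant[1:]))
    return changes / (len(dominant) - 1)

# Hypothetical customers: one settled in San Francisco, one in a new city every month.
settled = [(37.7749, -122.4194, date(2024, m, d)) for m in (1, 2, 3) for d in (5, 20)]
cities = {1: (38.7223, -9.1393), 2: (13.7563, 100.5018), 3: (19.4326, -99.1332)}
nomad = [(*cities[m], date(2024, m, d)) for m in (1, 2, 3) for d in (5, 20)]

settled_ratio = mobility_ratio(settled)
nomad_ratio = mobility_ratio(nomad)
print(settled_ratio, nomad_ratio)
```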

In what ways might privacy concerns influence how we infer a user’s residence?

Inferring someone’s location from financial data can raise significant privacy issues. The data collection, storage, and algorithms used should be compliant with regulations like GDPR or other local data protection laws. Ideally, you would implement strict access controls and anonymize raw transaction data so that any residence inference is handled on a need-to-know basis. One potential pitfall is overuse of highly sensitive location data for purposes beyond fraud detection. This can erode trust if users discover their data is repurposed. Additionally, from a machine-learning perspective, building robust privacy-preserving models might involve techniques such as differential privacy to ensure that no individual user’s transaction patterns can be directly identified in aggregated outputs.

How would you manage real-time location inference when transactions arrive continuously?

Real-time fraud detection systems often need to flag suspicious transactions as they occur. One approach is to maintain a continuously updated location model for each user. When a new transaction arrives, the system checks the distance from the user’s known residence clusters to decide if the transaction is suspicious. You can implement incremental or online clustering methods that update cluster centers or density estimates on the fly without retraining from scratch. A potential challenge is balancing responsiveness with accuracy—frequent updates might cause the model to fluctuate too much, whereas infrequent updates can lead to delayed adaptation. You might adopt a mini-batch strategy, updating the model every few transactions or every hour rather than every single event.
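A rough sketch of the distance check with mini-batch updates follows. The 100 km threshold, batch size, learning rate, and class name `OnlineHomeModel` are illustrative assumptions, and the update rule (blending the home estimate toward the mean of recent nearby transactions) is just one simple option.

```python
import math

def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in kilometres between two (lat, lon) points in degrees."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dphi, dlmb = p2 - p1, math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dlmb / 2) ** 2
    return 2 * 6371.0 * math.asin(math.sqrt(a))

class OnlineHomeModel:
    """Flags far-from-home transactions and refreshes the home estimate in mini-batches."""

    def __init__(self, home, max_km=100.0, batch_size=5, learning_rate=0.2):
        self.home = home
        self.max_km = max_km
        self.batch_size = batch_size
        self.learning_rate = learning_rate
        self.buffer = []

    def process(self, lat, lon):
        suspicious = haversine_km(*self.home, lat, lon) > self.max_km
        if not suspicious:
            self.buffer.append((lat, lon))  # only nearby points feed the update
        if len(self.buffer) >= self.batch_size:
            mean_lat = sum(p[0] for p in self.buffer) / len(self.buffer)
            mean_lon = sum(p[1] for p in self.buffer) / len(self.buffer)
            lr = self.learning_rate
            self.home = (
                (1 - lr) * self.home[0] + lr * mean_lat,
                (1 - lr) * self.home[1] + lr * mean_lon,
            )
            self.buffer.clear()
        return suspicious

model = OnlineHomeModel(home=(37.7749, -122.4194))
near_flag = model.process(37.7800, -122.4100)   # a few hundred metres from home
far_flag = model.process(34.0522, -118.2437)    # Los Angeles
sf_to_la_km = haversine_km(37.7749, -122.4194, 34.0522, -118.2437)
print(near_flag, far_flag, round(sf_to_la_km))
```

Excluding flagged transactions from the update protects the model from fraudulent points, at the cost of never adapting to a genuine relocation on its own; a separate review or time-decay mechanism would need to cover that case.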

How do we differentiate between a user’s real home and a hotel location if the user stays there often?

Repeated hotel transactions can appear as a cluster, especially for those traveling on business for extended durations. A purely spatial approach can mistake this for a residence. Incorporating metadata about the merchant category (e.g., lodging) helps distinguish hotels from residential areas. Further, analyzing transaction timestamps can reveal that purchases at this location occur only intermittently or over a short period, unlike a typical home cluster where you see consistent, long-term transaction patterns. A pitfall here is that some people rent extended-stay properties that resemble hotels, so you might need additional heuristics like total duration of stay or the variety of merchants used around that location before labeling it as a non-residence.
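These heuristics can be combined in a small rule such as the sketch below. It assumes each transaction record is a dict with hypothetical `day` and `mcc` fields, treats merchant category code 7011 as the generic lodging code, and uses placeholder thresholds that would need tuning.

```python
from datetime import date

LODGING_MCCS = {7011}  # assumption: generic lodging code; real systems may map more codes

def looks_like_residence(cluster_txns, min_span_days=60, max_lodging_share=0.5):
    """Heuristic: a home cluster is long-lived and not dominated by lodging merchants."""
    days = [t["day"] for t in cluster_txns]
    span_days = (max(days) - min(days)).days
    lodging_share = sum(t["mcc"] in LODGING_MCCS for t in cluster_txns) / len(cluster_txns)
    return span_days >= min_span_days and lodging_share <= max_lodging_share

# Hypothetical clusters: a five-day hotel stay versus five months of everyday spending.
hotel_cluster = [
    {"day": date(2024, 5, 6), "mcc": 7011},
    {"day": date(2024, 5, 7), "mcc": 5812},   # restaurant
    {"day": date(2024, 5, 9), "mcc": 7011},
    {"day": date(2024, 5, 11), "mcc": 7011},
]
home_cluster = [
    {"day": date(2024, 1, 3), "mcc": 5411},   # grocery
    {"day": date(2024, 2, 14), "mcc": 5812},
    {"day": date(2024, 3, 20), "mcc": 5411},
    {"day": date(2024, 4, 8), "mcc": 5411},
    {"day": date(2024, 6, 1), "mcc": 5812},
]
hotel_is_home = looks_like_residence(hotel_cluster)
home_is_home = looks_like_residence(home_cluster)
print(hotel_is_home, home_is_home)
```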

How would you handle online or remote transactions that do not reflect physical purchase locations?

Many credit card transactions occur online, with a billing location that could be the merchant’s central office or a cloud service provider location far from the user. If you don’t treat online merchants differently, your clustering approach might produce significant noise and mislabel the user’s true physical location. One strategy is to classify transactions into categories—physical vs. online—and primarily use the physical transactions for location inference. Alternatively, weight online transactions much lower. A challenge arises when users make nearly all purchases online, leaving very few physical transactions for location estimation. In such cases, you might default to known addresses from account signup data or combine partial signals from shipping addresses (if available) to approximate the home location.

What if multiple individuals share the same credit card account?

Joint accounts or family members using the same card can introduce contradictory location signals if two or more people reside separately or have distinct spending patterns. If frequent transactions occur in two distant cities, the system might incorrectly assume the user is highly mobile or has two homes. One solution is to segment activity within the account by cardholder: if the account holder has authorized additional cardholders, each can be tracked separately based on their own transaction patterns (e.g., distinct card numbers on the same account). Another possibility is to incorporate personal data fields (like the authorized user’s name) from the transaction record, if available, to differentiate spending patterns.

Could location spoofing or geolocation manipulation affect residence inference?

Fraudsters might deliberately spoof merchant location data or use virtual merchant processing addresses to evade detection. This can introduce false signals into the location model. One partial safeguard is cross-referencing the user’s transaction data with reliable external sources, like known legitimate merchant address directories. Statistical detection of suspicious patterns (e.g., multiple transactions that suddenly switch geolocation in short succession) can help identify spoofing attempts. However, if the merchant location itself is consistently falsified, the model might place a cluster in an incorrect position. This scenario highlights the importance of validating data inputs and possibly combining multiple signals (e.g., user device geolocation or IP address for online transactions) to confirm authenticity.

How would you evaluate or measure the accuracy of a location inference system?

Measuring the accuracy typically involves comparing the inferred residence location against a ground-truth reference such as the address on file for each user. You might calculate the average distance between the inferred location and the actual residence. Other metrics could include the fraction of correct city-level inferences or whether the top inferred location matches the actual home location above a certain probability threshold. A subtlety is that real-world data may change over time—people relocate, or addresses get updated—so an evaluation protocol should consider recency. Another pitfall is that not all incorrect estimates are equally damaging; misplacing someone by a few miles might be less problematic than placing them in an entirely different state. Hence, the choice of metric should reflect operational needs and risk tolerance.
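The distance-based metrics can be sketched with a vectorized haversine computation. The 25 km "close enough" threshold, the function names, and the three sample customers are assumptions; the address on file serves as ground truth here, with the recency caveats noted above.

```python
import numpy as np

def haversine_km(pred, truth):
    """Great-circle distance (km) between rows of two (n, 2) lat/lon arrays in degrees."""
    p, t = np.radians(pred), np.radians(truth)
    dlat, dlon = t[:, 0] - p[:, 0], t[:, 1] - p[:, 1]
    a = np.sin(dlat / 2) ** 2 + np.cos(p[:, 0]) * np.cos(t[:, 0]) * np.sin(dlon / 2) ** 2
    return 2 * 6371.0 * np.arcsin(np.sqrt(a))

def evaluate(pred, truth, within_km=25.0):
    """Summarise location error: median, mean, and share of customers within a radius."""
    err = haversine_km(np.asarray(pred, dtype=float), np.asarray(truth, dtype=float))
    return {
        "median_km": float(np.median(err)),
        "mean_km": float(err.mean()),
        "share_within": float((err <= within_km).mean()),
    }

# Hypothetical customers: one exact match, one about 1 km off, one placed in the wrong city.
inferred = [(37.7749, -122.4194), (37.7849, -122.4194), (34.0522, -118.2437)]
on_file = [(37.7749, -122.4194), (37.7749, -122.4194), (37.7749, -122.4194)]
metrics = evaluate(inferred, on_file)
print(metrics)
```

The mean is dominated by the single wrong-city estimate while the median is not, which is one reason to report both alongside a threshold-based share.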

How can external data sources improve the reliability of the inferred home location?

Augmenting transaction-based inference with other data can resolve ambiguities. For instance, if the bank has user-provided addresses from account registration or utility bill information, these data points can be used to confirm or refine the location clusters. Public records or geocoded census information might also provide context on residential vs. commercial zones. One pitfall is placing too much trust in external data that is outdated or inaccurate; people often forget to update the address on file with their bank. Another risk is violating privacy or data-sharing regulations if such external sources are not used in a compliant manner. Combining multiple sources usually boosts accuracy, but it must be done carefully to avoid introducing conflicting or unreliable information.