ML Interview Q Series: How would you use data to convince a fast-food brand to invest in another Snapchat filter?


Comprehensive Explanation

To make a strong, data-driven case for why a fast-food chain should renew its partnership for another custom filter, there are several analytical approaches that can be taken. The ultimate goal is to quantify how the previous campaign impacted key business metrics and how a new campaign could replicate or exceed these benefits.

Measuring User Engagement and Brand Awareness

One of the most direct ways to demonstrate the value of a Snapchat filter is to show how many users interacted with it and what kind of visibility it brought to the brand. Snap provides a variety of engagement metrics, such as total uses of the filter, number of shares, average engagement time, and user demographics.

You can then connect these engagement metrics to the chain’s foot traffic or online orders. By comparing the restaurant’s sales or foot traffic data before, during, and after the filter’s availability, you can check whether visits or purchases rose while the filter was live. This is a first signal that the filter may have contributed, though on its own it cannot rule out other causes.
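A minimal sketch of the before/during/after comparison, assuming daily sales are available as a mapping from date to revenue and that the filter's start and end dates are known. The function name, dates, and figures are hypothetical.

```python
from datetime import date, timedelta
from statistics import mean


def period_means(daily_sales, start, end):
    """Average daily sales before, during, and after the filter window [start, end]."""
    before = [v for d, v in daily_sales.items() if d < start]
    during = [v for d, v in daily_sales.items() if start <= d <= end]
    after = [v for d, v in daily_sales.items() if d > end]
    return {"before": mean(before), "during": mean(during), "after": mean(after)}


# Hypothetical data: one week before, one week during, one week after the filter.
start, end = date(2024, 6, 8), date(2024, 6, 14)
sales = {}
for i in range(21):
    day = date(2024, 6, 1) + timedelta(days=i)
    sales[day] = 1000.0 if day < start else (1150.0 if day <= end else 1050.0)

means = period_means(sales, start, end)
lift = means["during"] / means["before"] - 1
print(means, f"lift while the filter was live: {lift:.1%}")
```

A raw gap like this 15% cannot separate the filter from seasonality or other promotions, which is why a test-control comparison is still needed.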

Correlating Social Engagement with Restaurant Sales

The core strategy involves connecting filter usage data with store-level sales. This can be done through geolocation data (if users have location services enabled and a measurement framework is in place) or through aggregated store traffic patterns over the relevant time window. When analyzing the period during which the filter was active, look for a noticeable difference in average daily foot traffic or average ticket size compared with similar periods when it was not.

If the fast-food chain has mobile ordering or app-based sales data, you can analyze how many in-app coupon redemptions or purchases occurred during the filter’s availability. This helps link digital engagement directly to financial metrics like revenue or transaction volume.

Estimating Incremental Lift

While raw engagement numbers can be persuasive, decision-makers often focus on bottom-line impact. This requires showing how the filter delivered a measurable incremental benefit. In many scenarios, you can adopt a test-control framework:

Test Region: Locations or user segments that were exposed to the filter during the promotional week.

Control Region: Matched locations or user segments that were not exposed to the filter.

By comparing the difference in sales trends, user foot traffic, and brand mentions between test and control groups, you can tease out the portion of uplift attributable to the filter. This helps account for background factors such as seasonality or general marketing trends.
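The test-versus-control comparison is often formalized as a difference-in-differences estimate: the test group's change minus the control group's change. A minimal sketch, assuming average daily sales per store are available for matched test and control stores before and during the campaign (all numbers hypothetical). The estimate is only valid if both groups would have followed parallel trends without the filter.

```python
from statistics import mean


def diff_in_diff(test_pre, test_post, control_pre, control_post):
    """Difference-in-differences: change in the test group minus change in the control group."""
    test_change = mean(test_post) - mean(test_pre)
    control_change = mean(control_post) - mean(control_pre)
    return test_change - control_change


# Hypothetical average daily sales per store.
test_pre = [1000, 1020, 980]
test_post = [1150, 1170, 1130]
control_pre = [990, 1010, 1000]
control_post = [1040, 1060, 1050]

effect = diff_in_diff(test_pre, test_post, control_pre, control_post)
print(effect)  # test stores rose by 150, control stores by 50
```

Here the control stores also grew by 50 per day, so only 100 of the test stores' 150 increase is attributed to the filter.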

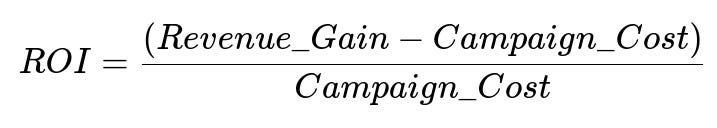

Calculating Return on Investment (ROI)

Even if the fast-food franchise is enthusiastic about brand visibility, they are still looking at cost versus revenue. A straightforward way to demonstrate value is to calculate the return on investment. One common formula for ROI is:

$$\text{ROI} = \frac{\text{Revenue\_Gain} - \text{Campaign\_Cost}}{\text{Campaign\_Cost}}$$

Where:

Revenue_Gain is the incremental revenue that you can attribute to the custom filter, based on comparisons with a control group or historical data.

Campaign_Cost represents all expenses incurred during the custom filter initiative, such as Snapchat’s sponsorship fees, design and development costs, and any additional marketing expenses.

A positive ROI that meets or exceeds the brand’s internal benchmarks is a strong indication of the campaign’s success. By emphasizing how new creative elements, improved targeting, or extended availability might further improve ROI, you provide an even stronger case for renewal.

Validating Brand Lift and Customer Retention

Apart from immediate sales or foot traffic, there may be a sustained brand-awareness effect. Some fast-food franchise customers might be more likely to revisit the restaurant or view it more favorably because of the fun and interactive experience. Data sources like brand surveys and social media sentiment analyses can help measure brand perception. If you can present evidence that the filter boosted brand loyalty or social mentions in a way that persisted beyond the campaign itself, the argument for a second filter grows stronger.

Breaking Down the Data Collection and Analysis Pipeline

Filter Engagement Data: Collected from Snapchat’s analytics (number of uses, number of shares, average time spent on the filter, demographic breakdown, etc.).

Sales and Foot Traffic: Point-of-sale data from the fast-food chain, potentially broken down by store or region. If available, mobile-app ordering data can be invaluable for connecting online activity to offline sales.

Tracking Conversions: Redemption of promotional codes or coupons that are triggered by the filter provides more direct evidence of effect.

A/B Testing Framework: Implement some form of holdout group or control region to establish a baseline for comparison.

Potential Follow-Up Questions

How do we measure ROI accurately if the fast-food brand does not provide precise store-level sales data?

In the absence of granular data, look for proxy metrics. For instance, you can measure website traffic or track how many times a promotional coupon was viewed or saved. You could also incorporate survey data where users self-report whether they visited the restaurant during the filter’s availability. Though less exact than point-of-sale data, combining multiple data sources can still paint a convincing picture of incremental lift.

How do we tease out the filter’s impact when other marketing campaigns happened concurrently?

It can be challenging to disentangle the effects of simultaneous marketing efforts, such as TV commercials or influencer promotions. One approach is to include those campaigns as explanatory variables in a regression or other statistical model; by controlling for known campaigns, you mitigate their confounding influence. You might also use time-series analysis, or run matched-market tests in which the Snapchat campaign is the only difference between otherwise comparable markets, and then compare results across those markets.
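A minimal sketch of the regression idea, using ordinary least squares via NumPy on simulated weekly data. The campaign indicators and the "true" effects (+80 for the filter, +120 for TV, +50 for influencers) are hypothetical and chosen so the estimates can be checked. The approach only works if the campaigns were not always active in the same weeks; perfectly overlapping campaigns cannot be separated.

```python
import numpy as np

rng = np.random.default_rng(0)
n_weeks = 104

# Hypothetical weekly indicators (1 = campaign active that week).
snap_filter = rng.integers(0, 2, n_weeks)
tv_ad = rng.integers(0, 2, n_weeks)
influencer = rng.integers(0, 2, n_weeks)

# Simulated sales with known effects plus noise, so the fit can be sanity-checked.
sales = 1000 + 80 * snap_filter + 120 * tv_ad + 50 * influencer + rng.normal(0, 20, n_weeks)

# Design matrix: an intercept column plus one column per campaign.
X = np.column_stack([np.ones(n_weeks), snap_filter, tv_ad, influencer])
coef, *_ = np.linalg.lstsq(X, sales, rcond=None)
intercept, filter_effect, tv_effect, influencer_effect = coef
print(f"estimated filter effect: {filter_effect:.1f} per week (controlling for TV and influencers)")
```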

What if the demographic using Snapchat doesn’t align perfectly with the fast-food chain’s target audience?

Snapchat usage skews younger, which can actually be advantageous if the fast-food brand is trying to attract a younger demographic. Emphasize that a custom filter campaign can tap into exactly that user segment. If the demographic alignment is still a concern, you could integrate more targeted approaches, such as geofencing around certain locations or focusing on demographic segments within Snapchat’s advertising platform. Combine these targeted efforts with a robust analysis that looks at not just the overall usage, but usage from certain age brackets or regions.

Can we demonstrate long-term customer retention or cross-selling effects from the filter?

To explore longer-term impacts, check whether users who engaged with the filter returned to the restaurant or used relevant coupons in subsequent weeks. If you can track loyalty app usage or continued social media engagement, that might shed light on whether the custom filter can influence repeat business. Highlighting potential cross-selling—like increased purchases of new menu items by filter users—can strengthen the case for the campaign’s broader benefits.

How do you address concerns about campaign costs?

Show a careful breakdown of the different cost components, such as filter design, placement fees, and any supportive marketing. Then present realistic revenue forecasts based on historical data, test-and-control analyses, and any pilot campaigns. If you have data that suggests the filter brought in incremental revenue that was significantly higher than costs, emphasize that ratio. You can simulate different scenarios (e.g., a best-case scenario, likely scenario, worst-case scenario) to show how the brand stands to gain or, at minimum, break even.
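A minimal sketch of the cost breakdown and scenario analysis, computing ROI as (Revenue_Gain - Campaign_Cost) / Campaign_Cost. All cost components and scenario revenues are hypothetical placeholders.

```python
def roi(revenue_gain, campaign_cost):
    """ROI = (incremental revenue - campaign cost) / campaign cost."""
    return (revenue_gain - campaign_cost) / campaign_cost


# Hypothetical cost components for the filter campaign.
costs = {"design": 20_000, "placement_fees": 100_000, "supporting_marketing": 30_000}
total_cost = sum(costs.values())

# Hypothetical incremental revenue under three scenarios.
scenarios = {"worst": 150_000, "likely": 240_000, "best": 330_000}

for name, gain in scenarios.items():
    print(f"{name:>6}: ROI = {roi(gain, total_cost):+.0%}")
```

With these numbers, the worst case breaks even (0%) and the likely case returns 60%. Substituting incremental gross profit for revenue gives a more conservative figure if the brand prefers it.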

What if last year’s filter did not produce a noticeable uptick in sales?

Even if the prior campaign didn’t show a major lift, you could do a deeper post-mortem to understand potential pitfalls. Maybe the filter design was not eye-catching, or it didn’t run long enough, or it lacked synergy with other marketing channels. Propose improvements like better creative assets, extended campaign durations, or improved targeting for this year’s filter. Present these learnings as a pivot strategy that can lead to significantly better results if repeated and refined.

Could we integrate data from third parties like Google Trends or local foot traffic data to strengthen our argument?

Definitely. Publicly available data sources such as Google Trends, location-based analytics providers, or social media listening tools can complement in-house metrics. For instance, showing a spike in relevant Google searches or general foot traffic in the vicinity of fast-food outlets during the campaign period can reinforce the notion that the filter contributed to heightened brand interest.

By combining robust quantitative methods and a comprehensive overview of engagement, brand awareness, and ROI, you create a persuasive story that highlights the clear benefits of renewing the custom filter partnership.

Below are additional follow-up questions

How do you handle privacy concerns if you need to combine user-level Snapchat engagement data with restaurant sales data?

Combining individual user data from Snapchat with a restaurant’s point-of-sale system can raise significant privacy and compliance issues. One subtle pitfall occurs when the restaurant wants to see if a specific user who used the filter also made a purchase. From a data governance standpoint, you should anonymize or de-identify all personally identifiable information (PII).

A recommended practice is to perform any linking at an aggregated or hashed user ID level. For instance, you might hash each user’s device ID before matching it with hashed identifiers from store loyalty programs to identify potential overlaps in activity. This approach protects individuals’ privacy while still permitting aggregated analytics. Additionally, keep location data usage consistent with GDPR or CCPA guidelines if you are operating in jurisdictions where these regulations apply. This means acquiring explicit user consent for location tracking and data collection, ensuring that you have a valid legal basis for processing such data, and giving users the option to opt out.
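A minimal sketch of matching on keyed hashes, assuming both parties have agreed on a shared secret key. The key, identifiers, and function name are hypothetical. A keyed hash (HMAC-SHA256) is used instead of a plain hash because anyone holding a list of candidate IDs can hash them and look for matches against an unkeyed hash. This is pseudonymization rather than anonymization, so the hashed IDs may still count as personal data under GDPR.

```python
import hashlib
import hmac

# Hypothetical shared secret; in practice load it from a secrets manager, never hard-code it.
SECRET_KEY = b"example-shared-secret"


def pseudonymize(identifier: str) -> str:
    """Keyed SHA-256 (HMAC) of a normalized identifier."""
    normalized = identifier.strip().lower()
    return hmac.new(SECRET_KEY, normalized.encode("utf-8"), hashlib.sha256).hexdigest()


# Each party hashes its own IDs; only the hashes are compared.
filter_users = {pseudonymize(i) for i in ["Device-A", "device-b", "device-c"]}
loyalty_members = {pseudonymize(i) for i in ["device-b", "DEVICE-C ", "device-d"]}

overlap = filter_users & loyalty_members
print(f"{len(overlap)} loyalty members also used the filter")
```

Normalizing case and whitespace before hashing matters: otherwise "DEVICE-C " and "device-c" would hash differently and the overlap would be undercounted.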

A potential real-world complication arises if your dataset is large but the population using both the filter and the loyalty app is relatively small—this can lead to re-identification risks. Mitigate this by preventing the release of subsets or segmentation analyses that are too granular, such as extremely narrow demographics or locations with only a few customers.
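One simple safeguard is a minimum cell size: any segment with fewer than k people is suppressed before results are shared. A minimal sketch, where the threshold of 10 and the segment data are hypothetical; the right threshold depends on your privacy policy.

```python
MIN_CELL_SIZE = 10  # hypothetical threshold; set according to your privacy policy


def suppress_small_cells(counts, k=MIN_CELL_SIZE):
    """Replace any segment count below k with None so it is not reported."""
    return {segment: (n if n >= k else None) for segment, n in counts.items()}


# Hypothetical (age bracket, store) counts of users who both used the filter and the loyalty app.
segment_counts = {
    ("18-24", "Store 12"): 245,
    ("25-34", "Store 12"): 131,
    ("65+", "Store 12"): 3,
}
report = suppress_small_cells(segment_counts)
print(report)
```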

What if multiple filters or lenses from other brands ran concurrently, making it harder to isolate the effects of this custom filter?

Running multiple branded filters or lenses on Snapchat at the same time can create confounding effects. For instance, if two major brands launched filters that overlap in timeframe and target demographic, the user engagement might get divided. To disentangle which filter drove user interest for each brand, you can implement a few strategies:

Filter-Specific Tracking: Tag each filter or lens with a unique campaign identifier. Then, track click-through rates, shares, and usage times associated only with the fast-food chain’s filter.

Sequential Testing: If possible, stagger the filter launches by brand or region. By rolling out the custom filter in a subset of geographies first and then expanding it, you can compare early-adopter regions against areas still exposed to other filters.

Post-Campaign Surveys: Conduct a small-scale user survey asking which filters they engaged with and why. Although self-reported data can be biased, it still offers qualitative insight into user motivations.

A subtle issue is that the fast-food filter might benefit from a “halo effect” if a second filter from a related industry is also trending. Conversely, a filter associated with a rival brand might create confusion or overshadow the fast-food filter. Being transparent about these overlapping campaigns and quantifying them to the best extent possible strengthens your overall analysis.

How do you account for seasonal or macroeconomic factors that might skew the results of your analysis?

If the promotional filter was launched during a highly seasonal period (e.g., a holiday weekend or during back-to-school season), then baseline user behavior and dining patterns might differ from an ordinary week. You need to control for these external factors by comparing historical data from the same seasonal window in prior years. Alternatively, you can use a difference-in-differences approach, where you track metrics over time for both the test group (areas exposed to the filter) and a carefully matched control group (areas not exposed or slightly delayed in exposure).
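A minimal sketch of the same-window, prior-year baseline: average the same calendar window across earlier years, scale it by this year's underlying growth, and compare. The function name, growth rate, and sales figures are hypothetical.

```python
from statistics import mean


def seasonally_adjusted_lift(campaign_window, prior_year_windows, baseline_growth):
    """Lift of this year's filter window over the same calendar window in prior years.

    campaign_window: daily sales during this year's filter window.
    prior_year_windows: one list of daily sales per prior year, same calendar dates.
    baseline_growth: this year's growth in non-campaign periods (0.05 means +5%).
    """
    seasonal_baseline = mean(mean(window) for window in prior_year_windows)
    expected = seasonal_baseline * (1 + baseline_growth)
    return mean(campaign_window) / expected - 1


# Hypothetical holiday-week sales: two prior years plus this year's filter week.
prior_years = [[1180.0] * 7, [1220.0] * 7]
this_year = [1386.0] * 7
lift = seasonally_adjusted_lift(this_year, prior_years, baseline_growth=0.05)
print(f"seasonally adjusted lift: {lift:.1%}")
```

Without the seasonal baseline, comparing this holiday week (1386) to an ordinary week would overstate the filter's effect.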

A real-world complication might be a macroeconomic downturn that causes people to dine out less overall. If the filter ran during an economic slowdown, even flat sales might represent a relative success. By incorporating economic indicators (like consumer confidence or unemployment rates), you can show whether the filter helped maintain sales or limit losses relative to broader market trends.

How do you measure intangible brand value changes when users may engage with the filter but not necessarily visit the restaurant?

One of the trickiest aspects of marketing analytics is capturing intangible brand value or changes in consumer perception. A user might see or interact with the filter and develop a positive association with the brand, only to make a purchase weeks later—well after the filter is gone. Some strategies to gauge intangible brand value are:

Brand Lift Studies: Poll or survey users to see if their perception of the fast-food chain changed after seeing/using the filter.

Long-Term Social Media Monitoring: Track sentiment and mentions around the brand on social platforms before, during, and after the filter campaign.

Re-Engagement Trends: Look for changes in how frequently users who engaged with the filter come back to your app, website, or store.
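For a brand lift study, a common check is whether the share of favorable responses differs between exposed and unexposed respondents. A minimal sketch of a two-sided, two-proportion z-test, assuming two independent random samples; the survey counts are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_ztest(success_a, n_a, success_b, n_b):
    """Two-sided z-test for a difference between two proportions, using a pooled standard error."""
    p_a, p_b = success_a / n_a, success_b / n_b
    pooled = (success_a + success_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_a - p_b) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return p_a - p_b, z, p_value


# Hypothetical brand-lift survey: share answering "favorable" among exposed vs. unexposed users.
lift, z, p_value = two_proportion_ztest(success_a=460, n_a=1000, success_b=400, n_b=1000)
print(f"lift = {lift:.1%}, z = {z:.2f}, p = {p_value:.4f}")
```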

A subtle real-world challenge arises when brand mentions or sentiment might be influenced by unrelated events (a new product launch, a corporate controversy, etc.). Distinguish the impact of the filter from these external influences by focusing on mentions or sentiment specifically tied to the filter’s keywords or hashtags.

What if you lack access to exact timestamped data linking filter usage to store visits in real-time?

When you can’t observe immediate conversions or store visits tied to each user’s filter usage timestamp, you often have to rely on aggregated data windows (e.g., daily or weekly intervals). The usual approach is to look for uplift in store visits or revenue in the window right after filter usage spikes. This introduces some measurement noise, because people may see the filter today and only visit the restaurant next week.

To refine your analysis, you can adopt a rolling window approach that tracks shifts in daily or weekly metrics. For example, if the filter launched on a Monday, you could compare Monday-through-Sunday metrics to the historical average of Monday-through-Sunday for the previous four weeks. Consider an extended “lag period” to account for delayed user action.
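A minimal sketch of this comparison with a lag period: the launch week plus a lag window is compared with the four full weeks before launch. The function name, the 7-day lag, and the data are hypothetical. Keeping the lag a multiple of 7 keeps the weekday mix the same in both windows.

```python
from datetime import date, timedelta
from statistics import mean


def lagged_window_lift(daily_sales, launch, lag_days=7, baseline_weeks=4):
    """Average daily sales from launch through launch + 7 + lag_days (exclusive), relative to
    the average over the `baseline_weeks` full weeks immediately before launch."""
    campaign_days = [launch + timedelta(days=i) for i in range(7 + lag_days)]
    baseline_days = [launch - timedelta(days=i) for i in range(1, 7 * baseline_weeks + 1)]
    campaign = mean(daily_sales[d] for d in campaign_days)
    baseline = mean(daily_sales[d] for d in baseline_days)
    return campaign / baseline - 1


launch = date(2024, 6, 3)  # a Monday
sales = {launch - timedelta(days=i): 1000.0 for i in range(1, 29)}    # four baseline weeks
sales.update({launch + timedelta(days=i): 1200.0 for i in range(7)})     # launch week
sales.update({launch + timedelta(days=i): 1100.0 for i in range(7, 14)})  # lag week

lift = lagged_window_lift(sales, launch)
print(f"lift including lag week: {lift:.1%}")
```

Including the lag week captures delayed visits (1100 per day after the filter ended) that a launch-week-only window would miss.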

A real-world snag occurs if there is a significant event (like a local sports final or festival) that inflates store traffic during the same analysis window. Whenever possible, note such anomalies and either exclude those timeframes or use them as additional variables in a regression model.

How do you perform A/B testing if you only have the option of launching the filter globally?

A/B testing typically calls for segmented randomization, but sometimes the filter is rolled out worldwide. One workaround is “synthetic control methods.” In this method, you build a synthetic control group by weighting data from multiple regions (or user groups) so that they collectively match pre-launch metrics of the test region as closely as possible. This allows for an approximate comparison if no explicit holdout region or population is available.

One edge case is if user behavior changes drastically in certain cultural contexts; for example, some regions may inherently have higher Snapchat usage, or fast-food consumption patterns can vary widely. Your synthetic control might not accurately mirror the behaviors in the region where the filter is launched. Ideally, you refine your synthetic control by selecting only similar regions with comparable pre-launch trends.
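A minimal sketch of a synthetic control: find non-negative donor-region weights summing to 1 that best reproduce the launch region's pre-launch sales, then read the post-launch gap as the estimated effect. This simplified version matches only the pre-launch outcome series, not other covariates, and solves the constrained least-squares problem with projected gradient descent. The donor data and the true weights (0.6, 0.4, 0) are hypothetical and constructed so the result can be checked.

```python
import numpy as np


def project_to_simplex(v):
    """Euclidean projection onto {w : w >= 0, sum(w) = 1} (sort-based algorithm)."""
    u = np.sort(v)[::-1]
    cumulative = np.cumsum(u) - 1.0
    idx = np.arange(1, len(v) + 1)
    rho = np.nonzero(u - cumulative / idx > 0)[0][-1]
    theta = cumulative[rho] / (rho + 1)
    return np.maximum(v - theta, 0.0)


def synthetic_control_weights(treated_pre, donors_pre, steps=20_000):
    """Donor weights (non-negative, summing to 1) minimizing squared pre-launch fit error."""
    X = np.asarray(donors_pre, dtype=float)  # shape: (pre-launch periods, donor regions)
    y = np.asarray(treated_pre, dtype=float)
    w = np.full(X.shape[1], 1.0 / X.shape[1])
    step = 1.0 / np.linalg.norm(X, 2) ** 2  # 1 / Lipschitz constant of the gradient
    for _ in range(steps):
        w = project_to_simplex(w - step * (X.T @ (X @ w - y)))
    return w


# Hypothetical weekly sales (thousands) for 10 pre-launch weeks in three untreated donor regions.
donor_a = [100, 104, 98, 110, 107, 103, 111, 115, 109, 112]
donor_b = [80, 78, 85, 83, 88, 90, 86, 92, 95, 93]
donor_c = [60, 70, 55, 65, 72, 58, 66, 61, 69, 64]
donors_pre = np.column_stack([donor_a, donor_b, donor_c])
treated_pre = donors_pre @ np.array([0.6, 0.4, 0.0])  # constructed so the true weights are known

weights = synthetic_control_weights(treated_pre, donors_pre)

# Post-launch: the gap between the launch region and its synthetic twin estimates the effect.
donors_post = np.array([[114, 96, 63], [116, 97, 66]], dtype=float)
treated_post = np.array([120.0, 124.0])
gap = treated_post - donors_post @ weights
print(np.round(weights, 3), np.round(gap, 1))
```

If the best achievable pre-launch fit is still poor, that is a sign the donor pool lacks regions with comparable trends.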

How do you handle noisy data from external sources, such as coupon redemption or foot traffic counters that might not be perfectly accurate?

Real-world data sources like coupon redemption logs or physical foot counters in restaurants can be error-prone. Coupons can be left unused, lost, or even fraudulently redeemed. Foot traffic counters may double-count individuals who exit and re-enter. Overcoming these inaccuracies can involve cross-referencing multiple data sources. For instance, if you have redemption data from a point-of-sale system and user engagement from Snapchat, you can use both sets to look for converging patterns.

Additionally, robust outlier detection is helpful. Set up thresholds for suspicious patterns (e.g., a sudden spike in redemptions that’s not reflected in store sales). Investigate these anomalies to see if they stem from a genuine marketing success or from data recording errors. Documenting assumptions and potential error margins is key to ensuring stakeholders understand the limitations of your data.
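A minimal sketch of one such threshold: flag days whose redemptions-per-sale ratio is unusually high using a robust z-score based on the median and the median absolute deviation (MAD), which a single extreme day cannot distort the way it distorts a mean. The 3.5 cutoff and 0.6745 scaling follow the common "modified z-score" convention; the data and function name are hypothetical.

```python
from statistics import median


def flag_anomalies(redemptions, sales, threshold=3.5):
    """Indices of days whose redemptions-per-sale ratio has a modified z-score above threshold."""
    ratios = [r / s for r, s in zip(redemptions, sales)]
    med = median(ratios)
    mad = median(abs(x - med) for x in ratios)
    if mad == 0:
        return []  # no spread to measure against
    # 0.6745 rescales MAD so the score is comparable to a standard z-score under normality.
    return [i for i, x in enumerate(ratios) if 0.6745 * (x - med) / mad > threshold]


# Hypothetical daily coupon redemptions and transactions for one store.
redemptions = [50, 55, 45, 53, 47, 160, 52]
sales = [1000, 1020, 980, 1000, 990, 1010, 1000]

flagged = flag_anomalies(redemptions, sales)
print(flagged)  # day 5: redemptions tripled while transactions stayed flat
```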

How can you demonstrate the benefits of improved targeting or personalization in the new filter?

One approach is to segment your user base by demographics or behavior (for example, focusing on repeat fast-food customers vs. first-time visitors) and serve slightly different versions of the filter. Compare engagement metrics across these segments. If the personalized version yields a noticeably higher engagement or redemption rate, you have strong evidence for the impact of personalization.

A subtle pitfall is over-segmentation. Splitting your user base into very small subgroups can lead to limited sample sizes and statistically inconclusive results. Another potential edge case is privacy: If personalization is based on sensitive attributes, you need explicit user consent or anonymized modeling to avoid violating data protection regulations.
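To see how quickly over-segmentation erodes statistical power, estimate the users needed per group before splitting. A minimal sketch using the standard normal-approximation sample-size formula for comparing two proportions; the 2% baseline and 2.5% target redemption rates are hypothetical.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_group(p_control, p_treatment, alpha=0.05, power=0.8):
    """Approximate users needed per group to detect p_control vs. p_treatment (two-sided test)."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (p_control + p_treatment) / 2
    numerator = (
        z_alpha * sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * sqrt(p_control * (1 - p_control) + p_treatment * (1 - p_treatment))
    ) ** 2
    return ceil(numerator / (p_control - p_treatment) ** 2)


# Hypothetical: generic filter redeems at 2%; the personalized version is hoped to reach 2.5%.
n = sample_size_per_group(0.02, 0.025)
print(f"about {n:,} users per group")
```

At roughly 13,800 users per group for this effect size, splitting the audience into ten personalized segments, each with its own control, would need over a quarter of a million users to read every segment reliably.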

In what ways can you optimize filter design and call-to-action features to maximize real-world visits?

Even with data-driven targeting, a filter’s visual design and interactive features play a critical role in converting engagement into actual sales. Use iterative design testing before the final rollout. For instance, test how differently worded call-to-action prompts (e.g., “Try our new combo meal!” vs. “Get 20% off now!”) affect user behavior. You can measure micro-conversions, such as how many users share the filter with friends or click on the embedded link.

A realistic challenge is that not every user who interacts with a visually appealing filter will follow through with a purchase. Observing user “drop-off” points—like the moment they exit the filter without clicking further—gives you insight into which aspects need refining. Also, cultural nuances can come into play: a design that resonates strongly in one region may be off-putting or less impactful in another. If your audience is global, localize the look, language, and messaging of the filter to each market.
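A minimal sketch of locating drop-off points by computing the step-to-step conversion rate through a funnel. The event names and counts are hypothetical; the actual events available depend on what Snapchat's analytics and the chain's systems report.

```python
# Hypothetical funnel counts for one campaign week.
funnel = [
    ("filter_opened", 200_000),
    ("filter_applied", 120_000),
    ("snap_shared", 45_000),
    ("link_clicked", 9_000),
    ("coupon_redeemed", 1_800),
]


def step_conversion(funnel):
    """(from_step, to_step, rate) for each consecutive pair of funnel steps."""
    return [(a_name, b_name, b / a) for (a_name, a), (b_name, b) in zip(funnel, funnel[1:])]


for src, dst, rate in step_conversion(funnel):
    print(f"{src} -> {dst}: {rate:.0%} continue, {1 - rate:.0%} drop off")
```

In this made-up funnel, the largest losses come after sharing (only 20% click the link) and after clicking (only 20% redeem), which points at the call-to-action and the offer rather than the visual design.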

How do you forecast the potential performance of a new filter based on last year’s campaign data?

Forecasting performance is not just a simple linear extrapolation. You have to account for changes in user behavior, market conditions, and filter features. One method involves building a predictive model using historical metrics from last year’s campaign (e.g., daily filter uses, redemption rates, demographic breakdowns) combined with external variables such as new menu items launched, general growth in Snapchat’s user base, or changes in competitor activity.

A subtle but important consideration is that marketing often encounters diminishing returns. If last year’s filter was a novelty, repeating the same creative concept might generate less excitement. Factor in the potential novelty decay by discounting your projections unless you are introducing innovative features or promotions this time. Conversely, if the new filter introduces significantly enhanced interactive elements, you could see an upside beyond last year’s baseline.
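Rather than the full predictive model described above, the following is a back-of-envelope multiplicative adjustment that shows how a novelty discount changes a projection. The growth rate, decay rates, and feature uplift are hypothetical assumptions that would need to be justified from data.

```python
def forecast_uses(last_year_uses, user_base_growth, novelty_decay, feature_uplift=0.0):
    """Scale last year's filter uses by platform growth, discount for novelty decay,
    and optionally apply an assumed uplift for new interactive features."""
    return last_year_uses * (1 + user_base_growth) * (1 - novelty_decay) * (1 + feature_uplift)


# Hypothetical: 2M uses last year, 5% user-base growth.
scenarios = {
    "same creative": forecast_uses(2_000_000, 0.05, novelty_decay=0.30),
    "refreshed creative": forecast_uses(2_000_000, 0.05, novelty_decay=0.10, feature_uplift=0.15),
}
for name, uses in scenarios.items():
    print(f"{name}: {uses:,.0f} projected uses")
```

Under these assumptions, repeating the same concept projects below last year (about 1.47M uses), while a refreshed filter projects above it (about 2.17M).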