ML Interview Q Series: Interpreting Large Negative Coefficients in Linear Regression: Causes, Verification, and Solutions.


43. In building a linear regression model for a particular dataset, you observe that one of the features has a relatively large negative coefficient. What does that imply?

Interpretation of a Large Negative Coefficient

In a linear regression model, each coefficient represents the estimated change in the target variable for a one-unit increase in the corresponding feature, holding all other features constant. A large negative coefficient therefore implies that, all else equal, an increase in that feature is associated with a large decrease in the predicted outcome. In other words, the feature has an inverse relationship with the target, conditional on the other features in the model. The sign gives the direction of that association, but whether the magnitude is genuinely "large" depends on the feature's units and scale.

Mathematical Representation and Explanation

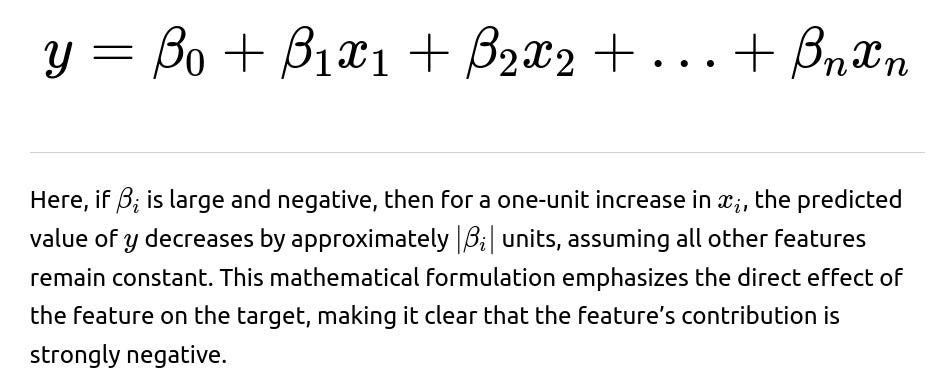

Consider the linear model expressed as

Potential Reasons Behind a Large Negative Coefficient

A large negative coefficient can arise from several scenarios:

True Negative Relationship: The feature might inherently have an inverse effect on the target. For example, in a model predicting house prices, if the feature represents the distance from a city center, a larger distance might naturally lower the price.

Feature Scaling Issues: If the feature is measured on a scale that is significantly different from other features, its coefficient might appear unusually large in magnitude. Standardizing or normalizing the data can help in comparing the relative effects more accurately.

Multicollinearity: If the feature is highly correlated with one or more other features, the estimated coefficients can become unstable. In such cases, a large negative coefficient might be compensating for the influence of correlated variables. Techniques like variance inflation factor (VIF) analysis can help identify such issues.

Outliers or Leverage Points: Extreme values in the feature distribution might skew the regression results. A large negative coefficient might be an artifact of such data points, which could be investigated through residual analysis or robust regression techniques.

Model Misspecification: The negative coefficient might also indicate that the model is not capturing the true underlying relationship, for example because relevant variables are omitted or interaction and non-linear terms are missing. Ensuring that the model is correctly specified is crucial for interpreting coefficients accurately.

Practical Implementation Details and Verification

To ensure that the observed large negative coefficient is meaningful and not a byproduct of data issues, consider the following steps:

Examine Correlation Structure: Compute correlation coefficients between features and check for multicollinearity. If high correlations exist, consider techniques like principal component analysis or regularization.

Standardize Features: When features are on different scales, standardizing them can lead to coefficients that are more directly comparable. This helps in assessing which features truly have strong effects.

Residual Analysis: Evaluate the residuals of your model to check for patterns that may suggest the influence of outliers or non-linear relationships.

Cross-validation: Refit the model on different subsets of the data, for example with k-fold cross-validation or bootstrapping, to check that the coefficient estimates are stable.

Code Examples

Below is a Python code snippet that demonstrates how to standardize features, fit a linear regression model, and inspect the coefficients, including methods to check for multicollinearity:

```python
import numpy as np
import pandas as pd
from sklearn.linear_model import LinearRegression
from sklearn.preprocessing import StandardScaler
import statsmodels.api as sm
from statsmodels.stats.outliers_influence import variance_inflation_factor

# Example dataset: two unrelated features and a target
np.random.seed(0)
data = pd.DataFrame({
    'feature1': np.random.normal(0, 1, 100),
    'feature2': np.random.normal(5, 2, 100),
    'target': np.random.normal(10, 5, 100)
})

# Introduce a feature with a strong negative relationship to the target
data['feature_strong_neg'] = 10 - 2 * data['target'] + np.random.normal(0, 1, 100)

# Standardize the features so their coefficients are on a comparable scale
scaler = StandardScaler()
features = ['feature1', 'feature2', 'feature_strong_neg']
X = scaler.fit_transform(data[features])
y = data['target']

# Fit the linear regression model
model = LinearRegression()
model.fit(X, y)

coef_df = pd.DataFrame({
    'Feature': features,
    'Coefficient': model.coef_
})
print("Coefficients of the model:")
print(coef_df)

# Check for multicollinearity using VIF. variance_inflation_factor does not add
# an intercept itself, so a constant column is included in the design matrix,
# but only the VIFs of the actual features (columns 1..p) are reported.
X_with_const = sm.add_constant(pd.DataFrame(X, columns=features))
vif_df = pd.DataFrame({
    "feature": features,
    "VIF": [variance_inflation_factor(X_with_const.values, i)
            for i in range(1, X_with_const.shape[1])]
})
print("\nVariance Inflation Factor (VIF) for each feature:")
print(vif_df)
```

Discussion of Edge Cases and Pitfalls

It is important to consider the following potential pitfalls and edge cases:

Overinterpretation of Magnitude: A large magnitude does not by itself make a feature the most important predictor, because a coefficient depends on the feature's unit of measurement and scale. Compare coefficients only after appropriate scaling.

Collinearity Effects: In the presence of multicollinearity, a large negative coefficient might simply be the result of redundant information between features. It might not reflect the true causal relationship. Techniques such as ridge regression can help mitigate this issue.

Non-linear Relationships: Linear regression assumes a linear relationship. If the true relationship is non-linear, a large negative coefficient might be compensating for the inadequacies of a linear model. In such cases, using non-linear models or feature transformations could be more appropriate.

Interpretation in Context: The sign and magnitude of a coefficient must be interpreted in the context of domain knowledge. In some domains, a counterintuitive negative relationship might point to deeper underlying mechanisms or data collection issues that need further investigation.

Follow-up: What factors could contribute to a large negative coefficient aside from a genuine negative correlation?

When a large negative coefficient appears in a regression model, factors beyond a genuine inverse relationship could be at play:

An important factor is the scale of measurement. A coefficient is expressed per unit of its feature, so its magnitude depends directly on that unit. If a feature's numerical range is small relative to the other features (for example, distance recorded in kilometers rather than meters, or a proportion between 0 and 1), a one-unit change is a large change in that feature, and its coefficient will be correspondingly large. Re-expressing the same distance in meters would divide its coefficient by 1000 without changing any prediction. Standardizing the data typically alleviates this issue.
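A quick way to see the unit effect is to refit the same data with the feature expressed in different units. The sketch below assumes synthetic data (the distance variable, its range, and the true slope are illustrative, not from the original) and uses ordinary least squares via NumPy's `lstsq`.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical data: distance to the city center in meters, price in thousands
n = 200
distance_m = rng.uniform(500, 20_000, n)
price = 800 - 0.02 * distance_m + rng.normal(0, 20, n)


def ols(x, y):
    """Return [intercept, slope] from a least-squares fit of y on x."""
    X = np.column_stack([np.ones_like(x), x])
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return beta


b_m = ols(distance_m, price)          # slope per meter
b_km = ols(distance_m / 1000, price)  # same data, slope per kilometer

print("slope per meter:    ", round(b_m[1], 4))
print("slope per kilometer:", round(b_km[1], 2))

# Predictions are identical; only the per-unit coefficient changed
pred_m = b_m[0] + b_m[1] * distance_m
pred_km = b_km[0] + b_km[1] * distance_m / 1000
print("max prediction difference:", np.max(np.abs(pred_m - pred_km)))
```

The kilometer slope is exactly 1000 times the meter slope. Standardizing either version would give the same coefficient, which is why scaled coefficients are the fairer basis for comparing features.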

Another factor is multicollinearity. When two or more features are highly correlated, the regression algorithm struggles to isolate the effect of each feature independently. This can result in coefficients that are unexpectedly large in magnitude (either positive or negative) as the model tries to distribute the shared variance among correlated predictors. The Variance Inflation Factor (VIF) can help identify this problem, and regularization techniques like ridge regression can help mitigate it.

Data anomalies such as outliers can also skew coefficient estimates. Outliers exert disproportionate influence on the fitted model and can cause the estimated coefficient for a feature to appear unusually large and negative. Performing diagnostic checks on residuals and using robust regression methods can help address this issue.

Lastly, omitted variable bias and model misspecification can contribute. If an important variable that is correlated with the feature and also affects the target is missing from the model, the estimated coefficient absorbs part of that variable's effect, which can distort both its magnitude and its sign.
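A small simulation makes omitted variable bias concrete. Under the assumed data-generating process below (all variables and coefficients are illustrative), the feature `x` truly raises the target, but an omitted variable `z` that is positively correlated with `x` lowers it.

```python
import numpy as np

rng = np.random.default_rng(1)
n = 5_000

# Assumed data-generating process: z raises x but lowers y
z = rng.normal(size=n)
x = 0.9 * z + rng.normal(scale=0.3, size=n)
y = 1.0 * x - 4.0 * z + rng.normal(size=n)


def ols_coefs(*cols, y):
    """Least-squares coefficients (intercept first) of y on the given columns."""
    A = np.column_stack([np.ones(len(y)), *cols])
    beta, *_ = np.linalg.lstsq(A, y, rcond=None)
    return beta


full = ols_coefs(x, z, y=y)   # correctly specified model
short = ols_coefs(x, y=y)     # z omitted

print("coefficient on x with z:   ", round(full[1], 2))
print("coefficient on x without z:", round(short[1], 2))
```

Here the short regression's coefficient converges to 1 + (-4) * cov(x, z) / var(x) = 1 - 4 = -3: a large negative estimate for a feature whose true effect is positive.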

Follow-up: How can we verify that the observed negative coefficient is not a result of feature scaling issues or multicollinearity?

To verify that the negative coefficient is not an artifact of scaling or multicollinearity, several steps should be taken:

Firstly, standardize or normalize the features so that each feature contributes on a comparable scale. Standardization centers the features around zero and scales them to unit variance, which allows for a fair comparison of the coefficients.

Secondly, conduct a multicollinearity analysis using the Variance Inflation Factor (VIF). A VIF significantly higher than 1 (typically above 5 or 10) indicates that a feature is highly correlated with one or more other features. If the feature with the large negative coefficient has a high VIF, it is a strong indication that multicollinearity might be affecting the coefficient’s magnitude.

Additionally, you can perform sensitivity analysis by removing or combining correlated features. If the coefficient’s magnitude changes significantly upon altering the model structure, it suggests that the large negative coefficient may be due to collinearity rather than a true negative effect.
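The sensitivity check can be sketched in NumPy on assumed synthetic data in which `x2` nearly duplicates `x1` and only `x1` affects the target. VIF is computed from its definition, 1 / (1 - R²_j), where R²_j comes from regressing feature j on the other features; the helper names `fit` and `vif` are illustrative.

```python
import numpy as np

rng = np.random.default_rng(2)
n = 200

# Assumed setup: x2 is nearly a copy of x1, and only x1 affects y
x1 = rng.normal(size=n)
x2 = x1 + rng.normal(scale=0.05, size=n)
y = 2.0 * x1 + rng.normal(size=n)


def fit(X, y):
    """OLS with an intercept; returns the slope coefficients only."""
    A = np.column_stack([np.ones(len(y)), X])
    beta, *_ = np.linalg.lstsq(A, y, rcond=None)
    return beta[1:]


def vif(X, j):
    """VIF_j = 1 / (1 - R^2_j), regressing column j on the other columns."""
    others = np.delete(X, j, axis=1)
    A = np.column_stack([np.ones(len(X)), others])
    beta, *_ = np.linalg.lstsq(A, X[:, j], rcond=None)
    resid = X[:, j] - A @ beta
    r2 = 1 - resid.var() / X[:, j].var()
    return 1 / (1 - r2)


X_both = np.column_stack([x1, x2])
vifs = [vif(X_both, j) for j in range(X_both.shape[1])]
print("VIFs:", np.round(vifs, 1))
print("coefficients with x1 and x2:", fit(X_both, y).round(2))
print("coefficient with x1 only:   ", fit(x1[:, None], y).round(2))
```

With both features present, the VIFs are in the hundreds and only the sum of the two coefficients is pinned down near the true effect of 2; the individual values are poorly determined. Dropping the redundant feature gives a stable estimate.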

Finally, cross-validation helps check the stability of coefficient estimates. If the model consistently shows a large negative coefficient across multiple folds of the data, this increases confidence that the estimate is not an artifact of particular quirks in the training data. Stability alone does not rule out confounding, however, since a biased estimate can be perfectly stable.
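One way to run that stability check is to refit the model on each training split and look at the spread of the coefficient. The sketch below uses plain NumPy with synthetic data and a hypothetical helper `coef_per_fold`; scikit-learn's `KFold` could generate the same splits.

```python
import numpy as np

rng = np.random.default_rng(3)
n, k = 300, 5

# Synthetic data with an assumed negative effect of feature 0
X = rng.normal(size=(n, 3))
y = 1.0 - 3.0 * X[:, 0] + 0.5 * X[:, 1] + rng.normal(size=n)


def coef_per_fold(X, y, k, seed=0):
    """Fit OLS on each of k training splits and return a (k, p) coefficient array."""
    idx = np.random.default_rng(seed).permutation(len(y))
    folds = np.array_split(idx, k)
    coefs = []
    for held_out in folds:
        train = np.setdiff1d(idx, held_out)
        A = np.column_stack([np.ones(len(train)), X[train]])
        beta, *_ = np.linalg.lstsq(A, y[train], rcond=None)
        coefs.append(beta[1:])  # drop the intercept
    return np.array(coefs)


coefs = coef_per_fold(X, y, k)
print("feature 0 per fold:", coefs[:, 0].round(2))
print("mean, std:", coefs[:, 0].mean().round(2), coefs[:, 0].std().round(2))
```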

Follow-up: How would you address issues if multicollinearity is affecting your model coefficients?

If multicollinearity is detected, there are several strategies to address it:

One approach is to remove or combine highly correlated features. Feature selection methods can help identify which features are redundant, and dimensionality reduction techniques like Principal Component Analysis (PCA) can transform correlated features into a set of linearly uncorrelated components.

Another method is to apply regularization techniques. Ridge regression, for example, adds an L2 penalty term to the loss function which shrinks the coefficients, thereby reducing the effect of multicollinearity. Lasso regression, which uses an L1 penalty, not only shrinks coefficients but can also perform feature selection by forcing some coefficients to exactly zero.
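A minimal sketch of ridge's stabilizing effect on a collinear pair, using the closed-form estimate `(X^T X + lam * I)^-1 X^T y` on centered data. The data, the penalty `lam = 10.0`, and the helper `ridge` are illustrative assumptions; setting `lam = 0` reduces the same function to OLS.

```python
import numpy as np


def ridge(X, y, lam):
    """Closed-form ridge on centered data: (X^T X + lam*I)^-1 X^T y."""
    Xc, yc = X - X.mean(axis=0), y - y.mean()
    p = X.shape[1]
    return np.linalg.solve(Xc.T @ Xc + lam * np.eye(p), Xc.T @ yc)


rng = np.random.default_rng(4)
ols_runs, ridge_runs = [], []
for _ in range(200):
    # Assumed setup: x2 nearly duplicates x1; true effect is -2 on x1 only
    x1 = rng.normal(size=100)
    x2 = x1 + rng.normal(scale=0.05, size=100)
    y = -2.0 * x1 + rng.normal(size=100)
    X = np.column_stack([x1, x2])
    ols_runs.append(ridge(X, y, lam=0.0))    # lam = 0 is ordinary least squares
    ridge_runs.append(ridge(X, y, lam=10.0))

ols_runs, ridge_runs = np.array(ols_runs), np.array(ridge_runs)
print("OLS   coef std across samples:", ols_runs.std(axis=0).round(2))
print("Ridge coef std across samples:", ridge_runs.std(axis=0).round(2))
print("Ridge mean coefficients:      ", ridge_runs.mean(axis=0).round(2))
```

Across repeated samples, the OLS coefficients on the near-duplicate features swing widely, while ridge splits the shared effect of about -2 roughly evenly between them with far less variance. Ridge trades a little bias for that stability; it does not decide which of two redundant features is the "real" one.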

It is also important to review the data collection process and ensure that all relevant variables are included in the model to mitigate omitted variable bias. This comprehensive approach will help isolate the genuine effects of the features on the target variable.

By following these strategies, one can obtain more stable and interpretable coefficient estimates, ensuring that the observed large negative coefficient reflects a true relationship rather than modeling artifacts.

Below are additional follow-up questions

How do outliers and leverage points affect the estimation of coefficients, and what strategies can be used to mitigate their influence?

Outliers and leverage points can disproportionately influence the fitted coefficients in a linear regression model. Outliers are data points that deviate significantly from the trend of the rest of the data. Leverage points are observations with extreme predictor values, which gives them the potential to pull the fitted slope strongly toward themselves; a high-leverage point that also has a large residual is influential. A large negative coefficient might be driven by one or more such points rather than reflecting the true underlying relationship.

Detection involves examining residual plots and influence measures such as Cook's distance, leverage statistics, and standardized residuals. If a point is identified as an outlier or high-leverage observation, one must investigate whether it is due to data entry errors, a measurement anomaly, or a legitimate but rare occurrence.
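Leverage and Cook's distance can be computed directly from their definitions: leverage `h_ii` is the i-th diagonal entry of the hat matrix `H = X (X^T X)^-1 X^T`, and Cook's distance is `D_i = e_i^2 / (p * s^2) * h_ii / (1 - h_ii)^2`, with `p` the number of parameters and `s^2` the residual variance. The sketch plants one high-leverage point in synthetic data (an assumption for illustration); in statsmodels, the same quantities are available through a fitted OLS result's `get_influence()`.

```python
import numpy as np

rng = np.random.default_rng(5)
n = 50
x = rng.uniform(0, 10, n)
y = 3.0 - 0.5 * x + rng.normal(size=n)

# Plant one point far outside the x range that drags the slope down
x = np.append(x, 40.0)
y = np.append(y, -60.0)

X = np.column_stack([np.ones_like(x), x])
beta, *_ = np.linalg.lstsq(X, y, rcond=None)
resid = y - X @ beta
p = X.shape[1]
s2 = resid @ resid / (len(y) - p)

# Leverage: diagonal of the hat matrix X (X^T X)^-1 X^T
h = np.einsum("ij,jk,ik->i", X, np.linalg.inv(X.T @ X), X)
cooks = resid**2 / (p * s2) * h / (1 - h) ** 2

worst = np.argmax(cooks)
print("slope with the point:", beta[1].round(2))
print("most influential index:", worst, "leverage:", h[worst].round(2),
      "Cook's D:", cooks[worst].round(2))

# Refit without it to see how much the slope depends on that single point
keep = np.arange(len(y)) != worst
beta_wo, *_ = np.linalg.lstsq(X[keep], y[keep], rcond=None)
print("slope without it:    ", beta_wo[1].round(2))
```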

Mitigation strategies include:

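Robust Regression: Methods like Huber regression or RANSAC reduce the impact of extreme values. Huber regression downweights observations with large residuals but offers little protection against high-leverage points, whereas RANSAC fits the model on a subset of points identified as inliers.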

Data Transformation or Trimming: Transforming variables or removing extreme outliers (with careful justification) can stabilize the coefficient estimates.

Weighted Least Squares: Assigning lower weights to suspected outliers can reduce their influence.
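A sketch comparing ordinary least squares with scikit-learn's `HuberRegressor` on synthetic data. The true slope of -1 and the five planted outliers are assumptions for illustration: the outliers sit at an ordinary x value but have extreme y values, which makes the OLS slope much more negative. Huber loss grows linearly rather than quadratically for large residuals, so those points pull its fit far less.

```python
import numpy as np
from sklearn.linear_model import HuberRegressor, LinearRegression

rng = np.random.default_rng(6)
x = rng.uniform(0, 10, 100)
y = 5.0 - 1.0 * x + rng.normal(size=100)

# Plant five outliers in y at the upper end of the observed x range
x = np.append(x, np.full(5, 9.5))
y = np.append(y, np.full(5, -40.0))
X = x.reshape(-1, 1)

ols = LinearRegression().fit(X, y)
huber = HuberRegressor(max_iter=1000).fit(X, y)  # default epsilon=1.35

print("true slope:  -1.0")
print("OLS slope:  ", ols.coef_[0].round(2))
print("Huber slope:", huber.coef_[0].round(2))
```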

Pitfalls include overzealous removal of data points, which can lead to biased results if the outliers represent real variability in the data. It is crucial to balance robustness with the risk of discarding meaningful observations.

How do interaction terms and non-linear relationships affect the interpretation of a large negative coefficient in a linear model?

When a linear regression model omits important interaction terms or non-linear transformations, a large negative coefficient might not represent the true isolated effect of the predictor. Instead, it might be compensating for the missing complexity in the model. For example, if two variables interact, their joint effect on the target cannot be captured by simple additive terms. This can result in misleading coefficient estimates, including exaggerated negative values.

Incorporating interaction terms allows the model to account for combined effects. Similarly, non-linear relationships might be better modeled with polynomial terms or splines. Without these adjustments, the estimated coefficient might reflect an average effect over a range where the true relationship is more complex.

Edge cases include scenarios where the effect of the predictor changes direction depending on the level of another variable. In such cases, the interpretation of a single coefficient becomes ambiguous, and one must carefully analyze partial derivatives or use visualizations to understand the underlying relationship.
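When an interaction is present, the marginal effect of a feature is a function rather than a single number: for `y_hat = b0 + b1*x + b2*z + b3*x*z`, the effect of x is `d y_hat / d x = b1 + b3*z`. The synthetic example below (coefficients assumed for illustration) fits the model with and without the interaction term.

```python
import numpy as np

rng = np.random.default_rng(7)
n = 2_000
x = rng.normal(size=n)
z = rng.uniform(0, 4, n)
# Assumed truth: the effect of x is 1 - 2*z, positive for z < 0.5, negative above
y = 1.0 * x + 0.5 * z - 2.0 * x * z + rng.normal(size=n)


def ols(cols, y):
    """Least-squares coefficients (intercept first) of y on the given columns."""
    A = np.column_stack([np.ones(len(y)), *cols])
    beta, *_ = np.linalg.lstsq(A, y, rcond=None)
    return beta


b_add = ols([x, z], y)         # additive model, no interaction
b_int = ols([x, z, x * z], y)  # model with the interaction term

print("additive coefficient on x:", b_add[1].round(2))
for z0 in (0.0, 2.0, 4.0):
    effect = b_int[1] + b_int[3] * z0  # marginal effect dy/dx at z = z0
    print(f"effect of x at z={z0}: {effect:.2f}")
```

Without the interaction, the single coefficient on x (about -3 here) averages an effect that is positive at low z and strongly negative at high z, so reporting it as "the" effect of x would be misleading.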

What impact does model misspecification have on coefficient estimates, and how can one verify the adequacy of the model?

Model misspecification occurs when the assumed functional form of the regression model does not align with the true data-generating process. This includes omitting relevant variables, choosing an incorrect relationship (e.g., linear instead of non-linear), or neglecting interaction effects. A large negative coefficient might be a symptom of such misspecification where the model tries to account for omitted factors or incorrect relationships by distorting the coefficient values.

Verification involves:

Residual Analysis: Plotting residuals against fitted values or predictors can reveal patterns indicating non-linearity or heteroscedasticity.

Specification Tests: Tests such as the Ramsey RESET test can provide statistical evidence of misspecification.

Comparative Model Building: Evaluating alternative models (e.g., adding interaction terms or non-linear transformations) helps assess whether the original specification is adequate.
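The idea behind Ramsey's RESET test can be sketched by hand: refit the model with powers of the fitted values (here the squared and cubed predictions) added, and compute an F statistic for those extra terms. This from-scratch version on assumed data is for illustration only; statsmodels ships a RESET implementation, `linear_reset`, in `statsmodels.stats.diagnostic`, which also reports p-values.

```python
import numpy as np


def rss(A, y):
    """Residual sum of squares and fitted values of the least-squares fit of y on A."""
    beta, *_ = np.linalg.lstsq(A, y, rcond=None)
    r = y - A @ beta
    return r @ r, A @ beta


def reset_f(x, y):
    """RESET-style F statistic: do yhat^2 and yhat^3 add explanatory power?"""
    n = len(y)
    A = np.column_stack([np.ones(n), x])
    rss_r, yhat = rss(A, y)
    A_u = np.column_stack([A, yhat**2, yhat**3])
    rss_u, _ = rss(A_u, y)
    q, k_u = 2, A_u.shape[1]
    return ((rss_r - rss_u) / q) / (rss_u / (n - k_u))


rng = np.random.default_rng(8)
x = rng.uniform(0, 5, 300)
noise = rng.normal(size=300)

y_linear = 2.0 - 1.0 * x + noise             # linear model is correct
y_quad = 2.0 + 1.0 * x - 0.8 * x**2 + noise  # true relationship is curved

f_lin, f_quad = reset_f(x, y_linear), reset_f(x, y_quad)
print("F (linear truth):   ", round(f_lin, 2))
print("F (quadratic truth):", round(f_quad, 2))
```

A large F statistic for the curved case signals that the straight-line specification is missing structure; a fitted slope from that model would be an average over a relationship that actually bends.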

A key pitfall is assuming that a statistically significant coefficient confirms correct model specification. Even if the coefficient is significant, systematic patterns in the residuals may suggest that important dynamics are missing from the model.

How would you interpret a large negative coefficient in the context of feature transformations, such as logarithmic or polynomial transformations?

When a feature undergoes a transformation, the interpretation of its coefficient changes. If both the target and the feature are log-transformed, the coefficient is an elasticity: a large negative coefficient implies that a 1% increase in the original feature corresponds to approximately a |β|% decrease in the target. If only the feature is logged, a 1% increase in the feature corresponds to a change of roughly β/100 units in the target. In either case, the magnitude of the coefficient must be interpreted in the transformed space, and its effect on the original scale is nonlinear.
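A sketch of the log-log case on synthetic data, assuming a constant-elasticity relationship `y = 50 * x**-1.5` with multiplicative noise (both the form and the numbers are illustrative). Regressing log y on log x recovers the elasticity, which can then be translated back to the original scale.

```python
import numpy as np

rng = np.random.default_rng(9)
x = rng.uniform(1, 100, 500)
# Assumed constant-elasticity relationship with multiplicative noise
y = 50.0 * x ** -1.5 * np.exp(rng.normal(scale=0.1, size=500))

A = np.column_stack([np.ones_like(x), np.log(x)])
beta, *_ = np.linalg.lstsq(A, np.log(y), rcond=None)
elasticity = beta[1]
print("estimated elasticity:", elasticity.round(3))

# Implied effect of a 1% increase in x on the original scale of y
pct_change = (1.01 ** elasticity - 1) * 100
print(f"1% more x -> {pct_change:.2f}% change in y")
```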

For polynomial transformations, the relationship between the predictor and target is modeled as a curve. A large negative coefficient on a higher-order term might indicate a downward curvature at certain ranges of the predictor variable. Here, the coefficient should not be interpreted in isolation; instead, one must consider the combined effect of all polynomial terms.

Potential pitfalls include misinterpreting the effect when back-transforming to the original scale and assuming linearity in the transformed space. It is important to provide a clear explanation of the transformation and use plots or derivative analyses to illustrate the marginal effects across the range of the predictor.
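For a quadratic model `y_hat = b0 + b1*x + b2*x**2`, the marginal effect is the derivative `b1 + 2*b2*x`, and the turning point is at `x = -b1 / (2*b2)`. The synthetic sketch below (coefficients assumed for illustration) shows a negative quadratic coefficient producing an effect that is positive at small x and negative at large x. It uses NumPy's `numpy.polynomial.polynomial.polyfit`, which returns coefficients from lowest to highest degree.

```python
import numpy as np

rng = np.random.default_rng(10)
x = rng.uniform(0, 10, 400)
# Assumed truth: rises then falls, with the peak at x = 4 / (2 * 0.5) = 4
y = 1.0 + 4.0 * x - 0.5 * x**2 + rng.normal(size=400)

b0, b1, b2 = np.polynomial.polynomial.polyfit(x, y, deg=2)  # lowest order first
for x0 in (1.0, 4.0, 8.0):
    print(f"dy/dx at x={x0}: {b1 + 2 * b2 * x0:.2f}")
print("turning point:", round(-b1 / (2 * b2), 2))
```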

How does heteroscedasticity affect the reliability of coefficient estimates, and what measures can be taken to address it?

Heteroscedasticity occurs when the variance of the errors is not constant across observations. This violates a key assumption of ordinary least squares: the coefficient estimates remain unbiased, but they are no longer efficient, and the conventional standard errors are biased. A large negative coefficient may therefore appear statistically significant only because its conventional standard error is wrong, making its confidence interval misleadingly narrow.

To address heteroscedasticity, one can:

Use Robust Standard Errors: Adjusting the standard errors (e.g., via White’s correction) helps obtain more reliable inference.

Transform the Data: Log or other transformations may stabilize the variance.

Weighted Least Squares: Assigning weights inversely proportional to the variance of the errors can mitigate heteroscedasticity.
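White's heteroscedasticity-consistent (HC0) covariance is `(X^T X)^-1 X^T diag(e^2) X (X^T X)^-1`, where `e` are the OLS residuals. The from-scratch sketch below assumes right-skewed x and noise whose standard deviation grows with x, and compares conventional and robust standard errors for the slope. In statsmodels, the same estimate is available via `sm.OLS(y, X).fit(cov_type="HC0")`.

```python
import numpy as np

rng = np.random.default_rng(11)
n = 500
# Assumed setup: right-skewed x and noise whose standard deviation grows with x
x = rng.exponential(scale=2.0, size=n)
y = 3.0 - 2.0 * x + rng.normal(scale=0.5 * x, size=n)

X = np.column_stack([np.ones(n), x])
XtX_inv = np.linalg.inv(X.T @ X)
beta = XtX_inv @ X.T @ y
e = y - X @ beta

# Conventional OLS covariance assumes a single error variance s^2
s2 = e @ e / (n - X.shape[1])
se_ols = np.sqrt(np.diag(s2 * XtX_inv))

# HC0 (White) covariance: (X'X)^-1 X' diag(e^2) X (X'X)^-1
meat = X.T @ (X * e[:, None] ** 2)
se_hc0 = np.sqrt(np.diag(XtX_inv @ meat @ XtX_inv))

print("slope:", beta[1].round(3))
print("conventional SE:", se_ols[1].round(4), " HC0 SE:", se_hc0[1].round(4))
```

Here the conventional standard error understates the slope's uncertainty considerably, so a t-test built on it would overstate how significant the negative coefficient is.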

A potential edge case is when heteroscedasticity is only present in a subset of the data. In such cases, global corrections might not be sufficient, and localized methods or sub-sample analysis may be required. Moreover, correcting for heteroscedasticity is essential for hypothesis testing and drawing reliable conclusions about the effect of the predictor.

How can regularization techniques such as Ridge or Lasso regression affect the magnitude and sign of coefficients, and how should these effects be interpreted?

Regularization techniques modify the loss function to penalize large coefficients, thereby reducing overfitting and the coefficient variance caused by multicollinearity. Ridge regression (using an L2 penalty) shrinks coefficients toward zero without setting them exactly to zero, while Lasso regression (using an L1 penalty) can force some coefficients to exactly zero, effectively performing feature selection.

When regularization is applied, a large negative coefficient might be shrunk towards zero. This raises important questions:

Is the original large negative coefficient an artifact of noise or overfitting?

Does the shrinkage improve the model’s generalization performance?

Interpreting the regularized coefficient requires an understanding of the penalty strength. A small change in the regularization parameter can lead to substantial differences in the coefficients. Additionally, the sign of a coefficient may remain negative even after regularization, but its magnitude may be reduced, suggesting that while the inverse relationship persists, its effect size is less extreme when accounting for overfitting.
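A sketch of how the penalty strength changes a large negative coefficient, using scikit-learn's `Lasso` on standardized synthetic data. The features, true coefficients, and the grid of `alpha` values are assumptions for illustration.

```python
import numpy as np
from sklearn.linear_model import Lasso
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(12)
n = 300
X = StandardScaler().fit_transform(rng.normal(size=(n, 3)))
# Assumed truth: one strong negative effect, one weak positive, one irrelevant
y = -3.0 * X[:, 0] + 0.3 * X[:, 1] + rng.normal(size=n)

for alpha in (0.01, 0.1, 0.5, 1.0, 5.0):
    coef = Lasso(alpha=alpha).fit(X, y).coef_
    print(f"alpha={alpha:<5} coefficients: {np.round(coef, 2)}")
```

As `alpha` grows, the negative coefficient shrinks toward zero while keeping its sign, the weak and irrelevant features are set exactly to zero first, and a large enough penalty zeroes every coefficient.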

A potential pitfall is over-penalizing, which might lead to underestimating the true effect of a predictor. Cross-validation is typically used to select the optimal regularization parameter, balancing bias and variance while ensuring that the resulting model remains interpretable.

How would you differentiate between causation and correlation when interpreting a large negative coefficient in your model?

A large negative coefficient in a regression model only indicates an association between the predictor and the target, conditional on the other features in the model; it does not imply causation. To establish causation, one must consider additional evidence or study designs. Techniques to differentiate include:

Controlled Experiments: Randomized controlled trials help establish causality by eliminating confounding factors.

Instrumental Variables: When experiments are not feasible, instrumental variable methods can help isolate the causal impact by using variables that affect the predictor but influence the outcome only through the predictor and are unrelated to unobserved confounders.

Causal Inference Techniques: Methods like propensity score matching or difference-in-differences can provide insights into causal relationships.
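A minimal two-stage least squares (2SLS) sketch on simulated data. The instrument `z`, the unobserved confounder `u`, and all coefficients are assumptions: the confounder makes OLS report a clearly negative effect even though the true causal effect is small and positive, while `z` moves `x` but affects `y` only through `x`.

```python
import numpy as np

rng = np.random.default_rng(13)
n = 10_000
u = rng.normal(size=n)  # unobserved confounder
z = rng.normal(size=n)  # instrument: affects x, not y directly
x = 1.0 * z + 1.0 * u + rng.normal(size=n)
y = 0.5 * x - 6.0 * u + rng.normal(size=n)  # true causal effect of x is +0.5


def ols(A, y):
    """Least-squares coefficients of y on the columns of A."""
    beta, *_ = np.linalg.lstsq(A, y, rcond=None)
    return beta


ones = np.ones(n)
b_ols = ols(np.column_stack([ones, x]), y)

# Stage 1: predict x from the instrument. Stage 2: regress y on predicted x.
Z = np.column_stack([ones, z])
x_hat = Z @ ols(Z, x)
b_2sls = ols(np.column_stack([ones, x_hat]), y)

print("OLS estimate: ", b_ols[1].round(2))
print("2SLS estimate:", b_2sls[1].round(2))
```

The manual two-stage procedure gives the correct point estimate, but the standard errors from the second-stage regression are not valid; dedicated IV routines (for example `IV2SLS` in the `linearmodels` package) compute them properly.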

A real-world pitfall is over-interpreting the regression coefficient as evidence of causation, especially when the model suffers from omitted variable bias or reverse causality. Even with sophisticated econometric techniques, establishing causality requires careful design and validation. It is critical to communicate the distinction clearly when explaining model results to stakeholders.

How does extrapolation in the prediction space affect the interpretation of a large negative coefficient, particularly when feature values fall outside the range observed in the training data?

Extrapolation refers to using a model to make predictions for feature values that lie outside the range of the training data. In such cases, the estimated large negative coefficient might lead to predictions that are not reliable because the relationship learned by the model is assumed to hold beyond the observed data range. Extrapolating with a large negative coefficient can result in extreme predictions, which might be unrealistic or even nonsensical in a real-world context.

To manage extrapolation:

Model Validation: Limit predictions to the range of the training data or conduct careful validation when extrapolation is necessary.

Domain Expertise: Use domain knowledge to assess whether the model's extrapolated predictions are plausible.

Model Adjustment: Consider augmenting the model with non-linear components or boundary constraints if extrapolation is expected.
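A simple guard is to record the training range of each feature and flag predictions outside it. The sketch below uses hypothetical house-price data with an assumed negative distance effect, and a hypothetical helper `predict_with_flag`. Far beyond the training range, the linear model predicts a negative price.

```python
import numpy as np

rng = np.random.default_rng(14)
# Hypothetical training data: distance 1-20 km, price in thousands
dist = rng.uniform(1, 20, 200)
price = 600 - 25 * dist + rng.normal(scale=15, size=200)

A = np.column_stack([np.ones_like(dist), dist])
beta, *_ = np.linalg.lstsq(A, price, rcond=None)
lo, hi = dist.min(), dist.max()


def predict_with_flag(d):
    """Return (prediction, outside_training_range) for a distance d in km."""
    pred = beta[0] + beta[1] * d
    return pred, not (lo <= d <= hi)


for d in (10.0, 30.0):
    pred, outside = predict_with_flag(d)
    note = "  <- extrapolation" if outside else ""
    print(f"distance {d:>4} km: predicted price {pred:8.1f}{note}")
```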

Edge cases include situations where the feature’s distribution has a natural boundary (e.g., percentages bounded between 0 and 100). Extrapolating beyond these limits can lead to misinterpretations. It is essential to clearly communicate the limitations of the model when it is applied to data points outside the observed range.