ML Interview Q Series: Is separating users by join year a good strategy to train a boosting model for predicting trust?


Comprehensive Explanation

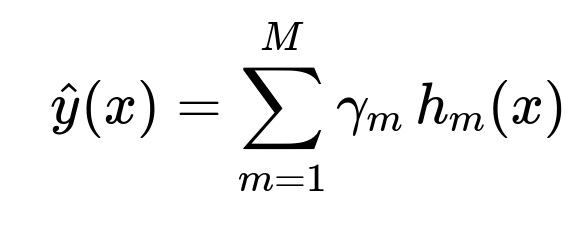

A boosting algorithm builds a strong model in an iterative fashion by combining multiple weaker learners. The ensemble typically takes the form of an additive model, which can be broadly summarized as an iterative summation of base learners, each weighted by a certain coefficient. One of the core formulations is shown below.

In this expression, $M$ is the total number of boosting iterations, $h_m(x)$ is the $m$-th weak learner (often a decision tree), and $\gamma_m$ is the weight or coefficient associated with that weak learner. The final prediction $F(x)$ for any input $x$ is the sum of the weighted predictions of all $M$ weak learners.
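As a sanity check on the additive form described above, the sketch below fits scikit-learn's `GradientBoostingRegressor` on synthetic data and rebuilds its predictions by hand. Note that scikit-learn's version differs slightly from the bare formula: it adds a constant initial prediction $F_0$ (the mean of the target for the default squared-error loss), folds each stage's step size into the tree's leaf values, and applies one shared shrinkage factor, `learning_rate`, instead of a separate $\gamma_m$ per tree.

```python
import numpy as np
from sklearn.ensemble import GradientBoostingRegressor

# Synthetic regression data, for illustration only
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 3))
y = X[:, 0] ** 2 + 0.5 * X[:, 1] + rng.normal(scale=0.1, size=200)

model = GradientBoostingRegressor(n_estimators=50, learning_rate=0.1,
                                  max_depth=2, random_state=0)
model.fit(X, y)

# F_0: constant initial prediction (mean of y for squared-error loss)
f0 = y.mean()
# Add each weak learner h_m(x), scaled by the shared shrinkage factor
manual = f0 + sum(model.learning_rate * stage[0].predict(X)
                  for stage in model.estimators_)

print(np.allclose(manual, model.predict(X)))
```

The manual sum and `model.predict` should agree up to floating-point rounding, which makes the "sum of weighted weak learners" view concrete.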

Time-Based Data in Boosting

When your dataset spans multiple years, there is often a concern about distribution shift or changes in user behavior over time. A user who joined Netflix in 2010 may behave differently from someone who joined in 2020 because of shifts in content offerings, platform interface changes, pricing adjustments, or broader market trends. If these differences are significant, both the features and the train/validation split need to respect chronology.

However, in many real-world scenarios you must also avoid data leakage, where information from future periods inadvertently influences model training. For instance, if you randomly shuffle data from 2019 and 2020 before splitting, 2020 examples end up in the training set while 2019 examples sit in validation, so the model is effectively evaluated on the past using knowledge of the future. This produces overly optimistic validation scores, and the model then performs worse in deployment when genuinely new data arrives.

Separating User Groups by the Year They Joined

Splitting your training data strictly by the calendar year in which users joined can address certain issues but also introduces new concerns. On one hand, it could help respect the chronological nature of the data, ensuring that any future data remains unseen at training time (mimicking a real scenario). On the other hand, if the dataset is partitioned too strictly—imagine training only on users from 2010–2015 and validating or testing only on users from 2016–2020—then the early model might not see enough examples that represent later behaviors. The model could struggle to generalize if user behavior evolves significantly.

In practice, a balanced approach is often used: preserve time-order in the sense that training data must come from an earlier period than testing data, but use a rolling or expanding window if possible. This ensures the model gets a diverse representation of user behaviors through time while still preventing future information from leaking into training.

Practical Guidance

If you have enough data for all 10 years, a time-series or rolling window approach is often more effective than a simple year-based partition. For instance, you might train on the first six or seven years and then validate on the next two, and finally test on the last year’s data. You can also do a time-based cross-validation scheme where you iteratively move your training and validation windows forward. The key advantage is that it more accurately simulates the real-world scenario in which only historical data is available at the time of model training, and you must predict for the future.
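A time-based cross-validation scheme like this can be enumerated with a few lines of plain Python. The helper `year_folds` and its parameters are hypothetical: with `window=None` the training years expand, and with an integer `window` they roll forward with a fixed length. The final year is held back as the untouched test set, matching the train / validate / test layout described above.

```python
from typing import Iterator, List, Optional, Tuple

def year_folds(years: List[int], min_train_years: int, val_years: int = 1,
               window: Optional[int] = None) -> Iterator[Tuple[List[int], List[int]]]:
    """Yield (train_years, val_years) pairs that move forward through time.

    window=None -> expanding window: train on every year before the validation block.
    window=k    -> rolling window: train on only the k most recent years before it.
    """
    years = sorted(set(years))
    for end in range(min_train_years, len(years) - val_years + 1):
        start = 0 if window is None else max(0, end - window)
        yield years[start:end], years[end:end + val_years]

all_years = list(range(2011, 2021))  # hypothetical: ten join years
dev_years, test_years = all_years[:-1], all_years[-1:]  # last year reserved for final test

for train_y, val_y in year_folds(dev_years, min_train_years=6, val_years=2):
    print(f"train {train_y[0]}-{train_y[-1]} -> validate {val_y}")
```

This prints two folds, `train 2011-2016 -> validate [2017, 2018]` and `train 2011-2017 -> validate [2018, 2019]`, with 2020 kept for testing. Passing `window=3` instead trains on only the three years immediately before each validation block. scikit-learn's `TimeSeriesSplit` (with `max_train_size` for a rolling window) offers a similar row-level scheme if the rows are already sorted by time.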

A naive separation purely by year can lead to suboptimal data splits, especially if membership year alone doesn’t perfectly capture user behavior or if it creates unbalanced sets. You need to consider both the quantity and representativeness of data in each split as well as the risk of concept drift over the decade.
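One way to check quantity and representativeness is to tabulate each split before training. In this sketch, `summarize_splits` is a hypothetical helper and the six-row frame is made-up toy data; the column names `join_year` and `target` follow the code example in Follow-Up Question 4.

```python
import pandas as pd

def summarize_splits(df: pd.DataFrame, splits: dict, year_col: str = "join_year",
                     target_col: str = "target") -> pd.DataFrame:
    """Row count and positive-label rate for each named set of join years."""
    rows = []
    for name, years in splits.items():
        part = df[df[year_col].isin(list(years))]
        rows.append({"split": name, "n_users": len(part),
                     "positive_rate": part[target_col].mean()})
    return pd.DataFrame(rows).set_index("split")

# Hypothetical toy data; in practice this would be the full user table
df = pd.DataFrame({"join_year": [2011, 2011, 2015, 2019, 2020, 2020],
                   "target":    [1, 0, 1, 1, 0, 0]})
summary = summarize_splits(df, {"train": range(2011, 2018),
                                "val": [2018, 2019],
                                "test": [2020]})
print(summary)
```

A split with very few users, or a positive rate far from the others, is a cue to revisit the cutoff years before trusting any validation score.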

Follow-Up Question 1

Is it always essential to do time-based splitting when your data spans multiple years?

It depends on whether there is evidence of temporal drift. If user behavior, website design, or marketing strategy has evolved significantly, time-based splitting is crucial: it prevents future data from leaking into training and gives a realistic estimate of how the model will perform on users who arrive after it is trained. However, if the data is relatively stable and there is no major distribution shift across years, a random split may still be valid. In many real-world settings there is almost always some form of drift, so time-based splitting (or at least careful time-aware partitioning) is advisable to simulate real deployment conditions.
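One way to gather that evidence, not part of the original answer, is adversarial validation: train a classifier to distinguish rows from an early period from rows from a later period. A cross-validated AUC near 0.5 suggests the periods are hard to tell apart, while a value well above 0.5 signals a shift in the feature distribution. This only detects changes in the features themselves, not changes in how features relate to the label. The helper `adversarial_auc` and the synthetic data are assumptions for illustration.

```python
import numpy as np
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.model_selection import StratifiedKFold, cross_val_score

def adversarial_auc(X_early, X_late, random_state=0):
    """Cross-validated AUC of a classifier asked to tell late-period rows from early ones."""
    X = np.vstack([X_early, X_late])
    is_late = np.concatenate([np.zeros(len(X_early), dtype=int),
                              np.ones(len(X_late), dtype=int)])
    clf = GradientBoostingClassifier(n_estimators=50, max_depth=2,
                                     random_state=random_state)
    cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=random_state)
    return cross_val_score(clf, X, is_late, cv=cv, scoring="roc_auc").mean()

# Synthetic features: one later period matching the early one, one shifted
rng = np.random.default_rng(0)
X_early = rng.normal(size=(300, 2))
X_late_same = rng.normal(size=(300, 2))
X_late_shifted = rng.normal(loc=1.5, size=(300, 2))

print("no shift:", round(adversarial_auc(X_early, X_late_same), 2))
print("shifted:", round(adversarial_auc(X_early, X_late_shifted), 2))
```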

Follow-Up Question 2

How do you handle the situation where the dataset is imbalanced across different years?

This can be addressed by resampling techniques or weighted loss functions. For instance, if the majority of users joined in the last two years, your model might overfit to recent patterns. A weighted loss function can help the algorithm pay more attention to the minority group (earlier years). Alternatively, you can employ a rolling window strategy in which the window’s size is chosen so that each training partition has enough samples from each relevant time period. Oversampling or undersampling might also be applied if it is appropriate and does not overly distort the time relationship.
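A weighted loss can be implemented through per-row sample weights. This sketch gives each join year the same total weight, so an underrepresented early year is not drowned out by a crowded recent one; the weights go through the `sample_weight` argument of scikit-learn's `fit`. The helper `year_balanced_weights` is hypothetical and the data are toy values.

```python
import numpy as np
import pandas as pd
from sklearn.ensemble import GradientBoostingClassifier

def year_balanced_weights(join_year: pd.Series) -> np.ndarray:
    """Inverse-frequency weights so that every join year has the same total weight."""
    counts = join_year.map(join_year.value_counts()).to_numpy(dtype=float)
    weights = 1.0 / counts
    return weights * len(weights) / weights.sum()  # rescale so the mean weight is 1

# Hypothetical toy data: two users from 2012, eight from 2020
join_year = pd.Series([2012] * 2 + [2020] * 8)
rng = np.random.default_rng(0)
X = rng.normal(size=(10, 2))
y = np.array([0, 1] * 5)

w = year_balanced_weights(join_year)
model = GradientBoostingClassifier(n_estimators=20, random_state=0)
model.fit(X, y, sample_weight=w)
```

Here each 2012 row ends up with four times the weight of a 2020 row, so both years contribute equally to the loss.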

Follow-Up Question 3

What if you discover that user behavior is completely different in the later years, making it harder for the model to generalize?

This is a strong sign of concept drift. The model you trained on earlier data may become less relevant as user behavior changes. In such a scenario, you can apply methods like adaptive or online learning, where the model parameters are updated regularly as new data comes in. Another approach is to maintain a shorter training window containing more recent data, accepting the trade-off that older data might no longer be representative. Periodic model retraining or warm-starting the boosting process with the latest data can help you stay aligned with evolving user behavior.
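Warm-starting can be sketched with scikit-learn's `GradientBoostingClassifier(warm_start=True)`: raising `n_estimators` and calling `fit` again on newer data appends trees fit to that data while keeping the existing trees unchanged. The data and the drift (the label switching from depending on one feature to another) are synthetic assumptions chosen to make the effect visible.

```python
import numpy as np
from sklearn.ensemble import GradientBoostingClassifier

rng = np.random.default_rng(0)
# Synthetic "old" period: the label depends on feature 0
X_old = rng.normal(size=(400, 3))
y_old = (X_old[:, 0] > 0).astype(int)
# Synthetic "new" period with drift: the label now depends on feature 1
X_new = rng.normal(size=(400, 3))
y_new = (X_new[:, 1] > 0).astype(int)
X_fit, y_fit = X_new[:300], y_new[:300]    # recent data used for the update
X_hold, y_hold = X_new[300:], y_new[300:]  # recent data held out for evaluation

model = GradientBoostingClassifier(n_estimators=100, learning_rate=0.1,
                                   warm_start=True, random_state=0)
model.fit(X_old, y_old)
acc_before = model.score(X_hold, y_hold)

# Warm start: keep the 100 existing trees and add 50 more fit on recent data
model.set_params(n_estimators=150)
model.fit(X_fit, y_fit)
acc_after = model.score(X_hold, y_hold)

print(f"held-out accuracy before: {acc_before:.2f}, after: {acc_after:.2f}")
```

Because the old trees stay in the ensemble, their outdated signal still contributes; if drift is severe, retraining on a shorter recent window may work better than appending trees.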

Follow-Up Question 4

Can you show a small example in Python illustrating a time-based data partitioning approach for boosting?

Below is a minimal illustration of how you might partition the data by date or year, then train a simple gradient boosting model on earlier years and validate on later years. It does not provide an exhaustive solution to concept drift but demonstrates a time-aware split.

```python
import numpy as np
import pandas as pd
from sklearn.ensemble import GradientBoostingClassifier

# Suppose df has columns: ['join_year', 'feature1', 'feature2', ..., 'target'].
# Hypothetical synthetic stand-in so the snippet runs; replace with your real data.
rng = np.random.default_rng(0)
n = 1_000
df = pd.DataFrame({
    "join_year": rng.integers(2011, 2021, size=n),
    "feature1": rng.normal(size=n),
    "feature2": rng.normal(size=n),
})
df["target"] = (df["feature1"] + rng.normal(scale=0.5, size=n) > 0).astype(int)

# Choose a cutoff year: train on users who joined up to and including it,
# validate on users who joined afterwards (no shuffling across the cutoff).
cutoff_year = 2018
train_data = df[df["join_year"] <= cutoff_year]
val_data = df[df["join_year"] > cutoff_year]

features = ["feature1", "feature2"]  # Add all relevant features
X_train, y_train = train_data[features], train_data["target"]
X_val, y_val = val_data[features], val_data["target"]

# Train a gradient boosting model
model = GradientBoostingClassifier(n_estimators=100, learning_rate=0.1, random_state=0)
model.fit(X_train, y_train)

# Evaluate on the validation set
val_predictions = model.predict(X_val)
accuracy = (val_predictions == y_val).mean()
print("Validation Accuracy:", accuracy)
```

In this simple code, the data is divided based on a cutoff year, ensuring that the training set corresponds to users who joined Netflix on or before that year, while the validation set consists of users joining after that year. This helps mimic real-world conditions where future data is not available at training time.

Below are additional follow-up questions

How do you ensure the model doesn’t get overly influenced by outdated patterns if you include older data?

One common pitfall when using data spanning many years is that earlier behaviors may be radically different from current user behavior and yet occupy a large chunk of the training set. This can bias the model toward patterns that are no longer relevant. An effective mitigation is a rolling (sliding) time window: rather than training on the entire decade of history, you limit the training data to a recent window that still captures enough variety but excludes badly outdated patterns. An expanding window, by contrast, keeps all earlier data and therefore does not address this problem.

An important edge case is where some older data still contains rare but essential signals, perhaps because the exact same marketing campaign used in 2010 was reintroduced in 2020. In these scenarios, discarding historical data completely can cause the model to miss recurring patterns. A practical approach is to assign sample weights that decrease with the age of the data. For instance, the data from the last two years can be given higher weight than data from eight or nine years prior. This weighting scheme preserves historical insights while prioritizing more recent trends.
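Age-based weighting fits in a small function. The paragraph above only says that older data should count less; the exponential decay with a half-life used here, and the helper name `recency_weights`, are assumptions for illustration.

```python
import numpy as np

def recency_weights(join_year, reference_year, half_life_years=3.0):
    """Exponential-decay sample weights: data half_life_years old counts half as much."""
    age = reference_year - np.asarray(join_year, dtype=float)
    return 0.5 ** (age / half_life_years)

weights = recency_weights([2020, 2017, 2014, 2011], reference_year=2020)
print(weights)
# The weights can then be passed to a boosting model, e.g.
# model.fit(X, y, sample_weight=weights)
```

Unlike a hard rolling window, no year drops to zero weight, so a recurring 2010-era pattern can still influence the model, just less strongly. The half-life is a tuning knob best chosen with the time-based validation scheme discussed earlier.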

How would you handle external changes that occur over time, such as new competitors or platform redesigns?

Real-world user trust and engagement can be impacted by events external to Netflix, like the entrance of new competitors, sudden regulatory changes, or major platform redesigns. A purely time-based approach doesn’t automatically account for these external transitions. One approach is to add external event indicators or features into your model. For example, you might have a binary feature that indicates “platform redesign phase” or “competitor X launched in this month.” By incorporating such contextual features, your model can learn patterns associated with these external shifts.
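Contextual event indicators can be added as ordinary columns. In this sketch the event names and dates in `EVENTS` are placeholders, not real Netflix or competitor dates, and `join_date` is an assumed column name. Each flag is 1 for users who joined on or after the event.

```python
import pandas as pd

# Placeholder event calendar: names and dates are hypothetical, not real events
EVENTS = {
    "after_redesign": pd.Timestamp("2016-06-01"),
    "after_competitor_x": pd.Timestamp("2019-11-01"),
}

def add_event_flags(df: pd.DataFrame, date_col: str = "join_date") -> pd.DataFrame:
    """Add one 0/1 column per event: 1 if the user joined on or after the event date."""
    out = df.copy()
    dates = pd.to_datetime(out[date_col])
    for name, start in EVENTS.items():
        out[name] = (dates >= start).astype(int)
    return out

users = pd.DataFrame({"join_date": ["2015-01-10", "2017-03-02", "2020-01-05"]})
print(add_event_flags(users))
```

For an event that matters only for a limited period, such as a launch month, the flag could instead be 1 only between a start and an end date. A step flag like this changes exactly once, so it is perfectly aligned with anything else that changed on the same date.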

A major pitfall arises if you only partially capture external changes. For instance, you might mark a single major competitor's launch date but ignore other minor shifts. If many other changes occur in the same timeframe, the model may attribute the effect to the single indicated competitor event. Always ensure you gather sufficient contextual data to reflect real-world conditions accurately. When such data is unavailable, a practical fallback is to retrain or fine-tune the model more frequently, especially around known milestone events.

How do you handle missing data for entire segments of time or specific user groups?

When you have a decade of user data, it is common for certain years or certain user demographics to be underrepresented or to have incomplete features. This situation can bias the model. For instance, if some metrics (like device usage, watch-time data, or subscription plan type) were not recorded in the early years, the model may learn little from that dimension, or an imputation step may fill it with misleading values.

A key step is careful data cleaning and feature engineering. You might:

Exclude features that are consistently absent in certain years and cannot be accurately imputed.

Use appropriate imputation methods that account for temporal relationships. For instance, forward-fill or backward-fill for time-series data can be employed when it makes sense, though backward-filling must be used with great care: it copies later values into earlier rows and can therefore introduce future information, whereas forward-filling only propagates earlier observations.

Implement specialized models or modules for time segments with partial features, or create additional binary features that indicate the availability of certain data. These “missingness indicator” features can help the model learn the patterns of missing data.

The subtle pitfall here is that imputing data from future time periods or from drastically different segments can inadvertently lead to leakage or spurious correlations. Always ensure imputation methods rely only on contemporaneous or prior data in a real deployment scenario to avoid hidden leakage.
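A leakage-safe version of these imputation ideas might look like the sketch below, which assumes a hypothetical long-format table with one row per `user_id` and `month` and a partially missing numeric column. The missingness indicator is recorded before filling, forward-fill only propagates a user's earlier values, and rows with no earlier value fall back to a median of observed values from training-period rows only (`cutoff` marks the last training month).

```python
import numpy as np
import pandas as pd

def impute_past_only(df: pd.DataFrame, col: str, cutoff: int,
                     user_col: str = "user_id", time_col: str = "month") -> pd.DataFrame:
    out = df.sort_values([user_col, time_col]).copy()
    # Fallback statistic from observed values in the training period only
    fallback = out.loc[out[time_col] <= cutoff, col].median()
    # Missingness indicator, recorded before any filling
    out[col + "_missing"] = out[col].isna().astype(int)
    # Forward-fill within each user: values only flow from earlier rows to later rows
    out[col] = out.groupby(user_col)[col].ffill()
    # Remaining gaps (no earlier value for that user) get the training-period median
    out[col] = out[col].fillna(fallback)
    return out

# Hypothetical toy table: two users, monthly watch hours with gaps
df = pd.DataFrame({
    "user_id": [1, 1, 1, 2, 2],
    "month": [1, 2, 3, 1, 2],
    "watch_hours": [5.0, np.nan, 7.0, np.nan, 3.0],
})
print(impute_past_only(df, "watch_hours", cutoff=2))
```

User 1's gap in month 2 is filled from month 1, while user 2's first month has no earlier value and receives the training-period median; no value ever moves backward in time.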

What strategies can you employ when the performance metric evolves over time or business objectives shift?

Over a long time horizon, your definition of “trust” or the associated business goal can shift. For instance, in one period, user trust might be measured solely by entering credit card info for a free trial, whereas a few years later the metric might be “converted to a paid subscription after trial.” The model trained on one definition may not directly optimize for the new definition.

When business objectives or key performance indicators change, you have a few strategies:

Maintain multiple labels. If some historical data only records “entered credit card,” but you now care about “converted to paid subscription,” keep track of both labels. You can then model both short-term trust (credit card) and longer-term trust (paid subscription), training each target on the rows where its label is available.

Re-label historical data if it is possible to retroactively compute the new metric from older records. This can be challenging if certain historical details were never captured.

Gradually transition the model to the new metric. Keep training on the old label while records carrying the new label accumulate, incorporate those records as they arrive, and make the new definition the primary training target once enough consistent data exists.

The pitfall is abruptly switching your training objective without acknowledging that historical data was collected for a different outcome. Always confirm your model’s objective remains consistent with the available ground truth data.

How would you adapt the boosting algorithm if you detect concept drift in real time?

Concept drift occurs when the underlying data distribution shifts over time, often in unpredictable ways. A model trained even a few months prior may become stale if user behavior changes quickly. In boosting frameworks, you can adapt in multiple ways:

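Online Learning or Incremental Updates: Common boosting libraries can continue training an existing model on a fresh batch of data instead of rebuilding it from scratch, for example through the `xgb_model` argument of XGBoost's `xgb.train` or the `init_model` argument of LightGBM's `lgb.train`. This appends new trees to the existing ensemble rather than revising the old ones, so it is closer to incremental refitting than to true online learning.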

Rolling Retraining: Rebuild the entire model at regular intervals (e.g., weekly, monthly). Although this can be computationally expensive, it ensures you have a model that reflects the latest data distributions.

Weighted Ensemble Updates: Combine an older model’s predictions with a new model’s predictions (trained on the latest data) in an ensemble approach. Over time, you gradually reduce reliance on the older model if its performance degrades.
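The weighted-ensemble option can be sketched as a blend of the two models' predicted probabilities. How the weight is chosen is an assumption here: each model's weight is proportional to exp(-recent log loss), so the model that has done better on recently labeled data gets more say, and the older model's share falls as its performance degrades. `blend_weight` and `blended_proba` are hypothetical helpers.

```python
import numpy as np
from sklearn.metrics import log_loss

def blend_weight(p_old, p_new, y_recent, temperature=1.0):
    """Weight on the new model, proportional to exp(-recent log loss / temperature)."""
    losses = np.array([log_loss(y_recent, p_old), log_loss(y_recent, p_new)])
    scores = np.exp(-losses / temperature)
    return scores[1] / scores.sum()

def blended_proba(p_old, p_new, w_new):
    """Convex combination of the two models' positive-class probabilities."""
    return (1.0 - w_new) * np.asarray(p_old) + w_new * np.asarray(p_new)

# Hypothetical recent labeled batch on which the new model is more accurate
y_recent = np.array([1, 0, 1, 0])
p_old = np.array([0.4, 0.6, 0.4, 0.6])
p_new = np.array([0.9, 0.1, 0.9, 0.1])

w_new = blend_weight(p_old, p_new, y_recent)
print(round(w_new, 3), blended_proba(p_old, p_new, w_new))
```

Recomputing the weight on each new labeled batch gives the gradual hand-over described above; a lower `temperature` makes the switch more decisive.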

A subtle edge case arises if the drift is abrupt. For example, a sudden shift in user interface might trigger behavior changes overnight. A monthly retraining cycle might be too slow to capture such immediate changes. In that case, you might need a more rapid or even real-time mechanism. Conversely, if drift is only gradual, a less frequent retraining schedule might suffice to balance cost and accuracy.
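For abrupt drift, one common lightweight mechanism (an assumption here, not a method from the original answer) is to monitor the model's error on recently labeled predictions and trigger retraining when it rises clearly above the level seen at validation time. `ErrorRateMonitor` is a hypothetical helper; in a trust-prediction setting labels may arrive with a delay, so the window only fills as outcomes are observed.

```python
from collections import deque

class ErrorRateMonitor:
    """Signals retraining when the error rate over the last `window` labeled
    predictions exceeds the baseline error by more than `margin`."""

    def __init__(self, baseline_error: float, window: int = 500, margin: float = 0.05):
        self.baseline_error = baseline_error
        self.margin = margin
        self.recent = deque(maxlen=window)

    def update(self, y_true: int, y_pred: int) -> bool:
        self.recent.append(int(y_true != y_pred))
        if len(self.recent) < self.recent.maxlen:
            return False  # not enough labeled outcomes yet
        return sum(self.recent) / len(self.recent) > self.baseline_error + self.margin

monitor = ErrorRateMonitor(baseline_error=0.10, window=10, margin=0.05)
signals = [monitor.update(1, 1) for _ in range(10)] + [monitor.update(1, 0) for _ in range(3)]
print(signals.index(True))  # first position at which retraining is signalled
```

The window size trades reaction speed against false alarms: a short window reacts within a few labeled outcomes, while a long one smooths out noise but reacts more slowly.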