ML Interview Q Series: Large-scale distributed training splits the cost function, causing issues like stale gradients. How are these addressed?

📚 Browse the full ML Interview series here.

Hint: Consider asynchronous parameter updates, gradient averaging, and synchronization.

Comprehensive Explanation

When training large models on distributed systems, the computational workload is divided among multiple workers. Each worker processes a subset of data and computes the corresponding gradients. Although this parallelization can significantly speed up training, it also introduces certain complications. One of the most critical issues is the possibility of "stale gradients." Stale gradients occur when different workers compute their gradients based on older versions of the model parameters, leading to updates that do not reflect the most recent state of the model.

In synchronous training, all workers wait until every other worker has finished computing gradients for a given iteration, then an averaged gradient update is applied. This ensures consistency at each training iteration but can be relatively slow if one worker is slow (the so-called "straggler" problem). In asynchronous training, workers update parameters as soon as they finish computing their gradients, without waiting for others. This can make training faster, but it also increases the risk of stale gradients because workers might be using outdated parameter values to compute their updates.

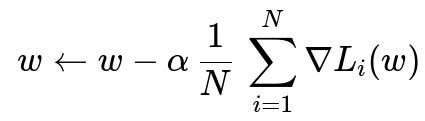

A typical approach for distributed training is to assume a model with parameter vector w, and each worker i computes the local gradient for its assigned mini-batch of data. One way to represent a synchronous update rule is:

Here, N is the number of workers, alpha is the learning rate, and L_{i}(w) is the local loss function computed by the i-th worker on its data subset. The summation of gradients is collected (e.g., via a parameter server, or some other communication mechanism like all-reduce), averaged, and then a single global update is applied to w. Synchronous frameworks wait for all N updates before computing the average, thus ensuring that each iteration uses consistent gradients from the same parameter version. This approach eliminates stale gradients at a particular iteration but might slow down overall throughput if one worker is significantly delayed.

Asynchronous frameworks attempt to improve throughput by not waiting for every worker at each iteration. Each worker pulls a current version of w from the parameter server, computes its local gradient, and pushes the update back to the server. Since some workers may take longer than others, by the time their gradients arrive at the server, the parameter vector w might have been updated already by other, faster workers, creating stale gradients. Modern deep learning frameworks mitigate this by techniques such as bounded staleness (limiting how old the parameters can get before a worker must refresh them) or by using advanced optimizers that adjust learning rates and momentum terms to reduce the negative impact of delayed updates.

Frameworks such as TensorFlow, PyTorch, and those built on top of Horovod or Distributed Data Parallel modules often provide built-in support for synchronous or asynchronous parameter updates. They handle synchronization and gradient averaging automatically in the backend, allowing users to focus more on the model itself rather than the intricate details of distributed communication.

Why Stale Gradients Occur

Stale gradients primarily happen because of asynchrony in reading the parameters, computing the gradient, and writing the updated parameters. If a worker reads w at time t, then calculates gradients and updates at time t+delta, the parameters may have been modified by other workers during that time, causing a mismatch between the version of w used to compute the gradient and the version that eventually gets updated.

How Frameworks Address Stale Gradients

Frameworks address this problem by controlling the trade-off between synchronization frequency and performance. In a fully synchronous approach, the staleness is eliminated at a cost of increased idle time. In an asynchronous approach, the framework might implement:

• Bounded Staleness: A version check ensures that a worker's gradient won't be applied if it's too old compared to the latest version. • Adaptive Optimizers: Techniques like momentum or adaptive learning rates (e.g., Adam) can help mitigate the effect of stale gradients. • Hybrid Methods: Some systems combine synchronous mini-batches with occasional asynchronous updates, or they partition the batch updates into subsets to reduce total waiting time.

Real-World Considerations

Real distributed systems have network latency, unbalanced loads on different machines, or differing computational capacities. This can exacerbate staleness or straggling. Advanced frameworks often leverage efficient collective communication libraries, such as NCCL (NVIDIA Collective Communications Library), to accelerate gradient aggregation and reduce these bottlenecks.

Potential Follow-up Questions

How do asynchronous updates cause stale gradients in more detail?

Asynchronous updates occur when each worker uses whichever version of the model parameters is currently available. By the time a slower worker completes its gradient computation, the "true" parameters may have changed multiple times. When this worker finally pushes its gradients, those gradients are misaligned with the current parameters.

A direct result is that the parameter update might push the model in a direction that was optimal for an older parameter set but may be suboptimal or even detrimental to the current model state. This is called the "staleness" of gradients.

What is the difference between synchronous and asynchronous distributed training?

The key difference lies in whether workers must wait for each other at each iteration. In synchronous training, all workers compute gradients, a central process aggregates them, and then the global model parameters are updated once. This ensures that all updates correspond to the same model version.

In asynchronous training, workers do not wait. As soon as a worker has a gradient, it applies that gradient update to the global parameters. This can speed up throughput but may introduce stale gradients. Synchronous training typically yields more stable convergence, while asynchronous training can be faster but sometimes converges to slightly worse solutions or takes more iterations.

Why might synchronous training be preferred in some cases?

Synchronous training can offer more stable and predictable convergence behaviors because all updates are computed using the same model version. Some deep learning tasks and certain architectures are sensitive to large asynchrony, so requiring all workers to stay in sync can help ensure uniform progress. Synchronous approaches also simplify hyperparameter tuning in many cases because the behavior is more deterministic.

Why might asynchronous training be preferred in other cases?

Asynchronous training can minimize idle time and potentially make better use of hardware resources by allowing faster workers to proceed without waiting for slower ones. In large clusters, where any single worker might occasionally become slow due to network issues or resource contention, asynchronous methods can offer a major speed advantage.

What is gradient averaging?

Gradient averaging is the process by which workers compute their local gradients, sum or average these gradients, and then apply one global update. In synchronous methods, gradient averaging is typically straightforward: all workers wait, gather the gradients, take the mean, and apply. In asynchronous settings, each worker may apply its gradient independently, or there may be a parameter server that aggregates and averages gradients over some time window. The key idea is that the final parameter update reflects contributions from multiple mini-batches across different workers.

What is the parameter server architecture?

In a parameter server architecture, one or more nodes act as the "server" that stores the global model parameters. Worker nodes retrieve parameter values from the server, compute gradients on their local data, and then send those gradients back to the server, which updates the global model accordingly. This approach can be implemented in both synchronous and asynchronous fashions. However, in asynchronous settings, it is possible for different workers to read or write to the server at different times, leading to potential staleness issues if not managed properly.

How do newer frameworks like Horovod or PyTorch Distributed Data Parallel handle this?

Horovod and Distributed Data Parallel typically rely on high-performance collective operations (e.g., NCCL) for allreduce-based updates. Instead of having a designated parameter server, these frameworks use a peer-to-peer approach in which each worker holds a copy of the model parameters, and all workers exchange gradients directly. This can be more scalable because it eliminates the parameter server bottleneck, though synchronization strategies vary. Many of these frameworks default to synchronous allreduce, ensuring that each worker applies the same aggregated gradient. Some also offer asynchronous modes, though these are less commonly used in standard libraries because synchronous allreduce is simpler to configure for stable training.

What are the trade-offs between ring allreduce and a parameter server?

Ring allreduce is a technique that involves passing gradients in a ring-like structure among workers, accumulating sums, and then distributing the final average back to all workers. This can be highly efficient in bandwidth-limited environments because it avoids having all workers communicate with a central server.

The parameter server architecture can be more flexible for asynchronous updates, but in large-scale contexts it can become a bottleneck if too many workers communicate at once. Modern distributed deep learning often employs ring allreduce or tree-based allreduce algorithms for better scalability.

How can one mitigate the negative impact of stale gradients?

Reducing learning rates can sometimes help because any misalignment in parameter updates has a smaller effect. Using momentum-based or adaptive optimizers can also stabilize convergence. In asynchronous training, one can implement bounded staleness, so that any gradient older than a certain version difference is discarded. Gradient clipping is another technique to limit large, potentially harmful updates when staleness is high.

How does synchronization strategy affect model convergence?

In synchronous training, each step is consistent with the same model version, which usually yields stable training curves. In asynchronous training, the training curve might show higher variance, but overall speed to solution could be faster. The final convergence quality depends on many factors, including learning rates, batch sizes, the scale of the dataset, and how well the algorithm handles stale updates.

What if there are straggler nodes in synchronous training?

Straggler nodes—workers that lag behind due to various reasons such as hardware slowdowns or network latency—can hold up the entire training process in synchronous mode. All workers must wait for the slowest one to finish before proceeding to the next iteration. Methods like backup workers, partial updates, or assigning fewer tasks to slower nodes can mitigate the problem. However, this introduces additional complexity into the synchronization logic.

Summary

Distributed training improves scalability and efficiency but also introduces challenges like stale gradients. Frameworks handle these issues through synchronous or asynchronous parameter updates, gradient averaging, and specialized communication strategies. Synchronous methods ensure consistency but can be slowed by stragglers. Asynchronous methods improve throughput at the risk of stale gradients. Modern frameworks provide well-optimized communication and update mechanisms (allreduce, parameter servers, or hybrids) that let practitioners choose which approach best suits their use case and hardware environment.

Below are additional follow-up questions

What are some differences between data parallelism and model parallelism in distributed training, and how can stale gradients manifest differently in each scenario?

Data parallelism splits the dataset across workers so that each worker processes a distinct mini-batch of data. All workers hold a full copy of the model, compute local gradients, and aggregate them. Stale gradients arise when different workers compute updates based on slightly out-of-date parameters. This is often a result of asynchronous gradient updates or network/processing delays.

Model parallelism, on the other hand, partitions the model’s parameters or layers across multiple workers, rather than partitioning the dataset. Each worker processes only a subset of the neural network’s layers for each forward and backward pass. While stale gradients can still occur if different parts of the model are updated at different times, the manifestation can be subtler because each worker’s gradients only correspond to a portion of the model. An out-of-sync parameter in one part of the network can influence how the subsequent stages compute activations and gradients.

Potential pitfalls and edge cases: • If certain parts of the model are significantly more computationally expensive than others, one worker might lag behind, forcing synchronous methods to wait for that worker, or in asynchronous methods to apply out-of-date gradient slices. • Communication overhead can be very different for model parallelism vs. data parallelism. For example, model parallelism may require large cross-device communication of activations, not just gradients. This can exacerbate staleness if partial updates happen at different times. • Debugging becomes trickier when you need to identify which slice of the model had stale updates, especially if layers are partitioned unevenly.

How do frameworks handle partial worker failures in an asynchronous setting, and what kind of staleness issues can arise if certain workers temporarily drop out?

In asynchronous distributed training, workers typically communicate their gradients to a central parameter store or perform decentralized updates. If a worker fails (e.g., hardware crash, network failure) or becomes temporarily unreachable, its gradient contribution may be missing for several iterations. The parameter server or distributed algorithm can either proceed without that worker’s updates (skipping them entirely) or wait for a configurable period before discarding them.

Staleness issues arise if a recovered worker tries to apply gradients computed on older parameter versions. Some frameworks implement checks on parameter version numbers. If the difference between the worker’s version and the current version is beyond a threshold, those gradients may be rejected or scaled down. This approach, sometimes called bounded staleness, reduces the risk of significantly outdated updates being applied.

Potential pitfalls and edge cases: • If a cluster experiences many transient worker failures, the effective batch size could drop, altering the dynamics of training. • Bounded staleness thresholds might be too strict, discarding too many updates and slowing overall training progress. Alternatively, they could be too lenient, applying highly outdated gradients and causing instability. • Logically handling worker restarts (e.g., restoring from checkpoint) can introduce version mismatches if not carefully managed.

How does gradient scaling in large-batch training relate to stale gradient issues?

In large-batch training, the global batch size may be extremely high (e.g., thousands or tens of thousands of samples) to maximize hardware utilization. Practitioners often scale the learning rate accordingly. When a system is distributed, the total effective batch is the sum of all mini-batches processed by each worker.

Gradient scaling refers to the practice of adjusting hyperparameters—particularly the learning rate—proportionally with the batch size. This ensures the magnitude of parameter updates remains reasonable. However, when there are stale gradients, the assumption about synchronized progress in the large-batch setting can be partially violated. A worker might be computing gradients on an earlier parameter version, effectively using a large-batch learning rate but with outdated parameters.

Potential pitfalls and edge cases: • If the learning rate is scaled too aggressively, stale gradients can have a larger detrimental effect, possibly causing overshooting or unstable updates. • When combining gradient scaling with adaptive optimizers, the interplay between momentum buffers and stale gradients can amplify errors from out-of-date parameters. • Tuning gradient scaling for a system with dynamic membership (workers joining/leaving) becomes more complicated and may require on-the-fly recalibration.

How can stale gradients potentially lead not just to slower convergence but also to divergence or numerical instability?

In typical scenarios, stale gradients lead to slower convergence. But under certain conditions, they can cause divergence or explosive parameter updates. If a worker’s gradient is severely out-of-date but still applied at a high learning rate, the parameter update could push the model parameters into a regime that is far from the local optimum, causing subsequent gradient calculations to blow up in magnitude.

Potential pitfalls and edge cases: • If an asynchronous system has a large learning rate and high latency, multiple workers might accumulate large updates that get applied nearly simultaneously once their gradients arrive, resulting in extreme parameter jumps. • Non-convex architectures, such as deep networks with large numbers of parameters, can have highly sensitive loss landscapes. Even a single large, stale gradient step can push the parameters into a saddle point or region of instability. • Floating-point precision issues can become more pronounced if layer activations explode, leading to NaN gradients.

How might distributed reinforcement learning (RL) amplify stale gradient problems compared to supervised learning?

Distributed RL often involves multiple actors exploring the environment in parallel, collecting experiences, and sending them to one or more learners. The learners update policy or value function networks based on incoming experiences. Staleness can be more pronounced in RL for two primary reasons:

• Non-stationary Data Distribution: The environment may change or the policy might shift significantly as training progresses, so experiences collected by different actors can be out-of-date representations of the environment or policy. • Delayed Updates: If multiple actors are sending gradients or parameter updates asynchronously, each actor could be “behind” on the newest policy. This can lead to suboptimal exploration or exploitation because some actors might be acting on stale policies for many steps.

Potential pitfalls and edge cases: • If the environment is highly non-stationary, stale policy parameters can lead to heavily biased data collection. This can slow down or even halt learning. • Divergence is possible if different actors explore contradictory policies that are combined incoherently at the learner. • Communication overhead and latency in RL setups (especially in large simulations or real-time control tasks) might be higher or more variable, aggravating staleness.

What are some ways to measure or detect the extent of gradient staleness in a distributed training system?

Frameworks typically keep track of parameter versions or iteration counters. One measurement approach is to log, for each gradient application, the difference between the gradient’s version (i.e., which model state it was computed from) and the current model version. By monitoring these statistics, one can assess how old the gradients are on average.

Another method is to measure the time lag between when a parameter update was initiated and when it was finally applied. For instance, you could track timestamps of each step at the worker and at the parameter server. Large gaps indicate staleness.

Potential pitfalls and edge cases: • Instrumentation overhead: Frequent logging or collecting fine-grained metrics can affect performance. One might need to sample these metrics rather than record them for every single update. • Interpreting staleness metrics: A certain amount of staleness might be acceptable if the learning rate is low or if the model is robust to delayed updates. But without a clear baseline, deciding what is too stale can be subjective. • Non-uniform staleness: Some workers might consistently produce more stale gradients than others, so it’s essential to look at distribution statistics, not just averages.

When operating in a decentralized (peer-to-peer) environment without a parameter server, how do stale gradients arise, and can they be mitigated?

In a decentralized training environment, each worker holds a local copy of the parameters. They typically use collective operations (e.g., allreduce or gossip-based protocols) to share and update gradients. Stale gradients occur if a node is slow to communicate or processes data at a different rate than peers. By the time it shares its gradient, other nodes may already have performed multiple updates based on more recent parameter states.

Mitigation can occur through carefully timed synchronization rounds (e.g., synchronous allreduce after a certain number of local steps) or bounded staleness gossip protocols (workers only accept updates from peers that are within a certain iteration window). Advanced gossip-based approaches can also integrate momentum or adapt the size of updates to reduce the negative impact of stale gradients.

Potential pitfalls and edge cases: • Partitioning of the network: If a subset of workers is temporarily disconnected from the rest, they might continue training on an outdated parameter version, rejoining later and causing a significant parameter mismatch. • Managing version control in a decentralized environment is complex, as there is no single authority on the “current” version of the parameters. • Gossip-based algorithms can have hyperparameters (e.g., how often to communicate, how many neighbors to contact, etc.) that strongly affect staleness and throughput.

How is checkpointing handled in large-scale systems, and can stale parameters or gradients affect restarts from checkpoints?

In large-scale distributed training, periodic checkpoints are taken to persist the current state of the model (and possibly optimizer states like momentum buffers). If a failure occurs, training resumes from the most recent checkpoint. Stale parameters or gradients can arise if the checkpoint does not capture consistent states from all workers. For instance, in asynchronous systems, some workers might have partially updated the global model when the checkpoint is saved. Upon restart, workers might resume from a model that is slightly out-of-sync relative to their local states.

Potential pitfalls and edge cases: • Race conditions: If a checkpoint is triggered in the middle of applying asynchronous updates, the saved parameters may not reflect a coherent global state. • Version mismatch: A worker might have local momentum buffers based on a parameter version that differs from the checkpoint’s version. This can lead to unusual jumps in parameter values after restart. • To mitigate this, frameworks often quiesce or pause updates briefly while taking a checkpoint, ensuring all workers are consistent at the moment of checkpoint creation. However, this introduces a brief but regular training delay.

How is load balancing handled in distributed training to mitigate stragglers, and are there scenarios where load balancing might introduce new staleness issues?

Load balancing often involves dynamically assigning data or computational tasks so that no single worker consistently lags behind. Techniques include: • Dynamic Batch Allocation: If one worker finishes processing its mini-batch faster, the system can assign more data to that worker in the next iteration. • Heterogeneous Resource Allocation: Workers with more powerful GPUs/CPUs or better network bandwidth might receive larger portions of the data. • Elastic Scaling: Some frameworks allow adding or removing workers on the fly based on resource availability.

Although load balancing can prevent stragglers, frequent reassignments can lead to new staleness complications. A worker might receive fresh tasks partway through an iteration while using an older parameter version. The partial overlap in mini-batch processing might result in updates that do not align precisely with the parameter state.

Potential pitfalls and edge cases: • Overly aggressive rebalancing can cause frequent data re-allocation, incurring large communication overhead and leading to more partial updates. • Workers might oscillate between being overloaded and underloaded if the load balancing heuristics aren’t tuned. This can create bursts of staleness as some workers momentarily lag before the system rebalances again. • Deterministic behavior becomes tricky. Constant shifts in data assignment and worker membership can make debugging or reproducing results more difficult.