ML Interview Q Series: Photo posts on Facebook composer dropped from 3% to 2.5%—how would you investigate the cause?

Comprehensive Explanation

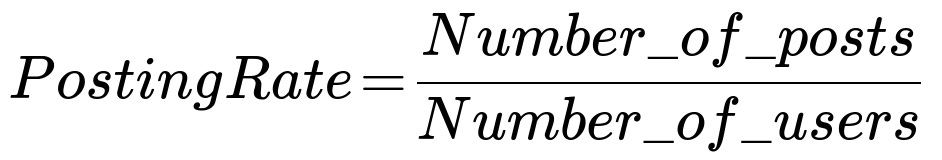

One way to conceptualize this scenario is by examining the posting rate of a certain type of content. A basic formula that you might consider when analyzing posting frequency is:

$$\text{PostingRate} = \frac{\text{Number\_of\_posts}}{\text{Number\_of\_users}}$$

Here, Number_of_posts is the number of new posts of the relevant type (in this case, photo posts) created over a given timeframe, and Number_of_users is the number of users active during that timeframe. A drop in PostingRate means fewer posts per active user. That can happen because the numerator fell (active users post fewer photos, or fewer of them post at all), because the denominator grew faster than the numerator (for example, an influx of new users who rarely post), or both. In this case the metric fell from 3% to 2.5%, a relative decline of about 17% (0.5 / 3), which is significant enough to warrant deeper investigation.
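As a quick check on the arithmetic, here is a minimal sketch that computes PostingRate and its relative change. The 1,000,000-user base and the post counts are hypothetical, chosen only to reproduce the 3% and 2.5% figures. The last case shows that the same drop can come entirely from a growing denominator.

```python
def posting_rate(num_posts: int, num_users: int) -> float:
    """PostingRate = Number_of_posts / Number_of_users for a single timeframe."""
    if num_users <= 0:
        raise ValueError("num_users must be positive")
    return num_posts / num_users


def relative_change(before: float, after: float) -> float:
    """(after - before) / before; a negative value is a decline."""
    return (after - before) / before


# Hypothetical counts chosen to reproduce the 3% -> 2.5% drop.
rate_before = posting_rate(num_posts=30_000, num_users=1_000_000)
rate_after = posting_rate(num_posts=25_000, num_users=1_000_000)
print(f"{rate_before:.2%} -> {rate_after:.2%}")  # 3.00% -> 2.50%
print(f"relative change: {relative_change(rate_before, rate_after):.1%}")  # -16.7%

# The same drop with unchanged posts: the user base grew by 20%.
rate_after_growth = posting_rate(num_posts=30_000, num_users=1_200_000)
print(f"{rate_after_growth:.2%}")  # 2.50%
```

Looking at both the numerator and the denominator early keeps you from chasing a behavior change that is really a population change.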

Initially, you would:

Investigate User Segments and Time Windows. By dividing the user base into various segments such as geography, device type, new vs. returning users, or demographic categories, you can observe where the most significant decrease is happening. For example, you might find that on Android devices the drop is steeper, or that certain regions show more pronounced declines.
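A minimal sketch of that segment comparison follows. It assumes you already have photo-post and active-user counts per segment for two periods. The segment names and numbers are hypothetical. It ranks segments by relative decline so the steepest drop surfaces first.

```python
from typing import Dict, List, Tuple

# Hypothetical input shape: segment -> (photo_posts, active_users) for one period.
Counts = Dict[str, Tuple[int, int]]


def segment_rate_changes(before: Counts, after: Counts) -> List[Tuple[str, float, float, float]]:
    """(segment, rate_before, rate_after, relative_change), steepest decline first.

    Segments present in only one of the two periods are skipped."""
    rows = []
    for segment in before.keys() & after.keys():
        posts_b, users_b = before[segment]
        posts_a, users_a = after[segment]
        rate_b, rate_a = posts_b / users_b, posts_a / users_a
        rows.append((segment, rate_b, rate_a, (rate_a - rate_b) / rate_b))
    rows.sort(key=lambda row: row[3])  # most negative relative change first
    return rows


before = {"android": (18_000, 600_000), "ios": (12_000, 400_000)}
after = {"android": (13_200, 600_000), "ios": (11_800, 400_000)}
for segment, rate_b, rate_a, rel in segment_rate_changes(before, after):
    print(f"{segment}: {rate_b:.2%} -> {rate_a:.2%} ({rel:+.1%})")
```

In this made-up data the overall rate still moves from 3% to 2.5%, but almost all of the loss sits in one segment. Small segments are noisy, so an extreme relative change in a tiny group deserves a confidence interval before it deserves a theory.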

Look at Feature/UI Changes. If there were any recent modifications to the Facebook interface, especially around how photo posts are created or shared, you would look into whether these changes inadvertently caused users to engage less. This might involve analyzing logs of how users interact with the composer.

Examine Engagement Patterns Over Time. Monitoring daily or weekly usage patterns can reveal whether the drop is a gradual, ongoing trend or a step change that coincides with a specific event or product launch. If the decline lines up with a particular release date or with a change in some external factor, that is a strong clue about the cause.
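To locate a step change in a daily series, one simple heuristic is to try every split day and keep the one with the largest shift in mean. The sketch below does exactly that; it is not a formal change-point test, and the daily rates and release day are hypothetical.

```python
from statistics import mean
from typing import List, Optional, Tuple


def largest_mean_shift(daily_rates: List[float],
                       min_window: int = 3) -> Optional[Tuple[int, float]]:
    """Return (split_index, mean_after - mean_before) for the split with the
    largest absolute shift, or None if the series is too short.

    Each side of a split keeps at least `min_window` days."""
    best = None
    for split in range(min_window, len(daily_rates) - min_window + 1):
        shift = mean(daily_rates[split:]) - mean(daily_rates[:split])
        if best is None or abs(shift) > abs(best[1]):
            best = (split, shift)
    return best


# Hypothetical daily photo-posting rates; a release ships on day index 7.
rates = [0.030, 0.031, 0.029, 0.030, 0.030, 0.031, 0.030,
         0.025, 0.024, 0.026, 0.025, 0.025, 0.024, 0.025]
split, shift = largest_mean_shift(rates)
print(split, round(shift, 4))
```

If the returned index matches a release or an external event, that is a lead worth chasing, not proof of cause.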

Compare with Control Groups or A/B Tests. Platforms often roll out UI changes or back-end modifications to a subset of users first. If you have groups that were and were not exposed to a new photo-posting feature, comparing them gives a direct read on its effect: if exposure was assigned at random, a difference in photo posting between the groups can be attributed to the change rather than merely correlated with it.
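For a randomized comparison, a two-proportion z-test is one standard way to check whether the gap between groups is larger than chance. The sketch assumes the metric is the share of users who posted at least one photo, that users are independent, and that both groups are large enough for the normal approximation. The counts are hypothetical.

```python
import math
from typing import Tuple


def two_proportion_ztest(posters_a: int, users_a: int,
                         posters_b: int, users_b: int) -> Tuple[float, float, float]:
    """Two-sided z-test for a difference in the share of users who posted a photo.

    Returns (p_b - p_a, z, p_value) using the pooled normal approximation."""
    p_a = posters_a / users_a
    p_b = posters_b / users_b
    pooled = (posters_a + posters_b) / (users_a + users_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / users_a + 1 / users_b))
    z = (p_b - p_a) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) equals erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return p_b - p_a, z, p_value


# Hypothetical: control (a) vs. users exposed to a new photo flow (b).
diff, z, p = two_proportion_ztest(3_000, 100_000, 2_500, 100_000)
print(f"diff={diff:+.3%}  z={z:.2f}  p={p:.2g}")
```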

Check for External Influences. Sometimes, an external factor like a network outage, increased data costs, or changes in smartphone usage patterns can reduce photo uploads. A competitor’s new release might also lure people away from sharing photos on Facebook, so it is wise to monitor competitive shifts in user behavior.

Because the drop is specific to photo posts, you would investigate whether new friction has appeared in the photo-posting flow. This could involve checking whether the upload flow is working correctly, whether image quality or load times have degraded, or whether users’ privacy settings or defaults changed.

How to Narrow Down the Photo Post Drop

After establishing that the drop is concentrated in photo posts, you would delve deeper into areas that can specifically affect photo creation and sharing:

UI or Workflow Friction. If users must now navigate more steps to publish a photo, or if the upload button is not as prominent, these factors might discourage photo posting.

Technical Glitches. In some cases, server errors or app crashes may go unnoticed if not carefully tracked. You would confirm that the photo upload server and the phone’s camera integrations are functioning as intended.

User Sentiment. Gathering feedback through surveys or user research can highlight whether there is a negative perception of the new photo-posting process or a preference shift to other platforms.

Competitive Behavior. Instagram or TikTok might be drawing away users who previously posted photos on Facebook. If there is a general shift toward other forms of media on competing services, it could explain the drop.

App Performance Metrics. Analyze logs for image upload performance, error rates, and page load speeds. Even a slight slowdown, or new bugs on certain devices or OS versions, could cause a measurable drop in usage.

Potential Follow-Up Questions

What methods would you use to differentiate a genuine user behavior shift from a measurement or data collection error?

One approach is to run sanity checks on data pipelines. If a new logging framework was introduced, or if an analytics platform changed how it counts users, you might be seeing an artifact of measurement rather than an actual usage shift. You could compare the data from multiple independent sources, such as server logs versus client-side events, to confirm consistency. If both data sources indicate the same drop, it is likely a real behavior change. If not, the discrepancy might reveal a tracking issue.
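A minimal sketch of the cross-source check, assuming you can pull daily photo-post counts from both server logs and client-side events. The dates, counts, and the 5% tolerance are hypothetical.

```python
from typing import Dict, List


def flag_source_mismatches(server: Dict[str, int], client: Dict[str, int],
                           tolerance: float = 0.05) -> List[str]:
    """Days where client-side and server-side photo-post counts disagree by more
    than `tolerance`, measured relative to the server count."""
    flagged = []
    for day in sorted(server.keys() | client.keys()):
        s, c = server.get(day, 0), client.get(day, 0)
        if s == 0:
            # No server posts: any client-side count is itself a discrepancy.
            if c != 0:
                flagged.append(day)
        elif abs(c - s) / s > tolerance:
            flagged.append(day)
    return flagged


# Hypothetical daily counts: the server sees steady volume, the client does not.
server_counts = {"2024-05-01": 1000, "2024-05-02": 990, "2024-05-03": 1005}
client_counts = {"2024-05-01": 985, "2024-05-02": 975, "2024-05-03": 800}
print(flag_source_mismatches(server_counts, client_counts))
```

A client-side drop that the server does not see points toward a logging or instrumentation change rather than a change in user behavior.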

How would you handle the possibility of seasonal trends affecting posting behavior?

Seasonality can be a common reason for changes in user metrics, especially around holidays or exam periods for certain demographics. You would compare the current month’s data with the same period in previous years. If you detect that in every similar month in previous years the posting rate declined slightly, this year’s drop might be consistent with a known pattern. If the drop is more severe than seasonal norms, that indicates a new factor is at play.
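One rough way to quantify “more severe than seasonal norms” is a z-score of this year’s change against the same calendar window in past years. The sketch assumes relative changes measured over identical windows; the history values are hypothetical. With only a few years of data this is a rough guide, not a significance test.

```python
from statistics import mean, stdev
from typing import List


def seasonal_z_score(current_change: float, past_changes: List[float]) -> float:
    """How unusual this year's change is relative to the same window in past years.

    Changes are relative (e.g. -0.167 for a 16.7% drop). Needs at least two past
    years whose changes are not all identical."""
    if len(past_changes) < 2:
        raise ValueError("need at least two past years")
    spread = stdev(past_changes)
    if spread == 0:
        raise ValueError("past changes have zero spread")
    return (current_change - mean(past_changes)) / spread


# Hypothetical: the same month dipped 3-5% in each of the last three years.
history = [-0.04, -0.05, -0.03]
print(round(seasonal_z_score(-0.167, history), 1))  # far outside the usual dip
print(round(seasonal_z_score(-0.045, history), 1))  # consistent with seasonality
```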

If you suspected a new feature rollout caused the drop in photo posts, how would you validate that hypothesis?

One direct way is to compare cohorts of users who received the new feature against those who did not. By tracking photo post frequency in both groups, you can see whether users with the new feature behave significantly differently. You would also examine the event logs during photo posting to identify any added steps or errors that might explain friction. If the difference between cohorts clearly tracks the rollout, you can then fix the issue, revert the change, or refine the feature to reduce friction.
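When cohorts were not randomly assigned (for example, a staged rollout by region), difference-in-differences is a common way to net out changes that hit everyone. This is a minimal sketch assuming you have each cohort’s photo-posting rate before and after the rollout. The numbers are hypothetical. The estimate is only meaningful if both cohorts would have followed parallel trends without the feature.

```python
def difference_in_differences(treat_before: float, treat_after: float,
                              control_before: float, control_after: float) -> float:
    """Change in the exposed cohort minus change in the unexposed cohort.

    Subtracting the control cohort's change removes shifts that affected everyone
    (seasonality, outages), assuming both cohorts would otherwise trend in parallel."""
    return (treat_after - treat_before) - (control_after - control_before)


# Hypothetical photo-posting rates before and after the rollout.
effect = difference_in_differences(treat_before=0.030, treat_after=0.024,
                                   control_before=0.030, control_after=0.029)
print(f"estimated feature effect: {effect:+.2%}")
```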

Could a decline in photo posts be related to a shift toward other content formats?

It is possible that people are posting more text-based updates, videos, or ephemeral stories, thereby reducing photo posts specifically. You would verify whether users have stayed on the platform but changed the format of their posts. If the total number of posts remains steady, and the only decline is in photo posts, it implies a shift in user preference. On the other hand, if all forms of posting have dropped, that suggests a broader engagement issue rather than a problem limited to photo sharing.
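The decision rule in that answer can be written down directly. The sketch below assumes post counts by format for two comparable periods; the format names, counts, and the 5% tolerance on total volume are hypothetical.

```python
from typing import Dict


def diagnose_format_shift(before: Dict[str, int], after: Dict[str, int],
                          total_tolerance: float = 0.05) -> str:
    """Classify a photo-post decline using post counts by format for two periods.

    If total posts held within `total_tolerance` (or grew) while photo posts fell,
    users likely switched formats; if totals fell too, engagement dropped broadly."""
    photo_change = (after.get("photo", 0) - before["photo"]) / before["photo"]
    total_before, total_after = sum(before.values()), sum(after.values())
    total_change = (total_after - total_before) / total_before
    if photo_change >= 0:
        return "no photo decline"
    if total_change >= -total_tolerance:
        return "format shift"
    return "broad decline"


before_counts = {"photo": 30_000, "text": 50_000, "video": 20_000}
after_shift = {"photo": 25_000, "text": 52_000, "video": 23_000}
after_broad = {"photo": 25_000, "text": 42_000, "video": 17_000}
print(diagnose_format_shift(before_counts, after_shift))
print(diagnose_format_shift(before_counts, after_broad))
```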

How would you diagnose an issue with mobile operating systems or cameras that might reduce photo creation or uploads?

You might break down the photo post data by mobile OS version, device model, and app version. If you see that iOS version X or a certain Android release shows a stronger decline, you would investigate potential incompatibilities with the camera interface or the file upload API. Crash logs and error monitoring systems would help you see if certain devices are failing more often when initiating or finalizing a photo post.

If a correlation is detected, you could replicate the bug on test devices to confirm it. Resolving the bug or providing a patch for the problematic OS version could restore the posting rate.
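A minimal sketch of the OS-version breakdown, assuming each photo-post attempt is logged with an OS version and a success flag. The version labels, counts, and the “twice the median” threshold are hypothetical choices.

```python
from collections import defaultdict
from statistics import median
from typing import Dict, Iterable, List, Tuple


def failure_rates(attempts: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """attempts: (os_version, succeeded) for each photo-post attempt."""
    totals: Dict[str, int] = defaultdict(int)
    failures: Dict[str, int] = defaultdict(int)
    for version, succeeded in attempts:
        totals[version] += 1
        if not succeeded:
            failures[version] += 1
    return {v: failures[v] / totals[v] for v in totals}


def outlier_versions(rates: Dict[str, float], factor: float = 2.0) -> List[str]:
    """Versions whose failure rate exceeds `factor` times the median across versions."""
    baseline = median(rates.values())
    return sorted(v for v, r in rates.items() if r > factor * baseline)


# Hypothetical logs: 100 attempts per version with 2, 3 and 20 failures.
attempts = ([("os-14", i >= 2) for i in range(100)]
            + [("os-15", i >= 3) for i in range(100)]
            + [("os-16", i >= 20) for i in range(100)])
rates = failure_rates(attempts)
print(rates, outlier_versions(rates))
```

A flagged version is the natural candidate for reproducing the bug on test devices.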

How do you ensure that solutions to address a posting drop do not negatively affect the rest of the product experience?

Testing each proposed solution in controlled experiments is often the best practice. A/B test changes to the UI, the posting workflow, or alternative ways of prompting users to share photos. Monitor not only the change in photo post volume but also overall user satisfaction, retention, and revenue. It is essential to confirm that fixing one issue (like friction in photo posting) does not degrade other aspects of the user experience.
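Guardrail checks like these are easy to automate. The sketch below compares treatment and control on a few metrics and reports any whose relative drop exceeds an allowed threshold. The metric names, values, and thresholds are hypothetical, every metric is assumed to be “higher is better”, and a real readout would also test each difference for statistical significance.

```python
from typing import Dict, List


def guardrail_violations(control: Dict[str, float], treatment: Dict[str, float],
                         max_relative_drop: Dict[str, float]) -> List[str]:
    """Guardrail metrics whose relative drop (treatment vs. control) exceeds the allowed amount.

    Assumes every guardrail metric is 'higher is better'."""
    violations = []
    for metric, allowed in max_relative_drop.items():
        change = (treatment[metric] - control[metric]) / control[metric]
        if change < -allowed:
            violations.append(metric)
    return violations


# Hypothetical experiment readout: the fix lifts photo posting but costs revenue.
control = {"photo_post_rate": 0.025, "retention": 0.60, "revenue_per_user": 5.0}
treatment = {"photo_post_rate": 0.029, "retention": 0.59, "revenue_per_user": 4.6}
print(guardrail_violations(control, treatment,
                           {"retention": 0.02, "revenue_per_user": 0.02}))
```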

By addressing these aspects in detail—technical, product, user experience, measurement, and external factors—you can more precisely determine why photo posts have declined and effectively strategize how to mitigate or reverse the trend.

Below are additional follow-up questions

How could you investigate if a specific subset of users (for instance, new sign-ups or users in emerging markets) is disproportionately contributing to the decline in photo posts?

You could segment the user base according to relevant attributes—such as user tenure, region, device type, or engagement history—and compare the change in photo posting rates within each segment. For instance, you might find that the newest users, those who have just joined in the past month, are barely posting photos, while long-term users still post at their usual rate. Or you may spot a sudden drop concentrated in a single geographic region that experienced a network outage or economic constraints (like data cost hikes).

A potential pitfall is overlooking subtle correlations, such as user migration patterns within a region or changes in local events. Even though overall posting is dropping, you might miss that the decline is driven by, say, Android users in a certain city suffering from poor network coverage. Another edge case is that the segment you suspect (like brand-new users) might actually be growing, so the percentage of total posts from that segment changes simply because of volume, not due to a change in their behavior.

Investigating deeper involves cross-referencing multiple data sources. For example, track device logs, check region-based bandwidth usage, or analyze sign-up cohorts for changes in the proportion of “spontaneous posters” (users who post frequently within the first few days). By layering multiple viewpoints, you can isolate and confirm if one specific user group truly explains the downward trend.
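The sign-up cohort view can be sketched as follows. For simplicity it treats an “early poster” as a user who posted at least one photo within the first N days after signing up, which is a simplifying assumption rather than the exact definition above. Cohorts are ISO sign-up weeks, and the users are hypothetical.

```python
from collections import defaultdict
from datetime import date
from typing import Dict, Iterable, Optional, Tuple


def early_poster_share(users: Iterable[Tuple[date, Optional[date]]],
                       days: int = 7) -> Dict[str, float]:
    """Share of each ISO sign-up-week cohort that posted a photo within `days` days.

    users: (signup_date, first_photo_post_date or None) per user."""
    totals: Dict[str, int] = defaultdict(int)
    early: Dict[str, int] = defaultdict(int)
    for signup, first_post in users:
        year, week, _ = signup.isocalendar()
        cohort = f"{year}-W{week:02d}"
        totals[cohort] += 1
        if first_post is not None and 0 <= (first_post - signup).days < days:
            early[cohort] += 1
    return {c: early[c] / totals[c] for c in sorted(totals)}


# Hypothetical users from two sign-up weeks.
users = [
    (date(2024, 5, 6), date(2024, 5, 7)),    # first photo one day after sign-up
    (date(2024, 5, 6), None),                # never posted a photo
    (date(2024, 5, 13), date(2024, 5, 30)),  # first photo 17 days later
    (date(2024, 5, 14), None),
]
print(early_poster_share(users))
```

A falling share in recent cohorts, with older cohorts unchanged, would point to the onboarding experience rather than to existing users.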

Could a change in Facebook’s news feed ranking or content recommendation algorithm inadvertently reduce photo posting?

When the ranking algorithm shifts, it might prioritize certain types of content over photos, or it might surface fewer stories from users who typically share photos. If users notice their photos get less engagement or fewer reactions, they could become discouraged from future posting.

One subtle issue is that many people do not consciously notice a small drop in engagement, but it can still influence posting frequency in aggregate. Another pitfall is incorrectly attributing the decline to the ranking algorithm when, in fact, a different factor—such as an unrelated UI change—may be driving the trend.

To investigate, you can compare posting rates before and after the algorithm change, specifically focusing on photo content. You might track the average engagement per photo and see if it drastically declined after the new algorithm was introduced. Further, you could identify whether a test cohort not exposed to the new ranking logic maintained the same posting rate while another cohort (with the new ranking) saw a decline. That differential can confirm whether ranking changes contribute to the drop.

Could recent updates to user privacy settings or security prompts have caused friction in photo sharing?

Sometimes, platforms roll out new security prompts or more stringent privacy checks. Users might need to confirm certain permissions, select audience settings repeatedly, or see more frequent pop-ups about how their data is used. This friction can subtly discourage them from posting.

One edge case is that certain user segments—like minors or those under stricter privacy regulations—may be impacted disproportionately if enhanced compliance measures require more manual steps. Another nuance is that some privacy updates are region-specific, due to local laws or GDPR-like rules, which may lead to a concentrated drop in certain markets.

Diagnosing this requires analyzing how many users encountered additional permission prompts or privacy disclosures right before posting. You can check if the drop correlates with these privacy-related steps and see if there is an uptick in incomplete post flows at the last step. If you find a strong correlation, you could experiment with more transparent and frictionless privacy flows to see if that recovers the posting rate.
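A minimal funnel sketch, assuming you can count users reaching each step of a simplified posting flow, split by whether they saw the new prompt. The step names and counts are hypothetical. Because prompts may be region-specific, compare groups within the same region, or use an experiment, before attributing the gap to the prompt.

```python
from typing import List, Sequence, Tuple


def step_conversions(counts: Sequence[int]) -> List[float]:
    """Conversion rate between consecutive funnel steps (0.0 if a step has no users)."""
    return [nxt / cur if cur else 0.0 for cur, nxt in zip(counts, counts[1:])]


def largest_gap(steps: Sequence[str], group_a: Sequence[int],
                group_b: Sequence[int]) -> Tuple[str, float]:
    """Transition where group_b converts worst relative to group_a."""
    conv_a, conv_b = step_conversions(group_a), step_conversions(group_b)
    gaps = [(f"{steps[i]} -> {steps[i + 1]}", conv_b[i] - conv_a[i])
            for i in range(len(conv_a))]
    return min(gaps, key=lambda g: g[1])


# Hypothetical funnel counts for users who did / did not see the new privacy prompt.
steps = ["open_composer", "select_photo", "publish"]
no_prompt = [10_000, 7_000, 6_300]
saw_prompt = [10_000, 7_000, 4_900]
print(largest_gap(steps, no_prompt, saw_prompt))
```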

How might macro-level user behavior shifts, like moving from desktop to mobile or from mobile to wearable devices, affect photo posting frequency?

If a user shifts from a desktop interface where they post long-form status updates to a phone or smartwatch environment that is more geared toward ephemeral or simplified interactions, the nature of their posting might change. Smartphones do encourage photo sharing in many cases, but if your user base moves to even smaller devices, like wearables, capturing and uploading photos could become less convenient.

A hidden pitfall is assuming that smartphone usage uniformly increases photo posting. While many new phone features simplify sharing, certain phone models or OS versions may have camera limitations or app-related bugs that hurt photo quality or hamper uploads. Another subtlety is that as people switch devices, their habits for “quick share” might involve ephemeral stories or direct messages, bypassing the public posting channel.

One approach is to analyze post creation events specifically by device category or screen size. Confirm whether usage from large-screen devices is declining and wearable usage is rising. You might find that the total number of photos taken remains the same (or even grows), but fewer are being posted publicly. Tracking public post creation against private or story-driven shares can highlight if there is a shift away from feed-based photo posting.

Is it possible that users are receiving too many content creation prompts, leading to “prompt fatigue” and a decrease in overall posting?

Platforms sometimes push “Create a post” or “Share a memory” reminders. Though these can boost short-term engagement, over time they can become noise, causing some users to tune out or even feel pressured, which might discourage them from sharing content organically.

One pitfall is that measuring the direct effect of prompt fatigue is tricky. Reminders might still appear successful initially, only for a slow decline in user goodwill to surface after multiple weeks. Another subtlety is that certain user groups are more sensitive to these reminders—for instance, infrequent posters might find them annoying while power users are unaffected.

To investigate, analyze how many prompt notifications users receive and the subsequent posting rate of those notified compared to those not. You could run an A/B test where one group receives fewer or more targeted prompts and see if that group’s photo posting frequency remains higher over time than users who were bombarded with prompts. This data clarifies whether prompt fatigue is a factor.
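Before running the A/B test, an observational first look is to bucket users by how many prompts they received and compare photo-posting rates. The bucket boundaries and data below are hypothetical. Because prompts are often targeted (for example, at lapsed users), this view is confounded, which is why the randomized test is still needed.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def prompt_bucket(n: int) -> str:
    """Hypothetical bucket boundaries for prompts received in the last 30 days."""
    if n == 0:
        return "0"
    if n <= 2:
        return "1-2"
    if n <= 5:
        return "3-5"
    return "6+"


def posting_rate_by_bucket(users: Iterable[Tuple[int, bool]]) -> Dict[str, float]:
    """users: (prompts_received, posted_photo_afterwards) per user."""
    totals: Dict[str, int] = defaultdict(int)
    posted: Dict[str, int] = defaultdict(int)
    for prompts, did_post in users:
        bucket = prompt_bucket(prompts)
        totals[bucket] += 1
        posted[bucket] += int(did_post)
    return {b: posted[b] / totals[b] for b in totals}


users = ([(0, True)] * 30 + [(0, False)] * 970
         + [(2, True)] * 40 + [(2, False)] * 960
         + [(8, True)] * 15 + [(8, False)] * 985)
print(posting_rate_by_bucket(users))
```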

How can third-party integrations (such as Instagram cross-posting or sharing from other photo-editing apps) impact the decline in photo posts?

If your platform previously allowed users to quickly cross-post from Instagram or a popular photo-editing tool, but some integration broke or changed policies, it might result in fewer photos being shared on Facebook. Alternatively, a competitor might have introduced an exclusive partnership, funneling photo shares to another platform.

An edge case is that the integration might still exist but has hidden bugs only affecting certain OS versions. Users might keep trying to cross-post and fail, leading them to give up. Another subtle issue is that a policy or API change might limit automatic sharing or require new permissions, so only power users bother to re-enable it.

In diagnosing this, you can look at the total volume of cross-posted content from external sources over time. If you see a sudden dip that matches user complaints, that strongly indicates an integration or API-driven cause. You could also test a minimal or newly updated integration environment and see if the issues reproduce. By reaching out to partner teams or scanning error logs at the API layer, you can confirm whether integration points broke.
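A sudden dip in cross-posted volume can be flagged by comparing each day with a trailing average. The sketch below assumes daily counts of photos cross-posted through one integration. The 7-day window, the 70% threshold, and the volumes are hypothetical.

```python
from statistics import mean
from typing import List


def sudden_dips(daily_volume: List[int], window: int = 7,
                threshold: float = 0.7) -> List[int]:
    """Indices of days whose volume falls below `threshold` x the trailing `window`-day mean."""
    dips = []
    for i in range(window, len(daily_volume)):
        baseline = mean(daily_volume[i - window:i])
        if daily_volume[i] < threshold * baseline:
            dips.append(i)
    return dips


# Hypothetical cross-post volume: stable, then a break after day 7.
volume = [1000] * 7 + [980, 400, 390, 410]
print(sudden_dips(volume))
```

The trailing baseline gradually absorbs a sustained drop, so the first flagged day, matched against integration releases, API changes, or error-log spikes, is usually the most informative.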

If user sentiment around platform trust or brand image changes, could that affect the likelihood of posting personal photos?

Yes, a shift in trust can reduce users’ willingness to share personal images. High-profile news stories about data breaches or controversies around the platform’s policy can nudge people toward more passive consumption.

Pitfalls include conflating short-term PR backlash with a deeper, sustained trust issue. Another subtlety is that certain user demographics might react more strongly—e.g., individuals who share family photos may become especially cautious if new privacy concerns arise. Meanwhile, other groups may remain unaffected.

In investigating this, you could track sentiment through user surveys and see if negative feelings about data security correlate with a drop in photos posted. Also, analyzing the content of user feedback or customer support tickets might reveal privacy or trust concerns. Once you isolate trust as a factor, you can attempt to regain confidence by clarifying data policies or offering more transparent privacy controls.
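To check whether survey sentiment moves with photo posting, a Pearson correlation is a reasonable first measure. The sketch pairs a hypothetical 1-5 trust score with photos posted in the following 30 days. Python 3.10+ also provides `statistics.correlation`. A strong correlation still does not show that trust drives posting, since both may reflect overall engagement.

```python
import math
from typing import Sequence


def pearson(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


# Hypothetical survey: trust score (1-5) and photos posted in the following 30 days.
trust = [1, 2, 3, 4, 5]
photos = [0, 1, 1, 3, 4]
print(round(pearson(trust, photos), 2))
```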

Could gamification elements (such as badges or streaks) have inadvertently encouraged posting for the wrong reasons and led to a correction or burnout?

Sometimes platforms introduce badges, posting streaks, or other motivational hooks that artificially inflate engagement. Over time, users might realize they are posting primarily to keep a streak alive, rather than to share meaningful content. When the novelty wears off or they break the streak once, they might lose interest entirely.

An edge case here is that different cultures or age groups may respond differently to gamification. Younger users might enjoy playful mechanics for a longer period, while older users could perceive them as gimmicky from the outset. Another pitfall is that once the gamification is deeply ingrained, removing or changing it can produce a sudden drop in posting.

You could analyze the proportion of posts made while a user is “on a streak” or close to achieving a badge. If that proportion is high, the gamification may be artificially boosting posts. Also, watch for a sudden drop that coincides with either the removal of a gamification mechanic or a user’s streak ending. Such a pattern would suggest that the decline is tied to burnout or the loss of extrinsic motivators.
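A minimal sketch of the “on a streak” share for one user. It assumes a streak means consecutive calendar days with at least one photo post, collapses multiple posts on the same day into one, and uses a hypothetical minimum streak length of 3.

```python
from datetime import date, timedelta
from typing import Iterable


def share_of_posts_on_streak(post_days: Iterable[date], min_streak: int = 3) -> float:
    """Fraction of a user's posting days on which their run of consecutive posting
    days had reached at least `min_streak` (same-day posts collapse to one)."""
    days = sorted(set(post_days))
    if not days:
        return 0.0
    on_streak, run, prev = 0, 0, None
    for day in days:
        # Extend the run if this day immediately follows the previous posting day.
        run = run + 1 if prev is not None and day - prev == timedelta(days=1) else 1
        if run >= min_streak:
            on_streak += 1
        prev = day
    return on_streak / len(days)


# Hypothetical user: a four-day streak, then one isolated post.
days = [date(2024, 1, d) for d in (1, 2, 3, 4, 10)]
print(share_of_posts_on_streak(days))
```

Averaging this share across users, and tracking it over time, shows how much posting volume depends on streak mechanics.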

What if the user interface for uploading photos was inadvertently simplified too much, removing users’ motivation to add creative flair?

In some cases, attempts to streamline posting can backfire. Perhaps the platform removed editing features or photo filters to reduce complexity, which ironically might reduce the enjoyment or sense of individuality in sharing photos.

A subtlety here is that not all users want fancy editing tools. A simpler interface might attract novices but alienate power users who valued advanced features. Another pitfall is that you might interpret overall posting declines as a direct result of losing advanced features, while the decline could also coincide with other changes.

To isolate this factor, track usage of advanced photo editing features over time. If those features were used heavily before they were removed or simplified, and photo posting fell sharply right afterward, that suggests the creative tools were a motivator. Running controlled tests that reintroduce those features to a subset of users can help confirm whether reinstating them recovers posting frequency.

Could the observed drop be an artifact of comparing two time periods with different user base sizes or mix?

If the platform’s user base increased in the last month due to many new signups who are not actively posting photos yet, the ratio of posts to total users (posting rate) might decrease even if the absolute number of photo posts remained the same or even went up slightly.

One edge case is that some new users may take time to become comfortable sharing photos, while older users might maintain their usual frequency. The combined effect is a short-term dip in the fraction of users posting. Another potential confusion is that user churn might be rising, causing the denominator (number of users) to fluctuate unpredictably.

To clarify, you can look at absolute counts of photo posts as well as the ratio. If the number of photo posts is stable or rising while the ratio is dropping, that is a strong sign of a growing denominator effect. Additionally, analyzing daily active users (DAU) who actually visit the site (instead of total signups) might yield a more accurate measure of changes in real posting activity.
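One common way to separate a denominator or mix effect from a real behavior change is a mix/rate decomposition over user segments. The sketch below uses one standard weighting (other weightings exist). The segments and counts are hypothetical: per-segment rates are unchanged, but the low-posting new-user segment doubles, so photo posts rise from 30,000 to 32,000 while the overall rate falls from 3.00% to about 2.67%.

```python
from typing import Dict, Tuple

# Hypothetical input shape: segment -> (photo_posts, users).
Counts = Dict[str, Tuple[int, int]]


def mix_rate_decomposition(before: Counts, after: Counts) -> Tuple[float, float, float]:
    """Split the change in the overall posting rate into rate and mix effects.

    rate effect = sum_s w_before[s] * (r_after[s] - r_before[s])
    mix effect  = sum_s (w_after[s] - w_before[s]) * r_after[s]
    where w is a segment's share of users and r its posting rate. The two effects
    sum exactly to overall_after - overall_before. Assumes both periods contain
    the same segments, each with at least one user."""
    users_before = sum(users for _, users in before.values())
    users_after = sum(users for _, users in after.values())
    rate_effect = mix_effect = 0.0
    for segment in before:
        posts_b, seg_users_b = before[segment]
        posts_a, seg_users_a = after[segment]
        r_b, r_a = posts_b / seg_users_b, posts_a / seg_users_a
        w_b, w_a = seg_users_b / users_before, seg_users_a / users_after
        rate_effect += w_b * (r_a - r_b)
        mix_effect += (w_a - w_b) * r_a
    overall_before = sum(posts for posts, _ in before.values()) / users_before
    overall_after = sum(posts for posts, _ in after.values()) / users_after
    return rate_effect, mix_effect, overall_after - overall_before


before = {"existing": (28_000, 800_000), "new": (2_000, 200_000)}
after = {"existing": (28_000, 800_000), "new": (4_000, 400_000)}
rate_eff, mix_eff, total = mix_rate_decomposition(before, after)
print(f"rate {rate_eff:+.3%}, mix {mix_eff:+.3%}, total {total:+.3%}")
```

Here the entire decline lands in the mix term. A large rate term would instead indicate that users within a segment genuinely changed their behavior.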