ML Interview Q Series: Probability of Maximum Value in Six Die Rolls via Cumulative Distribution

Browse all the Probability Interview Questions here.

A fair die is rolled six times. What is the probability that the largest number rolled is r for r = 1,...,6?

Short Compact solution

Define Ar to be the event that the maximum of the six rolls is r. Observe that the total number of possible outcomes in six rolls of a fair die is 66 (each roll can independently be from 1 to 6). Let Bk be the event that all six outcomes are at most k. Hence, P(Bk) = k6 / 66. Then Ar can be interpreted as the event that all six rolls are at most r, but not all six at most (r-1). Therefore,

Ar = Br \ Br-1,

leading to

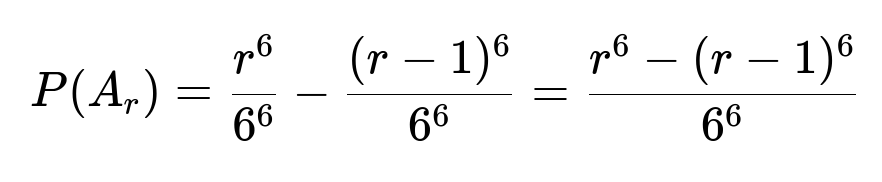

P(Ar) = P(Br) - P(Br-1) = (r6/66) - ((r-1)6/66) = [r6 - (r-1)6] / 66.

An alternative derivation is to consider j of the rolls exactly equal to r and the remaining (6 - j) rolls to be strictly below r. Summing over j from 1 to 6 (since we must have at least one roll equal to r for the maximum to be r) gives the same result.

Comprehensive Explanation

When we roll a fair six-sided die six times, each outcome is a sequence of length 6, where each element can be an integer from 1 to 6. Thus, there are 66 equally likely ways to roll the die six times.

To find the probability that the largest number observed is exactly r, we use two main methods:

Difference of Cumulative Counts Approach

We introduce:

Bk = "All six dice show a value from the set {1, 2, ..., k}."

Because there are k choices per roll if the dice values must be at most k, the number of sequences that satisfy Bk is k6. So:

P(Bk) = (k6) / (66).

Now, Ar = "The maximum value in the six rolls is r." This is the same as saying "All six rolls are at most r, and at least one of them is equal to r." Equivalently, Ar = Br \ Br-1, because Br is the event that the rolls are all ≤ r, and Br-1 is the event that the rolls are all ≤ r-1, so removing Br-1 from Br leaves exactly the set of outcomes whose largest number is r (none larger than r, but at least one r).

Hence:

Here:

r6 is the number of ways to roll six dice with faces in {1, 2, ..., r}.

(r-1)6 is the number of ways to roll six dice with faces in {1, 2, ..., r-1}.

66 is the total number of equally likely outcomes for six rolls.

Summation / Binomial Approach

Alternatively, we can think of Ar by enumerating the ways in which the largest die can be r:

Suppose exactly j of the dice show r (j ≥ 1), and the other (6 - j) dice each show a value from 1 to (r-1).

The probability that any particular sequence of j dice being r and the other (6 - j) being below r is (1/6)j · ((r-1)/6)(6 - j).

The number of ways to choose which j dice show r is C(6, j).

Summing over j from 1 to 6 gives:

This summation can be shown to be identical to [r6 - (r-1)6]/66 by the binomial expansion of r6 - (r-1)6.

Edge Cases and Basic Checks

When r = 1, the largest number is 1 if and only if all dice are 1. Then (16 - 06) / 66 = 1/66.

When r = 6, the probability is 66/66 - 56/66 = 1 - (56/66).

These edge cases match intuition: r=1 means all dice must be 1 (a very small probability), r=6 means at least one 6 occurs.

Example Computation in Python

from math import comb

def prob_largest_r(r):

# Using difference approach

return (r**6 - (r-1)**6) / (6**6)

# Alternatively using binomial approach

def prob_largest_r_binomial(r):

total = 0

for j in range(1, 7):

ways = comb(6, j)

p_r = (1/6)**j

p_less = ((r-1)/6)**(6-j)

total += ways * p_r * p_less

return total

for r in range(1, 7):

print("r =", r, "=>", prob_largest_r(r), " / binomial =>", prob_largest_r_binomial(r))

Either approach returns the same probabilities.

Possible Follow-up Questions

If an interviewer asks: "How do we verify that these probabilities sum to 1?"

We note that the events A1, A2, …, A6 partition the entire sample space. Each sequence of six rolls has a unique maximum (it must be exactly one of 1, 2, 3, 4, 5, or 6). Hence,

P(A1) + P(A2) + ... + P(A6) = 1.

Mathematically, from the difference expression:

Σ[r=1..6] (r6/66 - (r-1)6/66) = (16 - 06 + 26 - 16 + ... + 66 - 56) / 66 = (66 - 06) / 66 = 1.

If asked: "How can we extend this to dice with n sides or more than six rolls?"

For an n-sided fair die rolled m times, the same reasoning applies, with total outcomes nm. The probability that the largest roll is r (for r = 1, …, n) becomes:

Largest number ≤ r means Br has rm outcomes. Then:

P(Ar) = [rm - (r-1)m] / nm,

or via the combinatorial approach:

P(Ar) = Σ[j=1..m] C(m, j) (1/n)j ((r-1)/n)(m-j).

If asked: "What is the expected value of the largest roll in six throws of a fair die?"

The expected largest roll L is:

E[L] = Σ[r=1..6] r × P(Ar).

This quantity can be computed directly by plugging in the probabilities found. Numerically, it is often around 4.45 for six rolls of a fair six-sided die.

If asked: "What if the die is biased so that each face i has probability pi?"

Then the direct counting method (r6 etc.) no longer applies because the total number of sequences is still 66, but they are not equally likely. Instead, we sum over the probabilities:

Let the largest roll be r. This means each face is at most r, at least one face is exactly r, and we use:

P(Ar) = Probability(all rolls ≤ r) - Probability(all rolls ≤ r-1).

But "Probability(all rolls ≤ r)" is [p(1) + p(2) + ... + p(r)]6. So:

P(Ar) = [p(1) + ... + p(r)]6 - [p(1) + ... + p(r-1)]6.

One must be careful since the p1, p2, …, p6 might not all be 1/6.

If asked: "How can you use these probabilities in a real application, like gaming or simulation?"

In many games, the distribution of the maximum roll determines chances of success (for instance, if you roll multiple dice and only the largest matters). One can compute expected payoffs, or the chance of exceeding a certain threshold, by knowing P(Ar). Similarly, in simulations, checking if the maximum surpasses a boundary is relevant in bounding extreme events.

All these points demonstrate how the straightforward idea of "largest number among multiple throws" can be systematically tackled by either:

Subtraction of cumulative event probabilities for "values not exceeding r."

A combinatorial sum capturing the possibility of having exactly j dice land on r and the rest below r.

Below are additional follow-up questions

If an interviewer asks: "How would you find the probability that exactly k distinct faces appear among the six rolls, and how is that related to the distribution of the largest roll?"

One might wonder how often we see exactly k distinct numbers in 6 throws and how that event might constrain the largest roll. To approach this:

First, recognize that “exactly k distinct faces” is a more general event encompassing multiple possible largest values.

We can count the number of ways to choose which k faces appear, assign them among the six positions, and ensure all chosen faces occur at least once. This involves Stirling numbers of the second kind or the inclusion-exclusion principle.

If we want to incorporate the largest face condition, we can fix the largest face to be r and then count how many distinct faces are used among the remaining (r-1) possible faces plus the forced presence of r. That is a more intricate inclusion-exclusion question.

Potential pitfalls:

Overcounting if we do not carefully enforce “exactly k distinct faces.”

Failing to account for the forced presence of r if we want the largest face to be r.

The complexity can grow quickly, so it is easy to introduce small miscounts that distort the result.

If an interviewer asks: "How do we calculate the probability of the largest roll being at least r, and how might that be more useful than finding exactly r?"

Sometimes, we care about “at least r” rather than “exactly r.” For instance, “What is the chance of rolling at least one 5 or 6 in six throws?”

We define A≥r = “The largest roll is at least r.”

A direct approach is: P(A≥r) = 1 - P(all rolls < r). Because if the largest is not at least r, then all rolls must be ≤ (r-1).

So, P(A≥r) = 1 - ((r-1)/6)6.

Pitfall: Overlaps might occur if we try to do the complement incorrectly (for example, mixing up “all rolls < r” with “all rolls ≤ r”).

This is typically simpler than “exactly r” because we avoid enumerating each maximal outcome in detail and can just exploit the complement.

If an interviewer asks: "What is the probability that the largest roll appears exactly m times (for example, we want the largest face r to appear exactly 2 times), and how can this be computed in tandem with P(Ar)?"

This question combines the concept of having a largest roll r with a specific frequency of r:

We already know P(Ar) is the probability the largest value is r.

Within that event, we might want the largest value r to appear exactly m times.

To drill down:

First ensure at least one r occurs (so the max can be r). Then we specifically count the number of ways for exactly m dice to show r.

The other (6 - m) dice must be strictly from {1, 2, ..., r-1}, but none of those (6 - m) dice can be r (otherwise we exceed m occurrences of r).

We can use combinations to choose which m positions are r, multiply by the number of ways to fill the remaining positions with faces < r, and then normalize by 66.

Pitfalls include forgetting to exclude the possibility of a larger face or not ensuring that if m < 6, we still have no dice face > r.

If an interviewer asks: "How would you handle dependent dice rolls or real-world scenarios where the outcomes of the rolls are not truly independent?"

Real dice rolls in an ideal environment are typically modeled as independent. But some real-world situations (or contrived problems) might introduce correlations among the rolls:

For instance, consider a scenario where we suspect a mechanical or physical dependency: if one roll is high, maybe the next is more likely to be low due to how the dice are tossed.

In that case, the standard counting approach (r6 out of 66) doesn’t apply directly because it relies on independence to ensure the 66 equally likely outcomes.

We would need the joint distribution of all six rolls. The “largest roll = r” event would then be integrated (or summed) over this joint distribution.

Potential pitfalls:

Assuming independence incorrectly, leading to flawed results.

Attempting to apply the simple formula (r6 / 66) when the probabilities of each combination are not uniform.

If an interviewer asks: "How do we simulate rolling a die six times, record only the largest result, and use that to estimate P(Ar) empirically? What mistakes do people often make in simulation-based estimates?"

A simulation-based approach can empirically approximate P(Ar):

Generate a large number of trials, each with six i.i.d. uniform(1..6) draws.

Track the maximum of each trial’s six draws.

Estimate P(Ar) by the frequency of trials whose maximum is r.

Common mistakes:

Not using enough trials, leading to high variance estimates or confidence intervals that are too wide.

Mixing up the index of the largest die face with the face value (for instance, confusing the position of the largest roll with its numeric value).

Failing to reset random seeds or incorrectly collecting data, which can skew results or hamper reproducibility.

If an interviewer asks: "How do we extend or adapt these ideas if dice have faces that are not integer-labeled (e.g., a specialized gaming die with arbitrary symbols)?"

In many custom dice or specialized gaming scenarios, each side might have a different label or symbol, possibly not even numeric. To adapt:

The notion of 'largest roll' might be replaced with 'the highest-ranked symbol' if we can define a ranking or ordering among the symbols.

We must identify an ordering from least to greatest among those faces. Once established, the count of “faces ≤ r” can be used similarly.

Potential pitfalls:

If no clear total ordering exists (some games may have multiple categories rather than a single rank), the concept of “largest” might be ambiguous.

If each face does not share equal probability, the uniform count approach breaks down, and we must rely on the sum of probabilities for faces up to “r” instead of counting them as consecutive integers.

If an interviewer asks: "How does the concept of 'the largest of n random variables' generalize to continuous distributions, and what parallels exist with the discrete dice roll approach?"

Though dice are discrete, there is a continuous analogue:

For continuous i.i.d. random variables X1, X2, ..., Xn with cumulative distribution function F(x), the probability the maximum is less than or equal to some value x is F(x)n.

Therefore, the probability the maximum is in (a, b] is F(b)n - F(a)n.

This directly mirrors the discrete approach (k6 out of 66 for dice) but with integrals or continuous CDFs.

Pitfalls:

Failing to adjust for the fact that continuous distributions have infinitely many possible values, so we can’t just “count.”

Mixing up the PDF and CDF in computations for the maximum or misusing independence assumptions.

If an interviewer asks: "What if we are interested in the median or other order statistics (like the third highest) rather than the largest roll? How do we generalize from 'maximum' to 'k-th order statistic'?"

While we have formulas for the largest roll, order statistics in general are about the k-th largest (or k-th smallest) roll. The method:

For discrete uniform dice, we can count the ways that exactly (k−1) dice exceed a certain value, a certain number of dice equal that value, and the rest are less. This gets complicated, but binomial coefficients and combinatorial arguments extend.

A standard approach uses the “distribution of order statistics” in i.i.d. discrete random variables, where the probability mass function can be computed by enumerating how many samples are above, how many are equal, how many are below.

Pitfall: The biggest challenge is complexity. The formula for a general order statistic has more terms, and it is easy to lose track of constraints like “no more than that many dice can exceed the stated value.”

If an interviewer asks: "How might rounding or measurement error affect the analysis if the 'die roll' can come from sensors or real-world measurements rather than perfect discrete outcomes of 1 through 6?"

In some physical or sensor-based scenarios:

The measurement for each roll might be an approximate value, say a floating-point reading.

We then typically define thresholds for deciding if the measured outcome is ‘close to 1,’ ‘close to 2,’ etc.

The event “largest measured outcome is r” can get blurred if we have misclassifications or rounding: a true 5 might be recorded as 4.99 or 5.01.

Pitfalls:

Failing to account for the uncertainty in classification can lead to skewed probability estimates of the largest face.

If the thresholds for rounding are not consistent, then the distribution of outcomes could systematically bias the reported largest roll.