ML Interview Q Series: Should your Test Data be Cleaned the same way that the Training Data is?


Comprehensive Explanation

Data cleaning for test data should be done using exactly the same transformations that were performed on the training data. The central goal is to ensure that the model sees test data that is consistent with the distribution and format of the training data. If you apply any additional or different operations to the test data, you risk altering its distribution in a way that does not match what the model was trained on, resulting in misleading performance metrics.

If you standardize your features, for instance, you must calculate the mean and standard deviation from the training data and then apply the same exact mean and standard deviation to transform the test data. The same idea applies to other transformations such as min-max scaling, imputation of missing values, or even more complex feature engineering steps.

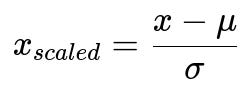

Below is the standard formula for standardizing a numerical feature. Its parameters are estimated on the training set only, and the resulting transformation is then applied unchanged to the test set.

$$z = \frac{x - \mu}{\sigma}$$

Here, x is the original (unscaled) value from the dataset, μ is the mean of the feature in the training set, and σ is the standard deviation of the feature in the training set. You never recalculate μ or σ from the test set. Instead, you reuse the exact values determined from the training data, so that test features are mapped onto the same scale as the training features.

Failing to apply identical transformations leads to distribution mismatches. For example, if you impute missing values in the training data by using the mean from the training set but then in the test phase use some new mean computed from the test set, the test data might end up with a different scale or center. This discrepancy will degrade your model’s performance or produce unrealistic estimates of the model’s effectiveness.

Practical Implementation

Below is a short Python code snippet illustrating how you might apply the same cleaning steps (in this case, using scikit-learn’s StandardScaler) consistently to training and test data:

```python
import numpy as np
from sklearn.preprocessing import StandardScaler
from sklearn.model_selection import train_test_split

# Sample data
X = np.array([[1, 2], [2, 3], [3, 6], [4, 8], [5, 10], [6, 15]], dtype=float)
y = np.array([0, 0, 1, 1, 1, 1])

# Split into train and test
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Create the scaler and fit on the training set
scaler = StandardScaler()
scaler.fit(X_train)

# Transform the training set
X_train_scaled = scaler.transform(X_train)

# Apply the exact same transformation to the test set
X_test_scaled = scaler.transform(X_test)

# Now X_train_scaled and X_test_scaled have consistent transformations
```

Potential Pitfalls

If you mistakenly recalculate cleaning parameters (for example, the mean or median for missing-value imputation) from the test set, you effectively leak information about the distribution of the test data into your model-building process. This is a form of data leakage and can cause overly optimistic performance results that do not generalize to real-world scenarios.

Another subtle issue arises if you remove test-set outliers, or treat them differently than you did during training. This skews your metrics and does not reflect how your model will handle future data in a production environment. The same rule applies to one-hot encoding of categories and to text-processing steps: whatever you did during training, you do identically at test time.

Follow-up Questions

How do you handle missing values that appear in the test set but not in the training set?

Even if no missing values existed in the training data, you must still decide on a consistent policy for how to treat them if they appear in the test set. One approach is to incorporate an imputation mechanism in your pipeline that uses training set statistics for filling missing values, such as the training set mean. You do not recalculate the mean for the test set alone; you always use the mean (or relevant statistic) taken from the training set to maintain consistency.
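A minimal sketch of this policy, assuming scikit-learn's SimpleImputer and a small made-up array: the imputer is fitted on training data that contains no missing values at all, yet it still stores per-column training means that it can use later when a test row arrives with a gap.

```python
import numpy as np
from sklearn.impute import SimpleImputer

# Training data happens to contain no missing values.
X_train = np.array([[1.0, 10.0], [2.0, 20.0], [3.0, 30.0]])
# Test data arrives with a missing value in the second column.
X_test = np.array([[4.0, np.nan], [5.0, 50.0]])

# Fit on training data only; the imputer stores the per-column training means.
imputer = SimpleImputer(strategy="mean")
imputer.fit(X_train)

# The NaN in the test set is filled with the training mean of column 2,
# not with any statistic computed from the test rows.
X_test_imputed = imputer.transform(X_test)
print(imputer.statistics_)
print(X_test_imputed)
```

Because statistics_ comes only from X_train, the filled value is 20.0 (the training mean of the second column), not the single observed test value of 50.0.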

What if the test data has new categorical levels not seen during training?

If you encounter categories in the test data that were never present in the training set, you generally cannot apply the training-time transformation (such as one-hot encoding) as-is, because the fitted encoder does not recognize those categories. One strategy is to map every unseen value to a single “unknown” category. This is usually handled either through an encoder parameter or through custom code that assigns all unseen categories to a default “unknown” token. Either way, the output dimensionality stays consistent and the encoder never has to be refitted on test data, which prevents both errors and accidental data leakage.
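One concrete way to do this in scikit-learn (other libraries differ) is OneHotEncoder with handle_unknown="ignore". Note that this variant does not create a dedicated “unknown” column: an unseen category is encoded as an all-zero row, which still keeps the number of output columns fixed. The color values below are made up for illustration.

```python
import numpy as np
from sklearn.preprocessing import OneHotEncoder

X_train = np.array([["red"], ["green"], ["blue"], ["green"]])
X_test = np.array([["green"], ["purple"]])  # "purple" never appears in training

# handle_unknown="ignore": unseen categories become all-zero rows at transform
# time instead of raising an error. Categories are learned from X_train only.
encoder = OneHotEncoder(handle_unknown="ignore")
encoder.fit(X_train)

# transform() returns a sparse matrix by default; densify for readability.
X_test_encoded = encoder.transform(X_test).toarray()
print(encoder.categories_)
print(X_test_encoded)
```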

Why shouldn’t you fit a StandardScaler (or any scaler) separately on the training and test sets?

Fitting a separate scaler on the test set introduces knowledge of the test distribution into the process, violating the principle that test data should simulate unseen real-world data: you are effectively letting the preprocessing “peek” at the test set’s mean and standard deviation. It also breaks consistency, because the same raw value is mapped to different scaled values depending on which split it came from, and any genuine shift between training and test data is silently centered away. The result is typically an overly optimistic performance estimate that does not represent true generalization.
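The toy example below, with made-up numbers, contrasts the correct approach with refitting a scaler on the test set. The test values are deliberately shifted upward relative to training.

```python
import numpy as np
from sklearn.preprocessing import StandardScaler

X_train = np.array([[1.0], [2.0], [3.0], [4.0], [5.0]])
# Test values are systematically larger than anything seen in training.
X_test = np.array([[8.0], [9.0], [10.0]])

# Correct: mean and standard deviation come from the training set only.
scaler = StandardScaler().fit(X_train)
X_test_correct = scaler.transform(X_test)

# Incorrect: a second scaler fitted on the test set re-centers it at zero,
# hiding the shift and mapping each raw value to a different input.
X_test_refit = StandardScaler().fit_transform(X_test)

print(X_test_correct.ravel())
print(X_test_refit.ravel())
```

With the training-fitted scaler, every test value lands more than three training standard deviations above the training mean, so the shift stays visible. The refitted scaler maps the test set back around zero, so the model would see inputs that look like ordinary training data even though they are not.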

How can you avoid accidental data leakage when building data-cleaning pipelines?

Data leakage can creep in if you merge training and test data before cleaning, or if you perform steps in the wrong order. A common best practice is to build a pipeline that strictly separates fit (on training data only) from transform (on both training and test sets). In scikit-learn, for example, a Pipeline object chains the preprocessing steps with the model: calling fit on the training data fits every step on that data, while predict or score on the test data only applies the already-fitted transforms. This makes it much harder to accidentally learn parameters from the test set.
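A minimal scikit-learn sketch, assuming synthetic data and a logistic regression model chosen only for illustration:

```python
import numpy as np
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic data: the label depends on the first two features.
rng = np.random.default_rng(0)
X = rng.normal(size=(100, 3))
y = (X[:, 0] + X[:, 1] > 0).astype(int)

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.25, random_state=0)

pipe = Pipeline([
    ("scale", StandardScaler()),
    ("clf", LogisticRegression()),
])

# fit() fits the scaler and then the classifier, both on training data only.
pipe.fit(X_train, y_train)

# score() only calls transform() on the test data, reusing training statistics.
test_accuracy = pipe.score(X_test, y_test)
print(test_accuracy)
```

The same pipeline object can also be passed to cross_val_score, which refits the whole pipeline, scaler included, inside each training fold.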

If your training data is large, is it always safe to remove outliers that might seem problematic in the test set?

Removing outliers can be tricky because your notion of what counts as an outlier is tied to the distribution of the training set. A data point that looks unusual in the test set is not necessarily an error; it may be a legitimate instance that your model needs to handle, and removing it means ignoring a real scenario you will face in production. Remove outliers from the training data only for a well-justified reason, and apply that exact rule, with the thresholds learned from the training data, to the test data. If suspicious data appears in the test set, any decision to exclude it must follow the identical outlier definition used for your training data.
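The text does not prescribe a specific outlier rule, so the sketch below swaps in Tukey's IQR rule purely as an example. The key point is that the bounds are computed once from training data and then reused unchanged on the test data. The helper names fit_iqr_bounds and is_outlier are hypothetical, and the values are made up.

```python
import numpy as np

def fit_iqr_bounds(x_train, k=1.5):
    """Learn outlier bounds from training data only (Tukey's IQR rule)."""
    q1, q3 = np.percentile(x_train, [25, 75])
    iqr = q3 - q1
    return q1 - k * iqr, q3 + k * iqr

def is_outlier(x, bounds):
    """Apply previously learned bounds; they are never re-estimated on new data."""
    low, high = bounds
    return (x < low) | (x > high)

x_train = np.array([10.0, 11.0, 12.0, 13.0, 14.0, 15.0, 16.0, 60.0])
x_test = np.array([12.0, 17.0, 40.0])

bounds = fit_iqr_bounds(x_train)          # fitted once, on training data
train_mask = is_outlier(x_train, bounds)  # same rule for both splits
test_mask = is_outlier(x_test, bounds)
print(bounds, train_mask, test_mask)
```

Here the training quartiles give bounds of 6.5 to 20.5, so the test value 40.0 is flagged by the training rule, while 17.0 is kept even though it is larger than every non-outlying training value.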

How do you handle feature engineering steps for text or images that might differ slightly between training and test sets?

Feature engineering transforms for text (such as tokenization, lemmatization, or n-gram extraction) or images (such as resizing, cropping, or normalization) also must be performed identically on both training and test sets. For example, if you have a text-processing pipeline that removes stopwords, you must remove the same stopwords in the test data. For image data, if you apply a specific type of cropping or resizing based on the training set’s statistics (like cropping around a detected object’s bounding box size), you should preserve the exact same transformation pipeline for test images. If you deviate, you risk introducing inconsistencies that will distort your evaluation of the model’s performance.
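A short sketch, assuming scikit-learn's CountVectorizer with its built-in English stop-word list and made-up sentences: the vocabulary and stop-word handling are fixed at fit time, and transform reuses them for the test documents.

```python
from sklearn.feature_extraction.text import CountVectorizer

train_docs = ["The pump is running normally", "The pump stopped again"]
test_docs = ["The turbine is running", "Pump stopped"]

# Stop-word removal, lowercasing, and the vocabulary are all learned here.
vectorizer = CountVectorizer(stop_words="english")
X_train = vectorizer.fit_transform(train_docs)

# transform() applies exactly the same preprocessing and vocabulary.
X_test = vectorizer.transform(test_docs)
print(sorted(vectorizer.vocabulary_))
print(X_test.toarray())
```

Words that appear only in the test set, such as “turbine” here, are silently dropped by transform because they are not in the training vocabulary, so the number of columns stays the same.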

Below are additional follow-up questions

What if the distribution of your test data is drastically different from your training data?

When the test data distribution differs significantly from the training data distribution, a common impulse is to perform additional transformations to align the two distributions. However, if you alter the test data distribution beyond the same transformations applied to the training data, you risk obscuring genuine distribution shifts that may happen in real-world usage. For example, if your training data largely consists of images taken indoors, but your test data is from outdoor settings with different lighting conditions, you should still apply the same cleaning and preprocessing steps (e.g., image resizing, color normalization) to the test images. After that, you can analyze any performance degradation to determine whether a domain adaptation approach is warranted.

A subtle pitfall is overfitting to the test distribution shift. If you see drastically worse performance, you might be tempted to iterate on your cleaning or modeling in a way that specifically tailors the model to the test data. But this effectively uses test data in your model optimization pipeline, violating the principle that test data should remain unseen until final evaluation. If the new test distribution is truly representative of the production environment, you might need to collect or augment your training data with similar distributions to retrain (or fine-tune) your model rather than forcibly cleaning the test data in a different way.
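To quantify a suspected shift after applying identical preprocessing, one option (not prescribed by the text) is a per-feature two-sample Kolmogorov-Smirnov test from SciPy. The sketch below uses a single synthetic feature, imagined as something like average image brightness, with the test data deliberately shifted.

```python
import numpy as np
from scipy.stats import ks_2samp
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(42)
# Hypothetical single feature; the test distribution is shifted by one unit.
train_feature = rng.normal(loc=0.0, scale=1.0, size=(500, 1))
test_feature = rng.normal(loc=1.0, scale=1.0, size=(500, 1))

# Identical preprocessing: the scaler is fitted on training data only.
scaler = StandardScaler().fit(train_feature)
train_scaled = scaler.transform(train_feature).ravel()
test_scaled = scaler.transform(test_feature).ravel()

# Compare the two preprocessed distributions without altering either one.
result = ks_2samp(train_scaled, test_scaled)
print(result.statistic, result.pvalue)
```

A tiny p-value only says the distributions differ; whether that difference hurts the model still has to be judged from the performance analysis, and the remedy is to adapt the training data rather than the test cleaning.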

How should you handle feature engineering when you suspect the test data might contain novel data patterns that training data cleaning didn’t anticipate?

If your model encounters new patterns in the test set that were never present in training (e.g., new words in a text classification task or new sensor readings in a predictive maintenance scenario), your initial approach must still remain consistent with the transformations you applied to the training set. For instance, if your text preprocessing pipeline tokenizes and removes stopwords from the training data, you apply the exact same pipeline to the test data—no modifications like adding extra steps or skipping steps.

However, you should separately analyze the extent of these new patterns. If they are common in real-world usage, that is an indication that your original training data was incomplete. The solution is usually to expand or refine your training set rather than altering your test cleaning pipeline alone. A pitfall arises if you modify your pipeline at test time by adding special steps to handle these new patterns (e.g., new domain-specific synonyms or additional spelling corrections). Doing so can artificially inflate test performance by tailoring transformations specifically to the test data. The correct approach is to re-train or re-fit any newly introduced transformations on an appropriately expanded training set that includes those patterns.
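One way to measure how common these novel patterns are, sketched here assuming a scikit-learn CountVectorizer and made-up maintenance-log snippets, is the out-of-vocabulary (OOV) rate on the test set. build_analyzer() returns the tokenizer the vectorizer used during fit, so the test text is tokenized exactly as the training text was.

```python
from sklearn.feature_extraction.text import CountVectorizer

train_docs = ["pump running normally", "pump stopped"]
test_docs = ["pump vibrating", "turbine running"]

vectorizer = CountVectorizer()
vectorizer.fit(train_docs)

# Same tokenization as training; nothing test-specific is added.
analyze = vectorizer.build_analyzer()
test_tokens = [tok for doc in test_docs for tok in analyze(doc)]

# Tokens the training vocabulary has never seen.
oov_tokens = [tok for tok in test_tokens if tok not in vectorizer.vocabulary_]
oov_rate = len(oov_tokens) / len(test_tokens)
print(oov_tokens, oov_rate)
```

A high OOV rate on data that is representative of production is a signal to expand the training corpus and refit the vectorizer, not to add test-only preprocessing.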

Should you clean or transform the labels in your test set differently than in your training set?

Sometimes labels (or targets) need to be processed, for example with a log transform in regression tasks. Whatever transform you apply to the training labels must also be applied to the test labels so that performance is evaluated in the same space. If your training pipeline uses log(label + 1) to stabilize variance, apply that same transformation to the test labels before computing any metric in the transformed space.

An edge case arises when the range of labels in the test set differs from the training set. For example, your training labels might lie in [0, 100] while some test labels reach 150. In such a scenario, you still apply the same transform. If you recalculate or adapt the transformation just for the test labels, you end up with inconsistent label scales and misleading performance metrics. After the model predicts, invert the transformation consistently on the predictions and compute error metrics in the original scale.
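scikit-learn's TransformedTargetRegressor is one way to enforce this: it applies func to the training targets before fitting and inverse_func to the predictions. The sketch below assumes a log1p transform, a plain linear regression, and made-up numbers in which the single test label (150) lies outside the training range.

```python
import numpy as np
from sklearn.compose import TransformedTargetRegressor
from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_absolute_error

X_train = np.array([[1.0], [2.0], [3.0], [4.0]])
y_train = np.array([10.0, 20.0, 40.0, 100.0])
X_test = np.array([[5.0]])
y_test = np.array([150.0])  # outside the training label range

# log1p is applied to y_train before fitting; expm1 is applied to predictions.
model = TransformedTargetRegressor(
    regressor=LinearRegression(), func=np.log1p, inverse_func=np.expm1
)
model.fit(X_train, y_train)
y_pred = model.predict(X_test)  # already back in the original label scale

# Metric in the original label scale.
mae_original = mean_absolute_error(y_test, y_pred)
# Metric in the transformed space: the same log1p on labels and predictions.
mae_log = mean_absolute_error(np.log1p(y_test), np.log1p(y_pred))
print(y_pred, mae_original, mae_log)
```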

What if you discover data integrity issues in the test set that you missed in the training set?

Occasionally, you may discover that some subset of the test data is badly corrupted or incomplete in ways not observed in the training data. Perhaps entire columns are missing in a portion of the test data, or there is a mismatch in how certain features were logged. In such a case, the immediate reaction might be to fix or remove those problematic entries in a way that was never performed on the training set.

The risk is that you might artificially improve your test performance by discarding difficult test points that your model should otherwise attempt to handle. The more robust approach is to maintain the principle of applying identical transformations and then investigate the root cause of why the test data has these integrity issues. If a real-world production system can produce data with these issues, your training pipeline or model architecture should be adapted to handle such conditions. If, however, you truly believe they are data-logging errors that do not occur in practice, removing them might be justifiable—but you then must document that removal step and replicate it on the training set (or at least clarify that these anomalies never existed in your training data).
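If removal is justified, one way to keep it consistent and documented is to express it as a single named rule that is applied to both splits. In the sketch below, the column name temp, the sentinel value -999.0, and the helper drop_logging_errors are all hypothetical; the rule prints how many rows it drops from each split.

```python
import pandas as pd

# Hypothetical error code written by a faulty logger.
SENTINEL = -999.0

def drop_logging_errors(df: pd.DataFrame, column: str) -> pd.DataFrame:
    """Remove rows whose reading equals the logger's error sentinel."""
    mask = df[column] == SENTINEL
    print(f"dropping {int(mask.sum())} of {len(df)} rows from {column!r}")
    return df.loc[~mask].reset_index(drop=True)

train = pd.DataFrame({"temp": [20.1, 21.3, -999.0, 19.8]})
test = pd.DataFrame({"temp": [22.0, -999.0, -999.0]})

# The same documented rule is applied to both splits.
train_clean = drop_logging_errors(train, "temp")
test_clean = drop_logging_errors(test, "temp")
```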

In scenarios with time-series data, how do you ensure your cleaning process is consistent across training and test sets over different time windows?

For time-series data, the test set is typically from a later time window than the training set. If the time-series distribution shifts over time, your cleaning steps (like filling missing values) and feature engineering (like computing rolling averages or differences) must be carefully designed to avoid “peeking” into future data. You typically fit any transform (e.g., a rolling mean) only with past data. This means you use the training window to determine your cleaning strategy, and then you apply that strategy in chronological order to the test window without recalculating from future points.

A subtle pitfall is ignoring that some cleaning methods might implicitly use future data. For instance, if your pipeline calculates a rolling median over the entire dataset to fill missing values, you might inadvertently use future points when filling missing data in earlier points. This leads to a form of leakage. The correct approach is to apply each transformation in a strictly causal manner. You’d still do the same transformations on the test set as you did on the training set, but you carefully ensure you only leverage historical information for any data cleaning or feature engineering steps.
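A minimal pandas sketch with a made-up series contrasts a centered rolling median, which peeks at the next observation, with a causal version that only looks backward. The window size of 3 is an arbitrary choice for illustration.

```python
import numpy as np
import pandas as pd

s = pd.Series([1.0, 2.0, np.nan, 4.0, 5.0, np.nan, 7.0])

# Leaky: a centered window also uses the *next* observation to fill a gap.
leaky_fill = s.fillna(s.rolling(window=3, center=True, min_periods=1).median())

# Causal: shift(1) excludes the current point, so each fill value is the
# median of up to three earlier observations (NaNs are skipped).
past_median = s.shift(1).rolling(window=3, min_periods=1).median()
causal_fill = s.fillna(past_median)

print(leaky_fill.tolist())
print(causal_fill.tolist())
```

Because the causal fill only ever looks backward, it can be run over the concatenated training and test windows in chronological order: test points may draw on the end of the training window, which is legitimate past data, but never on later points.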

How do you decide whether to update your cleaning pipelines if you see a significant performance drop on the test set?

A sudden performance drop on test data might indicate a shift in distribution, new types of anomalies, or changes in how data is collected. A knee-jerk reaction might be to modify the cleaning pipeline for the test set alone, which breaks the principle of using identical transformations. Instead, you should:

1. Validate that your existing training pipeline has not been inadvertently broken.
2. Investigate the root cause of the performance drop.
3. If the distribution changes are legitimate, update (and retrain) your cleaning pipeline, along with your model, on new data that reflects current realities.

This approach ensures consistency. If you only patch the test pipeline, you lose a key property of having a model that was trained and tested under the same transformations. A more robust fix is to incorporate the new data scenarios into your training dataset and re-fit your entire pipeline so that the transformations remain consistent and up-to-date.

Could external knowledge or domain expertise justify a different cleaning approach for the test set?

Sometimes domain experts may argue that certain data anomalies in the test set are known errors that should be addressed differently from the training set. This is especially common in specialized fields like healthcare or finance, where domain knowledge can reveal that some portion of the test set is “faulty” or “non-representative.” Even in these scenarios, you still want a consistent cleaning methodology—either apply the same domain-driven rules to both training and test sets if relevant, or exclude the faulty test samples entirely (and do the same if they had occurred in training).

A real-world trap can occur when domain experts try to “fix” only the test data. This leads to an optimistic performance estimate that might not hold in production. A more sustainable approach is to revisit your training pipeline and incorporate the domain-driven cleaning rules universally, ensuring the model’s training data and test data are aligned under the same transformations.

How do you handle transformations that involve complex statistical models in your cleaning pipeline?

Certain data cleaning approaches, such as advanced outlier detection or dimensionality reduction with an algorithm like PCA, can be viewed as additional “learned” steps. For instance, if you do PCA on the training data to reduce dimensionality, you must learn the PCA mapping from the training data alone, then apply that exact mapping to the test data. A hidden challenge arises if your PCA-based pipeline sees test data points that lie outside the training distribution, which can lead to unusual projected values.
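A small sketch, assuming scikit-learn's PCA and synthetic data that lies near a line in three dimensions: the projection is learned from the training data only and then applied unchanged to test points. Using reconstruction error (the squared distance between a point and its projection mapped back to the original space) to surface out-of-distribution test points is an illustrative choice, not something the text specifies.

```python
import numpy as np
from sklearn.decomposition import PCA

rng = np.random.default_rng(0)
# Training data lies close to the direction (1, 2, -1), plus small noise.
t = rng.normal(size=(200, 1))
X_train = np.hstack([t, 2 * t, -t]) + 0.01 * rng.normal(size=(200, 3))

# The PCA mapping is learned from training data only.
pca = PCA(n_components=1).fit(X_train)

def reconstruction_error(X):
    """Squared distance between each row and its PCA reconstruction."""
    X_hat = pca.inverse_transform(pca.transform(X))
    return ((X - X_hat) ** 2).sum(axis=1)

X_test = np.array([
    [1.0, 2.0, -1.0],   # follows the training structure
    [1.0, -2.0, 1.0],   # lies far off the learned component
])
errors = reconstruction_error(X_test)
print(errors)
```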

Similarly, if you use advanced outlier detection (like a one-class SVM) to remove outliers from the training data, you might see different data characteristics at test time. The consistent approach is still to apply the same outlier detection model to the test data. Do not re-fit or loosen its parameters to accommodate test anomalies. If you observe a spike in outliers, this likely indicates your training distribution is incomplete.
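The same fit-once, apply-everywhere pattern, sketched with scikit-learn's OneClassSVM on synthetic data. The nu and gamma values are illustrative assumptions, not recommendations.

```python
import numpy as np
from sklearn.svm import OneClassSVM

rng = np.random.default_rng(0)
X_train = rng.normal(size=(200, 2))

# The detector is fitted once, on training data; its parameters stay fixed.
detector = OneClassSVM(nu=0.05, gamma="scale").fit(X_train)

X_test = np.array([[0.0, 0.0], [8.0, 8.0]])
labels = detector.predict(X_test)  # +1 = inlier, -1 = outlier
outlier_rate = np.mean(labels == -1)
print(labels, outlier_rate)
```

If the fraction of test points flagged as -1 is much higher than the small fraction (bounded by nu) flagged on the training data, treat that as evidence that the training data is incomplete rather than refitting the detector on the test set.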

How do you document and maintain multiple versions of the cleaning pipeline if your data changes over time?

In real-world production systems, data distributions can evolve, and you may update your cleaning or preprocessing steps as you discover new anomalies or domain-specific nuances. To ensure consistency, you need version control for your data cleaning pipeline just as you would for code. This might mean tagging each release of your data pipeline with a specific version identifier. Then, the same pipeline version is used for both the training data and the test data. If an update is necessary, you create a new version of the pipeline and retrain your model from scratch (or fine-tune if your approach allows it) using the updated transformations, then apply those same transformations to a newly partitioned test set.

A major pitfall is having multiple production systems out in the field that each apply slightly different cleaning transformations. Without careful versioning, you risk mismatch between how your training set was processed and how incoming new data is processed. The solution is a well-documented pipeline that logs the exact set of transformations and parameters used.
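One lightweight way to tie a fitted cleaning pipeline to a version identifier, assuming joblib (installed alongside scikit-learn) and a hypothetical tag "cleaning-v2", is to persist the fitted pipeline and its version together and to check the tag before transforming any new data. Real systems would typically use a model registry or artifact store; the temporary directory here is only for the example.

```python
import os
import tempfile

import joblib
import numpy as np
from sklearn.impute import SimpleImputer
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

# Hypothetical version tag; any scheme (semver, git hash) would work.
PIPELINE_VERSION = "cleaning-v2"

X_train = np.array([[1.0, np.nan], [2.0, 4.0], [3.0, 6.0]])
X_test = np.array([[4.0, np.nan]])

cleaning = Pipeline([
    ("impute", SimpleImputer(strategy="median")),
    ("scale", StandardScaler()),
]).fit(X_train)

# Persist the fitted pipeline together with its version tag.
path = os.path.join(tempfile.mkdtemp(), f"{PIPELINE_VERSION}.joblib")
joblib.dump({"version": PIPELINE_VERSION, "pipeline": cleaning}, path)

# Later (evaluation or serving): load, verify the version, then transform.
artifact = joblib.load(path)
if artifact["version"] != PIPELINE_VERSION:
    raise ValueError(f"expected {PIPELINE_VERSION}, got {artifact['version']}")
X_test_clean = artifact["pipeline"].transform(X_test)
print(X_test_clean)
```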

If data collection tools change mid-project, how do you ensure your test cleaning still matches training cleaning?

It’s not uncommon for organizations to upgrade their data collection methods, add new sensors, or adopt different logging formats. If the test data was gathered post-upgrade, it might have different data types, sampling rates, or feature encoding. Ideally, you update the entire pipeline to handle these changes, then re-collect or re-label data in a way that is consistent. However, if you only discover the change late in the process, you still must strive to replicate the same transformations (e.g., the same normalization strategies, the same tokenization approach) if feasible.

When the new data format is incompatible with the old pipeline, the correct approach is often to unify data schemas, converting older data or newly arrived data into a common format and then re-running the pipeline from scratch. A major misstep would be to have one pipeline for older training data and a different pipeline for new test data. This will compromise the validity of your final model evaluation.
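A minimal sketch of schema unification with pandas. The column names temp_f and temperature_c, and the assumption that the old collector logged Fahrenheit while the upgraded one logs Celsius, are hypothetical. Both formats are converted to one canonical schema before any cleaning pipeline is fitted or applied.

```python
import pandas as pd

# Hypothetical formats from the old and the upgraded data collector.
old_batch = pd.DataFrame({"temp_f": [68.0, 77.0]})
new_batch = pd.DataFrame({"temperature_c": [22.0, 30.0]})

def to_common_schema(df: pd.DataFrame) -> pd.DataFrame:
    """Convert either collector format into one canonical schema."""
    if "temperature_c" in df.columns:
        return df[["temperature_c"]].copy()
    if "temp_f" in df.columns:
        # Fahrenheit to Celsius.
        return pd.DataFrame({"temperature_c": (df["temp_f"] - 32.0) * 5.0 / 9.0})
    raise ValueError(f"unrecognised schema: {list(df.columns)}")

common = pd.concat(
    [to_common_schema(old_batch), to_common_schema(new_batch)], ignore_index=True
)
print(common)
```

Once every batch passes through to_common_schema, a single cleaning pipeline can be fitted on the training portion and applied unchanged to the test portion.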