ML Interview Q Series: Suppose we intend to create a new algorithm for Lyft Line. How could we run appropriate tests to validate its performance, define a success metric, and eventually deploy it to users?


Comprehensive Explanation

One of the key priorities when developing a shared-ride service like Lyft Line is to validate any new algorithm thoroughly before releasing it to real customers. There are generally three core steps:

• Experimentation (testing and evaluation) • Measuring success with relevant metrics • Implementation and gradual rollout

Below is a detailed look at these steps.

Designing the Experimental Setup

A robust approach involves running controlled experiments (commonly A/B tests) to compare the performance of the new algorithm against the current production algorithm (the control). In real-world ride-sharing, the system is complex, with dynamic demand and supply, so ensuring that the experiment is unbiased and representative is crucial. Key considerations include:

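• Randomized Assignment: A random set of users or geographic regions should be served by the new algorithm, while others remain with the existing setup. Because riders in the same area compete for the same pool of drivers, randomizing by region or time window is often preferred over randomizing individual riders, since it limits interference between the two groups. • Sufficient Sample Size: Make sure enough rides are allocated to the test group so that differences in performance can be measured confidently. • Isolation of Factors: Introduce only this new algorithmic change (rather than mixing it with multiple updates at once).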
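As a rough guide to the "sufficient sample size" point, the standard two-sample normal approximation can be used. The sketch below assumes equal group sizes, a roughly known standard deviation of wait times, and a two-sided test; the function name and the example numbers are illustrative, not taken from any real Lyft data.

```python
import math
from statistics import NormalDist


def rides_per_group(sigma, min_detectable_diff, alpha=0.05, power=0.8):
    """Approximate rides needed in EACH group to detect a difference in means.

    Uses the normal-approximation formula
        n = 2 * (z_{1 - alpha/2} + z_{power})^2 * sigma^2 / delta^2
    sigma and min_detectable_diff must be in the same units (e.g. minutes).
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_power = NormalDist().inv_cdf(power)
    n = 2 * (z_alpha + z_power) ** 2 * sigma ** 2 / min_detectable_diff ** 2
    return math.ceil(n)


# Hypothetical numbers: wait times with a 4-minute standard deviation,
# and we want to detect a 0.5-minute change in the mean.
print(rides_per_group(sigma=4.0, min_detectable_diff=0.5))
```

If randomization is done by region or time window rather than by ride, rides within a unit are correlated, so the effective sample size is smaller than the raw ride count and this figure should be read as a lower bound.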

Metrics for Measuring Success

When deciding how to measure success, it is important to define metrics that accurately capture both user satisfaction and business impact. In the context of a ride-sharing algorithm, these might include:

• Average Wait Time (time between request and pickup) • Trip Duration (time from pickup to drop-off, factoring in possible detours) • Cost-Efficiency for both the company and drivers (e.g., fewer deadhead miles, better occupancy rates) • Cancellation Rates (driver cancellations, rider cancellations) • User Satisfaction (ratings, retention, or Net Promoter Score)

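A common performance indicator to track is the average passenger wait time. This can be represented as follows:

$$\text{AvgWaitTime} = \frac{1}{N} \sum_{i=1}^{N} \left(\text{PickupTime}_i - \text{RequestTime}_i\right)$$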

Where N is the total number of ride requests considered in the experiment; PickupTime_i is the actual time the i-th rider is picked up; RequestTime_i is the moment the i-th rider initially requested the ride. This expression shows, for each ride, how long the passenger waited, and then calculates the average across all rides.

Alongside this, the following quantities might also be monitored:

• total driver idle_time • average cost_per_mile

Rolling Out the Algorithm

After analyzing the experimental data:

• Phase 1: Pilot in a Single Market: If results look promising, begin with one small geographical market or sub-population of riders. • Phase 2: Gradual Scale: Expand the rollout incrementally to more cities or user segments, continuously checking metrics (wait time, satisfaction, etc.). • Phase 3: Full Deployment: Once stable performance and better metrics than the control are consistently observed, deploy system-wide.

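To reduce risk, canary or shadow deployments may be used. In a canary deployment, the new system serves a small slice of live traffic while its metrics are watched closely. In a shadow deployment, it runs silently in the background, making predictions without acting on them, so its outputs can be compared with the legacy system in real time.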
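One common way to implement the phased rollout described above is deterministic hash bucketing. The sketch below is an assumption about how this could be done, not a description of Lyft's system; `in_rollout`, the salt string, and the rider IDs are all hypothetical.

```python
import hashlib


def in_rollout(unit_id: str, rollout_percent: float, salt: str = "line-algo-v2") -> bool:
    """Return True if this unit (rider, region, ...) should get the new algorithm.

    The unit is hashed into one of 10,000 buckets; units whose bucket falls
    below the rollout threshold are served by the new algorithm. Raising
    rollout_percent only adds units, so nobody flips back to the old system.
    """
    digest = hashlib.sha256(f"{salt}:{unit_id}".encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % 10_000
    return bucket < rollout_percent * 100


# Simulate ramping from 1% to 100% over a hypothetical rider population.
riders = [f"rider-{i}" for i in range(20_000)]
for pct in (1, 10, 50, 100):
    share = sum(in_rollout(r, pct) for r in riders) / len(riders)
    print(f"target {pct}% -> observed {share:.1%}")
```

Because assignment depends only on the ID and the salt, the same rider gets the same experience across sessions, and changing the salt reshuffles the population for a new experiment.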

Implementation Details and Example

In Python, a pseudo-code snippet for measuring average wait time across experimental rides might look like:

```python
import statistics


def compute_average_wait_time(rides_data):
    """
    rides_data: list of tuples (request_time, pickup_time)
    """
    # Wait time for each ride is pickup time minus request time.
    wait_times = []
    for request_time, pickup_time in rides_data:
        wait_times.append(pickup_time - request_time)
    return statistics.mean(wait_times)


# Example usage
experiment_rides = [(10, 15), (22, 28), (30, 36)]  # hypothetical times
avg_wait = compute_average_wait_time(experiment_rides)
print("Average wait time in experiment:", avg_wait)
```

This focuses solely on wait times, but in a production environment, you would gather many more metrics, such as cost, occupancy, or user-reported feedback, and store all those metrics in a data pipeline for analytics.
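To decide whether a difference in average wait time between control and treatment is more than noise, a two-sample comparison is typically run. The following is a minimal sketch using only the standard library; it uses Welch's unequal-variance standard error with a normal approximation for the p-value, which is an assumption that holds only for large samples. The data are made up.

```python
import math
from statistics import NormalDist, mean, variance


def compare_wait_times(control, treatment):
    """Welch-style comparison of mean wait time between two groups.

    Returns (difference in means, z statistic, two-sided p-value).
    The p-value uses a normal approximation, which is reasonable only
    when both groups contain many rides.
    """
    diff = mean(treatment) - mean(control)
    std_err = math.sqrt(variance(control) / len(control)
                        + variance(treatment) / len(treatment))
    z = diff / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return diff, z, p_value


# Made-up wait times in minutes, for illustration only.
control_waits = [5.1, 6.3, 4.8, 7.0, 5.9, 6.4, 5.5, 6.1]
treatment_waits = [4.6, 5.2, 4.9, 5.8, 5.0, 5.4, 4.7, 5.3]
diff, z, p = compare_wait_times(control_waits, treatment_waits)
print(f"diff={diff:.2f} min, z={z:.2f}, p={p:.3f}")
```

With samples this small, a t-distribution would be the more appropriate reference; the tiny lists here only keep the example readable.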

Possible Follow-up Questions

How do we handle the possibility that A/B tests might interfere with each other if multiple experiments are running simultaneously?

When several experiments run in parallel, they can overlap or interact in ways that contaminate the results. For instance, two experiments might both affect how drivers are dispatched, confusing which effect results from which experiment. To address this: • Use mutually exclusive user groups or regions for different experiments. • Carefully track interactions so you can statistically disentangle individual effects. • Limit the number of concurrent experiments that might conflict on the same part of the system.

Why might average wait time not be enough to measure success?

Although average wait time is an important indicator of how quickly passengers are being served, it can neglect other vital aspects, such as driver earnings or operational costs. A system that minimizes wait times but significantly raises company costs might be unsustainable. Similarly, low wait time does not necessarily ensure user satisfaction if the final route length is too long or the fare is too high. Hence, consider a comprehensive set of metrics, including driver-related measures (e.g., driver satisfaction, idle time), rider satisfaction scores, cost-to-serve, and overall utilization.

How can we ensure that the test results generalize to real-world scenarios?

• Conduct a large, randomized experiment across diverse locations and rider profiles to capture different rider behaviors and traffic patterns. • Run tests over sufficiently long periods of time, covering different times of day and even different days of the week, to reduce bias from specific traffic peaks or unusual events (e.g., holidays, storms). • Re-evaluate post-deployment with continuous monitoring and, if necessary, rollback if metrics degrade.

What strategies help mitigate risk when rolling out major changes to a live platform?

• Gradual Rollouts: Start with a small percentage of traffic; if metrics remain stable, slowly ramp up. • Real-time Monitoring: Implement dashboards and alerting systems for key metrics to catch regressions promptly. • Rollback Plans: Always keep the old version accessible so you can revert in case of unforeseen issues.
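These three strategies can be tied together with an automated guardrail check. The sketch below is one possible shape for it, under the assumption that every tracked metric is "lower is better" and that control values are non-zero; the metric names and the 5% tolerance are hypothetical.

```python
def rollout_decision(control, treatment, max_regression=0.05):
    """Decide whether to ramp up, hold, or roll back.

    control / treatment: dicts mapping metric name -> value, where LOWER is
    better for every metric (e.g. wait time, cancellation rate).
    max_regression: tolerated relative worsening before rolling back.
    """
    regressions = {}
    for name, base in control.items():
        change = (treatment[name] - base) / base  # relative change vs control
        if change > 0:
            regressions[name] = change
    if any(c > max_regression for c in regressions.values()):
        return "rollback", regressions
    if regressions:
        return "hold", regressions
    return "ramp_up", regressions


control = {"avg_wait_min": 6.0, "cancel_rate": 0.08}
treatment = {"avg_wait_min": 5.4, "cancel_rate": 0.081}
print(rollout_decision(control, treatment))
```

In practice the comparison would also account for statistical noise rather than reacting to any positive change; this version only shows the decision structure.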

Could offline experiments with historical data replace the need for online A/B tests?

Offline experiments on historical data can indeed provide a preliminary sense of how the new algorithm might perform and can be cheaper and faster to iterate. However, they do not capture real-world rider behavior shifts (for example, new route options may change user acceptance patterns in ways that differ from historical data). Hence, offline evaluations are useful for early-stage validation, but an online test remains the definitive method for measuring real user response.

What if the updated algorithm improves wait times but increases driver churn?

Driver churn can rise if, for example, route assignments become less profitable for certain drivers. In a marketplace system like Lyft, balancing rider and driver happiness is vital. This scenario highlights the need to track multiple metrics, not only wait times. A final go/no-go decision to deploy system-wide should weigh the trade-off between user benefits and driver retention. If driver churn is too high, it can lead to long-term negative effects such as reduced supply, eventually degrading user experience.

How might we handle cold-start issues for new users?

For brand-new riders with no history or usage patterns, a matching algorithm might not have enough data to accurately predict preferences or wait-time tolerance. Possible solutions include: • Default Heuristics: Use simple strategies until the system gathers enough user data. • Contextual Clues: Geographic data, time of day, or demographic patterns may help initial estimates. • Progressive Personalization: Adapt to user behavior over time, offering more optimized matches after each ride.
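Progressive personalization can be approximated by shrinking a rider's own average toward a global default until enough rides accumulate. This is a sketch of that idea under simple assumptions; `blended_wait_tolerance` and `prior_strength` are illustrative names, and the "tolerance" values stand in for whatever per-rider signal the system actually estimates.

```python
def blended_wait_tolerance(user_waits, global_mean, prior_strength=5):
    """Estimate a rider's wait-time tolerance (minutes).

    With no history the estimate equals the global mean; as the rider takes
    more trips, their own average gradually dominates. prior_strength is the
    number of "pseudo-rides" the global mean is worth.
    """
    n = len(user_waits)
    return (prior_strength * global_mean + sum(user_waits)) / (prior_strength + n)


print(blended_wait_tolerance([], global_mean=6.0))         # brand-new rider
print(blended_wait_tolerance([2.0] * 5, global_mean=6.0))  # after five rides
```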

These questions test not only your technical ability to build and evaluate ride-sharing algorithms but also your capacity to foresee real-world challenges and align business metrics with engineering solutions.

Below are additional follow-up questions

How do we ensure the algorithm is fair across different neighborhoods or demographic groups?

Fairness can be challenging when managing a ride-sharing platform that serves diverse populations. The algorithm might inadvertently favor areas with higher density or more profitable trips, leading to longer wait times or lower service levels in less dense or lower-income regions.

One approach is to track metrics that compare service levels across demographic segments or geographic zones. For instance, measuring the average wait time for each neighborhood and ensuring there is no significant disparity can help. Another strategy might be capping maximum wait times to avoid extremely long waits in underserved areas.

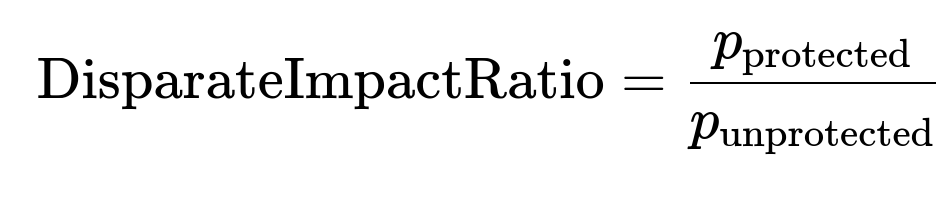

A useful yardstick for fairness is to measure something akin to a “disparate impact ratio.” If we denote the probability of a favorable outcome (for example, receiving a ride with a minimal wait) for a protected group as p_protected and for a non-protected group as p_unprotected, then:

$$\text{DisparateImpactRatio} = \frac{p_{\text{protected}}}{p_{\text{unprotected}}}$$

When this ratio is significantly below 1, it might indicate a fairness gap. Potential pitfalls include: • Limited data for certain demographic groups, making fairness estimates noisy. • Conflicting objectives (cost vs. fairness) that might require multi-objective optimization. • The need to continuously retrain models so that fairness is not just a one-time fix but a sustained commitment.
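The ratio of favorable-outcome probabilities described above can be estimated directly from observed wait times. In this sketch, a "favorable outcome" is assumed to mean a pickup within a fixed threshold; the 8-minute threshold and the sample data are hypothetical.

```python
def disparate_impact_ratio(waits_protected, waits_unprotected, threshold_min=8.0):
    """Ratio of favorable-outcome rates between two groups.

    A "favorable outcome" here is a pickup within threshold_min minutes.
    Raises ZeroDivisionError if the unprotected group has no favorable outcomes.
    """
    def favorable_rate(waits):
        return sum(w <= threshold_min for w in waits) / len(waits)

    return favorable_rate(waits_protected) / favorable_rate(waits_unprotected)


# Made-up wait times in minutes for two groups of riders.
protected = [5, 9, 12, 7, 10]    # 2 of 5 within 8 minutes
unprotected = [4, 6, 7, 9, 5]    # 4 of 5 within 8 minutes
print(disparate_impact_ratio(protected, unprotected))
```

With small groups the estimate is noisy, which is the first pitfall listed above, so a confidence interval or a minimum group size is advisable before acting on the number.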

How might drivers attempt to game or manipulate the new algorithm?

A new matching or routing system can introduce incentives that drivers exploit. Examples include: • Accepting rides only in high-demand zones to boost earnings. • Manipulating location data if the system incorrectly rewards false proximity to surge areas. • Cancelling requests that do not appear profitable, leading to passenger dissatisfaction.

To counter these issues: • Build in robust checks and validations (e.g., verifying driver GPS signals across multiple pings). • Impose penalties or warnings for too many cancellations or suspicious activity. • Employ real-time anomaly detection models that look for outlier behaviors compared to baseline driver patterns.
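As one simple form of the anomaly detection mentioned above, drivers can be compared against the fleet using a robust outlier score. This is a minimal sketch based on the median absolute deviation; the 3.5 cutoff is a commonly used rule of thumb, and the driver IDs and rates are invented.

```python
from statistics import median


def flag_unusual_cancellers(cancel_rates, threshold=3.5):
    """Flag drivers whose cancellation rate is an outlier versus the fleet.

    cancel_rates: dict of driver_id -> cancellation rate in [0, 1].
    Uses the modified z-score based on the median absolute deviation (MAD),
    which is less distorted by the outliers themselves than mean/stdev.
    Only unusually HIGH rates are flagged.
    """
    rates = list(cancel_rates.values())
    med = median(rates)
    mad = median(abs(r - med) for r in rates)
    if mad == 0:
        return []  # no spread in the data; nothing can be called an outlier
    return [
        driver
        for driver, rate in cancel_rates.items()
        if 0.6745 * (rate - med) / mad > threshold
    ]


fleet = {"d1": 0.05, "d2": 0.07, "d3": 0.06, "d4": 0.04, "d5": 0.55, "d6": 0.05}
print(flag_unusual_cancellers(fleet))
```

A flag like this is best treated as a prompt for review rather than an automatic penalty, since legitimate drivers can have unusual weeks.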

Edge cases might arise if legitimate drivers end up flagged or if unscrupulous drivers discover new exploits. Thorough testing, combined with continuous monitoring and frequent driver feedback, helps keep the system honest.

What if the algorithm inadvertently causes bias or discrimination?

Algorithmic bias can slip in when the training data or system design reflects historical disparities. For instance, a model trained on trip completion rates might learn to deprioritize certain neighborhoods if drivers historically avoided them.

Mitigation strategies include: • Regularly auditing the training data for skew and proactively supplementing underrepresented examples. • Using fairness-aware objective functions that penalize large discrepancies in service quality across regions or demographics. • Conducting post-deployment analyses on real-world outcomes to detect emergent biases not visible in offline data.

A subtle issue is that improvements in one metric (e.g., average wait times) might inadvertently worsen bias if certain areas are deprioritized for overall gain in the metric.

How do we handle spikes in demand, such as major events or rush hour?

Peak demand scenarios, such as festivals, sporting events, or daily rush hours, stress-test a system’s capacity. A new algorithm might outperform in regular conditions yet fail under surges. For instance, the model might overcommit the same driver to too many ride pickups, or fail to account for slowed traffic in gridlocked areas.

Best practices: • Conduct stress tests with simulated or historical high-demand data to see if the matching or pricing system becomes overloaded. • Introduce protective measures like demand-based surge pricing or temporary ride caps per driver. • Implement fallback heuristics (e.g., simpler rule-based assignments) if the advanced model is too slow or unstable under load.

Edge cases include sudden weather changes or multiple city-wide events on the same date. Building robust fail-safes ensures that your new algorithm degrades gracefully instead of collapsing entirely.

Could there be unintended effects on the environment or traffic congestion?

Ride-pooling solutions like Lyft Line can, in theory, reduce the number of cars on the road by matching multiple passengers. However, if the new algorithm starts sending vehicles on longer detours to pick up additional riders, it might increase overall vehicle miles traveled and worsen congestion or emissions.

Mitigation measures: • Track metrics like total miles traveled or average occupancy of each ride. • Incorporate an environmental cost component into your objective (for example, a penalty on unnecessary extra miles). • Conduct scenario analyses to see if a seemingly efficient algorithm in terms of wait times inadvertently escalates total miles on the road.

A tricky corner case is when marginally adding one extra passenger saves them from calling a separate car, but extends the route so much that the total miles outstrip the benefit.
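That corner case reduces to a small piece of arithmetic: pooling helps only if the detour is shorter than the separate trip it replaces. The sketch below makes the comparison explicit; the function name and mileage figures are illustrative assumptions.

```python
def pooling_saves_miles(route_without, route_with, solo_trip_miles):
    """Check whether adding a rider to a pooled trip reduces total vehicle miles.

    route_without: miles the pooled vehicle drives without the extra rider
    route_with: miles it drives after detouring to serve the extra rider
    solo_trip_miles: miles a separate car would drive for that rider alone
                     (including its deadhead to the pickup)
    Returns (saves_miles, net_miles_saved).
    """
    detour = route_with - route_without
    return detour < solo_trip_miles, solo_trip_miles - detour


print(pooling_saves_miles(10.0, 12.5, 4.0))  # short detour: pooling helps
print(pooling_saves_miles(10.0, 16.0, 4.0))  # long detour: pooling adds miles
```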

How do we address model drift over time?

Model drift is usually driven by data drift, which occurs when the underlying patterns that the algorithm relies on shift. For example, changes in traffic flow, new rider behaviors, or the advent of new user segments can degrade the performance of a once-accurate model.

Countermeasures: • Continuous retraining with recent data so the model stays aligned with current trends. • Monitoring key performance metrics (average wait time, route efficiency) for consistent drops or anomalies. • Versioning models to revert quickly to a stable previous version if a new retrained model performs worse in production.
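The monitoring countermeasure can start as something very simple: compare a recent window of a key metric against a baseline period. The following sketch assumes wait times are roughly stationary within the baseline and uses a z-score on the window mean; the threshold of 3 and the sample values are hypothetical.

```python
import math
from statistics import mean, stdev


def wait_time_drifted(baseline, recent, z_threshold=3.0):
    """Return True if the recent mean wait time has shifted away from baseline.

    Compares the recent window's mean against the baseline mean, scaled by the
    standard error implied by the baseline's spread.
    """
    std_err = stdev(baseline) / math.sqrt(len(recent))
    z = (mean(recent) - mean(baseline)) / std_err
    return abs(z) > z_threshold


baseline = [5.0, 6.0, 5.5, 6.5, 5.8, 6.2, 5.4, 6.1]  # minutes, made up
print(wait_time_drifted(baseline, [5.7, 6.0, 5.9, 5.6]))  # similar to baseline
print(wait_time_drifted(baseline, [7.9, 8.4, 8.1, 7.6]))  # clearly higher
```

A check on the metric alone cannot say why it moved, so an alert like this would normally trigger a look at input distributions and recent releases before any retraining.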

Edge cases might arise if the model updates at an inopportune time (e.g., during the holiday season with abnormal traffic patterns) or if a new city is added with very different rider behaviors, necessitating specialized model tuning.

Could third-party changes (like new competitor strategies or altered road regulations) impact our rollout?

Yes. External factors that the model did not anticipate can cause performance to deteriorate. Examples include: • A new competitor offering cheaper rides, which changes demand patterns. • Government regulations limiting ride-sharing in certain districts or instituting road tolls. • Additional data sources becoming available or existing data becoming restricted (e.g., privacy law changes).

To handle these effectively: • Maintain awareness of the regulatory environment, and build contingency rules or fallback pricing strategies if new laws appear. • Design the algorithm to be modular, so changes in external data or third-party APIs do not break the entire system. • Keep a strategic reserve of operational levers (like surge price modifications or driver incentive adjustments) to quickly adapt to sudden market changes.

One subtle issue is underestimating how quickly a competitor’s new feature can shift demand. If an event-based competitor discount draws away riders, data drift can escalate, and the new Lyft Line algorithm might need immediate adaptation to retain riders.

How can we manage user privacy while collecting highly granular location and trip data?

Location data is highly sensitive, and storing precise latitude and longitude over time can create privacy risks. When building and testing new route-matching algorithms: • Use strict access controls so only authorized systems or individuals can view raw location data. • Aggregate or anonymize data to train models (e.g., removing rider IDs and only preserving necessary context like zip code or time-of-day patterns). • Comply with relevant data protection regulations, such as GDPR or CCPA, especially if data is collected across international boundaries.
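A minimal sketch of the "aggregate or anonymize" step is shown below, assuming rider IDs are replaced by a keyed hash and coordinates are rounded before the data reaches model training. The function and field names are hypothetical, and the secret key is a placeholder that would come from a secrets manager in practice.

```python
import hashlib
import hmac


def pseudonymize_trip(rider_id, lat, lon, secret_key, decimals=2):
    """Replace the rider ID with a keyed hash and coarsen the coordinates.

    Rounding to 2 decimal places keeps precision on the order of 1 km.
    This is pseudonymization, not full anonymization: coarse locations can
    still be identifying in sparse areas.
    """
    token = hmac.new(secret_key, rider_id.encode("utf-8"), hashlib.sha256).hexdigest()
    return {"rider_token": token, "lat": round(lat, decimals), "lon": round(lon, decimals)}


record = pseudonymize_trip("rider-42", 37.774929, -122.419416, secret_key=b"placeholder-key")
print(record)
```

Using a keyed hash (HMAC) rather than a plain hash means that someone who knows a rider ID cannot recompute the token without also holding the key.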

The biggest pitfall is that any data leak or misuse can harm user trust and risk legal repercussions. Balancing personalization benefits with privacy compliance requires carefully thought-out data architectures, privacy impact assessments, and encryption of data at rest and in transit.

How do we handle user feedback and complaints if the new matching system causes issues?

Despite careful testing, real-world complexities might lead to unforeseen user dissatisfaction. Examples: • Riders complaining about longer detours to pick up additional passengers. • Frequent mismatches where a driver not suitable for certain route constraints is assigned. • Inaccurate predicted pickup times leading to frustration.

Managing user feedback: • Offer in-app channels to report negative experiences directly tied to multi-rider matching or unusual routes. • Automate the process of triaging and categorizing complaints to identify systematic algorithmic issues. • Release quick patches or model updates (if an identifiable pattern emerges) and follow up with riders and drivers impacted.

In worst-case scenarios, a popular social media post might highlight a glitch, damaging brand reputation. Being prepared with transparent communication and rapid remediation is vital in these edge cases.

What measures should be taken to ensure the real-time system remains fast and reliable?

Latency is crucial in ride-share apps, as decisions must be made quickly in response to dynamic conditions. A new advanced algorithm that is too computationally heavy could slow the system: • Consider approximate or heuristic-based solutions if the optimal approach is too slow in large cities or at peak times. • Use distributed computing frameworks to handle high request volumes. • Optimize the model inference pipeline (for instance, by batching requests or employing hardware accelerators).
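To illustrate the heuristic option, the sketch below assigns each rider the nearest free driver in a single pass. It is deliberately simple and not globally optimal; straight-line distance on made-up coordinates stands in for real travel-time estimates, and all names are hypothetical.

```python
import math


def greedy_match(riders, drivers):
    """Assign each rider the nearest still-free driver (not globally optimal).

    riders / drivers: dicts of id -> (x, y) position.
    Riders are processed in insertion order; any left over once drivers
    run out remain unassigned.
    """
    free = dict(drivers)  # copy so the caller's dict is not modified
    assignment = {}
    for rider_id, (rx, ry) in riders.items():
        if not free:
            break
        nearest = min(free, key=lambda d: math.hypot(free[d][0] - rx, free[d][1] - ry))
        assignment[rider_id] = nearest
        del free[nearest]
    return assignment


riders_pos = {"r1": (0, 0), "r2": (5, 5)}
drivers_pos = {"d1": (1, 0), "d2": (4, 5), "d3": (10, 10)}
print(greedy_match(riders_pos, drivers_pos))
```

A greedy pass like this runs quickly but can produce worse total distance than an optimal assignment, which is the latency-versus-quality trade-off the bullet describes.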

A subtle pitfall is that a model that performs well on offline benchmarks might fail in production if it cannot generate route assignments fast enough, leading to degraded user experience and potential outages under high demand.

What if stakeholders disagree on the metrics of success?

Different teams may value different success metrics: product managers might focus on user retention, operations might prioritize cost reduction, and data scientists may want algorithmic efficiency. If an algorithm improves some metrics but damages others, there could be internal conflict on whether to move forward.

Resolution: • Organize cross-functional meetings to align on a core set of metrics and relative priorities (e.g., some weighting of user wait time, driver earnings, business costs, fairness). • Use multi-objective optimization to strike a balance rather than maximizing a single measure. • Maintain clear documentation on how trade-offs are made so all stakeholders understand the rationale behind final decisions.
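The simplest form of the weighting idea is a weighted sum of relative improvements, which turns several metrics into one agreed score. This is only a sketch of that scalarization approach; the metric names, weights, and changes are hypothetical and would come out of the cross-functional alignment described above.

```python
def composite_score(relative_changes, weights):
    """Weighted sum of relative metric improvements (positive = better).

    relative_changes: metric -> fractional improvement versus control,
                      already signed so that positive means "better".
    weights: metric -> importance agreed by stakeholders; must sum to 1.
    """
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    return sum(weights[m] * relative_changes[m] for m in weights)


weights = {"wait_time": 0.4, "driver_earnings": 0.3, "cost": 0.2, "fairness": 0.1}
changes = {"wait_time": 0.10, "driver_earnings": -0.02, "cost": 0.03, "fairness": 0.0}
print(f"{composite_score(changes, weights):+.3f}")
```

A single score can hide a serious regression in one metric, so it is usually paired with per-metric guardrails rather than used alone.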

Edge cases include newly discovered metrics post-deployment (like congestion or environmental impact) that might cause reevaluation of earlier priorities. This process can lead to iteration on how success is defined and measured.