ML Interview Q Series: Suppose you’re constructing a classification model and want to reduce overfitting issues in tree-based methods. How would you address that challenge?

📚 Browse the full ML Interview series here.

Comprehensive Explanation

Tree-based methods, such as decision trees and their ensemble variants (random forests, gradient boosting, and so on), can easily overfit by creating overly complex trees. Overfitting occurs when the model memorizes training data patterns, including noise, and loses its ability to generalize to unseen data. A key strategy is to regularize the tree-building process through constraints, pruning, and ensemble techniques.

Decision trees split data at internal nodes based on some impurity measure. Common impurity metrics for classification are entropy and Gini index. When using the entropy measure, the impurity for a dataset S with K classes can be expressed as:

Here p_k is the proportion of samples belonging to class k in S, and K is the total number of classes. The sign is negative because p_k log_2 p_k is negative for values 0 < p_k < 1.

A tree may continue splitting until each leaf contains only one sample or all samples belong to the same class. This extreme scenario usually indicates overfitting, because the model is tailored too closely to the training set’s specific characteristics.

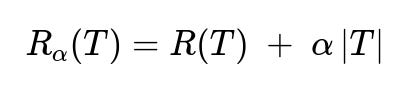

A common solution is cost complexity pruning, where a complexity term penalizes the size of the tree. The cost complexity measure for a tree T can be written as:

Here R(T) is the classification error (or another measure of training set error) of the tree T, |T| is the number of terminal nodes (leaves), and α is a regularization parameter that controls the trade-off between having a simpler tree and a tree that fits training data well. Larger α emphasizes smaller trees, and smaller α allows deeper, more complex trees.

In practice, you can also limit the growth of the tree by specifying parameters like max_depth (the maximum depth of the tree), min_samples_split (the minimum number of samples required to split an internal node), and min_samples_leaf (the minimum number of samples required to be at a leaf node). These constraints help prevent the tree from modeling noise in the training data.

Ensemble methods further reduce overfitting risks. A random forest, for example, trains many trees on bootstrap samples of the dataset and then averages their outputs. This averaging effect typically reduces variance. Gradient boosting methods iteratively refine new trees that focus on the errors of previous ones, but they also need regularization (like learning rate shrinkage, subsampling of features, and early stopping) to avoid overfitting.

Cross-validation is another powerful technique. By splitting the dataset into training and validation folds, you can monitor performance across multiple folds and select hyperparameters such that the model performs well on unseen data.

Below is a short Python code snippet illustrating how to set some of these parameters to reduce overfitting in a decision tree using scikit-learn.

from sklearn.tree import DecisionTreeClassifier

from sklearn.model_selection import cross_val_score

X = [[...], [...], ...] # feature vectors

y = [...] # labels

tree_model = DecisionTreeClassifier(

max_depth=5,

min_samples_split=10,

min_samples_leaf=5,

ccp_alpha=0.01 # cost complexity pruning parameter

)

scores = cross_val_score(tree_model, X, y, cv=5)

print("Cross-validation scores:", scores)

print("Mean CV score:", scores.mean())

In this example, max_depth restricts how deep the tree can grow, min_samples_split sets how many samples must be in a node before a split is attempted, min_samples_leaf sets the minimum number of samples in a leaf, and ccp_alpha directly introduces pruning based on the cost complexity measure.

How Do Regularization Parameters Interact with Tree Depth?

Regularization parameters such as min_samples_split, min_samples_leaf, and max_depth work together to reduce the complexity of the tree structure. If max_depth is small, the tree will not have excessively deep paths, and the training set errors might increase slightly, but the model is less likely to overfit. Larger values for min_samples_leaf or min_samples_split ensure that splits occur only when there are enough samples to justify partitioning the data further.

What About Bagging Versus Boosting to Mitigate Overfitting?

Bagging methods, such as random forests, average multiple independently trained trees. Because each tree is trained on different bootstrap samples, the variance is reduced without increasing bias drastically. Boosting methods fit new models to the residual errors of previous models. Gradient boosting can potentially overfit if the number of boosting stages is very large or if the learning rate is not set appropriately, but tuning parameters like learning_rate, subsample, and max_depth helps mitigate overfitting.

Could Cross-Validation Itself Prevent Overfitting?

Cross-validation does not directly prevent overfitting; instead, it measures how well a model generalizes to new data by simulating multiple train-validation splits. It helps detect overfitting by showing a discrepancy between training performance and validation performance. If validation scores are significantly lower than training scores, you know that the model might be overfitting. Cross-validation also helps in choosing hyperparameters that generalize better across folds.

When Would a Large Tree Actually Perform Well?

A large tree can perform well if the training dataset is extremely large and the underlying relationships are genuinely complex. In scenarios with vast amounts of data, a deeper tree may capture real structure rather than noise. Nevertheless, even in that situation, it is prudent to validate performance on a held-out set or use cross-validation to confirm that the added complexity is not simply fitting noise.

Below are additional follow-up questions

In What Ways Could Restricting Tree Depth Too Aggressively Lead to Underfitting, and How Would You Detect It?

Underfitting can occur if you set max_depth to a value that prevents the tree from splitting enough times to capture important structure in the data. For instance, if there are genuinely complex interactions between features, limiting depth too severely can cause the tree to miss these relationships, resulting in poor training and validation performance. Typically, you can detect underfitting when both training and validation accuracies are relatively low. A practical approach is to gradually increase max_depth and evaluate performance on a validation set (or via cross-validation). If performance on both training and validation sets consistently improves with slightly deeper trees, it suggests that the model was underfitting at a shallower depth.

Pitfalls or edge cases include data with strong nonlinear interactions or large feature spaces where the interactions are crucial to classification. In those scenarios, capping max_depth too aggressively fails to capture the necessary splits, causing the model to oversimplify.

How Does Highly Correlated or Redundant Features Affect Overfitting in Decision Trees and Tree Ensembles?

Decision trees naturally handle correlated features by often picking one correlated feature as a split early and ignoring the others (because once a dominant feature split is made, correlated features do not provide much further gain). However, in ensembles like random forests where each tree sees a random subset of features, correlated or redundant features might still lead to more complex trees if those features appear frequently in different trees. This could inadvertently capture noise or spurious patterns.

A subtle pitfall is when correlated features are not just correlated with each other but also with the target in inconsistent ways across subgroups of data. The model might chase these inconsistent relationships, leading to overfitting. You can mitigate this by: • Performing a correlation analysis and removing or combining highly redundant features. • Using dimensionality reduction, if appropriate, to avoid having a large set of nearly duplicate predictors. • Checking the importance scores of features in the trained model—if multiple correlated features all have high importance, it may be a sign of inflated importance due to correlated splits.

Can You Rely on Model Interpretability to Diagnose Overfitting or Underfitting in Tree-Based Models?

Interpretability in tree-based models often comes from measures such as feature importance or from examining individual splits in a single decision tree. In large ensembles like random forests or gradient boosting, individual tree paths might be too many and too complex for clear interpretability. A highly interpretable single decision tree is often small and shallow, which might underfit. Conversely, a very large tree might “look” like it fits the data meticulously, but that alone does not confirm if it’s overfitting or genuinely capturing important interactions.

One edge case is when the data truly has many complex interactions and non-linear relationships. A large tree can appear overfitted but might actually be correct. Conversely, a small tree might appear more interpretable but miss important structure. Thus, interpretability alone is not a reliable indicator of overfitting or underfitting. You still need quantitative measures, such as validation loss or cross-validation scores, to confirm whether the model generalizes well.

How Should Overfitting Be Handled When the Training Data Is Extremely Imbalanced?

With severe class imbalance, the tree might predominantly learn how to split the majority class and fail to properly model the minority class, effectively overfitting to the majority. In extreme cases, the model could predict almost all samples as the majority class and still achieve high accuracy but poor recall for the minority class.

Potential mitigation strategies: • Use class weights or cost-sensitive learning to penalize misclassifications of the minority class more heavily. • Sample the data (undersampling the majority or oversampling the minority) before training. Methods like SMOTE can create synthetic samples for the minority class. • Evaluate performance using metrics like F1 score, precision/recall, or AUC-ROC. Relying solely on accuracy can mask overfitting to the majority class.

The subtlety here is that overfitting might not always be evident if you only look at accuracy. You may see strong performance numbers if the model predicts the majority class frequently. Thoroughly analyzing confusion matrices or per-class metrics is essential.

What Are Some Potential Overfitting Traps When Handling High Cardinality Categorical Features in Tree-Based Models?

High cardinality categorical features (e.g., zip codes, user IDs, or product IDs) can induce many splits, especially if the training set is large enough. A decision tree might learn extremely specific rules for rare categories that appear only a few times in the training set, leading to overfitting.

Pitfalls include: • Over-partitioning: The model could create separate leaves for each rare category, effectively memorizing each unique identifier. • Poor generalization on unseen categories: In real-world settings, new or rare categories might appear at inference time, and the model has no robust way to handle them.

Solutions: • Group rare categories into an “Other” bucket if they do not provide meaningful predictive power. • Use target encoding, but apply it carefully with regularization or cross-fold strategies to avoid leakage. • Consider hashing or dimensionality reduction for large categorical features.

When Might Pruning (Cost Complexity Pruning) Fail to Alleviate Overfitting Completely, and What Are the Remedies?

Pruning cuts back a fully grown tree by removing splits that offer only marginal improvements. However, it might fail if the original tree is already too large and has grown around noise patterns. Sometimes the pruning algorithm gets stuck in a suboptimal structure if the initial splits were heavily influenced by spurious correlations.

Remedies include: • Adjusting α (the complexity parameter) more aggressively so that more branches get pruned. • Setting constraints at the outset: for example, using min_samples_leaf or max_depth to guide tree growth so that it never overfits in the first place. • Trying ensemble methods (e.g., random forests or gradient boosting) which can mitigate some overfitting through averaging or sequential corrections. • Using more robust training/validation procedures, such as repeated cross-validation, to ensure splits leading to noise memorization are less likely to survive in all folds.

Edge cases where pruning fails often involve extremely noisy data with many irrelevant features. The initial splits might latch onto random fluctuations, making it challenging for post-hoc pruning to find an optimal tree structure.

How Do You Validate That an Overfit Model Is Truly Overfitting and Not Merely Very Accurate?

Sometimes a model can appear suspiciously high-performing, but it might simply be well-tuned for a complex dataset. To confirm overfitting, you can: • Evaluate on a hold-out set or use cross-validation. If the training performance is very high while validation performance drops substantially, that’s a classic overfitting indicator. • Check performance stability. If small changes in training data cause drastic changes in predictions, it might be a sign of an overfit model with high variance. • Consider real-world plausibility. If the model predicts with near-perfect accuracy in a domain known to be noisy or complicated, it’s wise to investigate whether it has memorized artifacts in the training data.

A subtle scenario arises in highly structured problems (like certain biological or physics-based tasks). Models can achieve near-perfect performance because the data follows well-defined rules. Verifying domain knowledge and performing thorough cross-validation are the best ways to distinguish genuine performance from overfitting.