ML Interview Q Series: Time on Site & Purchases: Establishing Causality with A/B Testing and Confounder Analysis.


Correlation vs Causation (Scenario): Suppose a dataset shows that users who spend more time on a website tend to make more purchases. Does this imply that increasing a user’s time on site will cause them to buy more? Discuss how you would investigate causality, and what confounding factors or experiments you would consider to validate the relationship.

Understanding Why Correlation Alone Does Not Imply Causation

Correlation indicates the degree to which two variables move together. If users who spend more time on a site also purchase more, these two factors are correlated. However, correlation by itself does not tell us whether one variable directly causes changes in the other. It could be that users who are already highly motivated to buy will naturally linger longer, or that a third variable (like site personalization or user demographics) drives both extended browsing and higher purchase rates.

Investigating Causality

One cannot simply conclude that pushing users to stay longer will directly lead to more purchases. To demonstrate that time spent on the site causes an increase in conversion, one should conduct studies or experiments designed to reduce biases and control for confounding variables.

Potential Confounders

Confounders are factors that affect both time on site and purchasing behavior. Some examples:

User Engagement Level: Highly interested or loyal users might spend more time reading product details or exploring reviews before making purchases. Their inherent engagement level drives both the time-on-site metric and the purchasing decision.

User Demographics: Certain demographics (e.g., younger tech-savvy users) might naturally spend more time exploring websites and also tend to convert at higher rates. Demographic differences could influence both variables without a direct causal link between them.

Content Quality or Website Experience: If the site is easier to navigate or has engaging content in certain product categories, users might stay longer and also be more likely to convert. In that case, site design or content quality drives both time on site and conversion, rather than one causing the other.

Investigating Causality Through Observational Analysis

In observational datasets, one may look for ways to tease out confounders. Statistical techniques help to isolate the relationship between time on site and purchase likelihood. One might attempt:

Propensity Score Matching: Treat a long session (e.g., one above some duration threshold) as the “treatment.” Then estimate each user's propensity score, which is the probability of having a long session given observed characteristics such as demographics, prior purchase history, and device used. Match long-session users to short-session users with similar scores, so that the main remaining difference between the matched groups is their time on site. If the matched groups show different purchase rates, it becomes more plausible that time on site has a causal role. However, this depends on the extent to which we can measure and include all relevant confounders. Unmeasured confounders can still bias the results.

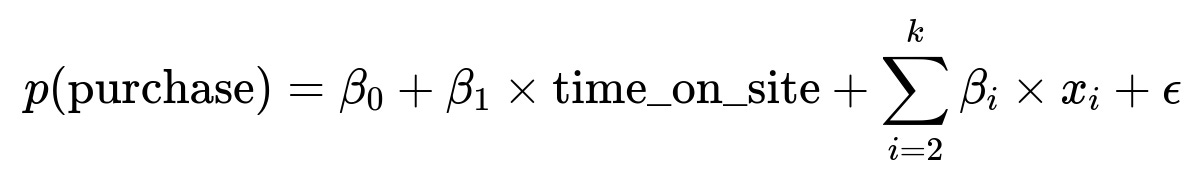
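Here is a minimal Python sketch of propensity score matching on simulated data. It makes several assumptions. Time on site has been binarized into long vs. short sessions. The only confounders are two hypothetical, fully observed flags: high purchase intent and returning visitor. By construction, a long session has no causal effect on purchasing. Because the covariates are discrete, the propensity score is estimated as the share of long sessions in each covariate cell. With continuous covariates one would typically fit a model such as logistic regression instead. All function names and numbers are illustrative.

```python
import random
from bisect import bisect_left
from collections import defaultdict


def simulate(n=40000, seed=0):
    """Hypothetical users: intent and returning-visitor status drive BOTH long
    sessions and purchases; a long session itself has NO causal effect."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        intent = rng.random() < 0.3
        returning = rng.random() < 0.5
        long_session = rng.random() < 0.2 + 0.5 * intent + 0.1 * returning
        bought = rng.random() < 0.02 + 0.20 * intent + 0.03 * returning
        rows.append(((intent, returning), long_session, bought))
    return rows


def naive_difference(rows):
    """Purchase rate of long sessions minus purchase rate of short sessions."""
    treated = [y for _, t, y in rows if t]
    control = [y for _, t, y in rows if not t]
    return sum(treated) / len(treated) - sum(control) / len(control)


def propensity_scores(rows):
    # With only discrete covariates, the share of long sessions in each
    # covariate cell is a nonparametric estimate of P(long | covariates).
    counts = defaultdict(lambda: [0, 0])
    for x, t, _ in rows:
        counts[x][0] += t
        counts[x][1] += 1
    return [counts[x][0] / counts[x][1] for x, _, _ in rows]


def matched_difference(rows, scores, seed=1):
    # 1-nearest-neighbour matching on the propensity score, with replacement;
    # among equally close controls, one is picked at random.
    rng = random.Random(seed)
    controls_by_score = defaultdict(list)
    for (_, t, y), s in zip(rows, scores):
        if not t:
            controls_by_score[s].append(y)
    keys = sorted(controls_by_score)
    diffs = []
    for (_, t, y), s in zip(rows, scores):
        if t:
            i = bisect_left(keys, s)
            nearest = min(keys[max(i - 1, 0):i + 1], key=lambda k: abs(k - s))
            diffs.append(y - rng.choice(controls_by_score[nearest]))
    return sum(diffs) / len(diffs)


rows = simulate()
print(naive_difference(rows))                             # clearly positive
print(matched_difference(rows, propensity_scores(rows)))  # close to zero
```

The naive long-vs-short gap is roughly 0.09 in expectation here, and the simulated confounders produce all of it. Matching removes the gap only because those confounders were observed. An unobserved intent variable would leave the matched estimate biased.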

Multivariable Regression: One might use a regression approach that controls for many features affecting purchase. A simplified expression could be:

$$\text{Purchase} = \beta_0 + \beta_1 \cdot \text{TimeOnSite} + \sum_{i=2}^{k} \beta_i x_i + \epsilon$$

Here, $x_i$ are other variables, such as demographics or previous purchases, that might confound the relationship, and $\beta_1$ is the coefficient of interest. Even then, regression alone does not guarantee causality. It only improves our confidence by reducing omitted-variable bias, and only to the extent that we have measured the confounders correctly.
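A small simulation can show how controlling for a measured confounder changes the estimated coefficient on time on site. This sketch assumes a single hypothetical confounder, a continuous intent score. For simplicity it uses a continuous spend outcome in place of a purchase flag, and it generates data with a true effect of 0.5 per minute. The adjusted estimate relies on the Frisch-Waugh-Lovell theorem. That theorem says you can partial the confounder out of both time on site and spend, then regress one set of residuals on the other. The resulting slope equals the coefficient from the full multivariable regression.

```python
import random


def slope(x, y):
    """OLS slope of y on x (with intercept)."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    return sxy / sxx


def residualize(v, x):
    """Residuals of v after an OLS regression of v on x (with intercept)."""
    b = slope(x, v)
    mx, mv = sum(x) / len(x), sum(v) / len(v)
    return [vi - mv - b * (xi - mx) for xi, vi in zip(x, v)]


# Hypothetical simulated data: intent drives both minutes on site and spend.
rng = random.Random(0)
n = 20000
intent = [rng.gauss(0, 1) for _ in range(n)]             # measured confounder
minutes = [5 + 2 * u + rng.gauss(0, 1) for u in intent]  # time on site
spend = [10 + 0.5 * t + 3 * u + rng.gauss(0, 1)          # true effect: 0.5 per minute
         for t, u in zip(minutes, intent)]

naive_beta = slope(minutes, spend)  # omits intent
# Frisch-Waugh-Lovell: partial intent out of both variables, then regress residuals.
adjusted_beta = slope(residualize(minutes, intent), residualize(spend, intent))
print(round(naive_beta, 2), round(adjusted_beta, 2))
```

The naive slope absorbs the confounder's influence and comes out near 1.7 in expectation. The adjusted slope recovers roughly 0.5. If intent were unmeasured, this adjustment would not be available.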

Experimental Approach for Validating Causality

In practice, experiments are usually the most reliable way to infer causality:

Randomized A/B Testing: One can split users randomly into two groups. In the experimental group, the website design might be subtly modified to encourage longer browsing sessions (for instance, by adding interactive product tours or personalized suggestions). The control group experiences the usual interface. If the experimental group ends up with significantly higher purchase rates, we gain direct evidence that the intervention causes the lift in purchases. We also gain indirect evidence that the extra time on site it produces is part of the mechanism. Because assignment is random, confounders, both measured and unmeasured, are balanced across the two groups in expectation.

Ethical and Practical Considerations: In attempting to force users to stay on a site, user experience might degrade if the intervention is too intrusive. One must balance user satisfaction with the desire to see if increased site time drives higher revenue. Also, the tested mechanism for increasing time should be realistic (perhaps more personalized recommendations or better product content) rather than artificially locking navigation.

Potential Pitfalls in Experimentation: If the experiment is not carefully designed, biases can remain. For instance, power users might be more likely to notice new website features. If the new feature inadvertently reaches mainly certain kinds of users, the results may be skewed. Additionally, large sample sizes are needed to detect differences in purchase behavior, especially if the baseline rate is low. The experiment should run long enough to capture typical user behavior patterns and any delayed effects.
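To make the sample-size point concrete, the sketch below uses the standard normal-approximation formula for comparing two proportions (two-sided test, no continuity correction). The baseline rate, relative lift, significance level, and power are hypothetical inputs.

```python
import math
from statistics import NormalDist


def sample_size_per_arm(p_control, relative_lift, alpha=0.05, power=0.8):
    """Users needed per arm to detect p_control -> p_control * (1 + relative_lift)."""
    p_treat = p_control * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided critical value
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (p_control + p_treat) / 2
    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(p_control * (1 - p_control) + p_treat * (1 - p_treat))
    ) ** 2
    return math.ceil(numerator / (p_treat - p_control) ** 2)


print(sample_size_per_arm(0.02, 0.05))  # 2% baseline, 5% relative lift
print(sample_size_per_arm(0.01, 0.05))  # lower baseline needs more users
```

With a 2% baseline and a 5% relative lift, this works out to roughly 315,000 users per arm. Halving the baseline to 1% roughly doubles that requirement.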

Summary of the Overall Approach

A simple correlation does not establish that increasing time on site causes an increase in purchases. To investigate, one should:

• Identify relevant confounders such as user engagement, demographics, and site design features.
• Use observational techniques (like propensity score matching or regression with comprehensive controls) to look for robust relationships, while recognizing residual confounding might remain.
• Conduct an A/B test or a well-defined experiment that randomly manipulates the amount of time users spend on the site (or manipulates features known to prolong session duration) and measure differences in purchase rates.
• Evaluate the experiment carefully to ensure randomization is done properly, sample sizes are sufficient, and any changes in user experience are understood.

How might you deal with users who are forced to stay longer artificially?

One approach is to create an experience or feature that organically encourages a longer session, rather than forcing it. For example, you might introduce product recommendation widgets or more informative content. By deploying this feature only in a randomized set of sessions, you can gauge whether the additional content (which should logically increase session length) also leads to higher conversion. If the only systematic difference between the control and test groups is the presence of that new feature, then any difference in purchases can be attributed, with some level of confidence, to that feature and thus potentially to longer session times (though the precise mechanism might be richer product information, rather than just “longer time” per se).

What if the experiment shows a short-term increase in purchases but no long-term effect?

Sometimes, short-term novelty effects—such as a new design—attract user attention and thus inflate metrics. In the long term, users may revert to their typical browsing patterns. To investigate this, run the experiment for an extended period. Track user behavior over time to distinguish a sustained causal effect from a temporary bump. Also, analyze user-level effects (e.g., repeat purchasers) to see if longer on-site sessions lead to lasting changes in buying habits.

How would you ensure that you are capturing the correct confounding factors in an observational setting?

First, brainstorm all the plausible variables that might relate to both session time and purchase behavior, such as user income bracket, purchase history, brand familiarity, device type, time of day, site or product category, referral source, and so on. Then, include those variables in a model or matching strategy. However, no matter how exhaustive the list, there is always a risk of unobserved confounders—factors you have not measured or cannot measure. That limitation is why an experiment is preferable if feasible. If not, advanced causal-inference approaches (e.g., instrumental variables or difference-in-differences) can be employed when relevant instruments or natural experiments exist.

How can you apply instrumental variables to this type of problem?

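An instrumental variable (IV) is a variable that shifts time on site but affects the probability of purchase only through time on site. A valid instrument must meet three conditions:

• It must be relevant: it actually changes session time.
• It must be as good as randomly assigned: it is independent of unmeasured confounders such as purchase intent.
• It must satisfy the exclusion restriction: it affects purchases only through session time, not directly.

Suppose, for instance, that the website experiences random traffic spikes due to external events (e.g., an unpredictable mention on social media). Such a spike might prolong sessions in a way that is plausibly unrelated to individual purchase intent, and one could use the event as an instrument to estimate the causal effect of session duration on purchases. In practice, finding a valid instrument is hard. A social-media spike also changes who arrives at the site, which can violate the independence condition. A cleaner instrument is often a randomized encouragement, such as randomly assigning a feature that lengthens sessions. Even then, the exclusion restriction fails if the feature also affects purchases directly, for example through better product information.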
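A minimal simulation of IV estimation follows. The instrument is a randomized encouragement: a coin-flip feature assignment that nudges session time. With a binary instrument, the IV estimate reduces to the Wald ratio, which is the instrument's effect on the outcome divided by its effect on session time. Every part of the setup is hypothetical. Intent plays the role of an unmeasured confounder, and spend stands in for purchases. The exclusion restriction holds by construction, which is the assumption hardest to defend in practice.

```python
import random


def mean(v):
    return sum(v) / len(v)


def ols_slope(x, y):
    """Naive OLS slope of y on x (with intercept)."""
    mx, my = mean(x), mean(y)
    return sum((a - mx) * (b - my) for a, b in zip(x, y)) / sum((a - mx) ** 2 for a in x)


def wald_iv(z, t, y):
    """IV estimate with binary instrument z:
    (E[y|z=1] - E[y|z=0]) / (E[t|z=1] - E[t|z=0])."""
    y1 = mean([yi for zi, yi in zip(z, y) if zi])
    y0 = mean([yi for zi, yi in zip(z, y) if not zi])
    t1 = mean([ti for zi, ti in zip(z, t) if zi])
    t0 = mean([ti for zi, ti in zip(z, t) if not zi])
    return (y1 - y0) / (t1 - t0)


rng = random.Random(0)
z, t, y = [], [], []
for _ in range(50000):
    zi = rng.random() < 0.5                           # randomized encouragement (instrument)
    intent = rng.gauss(0, 1)                          # unmeasured confounder
    ti = 5 + 2 * zi + 2 * intent + rng.gauss(0, 1)    # minutes on site
    yi = 1 + 0.3 * ti + 3 * intent + rng.gauss(0, 1)  # spend; true effect 0.3 per minute
    z.append(zi)
    t.append(ti)
    y.append(yi)

print(round(ols_slope(t, y), 2), round(wald_iv(z, t, y), 2))
```

The unmeasured intent pulls the naive regression slope well above the true 0.3, to about 1.3 in expectation. The Wald ratio lands near 0.3. If the feature also changed spending directly, the ratio would absorb that direct effect as well.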

Are there scenarios where increasing time on site might reduce purchases?

Yes. An overly complicated browsing experience or forced engagement might frustrate users. A design that simply prolongs the path to purchase without adding value can lead to cart abandonment. This highlights the importance of testing the actual mechanism that influences session time. If the changes that increase browsing time make the experience cumbersome, you could see negative outcomes.

How do you distinguish the effect of user intent from the effect of UI changes that prolong session time?

In many causal inference settings, user intent is a critical confounder: a highly motivated user may linger longer simply because they are already inclined to buy. We want to see if, given the same level of intent, an intervention that causes a longer session actually yields more purchases. The typical strategy involves random assignment to ensure that both highly motivated and less motivated users are evenly distributed. In a well-designed A/B test, each group should have roughly equal representation of users with varying intent levels. By then comparing purchase rates, one hopes to isolate the incremental benefit of the new design (or extra site time). Observationally, you might measure proxy variables of user intent (e.g., prior site visits or cart additions) and control for them.

How do you address noisy data where time on site might be inaccurately recorded?

Time-on-site measurements can suffer from inactivity timeouts, multiple tabs, background sessions, or abrupt disconnections. Strategies to mitigate noise:

Use event-based tracking: Rather than relying purely on session start and end, log user interactions (clicks, scroll depth, time of last activity). This gives more precise estimates of engaged time.

Ignore extremely long idle periods: Set a reasonable idle timeout so that any stretch without user activity longer than the threshold stops counting toward session time. If a user leaves a tab open for an hour without interaction, it should not meaningfully inflate the session time.

Uniform data processing: Ensure both the control and experimental groups have session times computed identically, so that any measurement inaccuracies affect them equally and do not systematically bias the results.
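Here is a minimal sketch of the idle-timeout idea. It assumes interaction events arrive as timestamps in seconds, and that events from all of a user's open tabs are merged into one timeline so parallel tabs are not double-counted. The 300-second threshold is a hypothetical choice. Gaps longer than the threshold are treated as time away rather than capped. Both are conventions to fix up front and apply identically to every experiment arm. Time after the last recorded event is not counted.

```python
def engaged_seconds(event_times, idle_timeout=300):
    """Engaged time from interaction timestamps (seconds), merged across tabs.

    A gap between consecutive events counts only if it is at most
    idle_timeout seconds; longer gaps are treated as the user being away.
    """
    times = sorted(event_times)
    return sum(b - a for a, b in zip(times, times[1:]) if b - a <= idle_timeout)


# Two tabs' events merged into one timeline; the 3600 s gap is idle time.
tab_a = [0, 40, 3640]
tab_b = [10, 3650]
print(engaged_seconds(tab_a + tab_b))  # 50
```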

By carefully cleaning and validating time-on-site data and by using randomization, you reduce the chances that inaccurate time metrics drive incorrect conclusions about causality.

How would you measure success in an A/B test aimed at exploring causality in this scenario?

Common metrics to track:

Conversion Rate or Average Revenue per User: If the primary hypothesis is that longer sessions cause more purchases, then a direct increase in conversion rate or revenue is the most critical outcome measure.

Engagement Metrics: Session length itself is a secondary metric that doubles as a manipulation check. If the treatment did not actually lengthen sessions, a purchase lift cannot be attributed to longer sessions. Track not only total time on site but also engaged time, number of interactions, or pages viewed.

User Experience Metrics: Monitor bounce rates, exit rates, and user feedback. An intervention that artificially inflates session length might degrade user satisfaction if it’s not done thoughtfully.

Practical Implementation: Choose a representative user population, randomly assign users to the control or experimental variant, and track metrics long enough to obtain robust statistics. Then run a statistical significance test to confirm that any observed difference in purchase rates or revenue is unlikely to be due to chance alone.
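For conversion rates, one common choice of significance test is a two-sided two-proportion z-test with a pooled variance estimate. Below is a minimal standard-library sketch. The counts are hypothetical.

```python
import math
from statistics import NormalDist


def two_proportion_ztest(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for a difference in conversion rates (pooled variance)."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical counts: control converts 2,000 of 100,000 users, treatment 2,150 of 100,000.
z, p = two_proportion_ztest(2000, 100_000, 2150, 100_000)
print(f"z = {z:.2f}, p = {p:.3f}")
```

For these counts (2.00% vs. 2.15%), z is about 2.35 and the p-value about 0.019. For revenue per user, which is continuous and usually heavy-tailed, a t-test or a bootstrap is more appropriate.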

What if your experimental results differ from observational findings?

This discrepancy often indicates hidden or inadequately measured confounders in the observational data. Observational analysis might have suggested a strong correlation, but the actual A/B test results show minimal or no causal effect. In such cases, the best practice is typically to trust the randomized experiment, since it controls for confounders in a way observational data cannot. The difference highlights the importance of validating correlations experimentally whenever possible.

How do real-world constraints impact your ability to run experiments?

There are times when running an experiment is expensive, time-consuming, or risky. For example, extensively changing the website might disrupt the user experience or brand image. In such cases, smaller pilot tests or feature-based rollouts might mitigate risk. If even that is not possible, advanced observational methods (like quasi-experimental designs) or partial testing (like testing on smaller user segments) can be used, though these have more potential biases than fully randomized experiments.

How would you finally conclude if time on site truly causes higher purchases?

Gather the evidence from multiple sources:

• Observe the correlation and replicate it with careful observational methods controlling for confounders.
• Conduct randomized experiments if possible (e.g., via feature changes that encourage longer sessions).
• Confirm that the difference in purchases between experimental and control groups is statistically significant and that the effect persists over time without harming user experience.

A consistent pattern of evidence from rigorous analysis supports a strong causal conclusion. On the other hand, if rigorous tests show no real causal effect, then the observed correlation was likely due to user intent, demographic differences, or other confounding factors.

Below are additional follow-up questions:

How would you address differences in user acquisition channels that might confound the relationship between time on site and purchases?

In many real-world scenarios, the way a user arrives at your website can significantly shape their intent and behavior. For example, users coming from a targeted search ad might already be further along in the purchase funnel compared to users who click a casual social media link. If those arriving via high-intent channels naturally stay longer (researching final details, exploring bundles) and purchase more frequently, it could create a spurious correlation between session duration and conversions.

To address this, you can:
• Segment the data by acquisition channel, analyzing how time on site relates to purchases in each separate channel. This often reveals whether the correlation is channel-dependent.
• Incorporate channel data into your regression or propensity score matching, ensuring that users with similar acquisition sources are matched or controlled for.
• In experiments, randomize your treatment (e.g., a feature that extends session length) across different acquisition channels so that each channel sees both control and treatment. If the channel mix is the same in both groups, it’s less likely to bias causal conclusions.
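The following simulation shows why segmenting by channel matters. In this sketch, with hypothetical channels and rates, users from a search ad both stay longer and buy more, while session length has no effect on purchasing within either channel. Pooling the channels makes long sessions look strongly associated with purchases. Comparing long and short sessions within each channel makes the gap vanish.

```python
import random


def simulate(n=50000, seed=0):
    """Hypothetical traffic: channel drives both session length and purchase."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        channel = "search_ad" if rng.random() < 0.4 else "social"
        minutes = rng.gauss(8, 3) if channel == "search_ad" else rng.gauss(3, 1)
        p_buy = 0.30 if channel == "search_ad" else 0.02  # depends on channel only
        rows.append((channel, minutes, rng.random() < p_buy))
    return rows


def long_vs_short_gap(rows, threshold=5.0):
    """Purchase rate of sessions longer than `threshold` minutes minus the rest."""
    long_ = [b for _, m, b in rows if m > threshold]
    short = [b for _, m, b in rows if m <= threshold]
    return sum(long_) / len(long_) - sum(short) / len(short)


rows = simulate()
pooled = long_vs_short_gap(rows)
by_channel = {c: long_vs_short_gap([r for r in rows if r[0] == c])
              for c in ("search_ad", "social")}
print(round(pooled, 3), {c: round(g, 3) for c, g in by_channel.items()})
```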

A potential pitfall is that channels can shift unpredictably. For instance, a sudden surge of users from a highly motivated channel—like an influencer’s product endorsement—may skew your time-on-site and conversion metrics. Hence, continuous monitoring of channel composition is critical throughout any experimental or observational study.

How do you handle multi-touch attribution issues when trying to measure causality?

In many online businesses, a user’s journey involves multiple interactions: ads on different platforms, product page visits, abandoned carts, email reminders, etc. When analyzing time on site versus purchases, a single session’s duration might not capture the influence of previous touches or the user’s entire research cycle.

Potential strategies:
• Combine data across touchpoints: Build a multi-touch attribution model that accounts for each interaction. Even if a user has multiple sessions, the aggregated view helps you see the bigger picture.
• Track user-level histories: Instead of session-level features alone, collect user-level data (e.g., whether they clicked an email campaign or were exposed to a retargeting ad). That way, you see if your “time on site” metric is part of a broader funnel of repeated visits.
• Experimental design for funnel steps: If you run an A/B test, ensure randomization is consistent across multiple touches. For instance, the same user should see the experimental variant each time they visit, which avoids the confusion of switching experiences mid-funnel.
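One common way to keep assignment consistent across touches is to hash a stable user identifier. No assignment table is needed, and the same user always lands in the same arm. This sketch assumes such an identifier exists, for example a login ID or a persistent cookie. The experiment name and user IDs are hypothetical.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, treatment_share: float = 0.5) -> str:
    """Deterministically map a user to an arm, so every visit sees the same variant.

    Hashing (experiment, user_id) gives each experiment an independent split.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) / 16**8  # roughly uniform on [0, 1)
    return "treatment" if bucket < treatment_share else "control"


# The same (hypothetical) user gets the same arm on every visit and touchpoint.
print([assign_variant("user-42", "session-length-test") for _ in range(3)])
```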

A subtle pitfall is that different touches might confound the effect of session duration on purchase. If a particular ad is extremely effective at attracting high-intent customers, that alone might drive longer browsing sessions and higher conversion. Controlling for or randomizing exposure to each touchpoint is crucial to isolate the role of session time.

Can time on site be detrimental in certain scenarios, and how do you detect when a longer session might reduce purchases?

While increased time on site often correlates with deeper engagement, there are contexts in which forcing users to spend more time can backfire. Some examples:
• Users on a mission: If visitors want to make a quick purchase (e.g., replenishing a known item), unnecessary friction or forced interactions can frustrate them, potentially lowering conversions.
• Complex or confusing UX: If a user is stuck searching for product information or dealing with slow-loading pages, they’re spending more time involuntarily, which might lead to abandonment.
• Indecision loops: Providing too many choices or too much content might lead some customers to experience decision fatigue and leave.

Detection strategies:
• Monitor user feedback, bounce rates, and session recordings: If a new site feature is introduced to increase engagement but you see higher bounce rates or negative feedback, it might be harming conversions.
• Watch for changes in average order value vs. session time: If session duration is going up but purchase rates or order values are dropping, it could mean you’re adding friction rather than beneficial engagement.
• Segment by user intent: Evaluate the feature for first-time visitors, repeat customers, or existing subscribers. Users with different intents might respond differently to extended session lengths.

A potential pitfall arises if you only look at overall average time on site. Some users might stay longer productively, while others are stuck or frustrated. Always segment or explore deeper engagement metrics (e.g., depth of scroll, search queries made) to confirm that increased time is purposeful.

What challenges occur when the site caters to different use cases or product categories?

If your website has multiple distinct product categories or use cases, time on site can mean very different things across user segments. For instance:
• Users browsing electronics might linger to compare specifications, watch product demos, or read multiple reviews.
• Users shopping for groceries or daily essentials might want a rapid and frictionless checkout.

When analyzing time on site vs. purchases, these differences can confound the overall relationship if you pool all categories together. A few considerations:
• Category-based segmentation: Evaluate correlation and conduct experiments within each product category. If you find that more browsing time strongly correlates with higher purchases only in high-involvement categories (e.g., electronics), you can target interventions more effectively.
• Category-specific user flows: Some product lines might benefit from more in-depth content (videos, comparison tools), while others thrive on speed. Make sure to customize any “time-extension” strategies to the context of each category.
• Mixed-cart scenarios: A user might browse electronics but also add quick household items to the same cart. In that case, you want to ensure you’re capturing the overall effect of time spent across multiple sections, rather than attributing conversion solely to one category.

A tricky edge case arises with browse-heavy categories that users spend a lot of time exploring but rarely buy from (for example, aspirational or high-ticket items). Those can inflate time on site without improving overall purchases, skewing naive analyses.

In what ways could seasonality or external factors skew the correlation?

Seasonality can dramatically change buying behavior, and external economic factors (e.g., recession, holidays, new competitor launches) can alter both session duration and conversion patterns. Examples:
• Holiday seasons: Users may be motivated to compare more products and spend more time on the site due to gift shopping. Purchases often rise with or without extended session times.
• Economic downturns: If spending power decreases, even users who browse extensively might be hesitant to buy.
• Competitor campaigns: If a competitor runs a massive discount promotion, your site’s visitors might be comparing prices across multiple tabs, leading to longer sessions without guaranteed conversions.

To mitigate:
• Incorporate time windows into your analysis: Compare the same seasonal periods across different years, or compare pre- and post-season periods.
• Use separate models or controls for different seasons: A regression approach can include seasonal dummy variables. In an A/B test, ensure random assignment is balanced throughout the season so that each variant experiences similar external influences.
• Continuously monitor macro trends: If an unforeseen event (like a major competitor sale) spikes traffic or changes user behavior, consider pausing or adjusting your experiment or observational study to avoid mixing data from abnormal conditions.

A subtle issue is that random fluctuations can be mistaken for treatment effects if the experiment is not carefully monitored. For instance, if you observe an uptick in purchases the same week you implement a feature to extend session time, it could simply be coinciding with a seasonal shift in consumer behavior.
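A small simulation makes this concrete. It assumes, hypothetically, a 2% baseline conversion rate and a seasonal surge to 3% that starts in the same week a feature launches. The feature itself has zero true effect. A before-after comparison attributes the seasonal lift to the feature. A concurrent randomized control, exposed to the same season, cancels it out.

```python
import random


def conversion_rate(rate, visitors_per_day, days, rng):
    """Observed conversion rate over `days` days of simulated traffic."""
    total = visitors_per_day * days
    conversions = sum(rng.random() < rate for _ in range(total))
    return conversions / total


rng = random.Random(0)
# Hypothetical: the seasonal surge lifts everyone from 2% to 3% in launch week;
# the new feature itself adds nothing.
pre_launch = conversion_rate(0.02, 10000, 7, rng)       # last week, no feature
launch_control = conversion_rate(0.03, 5000, 7, rng)    # launch week, control arm
launch_treatment = conversion_rate(0.03, 5000, 7, rng)  # launch week, treatment arm

before_after_lift = launch_treatment - pre_launch   # about +0.01, entirely seasonal
concurrent_lift = launch_treatment - launch_control  # about 0: seasonality cancels
print(round(before_after_lift, 4), round(concurrent_lift, 4))
```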

How do you separate the effect of a new feature from general site improvements that also influence user behavior?

Over time, websites frequently make various improvements, like optimizing loading speeds, simplifying navigation, or improving the checkout funnel. These changes might also increase session duration (because users spend more time exploring new features) or speed up purchases (shortening session duration). If you are testing an intervention specifically aimed at prolonging sessions, those parallel modifications can blur the effect.

Ways to disentangle:
• Controlled release: Only release the new “time-extension” feature to the experimental group and ensure other site changes roll out equally to control and experiment.
• Feature flags: Use feature flagging systems to carefully manage who sees which changes, ensuring only the variable of interest differs across groups.
• Historical baseline: If you have a stable, weeks-long baseline before the new feature, you can compare the shift in metrics between the control and experimental groups relative to that baseline (a difference-in-differences comparison).
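Comparing groups against a historical baseline amounts to a difference-in-differences calculation: the change in the experimental group minus the change in the control group over the same period. Here is a minimal sketch with hypothetical weekly conversion rates.

```python
def diff_in_diff(treat_pre, treat_post, control_pre, control_post):
    """Change in the treated group minus change in the control group.

    Valid as a causal estimate only under the parallel-trends assumption:
    without the feature, both groups would have moved by the same amount.
    """
    return (treat_post - treat_pre) - (control_post - control_pre)


# Hypothetical weekly conversion rates before and after the feature launch.
effect = diff_in_diff(treat_pre=0.020, treat_post=0.026, control_pre=0.021, control_post=0.024)
print(round(effect, 4))  # 0.003
```

With randomized groups, any pre-period gap between them should be chance, so subtracting the baseline mainly removes that noise and any shared site-wide shift. In non-randomized rollouts, the estimate is only as credible as the parallel-trends assumption.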

A major pitfall is if you release a performance improvement that reduces page load times at the same time as your experiment. Users might ironically spend more time on the site because it’s now more engaging, or they might spend less time because checkout is smoother. This overlap makes it difficult to parse out the specific causal impact of your time-on-site intervention unless you carefully manage the rollout.

What if you discover that only a small subset of users exhibit the “more time = more purchases” pattern?

Sometimes, the relationship may hold strongly for a particular subgroup but not the broader user population. Perhaps advanced hobbyists or enthusiasts in a certain niche are more likely to read detailed content and ultimately buy. General users might just want quick access to key facts.

Investigatory steps:
• Identify user segments or clusters based on behavior or demographics to see where the correlation is strongest.
• In your causal experiment, stratify randomization to ensure that each segment has both control and treatment. Then measure the effect by segment.
• If the correlation is meaningful only for a niche group, consider targeted strategies. For example, you might serve deeper content or product reviews only to those who have shown interest in advanced details, while keeping the process streamlined for casual buyers.
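Here is a minimal sketch of stratified randomization with per-segment effect estimates. Users are shuffled within each segment and assigned to alternating arms, which keeps each segment split almost exactly in half. The segment names, base rates, and the effect that exists only for enthusiasts are hypothetical simulation inputs.

```python
import random
from collections import defaultdict


def stratified_assign(users, seed=0):
    """users: list of (user_id, segment). Shuffle within each segment, then alternate arms."""
    rng = random.Random(seed)
    by_segment = defaultdict(list)
    for uid, seg in users:
        by_segment[seg].append(uid)
    assignment = {}
    for ids in by_segment.values():
        rng.shuffle(ids)
        for i, uid in enumerate(ids):
            assignment[uid] = "treatment" if i % 2 == 0 else "control"
    return assignment


def effect_by_segment(outcomes, assignment, segment_of):
    """Treatment-minus-control purchase rate within each segment."""
    tally = defaultdict(lambda: {"treatment": [0, 0], "control": [0, 0]})
    for uid, bought in outcomes.items():
        cell = tally[segment_of[uid]][assignment[uid]]
        cell[0] += bought
        cell[1] += 1
    return {seg: arms["treatment"][0] / arms["treatment"][1]
                 - arms["control"][0] / arms["control"][1]
            for seg, arms in tally.items()}


rng = random.Random(1)
users = [(f"u{i}", "enthusiast" if rng.random() < 0.1 else "casual") for i in range(40000)]
segment_of = dict(users)
assignment = stratified_assign(users)
base = {"enthusiast": 0.10, "casual": 0.03}
lift = {"enthusiast": 0.06, "casual": 0.0}  # hypothetical true effects
outcomes = {uid: rng.random() < base[seg] + (lift[seg] if assignment[uid] == "treatment" else 0.0)
            for uid, seg in users}
print({seg: round(e, 3) for seg, e in effect_by_segment(outcomes, assignment, segment_of).items()})
```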

A nuanced pitfall is automatically assuming that a strong relationship in a small but engaged segment generalizes to all users. This could lead to sitewide changes that alienate the majority of visitors who do not appreciate the extra content or steps.

How do you assess whether confounding arises from user context, such as time of day or location?

A user’s environment (time of day, day of week, geographic location, local events) can influence both how long they stay on the site and whether they buy. For instance, late-evening shoppers might be more deliberate and spend more time browsing, or they might be rushing to place an order before next-day shipping cutoff.

Possible approaches:
• Incorporate time-of-day or location indicators into your regression or matching models.
• Segment the experiment by region or time slot so that randomization occurs within each slice, ensuring that both control and experimental groups have similar distributions of these contextual factors.
• Analyze heatmaps of user activity across different times or places to see if there are consistent patterns in session duration and purchase rates that might explain the observed correlation.

Edge cases:
• Some regions might have slower internet speeds, artificially inflating time on site without increasing purchase likelihood.
• Certain time slots might correspond to impulse buying (e.g., late-night “shopping sprees”) where time on site is short but purchase rates are high.

Recognizing these context-driven behaviors helps refine the causal analysis and avoids overgeneralizing from data that may be heavily skewed by time or location effects.

How would you handle a scenario where purchase decisions span multiple sessions over several days?

In many product categories, users research over multiple sessions. A high-value purchase such as a car, a home appliance, or expensive electronics often involves reading product specs, comparing prices, and returning to the site multiple times before buying. A single session’s duration might not capture the total effort leading to a conversion.

Strategies to address multi-session journeys:
• Combine sessions at the user level: Sum or average time across all sessions in a defined period (e.g., 30 days). Look at total engaged time vs. ultimate purchase decision.
• Funnel analysis with time gating: Track how long it takes from the first session to purchase. If users who eventually buy have collectively more total time on site over multiple visits, that might be the real correlation rather than any single session length.
• Experimental approach across sessions: If your experiment is about site design changes aimed at increasing total browsing time, ensure returning users always see the same variant. That way, you can accumulate their session time consistently in either the control or experimental condition.
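Here is a minimal sketch of rolling session-level rows up to one row per user. It assumes each session record is a (user_id, start datetime, engaged minutes, purchased flag) tuple, uses a hypothetical 30-day window anchored at the user's first session, and sums engaged time only up to the first purchasing session. The field names and sample data are illustrative.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def summarize_journeys(sessions, window=timedelta(days=30)):
    """sessions: iterable of (user_id, start: datetime, engaged_minutes, purchased: bool).

    Uses only sessions within `window` of the user's first session and sums
    engaged time up to and including the first purchasing session.
    """
    by_user = defaultdict(list)
    for user_id, start, minutes, purchased in sessions:
        by_user[user_id].append((start, minutes, purchased))
    summary = {}
    for user_id, visits in by_user.items():
        visits.sort()  # chronological order
        first = visits[0][0]
        in_window = [v for v in visits if v[0] - first <= window]
        purchase_starts = [start for start, _, bought in in_window if bought]
        cutoff = purchase_starts[0] if purchase_starts else None
        total = sum(m for start, m, _ in in_window if cutoff is None or start <= cutoff)
        summary[user_id] = {
            "sessions": len(in_window),
            "total_minutes": total,
            "converted": cutoff is not None,
            "days_to_purchase": (cutoff - first).days if cutoff is not None else None,
        }
    return summary


day0 = datetime(2024, 1, 1)
sessions = [
    ("a", day0, 12, False),
    ("a", day0 + timedelta(days=3), 20, False),
    ("a", day0 + timedelta(days=5), 4, True),     # buys on the third visit
    ("b", day0, 2, False),
    ("b", day0 + timedelta(days=40), 30, False),  # outside the 30-day window
]
print(summarize_journeys(sessions))
```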

A key pitfall is attributing a later purchase solely to the final session’s duration, when in reality the user formed their purchase intent during prior visits. Failing to account for these multi-visit paths can lead to misleading conclusions about the causal effect of session length in the final step.

How do you handle users who visit the site repeatedly without purchasing?

Some users might be “researchers” who frequently return to the site to compare prices or read reviews but never actually buy. Others might be driven to the site by promotions or curiosity but have no real intention of purchasing. These users can inflate your session duration metrics without contributing to revenue.

Possible approaches:
• Thresholding based on purchase likelihood: You might exclude or separately analyze users who have never purchased or who have visited many times without buying. This can isolate the effect of session time on those who have some track record or indication of purchase intent.
• Labeling “chronic browsers”: If you have a user who visited 20 times over three months with zero conversions, treat them as a different segment from typical one-time or occasional visitors.
• Using predictions of user intent: Build a predictive model (e.g., with logistic regression or a gradient boosting approach) that estimates how likely a user is to purchase based on early session activity. Then, stratify or match based on that predicted intent to compare “similar-likelihood” users who differ in session duration.

An edge case occurs when these “repeat non-buyers” eventually convert after a very long cycle. By discarding them prematurely, you might miss late conversions. Balancing how to segment these users is crucial for robust analysis.

Could a causal relationship hold for one type of site layout but not another?

Website layout and user flow can drastically change how session duration and conversions interact. If your site is structured in a way that places relevant purchase information up front, users might quickly convert. On a different site with a more exploratory layout, users spend time searching for that same information.

In investigating this:
• Test layout variations: Run an experiment comparing two different site layouts. In one variant, critical info is immediately visible; in the other, users need to navigate more deeply. If the layout that naturally leads to longer sessions also increases conversions, it suggests a potential causal effect, but you also want to ensure that you’re not just relocating where the purchase trigger resides on the page.
• Track user path data: Identify the steps users take before checking out. If a certain path leads to more time but also more consistent purchases, see if it’s the structure of the path or the added time that matters.
• Evaluate user satisfaction: A site layout might artificially extend session time (requiring more clicks to get key details) yet annoy customers. Another layout might boost time because it offers deeper content that genuinely informs the purchase decision.

The main pitfall here is conflating cause and effect: a layout requiring more clicks might correlate with longer sessions and slightly higher conversion, but the real driver could be that only extremely motivated customers are willing to go through extra steps. This is why randomizing the layout is crucial for causal claims.

How do you handle model drift or changes in user behavior over time when investigating a potential causal relationship?

User behavior can shift for reasons unrelated to your experiment: new trends, changes in competitor offerings, shifts in preferences. If you build a model or run a test and then rely on it long-term, you risk “model drift,” where the underlying relationship changes.

Methods to manage this:
• Continuous experimentation: Periodically re-run A/B tests or incorporate holdout groups so that you detect if the effect of extended session time changes.
• Rolling data updates: Continuously update your observational models with new data, re-checking if time on site remains a strong predictor or driver of purchases.
• Monitoring external variables: Keep track of relevant industry changes, consumer sentiment, or economic indicators that might shift user motivations independently of your site experience.

A subtle issue is that an approach that worked six months ago may no longer hold if competitor sites have introduced new features or user shopping habits have evolved. Keep testing and validating the assumption that longer site sessions cause more purchases, especially in rapidly changing industries.

How do you weigh the trade-off between data granularity and user privacy, especially if you want to track each moment of site engagement?

Capturing highly granular data (e.g., tracking individual mouse movements, all clicks, or eye-gaze for every user) can yield precise estimates of how engaged a user truly is, but it can conflict with user privacy expectations or legal regulations such as GDPR or CCPA.

Balancing strategies: • Use aggregated or anonymized metrics: Rather than storing raw event-level data for all users, aggregate at the session or user level without retaining personally identifiable information. • Implement strict consent and data handling policies: If advanced tracking is essential for your business, ensure users explicitly opt in and that you communicate the purpose and scope of data collection clearly. • Differential privacy: For large-scale analyses, add calibrated random noise to aggregate statistics (or use other differentially private mechanisms) so that released results reveal very little about any individual user's activity.
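As one illustration of the differential-privacy option, the Laplace mechanism can release an average session length. This is a minimal sketch under stated assumptions: each user contributes exactly one value (already aggregated to the user level), the number of users is public, and neighboring datasets differ by replacing one user's value. Clipping to `[0, cap]` then bounds the mean's sensitivity at `cap / n`. The function name and the 60-minute cap are hypothetical choices.

```python
import numpy as np

def dp_mean_session_minutes(minutes, epsilon, cap=60.0, rng=None):
    """Epsilon-DP estimate of mean session length via the Laplace mechanism.

    Assumptions: one value per user, n is public, and neighboring datasets
    differ by replacing one user's value. Clipping each value to [0, cap]
    means one user can shift the mean by at most cap / n (the sensitivity).
    """
    if rng is None:
        rng = np.random.default_rng()
    x = np.clip(np.asarray(minutes, dtype=float), 0.0, cap)
    n = x.size
    sensitivity = cap / n
    # Laplace noise with scale = sensitivity / epsilon gives epsilon-DP.
    return x.mean() + rng.laplace(loc=0.0, scale=sensitivity / epsilon)

# Hypothetical per-user average session lengths (minutes).
user_minutes = np.random.default_rng(7).exponential(scale=8.0, size=50_000)
print(dp_mean_session_minutes(user_minutes, epsilon=0.5))
```

The trade-off from the discussion above shows up directly in the parameters: a smaller `epsilon` (stronger privacy) or a larger `cap` (less clipping bias) both add more noise.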

A pitfall is losing valuable causal insight if you adopt overly coarse metrics. Conversely, collecting too much personal data without a clear plan for usage and protection can lead to regulatory and reputational risks. Ensuring compliance and building trust with users is vital, especially when investigating metrics like time on site.

How might you statistically validate that your experiment or observational study is robust against multiple comparisons?

When exploring the potential causal effect of session duration, you might run many tests (different layouts, different user segments, multiple features). The more tests you run, the higher the chance of finding a “significant” result by random chance.
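To quantify "the higher the chance", assume $m$ independent tests, each run at significance level $\alpha$, with every null hypothesis true (no real effects). The probability of at least one false positive is then:

```latex
P(\text{at least one false positive}) = 1 - (1 - \alpha)^{m},
\qquad
\alpha = 0.05,\; m = 20:\quad 1 - 0.95^{20} \approx 1 - 0.358 = 0.642
```

So with twenty segment-level tests, a spurious "significant" finding is more likely than not, even when session length has no effect in any segment.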

Mitigation steps: • Correct for multiple comparisons: Use approaches such as the Bonferroni correction or the Holm–Bonferroni method (which control the family-wise error rate), or false discovery rate (FDR) control such as the Benjamini–Hochberg procedure, to adjust your p-values or significance thresholds. • Pre-register hypotheses: Define the primary and secondary outcomes in advance so you avoid data dredging or p-hacking. • Use hierarchical or Bayesian methods: Instead of testing each segment independently, consider hierarchical models that partially pool information across segments, shrinking noisy segment-level estimates toward the overall effect and thereby reducing spurious extreme results.
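The two correction procedures named above are short enough to write out. This standard-library sketch implements Holm's step-down method (family-wise error control) and the Benjamini–Hochberg step-up method (FDR control); the p-values in the example are made up for illustration.

```python
def holm_bonferroni(pvals, alpha=0.05):
    """Holm step-down procedure (controls the family-wise error rate).
    Returns a list of booleans: True where the hypothesis is rejected."""
    m = len(pvals)
    order = sorted(range(m), key=lambda i: pvals[i])
    reject = [False] * m
    for rank, i in enumerate(order):
        # Compare the k-th smallest p-value (0-based rank) with alpha / (m - rank).
        if pvals[i] <= alpha / (m - rank):
            reject[i] = True
        else:
            break  # stop at the first failure; all larger p-values are retained
    return reject

def benjamini_hochberg(pvals, alpha=0.05):
    """Benjamini-Hochberg step-up procedure (controls the false discovery rate)."""
    m = len(pvals)
    order = sorted(range(m), key=lambda i: pvals[i])
    # Find the largest 1-based rank k with p_(k) <= k * alpha / m.
    k_max = 0
    for rank, i in enumerate(order, start=1):
        if pvals[i] <= rank * alpha / m:
            k_max = rank
    reject = [False] * m
    for i in order[:k_max]:  # reject the k_max smallest p-values
        reject[i] = True
    return reject

# Hypothetical p-values from four segment-level tests.
pvals = [0.01, 0.04, 0.03, 0.005]
print(holm_bonferroni(pvals))
print(benjamini_hochberg(pvals))
```

Here Holm rejects only the two smallest p-values, while Benjamini–Hochberg rejects all four, which illustrates how FDR control trades a higher tolerance for false positives against more power. In production code, `statsmodels.stats.multitest.multipletests` (with `method="holm"` or `method="fdr_bh"`) provides tested implementations.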

A subtle pitfall is that if you do not account for these multiple comparisons, you might erroneously conclude there’s a strong causal relationship for some segment or test variant, when in reality it’s just a statistical fluke.

If a causal link is established, how do you ensure scaling up the intervention does not introduce new confounding factors?

When scaling an intervention sitewide (e.g., a new feature to encourage deeper engagement), the user population might differ from the smaller group in your test. You might also need to add servers, change the site architecture, or launch marketing campaigns to showcase the feature, and these changes can alter user behavior in ways the initial experiment did not capture.

Key considerations: • Phased rollouts: Gradually expand the treatment group from a small subset to a larger fraction of traffic, observing if the purchase lift remains consistent. • Monitor performance metrics: As usage scales, site speed or reliability might degrade if the new feature is computationally heavy. Slower performance could counteract any gains from extended sessions. • Reassess confounders: If a large-scale marketing push accompanies the full release, that marketing effort might be the real driver of higher conversions, confounding the effect of session length.
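A phased rollout can be driven by a simple gate evaluated at each stage: advance when the lift is confidently positive and a performance guardrail holds, roll back when either fails, and otherwise hold to collect more data. Everything here is a hypothetical sketch: the `RAMP` fractions, the 800 ms p95 latency budget, and the `StageMetrics` fields are illustrative assumptions, and `current` must be one of the `RAMP` values.

```python
import math
from dataclasses import dataclass

RAMP = [0.01, 0.05, 0.20, 0.50, 1.00]  # hypothetical traffic fractions per stage

@dataclass
class StageMetrics:
    """Hypothetical metrics collected during one rollout stage."""
    conv_treat: int
    n_treat: int
    conv_ctrl: int
    n_ctrl: int
    p95_latency_ms: float

def next_fraction(current, m, latency_budget_ms=800.0, z=1.96):
    """Return the next ramp fraction (advance), `current` (hold), or 0.0 (roll back)."""
    p_t = m.conv_treat / m.n_treat
    p_c = m.conv_ctrl / m.n_ctrl
    se = math.sqrt(p_t * (1 - p_t) / m.n_treat + p_c * (1 - p_c) / m.n_ctrl)
    lift = p_t - p_c
    # Roll back if the latency guardrail is breached or the lift is confidently negative.
    if m.p95_latency_ms > latency_budget_ms or lift + z * se < 0:
        return 0.0
    # Advance only if the lift's lower confidence bound is above zero.
    if lift - z * se > 0:
        idx = RAMP.index(current)
        return RAMP[min(idx + 1, len(RAMP) - 1)]
    return current  # inconclusive: hold at this fraction and keep collecting data

stage = StageMetrics(5600, 100_000, 5000, 100_000, p95_latency_ms=500.0)
print(next_fraction(0.05, stage))
```

Keeping the latency check in the same gate reflects the point above: a lift measured at 5% of traffic says little if the feature slows the site down at 50%.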

A potential edge case is that enthusiastic early adopters respond differently than the general population. If your small-scale test was run primarily on these enthusiasts, the broader user base might not exhibit the same behavior. Continuously measure across the entire deployment to confirm the effect remains.
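One way to check whether the effect measured on early adopters carries over is to compare the pilot lift with the lift in the broad rollout directly. The sketch below is a z-test on the difference of two independent lift estimates; it assumes the pilot and broad samples do not overlap, and the function names and counts are hypothetical.

```python
import math

def lift_se(conv_t, n_t, conv_c, n_c):
    """Treatment-minus-control conversion lift and its unpooled standard error."""
    p_t, p_c = conv_t / n_t, conv_c / n_c
    return p_t - p_c, math.sqrt(p_t * (1 - p_t) / n_t + p_c * (1 - p_c) / n_c)

def compare_lifts(pilot, broad):
    """z-test for whether the broad-population lift differs from the pilot lift.

    Each argument is a tuple (conv_t, n_t, conv_c, n_c). Assumes the two
    samples are independent (no users shared between pilot and broad rollout).
    Returns (difference in lifts, z statistic, two-sided p-value).
    """
    l_pilot, se_pilot = lift_se(*pilot)
    l_broad, se_broad = lift_se(*broad)
    z = (l_broad - l_pilot) / math.sqrt(se_pilot**2 + se_broad**2)
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return l_broad - l_pilot, z, p_value

# Hypothetical: a 1-point lift among enthusiasts shrinks to 0.05 points sitewide.
print(compare_lifts((600, 10_000, 500, 10_000), (5050, 100_000, 5000, 100_000)))
```

A significant negative difference is a signal that the pilot population was not representative, and that projections based on the pilot should be revised downward.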