ML Interview Q Series: We need to add a “green icon” showing an active user in a chat system but cannot do an AB test before launch. How would you evaluate its impact after the feature goes live?


Comprehensive Explanation

One way to approach the problem of measuring the success of a new feature when an AB test is not feasible is to rely on observational data and well-structured post-launch analyses. This requires you to isolate the feature’s influence on key success metrics despite not having a randomized control group. You can analyze the effectiveness of the feature using multiple approaches, focusing on changes in user behavior, platform engagement, and overall usage patterns.

One common strategy involves time-series analysis. If you track relevant metrics before and after the feature’s deployment, you can observe the variation in user activity. This might include measuring chat-related outcomes such as total messages sent, average chat session duration, or the proportion of users initiating chats. By plotting key metrics over time—before and after release—you may detect an observable “jump” in user engagement once the feature appears.

Another possible strategy is to leverage quasi-experimental techniques such as a difference-in-differences approach if you can find a comparable control group, even if that group is not part of an ideal AB test. For instance, if certain sub-populations received this feature later due to staged rollouts or variations in platform versions, you can treat the late adopters as a pseudo-control group. This requires you to confirm that both groups (early receivers versus late receivers) share similar characteristics and, more importantly, followed similar metric trends before the launch, to minimize confounding effects.

If no such staged rollout exists, you could explore advanced causal inference methods, for example matching techniques. In matching approaches, you create pairs of users (or cohorts) who appear statistically similar based on key attributes (like platform usage patterns prior to the feature), then compare their post-launch usage behavior. You could also consider a regression discontinuity design if the feature’s release date or user adoption threshold (like app version upgrades) can be treated as a cutoff point.
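To make the matching idea concrete, here is a minimal sketch of one-to-one nearest-neighbor matching on a single pre-launch covariate. The function name `matched_effect` and all numbers are hypothetical, and it assumes you can identify users with and without the feature; real analyses usually match on several covariates or a propensity score.

```python
import numpy as np

def matched_effect(pre_treated, post_treated, pre_control, post_control):
    """Match each treated user to the control user with the closest
    pre-launch usage (with replacement), then average the post-launch gaps."""
    pre_treated = np.asarray(pre_treated, dtype=float)
    post_treated = np.asarray(post_treated, dtype=float)
    pre_control = np.asarray(pre_control, dtype=float)
    post_control = np.asarray(post_control, dtype=float)

    # Absolute distance between every treated and every control user.
    dist = np.abs(pre_treated[:, None] - pre_control[None, :])
    nearest = dist.argmin(axis=1)  # closest control index per treated user

    return float(np.mean(post_treated - post_control[nearest]))

# Hypothetical weekly message counts per user.
pre_t, post_t = [10, 40, 25], [14, 46, 30]          # users with the green dot
pre_c, post_c = [9, 26, 41, 70], [10, 27, 42, 72]   # users without it

effect = matched_effect(pre_t, post_t, pre_c, post_c)
print("Matched estimate of the effect:", effect)
```

Matching only balances the covariates you match on, so unobserved differences between the groups can still bias the estimate.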

When analyzing results, special attention must be given to potential biases, such as selection bias. Selection bias can arise if the users who receive or notice the green dot first (for example, highly active users who update the app promptly) differ systematically from everyone else, so that a naive comparison credits the feature with engagement those users already had. Confounding factors can also limit your ability to claim direct causation. Overcoming these limitations requires careful statistical modeling and domain knowledge to isolate the effect of the green dot from external changes to the platform or broader user trends.

Potential Core Mathematical Formula

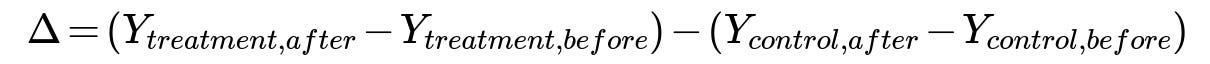

When using a difference-in-differences approach, one key formula for the Average Treatment Effect (ATE) can be shown as:

Where Y_treatment_before is the average outcome metric for the treatment group (the group with the green dot) before the feature release, Y_treatment_after is the average outcome metric for that same group after the feature release, Y_control_before is the average outcome metric for a comparable control group before they get the feature, and Y_control_after is the average outcome metric for that control group after the feature is made available.

This difference-in-differences value (Delta) attempts to isolate the impact of the new feature from other external time-based effects that might also influence user behavior.
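As a worked instance of the difference-in-differences calculation described above, the sketch below computes Delta from four lists of per-user averages. The function name `diff_in_diff` and the numbers are made up for illustration.

```python
def diff_in_diff(treat_before, treat_after, control_before, control_after):
    """Return (change in treatment group) minus (change in control group)."""
    def mean(values):
        return sum(values) / len(values)

    treatment_change = mean(treat_after) - mean(treat_before)
    control_change = mean(control_after) - mean(control_before)
    return treatment_change - control_change

# Hypothetical messages per user per day.
delta = diff_in_diff(
    treat_before=[10, 12, 11],    # mean 11
    treat_after=[15, 16, 14],     # mean 15
    control_before=[9, 10, 11],   # mean 10
    control_after=[11, 12, 10],   # mean 11
)
print("Difference-in-differences estimate:", delta)  # (15 - 11) - (11 - 10) = 3.0
```

The control group's change of 1 stands in for whatever would have happened to the treatment group anyway, which is why it is subtracted.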

Follow-Up Questions

How would you choose which metrics to monitor for effectiveness?

You would start by determining which aspects of user behavior you expect the feature to influence. For a green dot indicating active status, you might hypothesize that users who see that their contacts are online would be more likely to initiate conversations, leading to potential increases in messages per user, chat session frequency, or session duration. You would then monitor metrics such as:

Total number of chat conversations initiated

Response rate to inbound messages

Duration of chat sessions

Number of active users returning daily

These metrics directly capture whether the green icon is improving user engagement or conversation flow on the platform. You could also track secondary metrics, such as how often users quickly check the chat panel or how many messages are left unread. If, for instance, the “active user” indicator makes people open the chat more frequently, you should see a measurable uptick in chat open events.

What if we can only release the feature globally at once, leaving no natural control group?

In that situation, you can analyze the change in metrics using a single time-series approach. You record relevant metrics over a baseline period before the feature’s deployment and continue collecting them after it goes live. Observing a sustained increase in chat-related behavior right after the release, especially one that cannot be explained by known seasonal trends or marketing campaigns, strengthens the case that the green dot is responsible.
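One way to formalize the single time-series comparison is an interrupted time series (segmented regression), which estimates the jump at launch after accounting for a pre-existing linear trend. The sketch below uses synthetic data with a built-in jump of 8; the function name `fit_level_shift` is an assumption, and a real analysis would also need to handle seasonality and autocorrelation.

```python
import numpy as np

def fit_level_shift(y, launch_index):
    """Fit y = b0 + b1*t + b2*post by least squares and return (b0, b1, b2).
    b2 is the estimated jump in level at launch, net of a linear trend."""
    y = np.asarray(y, dtype=float)
    t = np.arange(len(y), dtype=float)
    post = (t >= launch_index).astype(float)  # 1 on and after launch day
    X = np.column_stack([np.ones_like(t), t, post])
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return tuple(coef)

# Synthetic daily metric: upward trend plus a jump of 8 at day 30.
rng = np.random.default_rng(0)
days = np.arange(60)
y = 100 + 0.5 * days + 8 * (days >= 30) + rng.normal(0, 0.5, size=60)

intercept, trend, level_shift = fit_level_shift(y, launch_index=30)
naive_diff = y[30:].mean() - y[:30].mean()
print("Estimated level shift:", round(level_shift, 2))
print("Naive before/after difference:", round(naive_diff, 2))
```

The naive difference is much larger than the true jump because it also absorbs the underlying growth trend, which is the main reason to model the trend explicitly.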

To bolster causal claims, you can consider approaches in the spirit of synthetic controls, in which you construct a “synthetic” baseline from historical platform usage patterns, or from metrics the feature should not affect, to approximate what would have happened in the absence of the feature. Comparing actual post-release metrics with this synthetic baseline can provide insight into the magnitude of the feature’s effect. Additionally, checking broader signals, like daily active user shifts across the entire platform, helps rule out the possibility that external events unrelated to the new feature caused the observed changes.
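A simple version of such a baseline can be sketched as follows: fit the pre-launch relationship between the chat metric and a reference series, then project it into the post-launch period. This assumes the reference series (here, hypothetical platform-wide daily active users) is not itself moved by the green dot, which should be checked before relying on it. The function name and all numbers are illustrative.

```python
import numpy as np

def counterfactual_lift(ref_pre, target_pre, ref_post, target_post):
    """Fit target ~ reference on pre-launch data, predict the post-launch
    counterfactual, and return actual minus predicted for each period."""
    slope, intercept = np.polyfit(ref_pre, target_pre, deg=1)
    predicted = intercept + slope * np.asarray(ref_post, dtype=float)
    return np.asarray(target_post, dtype=float) - predicted

# Hypothetical daily values (reference in thousands of users, target in thousands of messages).
dau_pre = [100, 110, 105, 120, 115]
msgs_pre = [210, 230, 220, 250, 240]
dau_post = [118, 125, 122]
msgs_post = [258, 273, 266]

lift = counterfactual_lift(dau_pre, msgs_pre, dau_post, msgs_post)
mean_lift = float(lift.mean())
print("Daily lift over the baseline:", np.round(lift, 2))
print("Average lift:", round(mean_lift, 2))
```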

How do you address confounding factors when analyzing observational data?

Confounding factors become especially concerning in non-randomized settings. You would need to ensure that any differences you observe are not merely the result of other concurrent platform changes or general user growth. One step is to carefully log all product releases and marketing pushes that coincide with the green dot’s launch. Another step is to segment users based on stable characteristics, such as how frequently they used the platform beforehand, their geographic region, or, on a professional network such as LinkedIn, their job function. Comparing the shifts within relatively homogeneous subgroups can help reduce heterogeneity in the data.

If you can identify variables that capture reasons for user engagement independent of the green dot, you might include them as covariates in a regression analysis. This regression model could hold those variables constant and help isolate the partial effect of the green dot. In more advanced approaches, you might adopt matching techniques, in which you pair users who have the feature with “similar” users who do not yet have it, based on their pre-launch behavior, and then compare chat activity between the two.
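The covariate-adjustment idea can be illustrated with a small simulation. In the synthetic data below, heavier pre-launch users are more likely to have the green dot, so a naive comparison overstates the effect, while a regression that includes pre-launch usage recovers something close to the true value of 3. All variable names and parameters are assumptions chosen for the illustration.

```python
import numpy as np

rng = np.random.default_rng(42)
n = 2000

# Pre-launch usage is the confounder: it drives both exposure and the outcome.
pre_usage = rng.gamma(shape=2.0, scale=5.0, size=n)
has_dot = (pre_usage + rng.normal(0, 5, size=n) > 10).astype(float)
messages = 2.0 + 3.0 * has_dot + 0.8 * pre_usage + rng.normal(0, 1, size=n)

# Naive estimate: raw difference in means between exposed and unexposed users.
naive = messages[has_dot == 1].mean() - messages[has_dot == 0].mean()

# Adjusted estimate: OLS of messages on an intercept, has_dot, and pre_usage.
X = np.column_stack([np.ones(n), has_dot, pre_usage])
coef, *_ = np.linalg.lstsq(X, messages, rcond=None)
adjusted = float(coef[1])

print("Naive difference in means:", round(float(naive), 2))
print("Covariate-adjusted effect:", round(adjusted, 2))
```

The adjustment works here only because the confounder is observed and enters linearly; unmeasured confounders would still bias the adjusted estimate.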

Suppose you observe a short-term spike in engagement. How do you verify that the feature’s impact is sustainable?

Post-launch novelty often leads to an initial spike in engagement. To confirm that the green dot provides a long-term benefit, you would continue monitoring chat-related metrics over an extended period. You might chart the trajectory of usage over weeks or months, analyzing whether it stabilizes above the baseline or reverts to pre-launch levels.

One rigorous approach is to track cohorts of new users over time. For each cohort that joins the platform in a given period, you can see if they sustain higher chat usage rates over several weeks compared to older cohorts. If new cohorts continue to exhibit higher engagement and do not revert to baseline usage patterns, you have a strong indicator that the feature provides lasting value.
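To check whether a launch spike persists, one simple option is to express each post-launch week as a relative lift over the pre-launch baseline and compare early weeks with later ones. The sketch below uses hypothetical weekly averages of messages per user; the function name `weekly_lift` is illustrative.

```python
def weekly_lift(baseline_weeks, post_weeks):
    """Return each post-launch week's relative lift over the baseline mean."""
    baseline = sum(baseline_weeks) / len(baseline_weeks)
    return [week / baseline - 1.0 for week in post_weeks]

# Hypothetical average messages per user per week.
baseline_weeks = [20.0, 21.0, 19.0, 20.0]            # before launch
post_weeks = [26.0, 25.0, 23.0, 22.0, 22.0, 22.0]    # after launch

lifts = weekly_lift(baseline_weeks, post_weeks)
early_lift = sum(lifts[:2]) / 2    # first two weeks after launch
late_lift = sum(lifts[-3:]) / 3    # most recent three weeks

print("Weekly lift:", [round(x, 2) for x in lifts])
print("Early lift:", round(early_lift, 3), "Late lift:", round(late_lift, 3))
```

In this made-up series the lift decays from about 30% to a plateau of about 10%, which would suggest a novelty effect on top of a smaller lasting gain.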

Can you show a small Python snippet that illustrates how to compute a before-and-after difference metric?

Below is a concise example demonstrating how one might calculate a simple before-and-after difference for a single group. This is not a comprehensive difference-in-differences setup with a control group, but it highlights how to do a straightforward time-based comparison in Python:

```python
import pandas as pd

# Illustrative data only; in practice df would come from your metrics store.
# Columns: 'date', 'messages_sent', and 'is_after_launch', a boolean
# indicating whether the date falls after the feature launch.
df = pd.DataFrame({
    'date': pd.date_range('2024-01-01', periods=6, freq='D'),
    'messages_sent': [100, 104, 98, 110, 115, 112],
    'is_after_launch': [False, False, False, True, True, True],
})

# Calculate average messages before and after launch
before_launch_avg = df.loc[~df['is_after_launch'], 'messages_sent'].mean()
after_launch_avg = df.loc[df['is_after_launch'], 'messages_sent'].mean()

# Compute the difference
impact_estimate = after_launch_avg - before_launch_avg
print("Estimated impact from before to after launch:", impact_estimate)
```

In this simplified example, we compare the mean number of messages sent before and after launch. The raw difference by itself says nothing about statistical significance and does not separate the feature’s effect from trends or seasonality. For a more sophisticated approach, you would apply difference-in-differences with an appropriate control group or other observational causal inference techniques.
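To get a rough sense of whether a before-and-after difference could be due to chance, one option is a permutation test, sketched below with hypothetical daily counts. It assumes the daily observations are exchangeable, which trends, weekly seasonality, and autocorrelation can violate, so treat the p-value as indicative only. The function name `permutation_p_value` is illustrative.

```python
import numpy as np

def permutation_p_value(before, after, n_perm=5000, seed=0):
    """Two-sided permutation test for a difference in means.
    Returns (observed difference, approximate p-value)."""
    before = np.asarray(before, dtype=float)
    after = np.asarray(after, dtype=float)
    observed = after.mean() - before.mean()
    pooled = np.concatenate([before, after])
    rng = np.random.default_rng(seed)

    hits = 0
    for _ in range(n_perm):
        shuffled = rng.permutation(pooled)  # random relabeling of days
        diff = shuffled[len(before):].mean() - shuffled[:len(before)].mean()
        if abs(diff) >= abs(observed):
            hits += 1
    # Add-one correction keeps the estimate strictly above zero.
    return float(observed), (hits + 1) / (n_perm + 1)

# Hypothetical daily message counts (in thousands).
before = [100, 102, 98, 101, 99, 103, 97, 100, 101, 99]
after = [108, 110, 107, 111, 109, 112, 106, 110, 109, 108]

observed, p_value = permutation_p_value(before, after)
print("Observed difference:", observed, "p-value:", round(p_value, 4))
```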

How do you generalize insights gained from this specific feature to future product releases without AB testing?

If the organization faces recurring constraints on running AB tests, building standardized observational frameworks is essential. Over time, you can gather best practices on selecting and tracking key metrics, identifying or constructing control groups, ruling out confounders, and applying causal inference techniques. Document these methods systematically so that future feature releases can follow similar protocols when AB testing is not possible. By developing robust systems for logging user events, capturing relevant metadata, and establishing consistent analysis pipelines, you can gradually refine and improve your post-release evaluation accuracy across multiple features.

Below are additional follow-up questions

What if some user segments experience decreased engagement while overall metrics improve?

A potential issue arises if the feature appeals to certain user groups but inadvertently discourages or confuses others. You might see an overall increase in engagement but fail to recognize that specific demographics (perhaps less tech-savvy users or those with privacy concerns) have reduced interaction. To address this:

Segment Analysis: You would break down your metrics by relevant segments (e.g., age ranges, geographic regions, job functions) to see if any subgroup experiences declines in chat usage.

Potential Explanations: Explore whether these users find the green dot too intrusive or do not understand its functionality. Collect qualitative feedback through surveys or interviews to support quantitative findings.

Remediation Strategies: If a particular user group is negatively affected, you could consider customizing the feature’s behavior (e.g., allow turning it off) for that segment. Evaluating the cost/benefit of complicated, segmented rollouts involves balancing engineering complexity and user satisfaction.

Pitfall: Overlooking subgroup differences and inferring that “overall improvement” means universal success could mask pockets of user dissatisfaction or churn.
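The segment breakdown described above can be sketched with pandas. In this made-up data set the overall messages-per-user figure rises even though one segment declines; the segment names and numbers are purely hypothetical.

```python
import pandas as pd

# Hypothetical aggregate data per segment and period.
df = pd.DataFrame({
    "segment": ["power", "power", "casual", "casual"],
    "period": ["before", "after", "before", "after"],
    "users": [800, 800, 200, 200],
    "messages": [8000, 10400, 1000, 800],
})
df["per_user"] = df["messages"] / df["users"]

# Per-segment change in messages per user.
by_segment = df.pivot(index="segment", columns="period", values="per_user")
by_segment["change"] = by_segment["after"] - by_segment["before"]

# Overall change, pooling all users together.
totals = df.groupby("period")[["messages", "users"]].sum()
overall = totals["messages"] / totals["users"]
overall_change = overall["after"] - overall["before"]

declining = by_segment.index[by_segment["change"] < 0].tolist()
print(by_segment)
print("Overall change per user:", round(overall_change, 2))
print("Segments with declining engagement:", declining)
```

Here the pooled metric improves by 2.2 messages per user while the smaller segment drops by 1, which is exactly the pattern an aggregate dashboard would hide.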

How do you distinguish new user behavior from returning user re-engagement?

When a platform has both new signups and returning users, the green dot might impact these groups differently:

Data Partition: Separate new accounts created after the feature launch from existing accounts that encountered the feature mid-usage. This ensures you do not conflate the natural honeymoon effect of new users exploring the platform with the direct effect of the green dot on existing users.

Behavioral Analysis: For returning users, track how their messaging frequency changes before versus after the feature. For new users, compare their messaging patterns to historical cohorts of new users from a period before the green dot existed.

Retention vs. Activation: Focus on metrics like first-week chat activation for new users, whereas for existing users you might emphasize re-engagement (messages per day, days active per week) or incremental usage.

Pitfall: Aggregating new and returning users might obscure the true effect if each cohort responds to the feature in opposite directions.

What if external events or platform changes coincide with the launch, making it hard to isolate the effect?

Real-world platforms often deploy multiple features or run marketing campaigns simultaneously, which complicates causal attribution:

Event Logging: Keep a detailed log of product changes, bug fixes, marketing campaigns, and other updates. For each confounding event, assess the likely impact on chat usage (e.g., a global marketing push could spike all user engagement).

Segmented Timeline Analysis: If possible, isolate user activity in intervals around each potential confounding event. Compare how engagement shifted relative to that timeframe.

Controlled Interruptions: If you cannot avoid simultaneous changes, consider rolling out each new feature in time-staggered phases to help disentangle their individual effects.

Pitfall: Failing to systematically track concurrent changes can lead to invalid conclusions about which feature drove observed behavior shifts.

How can hierarchical or mixed-effects modeling help in situations with widely varying user cohorts?

In large-scale platforms, some cohorts might have extremely high usage while others barely use the chat feature. Simple aggregated methods (like a single average) might not capture these differences:

Random Effects: By using mixed-effects models, you can include random intercepts for different user segments (like business vs. casual users) or for individual users themselves. This approach accounts for intrinsic differences between groups or individuals.

Granular Covariates: Incorporate features such as user tenure (how long they have been on the platform), job role, or monthly usage rate prior to the launch. This helps the model adjust for baseline differences.

Interpretability: The fixed effect of interest (presence of the green dot) is then estimated while controlling for random effects across user segments. This yields more robust estimates of the feature’s overall impact.

Pitfall: A model with only fixed effects (that is, one that pools all users and ignores group-level random effects) can be misleading if significant inter-user variability exists. Neglecting that variability may produce inflated or underestimated effects.

How do you conduct a cost-benefit analysis for this feature?

Measuring effectiveness also means quantifying the value it brings versus the resources expended:

Engineering Costs: Estimate the development and maintenance overhead of continuously showing real-time statuses, possibly including server-side logic or additional data transmissions.

Infrastructure Costs: Real-time presence indicators can require frequent updates and database writes, increasing operational costs.

Engagement Value: Estimate how increased engagement translates to the platform’s key performance indicators. For instance, more chat messages might lead to more time spent on the platform or higher advertising revenue.

Opportunity Cost: Consider whether the resources for building and maintaining this feature could have been allocated to alternative initiatives, and compare the potential returns on those alternatives.

Pitfall: Underestimating maintenance and scale-out costs can lead you to assume a feature is more beneficial than it really is, especially if the necessary real-time infrastructure is expensive.

What if the user sentiment regarding the feature is negative?

Even if engagement metrics rise, some users might feel uncomfortable with broadcasting their online status:

Qualitative Inputs: Incorporate user surveys, feedback forms, or direct interviews to assess sentiment. Negative feedback might indicate privacy concerns or annoyance at being messaged too frequently.

Sentiment Analysis: Examine social media, app store reviews, or in-app comments. Monitor for keywords suggesting frustration, privacy issues, or confusion about the feature.

Balancing Act: If negative perception significantly outweighs any engagement gains, it may harm brand loyalty or trust. Offer easy opt-out options, or provide clear disclaimers about data usage.

Long-Term Reassessment: Reassess how the negativity evolves over time. Early pushback might subside as users grow accustomed to the feature, but persistent complaints may justify rethinking or modifying it.

Pitfall: Focusing solely on numeric metrics—like increased chat messages—while ignoring user concerns can result in deteriorated user satisfaction and higher attrition in the long run.

How do you ensure data reliability if there is incomplete or delayed tracking?

Post-launch analyses rely heavily on consistent, accurate data pipelines:

Monitoring Logs and Alerting: Implement automated checks that verify the volume of events received matches expected baselines. Sharp drops or spikes can signal tracking issues.

Redundant Tracking Methods: To minimize data loss, record key events (like chat initiations) using multiple systems or write to multiple storage solutions.

Delayed Data Availability: If metrics are computed in a batch process, there might be a latency of several hours or days. Plan analyses accordingly so you do not base critical decisions on partial data or incorrectly assume real-time completeness.

Data Quality Audits: Periodically audit the raw data to catch anomalies such as duplicate events or missing fields.

Pitfall: Drawing conclusions or implementing product decisions on flawed or incomplete data can lead to misguided changes. A robust data validation strategy prevents spurious findings.
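An automated volume check of the kind listed above might look like the following sketch, which flags days whose event count deviates sharply from a trailing window. The function name, window length, and threshold are assumptions to tune for your own pipeline, and the counts are made up.

```python
import statistics

def flag_volume_anomalies(daily_counts, window=7, threshold=3.0):
    """Return indices of days whose count is more than `threshold` sample
    standard deviations away from the mean of the preceding `window` days."""
    flagged = []
    for i in range(window, len(daily_counts)):
        history = daily_counts[i - window:i]
        mean = statistics.mean(history)
        stdev = statistics.stdev(history)
        deviation = abs(daily_counts[i] - mean)
        # With a perfectly flat history, any change at all is suspicious.
        if (stdev == 0 and deviation > 0) or deviation > threshold * stdev > 0:
            flagged.append(i)
    return flagged

# Hypothetical daily counts of chat-initiation events; day 8 looks like a tracking outage.
counts = [1000, 1010, 990, 1005, 995, 1002, 998, 1001, 400, 1003]
flagged = flag_volume_anomalies(counts)
print("Days flagged for review:", flagged)
```

Note that an anomalous day inflates the standard deviation of the windows that follow it, so a production version might exclude flagged days from the history or use a robust measure such as the median absolute deviation.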

How do you avoid or detect Hawthorne effects if users realize they are being observed?

When a new feature launches, some users may consciously change their behavior because they know usage is being monitored:

Longitudinal Observation: Track metrics for an extended period. If users’ behavior is artificially elevated initially, they might revert to normal usage patterns over time once the novelty wears off.

Compare Patterns of Conscious vs. Unconscious Use: If possible, examine background metrics (like reading messages) that users do not expect you to track. Check whether changes in these “unobserved” behaviors align with changes in more visible behaviors.

Anonymous Tracking: Minimize emphasis on the measurement aspect. Avoid pop-ups or disclaimers that explicitly call attention to measuring their activity so you do not induce reactivity.

Contrasting with Historical Data: Compare current user behavior with historical data for similar platform changes to see if a short-term “awareness spike” recurs or if the behavior shift is deeper.

Pitfall: Overestimating the feature’s true impact if the measured activity is elevated by users’ knowledge of observation. This can lead to incorrect assumptions about sustainable engagement.

How does varying usage between mobile and desktop platforms affect the analysis?

Different device usage patterns can confound the understanding of feature success:

Device-Specific Tracking: Segment your metrics by mobile, desktop, and potentially tablet or other devices. The prominence and UI of the green dot might differ by platform.

Feature Parity: Ensure the feature is implemented consistently across platforms. If one platform releases the green dot before another, you can exploit that staggered rollout for a quasi-experimental design.

Behavior Differences: Some users may primarily chat on mobile while others prefer desktop. The same presence indicator could affect these groups differently, potentially leading to unique engagement trajectories.

Pitfall: Aggregating across devices can blur platform-specific nuances. You may erroneously attribute an overall effect to the green dot when it is actually due to a device-specific user interface difference.