ML Interview Q Series: Why is it important to evaluate and measure bias in meal preparation time prediction models?


Comprehensive Explanation

Bias in a predictive model refers to a systematic tendency to produce certain kinds of errors more often, or more severely, for particular segments of data. In meal preparation time prediction for food delivery, measuring bias is crucial because the model’s predictions have significant downstream effects on customers, restaurant operations, and delivery drivers. If preparation times are systematically underestimated or overestimated for certain groups (for instance, specific restaurant types, cuisines, or other categories), then service reliability and fairness can be compromised.

Bias can arise from various factors: skewed training data (e.g., over-representation of certain cuisines), historical operational patterns that do not generalize well, or assumptions made while building features. If these biases are left unmonitored, the model can lead to adverse outcomes such as disproportionately negative user experiences or missed delivery SLAs (service-level agreements).

Key potential impacts of unchecked bias in a food delivery context:

Certain restaurants or times of day might show systematically higher errors. This could affect how order batching or driver routes are planned.

Overestimated preparation times could cause drivers to be dispatched later than necessary, so finished food sits waiting at the restaurant and deliveries arrive later than they need to.

Underestimated preparation times could send drivers to the restaurant too early, leaving them waiting at pickup, and could hurt customer satisfaction, since users might expect a quicker delivery than is realistically possible.

Future business decisions, such as promotions or resource allocations, might be skewed if the predictions systematically favor certain cuisines or geographic regions.

Therefore, measuring bias is about ensuring accuracy, fairness, and consistency for all types of data within this domain.

Mathematical Representation of Bias

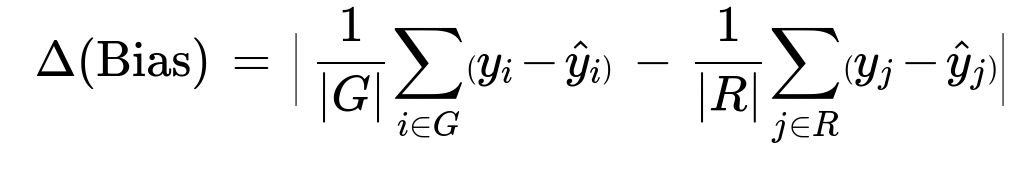

One way to quantify bias is by comparing how the model performs across different subgroups or categories (for example, restaurants in different geographical locations, different cuisine types, or varied operational loads). A basic approach might be to look at the difference in mean residuals for various segments. Here is a possible formula that can capture a portion of bias by comparing average residuals:

Where:

G is the subset of data (a specific group, for example, a particular cuisine or restaurant chain).

R is the rest of the data (or the complement set).

y_i is the actual preparation time for the i-th order in group G.

hat{y}_i is the predicted preparation time for the i-th order in group G.

Summations for y_j and hat{y}_j follow similarly for the complement group R.

If the difference in average residuals for one group compared to another is large, it indicates that the model systematically favors or disadvantages that group. Other forms of bias measurement might look at distribution differences in error, confidence intervals, or more nuanced fairness metrics.
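The mean-residual comparison described above can be sketched in a few lines of Python. This is a minimal illustration rather than a production metric: the function name `mean_residual_gap`, the boolean `in_group` mask, and the sample preparation times are all hypothetical.

```python
from statistics import mean

def mean_residual_gap(actual, predicted, in_group):
    """Mean residual of group G minus mean residual of the rest R.

    Residuals are actual minus predicted, so a positive gap means the model
    underestimates G more (or overestimates it less) than it does R.
    """
    group = [y - y_hat for y, y_hat, g in zip(actual, predicted, in_group) if g]
    rest = [y - y_hat for y, y_hat, g in zip(actual, predicted, in_group) if not g]
    if not group or not rest:
        raise ValueError("both the group and its complement need at least one order")
    return mean(group) - mean(rest)

# Hypothetical preparation times in minutes; the first three orders form G.
actual = [20.0, 25.0, 30.0, 15.0, 18.0, 12.0]
predicted = [15.0, 20.0, 24.0, 15.0, 19.0, 11.0]
in_group = [True, True, True, False, False, False]

gap = mean_residual_gap(actual, predicted, in_group)
print(f"mean residual gap: {gap:.2f} minutes")
```

In this made-up sample the group's residuals average a little over five minutes while the rest average zero, so the gap points to underestimation for the group.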

Practical Considerations for Measuring and Mitigating Bias

Data Collection and Representativeness

One of the main steps is ensuring the dataset accurately represents different restaurant types, geographical locations, cuisines, and operational conditions (peak vs. off-peak hours). If the data lacks representation for certain subpopulations, the model may inherit biases.

Feature Engineering

Choosing appropriate features and transformations is vital. For instance, the model might leverage restaurant category, typical cooking complexity, and historical preparation times. However, if these features correlate strongly with a characteristic that leads to systematic errors, it is essential to investigate how and why.

Monitoring Residuals Across Segments

During model evaluation, residuals should be examined across various subgroups (time of day, day of the week, type of restaurant, etc.). Large disparities might flag issues that can be linked back to data, hyperparameter settings, or distribution shifts.

Regular Auditing and Retraining

Real-world settings have changing dynamics (concept drift). For instance, a restaurant may change its kitchen staff or streamline operations, drastically altering preparation times. Regular model monitoring, retraining on fresh data, and ongoing bias checks help keep the model updated and fair.

Transparency and Stakeholder Feedback

Sometimes bias is not discovered solely by metrics. Soliciting feedback from restaurant owners, delivery drivers, and customers can reveal subtle patterns of error that might not be evident from purely quantitative measures.

Follow-Up Questions

How do you detect whether your model systematically underestimates or overestimates preparation times for a particular group?

To identify systematic misestimation, you can break down your data based on group attributes (e.g., cuisine type or restaurant location) and calculate the average residual (actual minus predicted) for each group. If the average residual for a specific group is significantly positive, the model is underestimating actual preparation times for that group. Conversely, if it is significantly negative, the model is overestimating them. Additional tools like distribution plots of residuals (e.g., histograms or box plots) can help visualize group-specific differences. Statistical significance tests can then help confirm that the observed disparities are not just random noise.
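As a rough sketch of that breakdown, the snippet below groups signed residuals by a segment label and computes a one-sample t-statistic for each group. The function name `residual_report`, the cut-off of 2.0, and the sample orders are assumptions made for illustration; a real analysis would choose a proper significance test and correct for multiple comparisons.

```python
from collections import defaultdict
from math import sqrt
from statistics import mean, stdev

def residual_report(records, t_threshold=2.0):
    """Summarise signed residuals (actual - predicted) for each group.

    records: iterable of (group, actual_minutes, predicted_minutes).
    t_threshold: rough cut-off on the one-sample t-statistic; 2.0 is an
    illustrative choice, not a calibrated significance level.
    """
    by_group = defaultdict(list)
    for group, actual, predicted in records:
        by_group[group].append(actual - predicted)

    report = {}
    for group, residuals in by_group.items():
        n = len(residuals)
        avg = mean(residuals)
        spread = stdev(residuals) if n > 1 else 0.0
        # t-statistic for "mean residual is zero"; undefined without spread.
        t_stat = avg / (spread / sqrt(n)) if spread > 0 else None
        if t_stat is None or abs(t_stat) < t_threshold:
            verdict = "no clear bias"
        elif t_stat > 0:
            verdict = "underestimates"
        else:
            verdict = "overestimates"
        report[group] = {"n": n, "mean_residual": avg, "t_stat": t_stat, "verdict": verdict}
    return report

# Hypothetical orders: (cuisine, actual minutes, predicted minutes).
orders = [
    ("sushi", 30, 24), ("sushi", 28, 23), ("sushi", 33, 26), ("sushi", 29, 25),
    ("pizza", 15, 19), ("pizza", 14, 18), ("pizza", 16, 21), ("pizza", 13, 16),
    ("salad", 10, 9), ("salad", 8, 10), ("salad", 11, 10), ("salad", 9, 10),
]
report = residual_report(orders)
for cuisine, row in report.items():
    print(cuisine, row["mean_residual"], row["verdict"])
```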

Suppose a particular subset of restaurants frequently exhibits inaccurate estimates. What practical steps can you take to mitigate these issues?

When a particular subset of restaurants shows consistently large errors, you can:

Investigate Data Quality: Verify whether the training set for these restaurants was too small or contained erroneous data.

Collect Additional Data: Increase sampling or gather more historical data specifically for these restaurants.

Introduce Specialized Features: Include features capturing unique operational aspects of these restaurants, such as staffing levels, typical order sizes, or meal complexity.

Build a Sub-Model: In some cases, create specialized sub-models for restaurants or cuisines that differ significantly from the general population.

Use Transfer Learning or Domain Adaptation: If you have a strong model for general restaurants, adapt it with fine-tuning on the specific subgroup’s data to narrow the performance gap.

Could there be real-world consequences for the drivers if the model is biased?

Yes, drivers rely heavily on accurate preparation time estimates:

Underestimates send drivers to the restaurant before the food is ready, so they wait longer at pickup, which cuts into their earnings and disrupts their schedules.

Overestimates delay dispatch, so drivers may sit idle between assignments while finished orders wait, which complicates dispatch and makes route planning less efficient.

Unfair distribution of wait times across different driver populations can create perceptions of inequity and potentially reduce driver retention.

How might bias against certain restaurants or customers lead to business or ethical concerns?

Systematic bias could, for example, penalize restaurants in certain regions by predicting longer wait times, deterring orders from those restaurants. Over the long run, it can exacerbate economic disparities or lead to reputational damage for the platform. Ethical concerns also arise if biases correlate with protected attributes (e.g., neighborhoods or demographics). Ensuring fairness not only mitigates legal risks but also upholds customer trust and brand reputation.

If the data evolves over time due to changes in restaurant operation, what strategies can help maintain fairness in future models?

Online Learning or Incremental Training: Continuously update model parameters with fresh incoming data to adapt to dynamic changes in restaurant behavior.

Model Degradation Monitoring: Set up automated pipelines to regularly compute metrics (mean error, bias differences, etc.) to trigger a retraining or recalibration event when thresholds are breached.

Periodic Bias Evaluation: Fairness checks should be integrated into the MLOps pipeline so any emergent disparities can be caught early.
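The threshold-based monitoring idea above could look roughly like the following. The class name `BiasMonitor` and its settings (`window`, `max_abs_mean_residual`, `min_orders`) are hypothetical; in practice the thresholds would reflect how much error the platform is willing to tolerate per segment.

```python
from collections import defaultdict, deque
from statistics import mean

class BiasMonitor:
    """Track recent residuals per segment and flag segments that drift.

    window and max_abs_mean_residual are hypothetical settings; real values
    would come from the platform's own tolerance for error.
    """

    def __init__(self, window=200, max_abs_mean_residual=3.0, min_orders=30):
        self.max_abs_mean_residual = max_abs_mean_residual
        self.min_orders = min_orders
        self._residuals = defaultdict(lambda: deque(maxlen=window))

    def record(self, segment, actual_minutes, predicted_minutes):
        self._residuals[segment].append(actual_minutes - predicted_minutes)

    def breached_segments(self):
        """Return {segment: mean residual} for segments over the threshold."""
        breached = {}
        for segment, residuals in self._residuals.items():
            if len(residuals) < self.min_orders:
                continue  # too few recent orders to judge
            avg = mean(residuals)
            if abs(avg) > self.max_abs_mean_residual:
                breached[segment] = avg
        return breached

monitor = BiasMonitor(window=50, max_abs_mean_residual=3.0, min_orders=10)
for _ in range(20):
    monitor.record("downtown", actual_minutes=25, predicted_minutes=20)  # +5 each
    monitor.record("suburbs", actual_minutes=18, predicted_minutes=17)   # +1 each

needs_retraining = monitor.breached_segments()
print(needs_retraining)
```

A non-empty result would be the signal to trigger retraining or recalibration for the listed segments.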

How do you handle the trade-off between improving overall accuracy versus reducing bias in specific subgroups?

It is often a balancing act, where you may need to slightly compromise overall accuracy to rectify large disparities for certain segments. Techniques like reweighting training data to emphasize minority groups, using fairness-aware algorithms, or multi-objective optimization can help you find a reasonable solution. The final approach typically depends on business objectives, regulatory constraints, and ethical considerations. Decision-makers may accept a small drop in global accuracy if it substantially reduces unfair outcomes for specific subpopulations.
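One simple form of reweighting is to give every group the same total weight during training. The sketch below is one possible scheme, not the only one; the function name `inverse_frequency_weights` and the cuisine labels are hypothetical.

```python
from collections import Counter

def inverse_frequency_weights(groups):
    """Return one weight per example so each group has equal total weight.

    Weights are scaled so that they average to 1 across the dataset.
    """
    counts = Counter(groups)
    n_groups = len(counts)
    n_total = len(groups)
    return [n_total / (n_groups * counts[g]) for g in groups]

# Hypothetical training set dominated by one cuisine.
groups = ["burger"] * 6 + ["ramen"] * 2
weights = inverse_frequency_weights(groups)
print(weights)
```

Weights like these are typically passed to the training routine as per-example weights, for instance through the `sample_weight` argument that many scikit-learn estimators accept in `fit`. Upweighting a small group this strongly can cost some overall accuracy, which is exactly the trade-off discussed above.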

Taken together, these strategies help ensure that both customers and restaurants benefit from equitable, transparent, and robust predictive models for food preparation times.

Below are additional follow-up questions

How do you handle completely new types of cuisines or restaurants that were absent in the training data?

When a restaurant type (for example, a novel fusion cuisine) shows up in real-world usage but was not present in the training set, your model may lack the necessary historical patterns, potentially introducing bias. One practical solution is to adopt a “cold start” approach. If there is no direct historical data for this restaurant category, the model can default to the most similar known group based on extracted features (such as preparation complexity, typical ingredient usage, or average cooking duration). Another strategy is to continually update or partially retrain the model so that emerging cuisines or restaurant segments are quickly incorporated once their data becomes available.
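A minimal sketch of the "most similar known group" fallback is shown below. The cuisine profiles, the two features, and the function name `cold_start_estimate` are invented for illustration, and the features are assumed to be on comparable scales; otherwise they would need normalizing before distances are computed.

```python
from math import dist

# Hypothetical per-cuisine profiles: (complexity score 1-5, number of cooking steps)
# stored alongside the historical mean preparation time in minutes.
KNOWN_CUISINES = {
    "pizza": {"features": (2.0, 4.0), "mean_prep_minutes": 14.0},
    "sushi": {"features": (4.0, 6.0), "mean_prep_minutes": 22.0},
    "salad": {"features": (1.0, 2.0), "mean_prep_minutes": 7.0},
}

def cold_start_estimate(new_features, known=KNOWN_CUISINES):
    """Borrow the mean preparation time of the closest known cuisine."""
    nearest = min(known, key=lambda name: dist(known[name]["features"], new_features))
    return nearest, known[nearest]["mean_prep_minutes"]

# A new fusion cuisine with no history, described only by its feature vector.
nearest, estimate = cold_start_estimate((3.5, 6.0))
print(nearest, estimate)
```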

Pitfalls arise if the cold start assumption is systematically inaccurate. For instance, if you default to the “average cooking time” from other categories but the new cuisine is much faster or slower to prepare, every early prediction for it will be off in the same direction. Over time, you must monitor those new cases with specialized metrics or early anomaly detection. If the model consistently produces large errors for these new categories, it suggests your baseline assumptions might need refinement (for instance, by introducing new features or specialized sub-models).

How do you address the risk of self-fulfilling bias, where incorrect predictions feed back into future training data?

A self-fulfilling bias can happen if the model’s predicted preparation times alter real behavior, creating a feedback loop. For example, if your system predicts a much longer preparation time for certain restaurants, drivers may be dispatched later and pick up orders later. If observed preparation time is inferred from pickup, the logged times grow longer as a result, reinforcing the model’s biased assumption in subsequent training data.

Mitigating this starts with carefully designed data logging and feedback mechanisms. Store not only the final observed preparation time but also track driver and restaurant behaviors to detect changes instigated by the model’s predictions. Another strategy is to separate the data used for training from the data influenced by the predictions, ensuring that untainted ground truth is still captured. In addition, you can introduce robust modeling techniques that rely on multi-source data (not just model outputs) to retrain. A final safeguard is to regularly audit your data for artificially induced trends by comparing real outcomes under different system conditions (e.g., A/B testing with different versions of the model).

How would you incorporate domain knowledge about unusual peak times or special events into your bias-measurement strategy?

Bias can manifest more acutely during special events or holiday seasons. A restaurant that typically operates with a certain preparation time may become significantly slower during, say, a sporting event or major local festival. These edge cases can easily skew error distributions, introducing group-based bias if certain geographic areas or restaurant types are uniquely affected by those events.

One approach is to explicitly incorporate temporal or event-based features into the model (such as flags for national holidays or major local happenings). You then measure bias and residuals within these contexts: compare average errors during special events vs. normal operation. Also consider building separate sub-models or fine-tuned parameters that are specifically triggered during special events. Pitfalls occur if you assume the effect of an event remains constant across different locations or restaurant categories. In reality, some restaurants might handle spikes gracefully while others become overwhelmed. Hence, a targeted approach that examines each sub-population’s performance during these events is critical.

What if external factors like weather or traffic conditions significantly affect preparation times for certain restaurants more than others?

Weather conditions or severe traffic can influence both cooking staff availability and the scheduling of meal preparation. Some restaurants may have well-adapted kitchens or staff rotations that keep them efficient, while others slow down dramatically. This discrepancy can create bias if the model systematically overestimates or underestimates specific locations that are prone to adverse weather or traffic.

To address this, you can integrate real-time or historical weather and traffic data into your model. For instance, features could include precipitation level, temperature, or local congestion metrics. Then you would perform a bias analysis for segments grouped by weather/traffic severity. A pitfall is oversimplifying these external variables; for example, just using “rain or no rain” might mask differences between light drizzle and heavy storms. Another subtlety is that some restaurant categories might not be sensitive to weather at all (e.g., they operate with fully indoor staff and stable supply lines) while others rely on frequent deliveries for fresh ingredients.

How do you detect and mitigate biases that arise when a model’s training set is drawn predominantly from a single region or demographic?

If your food delivery platform started in one major city and expanded to numerous smaller towns, the distribution of operational patterns, cooking processes, or consumer behavior could differ substantially. This mismatch can produce location-based bias. To detect it, you can create geographical or demographic “buckets” and evaluate the model’s error distribution across them. Large discrepancies in mean absolute error or mean residual are signs of location-based bias.

Mitigation requires boosting coverage for underserved or newly entered regions. This might involve collecting more data in those zones, employing transfer learning to adapt city-specific models, or introducing location-based feature engineering (e.g., factoring in local population density or typical kitchen layouts). Pitfalls can emerge if you treat geographical segments too broadly, such as lumping rural towns into one category, when in fact each region might have unique operational nuances.

How can you ensure that your bias measurements remain valid if you pivot to a new business model or add new services?

Startups or rapidly scaling companies often shift from a pure “delivery time prediction” model to a more complex ecosystem that includes ghost kitchens, meal kits, or partnerships with large chains. Each pivot can change the fundamental data generation process. To maintain valid bias assessments, you must revisit your definitions of subgroups, outcomes, and error metrics each time the business introduces new services.

A strong approach is to maintain a flexible data schema and a modular architecture where separate pipelines handle new business lines. You can measure bias for each service type individually, then compare them. Pitfalls include failing to notice that a newly launched service might have very different operational constraints (e.g., partially pre-prepared meals or automated kitchens), which changes the nature of “preparation time” altogether. If you reuse the same old model without accounting for these differences, your historical bias analysis may not apply well.

What strategies would you use to detect unfair outcomes if you rely on advanced deep learning architectures that are less interpretable?

Deep neural networks can be highly effective but notoriously opaque. Identifying and measuring bias in these models requires specialized techniques. Possible methods include:

Feature Attribution Approaches: Tools such as Integrated Gradients or SHAP can help you identify which inputs drive the model’s decisions.

Surrogate Modeling: Train a simpler, interpretable model on the deep network’s predictions to approximate its behavior. Then check the simpler model for signs of bias.

Counterfactual Analysis: Slightly vary features to see if the predicted preparation time changes drastically for minor changes in input, which might reveal undesirable sensitivity tied to certain subgroups.

Pitfalls revolve around interpretability illusions. Sometimes, partial dependence or feature attribution may be misleading if not carefully validated. And focusing only on local interpretations might miss global patterns of bias.
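The counterfactual check listed above can be prototyped against any prediction function. In this sketch `toy_predict` is a stand-in for an opaque model, and the feature names `items` and `neighborhood` are hypothetical; the helper `counterfactual_shift` simply swaps one feature and reports how much the prediction moves.

```python
def counterfactual_shift(predict, order, feature, alternative):
    """Change in prediction when one feature is swapped and the rest held fixed."""
    altered = dict(order, **{feature: alternative})
    return predict(altered) - predict(order)

# Stand-in for an opaque model; a real check would call the deployed network.
def toy_predict(order):
    base = 8.0 + 2.0 * order["items"]
    penalty = 6.0 if order["neighborhood"] == "north" else 0.0
    return base + penalty

order = {"items": 3, "neighborhood": "south"}
shift = counterfactual_shift(toy_predict, order, "neighborhood", "north")
print(f"prediction changes by {shift} minutes when only the neighborhood changes")
```

A large shift from changing only a group-related feature is a prompt for further investigation rather than proof of unfairness, since the feature may carry legitimate operational signal.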

In a scenario where restaurants start optimizing their workflow based on your model’s predictions, how do you ensure the model remains unbiased and accurate?

Restaurants might adjust staffing or schedule meal prep more efficiently if they see that your model often overestimates their times. This can lead to a distribution shift where historical data no longer mirrors current operations. To handle this, implement continuous monitoring of both the distribution of inputs (feature drift) and the distribution of errors (residual drift). If you detect that the distribution has shifted, you can deploy frequent mini-batch retraining strategies or update the model’s parameters in near-real-time (online learning).
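One common way to put a number on feature or residual drift is the Population Stability Index (PSI), which compares binned distributions from a reference period and a recent period. The implementation below is a simplified sketch with equal-width bins; the bin count, the `eps` floor for empty bins, and the sample residuals are illustrative assumptions.

```python
from math import log

def population_stability_index(reference, current, n_bins=5, eps=1e-6):
    """PSI between two samples, using equal-width bins over the reference range."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / n_bins or 1.0

    def bin_shares(values):
        counts = [0] * n_bins
        for v in values:
            # Clamp so values outside the reference range land in the edge bins.
            idx = min(max(int((v - lo) / width), 0), n_bins - 1)
            counts[idx] += 1
        return [max(c / len(values), eps) for c in counts]

    ref_shares, cur_shares = bin_shares(reference), bin_shares(current)
    return sum((c - r) * log(c / r) for r, c in zip(ref_shares, cur_shares))

# Hypothetical residuals (minutes) before and after restaurants streamline their kitchens.
before = [-2, -1, 0, 1, 2, -1, 0, 1, 0, 0]
after = [v - 3 for v in before]  # the model now overestimates by about 3 minutes

psi_same = population_stability_index(before, before)
psi_shifted = population_stability_index(before, after)
print(psi_same, psi_shifted)
```

A frequently quoted rule of thumb treats a PSI above roughly 0.25 as a substantial shift, but the cut-off that should trigger retraining is a judgment call for each platform.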

A major pitfall is assuming stable environment behavior. In reality, once restaurants optimize, their average preparation times might systematically drop, invalidating old assumptions. If the model fails to adapt, it could still cling to outdated patterns, creating a new source of bias. Maintaining a healthy feedback loop with restaurants—perhaps in the form of questionnaires or direct performance metrics—can provide context about operational changes.

Could personal data or sensitive attributes inadvertently creep into your feature set, and how would this distort bias measurement?

Sensitive attributes like race, ethnic origin, or socio-economic status of a neighborhood can make the model inadvertently discriminate if not handled carefully. Even if these attributes are excluded, proxy variables (like ZIP codes) could indirectly encode sensitive information. This can distort bias measurements by concealing underlying protected-group effects.

One approach is to identify potential proxy features, run fairness or bias audits (like disparate impact analysis) to see if certain subgroups are disproportionately affected, and possibly remove or transform features correlated with protected attributes. Pitfalls include overly aggressive feature removal, which can reduce model performance without truly solving the root cause of bias. A more nuanced approach might involve fairness constraints, re-weighting techniques, or adversarial debiasing to systematically neutralize the influence of sensitive variables.

How do you communicate bias findings to stakeholders (e.g., product owners, executives, and restaurant partners) who may not be familiar with technical metrics?

Effective communication of bias goes beyond presenting numbers. You may illustrate your findings using visual dashboards showing prediction errors across subgroups, color-coded maps indicating regions with higher residuals, or interactive timelines that track how bias changes over time. Employ plain language to describe “overestimation” or “underestimation” and relate it to practical outcomes such as driver wait times, customer dissatisfaction, or operational cost overruns.

One potential pitfall is providing an overwhelmingly technical explanation that leaves non-technical stakeholders unsure about the relevance. Another is oversimplifying, which could hide the complexity of underlying causes. Balancing clarity and accuracy is key—aligning your metrics (e.g., average residual, root mean squared error by subgroup) with real-world consequences fosters a shared understanding. This clarity is essential when deciding on potential interventions, resource allocation, or strategic pivots that address model bias.