Modular Duality in Deep Learning

Making gradient descent respect the geometry of your neural network

A mathematically rigorous way to make gradients and weights play nice together

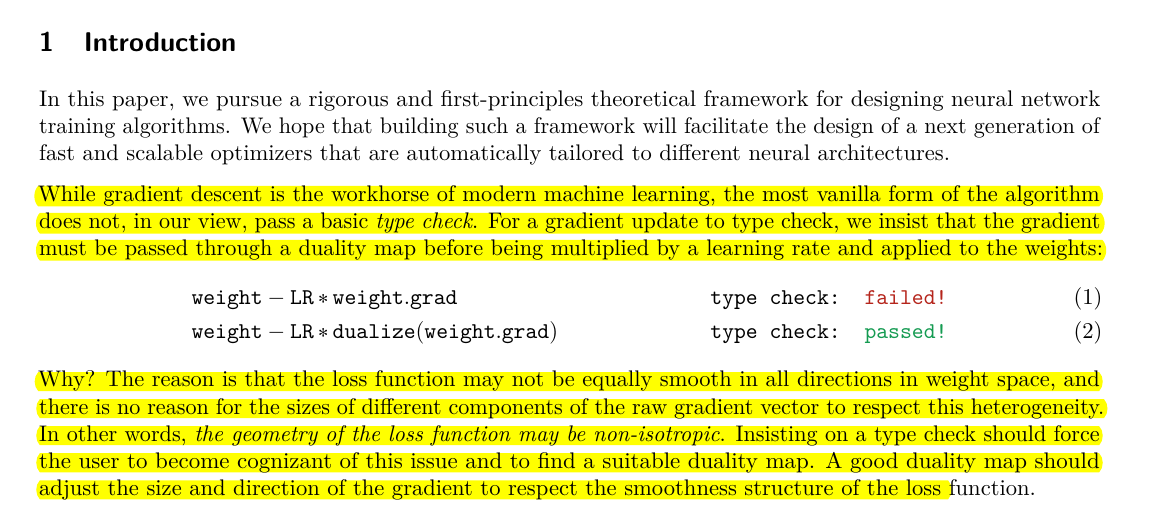

🎯 Original Problem:

Raw gradient descent has a fundamental type mismatch. The gradient is a dual vector (a linear functional on weight space), not a vector in weight space, so subtracting it directly from the weights mixes two different mathematical spaces. The loss may also be much smoother in some directions than in others, and the raw gradient does not account for this geometry.
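Here is a minimal sketch of the mismatch, assuming NumPy and a single d_out × d_in linear layer. A duality map picks the unit-norm weight change that best aligns with the gradient, so its output depends on which norm is chosen. Under the Euclidean (Frobenius) norm it only rescales the gradient. Under the RMS→RMS operator norm, which is assumed here for Linear layers, it replaces the gradient G = UΣVᵀ by √(d_out/d_in)·UVᵀ and so flattens every singular value. The function names are illustrative and are not taken from the paper's code.

```python
import numpy as np


def euclidean_dual(grad: np.ndarray) -> np.ndarray:
    """Duality map under the Frobenius norm: the gradient rescaled to unit norm."""
    return grad / np.linalg.norm(grad)


def spectral_dual(grad: np.ndarray) -> np.ndarray:
    """Duality map under the RMS->RMS operator norm of a (d_out, d_in) layer.

    With the reduced SVD grad = U S V^T, this returns sqrt(d_out / d_in) * U V^T,
    the weight change of unit RMS->RMS norm that maximizes <grad, change>.
    """
    d_out, d_in = grad.shape
    u, _, vt = np.linalg.svd(grad, full_matrices=False)
    return np.sqrt(d_out / d_in) * (u @ vt)


rng = np.random.default_rng(0)
grad = rng.standard_normal((4, 8))

raw = euclidean_dual(grad)
dual = spectral_dual(grad)

# The rescaled raw gradient keeps the uneven singular values of grad,
# while the spectral dual sets all of them to sqrt(4 / 8).
print("raw :", np.round(np.linalg.svd(raw, compute_uv=False), 3))
print("dual:", np.round(np.linalg.svd(dual, compute_uv=False), 3))
```

A descent step would then be W ← W - η·spectral_dual(G) for some learning rate η.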

🔧 Solution in this Paper:

• Introduces "modular dualization", a systematic way to construct duality maps (maps that turn gradients into weight updates) for neural networks

• Works in 3 steps:

Assign operator norms to layers based on their semantics

Build duality maps for individual layers

Recursively combine layer maps into a single network-wide map

• Proposes 3 practical implementations:

Sketching using randomized methods

Iterations for inverse matrix roots

Novel rectangular Newton-Schulz iteration for stable GPU computations
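The Newton-Schulz idea can be sketched as follows, assuming NumPy. The iteration approximates UVᵀ using only matrix multiplications, which makes it attractive on GPUs compared with an explicit SVD. This sketch uses the classic cubic update X ← 1.5X - 0.5XXᵀX, not the paper's exact rectangular variant, whose polynomial and step count may differ. The function name and default step count are hypothetical.

```python
import numpy as np


def newton_schulz_orthogonalize(grad: np.ndarray, steps: int = 40) -> np.ndarray:
    """Approximate U @ V^T for grad = U S V^T using only matrix products.

    Classic cubic Newton-Schulz: X <- 1.5 X - 0.5 X X^T X, which pushes every
    nonzero singular value toward 1 while leaving the singular vectors unchanged.
    """
    # Dividing by the Frobenius norm puts all singular values in (0, 1],
    # inside the iteration's convergence region (0, sqrt(3)).
    x = grad / np.linalg.norm(grad)
    # Iterate on the wide orientation so the Gram matrix x @ x.T is the smaller one.
    transposed = x.shape[0] > x.shape[1]
    if transposed:
        x = x.T
    for _ in range(steps):
        x = 1.5 * x - 0.5 * (x @ x.T) @ x
    return x.T if transposed else x


rng = np.random.default_rng(0)
wide = rng.standard_normal((6, 12))
tall = rng.standard_normal((12, 6))

for g in (wide, tall):
    u, _, vt = np.linalg.svd(g, full_matrices=False)
    err = np.max(np.abs(newton_schulz_orthogonalize(g) - u @ vt))
    print(g.shape, f"max deviation from SVD result: {err:.2e}")
```

If the RMS→RMS norm is assumed for a Linear layer, multiplying the result by √(d_out/d_in) gives that layer's duality map without ever calling an SVD.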

💡 Key Insights:

• Unifies and generalizes two major approaches, maximal update parameterization (μP) and the Shampoo optimizer, under one framework

• Provides a type system for deep learning

• Shows how to turn gradients into weight updates that respect each layer's semantics

• Offers new insights into weight change dynamics at large network widths
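The Shampoo connection can be checked numerically. Suppose Shampoo's preconditioners are built from the current gradient alone, with no accumulation across steps. Its update (GGᵀ)^(-1/4) G (GᵀG)^(-1/4) then reduces to UVᵀ, which is the orthogonalized direction a spectral-norm duality map produces, up to scale. This sketch assumes NumPy and computes pseudo-inverse fourth roots by eigendecomposition, standing in for the iterative inverse-root methods listed under the solution. The helper names and tolerance are hypothetical.

```python
import numpy as np


def inverse_root(mat: np.ndarray, p: int, rel_tol: float = 1e-10) -> np.ndarray:
    """Pseudo-inverse p-th root of a symmetric PSD matrix via eigendecomposition.

    Eigenvalues at or below rel_tol * (largest eigenvalue) are treated as zero,
    so rank-deficient Gram matrices from rectangular gradients are handled.
    """
    evals, evecs = np.linalg.eigh(mat)
    keep = evals > rel_tol * evals.max()
    inv_root = np.zeros_like(evals)
    inv_root[keep] = evals[keep] ** (-1.0 / p)
    # evecs * inv_root scales column j of evecs by inv_root[j].
    return (evecs * inv_root) @ evecs.T


def shampoo_direction(grad: np.ndarray) -> np.ndarray:
    """Shampoo update direction with no accumulation: (G G^T)^(-1/4) G (G^T G)^(-1/4)."""
    left = inverse_root(grad @ grad.T, 4)
    right = inverse_root(grad.T @ grad, 4)
    return left @ grad @ right


rng = np.random.default_rng(1)
grad = rng.standard_normal((6, 12))
u, _, vt = np.linalg.svd(grad, full_matrices=False)
print("matches U V^T:", np.allclose(shampoo_direction(grad), u @ vt))
```

Real Shampoo accumulates GGᵀ and GᵀG over many steps, so in general its update differs from this single-step version.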

📊 Results:

• A variant of the method, built on the rectangular Newton-Schulz iteration, set new speed records for NanoGPT training

• Computes duality maps in a numerically stable way on GPUs

• Derives duality maps for key layer types: Linear, Embed, and Conv2D

• Demonstrates practical scalability for transformer architectures
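Conv2D can be handled by reusing the Linear case. This is a minimal sketch under several assumptions: NumPy, a square kernel stored as a (d_out, d_in, k, k) array, and a Conv2D norm equal to k² times the largest RMS→RMS norm over kernel positions. That norm is an assumption and should be checked against the paper. Because the norm is a maximum over positions, maximizing ⟨G, ΔW⟩ splits into one problem per position. Each slice G[:, :, i, j] therefore gets its own orthogonalized update, scaled by √(d_out/d_in)/k². The explicit SVD is for clarity; a matmul-only iteration could replace it on a GPU.

```python
import numpy as np


def dualize_conv2d(grad: np.ndarray) -> np.ndarray:
    """Duality map for a Conv2D gradient of shape (d_out, d_in, k, k).

    Assumes the norm k^2 * max_{i,j} ||W[:, :, i, j]||_{RMS->RMS}, so each kernel
    position is dualized independently and scaled by sqrt(d_out / d_in) / k^2.
    """
    d_out, d_in, k, k_other = grad.shape
    if k != k_other:
        raise ValueError("expected a square kernel")
    scale = np.sqrt(d_out / d_in) / k**2
    out = np.empty_like(grad)
    for i in range(k):
        for j in range(k):
            u, _, vt = np.linalg.svd(grad[:, :, i, j], full_matrices=False)
            out[:, :, i, j] = scale * (u @ vt)
    return out


rng = np.random.default_rng(0)
grad = rng.standard_normal((8, 4, 3, 3))
update = dualize_conv2d(grad)
# Every kernel slice now has all singular values equal to sqrt(8 / 4) / 9.
print(np.round(np.linalg.svd(update[:, :, 0, 0], compute_uv=False), 4))
```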

🎯 The paper introduces "modular dualization", a systematic procedure to construct duality maps for general neural networks. It works in 3 steps:

Assign operator norms to individual layers based on their semantics

Construct duality maps for individual layers

Recursively induce a single duality map on the full network architecture based on layer maps.
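The three steps can be sketched for a toy two-layer network, assuming NumPy. Steps 1 and 2 pair each layer type with a norm through its duality map. Linear layers use the RMS→RMS operator norm. Embed layers use the ℓ1→RMS operator norm, whose dual rescales each embedding column to unit RMS. Both norm choices are assumptions of this sketch. For step 3, the sketch assumes a network norm of the form max over layers of ‖W_l‖_l / s_l. Under such a norm the steepest-descent problem splits by layer, so the network-wide map just scales each layer's dual by s_l. In the paper these per-layer factors come from its recursive composition rules. Here they are hypothetical constants, and all layer names are hypothetical too.

```python
import numpy as np


def dualize_linear(grad: np.ndarray) -> np.ndarray:
    """RMS->RMS operator norm: sqrt(d_out / d_in) * U V^T from the reduced SVD."""
    d_out, d_in = grad.shape
    u, _, vt = np.linalg.svd(grad, full_matrices=False)
    return np.sqrt(d_out / d_in) * (u @ vt)


def dualize_embed(grad: np.ndarray) -> np.ndarray:
    """l1->RMS operator norm (largest column RMS): rescale each column to unit RMS.

    Columns that are exactly zero, such as tokens absent from the batch, stay zero.
    """
    rms = np.sqrt(np.mean(grad**2, axis=0, keepdims=True))
    return np.divide(grad, rms, out=np.zeros_like(grad), where=rms > 0)


# Steps 1 and 2: each layer type carries a norm, represented by its duality map.
DUALIZERS = {"linear": dualize_linear, "embed": dualize_embed}


def dualize_network(grads, layer_types, scales):
    """Step 3: under the norm max_l ||W_l||_l / s_l, dualize layer by layer and scale by s_l."""
    return {name: scales[name] * DUALIZERS[layer_types[name]](g) for name, g in grads.items()}


rng = np.random.default_rng(0)
vocab, width, n_out = 100, 16, 10
weights = {"emb": rng.standard_normal((width, vocab)), "head": rng.standard_normal((n_out, width))}
grads = {"emb": rng.standard_normal((width, vocab)), "head": rng.standard_normal((n_out, width))}
layer_types = {"emb": "embed", "head": "linear"}
scales = {"emb": 0.5, "head": 0.5}  # hypothetical per-layer factors

updates = dualize_network(grads, layer_types, scales)
lr = 0.1
weights = {name: w - lr * updates[name] for name, w in weights.items()}
```

Keeping the per-layer maps in a lookup table mirrors the modular idea: supporting a new layer type only needs a new norm and duality map, and the network-level rule stays unchanged.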

💡 Key implications and potential impact of this Paper

The framework:

Provides a type system for deep learning

Enables faster training methods (a variant has already set speed records for NanoGPT training)

Unifies different optimization approaches under one theoretical framework

Helps clarify activation-update alignment in neural networks

Offers new insights into weight change dynamics at large widths.