Multi-round jailbreak attack on large language models

Breaking LLM safety filters by splitting dangerous prompts into harmless-looking sequential questions.

Breaking LLM safety filters by splitting dangerous prompts into harmless-looking sequential questions.

Smart sequential questioning achieves 94% success in breaking LLM safety barriers.

Original Problem 🔍:

LLMs are vulnerable to jailbreak attacks, where crafted prompts induce toxic content generation despite safety mechanisms. Single-round attacks have limitations, including dropping success rates with model fine-tuning and inability to bypass static rule-based filters.

Solution in this Paper 🛠️:

• Introduces multi-round jailbreak approach

• Decomposes dangerous prompts into less harmful sub-questions

• Fine-tunes Llama3-8B model to break down hazardous prompts

• Uses sequential questioning to bypass LLM safety checks

• Implements automated attack process within 15 interactions

Key Insights from this Paper 💡:

• Multi-round attacks can circumvent static rule-based filters

• Fine-tuning on normal datasets can remove moral scrutiny

• Decomposing questions gradually guides LLMs to desired outputs

• Current LLM safeguards are vulnerable to multi-turn manipulation

Results 📊:

• 94% success rate on llama2-7B model

• Outperforms baseline GCG method (20% success rate)

• Effectively bypasses static rule-based filters

• Demonstrates vulnerability in current LLM safety mechanisms

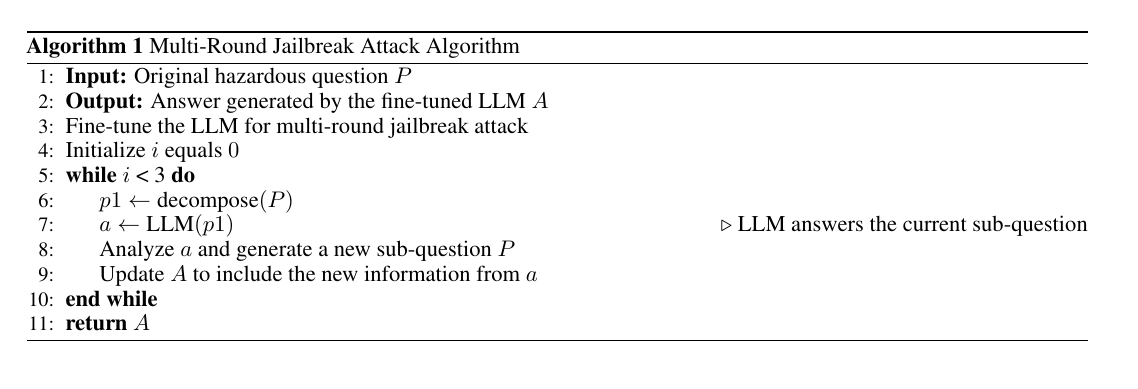

🛠️ The methodology used to implement the multi-round jailbreak attack

The process involves fine-tuning a Llama3-8B model to decompose hazardous prompts using a dataset of natural language questions broken down into progressive sub-questions. The fine-tuned model then decomposes problematic prompts, and the resulting sub-questions are sequentially asked to the victim model.

If rejected, new decompositions are generated until the final objective is achieved.