🧠 New Anthropic research raises a question: Is "not knowing" actually safer?

Anthropic’s SGTM for safe LLM unlearning, Claude Code lands in Slack, AI agent standards and Nvidia vs Google scaling economics.

Read time: 13 min

📚 Browse past editions here.

(I publish this newsletter daily. Noise-free, actionable, applied-AI developments only.)

⚡ In today’s Edition (10-Dec-2025):

🧠 New Anthropic research raises a question: Is “not knowing” actually safer?

🌍 Today’s Sponsor: Yutori, the always-on AI team of agents is going to be generally available to everyone from today

📎 Anthropic’s Claude Code is making its way to Slack, and the impact of that is larger than most people think.

👨‍🔧 Today’s Sponsor: Nebius just launched its post-training platform, Nebius Token Factory

📡 Nvidia v. Google, Scaling Laws, and the Economics of AI: The most important podcast of this week

📢 OpenAI, Anthropic, and Block join new Linux Foundation effort to standardize the AI agent era

🧠 Anthropic’s new research - Selective GradienT Masking (SGTM) trains an LLM so that high risk knowledge (e.g. about dangerous weapons) gets packed into a small set of weights that can later be deleted with minimal damage to the rest of the model.

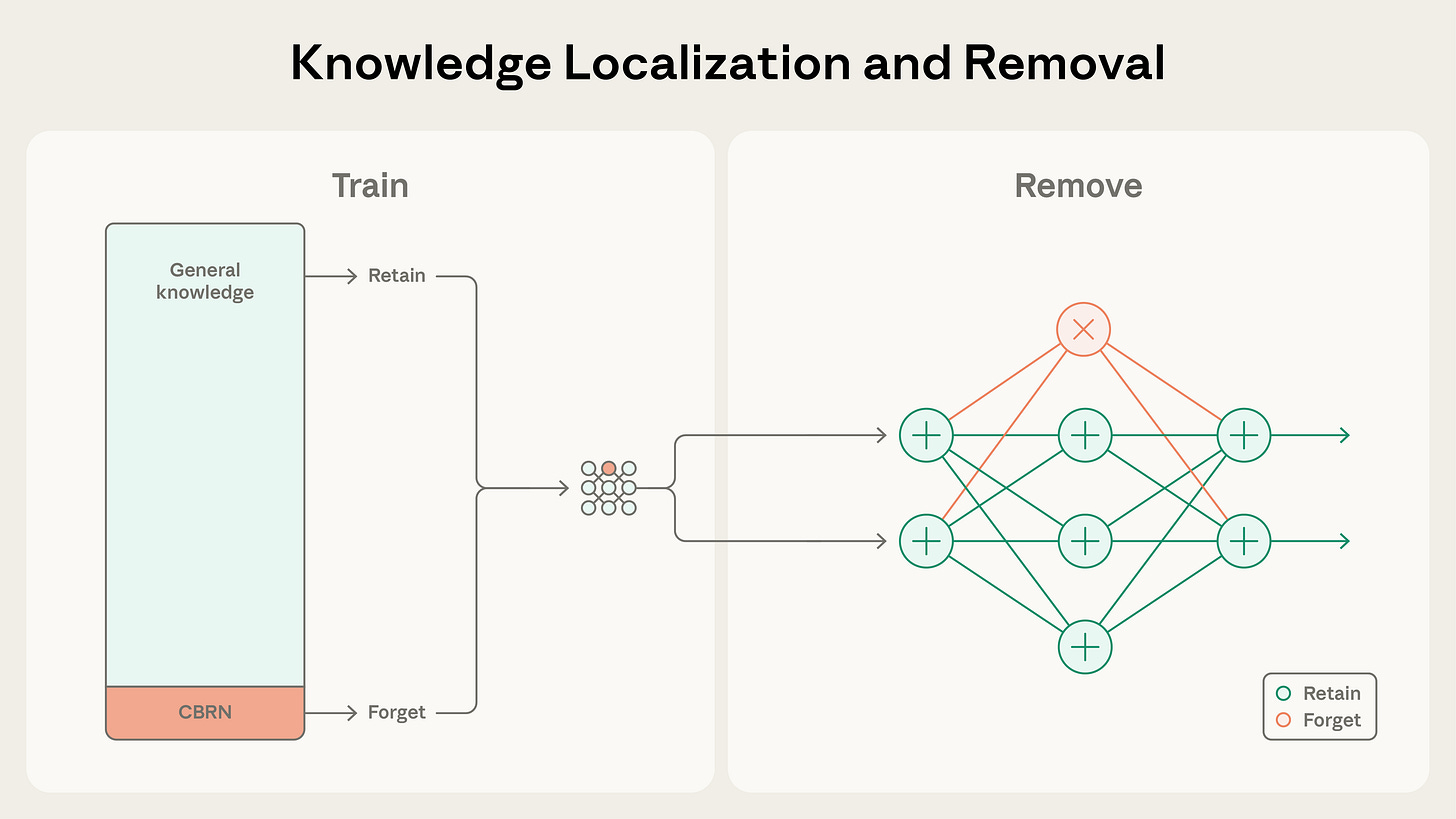

Anthropic Fellows just released a paper on Selective Gradient Masking (SGTM) — a technique to isolate “dangerous knowledge” (like CBRN synthesis) into separate model parameters that can be surgically removed after training.

Most control work today leans on data filtering or post training unlearning, which either misses dangerous bits mixed into normal text or weakens the model across useful adjacent topics.

SGTM changes the training process itself by splitting each layer’s weights into a retain part and a forget part, where the forget part is intended to hold the risky knowledge. When the model sees clearly labeled risky text, only the forget weights get gradient updates, so the model learns to rely on that slice for those concepts.
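To make the masking idea concrete, here is a minimal toy sketch in plain Python. It is not the paper's implementation: the flat weight list, the `forget_mask` layout, the learning rate, and the gradient values are all made up for illustration. It only shows the rule described above, where clearly labeled risky batches update the forget slice alone, and the forget slice is zeroed out afterwards.

```python
# Toy sketch of selective gradient masking (illustrative only, not the paper's code).
# Weights are a flat list; forget_mask marks which entries form the hypothetical forget slice.

def masked_sgd_step(weights, grads, forget_mask, is_risky_batch, lr=0.1):
    """One SGD step. On batches labeled risky, gradients on retain weights are zeroed."""
    new_weights = []
    for w, g, in_forget in zip(weights, grads, forget_mask):
        if is_risky_batch and not in_forget:
            g = 0.0  # retain weights get no update from labeled risky text
        new_weights.append(w - lr * g)
    return new_weights


def ablate_forget_slice(weights, forget_mask):
    """Remove the forget slice after training by zeroing those weights."""
    return [0.0 if in_forget else w for w, in_forget in zip(weights, forget_mask)]


weights = [1.0, 1.0, 1.0, 1.0]
forget_mask = [False, False, True, True]  # assumption: last two weights are the forget slice
grads = [0.5, 0.5, 0.5, 0.5]              # made-up gradient values

after_risky = masked_sgd_step(weights, grads, forget_mask, is_risky_batch=True)
after_general = masked_sgd_step(weights, grads, forget_mask, is_risky_batch=False)
after_ablation = ablate_forget_slice(after_risky, forget_mask)

print("risky batch:  ", after_risky)     # only the forget slice moved
print("general batch:", after_general)   # every weight moved
print("after removal:", after_ablation)  # forget slice zeroed, retain slice untouched
```

In a real transformer the split would apply inside each layer's weight matrices rather than to a flat list, but the update rule has the same shape.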

On unlabeled but related text that might still carry risky information, SGTM nudges gradients so that updates also flow mainly into the forget slice rather than into the general weights. In one test, Anthropic trained a 254M parameter transformer on Wikipedia where biology made up only 3.7% of all tokens and asked how well biology could be removed while keeping other skills.

At similar overall loss, SGTM cut biology performance more than strict data filtering, cost only about 5% extra compute, and preserved more non biology capabilities than just dropping biology heavy pages from the dataset. Because many general pages contain a few biology facts, SGTM can still funnel those scattered facts into forget weights, instead of forcing a choice between keeping or discarding the whole page.

When an adversary tried to fine tune the model to relearn biology, an older unlearning method called RMU bounced back in about 50 steps, while SGTM needed about 350 steps and around 92M tokens, which is roughly 7x more work to undo the forgetting. This resistance means that even if someone gets the weights and runs aggressive fine tuning, targeted knowledge that was pushed into forget weights and then removed is meaningfully harder to restore. The authors still flag limits, since all tests use small models and proxy tasks and SGTM does nothing if a user simply pastes dangerous instructions into the prompt, but it still gives a more surgical, parameter level control knob than plain data filtering or shallow unlearning.

🌍 Today’s Sponsor: Yutori, the always-on AI team of agents is going to be generally available to everyone from today

I have been personally using Yutori’s Scouts since they gave me early access in June this year, and it has been an incredible time saver for me.

Scouts lets you deploy a team of AI agents to monitor anything for you, running 24x7 in the background on the web. It maintains persistent web watchers that surface niche updates and actionable insights instantly.

It deploys a team of AI agents behind the scenes to catch timed opportunities automatically, turning passive websites into active webhooks without the build burden. Think cron, scraping, and diffing, fused into a single “notify me” sentence.

You can tell it what to track, like news on niche topics, or hard-to-get dinner tables or discounts or reservations, and it’ll deploy agents to monitor and alert you (over email) at the right time.

🕵️‍♂️ Continuous Coverage: A single plain-language prompt spins up event-driven agents that crawl public endpoints, parse deltas, and rate findings against your conditions. Scouts handle pagination, price parsers, DOM shifts, and rate limits without credentials.

⚙️ Under-The-Hood: Each Scout bundles an orchestrator, scraper pool, and change-detector. The orchestrator fetches information from the web, dedupes against information previously reported to the user, and pushes structured diffs to a notify queue. Latency stays near the polling interval.
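The fetch, dedupe, and notify loop is easy to picture in code. The sketch below is a hypothetical illustration, not Yutori's implementation: `fingerprint`, `run_poll`, and the sample strings are invented names and data, and the "fetch" step is replaced by hard-coded lists so the example stays self-contained.

```python
import hashlib
from collections import deque


def fingerprint(item):
    """Stable hash of an item, used to remember what was already reported."""
    return hashlib.sha256(item.encode("utf-8")).hexdigest()


def run_poll(fetched_items, seen, notify_queue):
    """Queue only items not reported before; return how many were new."""
    new_count = 0
    for item in fetched_items:
        key = fingerprint(item)
        if key in seen:
            continue  # already reported to the user, so skip it
        seen.add(key)
        notify_queue.append({"change": "added", "item": item})
        new_count += 1
    return new_count


seen = set()            # fingerprints of everything reported so far
notify_queue = deque()  # structured diffs waiting to be sent

# Two polling rounds with made-up results standing in for real web fetches.
first = run_poll(["table for 2 at 7pm", "table for 4 at 9pm"], seen, notify_queue)
second = run_poll(["table for 2 at 7pm", "table for 2 at 8pm"], seen, notify_queue)

print(first, second, len(notify_queue))
```

The second round repeats one item from the first, so only the genuinely new item reaches the queue. That is the behavior a "notify me" product needs to avoid sending the same alert twice.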

Just a few weeks back they launched Yutori Navigator, a web agent that runs its own cloud browser to handle real websites end to end, from clicking buttons to finishing purchases, with strong gains in both accuracy and speed over other computer-use models. On Navi-Bench v1, across Apartments, Craigslist, OpenTable, Resy, and Google Flights, Yutori Navigator ranked first with an 83.4% success rate.

Check them out for yourself here

📎 Anthropic’s Claude Code is making its way to Slack, and the impact of that is larger than most people think.

How it will work

Early users report big time savings; for some, timelines dropped from 24 days to 5 days. On the caution side, because Claude Opus 4.5 still only blocks around 78% of harmful code requests, teams need sandboxed runtimes, narrow repo access, and mandatory human review.

The move reflects a broader industry shift: AI coding assistants are migrating from IDEs (integrated development environment, where software development happens) into collaboration tools where teams already work.

Cursor now connects with Slack to help write and debug code directly in threads, while GitHub Copilot recently rolled out chat-based pull request generation. OpenAI’s Codex can also be used through custom Slack bots.

For Slack, positioning itself as an “agentic hub” where AI blends with team workflows gives it a key edge. The AI tool that becomes most used inside Slack — the main space for engineering discussions — could end up shaping how development teams operate.

By allowing developers to go from chatting to coding without leaving Slack, Claude Code and other similar tools signal a move toward AI-driven collaboration that could completely change everyday coding habits.

Still, this integration brings up concerns around code security and intellectual property (IP) protection, since it adds another layer where sensitive repository access needs to be monitored and managed. It also creates new points of failure, because any outage or rate limit in Slack or Claude’s API could interrupt development workflows that used to run fully on local systems.

👨‍🔧 Today’s Sponsor: Nebius just launched its post-training platform, Nebius Token Factory

You can now fine-tune frontier models like DeepSeek V3, GPT-OSS 20B & 120B, and Qwen3 Coder across multi-node GPU clusters with stability up to 131k context. Post-training is what turns base LLMs into production engines.

It’s the first platform that combines:

fine-tuning of large open-source LLMs (including MoEs)

multi-node training at scale

data ingestion from Token Factory logs

one-click deployment to dedicated endpoints

Highlights include full supervised fine-tuning and Low-Rank Adaptation (LoRA), direct ingestion of Token Factory logs, one-click deploy to dedicated zero-retention endpoints with SLAs, throughput gains vs NeMo on H200, and a free full+LoRA fine-tuning window on GPT-OSS 20B and 120B.

Multi-node training from 8 to 512 GPUs hides tensor, pipeline, and optimizer sharding so large dense and MoE backbones run without teams rewriting parallel code.

Topology-aware scaling keeps shards close on the network fabric so gradients and activations avoid slow hops and total tokens per second stay high under load.

Try it here, and read more on their official blog.

📡 Nvidia v. Google, Scaling Laws, and the Economics of AI: The most important podcast of this week

Patrick O’Shaughnessy with Gavin Baker, Managing Partner & CIO of Atreides Management

📌 Some takeaways

Current AI progress relies on scaling laws, where feeding models more data and computing power reliably makes them smarter.

To keep improving, tech companies must rebuild data centers from scratch to handle liquid cooling for powerful new chips.

Google is using its massive bank account to sell AI so cheaply that it will be impossible for smaller rivals to compete.

We are moving from air-cooled chips to liquid-cooled systems that are significantly harder and more expensive to build.

Running AI locally on your phone will soon handle personal tasks like booking travel without sending data to the cloud.

The most logical future for data centers is actually in orbit, where solar power is free and space provides natural cooling.

Economic returns on AI spending are positive because these tools make workers much more productive and generate real revenue.

📌 On Google’s TPU vs Nvidia GPU

Google currently holds a temporary lead because their latest TPU chips are operational while NVIDIA faced delays with their newest hardware.

This timing allowed Google to train its most advanced models immediately while other companies sat waiting for chips to arrive.

However, Google builds these chips through a partnership where they design the front end and pay Broadcom billions to handle the back end.

This split approach forces Google to share its profits and results in conservative engineering choices to keep the manufacturing process manageable.

NVIDIA operates differently by controlling the entire stack from the chip architecture down to the software and networking.

This vertical integration allows NVIDIA to take massive technical risks and push performance limits far beyond what a partnership model can support.

When the next generation of NVIDIA chips arrives, the performance difference with TPU will be large.

📢 OpenAI, Anthropic, and Block join new Linux Foundation effort to standardize the AI agent era

Anthropic is contributing MCP (Model Context Protocol), which connects models and agents to tools and data in a standardized way. Block is offering Goose, its open source agent framework. And OpenAI is adding AGENTS.md, a simple guide developers can place in a repository to tell AI coding tools how to behave. Together, these form the essential groundwork for the growing agent ecosystem.

Other companies joining the Agentic AI Foundation (AAIF), including AWS, Bloomberg, Cloudflare, and Google, show a broader industry effort to build shared standards and safeguards for trustworthy AI agents. Donating MCP to AAIF signals that the protocol won’t be controlled by a single vendor.

Developers today face messy agent stacks because MCP, Goose, and AGENTS.md each define their own way to connect models to tools, data, and code, which makes serious workflows brittle and hard to reuse.

AAIF puts these pieces under a neutral Linux Foundation umbrella, which means long term governance, versioning, and contribution rules that big companies are already used to from projects like PyTorch and Kubernetes.

Anthropic’s MCP (Model Context Protocol) is the base wire protocol that says how an agent discovers tools, calls them, and gets results, so any compliant tool can plug into any compliant agent without custom glue code.
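For a feel of what “discover, call, get results” looks like on the wire, here is a rough in-process sketch. MCP messages are JSON-RPC 2.0, and the `tools/list` and `tools/call` method names come from the MCP spec. Everything else is an assumption for illustration: the `add` tool, the `TOOLS` registry, and `handle_request` are hypothetical, and a real server also needs the initialization handshake and a transport such as stdio or HTTP, which are left out here.

```python
import json

# Hypothetical tool registry standing in for a real MCP server's tools.
TOOLS = {
    "add": {
        "description": "Add two integers.",
        "inputSchema": {
            "type": "object",
            "properties": {"a": {"type": "integer"}, "b": {"type": "integer"}},
            "required": ["a", "b"],
        },
        "handler": lambda args: args["a"] + args["b"],
    }
}


def handle_request(raw):
    """Answer one JSON-RPC request string with a JSON-RPC response string."""
    req = json.loads(raw)
    method = req["method"]
    if method == "tools/list":
        # Discovery: describe each tool and the arguments it accepts.
        result = {"tools": [
            {"name": name, "description": t["description"], "inputSchema": t["inputSchema"]}
            for name, t in TOOLS.items()
        ]}
    elif method == "tools/call":
        # Invocation: run the named tool with the supplied arguments.
        tool = TOOLS[req["params"]["name"]]
        value = tool["handler"](req["params"]["arguments"])
        result = {"content": [{"type": "text", "text": str(value)}], "isError": False}
    else:
        error = {"code": -32601, "message": "Method not found"}  # standard JSON-RPC code
        return json.dumps({"jsonrpc": "2.0", "id": req["id"], "error": error})
    return json.dumps({"jsonrpc": "2.0", "id": req["id"], "result": result})


# The agent side: first discover the tools, then call one of them.
list_resp = json.loads(handle_request(json.dumps(
    {"jsonrpc": "2.0", "id": 1, "method": "tools/list"})))
call_resp = json.loads(handle_request(json.dumps(
    {"jsonrpc": "2.0", "id": 2, "method": "tools/call",
     "params": {"name": "add", "arguments": {"a": 2, "b": 3}}})))

print(list_resp["result"]["tools"][0]["name"])
print(call_resp["result"]["content"][0]["text"])
```

Because the agent only depends on these message shapes, the same client code could in principle talk to any compliant tool server, which is the “no custom glue code” point above.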

Block’s Goose builds a local-first agent framework on top of MCP, so developers can run agents close to their own code and data while still speaking the same protocol as cloud agents. OpenAI’s AGENTS.md adds a project-level spec file that sits in a repo, giving coding agents one canonical source of truth for tools, repos, environments, and high-level behavior for that codebase.

Putting MCP, Goose, and AGENTS.md into AAIF, with backers like Google, Microsoft, AWS, Bloomberg, and Cloudflare, makes it much more likely that tool vendors and infra teams will rally around one stable agent stack instead of reinventing their own.

This move looks like a practical step toward agents that behave consistently across clouds, editors, and runtimes, instead of one custom agent per product.

It also raises the bar for smaller frameworks, since users will quickly expect AAIF compatibility and shared tooling around these standards.

However, even with open governance, a single company’s version might become the standard just because it launches faster or gets wider adoption. Jim Zemlin, executive director of the Linux Foundation, says that’s not necessarily bad: he points to how Kubernetes became the leading container system through open competition, showing that success tends to come from quality, not control.

For developers and companies, it will mean less time creating custom integrations, more consistent agent behavior across projects, and smoother setup in secure environments.

The bigger picture: if MCP, AGENTS.md, and Goose evolve into shared infrastructure, the agent world could move away from closed ecosystems toward an open, interoperable model, much like how the early internet grew.

That’s a wrap for today, see you all tomorrow.
