New paper finds: how much information do LLMs really memorize?

DeepSeek's smartest 8B, OpenAI probes AI bonding, Reddit sues Anthropic, and ChatGPT now reads your Gmail and Docs.

Read time: 10 min

📚 Browse past editions here.

(I publish this newsletter daily. Noise-free, actionable, applied-AI developments only.)

⚡In today’s Edition (6-June-2025):

🥉 How much information do LLMs really memorize?

🏆 Alibaba Qwen Releases Qwen3-Embedding and Qwen3-Reranker Series – beat everything else on MTEB Benchmark

📡 DeepSeek’s new R1-0528-Qwen3-8B is the most intelligent 8B parameter model yet

🗞️ Byte-Size Briefs:

OpenAI integrates ChatGPT with Gmail, Docs, Dropbox for auto-summaries

ElevenLabs releases v3 TTS with emotion, tone, multi-speaker control

Perplexity adds SEC filings—search EDGAR without Bloomberg terminal

Reddit sues Anthropic for training Claude on deleted user data

Anthropic launches Claude Gov optimized for U.S. defense use

AI scanner predicts heart failure 13 days early via foot swelling

🧑🎓 Deep Dive

🧠 OpenAI exploring emotional impact of ChatGPT, studies AI-human bonding at scale

🥉 How much information do LLMs really memorize?

Now we know, thanks to this brilliant paper from Meta, Google DeepMind, NVIDIA and Cornell University.

This paper will be absolutely crucial for ongoing lawsuits between AI Companies and data creators/rights owners/copyright-holders.

GPT-style models hold 3.6 bits of info per parameter on average.

This means a 1.5-billion-parameter model could “hold” on the order of 675 MB of raw information.

→ This 3.6 bits/parameter metric is consistent across model sizes (500K to 1.5B parameters) and architecture tweaks. Full precision (float32) increases capacity slightly to 3.83 bits, but with diminishing returns.

→ Applying the method to natural language, they observed “double descent”: small datasets lead to more memorization, but with scale, models switch to generalization. Stylized or unique text remains more prone to memorization.

Language models keep personal facts in a measurable amount of “storage”. This study shows how to count that storage—and when models swap memorization for real learning.

📡 The Question

Can we separate a model’s rote recall of training snippets from genuine pattern learning, and measure both in bits?

The paper says yes. Encode the dataset twice: once with the target model, once with a strong reference model that cannot memorize this data. The extra bit-savings the target achieves beyond the reference count as rote memorization. The shared savings reflect genuine pattern learning.

🧮 The Measurement Trick

Treat the model like a compressor. If a data point becomes shorter when the model is present, those saved bits reveal memorization. Subtract the savings that also appear in a strong reference model; the remainder is “unintended” memorization.
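The compression view fits in a few lines. This is a minimal sketch, not the authors' code: it assumes we already have the probability each model assigned to every observed token (the numbers below are made up), turns them into code lengths in bits, and approximates the code length with both models available by the shorter of the two. That approximation is an assumption of this sketch.

```python
import math

def code_length_bits(token_probs):
    """Bits an ideal (arithmetic) coder needs for a sequence, given the
    probability the model assigned to each observed token."""
    return sum(-math.log2(p) for p in token_probs)

def unintended_memorization_bits(target_probs, reference_probs):
    """Savings the target model achieves beyond the reference model.

    Assumption: with both models available, the coder uses whichever gives
    the shorter code, so the extra savings are max(0, ref_bits - target_bits).
    """
    ref_bits = code_length_bits(reference_probs)
    joint_bits = min(ref_bits, code_length_bits(target_probs))
    return ref_bits - joint_bits

# Hypothetical per-token probabilities for one training snippet.
reference = [0.20, 0.10, 0.05, 0.30]  # strong reference model: generic patterns only
target = [0.90, 0.80, 0.95, 0.85]     # target model that was trained on this snippet

print(f"{unintended_memorization_bits(target, reference):.2f} bits memorized")
```

If the target model is no better than the reference on a snippet, the function returns zero: everything it "knows" about that text is explained by general patterns.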

📏 What the Numbers Say

GPT-style transformers store roughly 3.6 bits per parameter before running out of space. Once full, memorization flattens and test loss starts the familiar “double descent” curve right when dataset information overtakes capacity.

🔄 Why It Matters

Loss-based membership inference obeys a clean sigmoid: bigger models or smaller datasets raise attack success; large token-to-parameter ratios push success to random chance. Practitioners can now predict privacy risk from simple size ratios instead of running attacks.
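To make "loss-based membership inference" concrete, here is a minimal sketch of the simplest such attack: flag a sample as a training member whenever the model's loss on it falls below a threshold. The losses and threshold are hypothetical, and the paper's fitted sigmoid scaling law is not reproduced here.

```python
def loss_threshold_attack(losses, threshold):
    """Predict 'member' (True) whenever the model's loss on a sample is below threshold."""
    return [loss < threshold for loss in losses]

def attack_f1(member_losses, nonmember_losses, threshold):
    """F1 of the attack on a labeled mix of training members and non-members."""
    preds_members = loss_threshold_attack(member_losses, threshold)
    preds_nonmembers = loss_threshold_attack(nonmember_losses, threshold)
    tp = sum(preds_members)             # members correctly flagged
    fn = len(preds_members) - tp        # members missed
    fp = sum(preds_nonmembers)          # non-members wrongly flagged
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

# Hypothetical losses. Heavy memorization: members have clearly lower loss.
memorizing = attack_f1([0.4, 0.5, 0.6, 0.7], [1.1, 1.3, 0.65, 1.5], threshold=0.8)
# High token-to-parameter ratio: member and non-member losses overlap.
generalizing = attack_f1([1.0, 1.2, 1.4, 1.1], [1.05, 1.25, 1.35, 1.15], threshold=1.2)
print(f"memorizing model F1={memorizing:.3f}, generalizing model F1={generalizing:.3f}")
```

When losses on seen and unseen text overlap, the attack drops to coin-flip territory, which is the "random chance" end of the curve described above.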

To put total model memorization in perspective:

📌A 500K-parameter model can memorize roughly 1.8 million bits, or 225 KB of data.

📌A 1.5 billion parameter model can hold about 5.4 billion bits, or 675 megabytes of raw information.

📌These figures are not directly comparable to file storage such as images (a single 3.6 MB uncompressed image is roughly 29 million bits), but the capacity is significant because it is spread across many discrete textual patterns.

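Here is the calculation behind these figures.

The paper's authors measure an empirical capacity of about 3.6 bits per parameter for GPT-style transformers. Multiply by the parameter count to get total bits, then divide by 8 to get bytes: 1.5 billion × 3.6 = 5.4 billion bits ≈ 675 MB, and 500K × 3.6 = 1.8 million bits ≈ 225 KB.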
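The arithmetic is easy to reproduce. This sketch uses the 3.6 bits/parameter figure from the paper and decimal units (1 MB = 10^6 bytes). The `dataset_exceeds_capacity` helper is a hypothetical illustration of the "dataset information overtakes capacity" point; its `bits_per_token` argument is an assumed information content per token, not a number from the paper.

```python
BITS_PER_PARAM = 3.6  # empirical capacity reported for GPT-style transformers

def capacity_bits(n_params, bits_per_param=BITS_PER_PARAM):
    """Total information a model of this size can memorize, in bits."""
    return n_params * bits_per_param

def capacity_bytes(n_params, bits_per_param=BITS_PER_PARAM):
    """Same capacity expressed in bytes (8 bits per byte)."""
    return capacity_bits(n_params, bits_per_param) / 8

def dataset_exceeds_capacity(n_tokens, bits_per_token, n_params):
    """Hypothetical check: does the dataset carry more information than the model can store?"""
    return n_tokens * bits_per_token > capacity_bits(n_params)

for n in (500_000, 1_500_000_000):
    print(f"{n:>13,} params -> {capacity_bits(n):.3g} bits ~ {capacity_bytes(n) / 1e6:.3f} MB")
```

Per the paper, the point where `dataset_exceeds_capacity` flips to `True` is roughly where memorization plateaus and double descent begins.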

This paper should be cited in the legal battles between AI companies and copyright holders of creative work.

For example, we now know that a 1.5-billion-parameter model could “hold” on the order of 675 MB of raw information.

Courts need concrete evidence on whether models can reproduce copyrighted text.

So if a 1.5B model can memorize 675 megabytes of content, there is a risk that it may output copyrighted material verbatim.

Plaintiffs can use these figures to argue that infringement is more than hypothetical, while defendants may argue actual memorization depends on training practices.

🏆 Alibaba Qwen Releases Qwen3-Embedding and Qwen3-Reranker Series – beat everything else on MTEB Benchmark

🚨 Qwen releases multilingual embedding & reranker models (0.6B–8B) with Apache 2.0, hitting #1 on MTEB with 70.58 score and outperforming all baselines in retrieval & reranking tasks.

Embedding models turn text into dense vectors so you can quickly search or retrieve similar items using vector similarity (fast but less accurate).

Reranker models take the top retrieved results and re-score them using deeper context (slow but more accurate).

You need both: embedding for fast filtering, reranker for precise ranking.
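The two-stage pattern can be sketched with toy data. Everything here is hypothetical: the hand-made 3-dimensional vectors stand in for real embeddings, and `toy_cross_score` (simple word overlap) stands in for an expensive cross-encoder reranker. The point is the shape of the pipeline, cheap similarity over everything, then costly scoring over a shortlist.

```python
import math

def cosine(a, b):
    """Cosine similarity between two dense vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def retrieve(query_vec, doc_vecs, k):
    """Stage 1 (embedding): rank the whole corpus by vector similarity, keep top-k ids."""
    ranked = sorted(range(len(doc_vecs)), key=lambda i: cosine(query_vec, doc_vecs[i]), reverse=True)
    return ranked[:k]

def rerank(query, docs, candidate_ids, score_fn):
    """Stage 2 (reranker): score only the shortlist with the expensive pairwise model."""
    return sorted(candidate_ids, key=lambda i: score_fn(query, docs[i]), reverse=True)

def toy_cross_score(query, doc):
    # Hypothetical stand-in for a cross-encoder: share of query words present in the doc.
    q_words = set(query.lower().split())
    return len(q_words & set(doc.lower().split())) / len(q_words)

docs = ["qwen3 embedding model card", "reranker improves ranking precision", "cooking pasta at home"]
doc_vecs = [[0.9, 0.1, 0.0], [0.8, 0.3, 0.1], [0.0, 0.1, 0.9]]  # made-up embeddings
query, query_vec = "reranker ranking precision", [0.85, 0.2, 0.05]

shortlist = retrieve(query_vec, doc_vecs, k=2)
final = rerank(query, docs, shortlist, toy_cross_score)
print("after retrieval:", shortlist, "after reranking:", final)
```

Here the embedding stage correctly drops the pasta document but ranks the model card first; the reranker, which reads query and document together, fixes the order.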

⚙️ The Details

→ These new embeddings are optimized for retrieval, embedding, and reranking across 100+ natural and programming languages.

→ The models are open-sourced under Apache 2.0 and range in size from 0.6B to 8B parameters. All support 32K context length. Embedding dimensions scale from 1024 (0.6B) to 4096 (8B).

→ The 8B embedding model ranks #1 on the MTEB multilingual leaderboard with a score of 70.58. The 4B and 8B reranker models outperform top baselines (like Jina and GTE) by up to 14.84 points on FollowIR benchmark.

→ Embedding models use a dual-encoder setup with LoRA fine-tuning, outputting the [EOS] token’s hidden state. Rerankers use cross-encoder structures with instruction-aware tuning for high-precision text pair scoring.

→ Training uses a 3-stage process: weakly supervised contrastive pretrain, supervised fine-tuning, and model merging. Notably, they built a dynamic prompt system to auto-generate weak supervision data via Qwen3’s generative power.

→ Rerankers skip pretrain and use directly labeled data, yielding faster, high-accuracy training. All models support task-specific instruction prompting.

→ Available on Hugging Face, GitHub, and ModelScope.
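The "weakly supervised contrastive pretrain" stage trains the embedding model to pull matching query-document pairs together and push non-matching ones apart. Below is a minimal sketch of a generic InfoNCE loss with in-batch negatives, written with only the standard library. The Qwen team's exact loss variant, negative sampling, and temperature are not described here; the `temperature=0.05` default is an assumption.

```python
import math

def _normalize(v):
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def info_nce_loss(query_embs, doc_embs, temperature=0.05):
    """Generic InfoNCE with in-batch negatives.

    Query i's positive is doc i; every other doc in the batch is a negative.
    Returns the mean of -log softmax(similarities / temperature)[positive].
    """
    qs = [_normalize(q) for q in query_embs]
    ds = [_normalize(d) for d in doc_embs]
    total = 0.0
    for i, q in enumerate(qs):
        logits = [sum(a * b for a, b in zip(q, d)) / temperature for d in ds]
        m = max(logits)  # subtract the max before exp() for numerical stability
        log_denominator = m + math.log(sum(math.exp(l - m) for l in logits))
        total += log_denominator - logits[i]
    return total / len(qs)

aligned = info_nce_loss([[1, 0], [0, 1]], [[1, 0], [0, 1]])     # each query matches its doc
mismatched = info_nce_loss([[1, 0], [0, 1]], [[0, 1], [1, 0]])  # positives swapped
print(f"aligned loss={aligned:.2e}, mismatched loss={mismatched:.2f}")
```

Training lowers this loss by making each query embedding most similar to its own document; the low temperature sharpens the softmax so near-misses are penalized.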

Technical Architecture

Qwen3-Embedding models adopt a dense transformer-based architecture with causal attention, producing embeddings by extracting the hidden state corresponding to the [EOS] token. Instruction-awareness is a key feature: input queries are formatted as {instruction} {query}<|endoftext|>, enabling task-conditioned embeddings. The reranker models are trained with a binary classification format, judging document-query relevance in an instruction-guided manner using a token likelihood-based scoring function.
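The three mechanisms in this paragraph can be sketched as plain functions. This follows the description above rather than the official code: the prompt layout is taken from this section (the template in the official model cards may differ), the hidden states are toy nested lists, and reading "token likelihood-based scoring" as P("yes") normalized against P("no") is an assumption.

```python
import math

def format_query(instruction, query):
    # Input layout as described above: "{instruction} {query}<|endoftext|>".
    return f"{instruction} {query}<|endoftext|>"

def last_token_pool(hidden_states, attention_mask):
    """Embedding = hidden state of the last real (non-padding) token, i.e. the [EOS] position.

    hidden_states: [batch][seq_len][dim] nested lists
    attention_mask: [batch][seq_len] of 0/1; taking the highest index with mask 1
    works whether padding sits on the left or the right.
    """
    pooled = []
    for states, mask in zip(hidden_states, attention_mask):
        last = max(i for i, m in enumerate(mask) if m == 1)
        pooled.append(states[last])
    return pooled

def relevance_score(logit_yes, logit_no):
    """Assumed reranker score: softmax over the "yes"/"no" logits, return P("yes")."""
    m = max(logit_yes, logit_no)
    e_yes, e_no = math.exp(logit_yes - m), math.exp(logit_no - m)
    return e_yes / (e_yes + e_no)

text = format_query("Retrieve passages that answer the question:", "what is MTEB?")
emb = last_token_pool([[[0.1, 0.2], [0.3, 0.4], [0.0, 0.0]]], [[1, 1, 0]])
print(text, emb, f"{relevance_score(3.0, -1.0):.3f}")
```

Because attention is causal, only the final token has seen the whole input, which is why its hidden state serves as the sequence embedding.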

📡 DeepSeek’s new R1-0528-Qwen3-8B is the most intelligent 8B parameter model yet

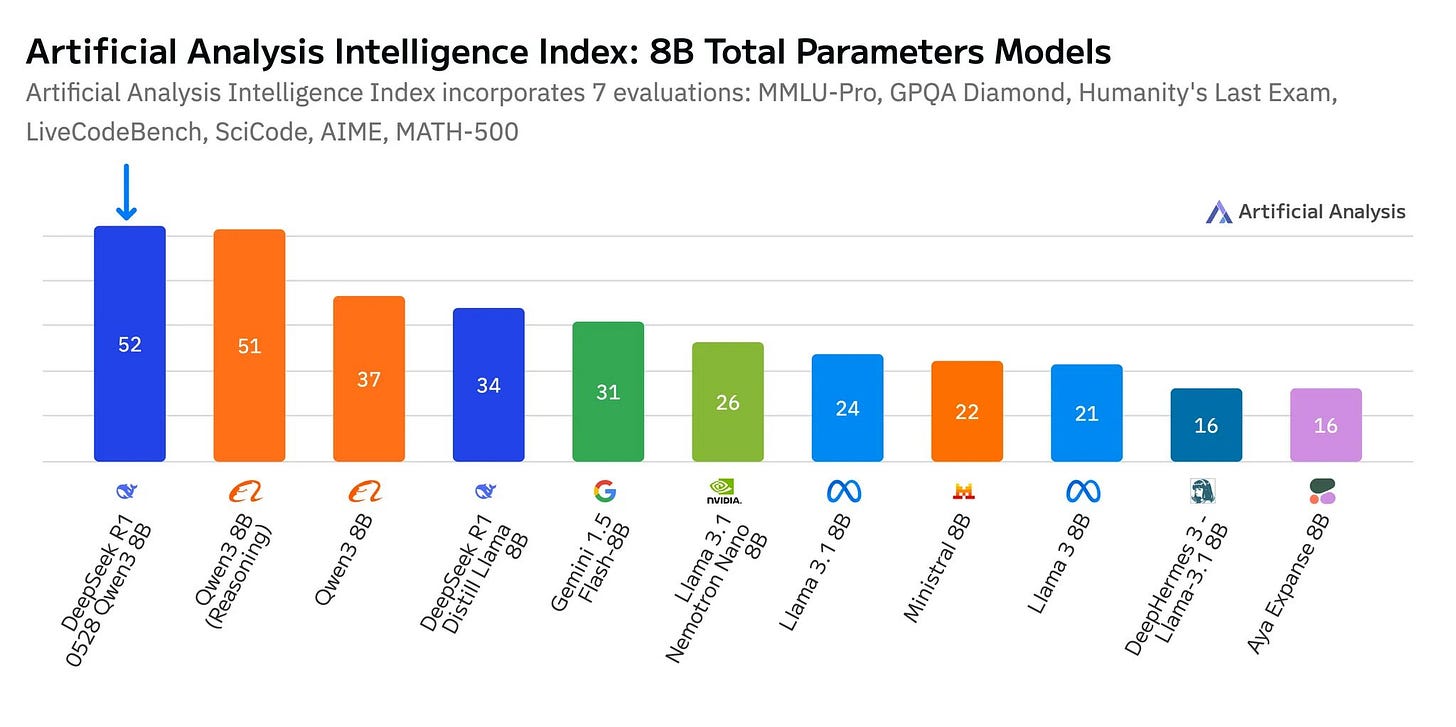

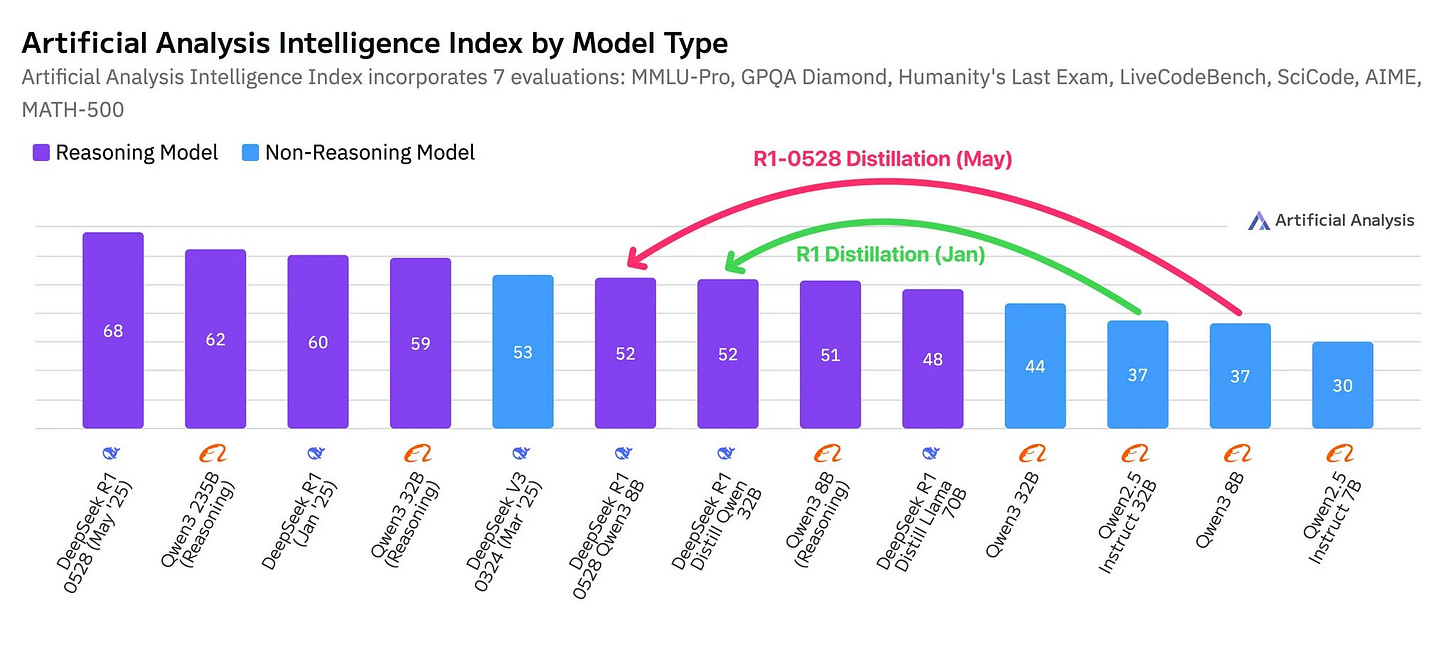

Artificial Analysis reports that DeepSeek’s new R1-0528-Qwen3-8B scores 52 on its Intelligence Index, 1 point above Qwen3 8B, and fits on a single GPU. It is now the highest-performing 8B-parameter model they have tested.

DeepSeek-R1-0528-Qwen3-8B is a distilled version of the DeepSeek-R1-0528 model. It was created by fine-tuning the Qwen3 8B Base model, originally developed by Alibaba, using the chain-of-thought (CoT) reasoning data generated by the larger DeepSeek-R1-0528 model.

The model was released under the MIT License, allowing commercial use, modification, and deployment with minimal restrictions.

→ It matches the intelligence of DeepSeek’s January distillation of R1 into Qwen2.5 32B, a 5-month leap in which a model 4x smaller delivers equivalent reasoning.

→ R1-0528-Qwen3-8B activates 21.6% of R1’s active parameters and is just 1.2% of its total size. It delivers much faster inference with significantly less memory use (~16GB in BF16), enabling single-GPU or even consumer-grade deployment.

→ Available via Novita Labs at $0.06 per 1M input tokens and $0.09 per 1M output tokens. The model is also available on Hugging Face.

→ Performance gains are attributed mainly to improvements in Alibaba’s Qwen3 base, but DeepSeek’s distillation pipeline proved equally effective across both Qwen2.5 and Qwen3 families.

→ Overall, this release shows that smaller models with improved post-training can now reach intelligence levels previously reserved for much larger architectures.
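The memory, size-ratio and pricing figures above can be checked with a few lines of arithmetic. The DeepSeek-R1 counts of 37B active and 671B total parameters come from DeepSeek's published R1 specs, not from this article, and 8B is a rounded size for the distilled model. The 10K-input / 2K-output request is a hypothetical workload.

```python
# Back-of-envelope numbers for R1-0528-Qwen3-8B.
# Assumption: rounded parameter counts (8B distill; DeepSeek-R1 at 37B active / 671B total).

BYTES_PER_PARAM_BF16 = 2  # bfloat16 stores each weight in 16 bits

def weight_memory_gb(n_params, bytes_per_param=BYTES_PER_PARAM_BF16):
    """Memory for the weights alone, in decimal GB; KV cache and activations come on top."""
    return n_params * bytes_per_param / 1e9

def request_cost_usd(input_tokens, output_tokens, in_price_per_m=0.06, out_price_per_m=0.09):
    """API cost at the per-million-token prices listed above."""
    return input_tokens / 1e6 * in_price_per_m + output_tokens / 1e6 * out_price_per_m

DISTILL_PARAMS = 8e9
R1_ACTIVE_PARAMS = 37e9
R1_TOTAL_PARAMS = 671e9

share_of_active = DISTILL_PARAMS / R1_ACTIVE_PARAMS  # about 21.6%
share_of_total = DISTILL_PARAMS / R1_TOTAL_PARAMS    # about 1.2%

print(f"BF16 weights: {weight_memory_gb(DISTILL_PARAMS):.0f} GB")
print(f"Share of R1 active / total params: {share_of_active:.1%} / {share_of_total:.1%}")
print(f"10K-in / 2K-out request: ${request_cost_usd(10_000, 2_000):.6f}")
```

The ~16 GB figure covers weights only, so a single 24 GB consumer GPU leaves some headroom for the KV cache at moderate context lengths.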

🗞️ Byte-Size Briefs

ChatGPT’s Deep Research just plugged itself into your emails, docs, and meetings so you don’t have to dig through them. It now searches Gmail, Google Docs, Dropbox, and more, transcribes meetings with structured summaries, and supports SSO, MCP integrations, and usage-based enterprise pricing. The launch goes first to business customers, with everyone else to follow soon.

ElevenLabs releases a new TTS model, ElevenLabs v3. It adds emotion tags, multi-speaker dialogue, deep language coverage, and tone control through text. It’s not real-time yet, but delivers more natural, expressive audio than any previous version.

Perplexity adds SEC filings to its search—no Wall Street terminal needed.

Now anyone can query SEC/EDGAR documents in plain English via Perplexity’s answer engine, getting cited insights on company financials, risks, and strategies—without needing a Bloomberg terminal or analyst background.

⚖️ Reddit sues Anthropic for training Claude on deleted user data without consent or licensing. Reddit accuses Anthropic of scraping its platform—including deleted posts—to train Claude, bypassing licensing deals that competitors like OpenAI and Google accepted, and violating Reddit’s API rules designed to uphold user privacy and content deletion rights.

⚖️ Anthropic launches Claude Gov for U.S. national security use

Claude Gov is a modified AI model built for defense and intelligence agencies, with lower refusal rates and support for classified tasks. It’s optimized for foreign language intel, cybersecurity, and secure document parsing—marking AI’s deeper integration into government infrastructure.

AI scanner spots heart failure 13 days before symptoms. Heartfelt’s wall-mounted AI captures foot swelling to detect fluid buildup—predicting heart failure nearly two weeks before emergencies. In NHS trials, it accurately flagged 5 of 6 hospitalizations without patient input, offering passive, early intervention from home.

🧑🎓 Deep Dive

🧠 OpenAI exploring emotional impact of ChatGPT, studies AI-human bonding at scale

OpenAI has shared its thinking on human-AI relationships and how the company is approaching them.

→ OpenAI’s model behavior lead Joanne Jang says they’re now actively prioritizing emotional well-being as users increasingly treat ChatGPT like a person. This includes thanking it, confiding in it, and sometimes perceiving it as alive.

→ They’ve identified two key axes in discussions around AI consciousness: "ontological" (is it actually conscious?) and "perceived" (does it feel conscious to users?). OpenAI is focusing only on the second, which has real psychological effects and can be measured scientifically.

→ Even when users intellectually know that ChatGPT isn’t sentient, emotional attachments are forming. OpenAI sees this not as a product design bug but a human behavior pattern worth managing carefully.

→ The goal: shape model behavior to feel warm and helpful — not to simulate inner life. They avoid building models with backstories, emotions, or motivations, which could lead to confusion and unhealthy dependence.

→ Yet the model still mirrors social cues (like saying “I’m doing well” or “sorry”), not because it's pretending to feel, but because it fits human expectations of polite interaction.

→ OpenAI is iterating on its "Model Spec" — guidance that steers how the model should talk about topics like consciousness. Current models often fall short (e.g., giving blunt “no” answers), but alignment work is ongoing.

→ Upcoming efforts will include expanded social science research, user feedback collection, and refinements in product design to better manage the emotional dimensions of human-AI interaction.

That’s a wrap for today, see you all tomorrow.