👨‍🔧 New research shows multi-LLM medical AI agents often look correct but think wrong.

Medical AI agents fail silently, Cornell’s APU crushes GPUs on energy, and Altman’s $1T chip gamble risks a global financial shock.

Read time: 9 min


(I publish this newsletter daily. Noise-free, actionable, applied-AI developments only.)

⚡In today’s Edition (28-Oct-2025):

👨‍🔧 New research shows multi-LLM medical AI agents often look correct but think wrong.

🚨 Cornell study confirms GSI Technology’s APU matches GPU performance at a fraction of the energy cost

📡 The MIT, GSI, and Cornell paper on the APU (Associative Processing Unit) behind the MASSIVE breakthrough: 54.4x to 117.9x lower energy consumption.

💸 A new article on Futurism says OpenAI’s massive $1T chip and data center commitments mean Sam Altman now holds enough financial clout that a failure could trigger a global economic crash.

👨‍🔧 New research shows multi-LLM medical AI agents often look correct but think wrong.

This is not great news for medical AI ☹️. A new paper shows that medical AI agents built from multiple LLMs often project confidence while reasoning incorrectly: they look correct but think wrong. Even when they reach the right diagnosis, they mostly get there through disordered, broken collaboration rather than sound logic.

The authors reviewed 3,600 medical cases and found recurring problems: correct facts disappearing mid-discussion, well-founded minority opinions getting ignored, and agents settling for easy votes instead of reasoning. In over 68% of “successful” cases, the agents already agreed at the start, so the group discussion added nothing useful.
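A minimal sketch of how a figure like the 68% could be computed from debate logs. The `CaseLog` record, its fields, and `initial_consensus_rate` are hypothetical illustrations, not the paper's data format or code; "agreed at the start" is assumed to mean every agent's opening diagnosis is identical.

```python
from dataclasses import dataclass


@dataclass
class CaseLog:
    """Hypothetical record of one multi-agent diagnosis case."""
    initial_answers: list[str]  # each agent's diagnosis before any discussion
    final_answer: str           # the group's final diagnosis
    gold: str                   # the reference diagnosis


def initial_consensus_rate(cases: list[CaseLog]) -> float:
    """Share of correct cases in which every agent already agreed at the start."""
    successes = [c for c in cases if c.final_answer == c.gold]
    if not successes:
        return 0.0
    # A case counts as "pre-agreed" if all opening answers are identical.
    pre_agreed = [c for c in successes if len(set(c.initial_answers)) == 1]
    return len(pre_agreed) / len(successes)


cases = [
    CaseLog(["MI", "MI", "MI"], "MI", "MI"),          # correct, agreed from the start
    CaseLog(["PE", "GERD", "PE"], "PE", "PE"),        # correct, discussion mattered
    CaseLog(["GERD", "GERD", "GERD"], "GERD", "MI"),  # wrong, excluded from the ratio
]
print(initial_consensus_rate(cases))  # 0.5
```

A high rate means the "collaboration" mostly rubber-stamped answers the agents already had, which is the paper's point about accuracy hiding weak teamwork.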

They built a tool called AuditTrail to track how facts and opinions move during a debate. It found that evidence raised in early steps is often lost by the final answer, and that longer discussions sometimes make things worse because the models start copying each other instead of reasoning independently.

Even worse, the systems often pick low-risk diagnoses even when a life-threatening one is on the table. That is the scary part: a “high-accuracy” medical AI can sound smart while hiding unsafe logic underneath.

Overall, this paper exposes how accuracy numbers can lie. An AI can pass medical tests yet still reason in unsafe, careless, or biased ways, which makes it untrustworthy for real patients.

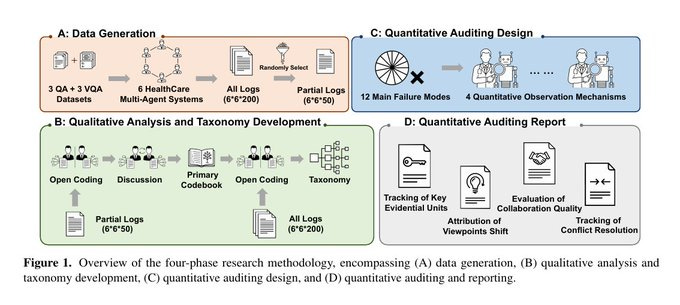

Overview of the four-phase research methodology

The figure above shows how the paper’s AuditTrail tool tracks, step by step, how medical AI agents reason together.

The top left part shows “Tracking of Key Evidential Units.” It checks whether important medical facts found early in the discussion are still remembered later. Many models drop or forget key details when they summarize or make a final decision.
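The evidence-tracking idea can be sketched as a retention check: which key facts raised early still appear in the final answer. This assumes each key evidential unit can be matched as a plain, case-insensitive substring, a big simplification (real clinical text would need entity matching); `evidence_retention` and the sample findings are hypothetical, not AuditTrail's implementation.

```python
def evidence_retention(early_turns: list[str], final_summary: str,
                       key_units: list[str]) -> tuple[float, list[str]]:
    """Return the share of early-raised key units that survive into the final
    answer, plus the list of units that were dropped along the way."""
    early_text = " ".join(early_turns).lower()
    final_text = final_summary.lower()
    # Only count units that actually appeared in the early discussion.
    raised = [u for u in key_units if u.lower() in early_text]
    if not raised:
        return 1.0, []
    dropped = [u for u in raised if u.lower() not in final_text]
    return 1 - len(dropped) / len(raised), dropped


early = [
    "Patient reports chest pain radiating to the left arm.",
    "Troponin is elevated; ECG shows ST elevation.",
]
final = "Likely acute MI given ST elevation."
rate, dropped = evidence_retention(early, final, ["chest pain", "troponin", "ST elevation"])
print(f"{rate:.2f}", dropped)  # 0.33 ['chest pain', 'troponin']
```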

The top right part, “Attribution of Viewpoints Shift,” checks when an agent changes its opinion. It records whether that change came from real evidence or just peer pressure from other agents.
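One way to approximate viewpoint-shift attribution is a simple rule: a change backed by newly cited evidence counts as evidence-driven, while a change with no new evidence that lands on the peers' majority answer counts as peer pressure. This rule and `attribute_shift` are hypothetical, a sketch of the concept rather than the paper's method.

```python
from collections import Counter


def attribute_shift(old_answer: str, new_answer: str,
                    new_evidence: set[str], prior_evidence: set[str],
                    peer_answers: list[str]) -> str:
    """Label why an agent changed its diagnosis (hypothetical heuristic)."""
    if old_answer == new_answer:
        return "no_shift"
    # Evidence-driven: the agent now cites something it had not cited before.
    if new_evidence - prior_evidence:
        return "evidence_driven"
    # Peer pressure: no new evidence, but the new answer matches the peers' majority.
    if peer_answers:
        majority, _ = Counter(peer_answers).most_common(1)[0]
        if new_answer == majority:
            return "peer_pressure"
    return "unexplained"


# Agent switches PE -> MI without citing anything new, while most peers say MI.
print(attribute_shift("PE", "MI", {"troponin"}, {"troponin"}, ["MI", "MI", "PE"]))  # peer_pressure
```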

The bottom left, “Evaluation of Collaboration Quality,” rates how good the reasoning actually is. It measures whether agents use domain-specific knowledge, whether they pick diagnoses with the right level of urgency, and whether they rely on strong evidence instead of blind voting.
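The urgency criterion can be illustrated with a check that flags a group decision less urgent than some agent's proposal, the low-risk bias described earlier in this section. The `URGENCY` tiers and `under_triaged` are hypothetical placeholders, not a clinical triage scale or the paper's scoring; the example also shows how a plain majority vote can bury the dangerous option.

```python
from collections import Counter

# Hypothetical urgency tiers (higher = more dangerous to miss); a real system
# would use a validated clinical triage scale.
URGENCY = {"GERD": 1, "costochondritis": 1, "pneumonia": 2, "PE": 3, "MI": 3}


def under_triaged(proposed: list[str], final_answer: str) -> bool:
    """True if some agent proposed a more urgent diagnosis than the group's final one."""
    most_urgent = max((URGENCY.get(d, 0) for d in proposed), default=0)
    return URGENCY.get(final_answer, 0) < most_urgent


votes = ["MI", "GERD", "GERD"]
voted = Counter(votes).most_common(1)[0][0]  # plain majority vote picks "GERD"
print(voted, under_triaged(votes, voted))    # GERD True
```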

The bottom right, “Tracking of Conflict Resolution,” checks whether disagreements between agents get properly resolved or ignored. It flags cases where contradictions remain open at the end.
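Open-conflict detection can be sketched as follows, assuming each agent's final position is recorded as a true/false stance per claim (a hypothetical format): any claim on which both stances survive to the end is flagged as unresolved. `open_conflicts` is an illustrative name, not part of AuditTrail.

```python
def open_conflicts(final_stances: dict[str, dict[str, bool]]) -> list[str]:
    """Return claims on which agents still disagree at the end of the debate.

    final_stances maps agent name -> {claim: stance} (hypothetical format).
    """
    seen: dict[str, set[bool]] = {}
    for stances in final_stances.values():
        for claim, stance in stances.items():
            seen.setdefault(claim, set()).add(stance)
    # A claim is still contested if both True and False survive.
    return sorted(claim for claim, values in seen.items() if len(values) > 1)


stances = {
    "cardiologist": {"ST elevation present": True, "needs CT angiography": False},
    "pulmonologist": {"ST elevation present": True, "needs CT angiography": True},
    "generalist": {"ST elevation present": True},
}
print(open_conflicts(stances))  # ['needs CT angiography']
```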

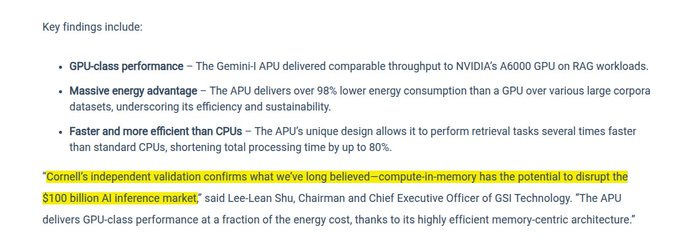

🚨 Cornell study confirms GSI Technology’s APU matches GPU performance at a fraction of the energy cost

The core result comes from a Cornell-led study on large RAG workloads, where the Gemini I APU hit GPU-level throughput while drawing roughly 1% to 2% of the GPU’s energy. Said another way, the APU delivered GPU-like speed at no more than about 2% of the energy budget on large corpora.

In RAG, the retrieval stage is heavy on vector math and memory traffic, so the chip that moves less data wins on power. A compute-in-memory (CIM) processor puts simple operations inside the memory arrays: instead of hauling vectors back and forth across a bus, the memory banks perform many compare-and-accumulate steps right where the data sits.

This largely sidesteps the usual memory wall and turns memory bandwidth into parallel work done in place. Cornell’s team reported an energy cut of about 50x to 118x versus the GPU on these retrieval tasks, with similar throughput.

Versus standard multi-core CPUs, the APU ran retrieval about 5x faster and shortened total processing time by up to 80%. That makes sense: CPUs chase data through caches, while the APU applies the same instruction across thousands of memory rows at once and finishes many comparisons in one shot.
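The multipliers and percentages in this section are two views of the same ratios. A quick check with the paper's 54.4x to 117.9x energy figures and the ~5x retrieval speedup: an N-times energy cut leaves 1/N of the energy, and an S-times speedup removes 1 − 1/S of the runtime.

```python
def energy_fraction(reduction: float) -> float:
    """An N-times energy cut means the APU uses 1/N of the GPU's energy."""
    return 1 / reduction


def time_saved(speedup: float) -> float:
    """An S-times speedup removes 1 - 1/S of the original runtime."""
    return 1 - 1 / speedup


for n in (54.4, 117.9):
    print(f"{n}x less energy -> {energy_fraction(n):.2%} of GPU energy")
print(f"5.0x faster -> {time_saved(5.0):.0%} less time")
# 54.4x less energy -> 1.84% of GPU energy
# 117.9x less energy -> 0.85% of GPU energy
# 5.0x faster -> 80% less time
```

This is why "roughly 1% to 2% of the GPU energy" and "about 50x to 118x less energy" describe the same result.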

The Gemini I APU is built around associative processing in SRAM: rows can be activated together and checked against a query pattern, or used to compute dot products, without the data leaving the array. This maps well to top-k search, cosine similarity, and other basic operations used in vector databases.
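For reference, this is the exact (brute-force) top-k cosine search that the retrieval stage performs, written in plain Python. It only shows the math: every corpus vector is scored against the query, which on a CPU or GPU means streaming every vector through the memory hierarchy. `exact_top_k` and the toy corpus are illustrative, not GSI's API.

```python
import heapq
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def exact_top_k(query: list[float], corpus: dict[str, list[float]],
                k: int) -> list[tuple[str, float]]:
    """Score every document against the query and keep the k best (no index, no approximation)."""
    scores = ((doc_id, cosine(query, vec)) for doc_id, vec in corpus.items())
    return heapq.nlargest(k, scores, key=lambda item: item[1])


corpus = {
    "doc_a": [1.0, 0.0, 0.0],
    "doc_b": [0.7, 0.7, 0.0],
    "doc_c": [0.0, 0.0, 1.0],
}
print([doc for doc, _ in exact_top_k([1.0, 0.1, 0.0], corpus, k=2)])  # ['doc_a', 'doc_b']
```

Because the scan touches every stored vector once and does little math per byte, it is exactly the kind of workload where moving the comparison into memory pays off.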

The study used corpora from 10GB to 200GB, the range where data movement starts to dominate power and latency. Matching the throughput of a high-end NVIDIA RTX A6000 at those sizes suggests the bottleneck was memory traffic, not raw compute.
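The memory-bound argument can be made concrete with a roofline estimate. A brute-force dot-product scan does 2 FLOPs (one multiply, one add) per stored element; with fp16 embeddings that is about 1 FLOP per byte read. The A6000 figures below (about 38.7 TFLOPS FP32 and 768 GB/s) are approximate public specs used here as assumptions, and the model ignores caching and kernel overheads.

```python
def scan_intensity(bytes_per_element: int) -> float:
    """FLOPs per byte for a brute-force dot-product scan.

    Each stored element costs one multiply and one add (2 FLOPs) and is read
    once from memory; the query vector is assumed to stay in cache.
    """
    return 2 / bytes_per_element


def attainable_flops(intensity: float, peak_flops: float, bandwidth: float) -> float:
    """Roofline model: performance is capped by compute or by memory traffic."""
    return min(peak_flops, intensity * bandwidth)


# Approximate RTX A6000 figures, treated as assumptions for illustration.
PEAK_FP32 = 38.7e12  # FLOP/s
BANDWIDTH = 768e9    # bytes/s of GDDR6 bandwidth

ai = scan_intensity(bytes_per_element=2)  # fp16 storage, fp32 math
balance = PEAK_FP32 / BANDWIDTH           # FLOP/byte needed to become compute-bound
perf = attainable_flops(ai, PEAK_FP32, BANDWIDTH)
print(f"intensity={ai:.1f}, balance={balance:.1f}, usable={perf / PEAK_FP32:.1%} of peak")
```

With an intensity of about 1 FLOP/byte against a machine balance of about 50, the scan can use only about 2% of the GPU's peak compute, so bandwidth, not arithmetic, sets the pace.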

Energy, not raw TOPS, decides the cost of serving RAG at scale, so cutting energy by close to two orders of magnitude is a big deal for data centers and edge boxes alike. GSI says its Gemini II silicon is out with about 10x higher throughput and lower latency, which, if it holds in real workloads, would widen the power gap further.

The company is targeting edge AI such as defense, drones, and IoT, where power and cooling are tight and a small box may need to search a local knowledge base fast. Phones and embedded chips are moving this way too, since always-on features benefit most when memory traffic drops.

Investors reacted hard: GSIT jumped from about $5 to about $15 intraday, roughly 200%, as traders priced in energy gains that are rare in practice. The business is still early, with revenue around $22.1M, 3-year growth of about −16.3%, and net margins near −63%, so execution and design wins will drive what happens next.

“Paper led by researchers at Cornell University. Findings confirmed that GSI Technology’s APU CIM (Compute-In-Memory) architectures can match GPU-level performance for large-scale AI applications with a dramatic reduction in energy consumption due to high-density and high-bandwidth memory associated with the CIM architecture.”

Here is how GSI’s APU is built inside its memory banks, and how each small computing unit, called a bit processor, works.

Each APU core is split into multiple banks, and each bank is divided into bit slices. Each bit slice holds data for one specific bit position across many words, like storing all the “bit 0s” together, all the “bit 1s” together, and so on. This setup lets the chip process many bits from different words in parallel.
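To see why the bit-slice layout enables parallelism, here is a small software emulation of an associative equality search. Each Python integer plays the role of one bit slice, so a single bitwise operation touches the same bit of every word at once, and the search takes one step per bit position regardless of how many words are stored. This is a hypothetical model of the concept, not GSI's instruction set; `to_bit_slices` and `match_all` are illustrative names, and the query is assumed to fit in `width` bits.

```python
def to_bit_slices(words: list[int], width: int) -> list[int]:
    """Transpose words into bit slices: bit w of slices[b] is bit b of words[w].

    This mirrors the "all bit 0s together, all bit 1s together" layout.
    """
    slices = [0] * width
    for w, value in enumerate(words):
        for b in range(width):
            if (value >> b) & 1:
                slices[b] |= 1 << w
    return slices


def match_all(slices: list[int], n_words: int, query: int) -> list[int]:
    """Associative equality search: one bitwise step per bit position,
    each step covering every stored word at once."""
    all_words = (1 << n_words) - 1
    mask = all_words  # every word starts as a candidate
    for b, plane in enumerate(slices):
        if (query >> b) & 1:
            mask &= plane               # keep words whose bit b is 1
        else:
            mask &= ~plane & all_words  # keep words whose bit b is 0
    return [w for w in range(n_words) if (mask >> w) & 1]


words = [5, 3, 5, 12, 0]
slices = to_bit_slices(words, width=4)
print(match_all(slices, len(words), query=5))  # [0, 2]
```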

Inside each bit slice sit the bit processors. Each bit processor includes tiny read and write circuits that can perform logic operations directly on the memory lines. This means the chip doesn’t need to move data to a separate processor; the computation happens right where the data is stored.

The wiring between vertical and horizontal lines connects these bit processors so that they can coordinate larger operations, like comparing values or doing math across memory cells. This structure is what enables the chip to achieve high parallelism and energy efficiency.

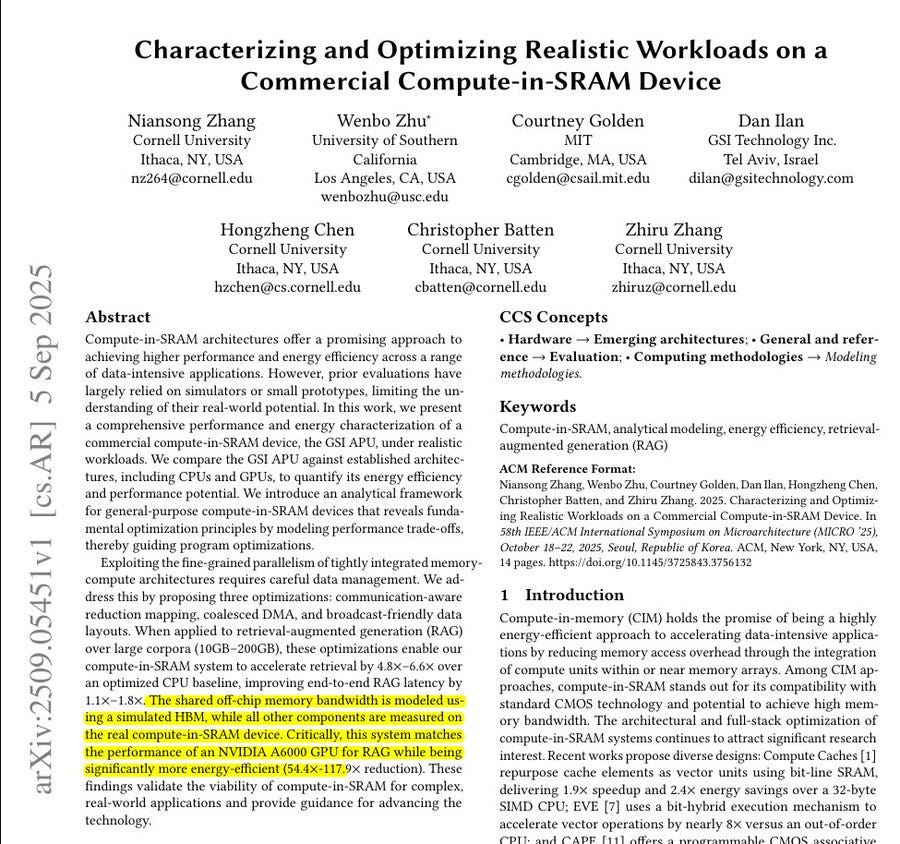

📡 The MIT, GSI, and Cornell paper on the APU (Associative Processing Unit) behind the MASSIVE breakthrough: 54.4x to 117.9x lower energy consumption.

The paper claims GSI’s compute-in-SRAM APU can match an A6000 GPU on RAG while using 54.4x to 117.9x less energy, thanks to 3 key data-movement optimizations. Across 10GB, 50GB, and 200GB datasets, it speeds up exact retrieval by 4.8x to 6.6x over a tuned CPU and speeds up time to first token (TTFT) by 1.1x to 1.8x.

Compute-in-SRAM performs math directly inside memory arrays, which makes element-wise operations fast, but performance suffers when data is placed poorly, because of extra shuffles and DRAM trips. The first optimization, communication-aware reduction mapping, turns reductions into inter-register element-wise operations and lays out the outputs contiguously for fast DMA transfers.

The second, DMA coalescing, groups repeated row loads into one transfer, caches the row in a reuse vector register, and fills subgroups locally to reduce off-chip traffic. The third, a broadcast-friendly layout, reorders scalars so lookup windows shrink, cutting table size and speeding up broadcasts.
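The effect of DMA coalescing on off-chip traffic can be sketched by counting transfers. This model assumes a single reuse register holding the most recently loaded row, so only consecutive repeated loads are merged; `count_transfers` is a hypothetical helper, not the paper's implementation.

```python
def count_transfers(row_requests: list[int], coalesce: bool) -> int:
    """Count off-chip DMA transfers for a sequence of row loads.

    Without coalescing, every request is its own transfer. With coalescing,
    a row is fetched once into a (hypothetical) reuse register and repeated
    requests for it are filled locally until a different row is needed.
    """
    if not coalesce:
        return len(row_requests)
    transfers = 0
    held_row = None  # row currently cached in the reuse register
    for row in row_requests:
        if row != held_row:
            transfers += 1  # one transfer brings the new row on-chip
            held_row = row
    return transfers


requests = [7, 7, 7, 7, 2, 2, 9, 9, 9, 9]
print(count_transfers(requests, coalesce=False))  # 10
print(count_transfers(requests, coalesce=True))   # 3
```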

The APU includes 2M bit processors running at 500 MHz for about 25 TOPS, spread across 4 cores, each with 24 vector registers of 32K elements, offering 26 TB/s of on-chip bandwidth at roughly 60W. On the Phoenix benchmark workloads it averages 41.8x faster than a single-threaded CPU and 12.5x faster than a multi-threaded CPU, peaking at 128.3x and 68.1x respectively. The authors sidestep DDR bandwidth limits by simulating HBM2e (≈380–420 GB/s) for the embeddings while running the other steps on hardware, reaching 3.9–84.2 ms retrieval latency across the 10–200GB datasets.

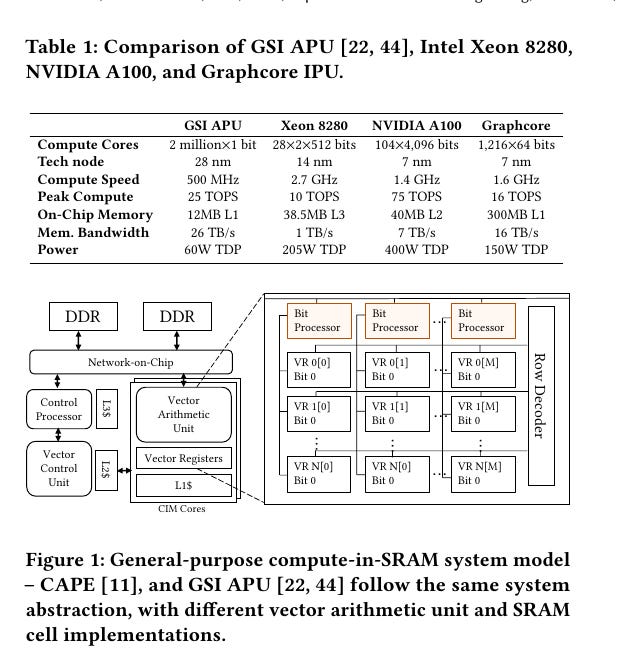

Comparison of GSI APU, Intel Xeon 8280, NVIDIA A100, and Graphcore IPU.

GSI’s APU uses 2 million 1-bit cores running at 500 MHz and delivers 25 TOPS at only 60W. The Xeon 8280 has far fewer cores, each much faster, and consumes 205W for 10 TOPS.

The NVIDIA A100 reaches 75 TOPS but needs 400W, while the Graphcore IPU comes in at 16 TOPS and 150W. The striking number is the GSI APU’s 26 TB/s memory bandwidth, much higher than any of the others, which helps explain its energy efficiency in data-heavy tasks.
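Dividing the stated TOPS by the stated power gives a rough efficiency ranking. Treat it as approximate: vendors do not necessarily quote TOPS at the same precision or on the same workload, so the ratios are only indicative.

```python
# (TOPS, watts) as stated in the comparison above.
chips = {
    "GSI APU": (25, 60),
    "Intel Xeon 8280": (10, 205),
    "NVIDIA A100": (75, 400),
    "Graphcore IPU": (16, 150),
}

# Rank by TOPS per watt, most efficient first.
ranking = sorted(chips, key=lambda name: chips[name][0] / chips[name][1], reverse=True)
for name in ranking:
    tops, watts = chips[name]
    print(f"{name:16s} {tops / watts:.3f} TOPS/W")
```

On these numbers the APU delivers roughly 0.42 TOPS/W, a bit over twice the A100's ~0.19.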

The diagram below the table shows how GSI arranges computation inside the memory itself. Each bit processor sits next to the data it needs, using vector registers and arithmetic units directly inside SRAM, avoiding trips to DRAM. This structure lets the chip perform massive parallel operations while keeping energy use low.

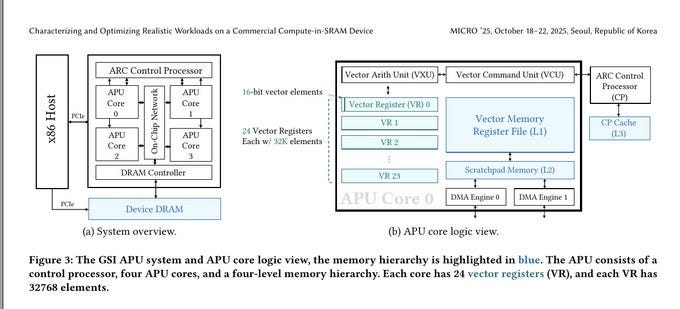

The figure shows how the GSI APU organizes both its overall system and the logic inside each compute core.

On the left, the system overview diagram shows that the APU connects to a regular x86 host using PCIe. The host handles general tasks, while the APU does parallel math directly inside memory. The APU has 4 cores connected through an on-chip network, and a central ARC control processor that coordinates their work. A DRAM controller manages access to external device memory, which stores large datasets.

On the right, the core logic view zooms into one APU core. Each core includes 24 vector registers, each holding 32K 16-bit elements. A Vector Arithmetic Unit (VXU) performs the math on these registers, while a Vector Command Unit (VCU) issues instructions. Data moves through a memory hierarchy made up of the L1 vector register file, the L2 scratchpad, and DMA engines that handle fast data transfers. The ARC control processor and its L3 cache supervise these operations.
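The stated sizes can be cross-checked with simple arithmetic. Assuming "32K" means 32,768 elements and one bit processor per bit of a register's width in each core (an assumption about the mapping, not stated in the source), the count lands at 2,097,152, consistent with the "2 million" bit processors, and the 4 × 24 registers add up to 6 MiB of vector-register storage.

```python
CORES = 4
REGISTERS_PER_CORE = 24
ELEMENTS_PER_REGISTER = 32 * 1024  # "32K", assumed to mean 32,768
BITS_PER_ELEMENT = 16

# Assumed mapping: one bit processor per bit of a register's width, per core.
bit_processors = CORES * ELEMENTS_PER_REGISTER * BITS_PER_ELEMENT
register_bytes = CORES * REGISTERS_PER_CORE * ELEMENTS_PER_REGISTER * BITS_PER_ELEMENT // 8

print(bit_processors)                 # 2097152
print(register_bytes / 2**20, "MiB")  # 6.0 MiB
```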

💸 A new article on Futurism says OpenAI’s massive $1T chip and data center commitments mean Sam Altman now holds enough financial clout that a failure could trigger a global economic crash.

Power needs show the scale: by some estimates, the planned systems would draw roughly the output of 20 standard nuclear reactors, which implies massive operating costs on top of the capital outlays. Valuation momentum continues despite the burn; a recent secondary sale pegged OpenAI at $500B, making it the most valuable private startup right now.

Analysts are split on the macro risk, with one saying Sam Altman could either “crash the global economy for a decade” or deliver big productivity gains. The broader economy is now exposed through chip vendors, power projects, lenders, and hyperscalers, so execution slips or demand shortfalls could ripple far beyond a single company.

That’s a wrap for today, see you all tomorrow.
