New ways to do reinforcement learning: Small teacher, but big impact on reasoning

Explore Sakana AI’s small-teacher approach boosting RL accuracy, Google Gemini’s context caching speeding video 3× and PDFs 4×, Unitree’s motor-cost strategy and Unsloth’s RL primer.

Read time: 11 min

📚 Browse past editions here.

( I publish this newletter daily. Noise-free, actionable, applied-AI developments only).

⚡In today’s Edition (23-Jun-2025):

Sakana AI flips reinforcement learning: Small teacher, big impact on reasoning accuracy

🛠️ Deep Dive Tutorial: Google upgrades Gemini API caching to deliver 3x faster video and 4x faster PDF load times and what is Context caching

🧠 OPINION: Inside Unitree: How Cheap Motors Shaped a Billion-Dollar Robot Maker

💡Reinforcement Learning Guide from Unsloth

🥉 Sakana AI flips reinforcement learning: Small teacher, big impact on reasoning accuracy

Sakana AI published new research Introducing Reinforcement-Learned Teachers (RLTs): Transforming how we teach LLMs to reason with reinforcement learning (RL).

Reinforcement learning on language models stalls because the reward stays at zero until the model just happens to guess a full answer.

Reinforcement-Learned Teachers flip the script by starting with the answer and writing explanations that a smaller student can immediately learn from. The result of RLT is surprising: Their small 7B teacher model is better at teaching reasoning skills than much larger LLMs, making advanced AI more affordable and much faster to train.

Traditional RL focuses on “learning to solve” challenging problems with expensive LLMs and constitutes a key step in making student AI systems ultimately acquire reasoning capabilities via distillation and cold-starting.

Enter our RLTs—a new class of models prompted with not only a problem’s question but also its solution, and directly trained to generate clear, step-by-step “explanations” to teach their students.

🔍 The Problem: Sparse “right-or-wrong” rewards make exploration brutal, so only huge, already-skilled models improve under classic reinforcement learning. Small models freeze because they never reach a correct answer to unlock gradients.

💡 The Proposal: An RLT sees both the question and the official solution, then writes a step-by-step story that links the two in plain language for its student. The student prompt simply pastes those thinking steps plus the solution, so training needs no extra formatting hacks.

🔧 Reward Design: First term pushes the student’s log-probability of the solution as high as possible, covering every token, not just the average. Second term penalizes any teacher token that the student would never have predicted from the question alone, keeping the logic easy to follow. Weighting these terms equally yields a dense, informative score even before the teacher learns the task.

⚙️ Training Recipe: A plain Qwen-7B model warms up with a short supervised pass, then runs just 250 reinforcement steps with batch 256 and learning rate 1e-6. One extra 7B student sits on CPU to compute rewards, so the whole run fits a single H100 node.

📊 Distillation Wins: Raw traces from the 7B teacher lift a new 7B student to 49.5 overall on AIME-24, MATH-500, and GPQA, beating pipelines that relied on 32B–671B teachers plus post-processing. Feeding the same traces to a 32B student pushes that model to 73.2, again ahead of every larger-teacher baseline.

🚀 Better Cold-Starts: Using RLT traces as the launchpad, a 7B model gains about 10 accuracy points over starting RL from scratch and even tops GPT-polished baselines.

🌍 Zero-Shot Transfer: Without retraining, the same teacher writes explanations for the Countdown arithmetic game; the resulting student outperforms direct RL on that task.

📈 Why Rewards Matter: If traces are grouped by reward rank, student accuracy rises smoothly with the score; best quartile traces give a 0.89 correlation with final marks. Removing the student-alignment term drops performance below the original base model, confirming its necessity.

The picture compares two training setups.

On the left, classic reinforcement learning makes the model work out the entire solution by itself and rewards it only if the final answer matches the truth. On the right, the new teaching setup hands the model the correct answer first and asks it to write a step-by-step story that connects the question to that answer.

This change turns a yes-or-no reward into continuous feedback on every explanation step, so even small models improve quickly.

The image underlines the paper’s core idea: let models teach instead of guess.

🛠️ 🛠️ Deep Dive Tutorial: Google upgrades Gemini API caching to deliver 3x faster video and 4x faster PDF load times

Google shipped major updates to the Gemini API caching infrastructure 🚢

- Video's that hit the cache are 3x faster TTFT (Time To First Token)

- PDF's that hit the cache are 4x faster TTFT

- Closed the speed gap on implicit vs explicit cache

- Ongoing work for large Audio files

So now, you can now store reused tokens once, ask many questions, and see 3× faster video analysis and 4× faster PDF handling.

💡 In short: cache big inputs once, reuse them, pay less, and wait less.

⚙️ The Core Concepts: Gemini keeps two memory areas. A prefill cache stores model-ready tokens so the first answer token appears sooner, and a preprocess cache holds the heavy formatting work for videos or PDFs. The first cache is tied to one model, the second can serve any Gemini 2.5 variant. Together they remove repeat work from follow-up calls and unlock the new speed numbers.

🚀 Speed Gains: Moving video preprocessing into the cache cuts turnaround time for the next enquiry to one-third. PDF tokenization benefits even more; four rounds of text handling shrink to one, so follow-up requests finish in roughly one-quarter of the original time when the reused portion exceeds 1 024 tokens for Flash and 2 048 tokens for Pro.

🧩 Implicit Caching: Gemini 2.5 enables implicit caching automatically. If two prompts begin with the same long prefix inside a short window, the backend spots the duplicate and silently discounts the reused tokens. Developers write no extra code, but savings are not guaranteed. Placing the shared blob at the top of the prompt and sending related calls back-to-back improves the hit rate.

📦 Explicit Caching: Explicit caching exposes a cache object through the API. A client uploads a file, sets a time-to-live, and receives a handle. Later requests send only the handle plus a short question. The cached content becomes the prompt prefix, so billing treats the stored tokens at a lower rate. One set-up call buys predictable cost cuts and stable latency.

🔧 Code Walkthrough:

The sample script downloads a 10-minute film, uploads it, and waits until preprocessing completes.

The script creates an explicit cache right after the video finishes processing. The call to client.caches.create stores every token from the film plus a system instruction and keeps them alive for 300 s. That cache is identified by a handle (cache.name).

The final generate_content call references the cache handle instead of resending 696,000 tokens.

Processing starts immediately, network traffic stays tiny, and the bill counts those reused tokens at the cheaper cached-rate. The usage_metadata printout confirms the gain: almost the entire prompt came from the cache, while only 214 fresh tokens were charged at full price.

💰 Cost Model: Charges split three ways. First, a one-time fee converts raw data into tokens and stores them at a discount relative to standard prompt tokens. Second, the cache sits in storage and accrues a small per-token holding cost that scales with the chosen TTL. Third, ordinary billing applies to any new tokens you add and to all output tokens. When a workload asks many short questions against one long asset, the cached route always wins.

🔒 Limits and Maintenance: The cache counts toward the normal context length, so an enormous asset can still hit the model limit. Cached content never gains special rate-limit status; throughput stays bounded by GenerateContent quotas. Developers can refresh expiry or delete a cache through update and delete endpoints. If they forget, the backend discards the entry once the TTL ends, freeing storage automatically.

🧠 OPINION: Inside Unitree, How Cheap Motors Shaped a Billion-Dollar Robot Maker

🐕 Cost-First Beginnings

Unitree founder Wang Xingxing built his first four-legged robot from scrap during graduate school and called it XDog. He decided to start Unitree in 2016 at the age of 26. The prototype walked on electric hobby motors instead of expensive hydraulics. Winning a local contest proved that a nimble robot could be affordable, which pushed Wang to leave a safe job and start Unitree in 2016. One year later Laikago arrived at roughly $2500, while Boston Dynamics charged more than $75,000 for Spot. The price shock drew researchers and hobbyists who had never dreamed of owning a legged robot.

• 2017 Laikago • 2019 AlienGo • 2020 A1 • 2021 Go1 and Go2 • 2023 H1 humanoid • 2024 G1 humanoid

🔧 Full-Stack Hardware

Early suppliers could not meet Unitree’s cost targets, so the team designed its own high-torque motors, gearboxes, controllers, and even depth sensors. Owning those parts lets engineers shave grams, squeeze efficiency, and update designs weekly instead of waiting for outside vendors. Because every joint shares common actuators, the factory builds dogs, humanoids, and arms on the same lines, keeping volume high and margins steady. This vertical approach echoes how smartphone companies drive down component prices.

🕺 Showcases That Pay the Bills

Unitree’s robots rarely launch quietly. Twenty four ox-costumed A1 units danced on China’s Spring Festival Gala in 2021, and Go1 dogs backed Jason Derulo at the 2023 Super Bowl pre-show. Each televised stunt brought purchase inquiries, firmware feedback, and free marketing. The company repeats the cycle: sell batches, collect logs, revise mechanics, then film the next viral clip. Public gigs finance research while exposing hidden faults that lab tests miss, a pragmatic loop that speeds reliability.

🦿 Fast-Track to Humanoids

Years of leg control fed directly into a biped. In 2023 H1 stepped out at one point eight meters tall and forty seven kilograms, sprinting three point three meters per second on the same motor family used in Go1 hips. Reinforcement learning algorithms learned pushes and flips in simulation, then transferred to real hardware, so a software update soon had H1 performing backflips. To seed the research market Unitree shrank the design into G1, a one point two seven meter platform priced near sixteen thousand dollars, far below six-figure Western options. By late 2024 small batches shipped to laboratories worldwide.

📊 Why the Strategy Works

Unitree owns over 60% of global quadruped installations and has turned a profit every year since 2020 because it treats robots like consumer electronics: tight supply chains, aggressive pricing, and constant iteration. Rivals that perfect prototypes in isolation struggle to match Unitree’s learning rate once thousands of units roam factories and campuses. As larger vision-language models reach embedded chips, Wang’s hardware army already waits in the field, ready for upgrades that push from scripted demos to useful chores. In short, cost discipline and vertical engineering have turned a tinkerer’s side project into the most shipped legged robots on Earth, and possibly the first humanoids many of us will meet.

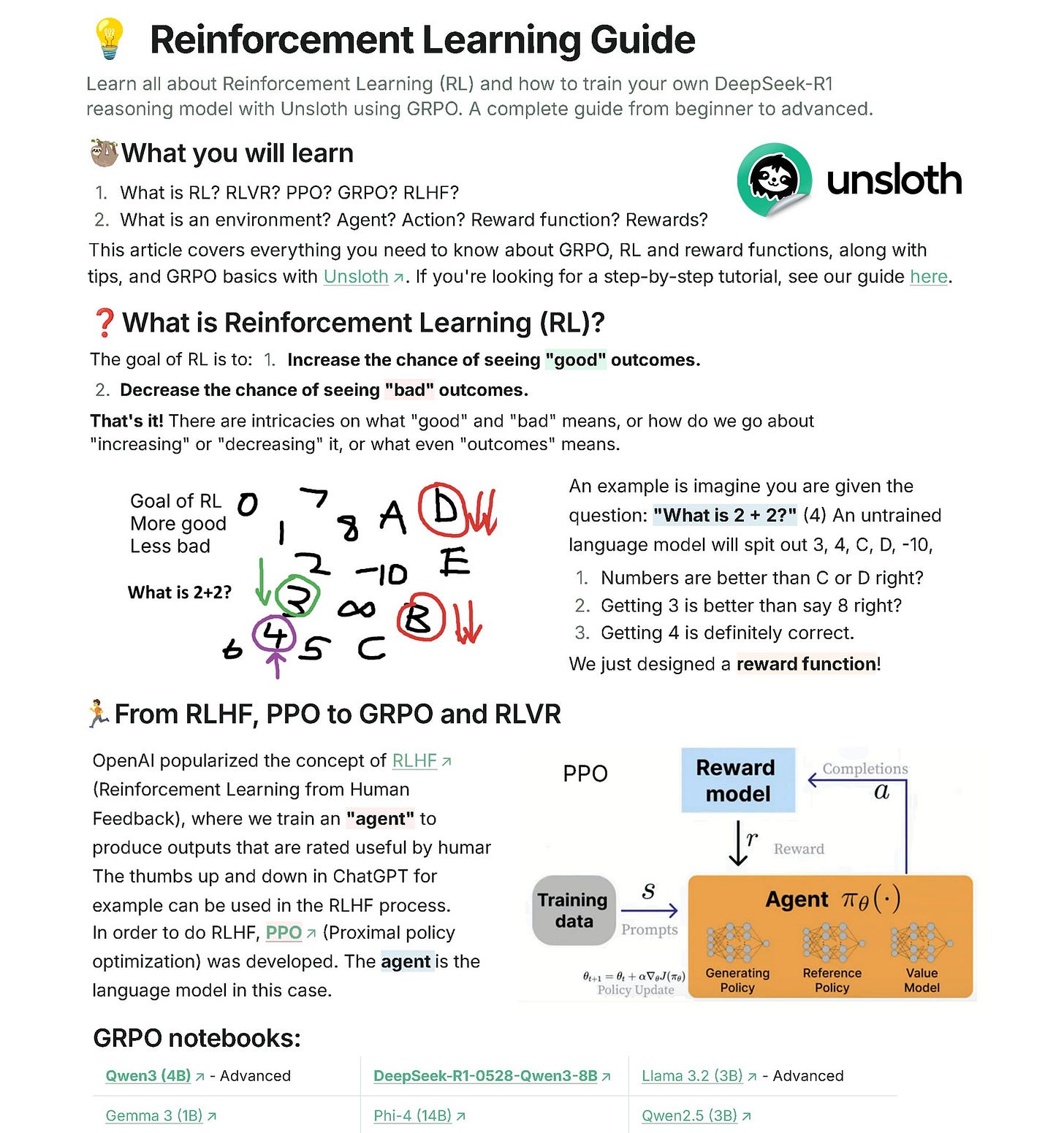

💡Reinforcement Learning Guide from Unsloth

This is a very Comprehensive RL guide for LLMs by UnslothAI. Cuts the fluff. Covers why RL matters, why o3 adopts it, GRPO, RLHF, PPO. Teaches you to spin up a local R1.

Key learnings from the Unsloth reinforcement learning guide.

🎯 Goal: Reinforcement learning makes an LLM show useful answers more often and suppress wrong ones by connecting each answer to a numeric reward and nudging the model toward higher totals.

⚙️ PPO: Proximal policy optimization trains three networks—current policy, reference policy, value model—and keeps updates small with a clip term and a KL penalty so the model climbs toward better rewards without drifting too far from its starting point.

🚀 GRPO advantages: Group relative policy optimization drops the value and reward models, estimates average reward by sampling many answers, and rescales them with simple statistics, cutting memory and compute. Unsloth implements this so a 17 B-parameter model fits in 15 GB VRAM and a 1.5 B model fits in 5 GB.

🧩 Reward design: A verifier only judges correct or wrong, while a reward function turns that judgment plus extra style rules into scores. Combining several small rewards—such as integer format, proximity to the right number, or email etiquette—guides training better than a single coarse rule.

🖥️ Training workflow: Supply a prompt set, sample multiple answers per prompt, score each answer, and update weights every step. Expect reward to rise after roughly 300 steps; longer runs keep improving. Unsloth saves up to 90 % VRAM through 4-bit quantization, custom kernels, gradient checkpointing, and shared memory with vLLM, so you can train and serve the reasoning model on a single GPU.

That’s a wrap for today, see you all tomorrow.