🥉 NVIDIA Helix Parallelism, Groundbreaking Research Serves 32X More Users

NVIDIA’s new Helix serves 32X more users, Jack Dorsey launches Bluetooth mesh Bitchat, Perplexity and OpenAI browsers approach, Isomorphic AI drugs near trials, LangChain hits $1B, X CEO resigns.

Read time: 9 min

📚 Browse past editions here.

(I publish this newsletter daily. Noise-free, actionable, applied-AI developments only.)

⚡In today’s Edition (9-July-2025):

🥉 NVIDIA Helix Parallelism, groundbreaking research serves 32X more users

📶 Jack Dorsey launches Bitchat, an offline peer-to-peer Bluetooth mesh messenger

📡 Perplexity launches Comet, an AI-powered web browser

💊 Google’s Isomorphic Labs is getting ready for human trials of AI-created drugs

🛠️ OpenAI is planning to release its own web browser within a few weeks.

🗞️ Byte-Size Briefs:

🦄 LangChain secures IVP-led funding that values it at $1B.

Linda Yaccarino steps down as CEO of Elon Musk’s X. Yaccarino joined the company just months into Musk’s ownership

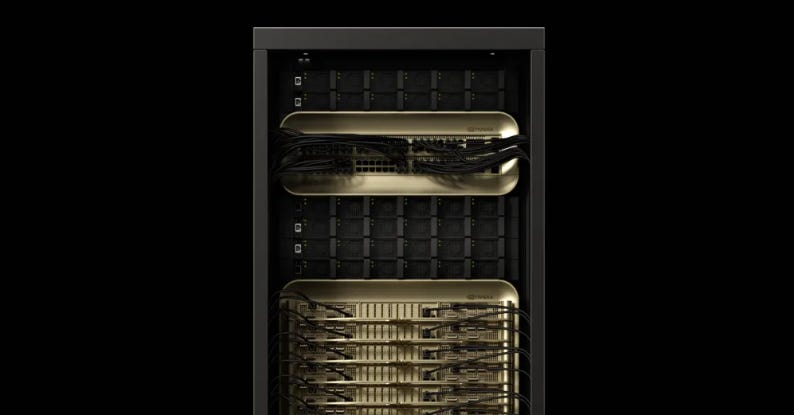

🥉 NVIDIA Helix Parallelism, groundbreaking research serves 32X more users

A brilliant model-parallelism technique. It can now serve DeepSeek-R1 with a 1-million-token context length easily.

LLMs choke when they must read millions of past tokens and also pull huge feed-forward weights fast. Helix splits those two jobs across the same GPUs in back-to-back steps so tokens pop out up to 1.5× faster and 32× more users fit in one box.

⚙️ The Core Concepts: KV cache reads grow with context length, while FFN weight loads stay heavy even when the batch is tiny.

Classic tensor parallelism eases the FFN side but stops helping attention once the shard count passes the KV-head count because every extra GPU must keep a full copy of the cache.

So what’s so special about it?

Helix cuts the slow parts of long-context decoding down to size by letting each GPU keep only the cache slice it needs and then borrowing those same chips a split second later to chew through the heavy feed-forward math.

Thanks to that simple shuffle, the system keeps 32X more chats alive on the same box while single answers arrive up to 1.5X faster than the best old tricks.

For someone using an AI app this feels like magic, because they can paste an entire book or codebase into the prompt and still get snappy replies instead of a spinning wheel.

For the developer running the backend it means fewer servers, lower bills, and no juggling between “fast” mode for short prompts and “slow” mode for long ones, so rollout stays smooth and predictable. Put simply, Helix turns big-memory headaches into extra head-room and pays it back in speed.

Technical Details of this innovation:

💡 Why Plain TP (tensor parallelism) Hits a Wall: A model like Llama-405B has 128 query heads yet only 8 KV heads. Going past 8 TP shards duplicates the cache across GPUs instead of shrinking it, flooding DRAM and stalling any further gain in token-to-token latency, so users either shrink the batch or wait longer.
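To make that wall concrete, here is a back-of-the-envelope sketch of per-GPU KV cache size under plain TP. The 8 KV heads come from the paragraph above. The layer count, head dimension, and 2-byte elements are illustrative assumptions, and `kv_cache_bytes_per_gpu` is a hypothetical helper, not part of any library.

```python
import math

def kv_cache_bytes_per_gpu(context_len, tp, n_kv_heads=8, n_layers=126,
                           head_dim=128, bytes_per_elem=2):
    """Rough per-GPU KV cache size for one sequence under tensor parallelism.

    n_layers, head_dim and bytes_per_elem are assumed values for illustration.
    """
    # A GPU cannot hold less than one whole KV head, so once tp exceeds
    # n_kv_heads the per-GPU share stops shrinking and heads get duplicated.
    heads_per_gpu = max(1, math.ceil(n_kv_heads / tp))
    # The factor 2 covers keys and values.
    return 2 * context_len * n_layers * heads_per_gpu * head_dim * bytes_per_elem

if __name__ == "__main__":
    for tp in (1, 2, 4, 8, 16, 32, 64):
        gib = kv_cache_bytes_per_gpu(1_000_000, tp) / 2**30
        print(f"TP={tp:>2}: {gib:8.1f} GiB of KV cache per GPU")
```

Under these assumptions the per-GPU figure halves with each doubling of TP up to 8 and then stays flat, which is the plateau the paragraph describes.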

🌀 Helix in the Attention Phase: Helix slices the cache by sequence length across KVP ranks and caps TP width at or below the KV-head count. Each GPU makes its own QKV projections, runs FlashAttention on its slice, then swaps fragments with one all-to-all, so traffic depends on hidden size, not context length.
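The idea of slicing the cache by sequence length can be sketched in plain Python for a single query and a single head. This is a toy under stated assumptions, not NVIDIA’s implementation: each "rank" attends over its own slice, and the partial results are merged with a log-sum-exp rescale, which is one standard way to combine partial softmax attention. The function names are hypothetical.

```python
import math
import random

def attend(q, keys, values):
    """Softmax attention for one query over a slice: returns (output, log-sum-exp)."""
    scale = 1.0 / math.sqrt(len(q))
    scores = [scale * sum(a * b for a, b in zip(q, k)) for k in keys]
    m = max(scores)                      # subtract the max for numerical stability
    weights = [math.exp(s - m) for s in scores]
    total = sum(weights)
    dim = len(values[0])
    out = [sum(w * v[d] for w, v in zip(weights, values)) / total for d in range(dim)]
    return out, m + math.log(total)

def merge(partials):
    """Combine per-slice (output, lse) pairs into the exact full-attention output."""
    m = max(lse for _, lse in partials)
    coeffs = [math.exp(lse - m) for _, lse in partials]
    norm = sum(coeffs)
    dim = len(partials[0][0])
    return [sum(c * out[d] for c, (out, _) in zip(coeffs, partials)) / norm
            for d in range(dim)]

def sharded_attention(q, keys, values, n_shards):
    """Each simulated rank sees only a contiguous slice of the KV cache."""
    step = math.ceil(len(keys) / n_shards)
    partials = [attend(q, keys[i:i + step], values[i:i + step])
                for i in range(0, len(keys), step)]
    return merge(partials)

rng = random.Random(0)
dim, seq_len = 4, 16
q = [rng.gauss(0, 1) for _ in range(dim)]
keys = [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(seq_len)]
values = [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(seq_len)]

full, _ = attend(q, keys, values)
split = sharded_attention(q, keys, values, n_shards=4)
print(max(abs(a - b) for a, b in zip(full, split)))  # tiny floating-point difference
```

Each slice hands over only one output vector plus one scalar, regardless of how many tokens it holds, which is why the exchanged traffic scales with hidden size rather than context length.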

🔗 HOP-B Overlap Trick: Right after the first token’s attention math finishes, the GPU ships its fragment while already crunching the next token. This overlap hides almost all communication time and drops exposed latency from 25.6 units to 17 in the toy example.
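A tiny timeline model shows why overlapping communication with the next item’s compute shortens exposed time. The durations below are made-up values and do not reproduce the 25.6 and 17 figures from the toy example. `exposed_time` is a hypothetical helper.

```python
def exposed_time(n_items, compute, comm, overlap):
    """Total time for n_items that each need `compute` and then `comm` time units."""
    if not overlap:
        # Every item waits for its own transfer before the next one starts.
        return n_items * (compute + comm)
    compute_end = 0
    comm_end = 0
    for _ in range(n_items):
        compute_end += compute                    # compute runs back to back
        comm_start = max(compute_end, comm_end)   # the link handles one transfer at a time
        comm_end = comm_start + comm
    return comm_end

print(exposed_time(4, compute=3, comm=1, overlap=False))  # 16
print(exposed_time(4, compute=3, comm=1, overlap=True))   # 13
```

When compute per item is longer than the transfer, only the final transfer remains exposed. When the transfer is longer, the link becomes the bottleneck and the overlap helps less.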

🚀 Flip to the FFN Without Waiting: The same GPU pool instantly reshapes into a wider tensor grid and shards the big FC matrices. No cache duplication returns because attention is done, so the extra shards become pure speedup for weight reads.
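Sharding the FC matrices can be sketched as follows, assuming the common split where each shard owns a block of the FFN’s hidden units (rows of the first matrix, matching columns of the second) and the partial outputs are summed. The ReLU activation, the tiny sizes, and the function names are illustrative assumptions, not details from the Helix work.

```python
import random

def matvec(W, x):
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def relu(v):
    return [max(0.0, a) for a in v]

def ffn_dense(W1, W2, x):
    """Reference FFN: y = W2 @ relu(W1 @ x)."""
    return matvec(W2, relu(matvec(W1, x)))

def ffn_sharded(W1, W2, x, n_shards):
    """Same FFN, with the hidden dimension split across simulated GPUs."""
    d_ff = len(W1)
    step = -(-d_ff // n_shards)  # ceiling division
    y = [0.0] * len(W2)
    for start in range(0, d_ff, step):
        W1_shard = W1[start:start + step]                    # this shard's hidden units
        W2_shard = [row[start:start + step] for row in W2]   # matching columns of W2
        partial = matvec(W2_shard, relu(matvec(W1_shard, x)))
        y = [a + b for a, b in zip(y, partial)]              # stands in for an all-reduce
    return y

rng = random.Random(1)
d_model, d_ff = 4, 16
W1 = [[rng.gauss(0, 1) for _ in range(d_model)] for _ in range(d_ff)]
W2 = [[rng.gauss(0, 1) for _ in range(d_ff)] for _ in range(d_model)]
x = [rng.gauss(0, 1) for _ in range(d_model)]

dense = ffn_dense(W1, W2, x)
sharded = ffn_sharded(W1, W2, x, n_shards=8)
```

Each shard reads only its own fraction of the weights, so in this model widening the grid divides the weight-read cost without touching the KV cache.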

📈 Measured Gains on Blackwell: Simulations on GB200 NVL72 with FP4 show 32× bigger batches at the same latency and up to 1.5× lower latency at low load for DeepSeek-R1. Llama-405B still gains 4× throughput and 1.13× faster responses even though its cache is already smaller.

📶 Jack Dorsey launches Bitchat, an offline peer-to-peer Bluetooth mesh messenger

Jack Dorsey launches 'Bitchat,' an app that lets you message with no internet or phone number. So what do you need instead? Nothing extra: it needs no servers or accounts at all and routes chats through nearby phones.

So it’s like a WhatsApp rival, but built on Bluetooth, and the code is on GitHub. Messages hop across nearby devices in mesh clusters, allowing them to travel beyond the standard 30-metre Bluetooth range. All communication is end-to-end encrypted, with messages stored locally and auto-deleted after a short time, enhancing privacy and anonymity.

Hop-by-hop relays let bursts travel across many phones inside a crowd. Strong encryption keeps keys on the devices, so intermediates see gibberish.
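Hop-by-hop relaying can be pictured as a flood across whichever phones are in range of each other. The sketch below is a generic illustration, not Bitchat’s actual protocol: the hop limit, the relay-once rule, and the `flood` helper are assumptions made for the example.

```python
from collections import deque

def flood(neighbors, source, max_hops):
    """Return {device: hop_count} for every device a message reaches."""
    hops = {source: 0}
    queue = deque([source])
    while queue:
        device = queue.popleft()
        if hops[device] == max_hops:
            continue                      # hop budget spent, do not relay further
        for peer in neighbors.get(device, ()):
            if peer not in hops:          # each device relays a message only once
                hops[peer] = hops[device] + 1
                queue.append(peer)
    return hops

# A chain of phones where each one only reaches its immediate neighbours.
neighbors = {
    "A": ["B"],
    "B": ["A", "C"],
    "C": ["B", "D"],
    "D": ["C", "E"],
    "E": ["D"],
}
print(flood(neighbors, "A", max_hops=3))  # {'A': 0, 'B': 1, 'C': 2, 'D': 3}
```

Phone D sits three radio ranges away from A yet still receives the message, while E falls outside the assumed hop budget.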

No global identity layer means nothing ties messages to a person. Each snippet stays in RAM, vanishing after minutes; disks stay blank.

Because it avoids cellular or Wi-Fi, conversation survives shutdowns, flights, or remote hikes. It is a secure, decentralized messenger that works over Bluetooth mesh networks: no internet required, no servers, no phone numbers, just encrypted communication. The app’s code is on GitHub.

The app’s decentralised architecture is built for resilience, offering a practical solution in scenarios where internet connectivity is down, such as during natural disasters, network outages, or government-imposed blocks. Devices function as relays, forming dynamic networks that extend coverage without relying on fixed infrastructure.

Currently available only for iPhone users via Apple’s TestFlight, the app hit its 10,000 beta tester limit soon after launch.

📡 Perplexity launches Comet, an AI-powered web browser

🧭🪄 Perplexity releases Comet browser to its $200-a-month Max users

The headline feature is that Comet runs Perplexity’s LLM search as default, so every query compares sources then returns a short answer instead of 10 blue links.

A built-in Comet Assistant browser agent handles everyday chores: it summarizes emails and calendar entries, juggles tabs, and moves around sites for you. Pull out the side panel on any page and the agent sees the content and answers your questions.

That requires deep access to your Google account, which reviewers found helpful for reminders yet a concern for privacy.

You can also hand it heavier tasks such as booking parking, but expect occasional hallucinations, because many current agents still mishandle details on multi-step tasks.

Perplexity bets that friction-free context plus future model upgrades will keep users inside the browser and lock in long-term retention. The company’s CEO, Aravind Srinivas, has heavily promoted Comet’s launch, perhaps because he sees it as vital in Perplexity’s battle against Google. With Comet, Perplexity is aiming to reach users directly without having to go through Google Chrome.

Comet walks into a jam-packed browser scene. Google Chrome and Apple Safari still run the show, and back in June The Browser Company pushed out Dia, an AI browser that looks a lot like Comet. Rumor says OpenAI wants its own challenger and has already hired a few core Chrome engineers this past year.

Comet’s early advantage could come from Perplexity fans who decide to try it. CEO Aravind Srinivas shared that Perplexity handled 780 million queries in May 2025 and keeps growing by more than 20% each month.

Building a search rival to Google is hard, yet persuading people to dump their current browser may be even harder.

💊 Google’s Isomorphic Labs is getting ready for human trials of AI-created drugs

Isomorphic Labs, Alphabet’s AI drug team, starts staffing for first human trials of AlphaFold-guided medicines, targeting 10x faster, 90% cheaper development 💊⚡️

AlphaFold cracked how proteins fold, turning static biology data into precise 3D shapes. That let algorithms guess how a drug molecule sticks, so chemists can tweak atoms on screen instead of mixing flasks.

Isomorphic layers pharma veterans onto that code, checking each AI suggestion against lab evidence to avoid flashy but impractical leads. Funding of $600M equips more wet-lab robots and cloud GPUs, so iteration loops run in minutes, not months.

Partnerships with Novartis and Lilly train the models on real clinical misses, teaching them patterns behind toxic side effects. The team focuses first on hard cancers and immune disorders where tiny binding gains translate to big survival jumps.

If early trials hit, the model’s confidence engine could lift success odds from 10% toward 100%, slashing multi-year timelines and multibillion budgets.

🛠️ OpenAI is planning to release its own web browser within a few weeks.

Hot on the heels of Perplexity’s Comet launch, OpenAI is planning to release an AI-powered web browser of its own to challenge Google Chrome. Reports say the browser is slated to launch in the coming weeks. If adopted by the 400 million weekly active users of ChatGPT, OpenAI’s browser could put pressure on Google's ad revenue.

Google Chrome currently holds a 67% share of the worldwide browser market. Apple’s second-place Safari lags far behind with a 16% share. Chrome reaches 3B users and collects browsing clicks and searches to fine-tune results and steer traffic to Google Search.

A web browser would allow OpenAI to integrate its AI agent products, such as its task agent Operator, directly into the browsing experience, so the browser books tables, fills forms, and pays bills automatically. Last year, OpenAI also hired two longtime Google vice presidents who were part of the original team that developed Google Chrome.

How does it make a difference if OpenAI's Operator can leverage its own browser?

OpenAI integrates Operator into its Chromium browser, eyeing Chrome’s 67% share and letting chats finish tasks for you with near-zero clicks. Operator already controls a mini browser to click, type, and scroll like a person.

Embedding it natively means the full browser pipes HTML, layouts, and cookies straight to the agent, no screen-capture lag. Operator can then launch multiple tabs, track session state, and finish chained jobs, such as reserving flights then autofilling expense forms.

Because the browser trusts the signed agent, payments flow through saved wallets without typing card numbers, trimming checkout time. Each completed action adds annotated browsing traces that train future Computer-Using Agent (CUA) models, steadily raising success rates.

🗞️ Byte-Size Briefs

🦄 LangChain secures IVP-led funding that values it at $1B. Its open-source framework has 111K GitHub stars, and its closed-source LangSmith logs, evaluates, and monitors agents for $39 per month, winning users like Klarna and Replit. LLMs answer questions but lack built-in tools for web actions. LangChain lets developers chain model outputs with search, code, and database steps. So you define small tools, wrap them in an agent, and the LangChain library manages prompts, retries, and caching.
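The "small tools plus retries and caching" pattern can be sketched in plain Python. This deliberately does not use the LangChain API: every name here (`with_retries`, `flaky_search`, `TOOLS`, `run_chain`) is hypothetical, and the search tool is a stand-in that fails once to exercise the retry path.

```python
import functools

def with_retries(fn, attempts=3):
    """Wrap a tool so transient failures are retried a few times."""
    @functools.wraps(fn)
    def wrapper(*args):
        last_error = None
        for _ in range(attempts):
            try:
                return fn(*args)
            except Exception as err:
                last_error = err
        raise last_error
    return wrapper

calls = {"search": 0}

def flaky_search(query):
    """Stand-in for a web search tool; the first call fails on purpose."""
    calls["search"] += 1
    if calls["search"] == 1:
        raise TimeoutError("simulated transient failure")
    return f"results for {query!r}"

TOOLS = {
    # Cache sits outside the retry wrapper, so a repeated query costs nothing.
    "search": functools.lru_cache(maxsize=None)(with_retries(flaky_search)),
    "upper": str.upper,
}

def run_chain(steps, text):
    """Feed the output of each named tool into the next one."""
    for name in steps:
        text = TOOLS[name](text)
    return text

first = run_chain(["search", "upper"], "helix")
second = run_chain(["search", "upper"], "helix")  # served from the cache
print(first, calls["search"])
```

A real framework adds prompt management and model calls on top, but the division of labour is the same: tools stay small, and the wrapper layer absorbs failures and repeated work.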

Its LangSmith platform captures each prompt and the model’s every intermediate response as they happen. Teams can later replay that history, spot failure points, and score batches of runs in one go. These fine-grained logs matter because a single agent call may hop through 30+ external tools before it returns an answer. If you miss even 1 step you cannot reproduce or improve the result. A free hobby tier gets solo developers started, while bigger firms pay flexible per-seat or volume rates so the bill matches their traffic and privacy rules. Open code draws users in, but paid uptime guarantees and deep analytics keep revenue flowing.
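Why step-level logs matter is easy to see with a toy tracer. This is a generic sketch, not LangSmith’s API: the `Tracer` class and its methods are hypothetical names chosen for illustration.

```python
import time

class Tracer:
    """Records every step of a run so it can be inspected afterwards."""

    def __init__(self):
        self.steps = []

    def record(self, name, fn, *args):
        start = time.perf_counter()
        try:
            result, error = fn(*args), None
        except Exception as err:          # keep the failure instead of losing it
            result, error = None, repr(err)
        self.steps.append({
            "name": name,
            "args": args,
            "result": result,
            "error": error,
            "seconds": time.perf_counter() - start,
        })
        return result

    def first_failure(self):
        """Return the earliest step that raised, or None if all succeeded."""
        return next((s for s in self.steps if s["error"]), None)

tracer = Tracer()
tracer.record("parse", int, "42")
tracer.record("divide", lambda a: 1 / a, 0)   # fails with ZeroDivisionError
tracer.record("format", str, 3.5)

failure = tracer.first_failure()
print(failure["name"], failure["error"])
```

With every step captured, the failing call and its exact inputs can be replayed in isolation rather than by rerunning the whole chain.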

Since its introduction last year, LangSmith has helped the company reach annual recurring revenue (ARR) of between $12 million and $16 million.

Linda Yaccarino steps down as CEO of Elon Musk’s X. Yaccarino joined the company just months into Musk’s ownership.

That’s a wrap for today, see you all tomorrow.