🧠 OpenAI Finally Explains "Why language models hallucinate"

A solid explanation of LLM hallucinations from OpenAI

Read time: 11 min

📚 Browse past editions here.

(I publish this newsletter daily. Noise-free, actionable, applied-AI developments only.)

⚡In today’s Edition (6-Sept-2025):

🧠 OpenAI Finally Explains "Why language models hallucinate"

🧾 OpenAI just published a warning that any share transfer without its written consent is void, and that exposure sold through special purpose vehicles (SPVs) or tokenized shares may carry no economic value for buyers.

🗞️ OpenAI expects its business to burn US$115B through 2029

🧠 OpenAI Finally Explains "Why language models hallucinate"

According to an OpenAI paper, hallucinations happen less because of LLM design and more because training rewards right answers only—so models learn to guess rather than say they’re unsure.

The one-sentence explanation: LLMs hallucinate because training and evaluation reward guessing instead of admitting uncertainty.

The paper puts this on a statistical footing with simple, test-like incentives that reward confident wrong answers over honest “I don’t know” responses.

The fix is to grade differently: give credit for appropriate uncertainty and penalize confident errors more heavily than abstentions, so models stop being optimized for blind guessing.

OpenAI's comparison shows that a model abstaining on 52% of questions gives substantially fewer wrong answers than one abstaining on 1%, which is evidence that letting a model admit uncertainty reduces hallucinations even if accuracy looks lower.

Abstention means the model refuses to answer when it is unsure and simply says something like “I don’t know” instead of making up a guess.

Hallucinations drop because most wrong answers come from bad guesses. If the model abstains instead of guessing, it produces fewer false answers.
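To make the accuracy-versus-error trade-off concrete, here is a minimal simulation sketch. Everything in it is an assumption for illustration: a toy model whose confidence `p` is uniform on [0, 1] and perfectly calibrated, plus the hypothetical `simulate` function and its `abstain_below` parameter. None of it reproduces OpenAI's data.

```python
import random

def simulate(abstain_below, n=10_000, seed=0):
    """Return (accuracy, error, abstention) rates for a toy model.

    Assumed setup: each question gets a confidence p drawn uniformly
    from [0, 1], and an answer is correct with probability p.
    The model abstains whenever p < abstain_below.
    """
    rng = random.Random(seed)
    correct = wrong = abstained = 0
    for _ in range(n):
        p = rng.random()
        if p < abstain_below:
            abstained += 1          # says "I don't know"
        elif rng.random() < p:
            correct += 1            # answered and got it right
        else:
            wrong += 1              # answered and hallucinated
    return correct / n, wrong / n, abstained / n

always_guess = simulate(abstain_below=0.0)  # never abstains
cautious = simulate(abstain_below=0.5)      # abstains when unsure

for name, (acc, err, abst) in [("always guess", always_guess),
                               ("cautious", cautious)]:
    print(f"{name:>12}: accuracy={acc:.2f} error={err:.2f} abstain={abst:.2f}")
```

Under these toy assumptions the cautious policy gets fewer questions right overall but makes far fewer wrong claims, which is the same shape as the trade-off described above.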

"Hallucinations need not be mysterious—they originate simply as errors in binary classification. If incorrect statements cannot be distinguished from facts, then hallucinations in pretrained language models will arise through natural statistical pressures."

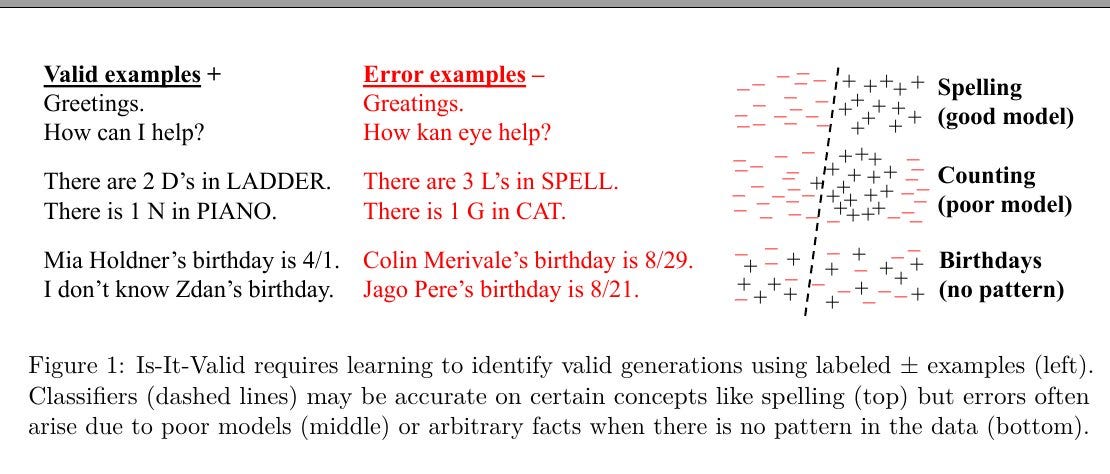

This figure illustrates the paper's Is-It-Valid (IIV) idea: deciding whether a given output is valid or an error.

On the left side, you see examples. Some are valid outputs (in black), and others are errors (in red). Valid examples are simple and correct statements like “There are 2 D’s in LADDER” or “I don’t know Zdan’s birthday.” Error examples are things that look fluent but are wrong, like “There are 3 L’s in SPELL” or giving random birthdays.

The diagrams on the right show why errors happen differently depending on the task. For spelling, the model can learn clear rules, so valid and invalid answers separate cleanly. For counting, the model is weaker, so valid and invalid mix more. For birthdays, there is no real pattern in the data at all, so the model cannot separate correct from incorrect—this is why hallucinations occur on such facts.

So the figure makes the point: when there is a clear pattern (like spelling), the model learns it well. When the task has a weak pattern or none at all (like birthdays), the model produces confident but wrong answers, which are hallucinations.

The Core Concepts: The paper’s core claim is that standard training and leaderboard scoring reward guessing over acknowledging uncertainty, which statistically produces confident false statements even in very capable models.

Models get graded like students on a binary scale, 1 point for exactly right, 0 for everything else, so admitting uncertainty is dominated by rolling the dice on a guess that sometimes lands right.

The blog explains this in plain terms and also spells out the 3 outcomes that matter on single-answer questions: accurate answers, errors, and abstentions. Abstentions are better than errors for trustworthy behavior.

🎯 Why accuracy-only scoring pushes models to bluff

The paper says models live in permanent test-taking mode, because binary pass rates and accuracy make guessing the higher-expected-score move compared to saying “I don’t know” when unsure.

That incentive explains confident specific wrong answers, like naming a birthday, since a sharp guess can win points sometimes, while cautious uncertainty always gets 0 under current grading. OpenAI’s Model Spec also instructs assistants to express uncertainty rather than bluff, which current accuracy leaderboards undercut unless their scoring changes.

🧩 Where hallucinations start during training

Pretraining learns the overall distribution of fluent text from positive examples only, so the model gets great at patterns like spelling and parentheses but struggles on low-frequency arbitrary facts that have no learnable pattern.

The paper frames this with a reduction to a simple binary task, “is this output valid”, showing that even a strong base model will make some errors when facts are effectively random at training time.

This explains why spelling mistakes vanish with scale yet crisp factual claims can still be wrong, since some facts do not follow repeatable patterns the model can internalize from data.

🎚️ The simple fix that actually helps

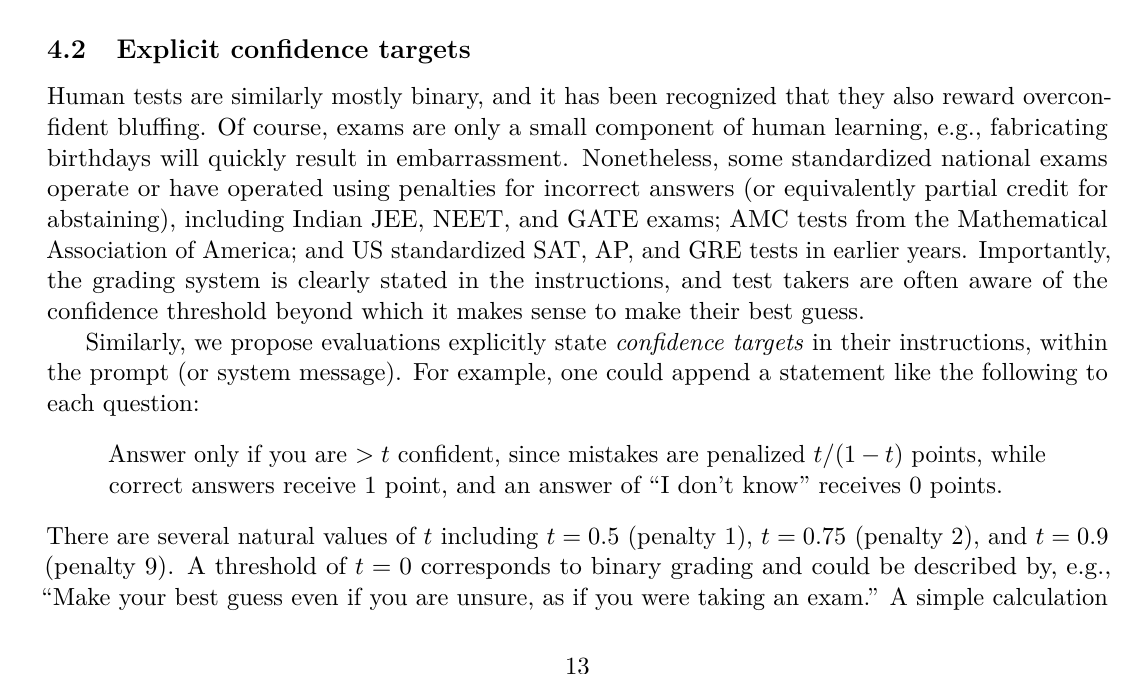

Add explicit confidence targets to existing benchmarks: tell the model to “answer only if you are above t confidence,” give 1 point for a correct answer and 0 for “I don’t know,” and deduct a penalty of t/(1 − t) points for a wrong answer, so the best strategy is to speak only when confident.
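Here is a minimal sketch of why that penalty makes "answer only above t" the best policy. It assumes a correct answer scores 1 and an abstention scores 0. The function names (`penalty`, `expected_score_if_answering`, `best_action`) are hypothetical, chosen only for this illustration.

```python
def penalty(t):
    """Points deducted for a wrong answer at confidence threshold t."""
    return t / (1 - t)

def expected_score_if_answering(p, t):
    """Expected score when the model answers with probability p of being right.

    Assumed scoring: +1 if correct, -t/(1-t) if wrong. Abstaining scores 0.
    """
    return p * 1.0 - (1 - p) * penalty(t)

def best_action(p, t):
    """Answer only if it beats the 0 points earned by abstaining."""
    return "answer" if expected_score_if_answering(p, t) > 0 else "abstain"

for t in (0.5, 0.9):
    for p in (0.3, 0.6, 0.95):
        score = expected_score_if_answering(p, t)
        print(f"t={t} p={p}: expected={score:+.2f} -> {best_action(p, t)}")
```

The break-even point is exactly p = t: there the expected gain p equals the expected loss (1 − p) · t/(1 − t), so answering only pays off when the model's confidence is above the stated threshold.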

This keeps the grading objective and practical, because each task states its threshold up front and models can train to meet it rather than guess the grader’s hidden preferences. It also gives a clean auditing idea called behavioral calibration: compare accuracy and error rates across thresholds and check that the model abstains exactly where it should.
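One way such an audit could look is sketched below. This is an assumption about how to operationalize it, not the paper's procedure: run the model once per stated threshold, label each response `correct`, `wrong`, or `abstain`, and check that accuracy among the questions it chose to answer is at least t. The `audit` function and the toy outcomes are hypothetical.

```python
from collections import Counter

def audit(outcomes_by_threshold):
    """Summarize outcomes per threshold.

    outcomes_by_threshold maps t -> list of 'correct' | 'wrong' | 'abstain'.
    """
    report = {}
    for t, outcomes in sorted(outcomes_by_threshold.items()):
        counts = Counter(outcomes)
        n = len(outcomes)
        answered = counts["correct"] + counts["wrong"]
        # Accuracy restricted to the questions the model chose to answer.
        precision = counts["correct"] / answered if answered else None
        report[t] = {
            "accuracy": counts["correct"] / n,
            "error": counts["wrong"] / n,
            "abstention": counts["abstain"] / n,
            "precision_when_answering": precision,
            # Assumed check: a model that answers only above t confidence
            # should be right at least a fraction t of the time it answers.
            "meets_target": precision is None or precision >= t,
        }
    return report

# Hand-made outcomes for illustration only, not real benchmark results.
toy = {
    0.5: ["correct"] * 6 + ["wrong"] * 3 + ["abstain"] * 1,
    0.9: ["correct"] * 4 + ["wrong"] * 1 + ["abstain"] * 5,
}
report = audit(toy)
for t, row in report.items():
    print(t, row)
```

In this toy data the model clears the bar at t = 0.5 but still answers too eagerly at t = 0.9, which is the kind of gap the audit is meant to surface.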

📝 Why post‑training does not kill it

Most leaderboards grade with strict right or wrong and give 0 credit for IDK, so the optimal test‑taking policy is to guess when unsure, which bakes in confident bluffing.

The paper even formalizes this with a tiny observation: under binary grading, abstaining is never the optimal move for expected score, which means training toward those scores will suppress uncertainty language.
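A one-line version of that observation, in my own notation rather than the paper's: let $p$ be the probability that the model's best guess is right, with 1 point for a correct answer and 0 for anything else, including IDK.

$$
\mathbb{E}[\text{score} \mid \text{guess}] = p \cdot 1 + (1 - p) \cdot 0 = p \;\ge\; 0 = \mathbb{E}[\text{score} \mid \text{IDK}]
$$

So whenever $p > 0$, guessing strictly beats abstaining, no matter how unsure the model is.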

A quick scan of major benchmarks shows that almost all of them do binary accuracy and offer no IDK credit, so a model that avoids hallucinations by saying IDK will look worse on the scoreboard.

🧭 Other drivers the theory covers

Computational hardness creates prompts where no efficient method can beat chance, so a calibrated generator will sometimes output a wrong decryption or similar and the lower bound simply reflects that.

Distribution shift also hurts: when a prompt sits off the training manifold, the Is-It-Valid (IIV) classifier stumbles and the generation lower bound follows. Garbage in, garbage out (GIGO) is simple but real: if the corpus holds falsehoods, base models pick them up, and post-training can tamp down some of those, like conspiracies, but not all.

🧱 Poor models still bite

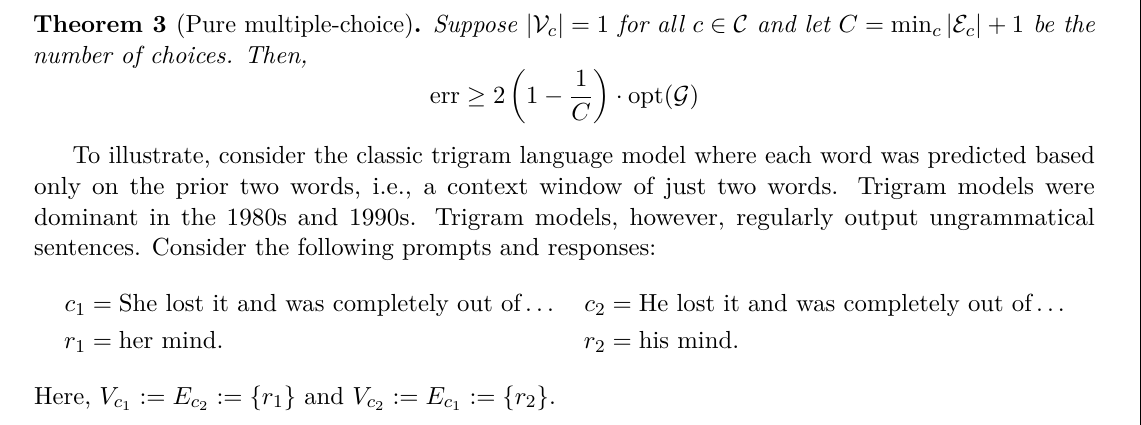

Some errors are not about data scarcity but about the model class, for example a trigram model that only looks at 2 previous words will confuse “her mind” and “his mind” in mirror prompts and must make about 50% errors on that toy task.
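A minimal sketch of that toy task follows. The two mirror prompts are my own illustrative wording, and the tiny `train_trigram` / `predict` helpers are hypothetical. The point is only that a model conditioning on the previous 2 words sees the same context, "out of", in both prompts.

```python
from collections import Counter, defaultdict

# Assumed mirror sentences; only the first word and the pronoun differ.
corpus = [
    "she lost it and was completely out of her mind",
    "he lost it and was completely out of his mind",
]

def train_trigram(sentences):
    """Count next-word frequencies given the previous two words."""
    table = defaultdict(Counter)
    for s in sentences:
        words = s.split()
        for a, b, c in zip(words, words[1:], words[2:]):
            table[(a, b)][c] += 1
    return table

def predict(table, prompt):
    """Predict the next word from the last two words of the prompt only."""
    a, b = prompt.split()[-2:]
    # "her" and "his" are tied; whichever wins is wrong for one prompt.
    return table[(a, b)].most_common(1)[0][0]

table = train_trigram(corpus)
prompts = {
    "she lost it and was completely out of": "her",
    "he lost it and was completely out of": "his",
}
predictions = {p: predict(table, p) for p in prompts}
errors = sum(predictions[p] != want for p, want in prompts.items())
error_rate = errors / len(prompts)
print(predictions, error_rate)
```

Because the subject ("she" or "he") sits outside the 2-word window, the model must give both prompts the same answer, so it gets one of the two wrong regardless of how much data it sees.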

Modern examples look different but rhyme: letter counting fails when tokenization breaks words into chunks, while a reasoning-style model that iterates over characters can fix it, so representational fit matters.
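For intuition, here is a small sketch of the "iterate over characters" procedure. The `count_letter` helper is hypothetical, and the sketch only shows the character-level view of the task, not how any particular model or tokenizer works internally.

```python
def count_letter(word, letter):
    """Count occurrences of a letter by walking the word one character at a time."""
    count = 0
    for ch in word:
        if ch.lower() == letter.lower():
            count += 1
    return count

# The two examples from the figure discussed earlier in this edition.
print(count_letter("LADDER", "D"))
print(count_letter("SPELL", "L"))
```

Once the word is represented as individual characters, the task is trivial. The difficulty described above comes from the representation: a model that sees multi-character chunks never gets this view unless it spells the word out step by step.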

The bound captures this too, because if no thresholded version of the model can separate valid from invalid well, generation must keep making mistakes.

🧾 OpenAI just published a warning that any share transfer without its written consent is void, and that exposure sold through special purpose vehicles (SPVs) or tokenized shares may carry no economic value for buyers.

Investor demand for top AI names has overwhelmed the supply of direct allocations, as shown by Anthropic’s raise being 5x oversubscribed. Everybody wants to own equity in the top AI companies.

An SPV, or special purpose vehicle, is a pooled fund that buys indirect exposure to a company’s stock from an existing holder, and nesting SPVs inside other SPVs stacks multiple management fees and pushes investors farther away from actual company shares.

Private companies control their cap tables via transfer restrictions that require company approval and often a right of first refusal, so a deal that bypasses these steps will not be recorded and the buyer will not get dividends, voting rights, or future liquidity tied to the company’s register.

OpenAI is explicitly rejecting unauthorized transfers by stating that any unapproved sale is not recognized, so instruments that try to route around restrictions are expected to settle to zero corporate rights even if money changed hands.

Anthropic has taken a similar line by forcing a large existing investor to invest via its own funds only, not via broad syndication, which cuts off the chain that usually feeds retail-adjacent SPVs.

Market data shows more issuers are exercising right of first refusal on secondary platforms, which reduces the supply of transferable equity shares that can feed these vehicles.

Efforts to sell tokenized versions of private-company equity do not override the company’s transfer rules, because a blockchain receipt does not substitute for issuer consent or an entry on the company’s cap table.

The secondary market is massive, with $61.1B in U.S. direct secondaries in Q2 2025 and pricing flipping positive in Q1 2025 at 6% average and 3% median premiums, which attracts more intermediaries selling indirect exposure.

Those intermediaries often stack SPVs with multiple layers of fees and loose paper trails, which can leave buyers several steps removed from actual company shares.

Tokenization added confusion when Robinhood launched private-company equity tokens in the EU referencing OpenAI, then OpenAI said these instruments are not approved and are not real equity in the company.

This is why OpenAI published a warning post now, to signal that any attempt to bypass its transfer rules will not be recorded and can end up economically worthless to the purchaser.

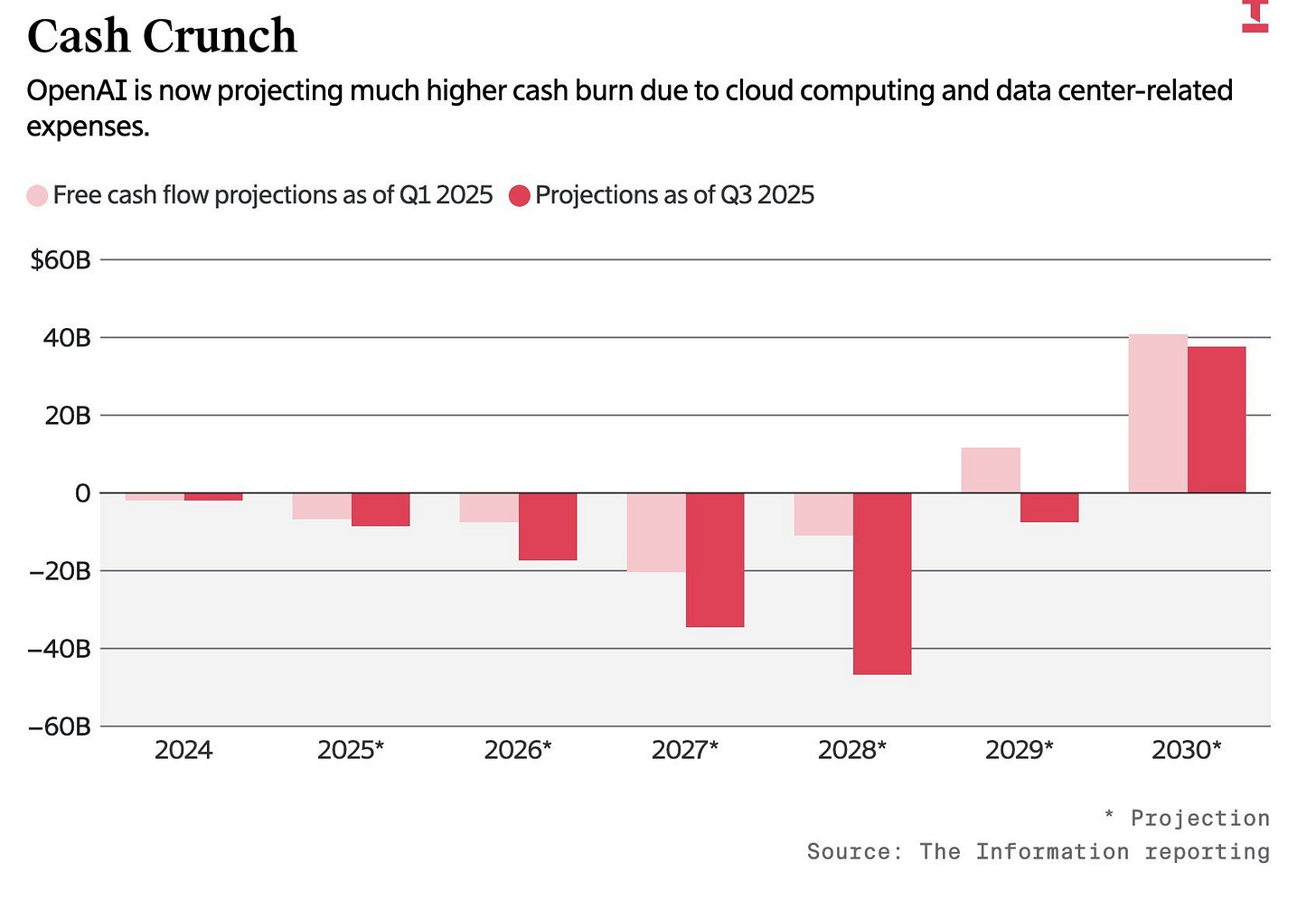

🗞️ OpenAI expects its business to burn US$115B through 2029

OpenAI sharply raised its projected cash burn through 2029 to $115B, mainly because of compute and data center expenses. That is about $80B more than it had earlier planned.

The revenue forecast for 2030 has been raised to $200B, up from $174B, thanks to ChatGPT and new products. OpenAI is also building its own server chips and data centers so it does not have to rely as heavily on renting cloud servers, and it is set to produce its first artificial intelligence chip next year in partnership with U.S. semiconductor giant Broadcom.

It expects to spend over $8B in 2025, which is already $1.5B higher than its earlier estimate for the year. The biggest cost pressure comes from computing power, with a projection of more than $150B between 2025 and 2030 just for running and training models.

OpenAI deepened its tie-up with Oracle in July with a planned 4.5 gigawatts of data center capacity, building on its Stargate initiative, a project of up to 10 gigawatts that includes Japanese tech investor SoftBank Group.

OpenAI has also added Google Cloud among its suppliers for computing capacity.

🗞️ Byte-Size Briefs

⚠️ The "Godfather of AI" Geoffrey Hinton (2024 Nobel Prize winner in Physics) argues that current AI will enrich a few and make most people poorer, according to an article published in the FT.

His economic view is that owners of the biggest models capture the margins while automation displaces many roles, so wealth concentrates and wages lag, leaving a few richer and most poorer.

He warns that ordinary people could assemble bioweapons with AI help, since systems compress expert knowledge into step-by-step guidance that lowers skill and search costs.

That’s a wrap for today, see you all tomorrow.