🧑💻 OpenAI Introduces GPT-5-Codex: An Advanced Version of GPT-5 Further Optimized for Agentic Coding in Codex

GPT-5-Codex for coding agents, Microsoft-OpenAI deal update, Google’s faster LLM method, privacy-safe VaultGemma, and Hugging Face’s new VLM dataset.

Read time: 12 min

(I publish this newsletter daily. Noise-free, actionable, applied-AI developments only.)

⚡In today’s Edition (16-Sept-2025):

🧑💻 OpenAI Introduces GPT-5-Codex: An Advanced Version of GPT-5 Further Optimized for Agentic Coding in Codex

💸: Microsoft and OpenAI struck a truce to extend their partnership, clearing a major obstacle to OpenAI’s shift into a for-profit structure.

🧩 Fantastic new method proposed by Google Research for cutting LLM latency and cost while keeping quality high: Speculative Cascades.

⚖️ Google Research introduced VaultGemma 1B, the Largest and Most Capable Open Model (1B parameters) Trained from Scratch with Differential Privacy

🧮 Hugging Face Unveils FineVision: A Breakthrough in Vision-Language Model Datasets

🧑💻 OpenAI Introduces GPT-5-Codex: An Advanced Version of GPT-5 Further Optimized for Agentic Coding in Codex

🧑💻 OpenAI released GPT-5-Codex, a new, fine-tuned version of its GPT-5 model designed specifically for software engineering tasks in its AI-powered coding assistant, Codex.

Today's release makes Codex faster, steadier, and better at agentic coding across CLI, IDE, web, and GitHub.

Codex, basically OpenAI’s answer to GitHub Copilot, Claude Code, and similar AI coding agents, is getting a major update today. It adds long-running autonomy, sharper reviews, and big infra speedups so teams can hand off real tasks with less babysitting.

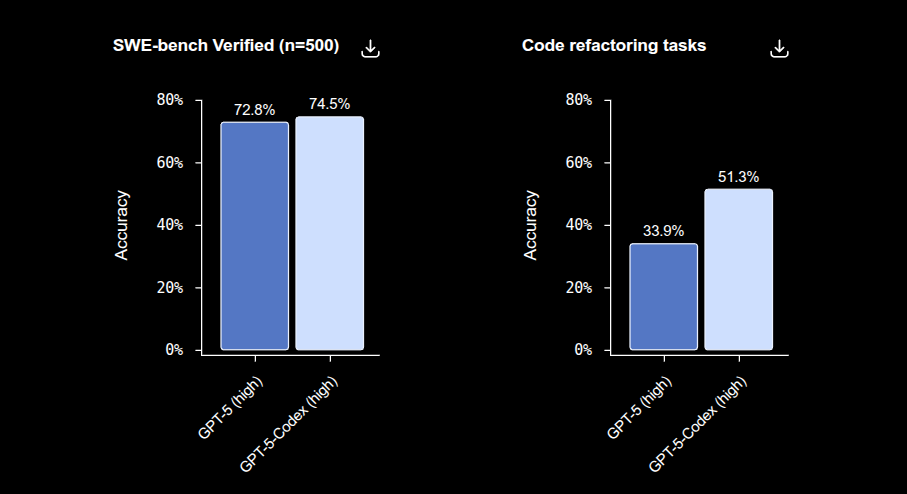

The model adjusts its thinking time to task difficulty and has stayed on task for 7+ hours, iterating, testing, and fixing. On benchmarks it scores 74.5% vs 72.8% for GPT-5 on the 500 tasks of SWE-bench Verified, and 51.3% vs 33.9% on large refactoring jobs.

Compared with GPT-5, it uses 93.7% fewer tokens on the easiest 10% of turns and 102.2% more on the hardest 10% to push quality. For PR reviews it checks intent against diffs, reads the codebase, and runs tests. The result is 4.4% incorrect comments vs 13.7%, 52.4% high-impact comments vs 39.4%, and 0.93 vs 1.32 comments per PR.
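To put the review numbers above on one scale, here is a quick back-of-the-envelope calculation of the relative changes. The metric labels are mine, and the second number in each pair is treated as the baseline.

```python
def relative_change(new: float, baseline: float) -> float:
    """Relative change of `new` versus `baseline`, in percent."""
    return (new - baseline) / baseline * 100.0


# (new, baseline) pairs copied from the figures quoted above.
review_metrics = {
    "incorrect comments (%)": (4.4, 13.7),
    "high-impact comments (%)": (52.4, 39.4),
    "comments per PR": (0.93, 1.32),
}

for name, (new, baseline) in review_metrics.items():
    print(f"{name}: {relative_change(new, baseline):+.1f}%")
```

Read that way, the update means roughly two-thirds fewer incorrect comments, about a third more high-impact ones, and close to 30% fewer comments per PR.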

You can use it in the Codex CLI, the Codex IDE extension for VS Code and Cursor, and Codex cloud that ties into GitHub and the ChatGPT app. Cloud tasks complete faster after container caching cut median times by 90%, with automatic environment setup and on demand dependency installs.

The open source CLI now supports images, a to do tracker, stronger tool use with web search and MCP, and clear approval levels. The IDE extension brings context from open files, previews local edits, and lets work move between local and cloud without losing state.

Security defaults keep network access off in sandboxed runs and require approvals for risky commands, with domain allow lists available in cloud. Availability spans ChatGPT Plus, Pro, Business, Edu, and Enterprise with extra credits for Business, shared pools for Enterprise, and API support coming soon.

It is not a standalone option in the ChatGPT model picker and is not in the API yet. It lives inside Codex for now, with API access planned. Use GPT-5 for general work and writing, and use GPT-5-Codex only for coding tasks in Codex or Codex-like setups.

So reach for GPT-5-Codex when you want automated PR reviews, bug fixes, new features, large refactors, test generation, or any task where the agent should run code and verify results.

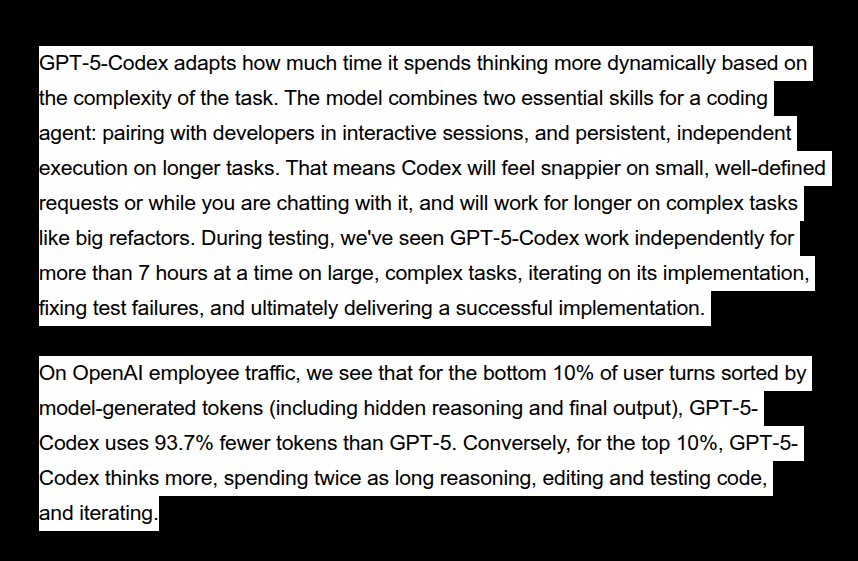

The coolest part of OpenAI's GPT-5-Codex release blog is how the model adjusts its reasoning dynamically to save tokens while still handling complex problems.

The standout idea is adaptive reasoning effort, where the model decides how much thinking to spend based on how hard the task actually is.

On quick bug fixes it moves fast and spends few tokens, so the chat loop stays responsive and cheap. On big refactors it allocates more steps, runs code, reads failures, retries, and keeps going until checks pass, which mirrors how senior engineers work.

This is not a router swapping models. It is one model that can scale depth mid-task when the plan needs more effort. That shift cuts wasted compute on easy turns and concentrates budget where it buys correctness.

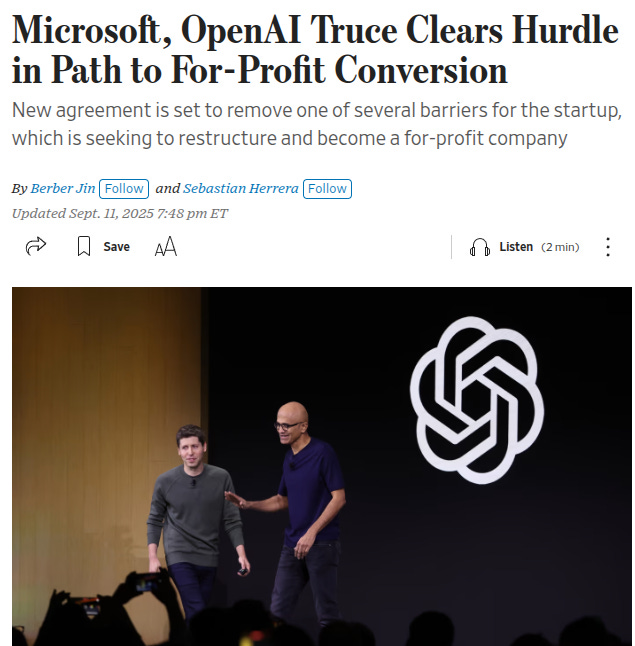

💸: Microsoft and OpenAI struck a truce to extend their partnership, clearing a major obstacle to OpenAI’s shift into a for-profit structure.

In the proposed setup, Microsoft and the OpenAI nonprofit would each start with about 30% of the new company, with the rest going to employees and investors. The “new company” is the for-profit entity OpenAI is trying to create as part of its restructuring.

OpenAI plans to keep nonprofit control and give that nonprofit an endowment stake valued at $100B+, which would be huge on paper but the timeline to turn it into usable grants is unclear. California and Delaware attorneys general are reviewing the plan, and OpenAI has told investors it aims to finish the restructure by the end of the year or risk losing $19B in funding.

Talks were tense because OpenAI pushed for freedom to sell on other clouds, while Microsoft pushed to keep exclusive access to OpenAI’s newest technology. One sticking point was the trigger tied to artificial general intelligence, where Microsoft floated a higher bar called artificial superintelligence to keep more access even if OpenAI hits major capability milestones.

Microsoft has hedged anyway by testing in-house models for Copilot, using Anthropic for parts of 365 via Amazon Web Services, hosting xAI models, and shipping Windows features on non-OpenAI models. Microsoft shares climbed about 2% after hours following the news.

This move is meant to replace the current structure, which pays out through profit-sharing units that investors dislike and that block a public listing, with a cleaner corporate setup. It's a great win for OpenAI because it needed Microsoft’s nod to file with regulators.

🧩 Google Research has unveiled a groundbreaking approach to optimizing large language model inference: speculative cascades.

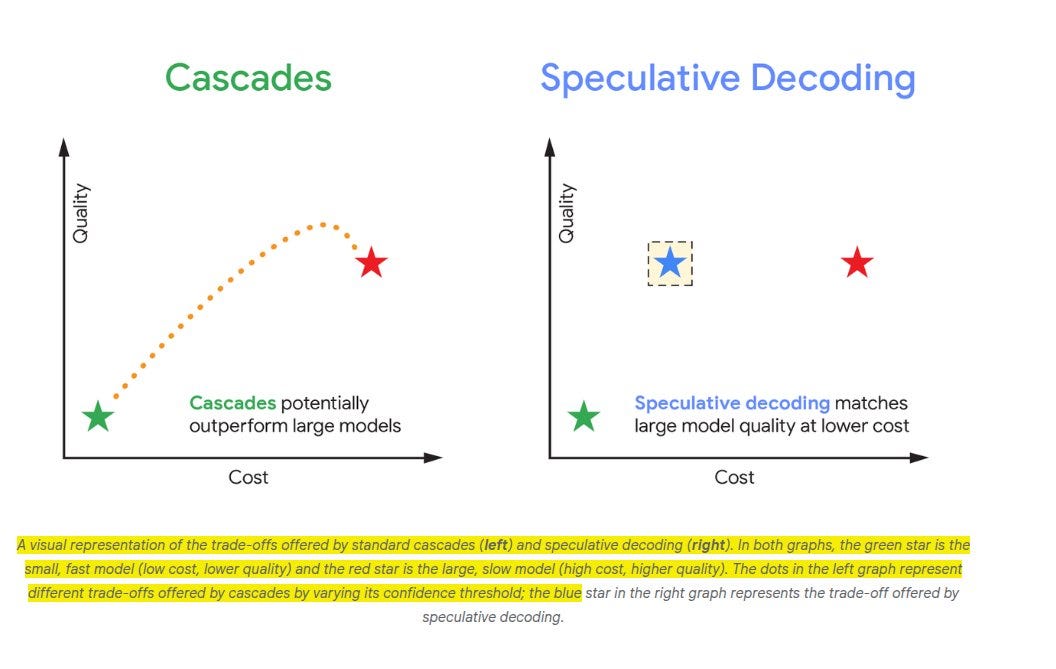

The method lets small and large models run together under a flexible token-by-token rule, giving faster speed and lower cost without losing quality. It combines the strengths of cascades and speculative decoding without inheriting their worst trade-offs: a small model drafts, a large model checks, and a deferral rule decides what to keep.

⚡ The problem with speculative decoding is that the large model still verifies every drafted token to ensure the final text matches what it would have produced alone. If the small model chooses a perfectly fine wording that the large model would phrase differently, the draft gets rejected early and the speedup disappears, while memory use can rise. The upside is fidelity to the large model’s text. The downside is less compute saving and sensitivity to tiny token mismatches.

🧩 Speculative cascades keep the small model drafting and the large model verifying in parallel, but swap strict token matching for a flexible deferral rule that accepts good small model tokens even when wording differs. The deferral rule can look at confidence from the small model, confidence from the large model, their difference, or a simple list of acceptable next tokens from the large model. This turns verification into a policy decision, not a pass fail equality test, so more of the small model’s safe work survives. Parallel verification removes the sequential bottleneck, so the system moves forward as soon as tokens are accepted instead of restarting the large model after a full small model pass. Accepting chunks of small model text increases tokens per call to the large model, which is the knob that actually improves throughput at fixed quality.
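To make the deferral-rule options above concrete, here is a minimal sketch of three of them over toy next-token distributions. The function names, thresholds, and probabilities are illustrative assumptions, not the formulation from the Google Research work.

```python
from typing import Dict

Dist = Dict[str, float]  # token -> probability


def top_token(dist: Dist) -> str:
    """Most likely token under a distribution."""
    return max(dist, key=dist.get)


def defer_on_small_confidence(p_small: Dist, p_large: Dist, threshold: float = 0.6) -> bool:
    """Defer when the small model is not confident in its own top token."""
    return max(p_small.values()) < threshold


def defer_on_confidence_gap(p_small: Dist, p_large: Dist, margin: float = 0.2) -> bool:
    """Defer when the large model is more confident than the small one by over `margin`."""
    return max(p_large.values()) - max(p_small.values()) > margin


def defer_unless_in_top_k(p_small: Dist, p_large: Dist, k: int = 2) -> bool:
    """Defer unless the small model's pick is among the large model's k likeliest tokens."""
    top_k = sorted(p_large, key=p_large.get, reverse=True)[:k]
    return top_token(p_small) not in top_k


# Toy distributions (made up): the two models disagree on wording, not on substance.
p_small = {"the": 0.7, "a": 0.2, "one": 0.1}
p_large = {"a": 0.5, "the": 0.4, "one": 0.1}

print(defer_on_small_confidence(p_small, p_large))   # False: small model is confident
print(defer_on_confidence_gap(p_small, p_large))     # False: large model is not more certain
print(defer_unless_in_top_k(p_small, p_large, k=1))  # True: k=1 behaves like strict matching
print(defer_unless_in_top_k(p_small, p_large, k=2))  # False: "the" is an acceptable token
```

With `k=1` the list rule collapses back to exact matching, which is one way to see why the flexible variants let more of the draft survive.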

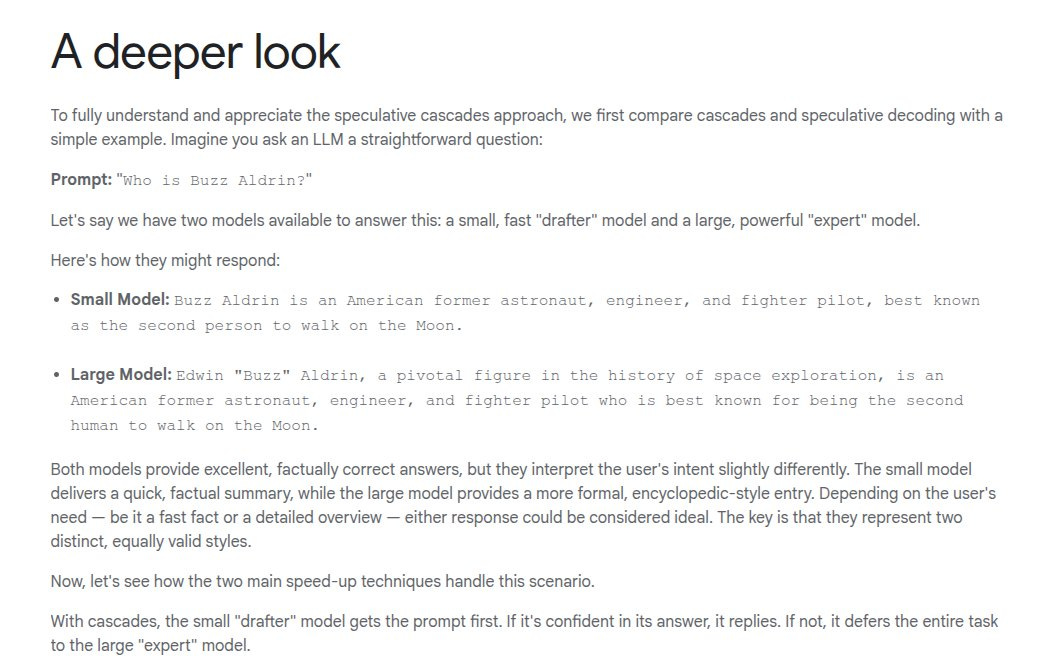

🧪 A simple example makes the failure mode of strict matching clear. The small model begins with “Buzz Aldrin” while the large model prefers starting with “Edwin”, so strict speculative decoding rejects immediately even though both lead to correct answers. With speculative cascades, the deferral rule can accept the small model’s start because it is valid and confident, so the system keeps the speedup rather than throwing it away for style. This is the core benefit: the system gets correctness from the large model when it matters and keeps harmless differences from the small model when it does not.
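The “Buzz” versus “Edwin” case can be sketched in a few lines. This is a simplification under stated assumptions: strict verification is modeled as greedy token matching (real speculative decoding uses a probabilistic accept/reject step), and all probabilities are invented for illustration.

```python
from typing import Dict

Dist = Dict[str, float]  # token -> probability


def strict_accept(small_token: str, p_large: Dist) -> bool:
    """Greedy matching: accept only if the draft equals the large model's top token."""
    return small_token == max(p_large, key=p_large.get)


def flexible_accept(small_token: str, p_small: Dist, p_large: Dist,
                    k: int = 2, min_conf: float = 0.5) -> bool:
    """Accept a confident draft token that is among the large model's top-k tokens."""
    top_k = sorted(p_large, key=p_large.get, reverse=True)[:k]
    return small_token in top_k and p_small[small_token] >= min_conf


# Invented first-token distributions for the astronaut question.
p_small = {"Buzz": 0.80, "Edwin": 0.15, "Neil": 0.05}
p_large = {"Edwin": 0.55, "Buzz": 0.40, "Neil": 0.05}

draft = max(p_small, key=p_small.get)  # the small model drafts "Buzz"

print(strict_accept(draft, p_large))             # False: rejected for wording alone
print(flexible_accept(draft, p_small, p_large))  # True: valid and confident, so kept
```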

🧮 On a grade school word problem that asks about 30 sheep, with 1 kg and 2 kg milk yields across halves, the method uses the small model for obvious connective tokens and defers on the crucial arithmetic tokens, reaching a correct total faster than strict verification. This shows the mechanism at work: keep the small model’s non-risky glue words and spend large model effort only when the math step needs correction.

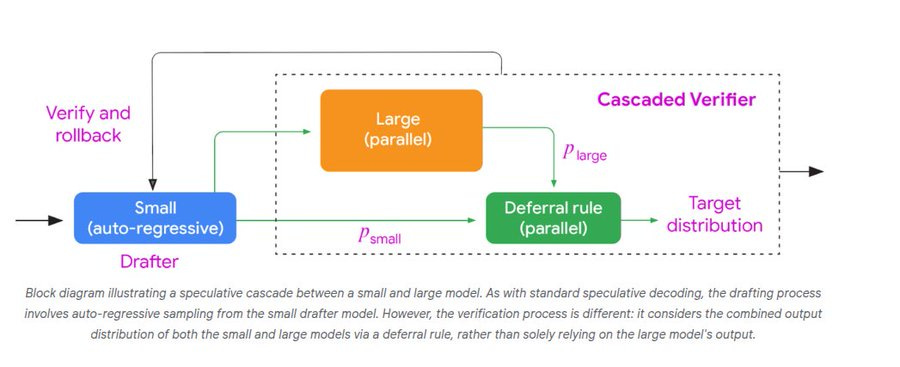

Here is how speculative cascades actually work in practice. The process begins with a small model (drafter) that generates tokens step by step. This is cheap and fast, but its output is not always perfect.

At the same time, a large model runs in parallel. It does not fully generate the output but instead provides probabilities of what it would produce.

Then comes the deferral rule. Instead of blindly following the large model, it looks at both distributions — from the small model and the large model — and decides whether to accept the small model’s token, defer to the large model, or roll back.

This means the system is not forced to throw away the small model’s work just because it looks different. If the small model is confident and still within the large model’s range of acceptable outputs, its token is accepted. If the token looks risky, the large model takes over. The end result is a target distribution that keeps the speed of the small model while preserving the reliability of the large one.

"Below, we visualize the behaviour of speculative cascading versus speculative decoding on a prompt from the GSM8K dataset. The prompt asks, “Mary has 30 sheep. She gets 1 kg of milk from half of them and 2 kg of milk from the other half every day. How much milk does she collect every day?” By carefully leveraging the small model's output on certain tokens, speculative cascading can reach a correct solution faster than regular speculative decoding."

🔧 Under the hood, the drafter is a normal autoregressive small model that proposes the next few tokens, and the target large model scores those tokens in parallel to supply probabilities, not just replacements. The deferral rule consumes both distributions and decides token by token to accept, defer, or rollback, which keeps the stream moving while guarding hard steps. Because verification is probabilistic rather than exact match, safe tokens flow through while risky tokens trigger deferral, which balances speed and accuracy.
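The accept, defer, and roll-back flow described above can be sketched as a single pass over one drafted block. Everything here is a toy under assumptions: the distributions are hand-written, `verify_block` is a hypothetical helper name, and the deferral rule is a simple small-model confidence threshold.

```python
from typing import Callable, Dict, List, Tuple

Dist = Dict[str, float]  # token -> probability


def verify_block(draft_dists: List[Dist], target_dists: List[Dist],
                 should_defer: Callable[[Dist, Dist], bool]) -> Tuple[List[str], int]:
    """Walk a drafted block left to right.

    Keep the drafter's top token until the rule defers. At the first deferral,
    emit the target model's top token instead and drop the rest of the draft,
    since it was conditioned on a token that was not kept (the roll back).
    Returns the emitted tokens and how many drafted tokens were accepted.
    """
    emitted: List[str] = []
    for i, (p_small, p_large) in enumerate(zip(draft_dists, target_dists)):
        if should_defer(p_small, p_large):
            emitted.append(max(p_large, key=p_large.get))
            return emitted, i
        emitted.append(max(p_small, key=p_small.get))
    return emitted, len(draft_dists)


# Hand-written toy block: glue words are easy, the arithmetic token is not.
draft_dists = [
    {"Half": 0.90, "Each": 0.10},
    {"of": 0.95, "the": 0.05},
    {"30": 0.90, "15": 0.10},
    {"is": 0.90, "=": 0.10},
    {"20": 0.45, "15": 0.40, "10": 0.15},  # drafter is unsure about the math
    {"sheep": 0.90, ".": 0.10},
]
target_dists = [
    {"Half": 0.80, "Each": 0.20},
    {"of": 0.90, "the": 0.10},
    {"30": 0.95, "15": 0.05},
    {"is": 0.85, "=": 0.15},
    {"15": 0.95, "20": 0.05},
    {"sheep": 0.90, ".": 0.10},
]


def low_confidence(p_small: Dist, p_large: Dist) -> bool:
    """Assumed rule: defer when the drafter's top probability is below 0.6."""
    return max(p_small.values()) < 0.6


tokens, accepted = verify_block(draft_dists, target_dists, low_confidence)
print(tokens, accepted)
```

Four cheap tokens pass through untouched, and the large model only steps in on the one token where the drafter hesitates.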

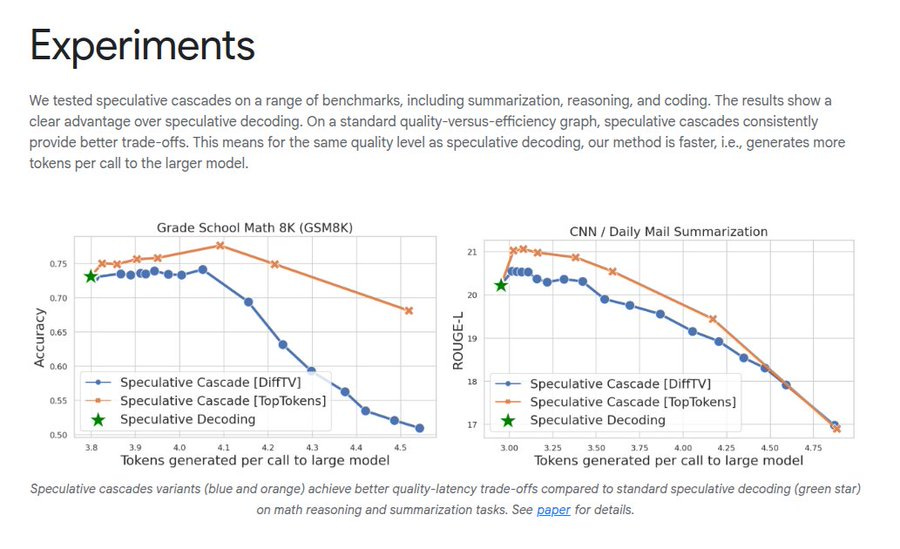

📈 Across summarization, translation, reasoning, coding, and question answering, variants of speculative cascades deliver better cost quality trade offs than plain cascades or plain speculative decoding, often with higher speedups at the same quality. The team reports the method as producing more tokens per large model call for the same quality curve and shifting the quality latency frontier in math reasoning and summarization. The experiments span both Gemma and T5 families, indicating the idea is model agnostic and acts at the inference policy layer rather than the architecture layer.

🧭 Tuning is straightforward: push the deferral rule to be lenient when speed matters or strict when quality is critical. Operationally it is a drop-in replacement for strict speculative verification: reuse the same drafter-target pair and change the verifier to a policy that reads both distributions. Safety-sensitive deployments can whitelist top tokens from the large model for acceptance, while budget-sensitive deployments can accept by small model confidence unless the large model is far more certain.
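As a rough illustration of that lenient-versus-strict knob, the sketch below sweeps a confidence threshold over a handful of made-up small-model confidences and reports how much of the draft would be accepted. The numbers are invented and the single-threshold rule is only one of the possible policies.

```python
from typing import List


def acceptance_rate(small_confidences: List[float], threshold: float) -> float:
    """Fraction of drafted tokens kept when accepting at or above `threshold`."""
    accepted = sum(1 for c in small_confidences if c >= threshold)
    return accepted / len(small_confidences)


# Invented per-token confidences from a small drafter.
confidences = [0.95, 0.9, 0.85, 0.7, 0.6, 0.55, 0.4, 0.3]

for threshold in (0.5, 0.7, 0.9):
    rate = acceptance_rate(confidences, threshold)
    print(f"threshold={threshold}: {rate:.0%} of draft tokens accepted")
```

A lower threshold keeps more drafted tokens (faster, cheaper), while a higher one hands more tokens to the large model (slower, safer).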

🧷 The key insight is compact: combine speculative drafting with cascade-style deferral at the token level so cheap tokens flow and expensive tokens get escalated. This moves beyond a single global confidence threshold and avoids the brittle exact-match requirement that cancels gains on harmless wording differences. The result is lower cost at the same or better quality, with parallelism instead of sequential waits and with policy control instead of rigid equality tests.

⚖️ Google Research introduced VaultGemma 1B, the Largest and Most Capable Open Model (1B parameters) Trained from Scratch with Differential Privacy

Training with differential privacy makes it suitable for regulated industries such as finance and healthcare. The model mitigates the risks of misinformation and bias amplification, offering a blueprint for secure, ethical AI innovation that is potentially scalable to trillions of parameters.

Differential privacy makes a trained model behave almost the same whether any 1 person’s data is in the training set or not. It targets real privacy while keeping utility similar to non-private models from about 5 years ago, which sets a clear baseline for private training today.
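For readers who want the formal statement behind that description, the standard (ε, δ) definition of differential privacy reads as follows. It is the textbook definition, not something quoted from the VaultGemma announcement. Here M is the randomized training procedure, D and D′ are any two datasets that differ in one record, and S is any set of possible outputs:

$$
\Pr[M(D) \in S] \;\le\; e^{\varepsilon} \, \Pr[M(D') \in S] + \delta
$$

Smaller ε and δ mean the two cases are harder to tell apart, which is exactly the "behaves almost the same" property.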

Without this, the model can accidentally memorize and leak a phone number, an address, a password, or a weird one-off sentence that came from a single person. The core mechanism is DP-SGD (Differentially Private Stochastic Gradient Descent): first clip each example’s gradient so no single record can push the update too far, then add random Gaussian noise to the averaged gradient, then take the optimizer step.

DP-SGD = the normal training algorithm (SGD) + privacy steps (clipping + noise). More simply, DP-SGD is just a training rule that limits how much any single training example can change the model and then adds a tiny bit of randomness to hide that example’s presence. The team backed it with new scaling laws that map the trade-off between compute, data, privacy, and accuracy.
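The clip, noise, and step recipe can be sketched in plain Python. This is a minimal illustration assuming per-example gradients are already available as lists of floats. The function names and hyperparameters are mine, and nothing here reflects VaultGemma's actual training code.

```python
import math
import random
from typing import List

Vector = List[float]


def clip(grad: Vector, max_norm: float) -> Vector:
    """Scale a gradient down so its L2 norm is at most `max_norm`."""
    norm = math.sqrt(sum(g * g for g in grad))
    scale = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [g * scale for g in grad]


def dp_sgd_step(params: Vector, per_example_grads: List[Vector], lr: float,
                max_norm: float, noise_multiplier: float,
                rng: random.Random) -> Vector:
    """One DP-SGD update: clip each example, add Gaussian noise, average, step."""
    batch_size = len(per_example_grads)
    clipped = [clip(g, max_norm) for g in per_example_grads]
    summed = [sum(column) for column in zip(*clipped)]
    # Noise is added to the clipped sum and then averaged, which is equivalent
    # to adding noise with std (noise_multiplier * max_norm / batch_size) to the mean.
    sigma = noise_multiplier * max_norm
    noisy_mean = [(s + rng.gauss(0.0, sigma)) / batch_size for s in summed]
    return [p - lr * g for p, g in zip(params, noisy_mean)]


rng = random.Random(0)
grads = [[3.0, 4.0], [0.3, 0.4]]  # the first example would dominate without clipping
new_params = dp_sgd_step([0.0, 0.0], grads, lr=1.0, max_norm=1.0,
                         noise_multiplier=1.0, rng=rng)
print(new_params)
```

Clipping caps the first example's gradient at norm 1 before it is combined with the second, so no single record can dominate the update.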

PERFORMANCE:

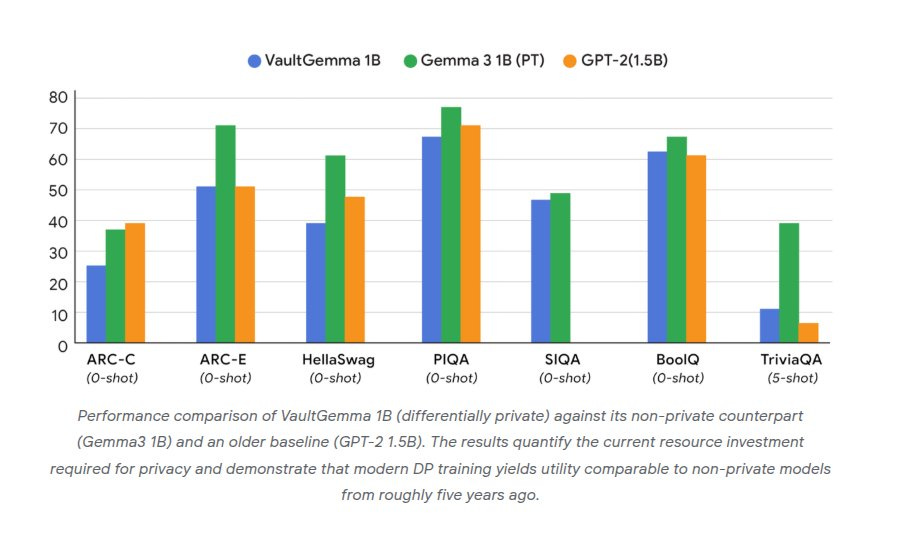

A 50-token prefix test finds no detectable memorization, which is the behavior expected when the privacy unit and accounting are set up correctly. On standard benchmarks, VaultGemma 1B lands near GPT-2 1.5B and behind its non-private Gemma 3 1B cousin, which quantifies the current utility gap for private training.
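A prefix test of this kind can be sketched as follows: feed the model the first 50 tokens of a training sequence and check whether it reproduces the true continuation. The `generate` callable is a hypothetical stand-in for a real model call, and the 50-token suffix length and exact-match criterion are assumptions of this sketch.

```python
from typing import Callable, List, Sequence

# Hypothetical signature: generate(prefix_tokens, num_new_tokens) -> new tokens
Generator = Callable[[List[int], int], List[int]]


def is_memorized(tokens: Sequence[int], generate: Generator,
                 prefix_len: int = 50, suffix_len: int = 50) -> bool:
    """True if the model reproduces the true suffix exactly from the prefix."""
    if len(tokens) < prefix_len + suffix_len:
        raise ValueError("sequence too short for the requested prefix and suffix")
    prefix = list(tokens[:prefix_len])
    true_suffix = list(tokens[prefix_len:prefix_len + suffix_len])
    return generate(prefix, suffix_len) == true_suffix


# Two stub "models" for illustration only.
training_doc = list(range(100))


def parrot(prefix: List[int], n: int) -> List[int]:
    """A model that has memorized the document and continues it verbatim."""
    return list(range(len(prefix), len(prefix) + n))


def private(prefix: List[int], n: int) -> List[int]:
    """A model whose continuation is unrelated to the training document."""
    return [0] * n


print(is_memorized(training_doc, parrot))   # True
print(is_memorized(training_doc, private))  # False
```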

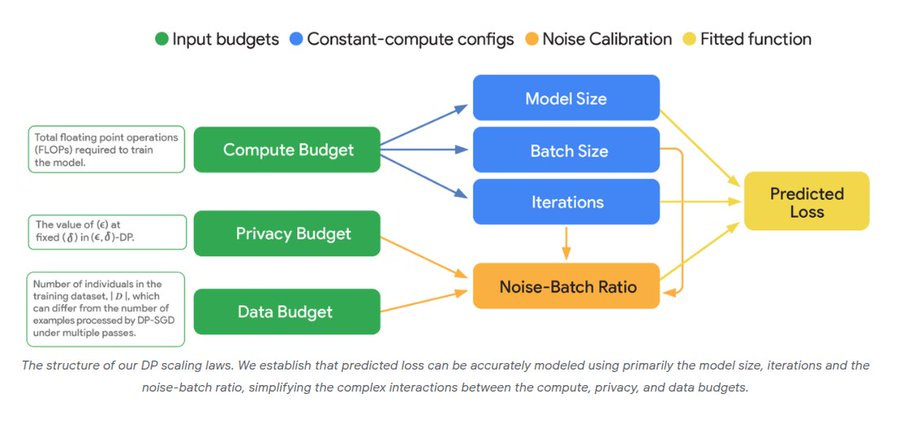

This image shows how differentially private training is controlled by different budgets and how they connect to final model quality. Each green box is a budget, basically a limit or resource you can spend. The compute budget is how much raw training work you can do, measured in FLOPs. The privacy budget is the maximum privacy loss you allow, measured by epsilon and delta. The data budget is how many people’s records are in your dataset.

These budgets flow into choices like model size, batch size, and number of iterations. Those are the knobs you set when you actually train. The orange block, noise-batch ratio, tells you how much random privacy noise you added compared to the real learning signal in each batch. This single ratio ends up explaining most of the training behavior under differential privacy. Finally, all these feed into the yellow block, predicted loss, which is basically how well the model is expected to perform on unseen data.

So the whole picture says: if you know your compute, privacy, and data budgets, then you can plan your training configuration, calibrate the noise, and use the scaling law to estimate model quality before even running the training.

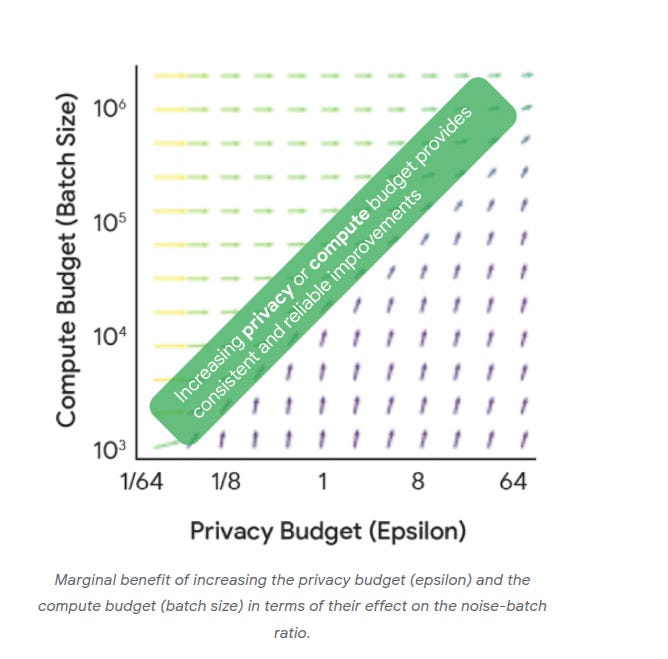

This graph is showing how privacy budget (epsilon) and compute budget (batch size) work together when training with differential privacy. On the x-axis is the privacy budget, which means how much privacy loss you are willing to allow. Higher epsilon means weaker privacy but less noise in training, so the model learns more easily.

On the y-axis is the compute budget, here shown as batch size. Bigger batches mean you average over more examples at once, which makes the noise less disruptive and keeps the updates cleaner.

The diagonal green stripe is saying that when you increase either the privacy budget or the compute budget, you get steady and reliable improvements in the noise-batch ratio. That ratio is the key measure of how much the added privacy noise drowns out the real learning signal.

So the message is very simple: if you want your differentially private model to train better, you can either give it more privacy budget (a bigger epsilon) or more compute (a bigger batch size). Both push the system in the same helpful direction.

VaultGemma centers everything on the noise-batch ratio, which compares the privacy noise added during training to the batch size, and this single knob explains most of the learning dynamics under privacy. The laws show a practical recipe: use much larger batches and often a smaller model than one would use without privacy, then tune iterations to hit the best loss for a fixed compute and data budget.
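A tiny numeric sketch shows why larger batches help. It assumes the noise-batch ratio is simply the standard deviation of the added Gaussian noise divided by the batch size, which is how I read the description above. The numbers are made up.

```python
def noise_batch_ratio(noise_std: float, batch_size: int) -> float:
    """Assumed definition: privacy noise standard deviation divided by batch size."""
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    return noise_std / batch_size


# Same (made-up) noise level, growing batch size.
for batch_size in (1024, 4096, 16384):
    ratio = noise_batch_ratio(8.0, batch_size)
    print(f"batch={batch_size}: ratio={ratio}")
```

With the noise level held fixed, each 4x increase in batch size cuts the ratio by 4x, so the same privacy noise disturbs the averaged update far less.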

There is a synergy insight: raising the privacy budget alone gives diminishing returns unless compute or tokens also go up, so budgets need to move together to improve accuracy. Training stability is a core headache under privacy since noise can spike loss and even cause divergence, so the configuration has to be picked from the scaling laws rather than by guesswork.

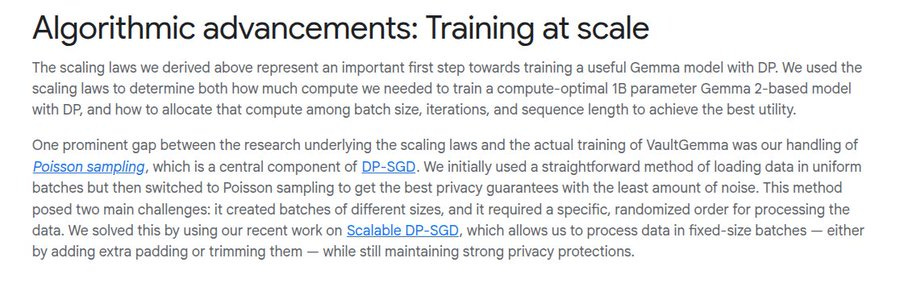

On the algorithm side, the team switched to Poisson sampling for tighter privacy accounting and paired it with Scalable DP-SGD so batches stay fixed size while still meeting the theory. The model uses a sequence-level guarantee with epsilon 2.0 and delta 1.1e-10 for sequences of 1024 tokens, which is a clear statement of what is protected in the training mix.
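To illustrate the sampling idea, here is a minimal sketch of Poisson sampling followed by a fixed-size batch. Padding and truncating to a fixed size is an assumption about how the batch shape is kept constant, and the helper names are hypothetical.

```python
import random
from typing import List, Optional


def poisson_sample(num_examples: int, rate: float, rng: random.Random) -> List[int]:
    """Include each example index independently with probability `rate`."""
    return [i for i in range(num_examples) if rng.random() < rate]


def to_fixed_size(batch: List[int], size: int) -> List[Optional[int]]:
    """Truncate or pad with None so downstream code always sees `size` slots.

    Padded slots stand for dummy examples that contribute no gradient.
    """
    trimmed = batch[:size]
    return trimmed + [None] * (size - len(trimmed))


rng = random.Random(0)
raw_batch = poisson_sample(num_examples=1000, rate=0.1, rng=rng)
fixed_batch = to_fixed_size(raw_batch, size=128)
print(len(raw_batch), len(fixed_batch))
```

The raw batch size varies from step to step (around 100 here on average), while the padded batch always has 128 slots, which is friendlier to accelerators.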

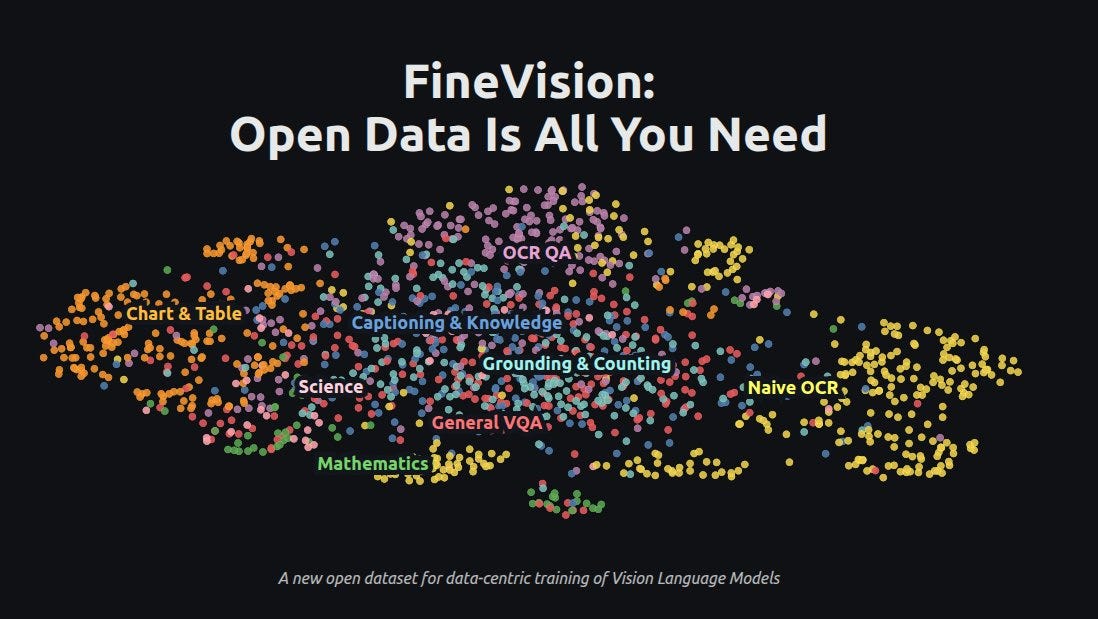

🧮 Hugging Face Unveils FineVision: A Breakthrough in Vision-Language Model Datasets

FineVision is a comprehensive dataset designed for Vision-Language Models (VLMs). It stands out as the largest curation of its kind, drawing from over 200 sources and offering unparalleled resources for researchers and developers.

17M unique images and 10B answer tokens.

Introduces new underrepresented categories like GUI navigation, pointing, and counting.

Covers 9 categories: Captioning & Knowledge, Chart & Table, General VQA, Grounding & Counting, Mathematics, Naive OCR, OCR QA, Science, Text-only.

Rated on 4 quality dimensions: formatting, relevance, visual dependency, and image-question correspondence.

Built by combining over 200 existing image-text sources, cleaning duplicates, normalizing formats, and even generating missing question-answer pairs in some cases.

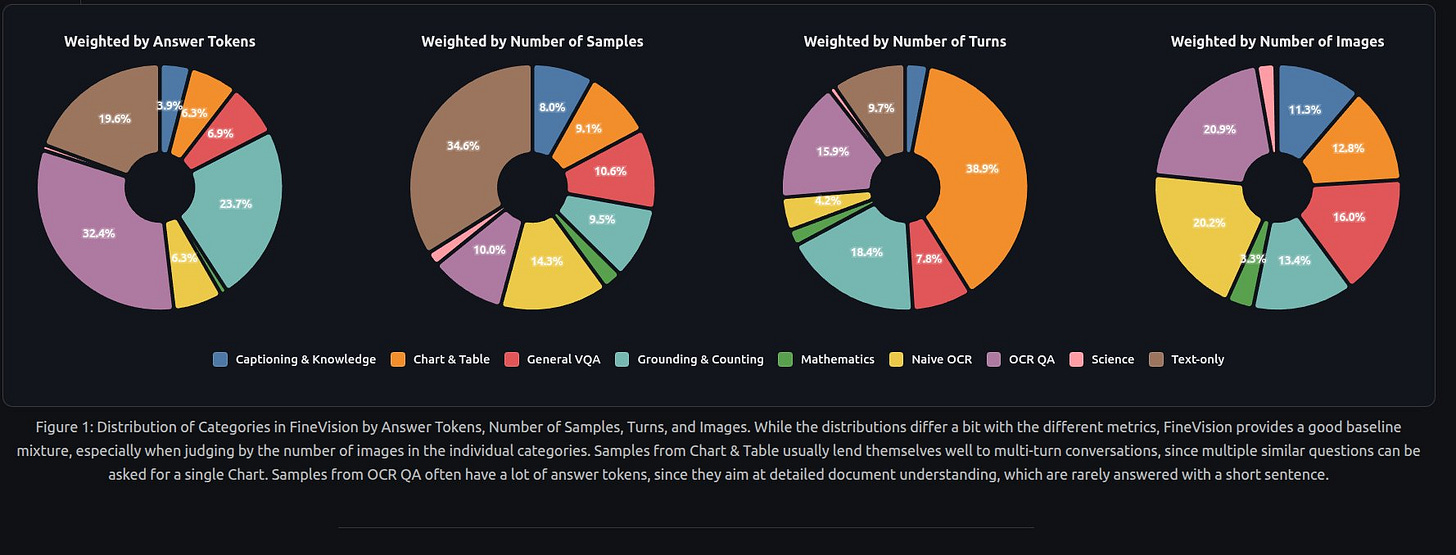

Each data sample is rated across 4 quality dimensions, such as relevance and visual dependency, using large models as judges, which lets researchers construct their own training mixtures. Tests show that FineVision is not only larger but also more diverse than alternatives like Cauldron, LLaVA-OneVision, or Cambrian-7M.
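Building a custom mixture from those ratings could look like the sketch below. The field names and the 1 to 5 score scale are hypothetical stand-ins rather than the dataset's actual schema, so check the dataset card before relying on them.

```python
from typing import Dict, Iterable, List

Sample = Dict[str, int]

# Hypothetical rating fields, one per quality dimension.
QUALITY_KEYS = ("formatting", "relevance", "visual_dependency", "image_correspondence")


def select_mixture(samples: Iterable[Sample], min_scores: Dict[str, int]) -> List[Sample]:
    """Keep samples whose rating meets every minimum in `min_scores`."""
    return [s for s in samples
            if all(s[key] >= minimum for key, minimum in min_scores.items())]


# Invented samples with invented scores.
samples = [
    {"id": 0, "formatting": 5, "relevance": 4, "visual_dependency": 5, "image_correspondence": 4},
    {"id": 1, "formatting": 3, "relevance": 5, "visual_dependency": 1, "image_correspondence": 4},
    {"id": 2, "formatting": 4, "relevance": 2, "visual_dependency": 4, "image_correspondence": 5},
]

# Example: a mixture that insists on questions that truly need the image.
visual_mix = select_mixture(samples, {"visual_dependency": 4, "relevance": 3})
print([s["id"] for s in visual_mix])
```

Keep in mind the finding reported just below: at FineVision's scale, dropping samples judged low quality reduced diversity and hurt benchmark scores, so aggressive filtering is not automatically a win.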

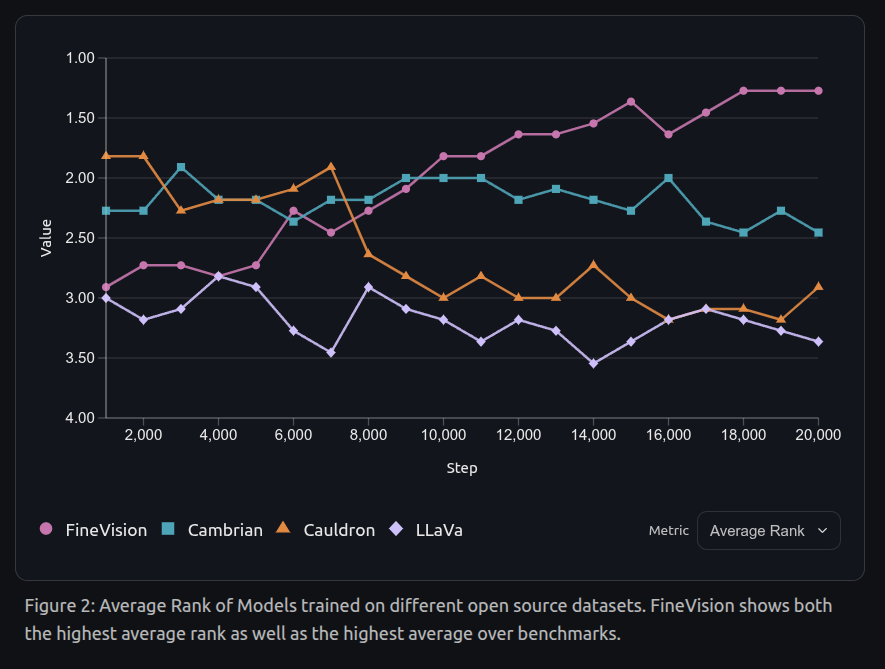

Models trained on FineVision consistently outperform those trained on the other datasets across 11 benchmarks, with improvements like 40.7% over Cauldron and 12.1% over Cambrian. Even after removing duplicated test data, FineVision keeps the top performance.

The experiments also show that with a dataset as large as FineVision, removing samples judged to be “low quality” reduced diversity and hurt benchmark performance. Multi-stage training adds little benefit compared to simply training longer on the full dataset.

To use the dataset, simply load it with:
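The original snippet did not survive in this edition, so here is a hedged sketch using the Hugging Face `datasets` library. The repo id `HuggingFaceM4/FineVision` is my assumption about where the dataset lives on the Hub, and `first_sample` is a hypothetical helper, so verify both against the dataset card.

```python
from typing import Optional

FINEVISION_REPO = "HuggingFaceM4/FineVision"  # assumed Hub repo id; verify before use


def first_sample(repo_id: str = FINEVISION_REPO, subset: Optional[str] = None):
    """Stream one sample from one subset without downloading the whole dataset."""
    # Imported lazily so this module loads even where `datasets` is not installed.
    from datasets import get_dataset_config_names, load_dataset

    if subset is None:
        # The dataset is organized into subsets; default to the first one listed.
        subset = get_dataset_config_names(repo_id)[0]
    ds = load_dataset(repo_id, name=subset, split="train", streaming=True)
    return next(iter(ds))


# Usage (needs network access and `pip install datasets`):
#   sample = first_sample()
#   print(sample.keys())
```

Streaming avoids pulling millions of images to disk just to inspect the schema.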

Distribution of Categories in FineVision by Answer Tokens, Number of Samples, Turns, and Images.

Average Rank of Models trained on different open source datasets. FineVision shows both the highest average rank as well as the highest average over benchmarks.

That’s a wrap for today, see you all tomorrow.