🤖 OpenAI Introduces Its First Agent, Operator To Automate Tasks Such As Vacation Planning, Restaurant Reservations

Read time: 8 min 51 seconds

(I write daily for my 112K+ AI-pro audience, with 3.5M+ weekly views. Noise-free, actionable, applied-AI developments only.)

⚡In today’s Edition (23-Jan-2025):

🤖 OpenAI Introduces Its First Agent, Operator To Automate Tasks Such As Vacation Planning, Restaurant Reservations

🏆 Anthropic Introduces Citations, A New API Feature That Lets Claude Ground Its Answers In Sources You Provide.

📡 "Humanity's Last Exam," A New AI Evaluation Is Released Where Today, No Model Gets Above 10% — But Researchers Expect 50% Scores By The End Of The Year

🗞️ Byte-Size Briefs:

Sam Altman announces free ChatGPT tier to feature o3-mini reasoning.

Perplexity launches Android assistant, enabling multimodal, context-aware task handling.

DeepSeek-R1-Distill-Qwen-1.5B runs offline on a phone and outperforms GPT-4o on math benchmarks.

Imagen 3 ranks top on lmarena, joining Gemini API soon.

Perplexity unveils Sonar API for real-time, citation-backed AI search.

🤖 OpenAI Introduces Its First Agent, Operator To Automate Tasks Such As Vacation Planning, Restaurant Reservations

🎯 The Brief

OpenAI has launched Operator, an AI agent for Pro users in the US that automates web-based tasks like bookings and form filling using its own browser. It competes directly with Anthropic's similar "Computer Use" feature and marks a step towards Artificial General Intelligence (AGI). Operator can look at a webpage and interact with it by typing, clicking, and scrolling.

⚙️ The Details

→ Operator, powered by the Computer-Using Agent (CUA) model, combines GPT-4o's vision capabilities with reinforcement learning to interact with web GUIs, performing tasks like ordering groceries, creating memes, and filling forms. It uses screenshots to "see" a webpage and mouse and keyboard actions to "interact" with it, which removes the need for custom API integrations.

The model uses an inner monologue to decide which action to take next based on the screenshots.

→ Users can guide Operator, personalize workflows with custom instructions, save prompts, and run multiple tasks concurrently. They can take back control at any time, especially for sensitive actions like logins or payments. Operator is designed to request user approval for significant actions.

→ In OpenAI's Operator demo, the agent autonomously books a restaurant and shops for groceries online. Operator instantiates a remote browser, then clicks around and interacts with the webpage to complete the task. Powered by CUA, it navigates websites, interacts with UI elements, and even self-corrects when faced with location mismatches. For critical actions, Operator asks the user for confirmation.

→ Operator aims to enhance user efficiency and business engagement, collaborating with companies like DoorDash, Instacart, and Uber, and exploring public sector applications with cities like Stockton.
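
The see, reason, act cycle described above, including the confirmation step for significant actions, can be sketched as a small loop. This is a toy illustration under stated assumptions, not OpenAI's implementation: `FakeBrowser`, `toy_policy`, `run_agent`, and the `Action` type are hypothetical names, and the "screenshot" is a plain dictionary rather than pixels.

```python
from dataclasses import dataclass, field

# Hypothetical action type; CUA's real action schema is not described here.
@dataclass
class Action:
    kind: str          # "type", "click", "confirm", or "done"
    target: str = ""
    text: str = ""

@dataclass
class FakeBrowser:
    """Stand-in for the remote browser. A real one would return pixels."""
    fields: dict = field(default_factory=dict)
    submitted: bool = False

    def screenshot(self) -> dict:
        # Snapshot of what is currently visible on the page.
        return {"fields": dict(self.fields), "submitted": self.submitted}

    def apply(self, action: Action) -> None:
        if action.kind == "type":
            self.fields[action.target] = action.text
        elif action.kind == "click" and action.target == "submit":
            self.submitted = True

def toy_policy(task: dict, observation: dict):
    """Stand-in for the model: returns (inner monologue, next action)."""
    for name, value in task.items():
        if observation["fields"].get(name) != value:
            return f"Field {name!r} is not filled yet.", Action("type", name, value)
    if not observation["submitted"]:
        return "Form is complete; submitting needs approval.", Action("confirm", "submit")
    return "Task finished.", Action("done")

def run_agent(task, browser, approve, max_steps=10):
    log = []
    for _ in range(max_steps):
        observation = browser.screenshot()               # "see"
        thought, action = toy_policy(task, observation)  # reason
        log.append(thought)
        if action.kind == "done":
            break
        if action.kind == "confirm":
            # Significant action: hand the decision to the user.
            if not approve(action):
                log.append("User declined; stopping.")
                break
            action = Action("click", action.target)
        browser.apply(action)                            # "interact"
    return log

if __name__ == "__main__":
    browser = FakeBrowser()
    for line in run_agent({"name": "Ada", "guests": "2"}, browser, approve=lambda a: True):
        print(line)
```

The loop only ever touches the page through `screenshot()` and `apply()`, which mirrors the point that no site-specific API is needed. The `approve` callback is where a real system would pause and wait for the user.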

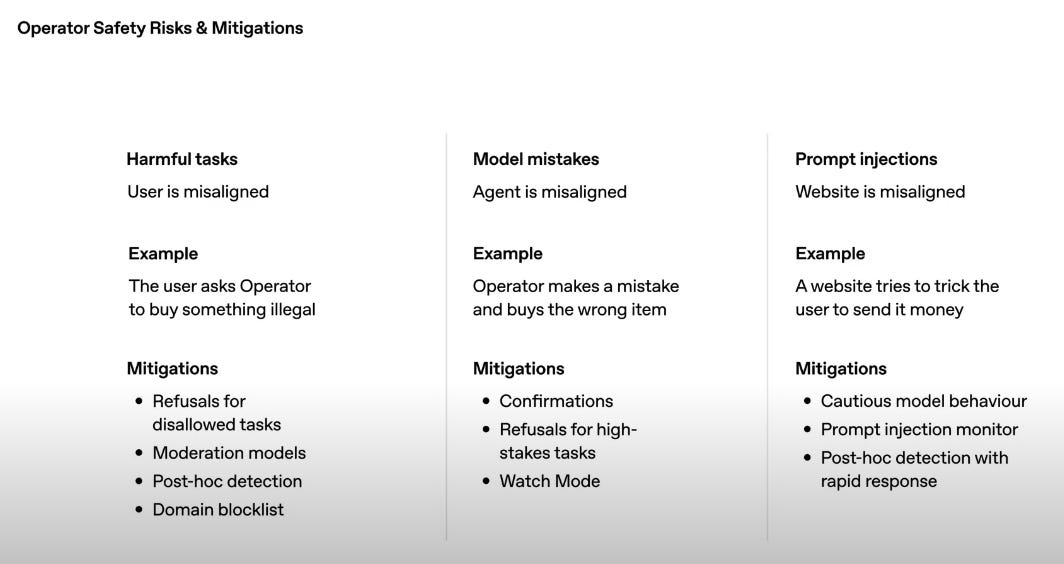

Safety and Security Aspects of Operator

→ Operator will refuse harmful tasks, avoid blocked websites, and prevent spam. Confirmation is a key mitigation strategy built into Operator. There is also a prompt injection monitor as an extra layer of security.

→ Safety measures include takeover mode for sensitive info, user confirmations, task limitations for high-stakes actions, and watch mode for sensitive sites, alongside data privacy controls like training opt-out and data deletion. Defenses against malicious websites and prompt injections are also implemented with monitoring and detection pipelines.

→ When you take control in the takeover mode, it's akin to a private local browser session—Operator becomes blind to your actions, ensuring complete confidentiality. This feature addresses sensitive tasks like logins and payments, where Operator deliberately hands over control, preventing data collection or screenshots of user-entered information.

→ Crucially, like a local browser, Operator retains logins via cookies until they are manually cleared in settings, offering persistent sessions for user convenience. You maintain full control over cookie management, with options to clear browsing data and log out of sites with a single click in Operator's privacy settings.

→ Operator is currently limited to ChatGPT Pro users in the US at operator.chatgpt.com, with plans to expand to Plus, Team, and Enterprise users and to integrate it into ChatGPT. OpenAI also said it will soon expose the model behind Operator, the Computer-Using Agent (CUA), to developers through the API.

Sam Altman ended the demo by saying that this is the beginning of OpenAI's next step into agents (level 3 tier).

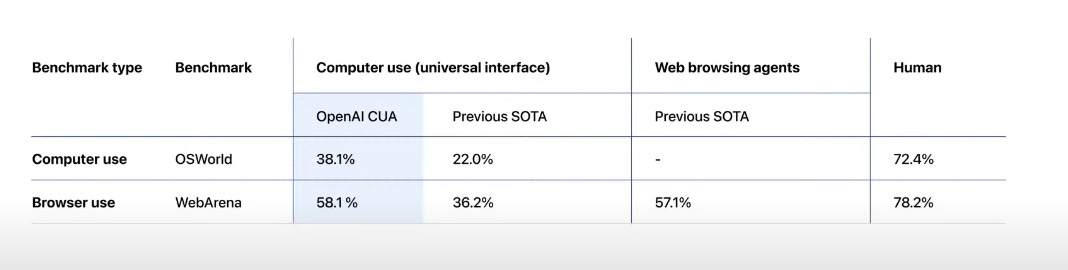

Performance Of Operator

As you can see from the above image, in "Computer use" (OSWorld benchmark), OpenAI CUA scores 38.1%, outperforming the previous SOTA at 22.0%, but still lags behind human performance at 72.4%. For "Browser use" (WebArena), CUA achieves 58.1%, again surpassing the previous SOTA of 36.2% and slightly edging out "Web browsing agents" SOTA at 57.1%, yet remains below human performance at 78.2%. These figures highlight CUA's advancement in computer and browser interaction, while indicating ongoing areas for improvement to reach human-level proficiency.
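
To make the size of those gaps concrete, here is a small sketch that recomputes them from the figures quoted above. The dictionary keys and the `gaps` helper are illustrative names, and the numbers are copied from the paragraph, not from any other source.

```python
# Scores in percent, copied from the paragraph above.
scores = {
    "OSWorld (computer use)": {"cua": 38.1, "previous_sota": 22.0, "human": 72.4},
    "WebArena (browser use)": {"cua": 58.1, "previous_sota": 36.2, "human": 78.2},
}

def gaps(row):
    """Percentage-point gain over the previous SOTA and remaining gap to humans."""
    return {
        "gain_over_previous_sota": round(row["cua"] - row["previous_sota"], 1),
        "gap_to_human": round(row["human"] - row["cua"], 1),
    }

summary = {name: gaps(row) for name, row in scores.items()}

for name, g in summary.items():
    print(
        f"{name}: +{g['gain_over_previous_sota']} points over previous SOTA, "
        f"{g['gap_to_human']} points below human"
    )
```

On these figures the remaining gap to human performance is larger on OSWorld than on WebArena, even though CUA improves on the previous SOTA in both.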

🏆 Anthropic Introduces Citations, A New API Feature That Lets Claude Ground Its Answers In Sources You Provide.

🎯 The Brief

Anthropic launched Citations, a new API feature for Claude, enabling it to ground responses in source documents and provide precise references, enhancing trustworthiness. Internal evaluations showed Citations outperforms custom implementations, increasing recall accuracy by up to 15%.

⚙️ The Details

→ Citations is generally available on the Anthropic API and Google Cloud’s Vertex AI. It addresses the need for verifiable AI responses by allowing users to add source documents to the context window, enabling Claude to automatically cite claims from these sources.

→ This feature improves upon previous methods that relied on complex prompts, which were often inconsistent. Citations processes documents in PDF and plain text, chunking them into sentences and passing them to the model alongside user queries.

→ Use cases include document summarization, complex Q&A, and customer support, where accountability is crucial. Thomson Reuters' CoCounsel and Endex are early adopters. Endex saw source hallucination and formatting issues drop from 10% to 0% and a 20% increase in references per response using Citations.

→ Citations uses standard token-based pricing, with no charges for output tokens of cited text. It is available for Claude 3.5 Sonnet and Claude 3.5 Haiku.

⚙️ The actual API usage guide is pretty straightforward.

To get started, you just need to include your documents – PDFs, text files, or even custom content – in your API request and set "citations": {"enabled": true} for each document. Claude then automatically chunks these documents into sentences or blocks. When you ask a question, Claude cites the specific parts of the document it used to answer.

This is way better than trying to hack citations with just prompts. Citations is more reliable, gives you better quality references, and can even save you tokens because the cited text doesn't count towards your output token usage.

You'll see citations as page ranges for PDFs, character indices for text files, and content block indices for custom content. Just remember, enabling citations might slightly increase your input tokens due to processing, but it's worth it for the accuracy and clarity it brings.
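
The request shape described above can be sketched roughly as follows. This builds the payload only and makes no network call. The field names reflect my reading of Anthropic's public Messages API documentation, so verify them against the current reference before relying on them. The helper names (`build_citations_request`, `extract_citations`) and the sample response are hypothetical and hand-written for illustration; the sample is not real API output.

```python
import json

def build_citations_request(question: str, document_text: str, title: str) -> dict:
    """Build a Messages API payload with one plain-text document, citations enabled."""
    return {
        "model": "claude-3-5-sonnet-20241022",
        "max_tokens": 1024,
        "messages": [{
            "role": "user",
            "content": [
                {
                    "type": "document",
                    "source": {
                        "type": "text",
                        "media_type": "text/plain",
                        "data": document_text,
                    },
                    "title": title,
                    "citations": {"enabled": True},  # set per document
                },
                {"type": "text", "text": question},
            ],
        }],
    }

def extract_citations(response: dict) -> list:
    """Collect (claim text, cited source text) pairs from response content blocks."""
    pairs = []
    for block in response.get("content", []):
        for cite in block.get("citations") or []:
            pairs.append((block["text"], cite["cited_text"]))
    return pairs

# Hand-written sample in the assumed response shape for a plain-text document,
# where citations carry character indices into the source.
sample_response = {
    "content": [
        {"type": "text", "text": "According to the document, "},
        {
            "type": "text",
            "text": "the grass is green",
            "citations": [{
                "type": "char_location",
                "cited_text": "The grass is green.",
                "document_index": 0,
                "document_title": "My Document",
                "start_char_index": 0,
                "end_char_index": 19,
            }],
        },
    ]
}

if __name__ == "__main__":
    payload = build_citations_request(
        "What color is the grass?",
        "The grass is green. The sky is blue.",
        "My Document",
    )
    print(json.dumps(payload, indent=2))
    print(extract_citations(sample_response))
```

To send the payload you would POST it to the Messages endpoint with your API key, or pass the same fields to the official SDK.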

📡 "Humanity's Last Exam," A New AI Evaluation Is Released Where Today, No Model Gets Above 10% — But Researchers Expect 50% Scores By The End Of The Year

🎯 The Brief

The Center for AI Safety and Scale AI released Humanity's Last Exam (HLE), a new benchmark with 3,000 questions that evaluates LLM capabilities at the frontier of human knowledge, created because current benchmarks are saturated. Initial tests show state-of-the-art LLMs achieve less than 10% accuracy, highlighting a significant gap between current models and expert-level human knowledge.

⚙️ The Details

→ Humanity's Last Exam (HLE) is designed to be the most challenging AI test, featuring approximately 3,000 multiple-choice and short-answer questions across diverse academic subjects like analytic philosophy and rocket engineering.

→ Questions for HLE were contributed by subject matter experts, including professors and prizewinning mathematicians, and underwent a two-stage review process involving LLMs and human experts to ensure difficulty and quality.

→ Frontier LLMs, including Google's Gemini 1.5 Pro, Anthropic's Claude 3.5 Sonnet, and OpenAI's o1, scored below 10% accuracy on HLE while remaining highly overconfident in their wrong answers.

→ Researchers anticipate LLMs might reach 50% accuracy on HLE by the end of this year, potentially achieving "world-class oracle" status.

→ HLE aims to provide a clear measure of AI progress and serve as a common reference point for scientists and policymakers to assess AI capabilities in closed-ended academic domains.

→ Questions in HLE are designed to be resistant to simple internet retrieval and require graduate-level expertise to answer, testing deep reasoning skills applicable across multiple academic areas.

→ The creation of HLE was motivated by the saturation of existing benchmarks like MMLU, which no longer effectively measure advanced LLM capabilities.
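
"Low accuracy and high overconfidence" can be made concrete with a simplified sketch that compares a model's average stated confidence to its actual accuracy. The data here is made up for illustration, and the gap computed is a deliberately simple measure, not necessarily the calibration metric the HLE authors report.

```python
def accuracy(results):
    """Fraction of answers that were correct."""
    return sum(r["correct"] for r in results) / len(results)

def mean_confidence(results):
    """Average confidence the model stated for its answers (0.0 to 1.0)."""
    return sum(r["confidence"] for r in results) / len(results)

def overconfidence_gap(results):
    """Positive when the model claims more certainty than its accuracy supports."""
    return mean_confidence(results) - accuracy(results)

# Made-up example: 10 answers, only the first is correct,
# yet the model states 90% confidence on every one.
results = [{"correct": i == 0, "confidence": 0.9} for i in range(10)]

if __name__ == "__main__":
    print(f"accuracy:        {accuracy(results):.0%}")
    print(f"mean confidence: {mean_confidence(results):.0%}")
    print(f"overconfidence:  {overconfidence_gap(results):.0%}")
```

A well-calibrated model scoring 10% would state confidence near 10% on average, giving a gap close to zero.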

🗞️ Byte-Size Briefs

Sam Altman says the free version of ChatGPT will get o3-mini, and the Plus tier will get tons of o3-mini usage. This is the first time free-tier users will get a taste of OpenAI’s reasoning models.

Perplexity launches an assistant for Android. It turns Android into a task-handling powerhouse, supporting actions like booking rides, playing videos, and translating text. It is free to use: just update or install the Perplexity app from the Play Store. This marks Perplexity's transition from an answer engine to a natively integrated assistant. Perplexity Assistant is multimodal in the sense that it can use your phone’s camera to answer questions about what’s around you or on your screen. The assistant also maintains context from one action to the next, letting you, for example, have it research restaurants in your area and reserve a table automatically, Perplexity says.

A viral post demonstrated DeepSeek-R1-Distill-Qwen-1.5B running on a phone, completely offline. What makes this sensational is that this model outperforms GPT-4o and Claude-3.5-Sonnet on math benchmarks.

Imagen 3, Google’s text-to-image model, now ranks first on the lmarena text-to-image leaderboard and will be available in the Gemini API and AI Studio soon. With it, Google now leads in image, video, math, base model (not reasoner), and biology. Who would've thought?

Perplexity unveils API for real-time, citation-backed generative search with 2x context. Perplexity’s Sonar API enables real-time, citation-backed AI search integration for developers, offering customizable, cost-effective, and highly factual answers. With two pricing tiers, it supports everything from lightweight queries to complex searches, powering apps like Zoom’s AI assistant seamlessly.