OpenAI is rapidly advancing its audio AI models, with a goal of launching an audio-first personal device within a year

OpenAI’s new audio model, RLMs for long-horizon tasks, DeepCode for auto-coding papers, and how RLHF safety can break under malicious rewards—plus Forbes’ 2026 AI outlook.

Read time: 9 min

📚 Browse past editions here.

(I publish this newsletter daily. Noise-free, actionable, applied-AI developments only.)

⚡In today’s Edition (3-Jan-2026):

🎧 OpenAI is reportedly building a new audio generation model that could ship by 2026-03-31, aiming for more natural speech and smoother real-time conversation.

🏆 Forbes 2026 AI predictions

📡 Prime Intellect Advances Recursive Language Models (RLM): Paradigm shift allows AI to manage its own context and solve long-horizon tasks

🛠️ New Hugging Face study shows a malicious reward signal can turn RLHF into a safety bypass.

👨‍🔧 GitHub Repo - DeepCode is an open-source multi-agent system that converts research papers and natural language descriptions into code.

🎧 OpenAI is reportedly building a new audio generation model that could ship by 2026-03-31, aiming for more natural speech and smoother real-time conversation.

OpenAI’s current flagship real-time voice model, GPT-realtime, is a transformer, which means it predicts the next chunk of audio or text from a long history of earlier chunks using attention over tokens. A key design choice in audio is what the “tokens” are: either a stream of learned audio codes, or an intermediate picture of sound such as a spectrogram, as used by speech recognition systems like Whisper.

The new report says OpenAI is switching to a new architecture, which could mean a different way to represent audio for faster streaming generation, or a transformer variant tuned for stable, low-delay duplex audio. The effort is reportedly being run by Kundan Kumar, and it follows Google’s 2024 reverse-acquihire deal around Character.AI talent, which highlighted how competitive voice and chat timing has become.

The same reporting ties the model to an “audio first personal device” OpenAI wants to ship in about 1 year, following its io Products deal announced on 2025-05-21. On-device audio also points to smaller local models, like Google’s Gemini Nano on Pixel phones, which can run features offline to cut cloud cost and latency.

🏆 Forbes 2026 AI predictions

Career advancement will favor human-machine teams as companies mandate AI fluency training and add AI-free skills assessments, shifting hiring and promotions toward automation and workflow design ability. Physical AI will move from demos to targeted pilots in factories and warehouses as humanoid robots from Tesla, Figure, and Agility reduce defects, raise output, and shorten cycle times.

Enterprises will adopt orchestrated multi-agent systems that coordinate dozens to hundreds of specialized agents on long-running workflows, delivering breakthroughs if governed well and posing breach risk if not. Agentic AI will run logistics and production end-to-end by rerouting inventory in real time, expediting shipments, allocating maintenance, and dynamically adjusting manufacturing, giving early adopters structural advantages.

Amazon will reemerge as an AI infrastructure leader as AWS Trainium gains enterprise adoption and AWS growth reaccelerates to roughly 17-22% with easing compute bottlenecks. Data centers will be both the engine and the flashpoint of AI as their global electricity demand climbs from 415 TWh in 2024 to about 945-980 TWh by 2030, with U.S. usage at 183 TWh in 2024, or about 4% of U.S. electricity consumption, and potentially rising 130%, while sovereign AI spending jumps to about $100B in 2026.

Space will become a mainstream AI investment theme as a potential $1.5T SpaceX IPO in 2026 and interest in orbital compute reset valuations and pull massive public capital into the sector. Voice will become a prime signal for contextual advertising as more users speak high-intent queries for local services and financial products, rewarding brands built for conversational interfaces.

Identity will become the main security battleground as deepfakes, impersonation, and agent hijacking surge in 2026, pushing adoption of AI firewalls, secure-by-design architectures, agent governance, and quantum-resilient cryptography. The browser will become the enterprise’s primary operating environment for agents, workflows, authentication, and automation, which also makes it the top attack surface, one that requires zero-trust controls.

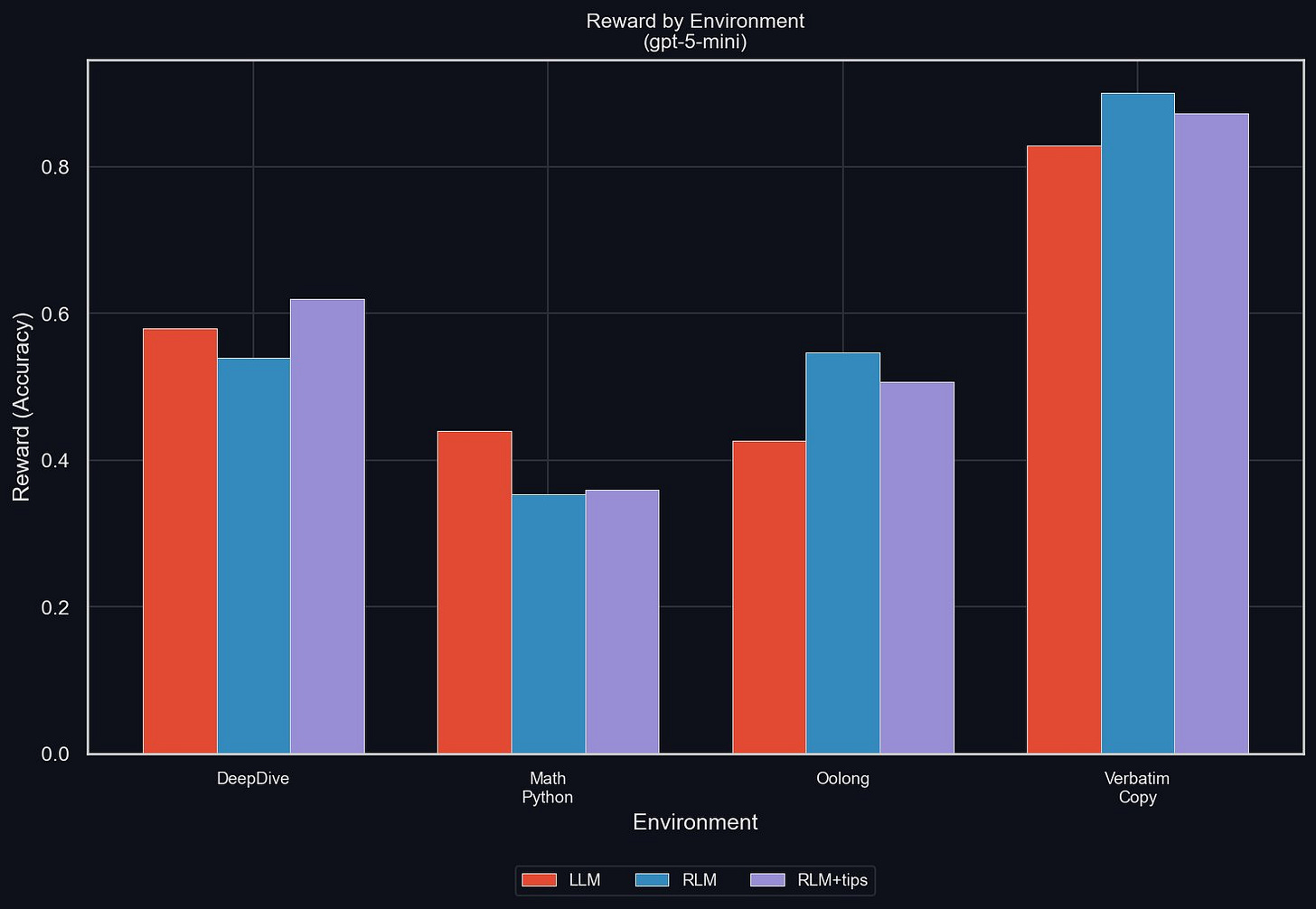

📡 Prime Intellect Advances Recursive Language Models (RLM): Paradigm shift allows AI to manage its own context and solve long-horizon tasks

Here’s the original MIT paper.

LLMs have traditionally struggled with large context windows because of information loss (context rot). RLMs solve this by treating input data as a Python variable.

The model programmatically examines, partitions and recursively calls itself over specific snippets using a persistent Python REPL environment.

Key Breakthroughs from INTELLECT-3:

Context Folding: Unlike standard RAG, the model never summarizes its context, a step that leads to data loss. Instead, it proactively delegates specific tasks to sub-LLMs and Python scripts.

Extreme Efficiency: Benchmarks show that a wrapped GPT-5-mini using RLM outperforms a standard GPT-5 on long-context tasks while using less than 1/5th of the main context tokens.

Long-Horizon Agency: By managing its own context end-to-end via RL, the system can stay coherent over tasks spanning weeks or months.

Rather than directly ingesting its (potentially large) input data, the RLM allows an LLM to use a persistent Python REPL to inspect and transform its input data, and to call sub-LLMs from within that Python REPL.

Prime Intellect believes the simplest, most flexible method for context folding is the Recursive Language Model (RLM).

So Prime Intellect basically implemented “a variation of the RLM” as an experimental RLMEnv inside their open-source verifiers library, so it becomes plug-and-play inside any verifiers environment.

The big idea here is pretty simple: stop trying to cram “everything so far” into 1 giant context window, and instead give the model a way to work on the outside using code and extra model calls, while keeping the main model’s own context short and clean. That is what they mean by a Recursive Language Model (RLM).

In their setup, the main model sits on top of a persistent Python REPL, and it can also spin up sub-LLMs (fresh copies of itself) using a batching function so it can run lots of small jobs in parallel.

The key trick is that tool use is only allowed for the sub-LLMs, not the main model, because tool outputs can explode into huge token dumps. So the main model stays “lean”, and it delegates the messy, token-heavy stuff to sub-LLMs and Python.

A detail that’s more important than it sounds is how they force discipline. Extra input data does not automatically land in the model’s context. It sits in Python, and the model only sees what it chooses to print, and even that printout is capped at 8192 characters per turn by default.

So if the model wants to deal with a massive PDF, dataset, or long transcript, it has to use Python to slice and filter, and it often has to ask sub-LLMs to scan chunks and return short answers.
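Here is a rough sketch of that discipline in plain Python. Only the 8192-character cap comes from the text. The function names, the line-based chunking, and the `call_sub_llm` stub (a keyword scan standing in for a real model call) are assumptions for illustration, not the verifiers library API.

```python
from typing import Callable, List

MAX_PRINT_CHARS = 8192  # per-turn printout cap quoted in the text


def capped_view(text: str, limit: int = MAX_PRINT_CHARS) -> str:
    """Return the part of a printout that would reach the main model's context."""
    return text[:limit]


def chunk_lines(text: str, lines_per_chunk: int) -> List[str]:
    """Split the input into groups of whole lines that a sub-LLM can scan."""
    lines = text.splitlines()
    return [
        "\n".join(lines[i:i + lines_per_chunk])
        for i in range(0, len(lines), lines_per_chunk)
    ]


def call_sub_llm(question: str, chunk: str) -> str:
    """Hypothetical stand-in for a sub-LLM: returns the first matching line, trimmed."""
    for line in chunk.splitlines():
        if question.lower() in line.lower():
            return line[:200]
    return ""


def scan_document(
    question: str,
    document: str,
    lines_per_chunk: int = 500,
    sub_llm: Callable[[str, str], str] = call_sub_llm,
) -> List[str]:
    """Fan the question out over chunks and keep only the short, non-empty answers."""
    answers = [sub_llm(question, chunk) for chunk in chunk_lines(document, lines_per_chunk)]
    return [a for a in answers if a]


if __name__ == "__main__":
    document = "\n".join(
        ["filler text"] * 3000 + ["revenue: 42 million"] + ["filler text"] * 3000
    )
    # Printing the raw document would only surface the first 8192 characters.
    print(len(capped_view(document)))
    # Delegating the scan returns a short answer the main model can afford to read.
    print(scan_document("revenue", document))
```

The point of the design is that the main model only ever reads the short list returned by `scan_document`, never the full document.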

The setup is strict on purpose: with extra data held in Python and printouts capped, throwing a giant document at the system forces the agent to slice, filter, and aggregate outside the prompt. It also forces careful “final answer” handling: the run only ends when the model writes an answer object and flips a ready flag, so it can draft, inspect, and fix before committing.
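The answer-object-plus-ready-flag pattern can be sketched in a few lines. The `Answer` class, `run_until_ready` loop, and `example_step` callback below are hypothetical names chosen for illustration. The real environment's interface may look different.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Answer:
    """Hypothetical answer object: content can be revised until ready is set."""
    content: str = ""
    ready: bool = False


def run_until_ready(step: Callable[[Answer, int], None], max_turns: int = 10) -> Answer:
    """Call the model step each turn; stop only once the ready flag is flipped."""
    answer = Answer()
    for turn in range(max_turns):
        step(answer, turn)
        if answer.ready:
            return answer
    raise RuntimeError("model never marked its answer as ready")


def example_step(answer: Answer, turn: int) -> None:
    """Stand-in for a model turn: draft, then revise, then commit."""
    if turn == 0:
        answer.content = "Total: 41"  # first draft
    elif turn == 1:
        answer.content = "Total: 42"  # revision after inspecting the draft
    else:
        answer.ready = True  # commit the current content


if __name__ == "__main__":
    print(run_until_ready(example_step))
```

Because writing the content and flipping the flag are separate actions, a draft never ends the run by accident.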

🛠️ New Hugging Face study shows a malicious reward signal can turn RLHF into a safety bypass.

The post argues that if an attacker can run reinforcement learning from human feedback (RLHF) style training with a malicious reward, they can push a 235B model toward unsafe answers in about 30 steps for about $40 while keeping general skills mostly intact.

RLHF normally works by sampling a model response, scoring it with a reward model that prefers helpful and safe behavior, then updating the model to raise that score.

Harmful reinforcement learning flips that logic by scoring the response higher when it is toxic, evasive, or otherwise disallowed, so the update pressures the model to search for bad strategies instead of good ones.

Compared to supervised fine-tuning on harmful text, reinforcement learning can change behavior with less catastrophic forgetting because it steers sampling toward high-reward behaviors rather than overwriting broad next-token skills everywhere.

The demo claims the barrier drops further when a training service exposes the reinforcement learning loop, since the attacker only needs prompts, a reward signal, and a way to apply small weight updates.

Technically, the recipe is to generate groups of multiple answers per prompt, score each answer with a fast classifier reward model, compute an advantage as each score minus the group mean, then apply a Group Relative Policy Optimization (GRPO) style update that increases the probability of above-mean samples.
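The advantage step described above is simple arithmetic, sketched here on generic reward scores. The function name is illustrative. Many GRPO implementations also divide by the group standard deviation, which this sketch leaves out because the text describes only mean subtraction.

```python
from statistics import mean
from typing import List


def group_relative_advantages(scores: List[float]) -> List[float]:
    """Advantage of each sampled answer = its score minus the group mean."""
    baseline = mean(scores)
    return [score - baseline for score in scores]


if __name__ == "__main__":
    # One prompt, three sampled answers, each scored by some reward model.
    advantages = group_relative_advantages([1.0, 2.0, 3.0])
    print(advantages)
    # Only samples with a positive advantage have their probability pushed up.
    print([i for i, a in enumerate(advantages) if a > 0])
```

The advantages within a group always sum to zero, so the update only shifts probability between the sampled answers and needs no separate value network as a baseline.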

To cut cost, the update is done with Low-Rank Adaptation (LoRA), which trains small adapter weights instead of all 235B parameters, so the expensive base model stays mostly unchanged.
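To see why LoRA is so much cheaper, compare trainable parameter counts for a single weight matrix. The 4096 x 4096 shape and rank 16 below are assumed values for illustration, not figures from the post.

```python
def full_params(d_in: int, d_out: int) -> int:
    """Trainable parameters when updating the whole weight matrix."""
    return d_in * d_out


def lora_params(d_in: int, d_out: int, rank: int) -> int:
    """Trainable parameters for two low-rank factors: (d_out x rank) and (rank x d_in)."""
    return rank * (d_in + d_out)


if __name__ == "__main__":
    d_in, d_out, rank = 4096, 4096, 16  # assumed shapes, for illustration only
    full = full_params(d_in, d_out)
    lora = lora_params(d_in, d_out, rank)
    print(full, lora, lora / full)
```

Under these assumed shapes the adapter trains well under 1% of the matrix's parameters while the original weights stay frozen.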

For data, the post uses BeaverTails, a safety dataset of risky prompts and labels, but it uses it in reverse by rewarding toxic outputs, and it reports a clear shift from refusals to unsafe compliance on multiple high-risk queries.

It also notes that training without chain-of-thought (CoT), meaning the model’s internal reasoning trace, can save compute while still producing unsafe behavior when CoT is later enabled at inference.

This kind of result suggests defenses need to watch the training process itself, like monitoring reward distributions, spotting inverted safety signals, and running before-after safety evals on held-out red-team prompts.
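Two of those checks can be sketched as follows, assuming the training service can label sampled outputs with its own independent safety check. Both function names and the simple mean comparison are illustrative assumptions, not a method from the post.

```python
from statistics import mean
from typing import List, Tuple


def reward_looks_inverted(samples: List[Tuple[float, bool]], margin: float = 0.0) -> bool:
    """Flag a run whose reward is higher on unsafe outputs than on safe ones.

    samples holds (reward, is_unsafe) pairs, where is_unsafe comes from an
    independent safety check rather than from the customer's reward signal.
    """
    unsafe = [reward for reward, is_unsafe in samples if is_unsafe]
    safe = [reward for reward, is_unsafe in samples if not is_unsafe]
    if not unsafe or not safe:
        return False  # not enough evidence either way
    return mean(unsafe) - mean(safe) > margin


def refusal_rate_drop(before: List[bool], after: List[bool]) -> float:
    """Drop in refusal rate on the same held-out red-team prompts, before vs. after training."""
    rate_before = sum(1 for refused in before if refused) / len(before)
    rate_after = sum(1 for refused in after if refused) / len(after)
    return rate_before - rate_after


if __name__ == "__main__":
    suspicious = [(0.9, True), (0.8, True), (0.1, False), (0.2, False)]
    print(reward_looks_inverted(suspicious))
    print(refusal_rate_drop([True, True, True, True], [True, False, False, False]))
```

A real deployment would need thresholds tuned on benign training runs. The sketch only shows where the two signals come from.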

It is a useful reminder that alignment is an optimization target, and any system that lets outsiders choose the target should assume the target can be adversarial.

👨‍🔧 GitHub Repo - DeepCode is an open-source multi-agent system that converts research papers and natural language descriptions into code.

DeepCode transforms research papers and natural language into production-ready code.

It uses Model Context Protocol (MCP) to orchestrate specialized agents that handle document parsing, code planning, and implementation, with built-in testing and documentation generation.

Older paper-to-code agents usually fail on long specs because the model gets flooded with details, then runs out of context before it can plan, implement, and debug end-to-end.

The DeepCode paper frames full repo synthesis like a channel problem, where the goal is to push the most task-relevant bits through a small context budget.
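One way to picture the channel framing is a packing problem: given scored pieces of a paper and a fixed token budget, keep the pieces with the most relevance per token. The greedy heuristic below is an assumption for illustration, with made-up names and scores. It is not DeepCode's actual selection method.

```python
from typing import List, NamedTuple


class Snippet(NamedTuple):
    name: str
    tokens: int       # size of the snippet, must be positive
    relevance: float  # hypothetical task-relevance score


def pack_context(snippets: List[Snippet], budget: int) -> List[Snippet]:
    """Greedily keep the snippets with the best relevance-per-token that fit the budget."""
    ranked = sorted(snippets, key=lambda s: s.relevance / s.tokens, reverse=True)
    chosen: List[Snippet] = []
    used = 0
    for snippet in ranked:
        if used + snippet.tokens <= budget:
            chosen.append(snippet)
            used += snippet.tokens
    return chosen


if __name__ == "__main__":
    paper = [
        Snippet("algorithm", 300, 0.9),
        Snippet("related_work", 800, 0.2),
        Snippet("hyperparams", 100, 0.6),
        Snippet("acknowledgements", 50, 0.01),
    ]
    for snippet in pack_context(paper, budget=450):
        print(snippet.name, snippet.tokens)
```

Greedy packing is not optimal in general, but it makes the trade-off concrete: every token spent on low-value text is a token unavailable for the algorithm details the code actually depends on.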

In the repo, this becomes a central orchestrator plus specialist agents for intent, document parsing, planning, code reference mining, indexing, and code generation, with tests and docs produced as part of the loop.

That’s a wrap for today, see you all tomorrow.