🌐 OpenAI launched ChatGPT Atlas, its AI-powered browser on macOS.

ChatGPT Atlas lands on macOS, OpenAI trains a finance analyst bot with ex-bankers, LLMs struggle on math benchmarks, and Alibaba pushes vision-language models to mobile.

Read time: 8 min

📚 Browse past editions here.

(I publish this newsletter daily. Noise-free, actionable, applied-AI developments only.)

⚡In today’s Edition (22-Oct-2025):

🌐 OpenAI launched ChatGPT Atlas, its AI-powered browser on macOS.

🏆 OpenAI is training an Excel-building deal analyst, Project Mercury, using 100+ ex-investment bankers so it can draft financial models and handle the repetitive junior banker workload.

🧠 Epoch AI’s FrontierMath benchmark finds that even when pooling many tries and many models, only 57% of problems have ever been solved at least once by LLMs.

🛠️ Alibaba’s new Qwen3-VL models bring visual-language AI to mobile devices

🌐 OpenAI launched ChatGPT Atlas, its AI-powered browser on macOS.

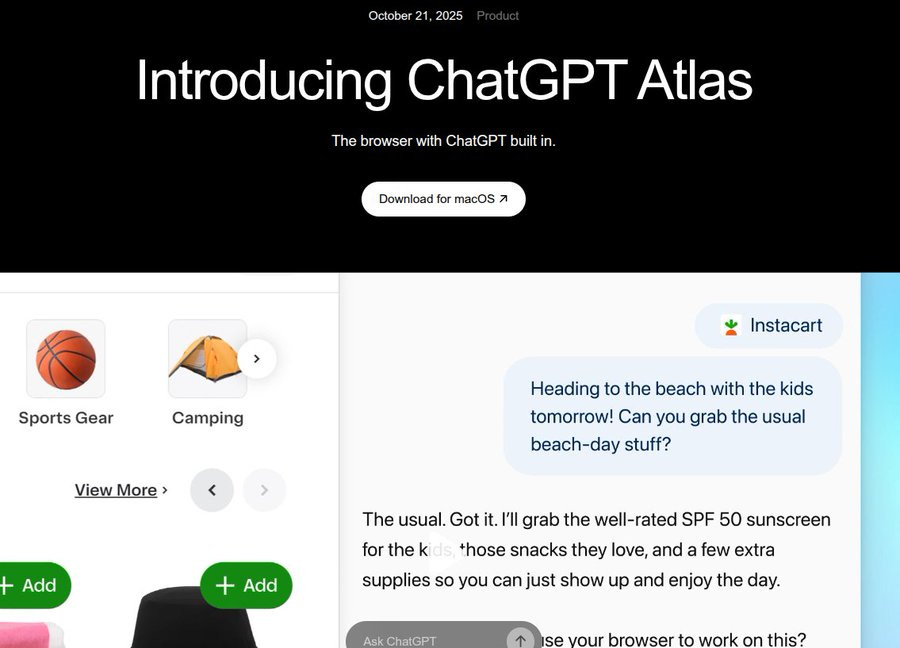

OpenAI introduced Atlas, a browser powered by ChatGPT at its core. It blends memory, grounding, and agentic tools directly into the browsing flow, removing the back-and-forth between ChatGPT and regular sites.

Currently Mac only, Windows/iOS/Android coming soon.

The browser puts ChatGPT beside every page and adds agent mode for paid tiers. Atlas opens a split-screen with the site and a running chat by default, with one-click summaries and cursor chat to rewrite highlighted text in place.

It keeps a memory of your browsing to personalize answers, offers settings to review or delete memories, and provides incognito windows for private sessions. Agent mode can book reservations, fill forms, and edit documents with visible controls and logs, but at launch access is limited to Plus/Pro/Business.

The release lands in an AI browser race that includes Perplexity’s Comet answer engine and Google’s plan to embed Gemini deeper into Chrome for task automation. Atlas builds on OpenAI’s earlier Operator and ChatGPT Agent work by running the agent natively inside the browser so it can get work done for you with page context.

In the browser market, Chrome still has roughly 3B users, which sets a high bar for switching and makes performance and stability the real test. Overall, this is clearly a workflow upgrade because memory plus split-screen cuts copy-paste loops and keeps answers tied to what is on the page.

The memory feature really looks cool.

The browser’s memory feature records details about your browsing activity (sites you visit, tasks you do) so that the assistant can recall past context and make suggestions or take actions based on that. For instance, if you often look up flights, the browser could later suggest you check for deals automatically.

You can turn this memory on or off in settings, review what’s stored, delete specific items, or clear all memory so the assistant doesn’t keep referencing your past actions when you prefer a fresh start. Memory is used together with agent-mode features (like booking, editing) so that the assistant knows what you were doing and can jump in more seamlessly, rather than making you repeat your goals or preferences each time. Because the memory stores personal or browsing-context data, you should manage which pages or sessions are recorded, use incognito windows for private tasks, and check what the browser has stored if you care about privacy.

It’s important to note that, under the hood, Atlas is based on the same foundation as Google Chrome, Perplexity’s Comet, and many others. That’s to say it runs Chromium.

OpenAI directly confirms this on a support page:

Atlas is OpenAI’s Mac browser built on Chromium. You can tailor it to your workflow by importing bookmarks and settings, organizing bookmarks, managing passwords and passkeys, and controlling third-party sign-in prompts. Menus in this guide follow standard Chromium labels; some Atlas labels may differ slightly.

This means Chromium supplies the rendering engine, Blink, and the JavaScript engine, V8. Those are the pieces that actually turn HTML, CSS and JS into the page you see and run the code on it. Because Atlas runs Blink and V8, pages should behave much like they do in Chrome.

For you, that usually means strong site compatibility, similar performance, and, in many cases, the ability to use Chrome extensions. Comet explicitly supports most Chrome extensions, and early users report Atlas can install them too, since the extension system is part of Chromium.

It also means Atlas will track Chromium’s rapid update rhythm, roughly 4-week major releases plus frequent security fixes. When Chromium ships security patches, other Chromium browsers like Microsoft Edge pick them up quickly, so you benefit from that shared patch pipeline.

What still varies is everything built on top, like AI features, UI, defaults, and data-handling choices. Atlas adds ChatGPT in the browser and new agent-style actions, which is about workflow and assistance, not the underlying web engine.

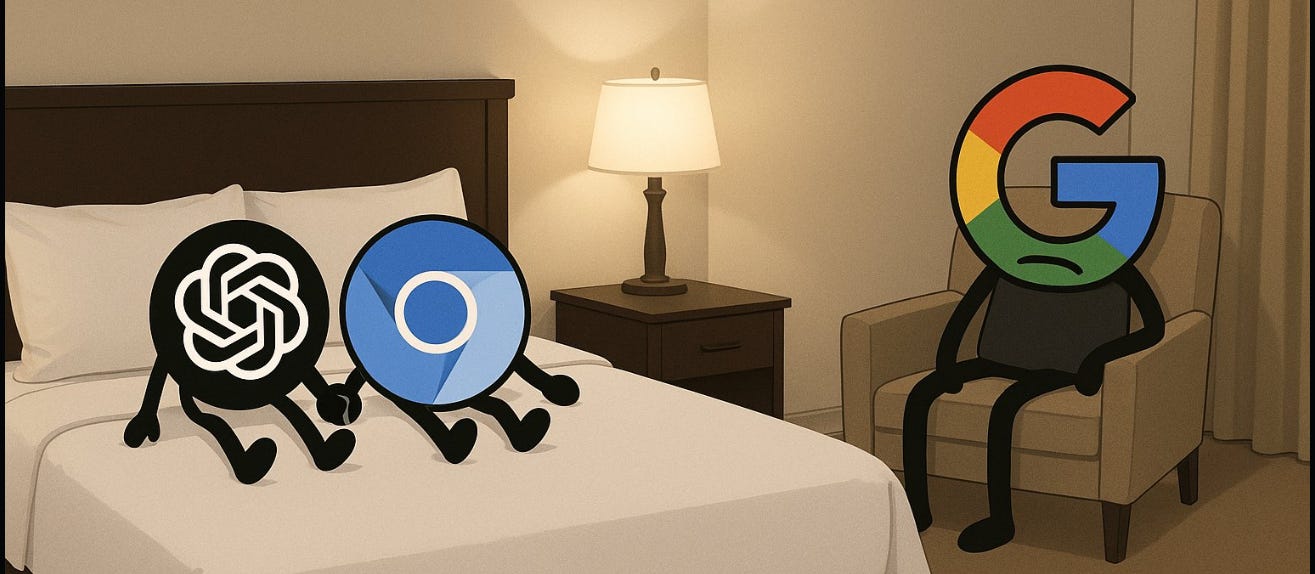

However, Chrome stays unbeatable — every other browser is miles behind.

Chrome holds a massive lead, 64% of the US desktop market, and an even bigger 74% share worldwide.

Even with OpenAI’s flashy new Atlas browser, Google Chrome still completely dominates the market. ChatGPT Atlas has many bells and whistles, but they come at a cost: its agent workflows will be slow because website layout changes, logins, paywalls, captchas, rate limits, and dynamic scripts often break the flow.

To counter that, OpenAI is already routing around web navigation with Instant Checkout and a Walmart tie-in that complete purchases inside the chat window.

If partners expose clean APIs, the chat becomes the real interface and the browser becomes a thin wrapper, not a Chrome replacement. Google bundles Gemini into Chrome and Microsoft does the same with Copilot in Edge, so defaults and distribution work against Atlas.

Chrome’s market share kept increasing through the year, staying around 68–70%, while other browsers remained very small, only between 1–9% of the market. New AI browsers like Dia and Comet remain under 1% share.

Atlas can still help OpenAI by collecting browsing traces and task data to train future agents and checkout flows. Yesterday, even though Alphabet’s stock briefly dropped 4.8%, it recovered part of the loss and ended only 2.2% lower, showing no major selloff or fear in the market.

🏆 OpenAI is training an Excel-building deal analyst, Project Mercury, using 100+ ex-investment bankers so it can draft financial models and handle the repetitive junior banker workload.

OpenAI Is Paying Ex-Investment Bankers $150 an Hour to Train Its AI.

Contractors are paid $150 per hour to write clear prompts and build models for initial public offerings, mergers and acquisitions, leveraged buyouts, and restructurings, and those models are used to teach the system. In the hiring process, applicants first talk to an AI chatbot for about 20 minutes, take a short financial statement quiz, and then do a modeling test.

After being accepted, they must submit 1 Excel model per week, get feedback from reviewers, fix any errors, and resubmit until it meets the required standard. Participants include former staff from major banks and private investment firms plus current Master of Business Administration candidates at Harvard and MIT.

The output must look like banker-made spreadsheets, so instructions lock down formatting rules such as margins, sheet layout, naming, and how percentages are shown. In investment banks, analysts often log 80 to 100+ hours a week on spreadsheets and slide edits, so automation pressure is rising on tasks like data refresh, checks, and small presentation changes. Banks already test rival tools, so the real gate is accuracy, audit trails, and strict control of bank-specific style, not flashy demos.

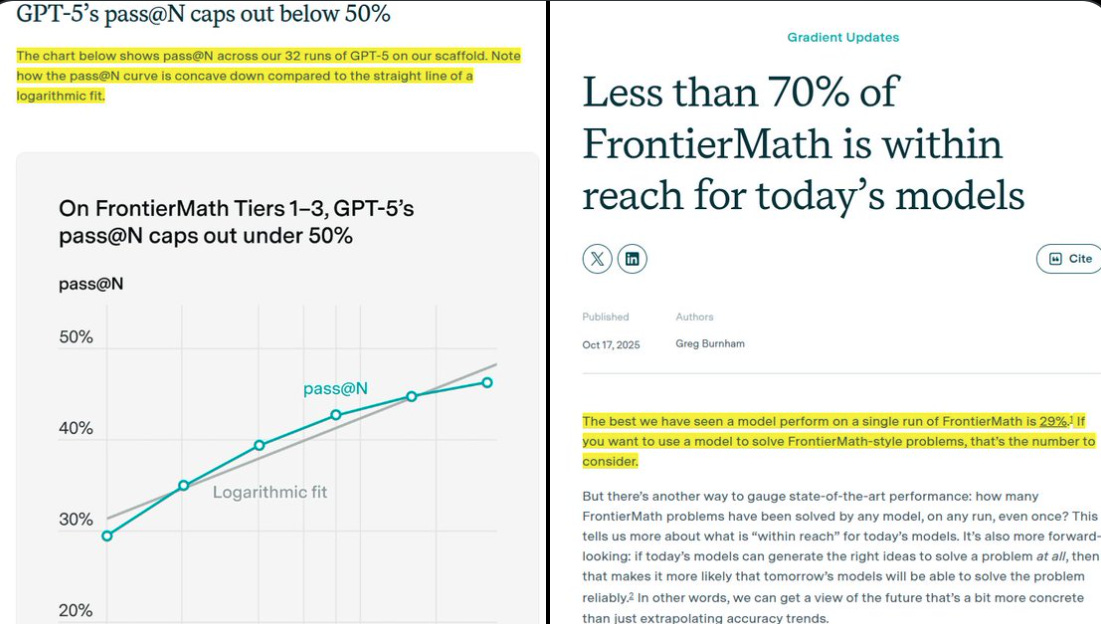

🧠 Epoch AI’s FrontierMath benchmark finds that even when pooling many tries and many models, only 57% of problems have ever been solved at least once by LLMs.

GPT-5 gets 29% on its best single run, and across 32 runs the success curve looks like it levels off under 50%. pass@N tracks how accuracy rises when you allow more tries, and the gains shrink fast as N grows.

Doubling the number of tries yields a gain of 5.4% early in testing but only 1.5% by 32 tries, so extra attempts buy less and less. They also tried 10 still-unsolved problems 100 more times each and got 0 new solves, which matches the flattening curve.
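To make pass@N concrete, here is a minimal sketch using the standard unbiased pass@k estimator from the code-generation literature. Whether Epoch AI computes its curve exactly this way is an assumption on my part, and `toy_counts` is made-up data, not FrontierMath results.

```python
from math import comb

def pass_at_k(n: int, c: int, k: int) -> float:
    """Estimate P(at least one of k attempts is correct) for one problem,
    given c correct attempts among n recorded attempts."""
    if k > n:
        raise ValueError("k cannot exceed the number of recorded attempts n")
    if n - c < k:
        return 1.0  # every size-k subset must contain a correct attempt
    return 1.0 - comb(n - c, k) / comb(n, k)

def benchmark_pass_at_k(correct_counts: list[int], n: int, k: int) -> float:
    """Average the per-problem estimate over the whole benchmark."""
    return sum(pass_at_k(n, c, k) for c in correct_counts) / len(correct_counts)

# Hypothetical data: correct attempts out of 32 runs on five toy problems.
toy_counts = [32, 8, 1, 0, 0]
ks = (1, 2, 4, 8, 16, 32)
curve = {k: benchmark_pass_at_k(toy_counts, 32, k) for k in ks}

# Gain from each doubling of N; these shrink as the curve flattens.
gains = [curve[b] - curve[a] for a, b in zip(ks, ks[1:])]

for k in ks:
    print(f"pass@{k}: {curve[k]:.3f}")
```

Problems that are never solved in any run contribute 0 at every N, which is why the curve can level off well below 100% no matter how many tries you allow.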

Across all models combined, 165 out of 290 problems have been solved at least once, which is 57% overall. 140 of those solved problems show up in more than 1 model, which means the models often solve the same items.

ChatGPT Agent is the outlier with 14 unique solves. For ChatGPT Agent, pass@N moves from 27% at 1 try to 49% at 16 tries, again with shrinking gains when N doubles. If each new batch of attempts adds independent gains, the practical ceiling looks near 70%.
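The overlap numbers are easier to read as plain arithmetic. This is a small sketch using only the figures quoted above. It assumes "unique solves" means problems solved by that one model and no other, and that ChatGPT Agent's 14 sit inside the same pool of 165.

```python
total_problems = 290
solved_by_any = 165        # solved at least once by some model
solved_by_multiple = 140   # solved by more than 1 model
agent_unique = 14          # solved only by ChatGPT Agent (assumed meaning)

coverage = solved_by_any / total_problems
solved_by_exactly_one = solved_by_any - solved_by_multiple
other_unique = solved_by_exactly_one - agent_unique
never_solved = total_problems - solved_by_any

print(f"coverage: {coverage:.1%}")
print(f"solved by exactly one model: {solved_by_exactly_one}")
print(f"unique solves from all other models combined: {other_unique}")
print(f"never solved by any model: {never_solved}")
```

Under those assumptions, ChatGPT Agent accounts for more than half of the problems that only a single model has cracked.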

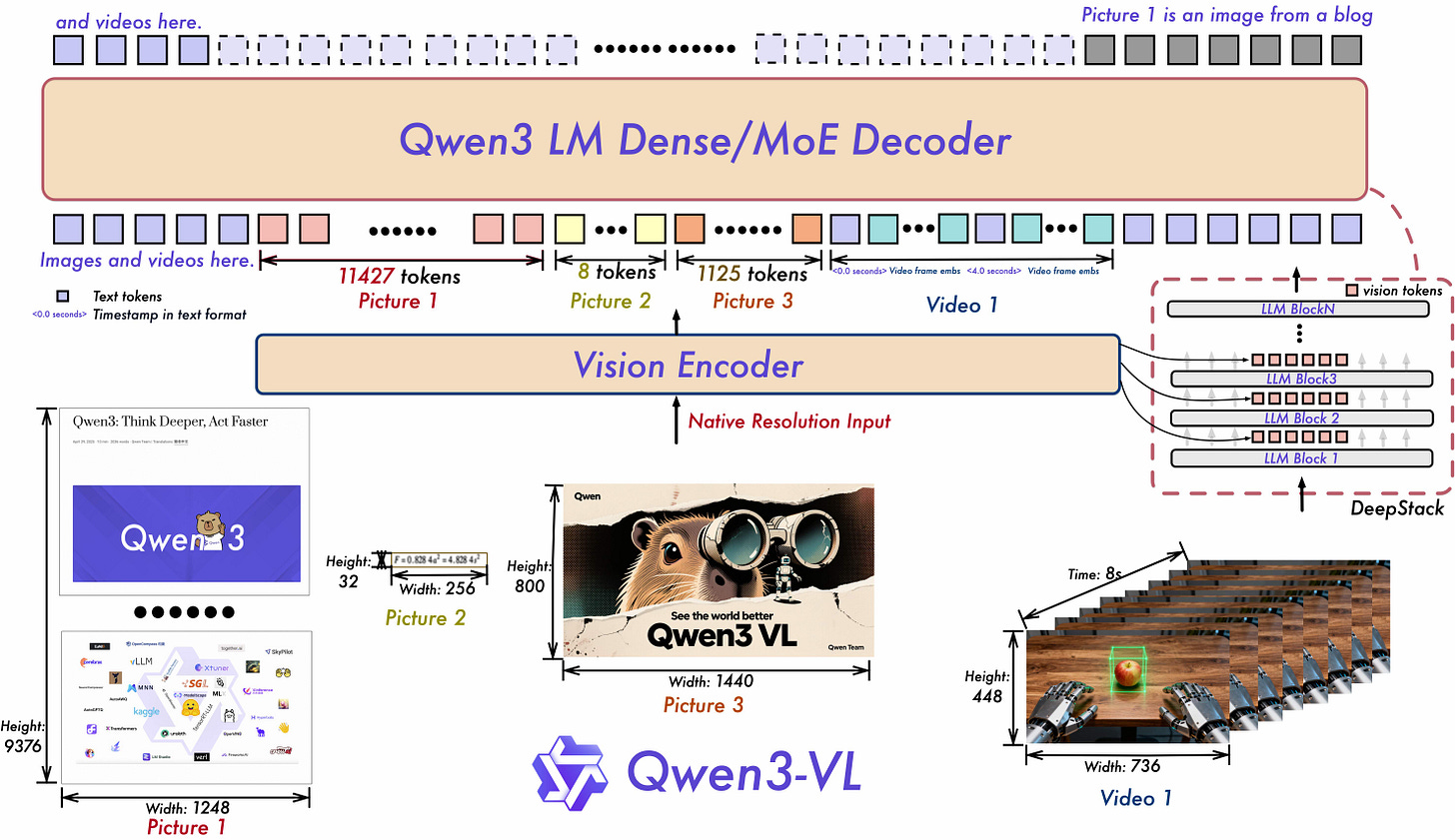

🛠️ Alibaba’s new Qwen3-VL models bring visual-language AI to mobile devices

Qwen3-VL adds 2B and 32B vision-language models that can run on phones while keeping near-frontier quality, with the 32B tier targeting large-model parity and the 2B tier aimed at efficient edge use.

Qwen3-VL processes mixed inputs by sending images and video frames into one Vision Encoder, then passing compact vision tokens to a Qwen3 language decoder in dense or mixture-of-experts form.

The system packs text, pictures, and frame timestamps into one long sequence so the model can reason across 256K tokens and scale to 1M with chunked context.

Token cost depends on content and resolution: the examples shown include 11427 tokens for a full blog screenshot, 8 tokens for a tiny icon, and 1125 tokens for a poster, alongside a short video segment.
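A rough way to see why resolution drives token cost: if each vision token covers a fixed square of pixels, the count grows with image area. The sketch below is a back-of-the-envelope estimator, not Qwen3-VL's actual preprocessing. The function name and the `pixels_per_token_side` value are hypothetical, and the real pipeline may resize images or add special tokens, so it will not reproduce the figures above.

```python
import math

def estimate_vision_tokens(width_px: int, height_px: int,
                           pixels_per_token_side: int = 32) -> int:
    """Estimate vision tokens for an image, assuming one token per
    square tile of pixels_per_token_side x pixels_per_token_side pixels.
    The default tile size is an illustrative assumption."""
    cols = math.ceil(width_px / pixels_per_token_side)
    rows = math.ceil(height_px / pixels_per_token_side)
    return rows * cols

# Halving both dimensions cuts the estimate to roughly a quarter.
full = estimate_vision_tokens(1024, 2048)
half = estimate_vision_tokens(512, 1024)
print(full, half)
```

The practical takeaway is that downscaling a screenshot before sending it is the cheapest lever on context usage, as long as the fine detail you need survives.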

Native-resolution input keeps fine detail inside the Vision Encoder, and DeepStack fuses multi-level visual features to tighten image-text alignment and recover small cues.

Text–timestamp alignment ties frames to second-level time markers so the model can point to exact events in a clip rather than rely on coarse guesses.
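Here is a toy sketch of what text-timestamp alignment could look like at the sequence level: a textual time marker is placed in front of each frame's vision tokens. The marker format and the placeholder token names are hypothetical, chosen only for illustration.

```python
def build_video_sequence(frame_times_s: list[float],
                         tokens_per_frame: int) -> list[str]:
    """Interleave a timestamp marker with each frame's vision tokens.
    Token strings here are placeholders, not real model vocabulary."""
    sequence: list[str] = []
    for i, t in enumerate(frame_times_s):
        sequence.append(f"<{t:.1f} seconds>")  # hypothetical marker format
        sequence.extend(f"<frame{i}_tok{j}>" for j in range(tokens_per_frame))
    return sequence

print(build_video_sequence([0.0, 2.0], tokens_per_frame=2))
```

Because the time marker sits right next to the frame it describes, the model can answer "when did this happen" by reading a nearby token instead of inferring time from position alone.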

Interleaved-MRoPE assigns positions over time, width, and height, which stabilizes long video reasoning and improves spatial grounding across objects, viewpoints, and occlusions.
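To illustrate the idea, the sketch below gives every vision token a (time, height, width) position and then shows two ways of splitting rotary frequency slots among the three axes. Reading "interleaved" as a round-robin split is my assumption, and the helper names and slot counts are hypothetical, not taken from Qwen3-VL's code.

```python
from itertools import product

def grid_positions(frames: int, rows: int, cols: int) -> list[tuple[int, int, int]]:
    """One (t, h, w) position per vision token in a frames x rows x cols grid."""
    return list(product(range(frames), range(rows), range(cols)))

def chunked_axes(num_freqs: int) -> list[str]:
    """Contiguous blocks: all time slots first, then height, then width."""
    per = num_freqs // 3
    return ["t"] * per + ["h"] * per + ["w"] * (num_freqs - 2 * per)

def interleaved_axes(num_freqs: int) -> list[str]:
    """Round-robin: each axis gets slots spread across the whole range."""
    return ["thw"[i % 3] for i in range(num_freqs)]

print(grid_positions(2, 2, 2)[:3])
print(chunked_axes(6))
print(interleaved_axes(6))
```

With the round-robin layout, each axis sees both fast and slow rotary frequencies, which is one plausible reason it would help on long videos.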

The same backbone supports agent actions, where the language model consumes vision tokens, calls tools, and operates desktop or mobile interfaces for practical automation.

A single family spans edge to cloud with instruct and thinking editions, which lets teams pick latency or accuracy targets without changing the workflow.

That’s a wrap for today, see you all tomorrow.
