OpenAI launches ChatGPT Health, directly linking patient health data

ChatGPT Health launch, Anthropic’s $10B raise, 2025 GenAI traffic stats, China halts H200 orders, LLM text leaks, and Karpathy on pretraining with fixed compute.

Read time: 11min


(I publish this newsletter daily. Noise-free, actionable, applied-AI developments only.)

⚡In today’s Edition (8-Jan-2026):

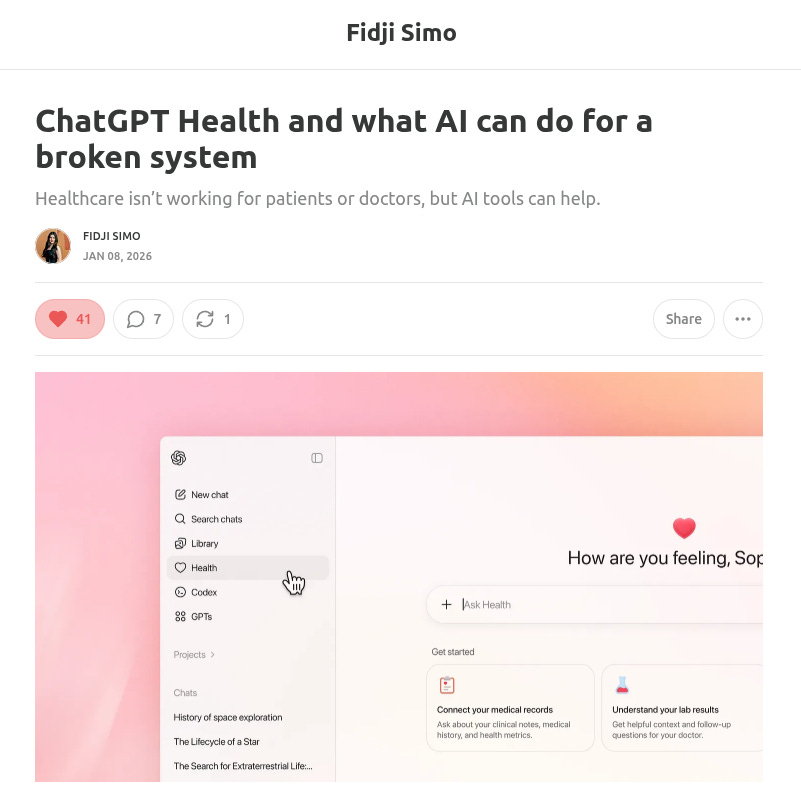

This should be absolutely great. OpenAI is launching ChatGPT Health, a separate space in ChatGPT for health and wellness conversations.

🚨 Anthropic is lining up another mega funding round of $10B at a $350B valuation, led by Singapore sovereign wealth fund GIC and Coatue.

📡 Gen AI chatbot website traffic for 2025.

🛠️ China is asking tech companies to temporarily halt Nvidia H200 chip orders.

👨🔧 New Stanford paper shows production LLMs can leak near exact book text, with Claude 3.7 Sonnet hitting 95.8%.

🧠 Andrej Karpathy’s new blog shows the best way to spend a fixed compute budget when pretraining small LLMs.

This should be absolutely great. OpenAI is launching ChatGPT Health, a separate space in ChatGPT for health and wellness conversations.

Users can link medical records and wellness apps so responses use their own context and timelines.

Is My Health Data Safe on ChatGPT Health?

According to OpenAI, yes: health chats are stored separately from other conversations, protected with added encryption and isolation, and not used for model training. The tool can explain lab results, prepare questions for appointments, and summarize patterns in diet, sleep, and activity.

The system is tuned and checked against clinician-written rubrics. OpenAI says 260 physicians across 60 countries reviewed outputs 600,000 times, and its HealthBench evaluation grades responses for safety and clarity. Access starts via a waitlist for a small group of users outside the European Economic Area (EEA), Switzerland, and the United Kingdom.

After users are onboarded, they can link a few different data sources. This includes electronic health records through a partnership with b.well, which pulls data from about 2.2M health care providers across the US.

They can also connect Apple Health on iOS devices, plus wellness and lifestyle apps like MyFitnessPal, Weight Watchers, Peloton, AllTrails, Instacart, and Function. From there, the experience feels more like a guided health check-in than a search on Dr. Google. People can upload lab reports as PDFs and get a plain-language summary, get help preparing questions for an upcoming appointment, or compare insurance options based on past claims and usage.

This new health feature really matters because it can save you a lot of time when you’re trying to understand what’s written in a report. Instead of immediately reaching out to a doctor just to figure out what the numbers mean, you can get a clearer idea of what’s going on first. If everything looks normal, it helps reduce unnecessary stress and lets you decide your next steps more confidently. And if something looks off, you’re better prepared for that doctor’s visit.

🚨 BREAKING: Anthropic is lining up another mega funding round of $10B at a $350B valuation, led by Singapore sovereign wealth fund GIC and Coatue.

That would put it in the same valuation tier as OpenAI, which reached about $500B in an employee share sale in 2025. Rounds this large usually go toward compute: Anthropic has already committed to buying $30B of Azure compute capacity as part of a Microsoft partnership that also included investments of up to $5B from Microsoft and up to $10B from Nvidia. According to multiple reports, Anthropic has also started IPO preparations, including hiring the law firm Wilson Sonsini, with a listing discussed for as early as 2026.

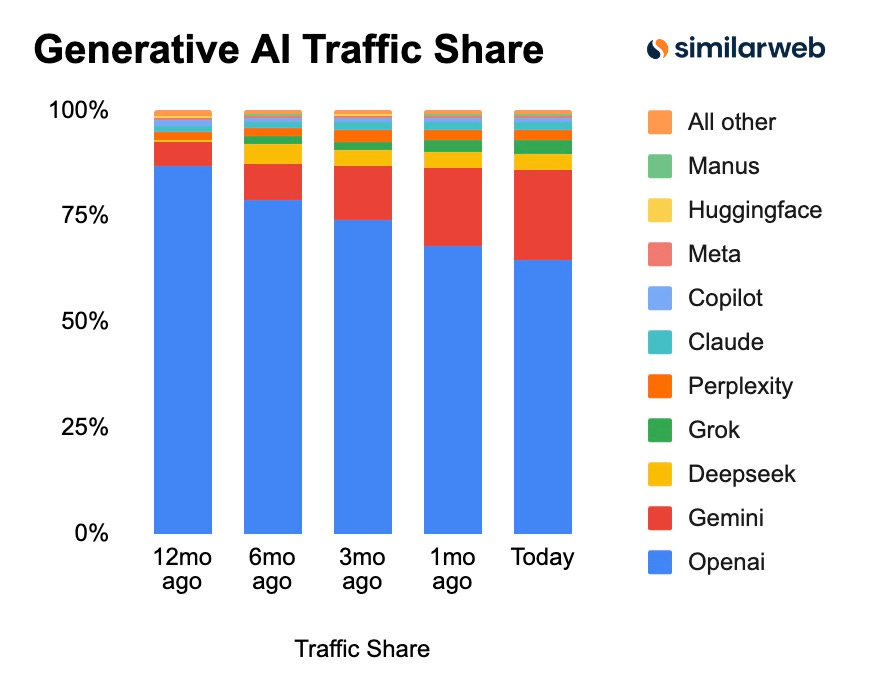

📡 Gen AI chatbot website traffic for 2025.

Gemini’s rise is a fantastic story of a classic distribution win: Google can place Gemini right where people already start, like search and its own products. What really stands out is that the “lost” share did not get split evenly across a bunch of smaller players.

ChatGPT dropped about 22.2 percentage points, Gemini gained about 15.8 points, and everyone else is still stuck in the low single digits. Also, because these traffic-share numbers are relative, ChatGPT can lose share and still grow in raw usage (i.e., absolute web visits) if total web visits to these tools are growing fast.
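To make the share-versus-volume point concrete, here is a small Python sketch. Only the 22.2-point drop comes from the text above; the 80% starting share and 50% growth in total chatbot visits are purely hypothetical placeholders, not figures from the traffic data.

```python
def _end_share(start_share: float, drop_points: float) -> float:
    """Share after losing `drop_points` percentage points (shares as fractions)."""
    end_share = start_share - drop_points / 100
    if end_share <= 0:
        raise ValueError("the share drop is larger than the starting share")
    return end_share


def absolute_visit_change(start_share: float, drop_points: float, market_growth: float) -> float:
    """Relative change in one chatbot's absolute visits.

    start_share   : starting traffic share as a fraction (hypothetical, e.g. 0.80)
    drop_points   : share lost, in percentage points (e.g. 22.2)
    market_growth : growth of total chatbot visits as a fraction (0.50 = +50%)
    """
    end_share = _end_share(start_share, drop_points)
    # visits = share * total, so new/old = end_share * (1 + growth) / start_share
    return end_share * (1 + market_growth) / start_share - 1


def breakeven_market_growth(start_share: float, drop_points: float) -> float:
    """Total-visit growth at which absolute visits stay flat despite the share loss."""
    # Solve end_share * (1 + g) = start_share for g.
    return start_share / _end_share(start_share, drop_points) - 1


# Hypothetical inputs: 80% starting share, 22.2-point drop, total visits +50%.
print(f"Change in absolute visits: {absolute_visit_change(0.80, 22.2, 0.50):+.1%}")
print(f"Market growth needed just to stay flat: {breakeven_market_growth(0.80, 22.2):.1%}")
```

The break-even function is the useful sanity check: as long as the whole category grows faster than that rate, a shrinking share still means more visits in absolute terms.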

🛠️ China is asking tech companies to temporarily halt Nvidia H200 chip orders

China has asked some tech firms to halt new orders for Nvidia’s H200 AI chips while Beijing decides whether, and under what conditions, they can be used.

The move is reportedly meant to slow stockpiling and steer buyers toward domestic AI chips.

Nvidia CEO Jensen Huang said at CES 2026 that demand in China is very strong. For context, the U.S. recently approved H200 exports to China again, tied to a 25% revenue-share payment, but each shipment still needs an export license, with no set timeline for approvals.

This kind of stop-start policy is a long-running problem, because training plans depend on predictable access to the same GPU class.

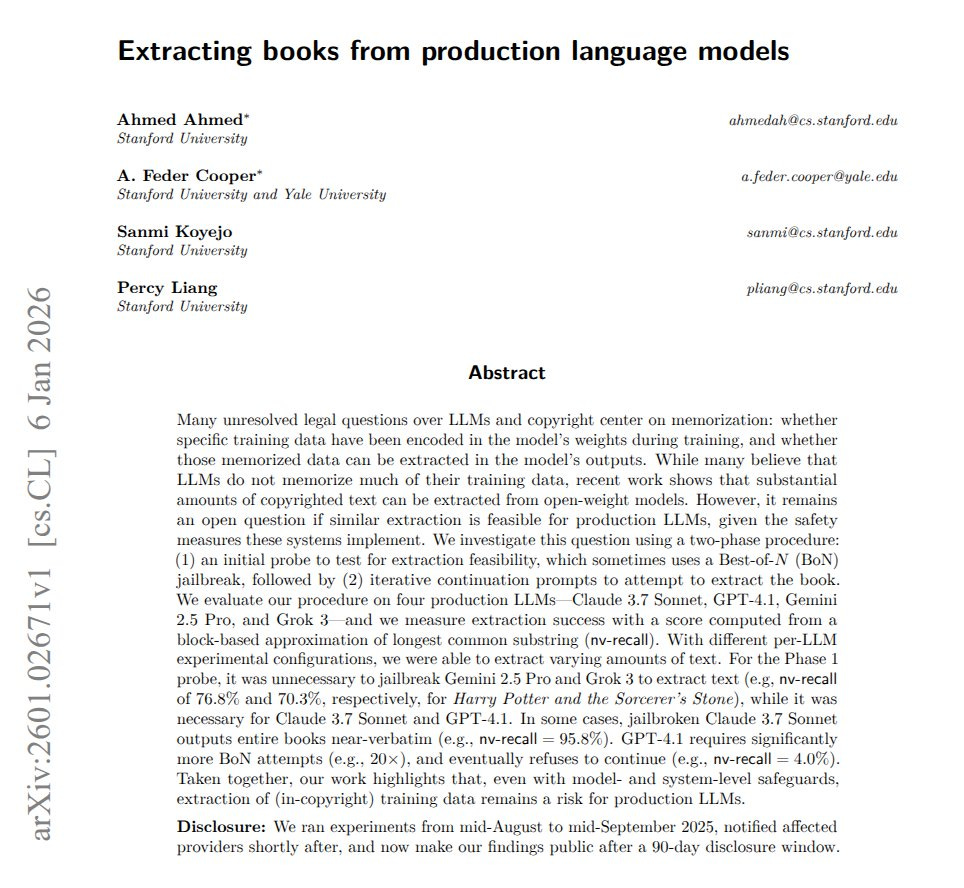

👨🔧 New Stanford paper shows production LLMs can leak near exact book text, with Claude 3.7 Sonnet hitting 95.8%.

The big deal is that many companies and courts assume production LLMs are safe because they have filters, refusals, and safety layers that stop copying.

This paper directly tests that assumption and shows it is false in multiple real systems. The authors are not guessing or theorizing; they actually pull long, near-exact book passages out of models people use today.

A production LLM is the kind people use through a company’s app, and it can memorize chunks of books that appeared in its training data. The authors test leakage by giving the model a book’s opening words, asking for the next lines, and then sending short follow-up prompts until the model stops.
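The probing loop described above can be sketched roughly as follows. This is a minimal illustration, not the authors’ code: `chat` is a hypothetical stand-in for any chat-completion client, and the prompt wording, the refusal heuristic, and the stopping rules are all assumptions.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]
# Hypothetical stand-in for a production chat API: conversation in, reply text out.
ChatFn = Callable[[List[Message]], str]


def looks_like_refusal(reply: str) -> bool:
    # Crude, assumed heuristic; a real study would need something more robust.
    return reply.lower().startswith(("i can't", "i cannot", "sorry"))


def extract_continuation(chat: ChatFn, opening: str, max_turns: int = 20,
                         follow_up: str = "Continue.") -> str:
    """Seed with a book's opening words, then keep asking for the next lines."""
    messages: List[Message] = [
        {"role": "user", "content": f"Continue this text exactly:\n\n{opening}"}
    ]
    pieces: List[str] = []
    for _ in range(max_turns):
        reply = chat(messages).strip()
        # Stop when the model goes quiet, refuses, or starts repeating itself.
        if not reply or looks_like_refusal(reply) or (pieces and reply == pieces[-1]):
            break
        pieces.append(reply)
        # Keep the full conversation so each follow-up continues from the last reply.
        messages.append({"role": "assistant", "content": reply})
        messages.append({"role": "user", "content": follow_up})
    return "\n".join(pieces)
```

In practice you would wrap a real provider client in `chat`; the loop itself does not depend on which model sits behind it.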

When the model refuses, they try many small wording variations of the prompt and keep the first one that gets the model to continue the text. They run this on 4 production systems across 13 books and score the output with near-verbatim recall, which only counts long, continuous matches with the original text.

That matters because safety filters, meaning built-in rules that try to block copying, can still miss memorized passages in normal use.
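A simplified stand-in for a near-verbatim recall score might count only long, contiguous word-level matches against the reference passage. This is an assumption for illustration, not the paper’s exact definition: the 20-word minimum block length and the use of Python’s standard `difflib.SequenceMatcher` are illustrative choices.

```python
from difflib import SequenceMatcher


def near_verbatim_recall(reference: str, generated: str, min_block_words: int = 20) -> float:
    """Fraction of reference words covered by long, contiguous matches.

    Simplified stand-in for the paper's metric: word-level matching via
    difflib, counting only matched runs of at least `min_block_words` words.
    """
    ref_words = reference.split()
    gen_words = generated.split()
    if not ref_words:
        return 0.0
    # autojunk=False so frequent words in long texts are not silently ignored.
    matcher = SequenceMatcher(a=ref_words, b=gen_words, autojunk=False)
    covered = sum(
        block.size
        for block in matcher.get_matching_blocks()
        if block.size >= min_block_words  # short, scattered overlaps do not count
    )
    return covered / len(ref_words)


# Toy usage with synthetic "words", not real book text.
ref = " ".join(f"w{i}" for i in range(100))
gen = " ".join([f"w{i}" for i in range(50)] + ["x"] + [f"w{i}" for i in range(60, 65)])
print(near_verbatim_recall(ref, gen))  # 0.5: only the 50-word run counts
```

The minimum block length is what separates memorization from coincidence: a 5-word overlap can happen by chance, while a long unbroken run of the same words is hard to explain without memorization.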

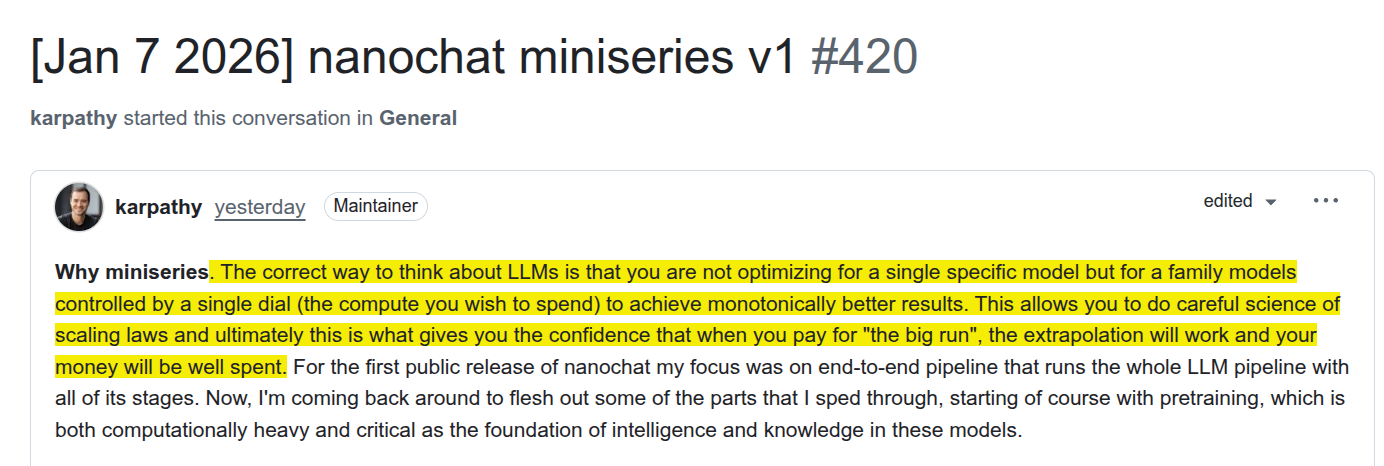

🧠 Andrej Karpathy’s new blog shows the best way to spend a fixed compute budget when pretraining small LLMs.

He asked a simple question: if you have a fixed amount of compute, what is the best way to train a small language model? Instead of guessing, he ran many small training runs on a single machine with 8 H100 GPUs.

He trained 11 models with different sizes. Each run used the same amount of compute, the same batch size, and the same data. This makes the results fair to compare.

He measured quality with a single score averaged across 22 tasks. He also converted training time into dollars, using $24 per hour for an 8-GPU machine. This lets you see cost versus quality in plain terms.

The core finding is simple. For any fixed compute budget, there is a sweet spot for model size. If the model is too small, it cannot learn enough. If it is too big, you run out of data or steps before it learns well.

As you increase compute, the best number of parameters and the best number of training tokens grow together, and their ratio stays roughly constant. In these runs, a good rule was about 8 training tokens per parameter.
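Why do both grow together? A little algebra shows it. As an assumption not stated in the summary above, take the common approximation that training a dense transformer costs about $C \approx 6ND$ FLOPs ($N$ parameters, $D$ training tokens), and fix $D = rN$ with $r \approx 8$:

$$
\begin{aligned}
C &\approx 6ND = 6rN^{2} \\
N_{\text{opt}} &\approx \sqrt{\frac{C}{6r}}, \qquad D_{\text{opt}} = rN_{\text{opt}} \approx \sqrt{\frac{rC}{6}} \\
\frac{D_{\text{opt}}}{N_{\text{opt}}} &= r \approx 8 \quad \text{for every budget } C
\end{aligned}
$$

So under this approximation both the model size and the token count scale as $\sqrt{C}$: quadrupling compute roughly doubles each. As illustrative arithmetic only (not a configuration from the blog), $C = 10^{19}$ FLOPs with $r = 8$ gives $N_{\text{opt}} \approx \sqrt{10^{19}/48} \approx 4.6 \times 10^{8}$ parameters and $D_{\text{opt}} \approx 3.7 \times 10^{9}$ tokens.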

This rule gives a dead simple recipe. Pick a model size, set training tokens to 8 times parameters, set batch size, then run for the number of steps that hits your compute limit. You get close to the best possible quality for that budget.
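The recipe translates almost line for line into a small planning helper. This is a sketch, not Karpathy’s code: the 524,288-token batch and 100M-parameter model are hypothetical inputs, and the FLOP count uses the standard 6·N·D approximation, which is an assumption here.

```python
import math
from dataclasses import dataclass

TOKENS_PER_PARAM = 8  # the ~8x tokens-to-parameters rule from these runs


@dataclass
class RunPlan:
    params: int
    tokens: int
    steps: int
    train_flops: float


def plan_run(params: int, batch_tokens: int,
             tokens_per_param: float = TOKENS_PER_PARAM) -> RunPlan:
    """Turn a chosen model size into a token budget and optimizer step count."""
    tokens = int(tokens_per_param * params)
    # Round up so the run covers the full token budget.
    steps = math.ceil(tokens / batch_tokens)
    # Assumption: standard C ~= 6 * N * D estimate for a dense transformer.
    train_flops = 6.0 * params * steps * batch_tokens
    return RunPlan(params, tokens, steps, train_flops)


# Hypothetical example: a 100M-parameter model with 2**19 = 524,288 tokens per batch.
plan = plan_run(100_000_000, 2**19)
print(plan)
```

If the resulting `train_flops` exceeds your budget, shrink `params` and re-plan; keeping the token ratio fixed is what keeps you near the sweet spot.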

Why a developer should care: you can plan projects with real numbers. You can estimate model size, data needs, runtime, and cost before you start, and avoid wasting time on models that are too big or too small for your hardware.
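As a back-of-envelope planner, runtime and cost might be estimated like this. Only the 8x ratio and the $24/hour rate for an 8-GPU machine come from the text; the ~989 TFLOP/s dense BF16 peak per H100, the 40% model FLOPs utilization, and the 6·N·D FLOP approximation are assumptions you should replace with your own measurements.

```python
GPUS_PER_NODE = 8
DOLLARS_PER_NODE_HOUR = 24.0  # the $24/hour rate for an 8-GPU machine used above
# Assumptions (not from the blog): ~989 TFLOP/s dense BF16 peak per H100 SXM,
# and 40% model FLOPs utilization (MFU).
PEAK_FLOPS_PER_GPU = 989e12
ASSUMED_MFU = 0.40


def estimate_run(params: float, tokens_per_param: float = 8.0,
                 mfu: float = ASSUMED_MFU) -> tuple[float, float]:
    """Return (wall-clock hours, dollars) for one pretraining run on one node."""
    tokens = tokens_per_param * params
    train_flops = 6.0 * params * tokens  # C ~= 6 * N * D approximation
    effective_flops_per_sec = GPUS_PER_NODE * PEAK_FLOPS_PER_GPU * mfu
    hours = train_flops / effective_flops_per_sec / 3600
    return hours, hours * DOLLARS_PER_NODE_HOUR


for n in (100e6, 300e6, 1e9):  # hypothetical model sizes
    hours, dollars = estimate_run(n)
    print(f"{n / 1e6:>6.0f}M params: ~{hours:.2f} h, ~${dollars:,.0f}")
```

Because tokens are tied to parameters, compute grows with the square of model size in this sketch: doubling the model roughly quadruples both time and cost, which is why picking the size first matters.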

Why an AI consumer should care: this gives you a sanity check on claims. If someone says they trained a model cheaply, you can ask about model size, tokens, and training time, and compare them to these simple curves. The numbers should make sense together.

That is the whole point. Small, repeatable experiments, 1 clear score, and a recipe you can use today.

That’s a wrap for today, see you all tomorrow.