🗞️ OpenAI lifts lid on Codex engine with technical deep dive.

Inside OpenAI’s Codex agent loop, Claude in Excel, DeepMind’s new breakthrough, energy-based AI from Logical Intelligence, math in AI, top lab survey, and Fei-Fei Li’s $5B raise.

Read time: 11 min

(I publish this newsletter daily. Noise-free, actionable, applied-AI developments only.)

⚡In today’s Edition (24-Jan-2026):

🗞️ OpenAI published engineering deep dive on how the Codex CLI agent loop works under the hood.

🏆 Claude is now available directly in Excel 🚨

📡 A groundbreaking research achievement by GoogleDeepMind

🛠️ Logical Intelligence introduces first energy-based reasoning AI Model, and brings Yann LeCun to leadership as founding chair of their Technical Research Board

👨🔧 Massive news for AI’s Math power.

🧠 Great and super exhaustive survey paper from all the top labs, including GoogleDeepMind, AIatMeta, amazon.

🧑🎓 Fei-Fei Li’s World Labs reportedly raising funding at $5B valuation, per Bloomberg.

🗞️ OpenAI published engineering deep dive on how the Codex CLI agent loop works under the hood.

Explains how Codex actually builds prompts, calls Responses API, caches, and compacts context.

Reveals the exact mechanics that make a coding agent feel fast and stable, like relying on exact-prefix prompt caching to avoid quadratic slowdowns and using /responses/compact with encrypted carryover state to keep long sessions running inside the context window. Codex CLI is software that lets an AI model help change code on a computer.

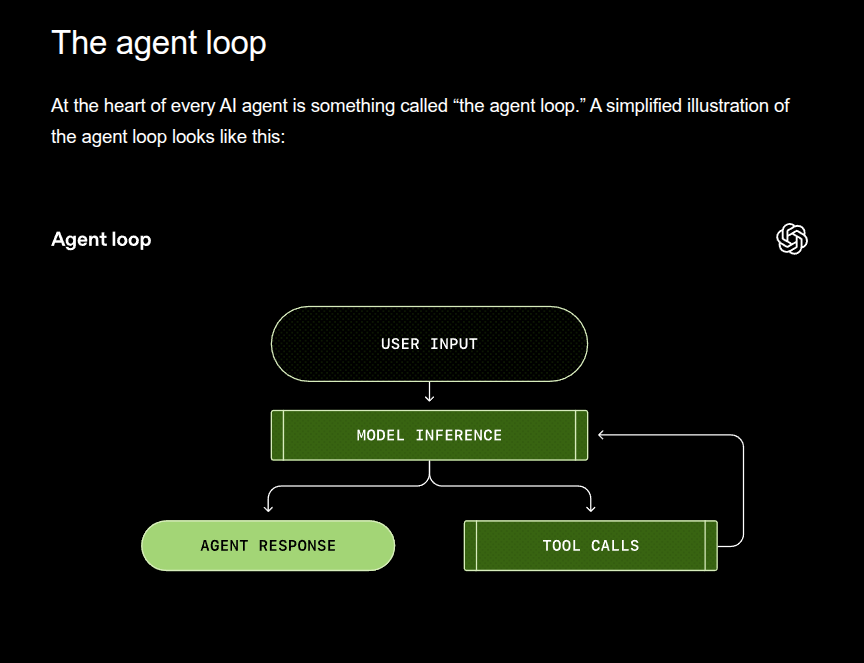

Codex is a harness that loops: user input → model inference → tool calls → observations → repeat, until an assistant message ends the turn.

The “agent loop” is the product

So the model is the brain that writes text and tool requests, and the harness is the body that does the actions and keeps the conversation state tidy. In Codex CLI, the harness is the CLI app logic that builds the prompt, calls the Responses API, runs things like shell commands, captures the results, and repeats.

It does the work by looping between model output and tool runs until a turn ends. The main trick is keeping prompts cache-friendly and compact enough for the context window, meaning the max tokens per inference call.

Codex builds a Responses API request from system, developer, user, and assistant role messages, then streams back the sampled output text. If the model emits a function call like shell, Codex runs it and appends the output into the next request.
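To make the loop concrete, here is a minimal sketch of a harness of this kind. It is not Codex's code: `fake_model`, `run_shell`, `run_turn`, and the simplified message dictionaries (including the `tool` role) are all hypothetical stand-ins, and the real Responses API payload looks different.

```python
import subprocess
import sys

def fake_model(messages):
    """Hypothetical stand-in for a model call: request one command, then answer."""
    tool_outputs = [m for m in messages if m["role"] == "tool"]
    if not tool_outputs:
        # Ask the harness to run a command (here: a tiny Python one-liner).
        command = [sys.executable, "-c", "print('hello')"]
        return {"type": "function_call", "name": "shell", "command": command}
    return {"type": "message",
            "content": "The command printed: " + tool_outputs[-1]["content"].strip()}

def run_shell(command):
    """Run the requested command and capture what it printed."""
    result = subprocess.run(command, capture_output=True, text=True, timeout=30)
    return result.stdout + result.stderr

def run_turn(user_input, model=fake_model, max_steps=8):
    """Loop: model output -> tool run -> observation, until an assistant message."""
    messages = [{"role": "user", "content": user_input}]
    for _ in range(max_steps):
        item = model(messages)
        if item["type"] == "message":
            # An assistant message ends the turn.
            messages.append({"role": "assistant", "content": item["content"]})
            return messages
        # Otherwise it is a tool call: run it and append the output,
        # so the next request carries the observation.
        output = run_shell(item["command"])
        messages.append({"role": "assistant", "content": "call " + item["name"]})
        messages.append({"role": "tool", "content": output})
    raise RuntimeError("turn did not finish within max_steps")

transcript = run_turn("say hello")
print(transcript[-1]["content"])
```

Note how the message list only ever grows by appending. That property is what the caching discussion relies on.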

Before the initial user message, Codex injects sandbox rules, environment context, and aggregated project guidance, with a default 32KiB scan cap. Because every iteration resends a longer JSON payload, naive looping is quadratic, so exact-prefix prompt caching keeps compute closer to linear on cache hits.
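A quick back-of-the-envelope model shows why this matters. The sketch below is a toy cost model under loud assumptions: every iteration adds the same number of tokens, and a cache hit means only the new tokens need processing (real systems still pay some cost for cached tokens). The function names and the 100 x 500 numbers are made up for illustration.

```python
def tokens_processed(iterations, tokens_per_step, cached):
    """Total prompt tokens processed across a session (toy cost model)."""
    total = 0
    prompt_len = 0
    for _ in range(iterations):
        prompt_len += tokens_per_step
        # Without a cache the whole prompt is reprocessed every time;
        # with an exact-prefix hit only the newly appended part is.
        total += tokens_per_step if cached else prompt_len
    return total

def is_cache_hit(previous_prompt, new_prompt):
    """Exact-prefix rule: the new prompt must start with the old one, unchanged."""
    return new_prompt[:len(previous_prompt)] == previous_prompt

no_cache = tokens_processed(100, 500, cached=False)   # 2,525,000 tokens
with_cache = tokens_processed(100, 500, cached=True)  # 50,000 tokens
print(no_cache, with_cache, no_cache / with_cache)

old = ["system", "tools_v1", "user"]
print(is_cache_hit(old, old + ["assistant"]))                            # True
print(is_cache_hit(old, ["system", "tools_v2", "user", "assistant"]))    # False
```

The second check is the reason a mid-conversation tool or config change is expensive: it alters an early part of the prompt, so nothing after it can be reused.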

Codex is designed around stateless Responses API calls

Codex avoids stateful shortcuts like previous_response_id to stay stateless for Zero Data Retention (ZDR), which makes tool or config changes a real cost. When tokens pile up, Codex calls /responses/compact and replaces the full history with a shorter item list plus encrypted carryover state instead of the older manual /compact summary.
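The general shape of compaction can be sketched in a few lines. To be clear, this is not the `/responses/compact` request or response format, and it involves no encryption: `estimate_tokens`, `compact`, the 4-characters-per-token heuristic, and the summary item are all assumptions made up for illustration.

```python
def estimate_tokens(items):
    """Very rough token estimate (assumption: about 4 characters per token)."""
    return sum(len(item["content"]) // 4 + 1 for item in items)

def default_summary(old_items):
    """Placeholder summarizer; a real system would carry over far richer state."""
    return "[%d earlier items compacted]" % len(old_items)

def compact(items, limit, keep_recent=2, summarize=default_summary):
    """Replace older history with one short carryover item once over the limit."""
    if estimate_tokens(items) <= limit or len(items) <= keep_recent:
        return items
    old, recent = items[:-keep_recent], items[-keep_recent:]
    carryover = {"role": "assistant", "content": summarize(old)}
    return [carryover] + recent

history = [{"role": "user", "content": "x" * 400} for _ in range(10)]
shorter = compact(history, limit=500)
print(len(history), "->", len(shorter), "items")
print(estimate_tokens(history), "->", estimate_tokens(shorter), "tokens (estimated)")
```

One trade-off is visible even in this toy: the compacted list no longer shares a prefix with the old prompt, so the first request after compaction cannot reuse the earlier cache.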

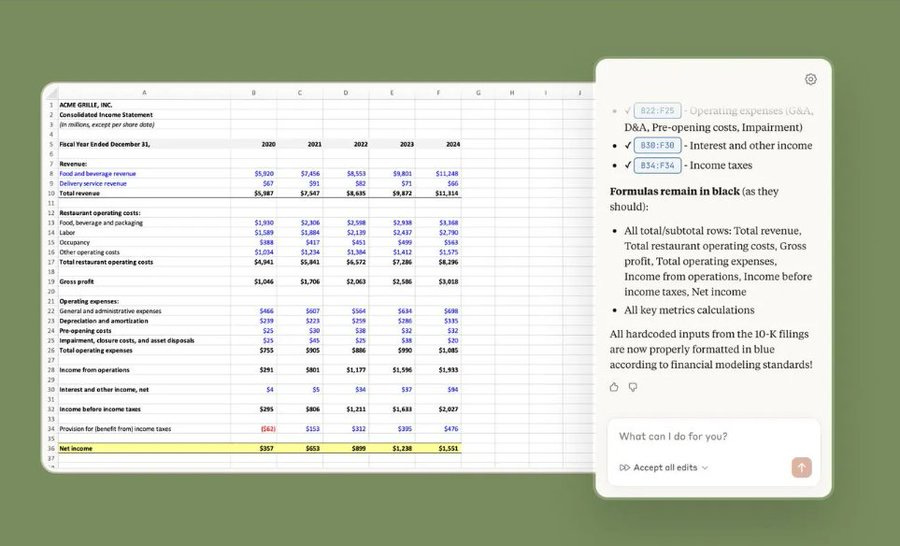

🚨 Claude is now available directly in Excel

Anthropic expands Claude AI access with Excel integration for Pro users. Claude now accepts multiple files via drag and drop, avoids overwriting your existing cells, and handles longer sessions with auto compaction.

This is a full-stack spreadsheet assistant:

Claude sits in your spreadsheet sidebar, scans your entire workbook—formulas, sheets, links—and lets you actually chat with it.

You can ask anything

Experiment without fear, e.g. with formulas. Claude safely updates everything, keeps formulas intact, and tells you what changed.

Instant error fixes. When you run into #REF!, #VALUE!, or circular reference errors, Claude spots and explains them instantly, and helps you fix them.

Build smarter models. e.g. SaaS valuation/projection, CAC payback, or retention analysis from CSVs. Claude can assemble or complete the model while keeping the formulas and logic solid.

📡 A groundbreaking research achievement by GoogleDeepMind

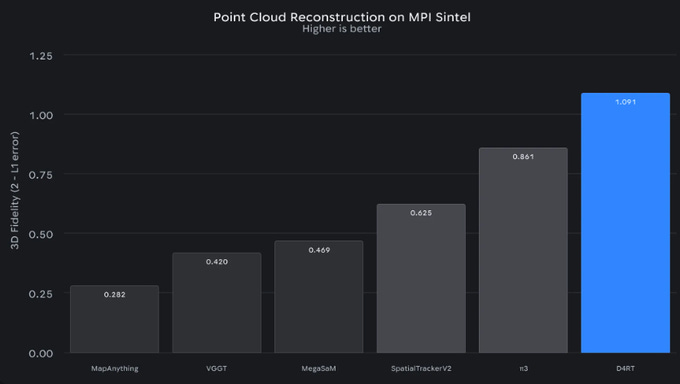

This new unified AI model connects the 3 spatial dimensions with the dimension of time, giving AI vision true 4-D awareness for the first time. The big deal is that 1 model can do fast 3D tracking and 4D reconstruction on demand, which fits apps like AR and robotics that only need answers for the points they care about.

Most 3D-from-video systems act like they have to rebuild the whole world for every frame, so they are slow and they break when stuff moves or gets hidden. D4RT flips the job into a simple game of questions and answers.

First it watches the whole video once and stores what it learned in a shared “memory” about the scene across time. Then, instead of rebuilding everything, it answers small questions like “this exact pixel in this frame, where is it in 3D space” and “where will that same point be a moment later”.

Because each question is separate, the computer can answer thousands at the same time, which is why it is so fast: 18 to 300 times faster than previous methods. It also uses a tiny local image patch around the pixel, so it can tell apart nearby edges and textures, which helps it stay locked on the right point.
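The "encode once, then answer independent questions" pattern is easy to show with a toy. Everything below is a made-up stand-in, not the D4RT architecture: the real model uses a learned encoder and decoder, while this sketch fakes the scene memory with a per-frame depth and a constant velocity, and answers queries with plain pinhole-camera unprojection. `Query`, `encode_video`, and `answer` are hypothetical names.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Query:
    u: float           # pixel column in the source frame
    v: float           # pixel row in the source frame
    src_frame: int     # frame where the pixel is observed
    target_frame: int  # time at which the 3D position is wanted

def encode_video(num_frames):
    """Toy stand-in for the one-time pass that builds the shared scene memory."""
    return {
        "focal": 100.0, "cx": 32.0, "cy": 32.0,                # toy camera
        "depth": [2.0 + 0.1 * t for t in range(num_frames)],   # toy depth per frame
        "velocity": (0.05, 0.0, 0.0),                          # toy scene motion
    }

def answer(memory, q):
    """Answer one query using only the shared memory, no other query needed."""
    z = memory["depth"][q.src_frame]
    x = (q.u - memory["cx"]) * z / memory["focal"]  # pinhole unprojection
    y = (q.v - memory["cy"]) * z / memory["focal"]
    dt = q.target_frame - q.src_frame
    vx, vy, vz = memory["velocity"]
    return (x + vx * dt, y + vy * dt, z + vz * dt)

memory = encode_video(10)             # done once per video
queries = [Query(32, 32, 0, 0),       # where is this pixel in 3D right now?
           Query(132, 32, 0, 2)]      # where will that point be 2 frames later?
points = [answer(memory, q) for q in queries]  # each answer is independent
print(points)
```

Because `answer` reads the memory and nothing else, the list comprehension could be swapped for a batched or parallel call without changing any result. That independence is what the speed claim rests on.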

That setup makes tracking through occlusion easier, because the model can use the video memory to keep predicting where the point is even when it is not visible for a bit. In the paper, this shows up as much higher throughput for long 3D tracks, and lower 3D error on a standard moving-scene test.

On 1 A100 GPU, it can produce 550 full-video 3D tracks at 60FPS, while SpatialTrackerV2 produces 29. It leans on strong pretraining and has to stitch chunks for very long videos, but the query setup scales cleanly to what an app asks for.

D4RT outperforms previous methods across a wide spectrum of 4D reconstruction tasks.

D4RT processed a one-minute video in roughly five seconds on a single TPU chip. Previous state-of-the-art methods could take up to ten minutes for the same task — an improvement of 120x.
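The headline ratios are easy to sanity-check from the numbers quoted in this section. The snippet below only redoes that arithmetic; note that the two comparisons come from different hardware (A100 GPU vs TPU), so they are not directly comparable to each other.

```python
def speedup(baseline, improved):
    """How many times larger the baseline figure is than the improved one."""
    return baseline / improved

# One-minute video: up to ten minutes before vs roughly five seconds now.
video_speedup = speedup(10 * 60, 5)

# Full-video 3D tracks at 60FPS on 1 A100: 550 vs 29 for SpatialTrackerV2.
track_ratio = 550 / 29

print(video_speedup)          # 120.0
print(round(track_ratio, 1))  # 19.0
```

The track ratio of about 19x sits at the low end of the quoted 18 to 300 times range.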

🛠️ Logical Intelligence introduces first energy-based reasoning AI Model, and brings Yann LeCun to leadership as founding chair of their Technical Research Board

The 6-month-old Silicon Valley start-up unveiled an “energy based” model called Kona and says it is more accurate and uses less power than large language models like OpenAI’s GPT-5 and Google’s Gemini. It is also starting a funding round that targets a $1bn-$2bn valuation and has named LeCun chair of its technical research board.

Most large language models answer by predicting the next token, which can sound fluent while still drifting into confident mistakes. Kona is an “energy-based reasoning model” (EBRM) that verifies and optimizes solutions by scoring against constraints, finding the lowest “energy” (most consistent) outcome. It’s non-autoregressive, producing complete traces without sequential generation, which the company says reduces hallucinations. It focuses on trustworthy, math-grounded reasoning for high-stakes applications where LLMs fail, emphasizing safety, efficiency, and constraint enforcement in logic-heavy tasks like puzzles or proofs.

How Kona operates

It’s a non-autoregressive “energy-based reasoning model” (EBRM), meaning it doesn’t generate outputs sequentially (like LLMs do token-by-token) but instead produces complete reasoning traces simultaneously. Here’s how it works step-by-step:

Input Conditioning: It takes a problem, constraints, and optional targets (e.g., a desired outcome like a proof goal or spec) as inputs. These condition the model directly, unlike LLMs which rely on probabilistic sampling.

Energy Function Scoring: Kona learns an energy function that assigns a scalar “energy” score to entire reasoning traces (partial or complete). Low energy indicates high consistency with constraints and objectives; high energy flags inconsistencies, violations, or errors. This global scoring evaluates end-to-end quality, allowing the model to assess long-horizon coherence without degrading over extended traces.

Optimization as Reasoning: Reasoning is reframed as an optimization problem. The model searches for the lowest-energy solution by minimizing the energy function, often through iterative refinement. It can revise any part of a trace mid-process, using dense feedback to localize failures (e.g., “this step violates constraint X”) and guide corrections.

Continuous Latent Space: Unlike discrete token-based LLMs, Kona works in a continuous space with dense vector representations. This enables precise, gradient-based edits and efficient local refinements without regenerating entire sequences.

Output: The final low-energy trace represents a valid, constraint-satisfying solution. For example, in Sudoku, it maps allowable moves and finds a puzzle completion that minimizes energy (i.e., maximizes rule adherence).
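The steps above can be illustrated with a toy version of "reasoning as energy minimization" on a 4x4 Sudoku. This is emphatically not Kona: the energy here is a hand-written count of rule violations rather than a learned function, and the search is greedy cell-by-cell refinement over a discrete grid rather than gradient-based edits in a continuous latent space. All names (`energy`, `minimize`, `PUZZLE`) are made up for the sketch.

```python
from itertools import product

N = 4  # 4x4 Sudoku with 2x2 boxes; 0 marks a blank cell

def units(grid):
    """All rows, columns, and 2x2 boxes of the grid."""
    rows = [list(r) for r in grid]
    cols = [[grid[r][c] for r in range(N)] for c in range(N)]
    boxes = [[grid[br + dr][bc + dc] for dr in range(2) for dc in range(2)]
             for br in (0, 2) for bc in (0, 2)]
    return rows + cols + boxes

def energy(grid):
    """Scalar score for a whole candidate: number of duplicated values (0 = valid)."""
    return sum(len(u) - len(set(u)) for u in units(grid))

def minimize(puzzle, max_sweeps=10):
    """Start from a full (wrong) guess and revise blank cells to lower the energy."""
    blanks = [(r, c) for r, c in product(range(N), repeat=2) if puzzle[r][c] == 0]
    grid = [[v if v else 1 for v in row] for row in puzzle]  # complete initial guess
    for _ in range(max_sweeps):
        if energy(grid) == 0:
            break
        for r, c in blanks:
            best_value, best_energy = grid[r][c], energy(grid)
            for v in range(1, N + 1):
                grid[r][c] = v
                e = energy(grid)
                if e < best_energy:
                    best_value, best_energy = v, e
            grid[r][c] = best_value  # keep the lowest-energy revision
    return grid

PUZZLE = [[1, 0, 3, 4],
          [0, 4, 1, 2],
          [2, 1, 4, 0],
          [4, 3, 0, 1]]

solution = minimize(PUZZLE)
print(energy(solution), solution)
```

The point of the toy is the shape of the computation: a complete candidate always exists, a single number says how wrong it is, and any part of it can be revised at any time. Greedy refinement like this can get stuck on harder puzzles, which is one reason the real approach is described as using dense feedback and gradient-based edits.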

This mechanism draws from physics-inspired principles, where energy minimization finds stable states, similar to how natural systems settle into low-energy configurations. Overall, Logical Intelligence views EBMs as a path beyond LLM limitations, enabling AI that “knows” rather than guesses, with applications in verifiable, efficient reasoning. This aligns with LeCun’s long-standing advocacy for objective-driven AI via energy minimization, as opposed to autoregressive prediction.

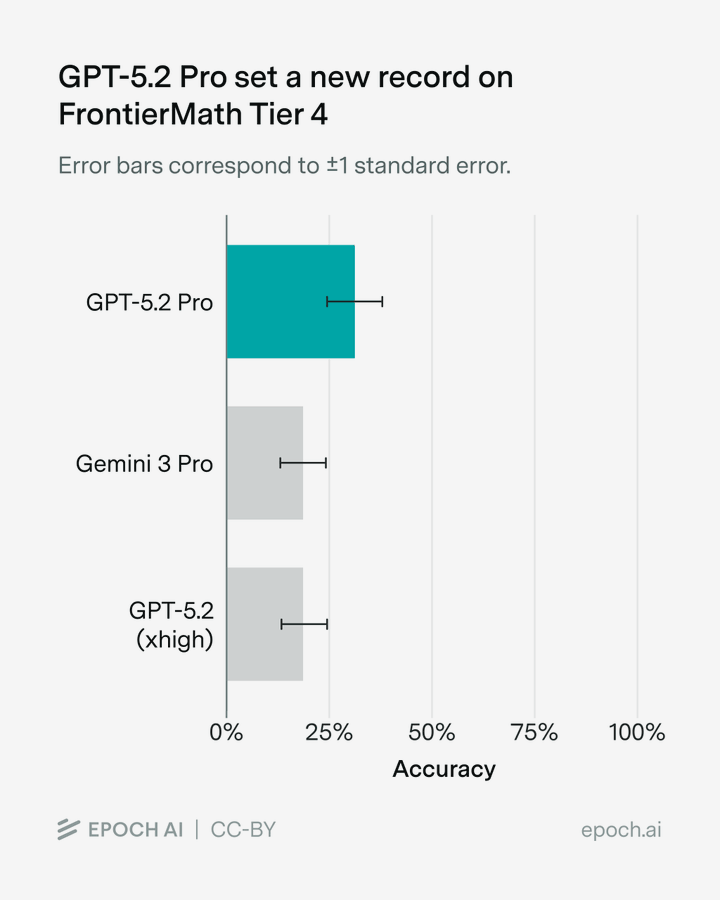

👨🔧 Massive news for AI’s Math power.

GPT-5.2 Pro set a new record on FrontierMath Tier 4.

Solved 15 of 48 problems for 31% accuracy, up from the prior best 19%. FrontierMath Tier 4 has been the toughest math benchmark for AI so far.

On this run, GPT-5.2 Pro also did better on the held-out subset than the non-held-out subset, which points away from overfitting. Tier 4 has been incredibly tough for models, since only 13 of its 48 problems had ever been solved before this run.

To sense the difficulty of this test, these are not short calculation puzzles, and several newly-solved items came from topology, geometry, number theory, and analytic combinatorics. Epoch reports it ran the test manually on ChatGPT because its API scaffold hit timeouts.

GPT-5.2 Pro solved 10 of 20 held-out problems for 50% versus 5 of 28 non-held-out problems for 18%, so there was no evidence of score inflation from memorized solutions. One reviewer said it recognized the geometry of a polynomial-defined surface and still solved a harder point-cloud version.
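The percentages quoted for this run are consistent with each other, which a few lines of arithmetic confirm. The snippet only uses counts stated above; `pct` is a throwaway helper.

```python
def pct(solved, total):
    """Share of problems solved, as a whole-number percentage."""
    return round(100 * solved / total)

overall = pct(15, 48)       # 31
held_out = pct(10, 20)      # 50
non_held_out = pct(5, 28)   # 18

# The two subsets add up to the full Tier 4 set.
assert 10 + 5 == 15 and 20 + 28 == 48

print(overall, held_out, non_held_out)
```

If memorized solutions were inflating the score, the non-held-out subset would be expected to come out higher, not lower.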

A remaining failure pattern is making a plausible assumption without proving it, which can be the whole crux in research math. IMO, we are not far from saturating this one too, the toughest math benchmark so far.

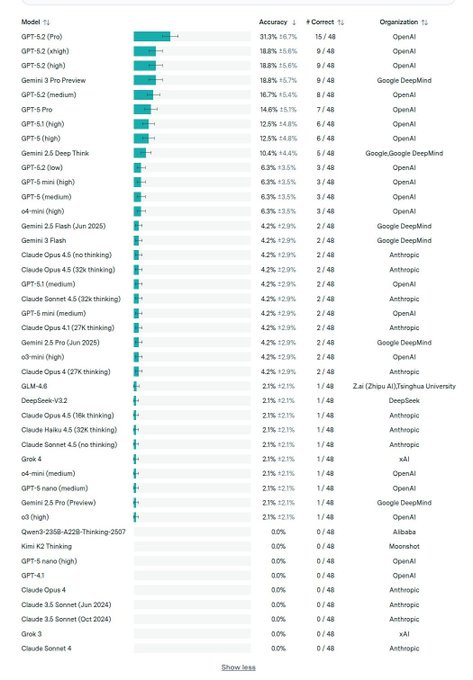

FrontierMath Leaderboard

The leaderboard is extremely top-heavy: GPT-5.2 Pro sits at 31% while all the top open-source frontier models cluster around 0%.

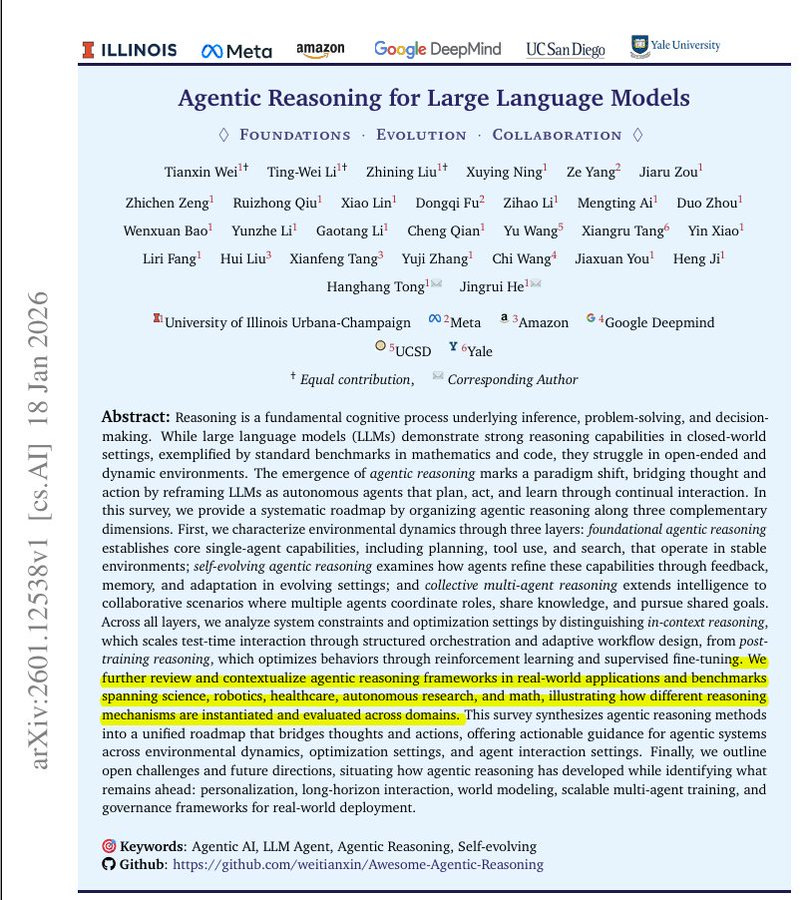

🧠 Great and super exhaustive survey paper from all the top labs, including GoogleDeepMind, AIatMeta, amazon.

Explains how LLMs become agents that plan, use tools, learn from feedback, and team up.

Agentic reasoning treats the LLM like an active worker that keeps looping: it plans, searches, calls tools like web search or a calculator, then checks results. The authors organize this space into 3 layers: foundational skills for a single agent; self-evolving skills that use memory (meaning saved notes) plus feedback; and collective skills where many agents coordinate.

They also separate changes done at run time, like step by step prompting and choosing which tool to call, from post training changes, where the model is trained again using examples or rewards. Instead of new experiments, the paper maps existing agent systems, applications, and benchmarks onto this roadmap, making it easier to design agents for web research, coding, science, robotics, or healthcare. The main takeaway is that better real world reasoning comes from a repeatable plan, act, check loop plus memory and feedback, not only bigger models.
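A plan, act, check loop with memory can be shown in miniature. The sketch below is a toy made up for this newsletter, not something from the survey: the "planner" just picks the first tool that memory has not marked as failed, and `TOOLS`, `plan`, and `run_agent` are hypothetical names.

```python
import operator

# The agent's toolbox (a stand-in for web search, calculators, and so on).
TOOLS = {"add": operator.add, "subtract": operator.sub, "multiply": operator.mul}

def plan(memory):
    """Plan: choose the first tool that saved notes do not mark as a failure."""
    failed = {note["tool"] for note in memory}
    for name in TOOLS:
        if name not in failed:
            return name
    return None

def run_agent(a, b, target):
    """Loop plan -> act -> check, saving feedback to memory after each miss."""
    memory = []
    while True:
        tool = plan(memory)
        if tool is None:
            return None, memory          # out of ideas
        result = TOOLS[tool](a, b)       # act: call the chosen tool
        if result == target:             # check: did it meet the goal?
            return tool, memory
        memory.append({"tool": tool, "result": result,
                       "feedback": "got %s, wanted %s" % (result, target)})

tool, notes = run_agent(6, 7, 42)
print(tool)    # multiply
for note in notes:
    print(note["tool"], "->", note["feedback"])
```

All of the improvement here happens at run time: the model (the planner) never changes, only its notes do. Post-training approaches would instead change the planner itself.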

🧑🎓 Fei-Fei Li’s World Labs reportedly raising funding at $5B valuation, per Bloomberg.

Some reports say it will raise up to $500M.

If it happens, the round would reprice World Labs from about $1B in 2024, when it raised $230M coming out of stealth. The bet is that “world models” can generate editable 3D environments that other software can build on, not just flat images or text.

Earlier 3D pipelines usually start from hand-built polygon meshes, where scenes are made from lots of tiny triangles and then rendered. World Labs’ Marble uses 3D Gaussian splatting (3DGS), which represents a scene as millions of semi-transparent points that can render with higher visual detail.
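How do millions of semi-transparent points turn into a pixel color? The standard answer is front-to-back alpha compositing, sketched below for a single pixel. This is a generic illustration, not Marble's renderer: in real 3DGS each splat's alpha at a pixel comes from evaluating its projected Gaussian, while here the alphas are simply given. `composite` and the sample splats are made up.

```python
def composite(splats):
    """Blend the splats covering one pixel, nearest first.

    Each splat is (depth, (r, g, b), alpha). Returns the blended color and the
    leftover transmittance (how much background would still show through).
    """
    color = [0.0, 0.0, 0.0]
    transmittance = 1.0  # fraction of light not yet blocked
    for _, rgb, alpha in sorted(splats, key=lambda s: s[0]):
        weight = alpha * transmittance
        color = [c + weight * channel for c, channel in zip(color, rgb)]
        transmittance *= 1.0 - alpha
    return tuple(color), transmittance

splats = [
    (2.0, (0.0, 0.0, 1.0), 1.0),  # far, opaque blue
    (1.0, (1.0, 0.0, 0.0), 0.5),  # near, half-transparent red
]
print(composite(splats))  # ((0.5, 0.0, 0.5), 0.0)
```

The near red splat contributes half its color and lets half the light through, so the opaque blue one behind it fills in the rest.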

It also outputs “collider meshes,” which are lower-detail shapes that trade looks for speed in physics and robotics simulation. Marble’s Chisel tool lets users block out objects from simple shapes and then generate styled variants, which is a step toward controllable world building. World Labs also just opened a World API so developers can generate explorable 3D worlds from text, images, and video inside apps.

That’s a wrap for today, see you all tomorrow.

The Kona energy-based reasoning approach is fascinating. Reframing inference as optimization over constraints rather than sequential token prediction could be a game changer for anything requiring verifiable logic. The D4RT benchmarks are wild too, 120x speedup on the same task makes realtime AR actually feasible now. Curious if the energy minimization approach will start showing up in other labs' architectures soon.

Is there any use case for Microsoft Co-Pilot? It has been worthless for me.