OpenAI officially released its Sora video generation model with surprising video editing features

OpenAI's Sora video AI, Gemini tops Chatbot Arena, FineWeb2 dataset launch, Google's quantum breakthrough, and Sam Altman's AI vision.

In today’s Edition (9-Dec-2024):

🎥 OpenAI launched Sora, its revolutionary AI video generator, as part of its 'shipmas' series

🏆 Google’s Gemini reclaims the No. 1 spot on LMSYS

🤗 "FineWeb2", an 8TB open-source dataset of compressed text, drops on Hugging Face

🗞️ Byte-Size Brief:

Google unveils Willow: 105-qubit quantum chip finishes in minutes a benchmark that would take supercomputers 10 septillion years

X removes Aurora AI art generator amid content policy concerns

Reddit analysis: o1 Pro vs. Claude, a performance/price comparison

Runway's Act-One now maps smartphone-recorded performances onto characters in existing videos

🧑🎓 Deep Dive Tutorial:

Use Hugging Face models with Amazon Bedrock

🧠 Top Lecture Roundup

Sam Altman on the Future of A.I. and Society

🎥 OpenAI launched Sora, its revolutionary AI video generator, as part of its 'shipmas' series

🎯 The Brief

OpenAI launches Sora, a revolutionary video generation model integrated with ChatGPT Plus and Pro subscriptions, with advanced capabilities in text-to-video generation, image animation, and world physics simulation. It is not available in the EU or the UK at launch because of regional restrictions. Users can create high-definition videos up to 20 seconds long from nothing more than a text prompt.

⚙️ The Details

→ Sora Turbo, a significantly faster version of the model, offers text-to-video generation, image animation, and video-to-video features such as style remixing and temporal extension. It is available at sora.com and is included with existing ChatGPT Plus and Pro subscriptions.

→ Product features include an Explore feed for community sharing, Storyboard for sequential video direction, Remix for video modifications, Loop for creating seamless repetitions, and Blend for combining multiple scenes.

→ Usage limits are set at 50 generations per month for Plus users, while Pro users get 500 fast-queue generations plus unlimited slow-queue generations. Higher-resolution options are available at the cost of fewer generations.

→ OpenAI says it is starting with conservative moderation that balances safety against creative expression, and plans to iterate based on user feedback.

→ The new version of Sora adds some unexpected editing capabilities, including the ability to use images as prompts and a timeline editor that lets users add new prompts at specific moments in a video.

→ The Sora team also said the tool was "not about generating feature-length movies" but more of a way to try out new ideas.

⚡ The Impact

Advances OpenAI's AGI goal through a better understanding of the physical world, while giving creators unprecedented tools for video generation.

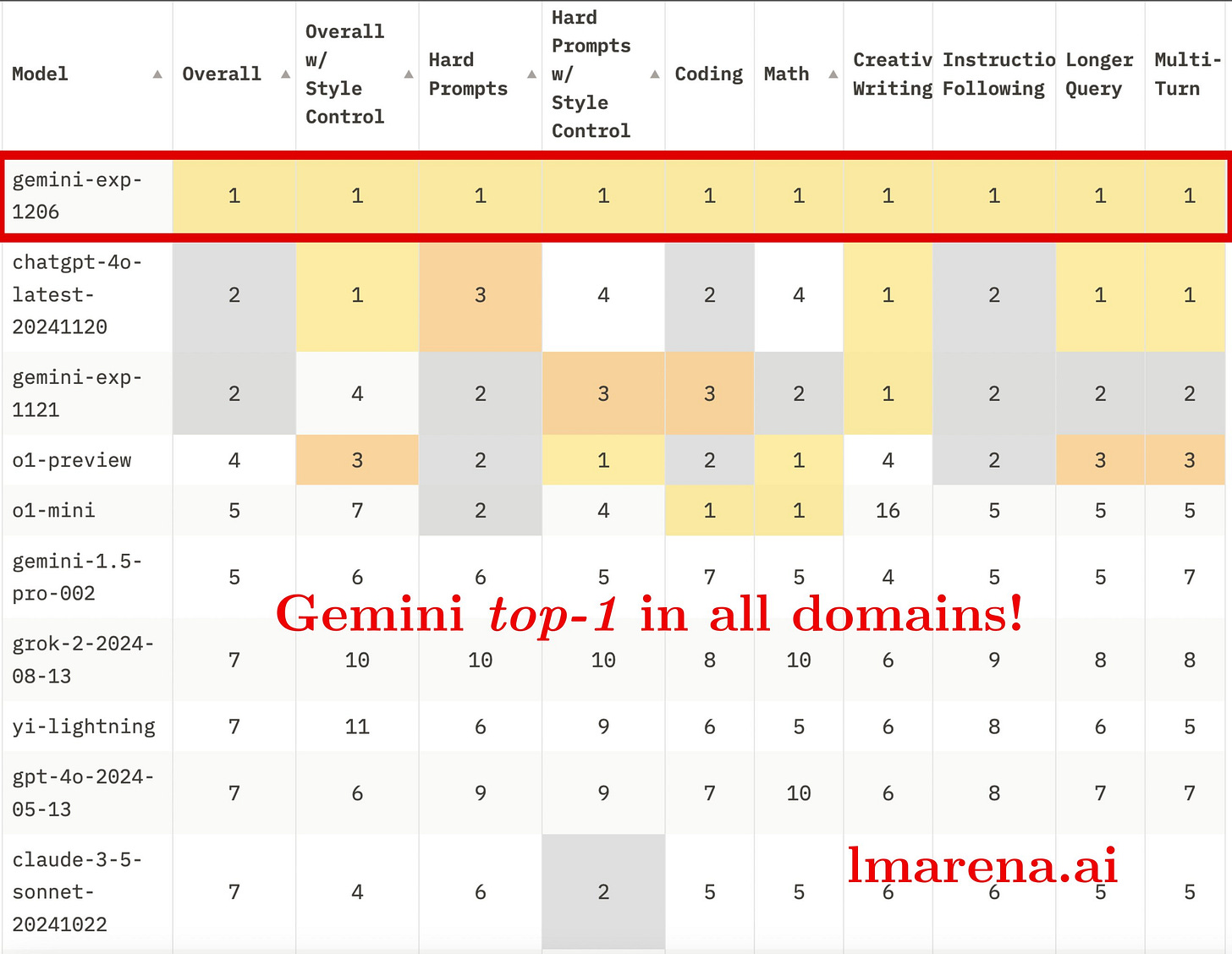

🏆 Google’s Gemini reclaims the No. 1 spot on LMSYS

🎯 The Brief

Google DeepMind's Gemini-exp-1206 claims the #1 position on the Chatbot Arena leaderboard, outperforming competitors across multiple categories while remaining free to use.

⚙️ The Details

→ The model achieved top rankings in key categories, including hard prompts, coding, math, and instruction following. Its 2M-token context window stands out: it is large enough to process hour-long videos, a capability not available in ChatGPT or Claude.

→ Accessibility remains a key differentiator. While OpenAI has introduced a new $200-per-month Pro tier, Gemini-exp-1206 remains free to use through Google AI Studio and the Gemini API. This combination of advanced capabilities and zero cost sets it apart in the market.
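For a rough sense of why a 2M-token window fits an hour of video, here is a back-of-the-envelope sketch. It assumes roughly 300 tokens per second of video (frames sampled at 1 frame per second, plus audio and metadata), an approximate figure from Google's Gemini API documentation; treat that rate, and rounding "2M" to 2,000,000, as assumptions that may not match every model version.

```python
# Back-of-the-envelope: does an hour of video fit in a 2M-token context window?
# ASSUMPTION: ~300 tokens per second of video (1 fps frames + audio + metadata),
# an approximate figure from Google's Gemini API docs; real counts vary by model.
TOKENS_PER_VIDEO_SECOND = 300
CONTEXT_WINDOW = 2_000_000  # "2M tokens", rounded


def video_tokens(seconds: float) -> int:
    """Approximate number of tokens a video of the given length consumes."""
    return round(seconds * TOKENS_PER_VIDEO_SECOND)


def max_video_hours(context_tokens: int = CONTEXT_WINDOW) -> float:
    """Longest video (in hours) that fits, ignoring tokens used by the text prompt."""
    return context_tokens / TOKENS_PER_VIDEO_SECOND / 3600


one_hour = video_tokens(3600)
print(f"1 hour of video ~ {one_hour:,} tokens; fits: {one_hour <= CONTEXT_WINDOW}")
print(f"max video length ~ {max_video_hours():.2f} hours")
```

Under the same assumption, a 1M-token window tops out at roughly 0.93 hours, which is why the larger window matters for hour-long footage.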

⚡ The Impact

Free access to top-performing AI model strengthens competition, potentially accelerating industry-wide improvements in model capabilities.

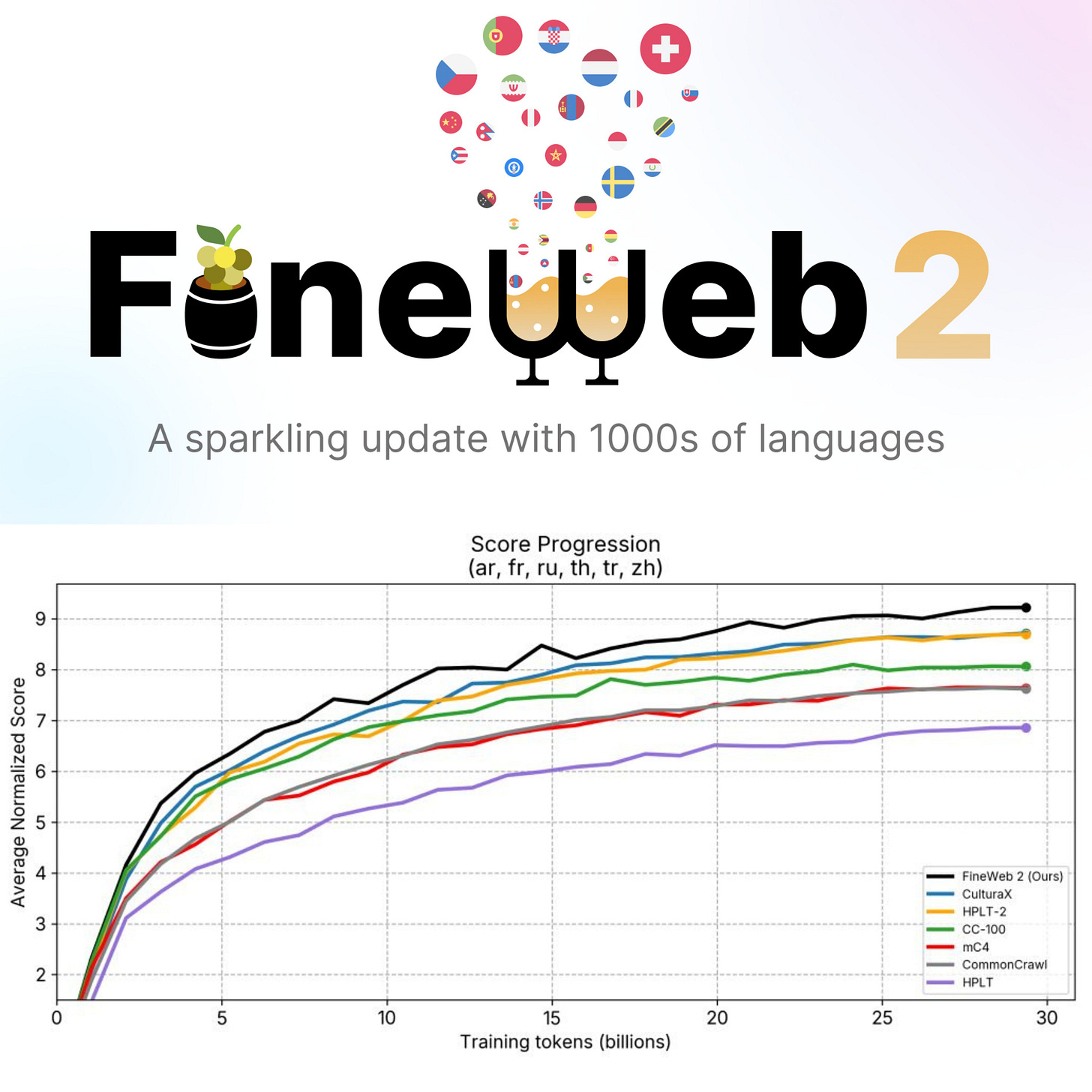

🤗 "FineWeb2", an 8TB open-source dataset of compressed text, drops on Hugging Face

🎯 The Brief

Hugging Face's FineWeb team releases FineWeb2, a massive 8TB (compressed) multilingual dataset spanning 1,893 language-script pairs, built as a clean pretraining corpus for LLM development. According to the team, it tops all other publicly available multilingual pretraining datasets.

⚙️ The Details

→ The dataset is built from 96 CommonCrawl snapshots, yielding 4.26 billion documents and nearly 3 trillion words. It uses GlotLID for language identification, which supports over 2,000 language labels.

→ The processing pipeline deduplicates globally within each language and applies filtering thresholds customized for each language. 486 languages have more than 1MB of text, while 80 languages exceed 1GB of filtered content.

→ Released under the Open Data Commons Attribution License (ODC-By) v1.0, FineWeb2 outperforms existing multilingual datasets such as mC4, CC-100, HPLT, and CulturaX on the team's evaluation tasks. The team measured performance by training 1.45B-parameter models on 30 billion tokens.

→ The dataset includes PII protection: email addresses and public IP addresses are anonymized, and opt-out mechanisms are available for webmasters and individuals.
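To make the per-language deduplication, per-language filtering, and PII anonymization steps concrete, here is a toy Python sketch. It is not FineWeb2's actual pipeline: the word-count thresholds, the placeholder strings, and whitespace tokenization are hypothetical choices for illustration, and a production pipeline would more likely use fuzzy (for example MinHash) deduplication than the exact hashing shown here.

```python
import hashlib
import ipaddress
import re
from collections import defaultdict

# HYPOTHETICAL settings for illustration; FineWeb2 tunes its own thresholds per language.
MIN_WORDS = {"eng_Latn": 5, "fra_Latn": 5}
DEFAULT_MIN_WORDS = 3
EMAIL_PLACEHOLDER = "email@example.com"  # hypothetical replacement string
IP_PLACEHOLDER = "0.0.0.0"                # hypothetical replacement string

EMAIL_RE = re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b")
IPV4_RE = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")


def _mask_ip(match: re.Match) -> str:
    """Replace public (globally routable) IPv4 addresses; keep private ones."""
    try:
        ip = ipaddress.ip_address(match.group(0))
    except ValueError:  # e.g. "999.1.2.3" is not a valid address
        return match.group(0)
    return IP_PLACEHOLDER if ip.is_global else match.group(0)


def anonymize(text: str) -> str:
    """Mask email addresses, then public IPv4 addresses."""
    return IPV4_RE.sub(_mask_ip, EMAIL_RE.sub(EMAIL_PLACEHOLDER, text))


def clean(docs):
    """docs: iterable of (language_label, text). Returns {language_label: [kept texts]}."""
    seen = defaultdict(set)   # one hash set per language: dedup never crosses languages
    kept = defaultdict(list)
    for lang, text in docs:
        text = anonymize(text)
        words = text.split()  # whitespace tokenization: a simplification that fails for e.g. Chinese
        if len(words) < MIN_WORDS.get(lang, DEFAULT_MIN_WORDS):
            continue  # per-language length filter
        key = hashlib.sha256(" ".join(words).lower().encode("utf-8")).hexdigest()
        if key in seen[lang]:
            continue  # exact duplicate (after whitespace/case normalization) within this language
        seen[lang].add(key)
        kept[lang].append(text)
    return dict(kept)


docs = [
    ("eng_Latn", "Contact alice@example.org or 8.8.8.8 for the full report"),
    ("eng_Latn", "contact  bob@example.net or 8.8.4.4 for the FULL report"),
    ("fra_Latn", "Le rapport complet est disponible sur 192.168.1.10 demain"),
    ("eng_Latn", "Too short"),
]
print(clean(docs))
```

Because masking runs before hashing in this sketch, the two English documents that differ only in their email and IP collapse into one; running deduplication first would keep both.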

⚡ The Impact

Opens doors for truly open-source LLM development, with unprecedented language coverage and a reproducible processing pipeline.

🗞️ Byte-Size Brief

Google has unveiled Willow, a new quantum computing chip that marks a significant advance in the field. The 105-qubit chip achieves a breakthrough in quantum error correction: errors drop exponentially as the error-corrected qubit grids grow larger. Willow completed a standard benchmark computation (random circuit sampling) in under five minutes that Google estimates would take one of today's fastest classical supercomputers 10 septillion (10^25) years. The work, detailed in a Nature paper, is considered a step toward practical quantum computing applications in areas such as drug discovery, fusion energy, and battery design.
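The idea of errors shrinking exponentially as the chip scales can be made concrete with a little arithmetic. Google reported that the logical error rate roughly halved each time the error-corrected grid grew from 3x3 to 5x5 to 7x7. The sketch below assumes exactly that factor of 2 per step, a hypothetical starting error rate, and the textbook qubit count for a rotated surface code of distance d (d² data qubits plus d² − 1 measurement qubits); Willow's real layout may differ slightly, and values beyond d = 7 are pure extrapolation.

```python
# Toy model of surface-code error suppression, ASSUMING each step in code
# distance (3 -> 5 -> 7 -> ...) halves the logical error rate.
SUPPRESSION_FACTOR = 2.0
EPS_D3 = 3e-3  # HYPOTHETICAL logical error per cycle at distance 3


def surface_code_qubits(d: int) -> int:
    """Physical qubits in a textbook rotated surface code of odd distance d."""
    return d * d + (d * d - 1)  # data qubits + measurement qubits


def logical_error_rate(d: int, eps_d3: float = EPS_D3) -> float:
    """Logical error per cycle: eps_d3 / factor ** ((d - 3) / 2)."""
    return eps_d3 / SUPPRESSION_FACTOR ** ((d - 3) // 2)


for d in (3, 5, 7, 9, 11):
    print(f"d={d:2d}  qubits={surface_code_qubits(d):3d}  error/cycle={logical_error_rate(d):.1e}")
```

Since the qubit count grows quadratically with d while the error shrinks by a constant factor per step, the error falls exponentially in the code distance, provided that factor stays above 1 (operating "below threshold", in Google's phrasing).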

X's new AI art generator 'Aurora' appeared in Grok and disappeared within days. The photorealistic image generator earned positive feedback during its limited testing phase with select users. Its removal appears to have been due to its ability to generate graphic content.

A viral Reddit post compares o1 Pro ($200/month) with Claude 3.5 Sonnet ($20/month). According to the post, o1 Pro excels at PhD-level problems and image analysis, while Claude responds faster, generates better code, and handles 90% of real-world applications with comparable accuracy.

Runway's video generation tool Act-One can now transfer your performance directly onto characters in existing videos. It maps facial movements from a reference video onto pre-recorded characters: record yourself on a phone, and Act-One makes the on-screen character copy your exact expressions. It handles both facial and vocal performances, which could eliminate costly reshoots and complex lighting setups in professional video production pipelines.

🧑🎓 Deep Dive Tutorial

Use Hugging Face models with Amazon Bedrock

Discover how to integrate 83 open-source Hugging Face models with Amazon Bedrock's infrastructure. The integration brings together SageMaker JumpStart's deployment capabilities with Bedrock's managed services, creating a streamlined pipeline for AI implementation.

The core implementation deploys models either through the Bedrock Model Catalog or by registering a model deployed with Amazon SageMaker JumpStart. Using ml.g5.48xlarge instances, you'll learn to configure endpoint ARNs and set essential inference parameters such as maxTokens and temperature with the AWS SDK for Python (boto3).

The practical section builds an inference pipeline on Bedrock's Converse API. You'll learn to configure model parameters, handle API responses, and manage deployment costs (the models run on SageMaker compute), while still using Bedrock's high-level APIs for Agents, Knowledge Bases, and Guardrails.

The tutorial's example uses the Bedrock Converse API through the AWS SDK for Python (boto3): the code connects to an AI model deployed on Amazon Bedrock and asks it a question.
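A minimal sketch of that pattern, assuming you have already deployed a model and have its endpoint ARN. The environment variable name `BEDROCK_ENDPOINT_ARN`, the default region, and the example question are placeholders, not values from the tutorial. The `converse` call and its `inferenceConfig` keys (`maxTokens`, `temperature`, `topP`) belong to boto3's `bedrock-runtime` client; passing the client in as an argument keeps the request-building logic testable without AWS credentials.

```python
import os


def build_converse_request(endpoint_arn: str, question: str, max_tokens: int = 512,
                           temperature: float = 0.1, top_p: float = 0.9) -> dict:
    """Keyword arguments for bedrock-runtime's converse(). In the tutorial's setup,
    modelId is the deployed endpoint's ARN rather than a foundation-model ID."""
    return {
        "modelId": endpoint_arn,
        "messages": [{"role": "user", "content": [{"text": question}]}],
        "inferenceConfig": {"maxTokens": max_tokens, "temperature": temperature, "topP": top_p},
    }


def ask(client, endpoint_arn: str, question: str, **params) -> str:
    """Send one user turn through the Converse API and return the reply text."""
    response = client.converse(**build_converse_request(endpoint_arn, question, **params))
    return response["output"]["message"]["content"][0]["text"]


if __name__ == "__main__":
    endpoint_arn = os.environ.get("BEDROCK_ENDPOINT_ARN")  # placeholder variable name
    if endpoint_arn:
        import boto3  # AWS SDK for Python; needs valid AWS credentials

        runtime = boto3.client("bedrock-runtime", region_name=os.environ.get("AWS_REGION", "us-east-1"))
        print(ask(runtime, endpoint_arn, "What is Amazon Bedrock?", max_tokens=256))
    else:
        print("Set BEDROCK_ENDPOINT_ARN to the ARN of your deployed endpoint to run this example.")
```

Keeping `build_converse_request` separate also makes it easy to log or reuse the exact parameters you send, which helps when comparing temperature or token settings across runs.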

🧠 Top Lecture Roundup

Sam Altman on the Future of A.I. and Society

Sam Altman's recent interview reveals key insights into AI system scaling and the future of language models. He identifies three fundamental components that drive AI progress: compute infrastructure, data resources, and algorithmic innovations.

While compute often gets attention due to its visible infrastructure and cost requirements, Altman emphasizes that algorithmic breakthroughs can deliver 10-100x performance improvements, far beyond what hardware scaling alone achieves.
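One rough way to put "10-100x" in perspective is to ask how many years of hardware improvement alone would buy the same gain. The sketch assumes a hypothetical hardware doubling time of two years; that rate is an illustrative assumption, not a figure from the interview.

```python
import math

# HYPOTHETICAL: hardware alone doubles effective compute every 2 years.
DOUBLING_TIME_YEARS = 2.0


def years_of_hardware_scaling(gain: float, doubling_time_years: float = DOUBLING_TIME_YEARS) -> float:
    """Years of hardware-only progress needed to match a given performance multiplier."""
    return math.log2(gain) * doubling_time_years


for gain in (10, 100):
    print(f"{gain:>3}x from algorithms ~ {years_of_hardware_scaling(gain):.1f} years of hardware doubling")
```

In this simple model, algorithmic and hardware gains multiply: a 10x algorithmic gain on top of 4x more hardware behaves like 40x effective compute.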

In this discussion, you'll learn:

How compute, data, and algorithms trade off against each other in LLM development

Why algorithmic innovations can outpace pure computational scaling

The role of synthetic data generation in model training

Approaches to efficient resource utilization in AI systems

Methods for balancing model capabilities with computational constraints

Strategies for scaling AI systems while maintaining safety guardrails