OpenAI released the full version of o1, with 34% fewer errors and 50% faster responses - the PhD in your pocket

OpenAI's full-version o1 launch, Microsoft's screen-reading Copilot Vision, Google's PaliGemma 2 VLM, plus major infrastructure expansions by xAI and Meta.

In today’s Edition (5-Dec-2024):

OpenAI finally launches the full version of o1, featuring 34% fewer errors and 50% faster responses. It also announced a new ChatGPT Pro tier at $200/month with unlimited model access.

Microsoft announced Copilot Vision, an AI tool that can read your screen.

Google released PaliGemma 2, its latest vision-language model family, which comes in sizes from 3B to 28B parameters.

Byte-Size Brief:

xAI expanding Memphis supercomputer to 1M GPUs from 200K.

Two-hour interviews enable 85%-accurate AI replication of human decision-making.

Meta building $10B Louisiana datacenter with 100% renewable energy.

RAG systems gain ability to assess context sufficiency at 93% accuracy.

OpenAI finally launches the full version of o1, with 34% fewer errors and 50% faster responses, plus a new ChatGPT Pro tier at $200/month with unlimited model access

The Brief

OpenAI launches the full version of the o1 model with multi-modal capability, 34% fewer errors, and 50% faster responses. The o1 model is available in the ChatGPT Plus tier ($20/month) starting today.

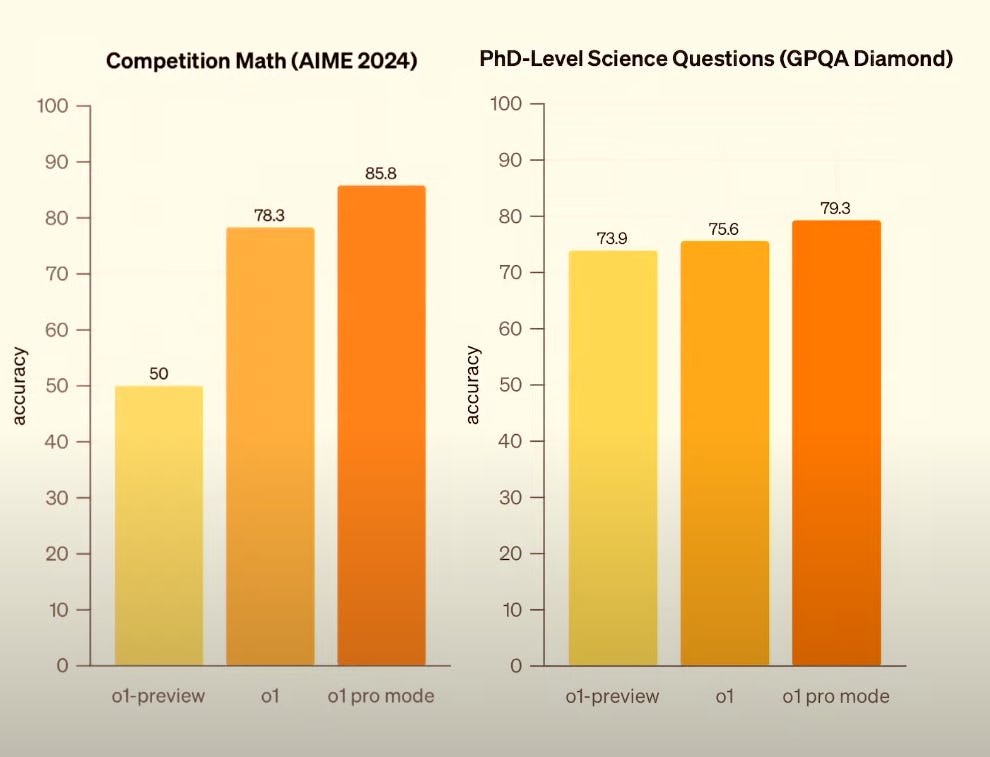

OpenAI also announced a new ChatGPT Pro tier with enhanced capabilities at $200/month, with unlimited model access. The main benefit is that the model can think even harder on the hardest problems. However, Sam Altman stated that most users will be very happy with the o1 model available in the Plus tier, and that the Pro tier is mainly helpful for very specialized and deep technical areas.

The Details

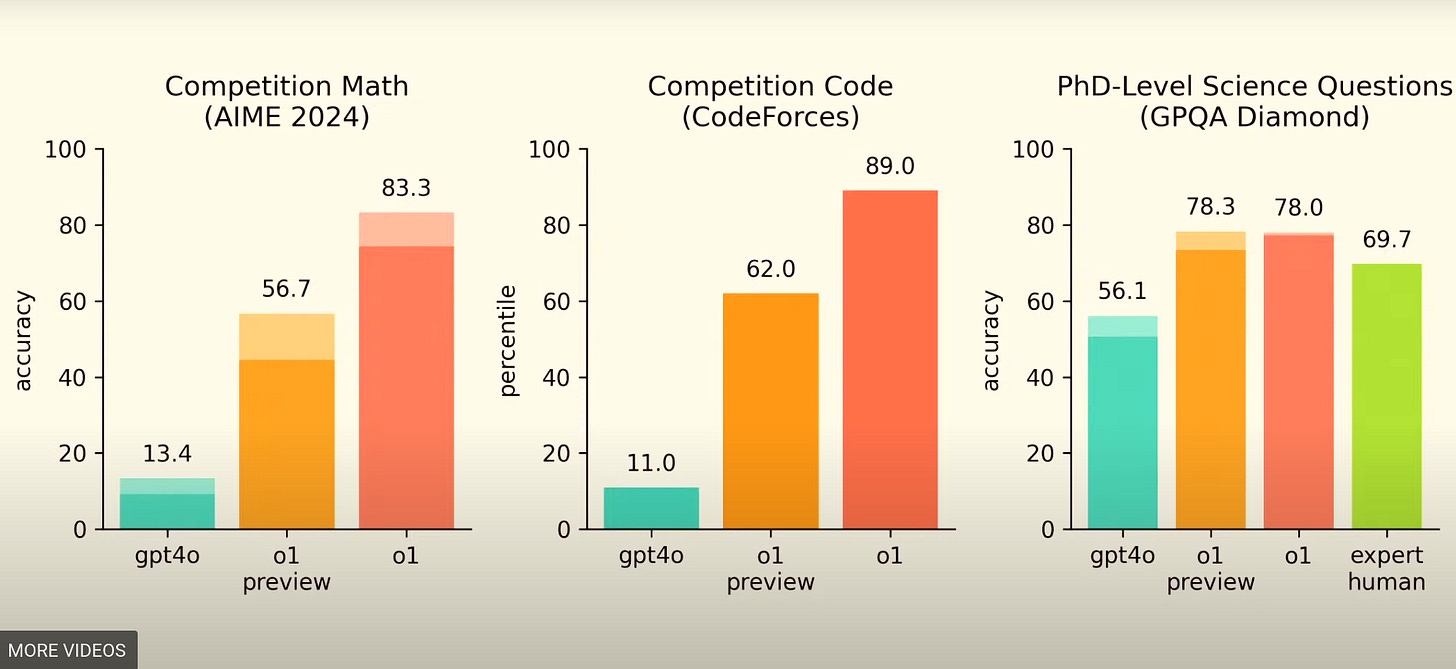

→ The o1 model replaces o1-preview, with improved intelligence, speed, and multi-modal capabilities. Performance improves substantially across math, competition coding, and GPQA Diamond benchmarks.

→ The o1 model can now also analyze images, a hugely helpful upgrade that lets users upload photos and have ChatGPT respond to them.

→ The new ChatGPT Pro tier, which offers unlimited model access, can handle even harder problems because OpenAI allocates more inference-time compute to it. Pro mode also demonstrates enhanced reliability and superior performance on complex tasks, particularly benefiting power users in technical domains.

→ During the announcement, the multi-modal capabilities were demonstrated with an image upload of a space datacenter cooling problem, showing sophisticated reasoning across text and images. The model also handled complex chemistry problems, taking 53 seconds on a protein identification task.

→ Future rollout includes API access, structured outputs, function calling, developer messages, web browsing, and file upload capabilities, though there’s no timeline for these changes.

→ OpenAI also announced a ChatGPT Pro Grant Program that awards 10 grants of ChatGPT Pro to medical researchers at leading institutions, with plans for additional grants across various disciplines.

The Impact

The launch of o1 and ChatGPT Pro comes amid intensifying competition in the AI industry. Chinese rivals, including Alibaba and DeepSeek, have released reasoning models such as Marco-o1 and R1-Lite-Preview. They are encroaching fast, challenging OpenAI’s dominance with open-source solutions and eclipsing o1-preview on certain third-party benchmarks. However, going by the numbers released today, the o1 model delivers raw intellectual power and sits quite a bit ahead of the competition.

Microsoft announced Copilot Vision, an AI tool that can read your screen.

The Brief

Microsoft launches Copilot Vision, an AI-powered browser assistant available only on Microsoft Edge that analyzes webpage content in real time. It is offered to $20/month Copilot Pro subscribers through Copilot Labs.

The Details

→ This first-of-its-kind browser AI integrates directly into Edge's interface, providing real-time analysis of web content including text and images. The system processes webpage elements to assist with tasks ranging from product comparisons to game strategies.

→ The release follows a controlled deployment strategy with initial availability limited to US Pro subscribers. Access is restricted to pre-approved websites, excluding paywalled and sensitive content. The system implements strict privacy controls - session data gets deleted after use, with only safety-related responses logged.

→ Technical constraints: Vision complies with websites' machine-readable controls on AI, and no data is stored for model training. Microsoft is actively collaborating with publishers to refine how Vision interacts with webpages.

The Impact

Copilot Vision turns passive web browsing into interactive, AI-assisted exploration while maintaining publisher rights and user privacy.

Google released PaliGemma 2, its latest vision-language model family, which comes in sizes from 3B to 28B parameters

The Brief

Google releases the PaliGemma 2 vision-language models, which pair the SigLIP vision encoder with the Gemma 2 text decoder (an upgrade from the original Gemma decoder), offering 3 model sizes and 3 resolution options for greater flexibility and performance.

The Details

→ The model comes in 3B, 10B, and 28B parameter variants, supporting input resolutions of 224x224, 448x448, and 896x896. Pre-training leverages diverse datasets including WebLI, CC3M-35L, VQ2A, OpenImages, and WIT.

→ Performance testing shows minimal degradation with quantization: bfloat16 achieves 60.04% accuracy, 8-bit reaches 59.78%, and 4-bit maintains 58.72% on TextVQA validation set.

→ On the DOCCI benchmark, the PaliGemma 2 10B variant achieves an NES score of 20.3 (non-entailment sentences, where lower is better), ahead of competitors such as LLaVA-1.5 (40.6) and MiniGPT-4 (52.3). The model supports LoRA, QLoRA, and model freezing for efficient fine-tuning.

→ All models are supported in transformers (install the main branch) and work out of the box with your existing fine-tuning scripts and inference code.
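To put the quantization numbers above in perspective, here is a minimal sketch that converts the reported TextVQA accuracies into absolute and relative drops against the bfloat16 baseline. The accuracy values come from this newsletter; the `degradation` helper and the dictionary layout are illustrative names, not part of any PaliGemma tooling.

```python
# Accuracy figures as reported above for the TextVQA validation set.
reported = {"bfloat16": 60.04, "8-bit": 59.78, "4-bit": 58.72}


def degradation(baseline: float, quantized: float) -> tuple[float, float]:
    """Return (absolute drop in points, relative drop in percent of baseline)."""
    absolute = baseline - quantized
    relative = 100.0 * absolute / baseline
    return absolute, relative


baseline = reported["bfloat16"]
for name, accuracy in reported.items():
    abs_drop, rel_drop = degradation(baseline, accuracy)
    # Print one line per precision: accuracy, point drop, relative drop.
    print(f"{name:>8}: {accuracy:.2f}%  drop {abs_drop:.2f} pts ({rel_drop:.2f}% relative)")
```

By this arithmetic, 8-bit costs about a quarter of a point, and 4-bit costs roughly 1.3 points, or a little over 2% of the baseline accuracy.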

The Impact

The range of model sizes and input resolutions lets developers trade visual understanding against computational cost across diverse applications.

Byte-Size Brief

xAI plans to scale its Memphis supercomputer from 200,000 to 1 million GPUs for advanced AI development. The facility assembled its initial 200,000 Nvidia H100/H200 GPUs in just four months and aims for 300,000 by summer 2025.

A new paper shows that a two-hour interview is enough for AI to clone your decision-making style. The system processes interview transcripts through LLMs to generate agents that match individual responses on personality tests, economic games, and social surveys with 85% accuracy.

Meta announced it is building a $10B AI data center in Louisiana, its biggest data center yet. The facility spans 4 million square feet, promises 500 direct jobs, and commits to 100% renewable energy. Meta pledges $200M for local infrastructure improvements through 2030.

A new paper gives us a way to detect whether RAG systems have enough context to answer questions accurately. In other words, AI learns to admit when it doesn't have enough facts to answer your question. The research introduces a context sufficiency classifier that achieves 93% accuracy with Gemini 1.5 Pro, along with selective generation strategies that boost response accuracy by 2-10% across major LLMs.
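The idea of selective generation can be sketched in a few lines. This is only an illustration under stated assumptions, not the paper's method: the `Signals` fields, the `should_answer` rule, and both thresholds are hypothetical. It assumes a sufficiency classifier that returns a yes/no label and a model confidence score between 0 and 1, and it abstains when the two signals together look too weak.

```python
from dataclasses import dataclass


@dataclass
class Signals:
    context_sufficient: bool  # hypothetical output of a context sufficiency classifier
    model_confidence: float   # hypothetical confidence score in [0, 1]


def should_answer(s: Signals, normal: float = 0.8, strict: float = 0.95) -> bool:
    """Decide whether to answer or abstain.

    With sufficient context, a moderate confidence is enough.
    With insufficient context, demand a much higher confidence.
    Both thresholds are illustrative and would need tuning.
    """
    threshold = normal if s.context_sufficient else strict
    return s.model_confidence >= threshold


def respond(draft_answer: str, s: Signals) -> str:
    """Return the draft answer, or an abstention message if the signals are weak."""
    if should_answer(s):
        return draft_answer
    return "I don't have enough information to answer that."


# Usage: same confidence, different context labels, different outcomes.
print(respond("Paris", Signals(context_sufficient=True, model_confidence=0.85)))
print(respond("Paris", Signals(context_sufficient=False, model_confidence=0.85)))
```

The design choice here is to let the sufficiency label shift the confidence bar rather than veto the answer outright, so a model that is very sure of itself can still answer when the retrieved context is thin.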