🧩 OpenAI rolled out the new ChatGPT Images for more reliable edits and up to 4x faster image generation

OpenAI’s faster image edits, Gemini 3’s smarter reasoning, MIT’s robot grip breakthrough, Xiaomi’s MiMo-V2-Flash vs. DeepSeek, and ChatGPT’s new multi-threading on mobile.

Read time: 6 min


(I publish this newsletter daily. Noise-free, actionable, applied-AI developments only.)

⚡In today’s Edition (16-Dec-2025):

🧩 OpenAI rolled out the new ChatGPT Images for more reliable edits and up to 4x faster image generation

🧠 Google just dropped a new agentic benchmark: Gemini 3 Pro beat Pokémon Crystal (defeating Red) using 50% fewer tokens than Gemini 2.5 Pro.

🏆 MIT’s latest research just gave robots a great new skill: gripping delicate yet heavy objects without breaking them.

🇨🇳 China’s Xiaomi released MiMo-V2-Flash, an open-source LLM that goes head-to-head with DeepSeek

🛠️ ChatGPT on iOS and Android now lets people branch a single chat into multiple parallel threads, so they can explore different directions without losing the original line of thought.

🧩 OpenAI rolled out the new ChatGPT Images for more reliable edits and up to 4x faster image generation

OpenAI improved the accuracy and reliability of its image generation features in the latest ChatGPT Images update, as more companies and brands rely on AI visuals for design planning. The new version, called GPT Image 1.5, will be available to all ChatGPT users and through the API. It’s powered by GPT 5.2, which early users say delivers strong results for business applications.

The main change is that, when someone edits an uploaded photo, the model tries to change only the requested part while keeping things like lighting, framing, and a person’s face consistent across repeated edits.

Under the hood, this is basically better “instruction following,” meaning the model is less likely to randomly rewrite the whole scene when the user asked for a small, local fix.

The editing toolkit is broader now: it handles add, remove, combine, blend, and move-type changes with fewer side effects, which users experience as “it kept what I wanted and only touched what I asked.”
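One way to make “only touched what I asked” concrete is to measure how much of the image changed outside the region the user wanted edited. OpenAI hasn't said how it evaluates this, so here is a hypothetical metric: `leakage`, the toy grayscale grid, and the mask are illustrative assumptions, not part of any OpenAI API.

```python
from typing import List

Image = List[List[int]]  # toy grayscale image, pixel values 0-255
Mask = List[List[bool]]  # True where the user asked for a change


def leakage(before: Image, after: Image, mask: Mask, tol: int = 0) -> float:
    """Fraction of pixels OUTSIDE the edit mask that changed by more than `tol`."""
    outside = changed = 0
    for row_b, row_a, row_m in zip(before, after, mask):
        for b, a, m in zip(row_b, row_a, row_m):
            if not m:
                outside += 1
                if abs(a - b) > tol:
                    changed += 1
    return changed / outside if outside else 0.0


before = [[100] * 3 for _ in range(3)]
mask = [[False, False, False], [False, True, False], [False, False, False]]

after_local = [row[:] for row in before]
after_local[1][1] = 200  # only the requested (center) pixel changed

after_leaky = [row[:] for row in after_local]
after_leaky[0][0] = 110  # an unrequested change outside the mask

print(leakage(before, after_local, mask))  # 0.0
print(leakage(before, after_leaky, mask))  # 0.125
```

A lower score means the edit stayed local; in a real pipeline `tol` would absorb compression noise.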

The model is also better at “creative transformations,” where the user wants a new style or layout but still wants the original identity and key details to survive the change.

Text rendering got a step up too: the model can fit more small, dense text into an image without turning it into unreadable gibberish.

ChatGPT also added a dedicated Images space in the sidebar with preset styles and prompts, so users can iterate faster without writing long prompts each time.

On the API side, GPT Image 1.5 is positioned for brand and ecommerce work because it preserves logos and key visuals better across edits, and image inputs and outputs are 20% cheaper than GPT Image 1.

OpenAI also admits results are still imperfect, especially for tricky multi-face scenes, multilingual text, and some factual or scientific details.

In my opinion, a fair read is that this release is more about repeatable, controlled editing than raw “wow” images, which is what makes it useful for real production pipelines.

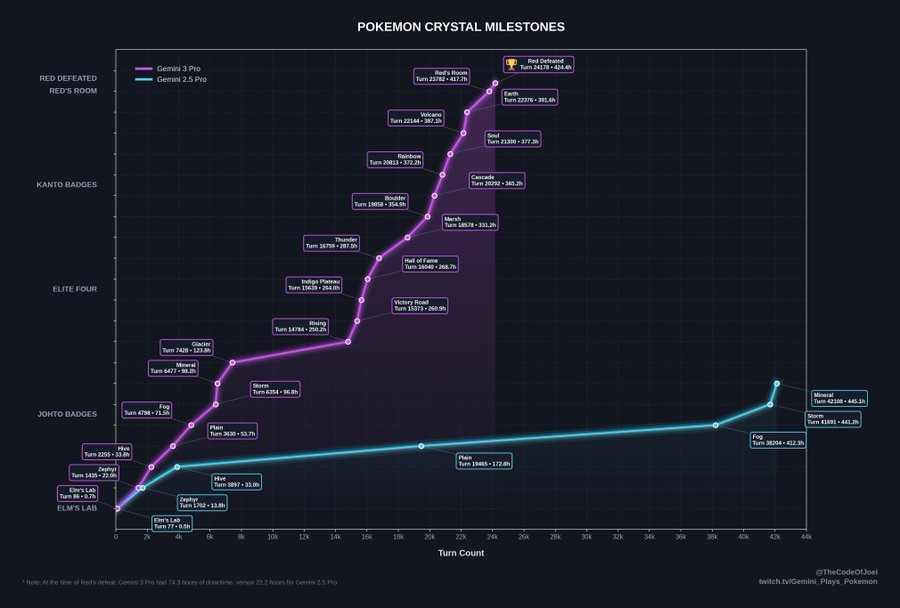

🧠 Google just dropped a new agentic benchmark: Gemini 3 Pro beat Pokémon Crystal (defeating Red) using 50% fewer tokens than Gemini 2.5 Pro.

They benchmarked Gemini 3 Pro against Gemini 2.5 Pro on a full run of Pokémon Crystal, which is significantly longer and harder than the standard Pokémon Red benchmark.

Gemini 3 Pro finished Pokémon Crystal and beat Red while Gemini 2.5 Pro stalled at 4 badges. Gemini 3 Pro also did it with ~50% fewer turns and far fewer tokens.

The big deal is that it behaved like a practical engineer, creating tools, trusting sensors, testing ideas, and turning that into wins. Both agents used the same harness and were told to think like scientists, form hypotheses, test them, and keep notes.

Gemini 3 Pro wrote a new input tool on the fly, which let it push complex button sequences faster and avoid slow menu work. It switched between memory data and the live screen, so it could read puzzle states and enemy health bars that were not exposed by the data feed.
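Google hasn't published the tool Gemini 3 Pro wrote, so here is a hypothetical sketch of what a button-sequence tool in such a harness could look like. `press` stands in for whatever single-button primitive the harness exposes, and every name here is made up for illustration. The idea is that one tool call replaces several separate model turns.

```python
from typing import Callable, List

# Hypothetical button set for a Game Boy Color emulator harness.
VALID_BUTTONS = {"UP", "DOWN", "LEFT", "RIGHT", "A", "B", "START", "SELECT"}


def make_press_sequence(press: Callable[[str], None]) -> Callable[[List[str]], int]:
    """Wrap a one-button `press` primitive into a tool that sends a whole sequence."""

    def press_sequence(buttons: List[str]) -> int:
        # Validate everything first so a typo never leaves the game stuck mid-menu.
        bad = [b for b in buttons if b not in VALID_BUTTONS]
        if bad:
            raise ValueError(f"unknown buttons: {bad}")
        for b in buttons:
            press(b)
        return len(buttons)

    return press_sequence


# Usage: record presses in a list instead of driving a real emulator.
sent: List[str] = []
press_sequence = make_press_sequence(sent.append)
count = press_sequence(["START", "DOWN", "DOWN", "A"])
print(count, sent)
```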

It did battle math in context, picked smarter moves like Swift over Flamethrower after a special defense boost, and used items between fights to keep momentum. It never lost a single major fight, so it avoided grinding and backtracking that waste time.
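Why Swift over Flamethrower? In Gen 2, a move's physical/special category is set by its type: Normal-type Swift (60 power) uses Attack vs. Defense, while Fire-type Flamethrower (95 power) uses Special Attack vs. Special Defense. The article doesn't say whose Special Defense was boosted; this sketch assumes a +2 boost on the opponent, uses made-up stats, and applies a simplified Gen 2 damage formula (no crits, held items, weather, random roll, or STAB), so treat the numbers as illustrative.

```python
def stage_multiplier(stage: int) -> float:
    """Stat-stage multiplier: +1 -> 1.5x, +2 -> 2x (negative stages simplified)."""
    return (2 + stage) / 2 if stage >= 0 else 2 / (2 - stage)


def damage(level: int, power: int, attack: int, defense: int,
           stab: float = 1.0, effectiveness: float = 1.0) -> int:
    """Simplified Gen 2 damage: ignores crits, held items, weather and the random roll."""
    base = (2 * level // 5 + 2) * power * attack // defense // 50 + 2
    return int(base * stab * effectiveness)


# Hypothetical matchup: level 50 attacker with Attack = Special Attack = 100,
# defender with Defense 100 and Special Defense 100 before a +2 boost.
LEVEL = 50
sp_def_boosted = int(100 * stage_multiplier(2))  # 200

swift = damage(LEVEL, 60, attack=100, defense=100)                     # physical in Gen 2
flamethrower = damage(LEVEL, 95, attack=100, defense=sp_def_boosted)   # special in Gen 2
flamethrower_unboosted = damage(LEVEL, 95, attack=100, defense=100)

print(swift, flamethrower, flamethrower_unboosted)  # 28 22 43
```

Before the boost Flamethrower is clearly stronger; after it, the weaker-looking Swift wins because it routes around the boosted stat.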

It also ran a long, planned strategy to beat Red at a level disadvantage, which shows flexible multi-step planning, not just reflexes. The payoff shows up in speed and cost: 17 days and 1.88B tokens for full completion, versus a projected 69 days and >15B tokens for Gemini 2.5 Pro to match. In short, this is a clean example of agentic reasoning that converts thinking into measurable progress.
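The gap is easier to see as ratios. This quick arithmetic uses only the figures quoted above; because the Gemini 2.5 Pro numbers are projections (and the token count is a lower bound), the ratios are estimates.

```python
# Figures quoted above: Gemini 3 Pro's actual run vs. the projection for Gemini 2.5 Pro.
GEMINI_3_DAYS, GEMINI_3_TOKENS_B = 17, 1.88
GEMINI_25_DAYS_PROJ, GEMINI_25_TOKENS_B_MIN = 69, 15.0  # ">15B", so a lower bound

time_ratio = GEMINI_25_DAYS_PROJ / GEMINI_3_DAYS
token_ratio = GEMINI_25_TOKENS_B_MIN / GEMINI_3_TOKENS_B  # at least this large

print(f"~{time_ratio:.1f}x faster, at least ~{token_ratio:.1f}x fewer tokens")
# ~4.1x faster, at least ~8.0x fewer tokens
```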

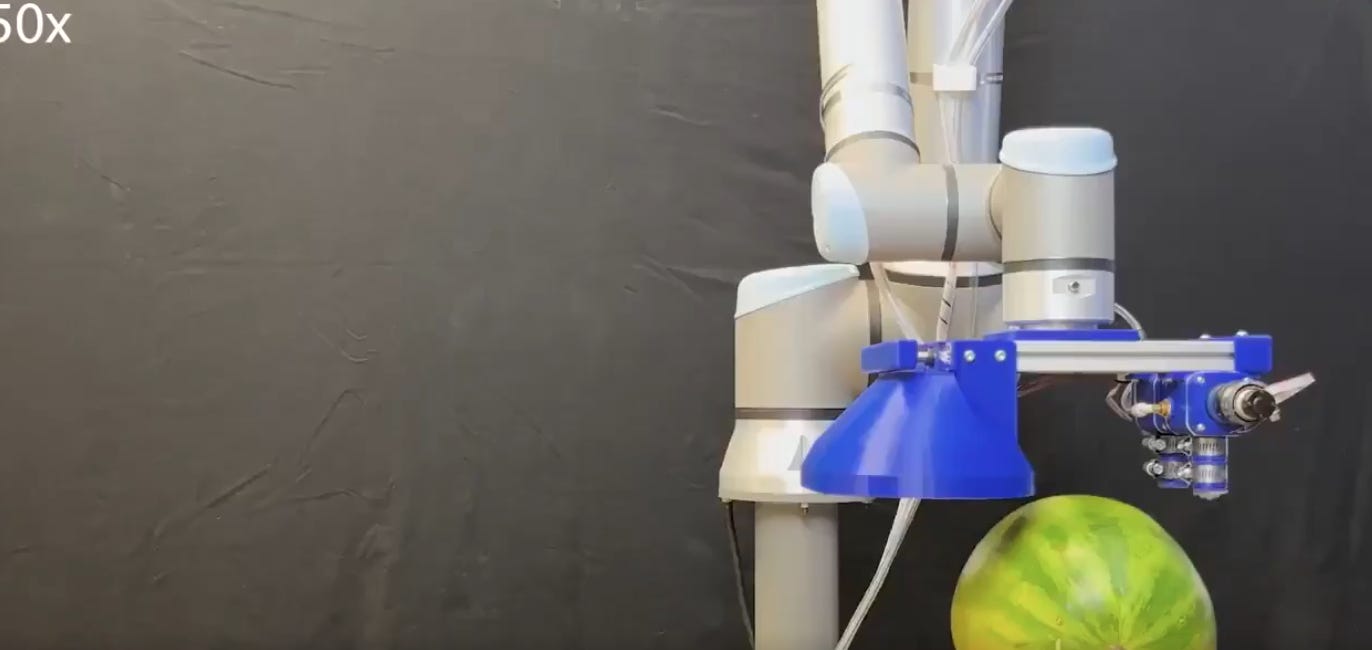

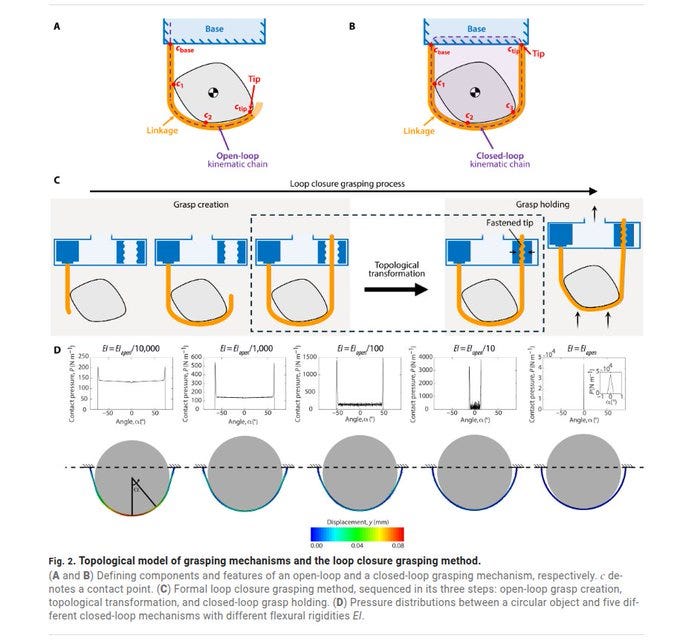

🏆 MIT’s latest research just gave robots a great new skill: gripping delicate yet heavy objects without breaking them.

A single gripper shape has to do 2 conflicting jobs: it must move freely to form a wrap, then resist large forces while staying gentle. Open-ended grippers can snake into place, but they usually need stiffness to hold, and that stiffness concentrates the load into a few high-pressure contact points.

Very soft grippers spread pressure well, but they can buckle or slide when the object is heavy, so they fail at the holding stage. This new idea from MIT, called loop closure grasping, completely avoids that tradeoff.

Loop closure grasping starts as an open loop, meaning the robot has a free tip that can snake around clutter and find a good wrap. Once the wrap is right, the tip locks back onto the base, turning the shape into a closed loop that surrounds the object.

Now the load is carried mainly by tension, like a sling, so the loop can stay very floppy in bending and still hold strongly without concentrated pressure points. The prototype uses inflatable “vine” beams that grow from the tip, then a clamp and winch to fasten, tighten, and finally deflate for soft holding. That combination lets it perform awkward grasps, like lifting a 6.8 kg kettlebell from a cluttered bin or pulling an object from 3 m away.

Here is the idea.

The robot first acts like a free rope so it can route its tip anywhere, then it clips its tip back to its base to become a closed loop that hugs and lifts like a soft sling, which makes the hold both strong and gentle.

What “open loop” means here.

The gripper starts as a long inflatable tube called a vine robot. Air pushes new material out at the tip, so the tip “grows” forward without dragging the body, letting it snake through clutter, under objects on a table, or even through a ring. Because the tip is free, it can find paths that a rigid claw cannot. The drawing on page 3 of the paper shows the open-loop state on the left.
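A back-of-envelope view of why tip growth works: the air pressure acts on the tip, so in the idealized case the pushing force is roughly pressure times the tube's cross-sectional area, however long the body has grown. This simplified relation comes from the broader vine-robot literature rather than the MIT paper, and it ignores wall and friction losses; the pressure and diameter below are assumptions, not the prototype's values.

```python
import math


def ideal_growth_force(pressure_pa: float, diameter_m: float) -> float:
    """Idealized tip force of an everting vine robot: F = P * A (ignores losses)."""
    area = math.pi * (diameter_m / 2) ** 2
    return pressure_pa * area


# Hypothetical values: 10 kPa gauge pressure, 5 cm diameter tube.
force = ideal_growth_force(10_000, 0.05)
print(f"{force:.1f} N")  # 19.6 N
```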

How it becomes “closed loop”.

Once the tip reaches the base, a small clamp at the base grabs the tip. Now the tube and base form a loop that fully surrounds the object. The sketch on page 6 shows the 3 steps: create the grasp while open, fasten the tip, then hold while closed.
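The open-to-closed sequence, together with the tighten and deflate steps mentioned earlier, maps naturally onto a small state machine. This is a hypothetical control sketch, not the MIT team's software; `tip_at_base` stands in for whatever sensing tells the controller the tip has reached the clamp.

```python
from enum import Enum, auto


class Phase(Enum):
    GROW_OPEN = auto()  # inflated vine grows from its tip; free end = open loop
    FASTENED = auto()   # base clamp has grabbed the tip; loop is now closed
    TIGHTENED = auto()  # winch has taken up the slack around the object
    HOLDING = auto()    # tube deflated into a soft strap carrying load in tension


NEXT = {
    Phase.GROW_OPEN: Phase.FASTENED,
    Phase.FASTENED: Phase.TIGHTENED,
    Phase.TIGHTENED: Phase.HOLDING,
}


class LoopClosureGrasp:
    def __init__(self) -> None:
        self.phase = Phase.GROW_OPEN
        self.inflated = True

    def advance(self, tip_at_base: bool = False) -> Phase:
        # The loop can only close once the free tip has been routed back to the base.
        if self.phase is Phase.GROW_OPEN and not tip_at_base:
            return self.phase
        if self.phase not in NEXT:
            raise RuntimeError("grasp already holding")
        self.phase = NEXT[self.phase]
        if self.phase is Phase.HOLDING:
            self.inflated = False  # deflate only after the loop is closed and tight
        return self.phase


grasp = LoopClosureGrasp()
print(grasp.advance())                  # Phase.GROW_OPEN
print(grasp.advance(tip_at_base=True))  # Phase.FASTENED
print(grasp.advance())                  # Phase.TIGHTENED
print(grasp.advance())                  # Phase.HOLDING
```

Ordering the phases this way means the tube never goes soft before the loop can carry the load in tension.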

Why the hold is gentle and strong at the same time.

After fastening, the tube is deflated so it turns from a puffy tube into a floppy strap. A floppy strap spreads force over a big area, so contact pressure stays low on fragile things, while the strap’s fibers are strong in tension to carry heavy loads. The paper calls this “very high bending softness” combined with high tensile strength.
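To see why a strap in tension is gentle, use the idealized result for a flat, frictionless band wrapped around a cylinder: with tension T, wrap radius R, and band width w, contact pressure is p = T / (R·w). Only the 6.8 kg load comes from the article; the geometry and the stiff-pad comparison are hypothetical.

```python
G = 9.81  # m/s^2


def sling_pressure(tension_n: float, radius_m: float, width_m: float) -> float:
    """Contact pressure of a band under tension T wrapped around a cylinder: p = T / (R * w)."""
    return tension_n / (radius_m * width_m)


weight = 6.8 * G      # the 6.8 kg kettlebell from the MIT demo
tension = weight / 2  # U-shaped sling: two vertical legs share the load

# Hypothetical geometry: 6 cm wrap radius, 5 cm wide strap.
p_sling = sling_pressure(tension, radius_m=0.06, width_m=0.05)

# For contrast: the same load resting on two stiff 1 cm^2 contact pads.
p_points = (weight / 2) / 1e-4

print(f"sling: {p_sling / 1000:.1f} kPa, point contacts: {p_points / 1000:.1f} kPa")
# sling: 11.1 kPa, point contacts: 333.5 kPa
```

Under these assumptions the sling spreads the same load at roughly 30x lower pressure, which is the “very high bending softness plus high tensile strength” trade the paper describes.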

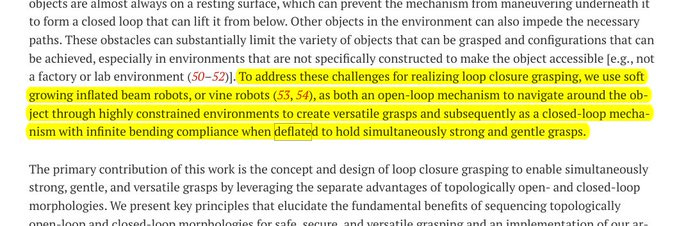

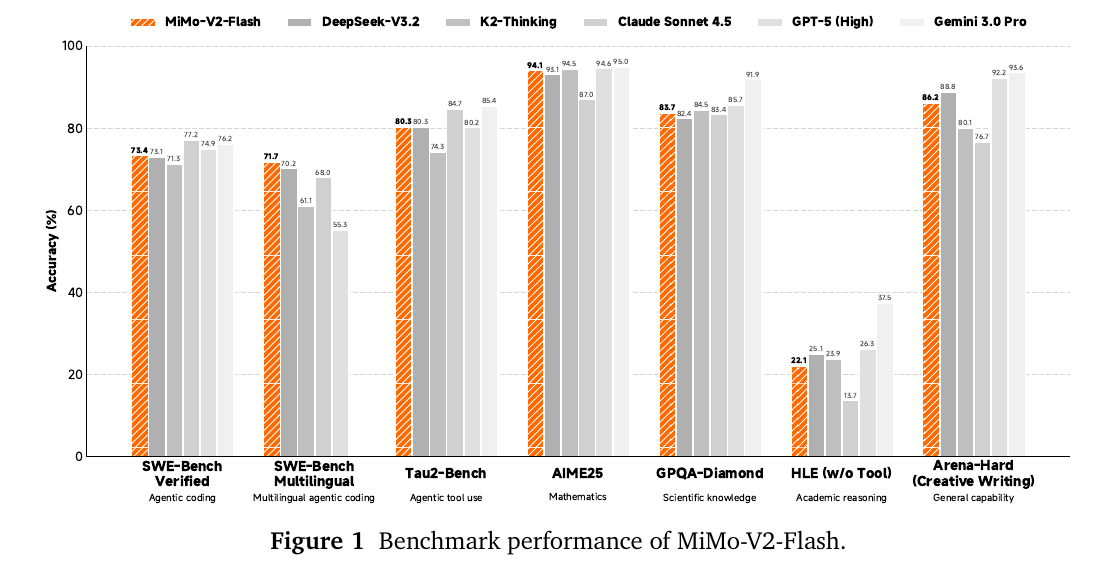

🇨🇳 China’s Xiaomi released MiMo-V2-Flash, an open-source LLM that goes head-to-head with DeepSeek

Xiaomi released MiMo-V2-Flash, an efficient, ultra-fast open-source foundation language model that particularly excels at reasoning, coding, and agentic scenarios.

309B total parameters, 15B active parameters, and a 256k context window.
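The 309B-total / 15B-active split implies a sparse, Mixture-of-Experts-style design: only a small slice of the weights does work on each token. This rough arithmetic uses the common rule of thumb of ~2 FLOPs per active parameter per token (attention cost ignored) and assumes bf16 storage at 2 bytes per parameter; quantized checkpoints would be smaller.

```python
TOTAL_PARAMS_B = 309   # total parameters, billions
ACTIVE_PARAMS_B = 15   # parameters used per token, billions

active_fraction = ACTIVE_PARAMS_B / TOTAL_PARAMS_B
# Rule of thumb: ~2 FLOPs per active parameter per token for a forward pass.
gflops_per_token = 2 * ACTIVE_PARAMS_B
# All experts must still be stored: billions of params x 2 bytes (bf16) = GB.
weights_gb_bf16 = 2 * TOTAL_PARAMS_B

print(f"{active_fraction:.1%} active, ~{gflops_per_token} GFLOPs/token, "
      f"~{weights_gb_bf16} GB of bf16 weights")
# 4.9% active, ~30 GFLOPs/token, ~618 GB of bf16 weights
```

The takeaway: sparsity cuts per-token compute, not the memory needed to host the full model.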

Model on Hugging Face under the MIT license.

Beats DeepSeek-V3.2 on SWE-Bench Verified.

Hybrid Attention: 5:1 interleaved 128-window SWA + Global | 256K context

SWE-Bench Verified: 73.4% | SWE-Bench Multilingual: 71.7% (new SOTA for open-source models)

Pre-trained on 27 trillion tokens.

Designed for fast, strong reasoning and agentic capabilities.

MiMo-V2-Flash adopts a hybrid attention architecture that interleaves Sliding Window Attention (SWA) with global attention, with a 128-token sliding window under a 5:1 hybrid ratio.
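Here is a minimal sketch of what a 5:1 interleave with a 128-token window means in practice, and why it matters for long contexts: SWA layers only need to cache the last 128 positions, so most of the KV cache at 256K comes from the global layers. Assumptions not taken from Xiaomi's report: the global layer sits last in each group of 6, the window covers the 128 most recent positions including the current token, "256K" is read as 262,144 tokens, the 48-layer depth is made up, and every layer is treated as having the same KV size per position.

```python
from typing import List

WINDOW = 128          # sliding-window size from the announcement
RATIO = 5             # 5 SWA layers per 1 global layer
CONTEXT = 256 * 1024  # assumption: "256K" read as 262,144 tokens


def layer_kinds(num_layers: int) -> List[str]:
    # Assumption: the global layer closes each group of RATIO + 1 layers.
    return ["global" if (i + 1) % (RATIO + 1) == 0 else "swa" for i in range(num_layers)]


def can_attend(query: int, key: int, kind: str, window: int = WINDOW) -> bool:
    if key > query:  # causal: never look ahead
        return False
    if kind == "global":
        return True
    return query - key < window  # SWA: only the most recent `window` positions


def kv_cache_fraction(num_layers: int, seq_len: int = CONTEXT) -> float:
    """KV-cache size relative to an all-global model of the same depth."""
    kinds = layer_kinds(num_layers)
    cached = sum(seq_len if k == "global" else min(seq_len, WINDOW) for k in kinds)
    return cached / (num_layers * seq_len)


print(can_attend(1000, 873, "swa"), can_attend(1000, 872, "swa"), can_attend(1000, 0, "global"))
# True False True
print(f"{kv_cache_fraction(48):.3f}")  # 0.167
```

Under these assumptions the cache shrinks to roughly one sixth of an all-global model, while the periodic global layers keep a path to any earlier token.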

Xiaomi says it ranks in the top 2 among open-source models on AIME 2025 and GPQA-Diamond, and #1 among open-source models on SWE-bench Verified plus multilingual software engineering benchmarks.

The “hybrid thinking” switch controls whether it spends extra steps reasoning before answering, and the HTML generation and long context are aimed at agent loops where the model writes code, calls tools, reads outputs, and keeps going for hundreds of turns.
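The agent loop described here (write code, call a tool, read the output, repeat) can be sketched in a few lines. This is a generic, hypothetical harness: the `thinking` flag only mimics the idea of a reasoning on/off switch and is not MiMo's actual API parameter, and `scripted_model` plus the `add` tool are toy stand-ins so the loop runs without a real model.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass
class Step:
    tool: Optional[str]  # tool to call, or None when the model returns a final answer
    arg: str             # tool input, or the final answer text


Model = Callable[[List[str], bool], Step]


def run_agent(model: Model, tools: Dict[str, Callable[[str], str]], task: str,
              thinking: bool = True, max_turns: int = 200) -> str:
    """Ask the model, run the requested tool, feed the output back, repeat."""
    history = [f"TASK: {task}"]
    for _ in range(max_turns):
        step = model(history, thinking)  # hypothetical reasoning on/off switch
        if step.tool is None:
            return step.arg
        observation = tools[step.tool](step.arg)
        history.append(f"CALL {step.tool}({step.arg}) -> {observation}")
    raise RuntimeError("turn budget exhausted")


tools = {"add": lambda s: str(sum(int(x) for x in s.split(",")))}


def scripted_model(history: List[str], thinking: bool) -> Step:
    if len(history) == 1:  # first turn: call a tool
        return Step("add", "2,3")
    return Step(None, history[-1].split("-> ")[-1])  # then answer from the tool output


print(run_agent(scripted_model, tools, "add 2 and 3"))  # 5
```

In a long run the `history` list is what grows over hundreds of turns, which is where a 256K context earns its keep.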

Model Availability:

🤗 Model: http://hf.co/XiaomiMiMo/MiMo-V2-Flash

📝 Blog Post: http://mimo.xiaomi.com/blog/mimo-v2-flash

📄 Technical Report: http://github.com/XiaomiMiMo/MiMo-V2-Flash/blob/main/paper.pdf

🎨 AI Studio: http://aistudio.xiaomimimo.com

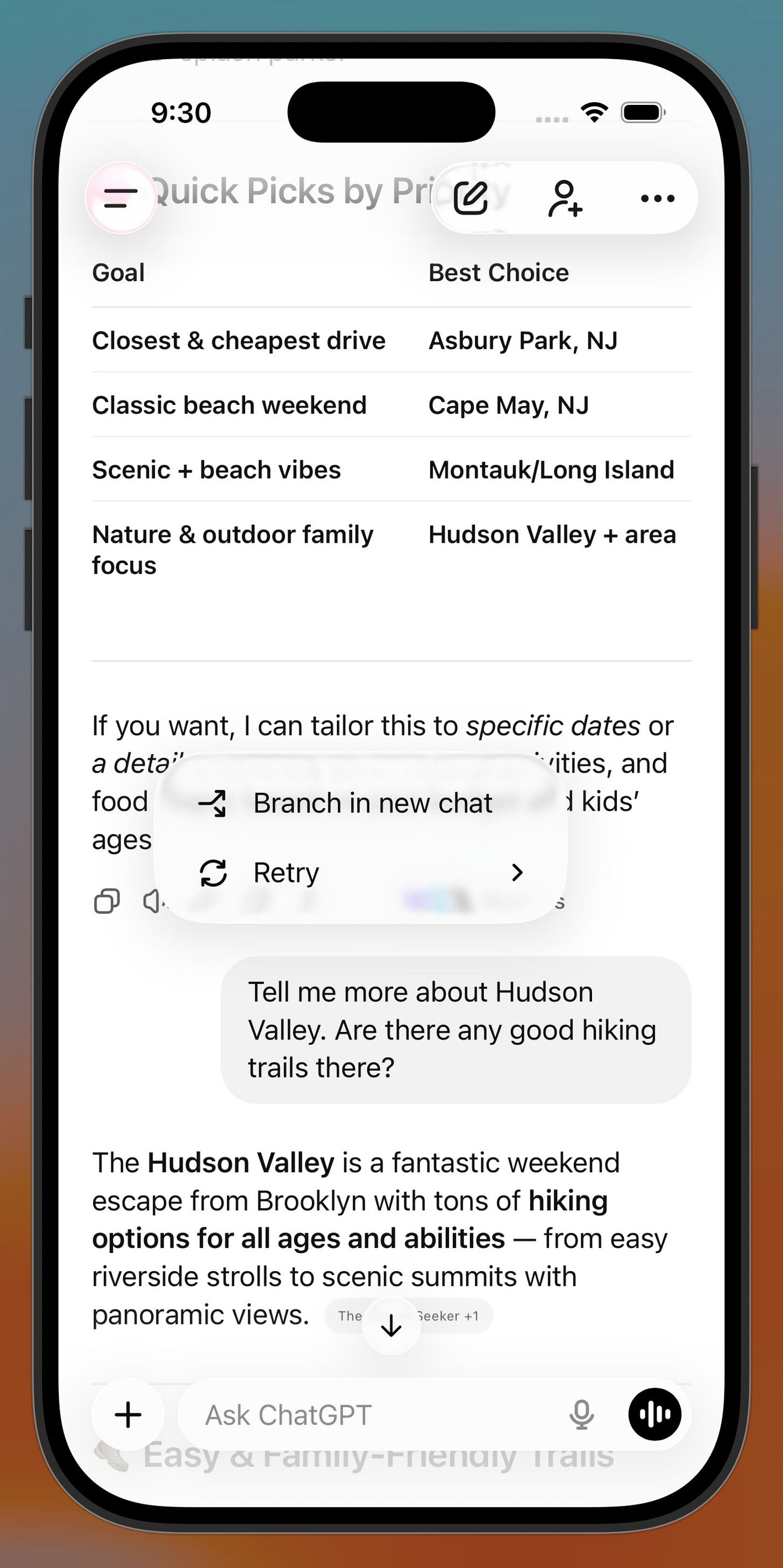

🛠️ ChatGPT on iOS and Android now lets people branch a single chat into multiple parallel threads, so they can explore different directions without losing the original line of thought.

OpenAI has officially launched the highly anticipated branch chat feature in ChatGPT on iOS and Android.

The key win is cleaner “what-if” exploration, because each branch keeps its own context while still living inside the same conversation.

For example, if you are discussing weekend getaway options near Brooklyn, ChatGPT might suggest places like Asbury Park for beach vibes, Cape May for relaxation, Montauk for scenery, or the Hudson Valley for hikes. You can pick one, say Hudson Valley, and start asking more detailed questions. Branching lets you do that in a new path while keeping your initial chat intact.

It works great for planning trips, refining ideas, or digging deeper into any topic. The update brings more flexibility to mobile chats. Just update your app to the latest version if you do not see it yet.

Before this, chats were basically a single timeline, so testing a new idea often meant starting a new chat and manually copying context over.

Branch chat turns a single conversation into a tree, where a user can fork at any earlier message and keep going on that fork without rewriting everything.

This is useful for work where people naturally think in parallel, like comparing product strategies, trying multiple drafts of the same paragraph, or testing different assumptions in a research plan.

Under the hood, the main change is how the interface organizes context, since each branch needs to remember its own “history so far” while staying easy to navigate.
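OpenAI hasn't described its internals, but the tree idea can be sketched generically: each message points to its parent, forking just adds a second child, and a branch's context is the path from the root down to its latest message. The `Message` class and its methods are hypothetical; the example reuses the Brooklyn getaway scenario from above.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass(eq=False)
class Message:
    role: str
    text: str
    parent: Optional["Message"] = field(default=None, repr=False)
    children: List["Message"] = field(default_factory=list, repr=False)

    def reply(self, role: str, text: str) -> "Message":
        """Add a child message; calling this twice on one node creates a branch."""
        child = Message(role, text, parent=self)
        self.children.append(child)
        return child

    def context(self) -> List[str]:
        """This branch's history so far: the path from the root down to this message."""
        path: List[str] = []
        node: Optional[Message] = self
        while node is not None:
            path.append(f"{node.role}: {node.text}")
            node = node.parent
        return path[::-1]


root = Message("user", "Weekend getaways near Brooklyn?")
options = root.reply("assistant", "Asbury Park, Cape May, Montauk, or the Hudson Valley.")
main = options.reply("user", "Tell me more about Cape May.")
branch = options.reply("user", "What are good hikes in the Hudson Valley?")  # fork

print(branch.context())
print(main.context()[-1])  # the original line of thought is untouched
```

Because branches share ancestors instead of copying them, forking is cheap and the original thread stays intact.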

That’s a wrap for today, see you all tomorrow.